- Random article

- Teaching guide

- Privacy & cookies

What is speech synthesis?

How does speech synthesis work.

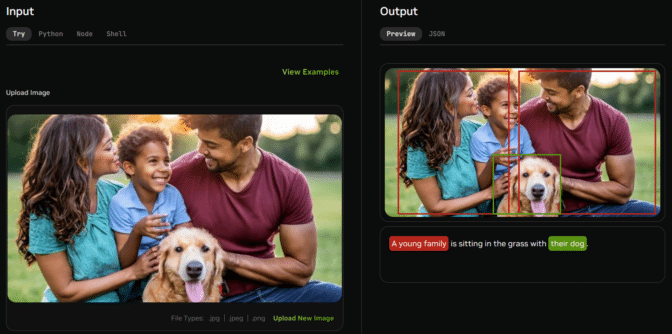

Artwork: Context matters: A speech synthesizer needs some understanding of what it's reading.

Artwork: Concatenative versus formant speech synthesis. Left: A concatenative synthesizer builds up speech from pre-stored fragments; the words it speaks are limited rearrangements of those sounds. Right: Like a music synthesizer, a formant synthesizer uses frequency generators to generate any kind of sound.

Articulatory

What are speech synthesizers used for.

Photo: Will humans still speak to one another in the future? All sorts of public announcements are now made by recorded or synthesized computer-controlled voices, but there are plenty of areas where even the smartest machines would fear to tread. Imagine a computer trying to commentate on a fast-moving sports event, such as a rodeo, for example. Even if it could watch and correctly interpret the action, and even if it had all the right words to speak, could it really convey the right kind of emotion? Photo by Carol M. Highsmith, courtesy of Gates Frontiers Fund Wyoming Collection within the Carol M. Highsmith Archive, Library of Congress , Prints and Photographs Division.

Who invented speech synthesis?

Artwork: Speak & Spell—An iconic, electronic toy from Texas Instruments that introduced a whole generation of children to speech synthesis in the late 1970s. It was built around the TI TMC0281 chip.

Anna (c. ~2005)

Olivia (c. ~2020).

If you liked this article...

Find out more, on this website.

- Voice recognition software

Technical papers

Current research, notes and references ↑ pre-processing in described in more detail in "chapter 7: speech synthesis from textual or conceptual input" of speech synthesis and recognition by wendy holmes, taylor & francis, 2002, p.93ff. ↑ for more on concatenative synthesis, see chapter 14 ("synthesis by concatenation and signal-process modification") of text-to-speech synthesis by paul taylor. cambridge university press, 2009, p.412ff. ↑ for a much more detailed explanation of the difference between formant, concatenative, and articulatory synthesis, see chapter 2 ("low-lever synthesizers: current status") of developments in speech synthesis by mark tatham, katherine morton, wiley, 2005, p.23–37. please do not copy our articles onto blogs and other websites articles from this website are registered at the us copyright office. copying or otherwise using registered works without permission, removing this or other copyright notices, and/or infringing related rights could make you liable to severe civil or criminal penalties. text copyright © chris woodford 2011, 2021. all rights reserved. full copyright notice and terms of use . follow us, rate this page, tell your friends, cite this page, more to explore on our website....

- Get the book

- Send feedback

- There are no suggestions because the search field is empty.

What is Text-to-Speech (TTS): Initial Speech Synthesis Explained

Sep 28, 2021 1:08:38 pm.

Today, speech synthesis technologies are in demand more than ever. Businesses, film studios, game producers, and video bloggers use speech synthesis to speed up and reduce the cost of content production as well as improve the customer experience.

Let's start our immersion in speech technologies by understanding how text-to-speech technology (TTS) works.

What is TTS speech synthesis?

TTS is a computer simulation of human speech from a textual representation using machine learning methods. Typically, speech synthesis is used by developers to create voice robots, such as IVR (Interactive Voice Response).

TTS saves a business time and money as it generates sound automatically, thus saving the company from having to manually record (and rewrite) audio files.

You can have any text read aloud in a voice that is as close to natural as possible, thanks to TTS synthesis. To make TTS synthesized speech sound natural, the painstaking process of honing its timbre, smoothness, placement of accents and pauses, intonation, and other areas is a long and unavoidable burden.

There are two ways developers can go about getting it done:

Concatenative - gluing together fragments of recorded audio. This synthesized speech is of high quality but requires a lot of data for machine learning.

Parametric - building a probabilistic model that selects the acoustic properties of a sound signal for a given text. Using this approach, one can synthesize a speech that is virtually indistinguishable from a real human.

What is text-to-speech technology?

To convert text to speech, the ML system must perform the following:

- Convert text to words

Firstly, the ML algorithm must convert text into a readable format. The challenge here is that the text contains not only words but numbers, abbreviations, dates, etc.

These must be translated and written in words. The algorithm then divides the text into distinct phrases, which the system then reads with the appropriate intonation. While doing that, the program follows the punctuation and stable structures in the text.

- Complete phonetic transcription

Each sentence can be pronounced differently depending on the meaning and emotional tone. To understand the right pronunciation, the system uses built-in dictionaries.

If the required word is missing, the algorithm creates the transcription using general academic rules. The algorithm also checks on the recordings of the speakers and determines which parts of the words they accentuate.

The system then calculates how many 25 millisecond fragments are in the compiled transcription. This is known as phoneme processing.

A phoneme is the minimum unit of a language’s sound structure.

The system describes each piece with different parameters: which phoneme it is a part of, the place it occupies in it, which syllable this phoneme belongs to, and so on. After that, the system recreates the appropriate intonation using data from the phrases and sentences.

- Convert transcription to speech

Finally, the system uses an acoustic model to read the processed text. The ML algorithm establishes the connection between phonemes and sounds, giving them accurate intonations.

The system uses a sound wave generator to create a vocal sound. The frequency characteristics of phrases obtained from the acoustic model are eventually loaded into the sound wave generator.

Industry TTS applications

In general, there are three most common areas to apply TTS voice conversions for your business or content production. They are:

- Voice notifications and reminders. This allows for the delivery of any information to your customers all over the world with a phone call. The good news is that the messages are delivered in the customers' native languages.

- Listening to the written content. You can hear the synthesized voice reading your favorite book, email, or website content. This is very important for people with limited reading and writing abilities, or for those who prefer listening over reading.

- Localization. It might be costly to hire employees who can speak multiple customer languages if you operate internationally. TTS allows for practically instant vocalization from English (or other languages) to any foreign language. This is considering that you use a proper translation service.

With these three in mind, you can imagine the full-scale application that covers almost any industry that you operate in with customers and that may lack personalized language experience.

Speech to speech (STS) voice synthesis helps where TTS falls short

We have extensively covered STS technology in previous blog posts. Learn more on how the deepfake tech that powers STS conversion works and some of the most disrupting applications like AI-powered dubbing or voice cloning in marketing and branding .

In short, speech synthesis powered by AI allows for covering critical use cases where you use speech (not text) as a source to generate speech in another voice.

With speech-to-speech voice cloning technology , you can make yourself sound like anyone you can imagine. Like here, where our pal Grant speaks in Barack Obama’s voice .

For those of you who want to discover more, check our FAQ page to find answers to questions about speech-to-speech voice conversion .

So why choose STS over the TTS tech? Here are just a couple of reasons:

- For obvious reasons, STS allows you to do what is impossible with TTS. Like synthesizing iconic voices of the past or saving time and money on ADR for movie production .

- STS voice cloning allows you to achieve speech of a more colorful emotional palette. The generated voice will be absolutely indistinguishable from the target voice.

- STS technology allows for the scaling of content production for those celebrities who want but can't spend time working simultaneously on several projects.

How do I find out more about speech-to-speech voice synthesis?

Try Respeecher . We have a long history of successful collaborations with Hollywood studios, video game developers, businesses, and even YouTubers for their virtual projects.

We are always willing to help ambitious projects or businesses get the most out of STS technology. Drop us a line to get a demo customized just for you.

Share this post

Alex Serdiuk

CEO and Co-founder

Alex founded Respeecher with Dmytro Bielievtsov and Grant Reaber in 2018. Since then the team has been focused on high-fidelity voice cloning. Alex is in charge of Business Development and Strategy. Respeecher technology is already applied in Feature films and TV projects, Video Games, Animation studios, Localization, media agencies, Healthcare, and other areas.

Stay relevant in a constantly evolving industry.

Get the monthly newsletter keeping thousands of sound professionals in the loop.

Related Articles

Text-to-Speech AI Voice Generator: Creating a Human-like Voice

Mar 15, 2022 1:36:00 PM

The latest technologies in voice synthesis and recognition are constantly disrupting the...

Speech Synthesis Is No More a Villain than Photoshop Was 10+ Years Ago

Sep 14, 2021 12:00:00 PM

Modern technologies deliver many benefits, completely transforming many areas of our...

AI Voices and the Future of Speech-Based Applications

Jan 26, 2022 9:23:00 AM

While the pandemic slowed down the development of businesses and entire industries, it...

- Productivity

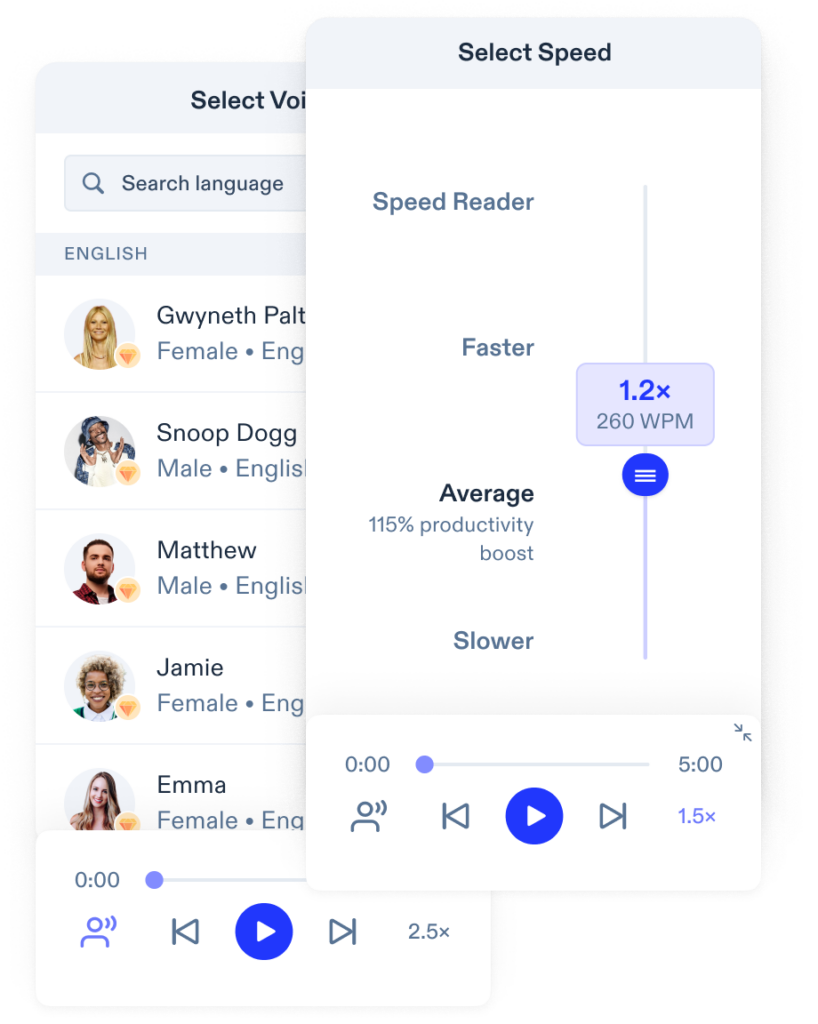

The Ultimate Guide to Speech Synthesis

Table of contents.

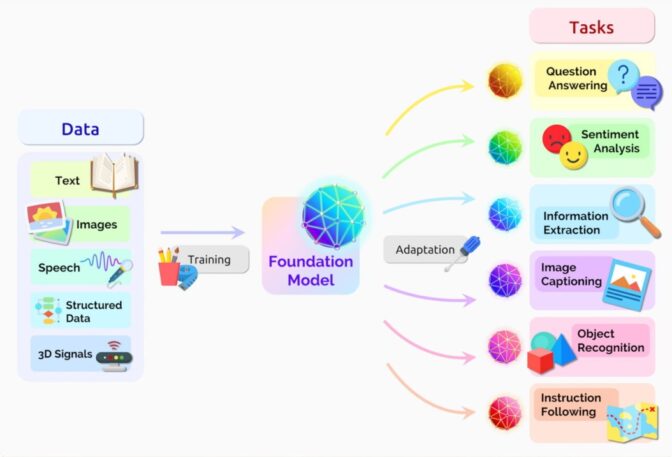

Speech synthesis is an intriguing area of artificial intelligence (AI) that’s been extensively developed by major tech corporations like Microsoft, Amazon, and Google Cloud. It employs deep learning algorithms, machine learning, and natural language processing (NLP) to convert written text into spoken words.

Basics of Speech Synthesis

Speech synthesis, also known as text-to-speech (TTS), involves the automatic production of human speech. This technology is widely used in various applications such as real-time transcription services, automated voice response systems, and assistive technology for the visually impaired. The pronunciation of words, including “robot,” is achieved by breaking down words into basic sound units or phonemes and stringing them together.

Three Stages of Speech Synthesis

Speech synthesizers go through three primary stages: Text Analysis, Prosodic Analysis, and Speech Generation.

- Text Analysis : The text to be synthesized is analyzed and parsed into phonemes, the smallest units of sound. Segmentation of the sentence into words and words into phonemes happens in this stage.

- Prosodic Analysis : The intonation, stress patterns, and rhythm of the speech are determined. The synthesizer uses these elements to generate human-like speech.

- Speech Generation : Using rules and patterns, the synthesizer forms sounds based on the phonemes and prosodic information. Concatenative and unit selection synthesizers are the two main types of speech generation. Concatenative synthesizers use pre-recorded speech segments, while unit selection synthesizers select the best unit from a large speech database.

Most Realistic TTS and Best TTS for Android

While many TTS systems produce high quality and realistic speech, Google’s TTS, part of the Google Cloud service, and Amazon’s Alexa stand out. These systems leverage machine learning and deep learning algorithms, creating seamless and almost indistinguishable-from-human speech. The best TTS engine for Android smartphones is Google’s Text-to-Speech, with a wide range of languages and high-quality voices.

Best Python Library for Text to Speech

For Python developers, the gTTS (Google Text-to-Speech) library stands out due to its simplicity and quality. It interfaces with Google Translate’s text-to-speech API, providing an easy-to-use, high-quality solution.

Speech Recognition and Text-to-Speech

While speech synthesis converts text into speech, speech recognition does the opposite. Automatic Speech Recognition (ASR) technology, like IBM’s Watson or Apple’s Siri, transcribes human speech into text. This forms the basis of voice assistants and real-time transcription services.

Pronunciation of the word “Robot”

The pronunciation of the word “robot” varies slightly depending on the speaker’s accent, but the standard American English pronunciation is /ˈroʊ.bɒt/. Here is a breakdown:

- The first syllable, “ro”, is pronounced like ‘row’ in rowing a boat.

- The second syllable, “bot”, is pronounced like ‘bot’ in ‘bottom’, but without the ‘om’ part.

Example of a Text-to-Speech Program

Google Text-to-Speech is a prominent example of a text-to-speech program. It converts written text into spoken words and is widely used in various Google services and products like Google Translate, Google Assistant, and Android devices.

Best TTS Engine for Android

The best TTS engine for Android devices is Google Text-to-Speech. It supports multiple languages, has a variety of voices to choose from, and is natively integrated with Android, providing a seamless user experience.

Difference Between Concatenative and Unit Selection Synthesizers

Concatenative and unit selection are two main techniques employed in the speech generation stage of a speech synthesizer.

- Concatenative Synthesizers : They work by stitching together pre-recorded samples of human speech. The recorded speech is divided into small pieces, each representing a phoneme or a group of phonemes. When a new speech is synthesized, the appropriate pieces are selected and concatenated together to form the final speech.

- Unit Selection Synthesizers : This approach also relies on a large database of recorded speech but uses a more sophisticated selection process to choose the best matching unit of speech for each segment of the text. The goal is to reduce the amount of ‘stitching’ required, thus producing more natural-sounding speech. It considers factors like prosody, phonetic context, and even speaker emotion while selecting the units.

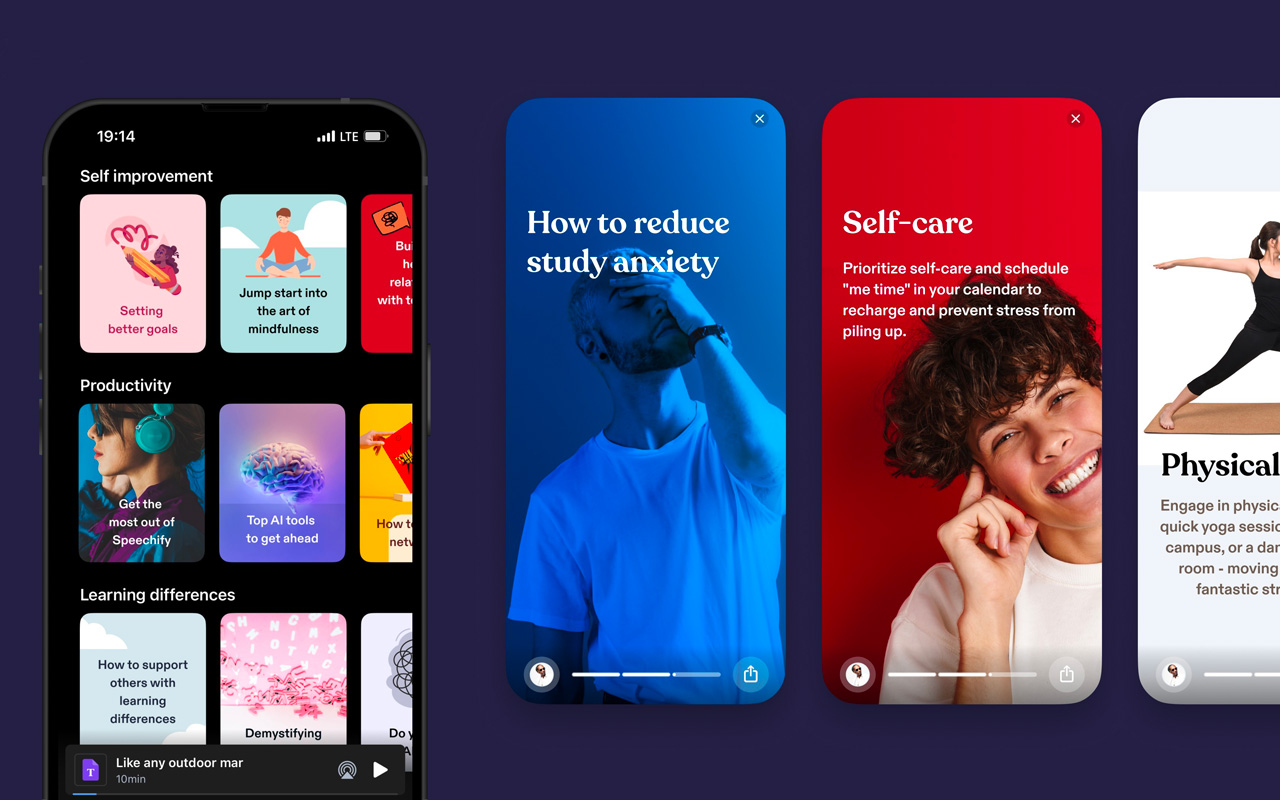

Top 8 Speech Synthesis Software or Apps

- Google Text-to-Speech : A versatile TTS software integrated into Android. It supports different languages and provides high-quality voices.

- Amazon Polly : An AWS service that uses advanced deep learning technologies to synthesize speech that sounds like a human voice.

- Microsoft Azure Text to Speech : A robust TTS system with neural network capabilities providing natural-sounding speech.

- IBM Watson Text to Speech : Leverages AI to produce speech with human-like intonation.

- Apple’s Siri : Siri isn’t only a voice assistant but also provides high-quality TTS in several languages.

- iSpeech : A comprehensive TTS platform supporting various formats, including WAV.

- TextAloud 4 : A TTS software for Windows, offering conversion of text from various formats to speech.

- NaturalReader : An online TTS service with a range of natural-sounding voices.

- Previous Understanding Veed: Terms of Service, Commercial Rights, and Safe Usage

- Next How to Avoid Voice AI Scams

Cliff Weitzman

Cliff Weitzman is a dyslexia advocate and the CEO and founder of Speechify, the #1 text-to-speech app in the world, totaling over 100,000 5-star reviews and ranking first place in the App Store for the News & Magazines category. In 2017, Weitzman was named to the Forbes 30 under 30 list for his work making the internet more accessible to people with learning disabilities. Cliff Weitzman has been featured in EdSurge, Inc., PC Mag, Entrepreneur, Mashable, among other leading outlets.

Recent Blogs

Is Text to Speech HSA Eligible?

Can You Use an HSA for Speech Therapy?

Surprising HSA-Eligible Items

Ultimate guide to ElevenLabs

Voice changer for Discord

How to download YouTube audio

Speechify 3.0 is the Best Text to Speech App Yet.

Voice API: Everything You Need to Know

Best text to speech generator apps

The best AI tools other than ChatGPT

Top voice over marketplaces reviewed

Speechify Studio vs. Descript

Everything to Know About Google Cloud Text to Speech API

Source of Joe Biden deepfake revealed after election interference

How to listen to scientific papers

How to add music to CapCut

What is CapCut?

VEED vs. InVideo

Speechify Studio vs. Kapwing

Voices.com vs. Voice123

Voices.com vs. Fiverr Voice Over

Fiverr voice overs vs. Speechify Voice Over Studio

Voices.com vs. Speechify Voice Over Studio

Voice123 vs. Speechify Voice Over Studio

Voice123 vs. Fiverr voice overs

HeyGen vs. Synthesia

Hour One vs. Synthesia

HeyGen vs. Hour One

Speechify makes Google’s Favorite Chrome Extensions of 2023 list

How to Add a Voice Over to Vimeo Video: A Comprehensive Guide

Speechify text to speech helps you save time

Popular blogs.

The Best Celebrity Voice Generators in 2024

YouTube Text to Speech: Elevating Your Video Content with Speechify

The 7 best alternatives to Synthesia.io

Everything you need to know about text to speech on TikTok

The 10 best text-to-speech apps for android, how to convert a pdf to speech, the top girl voice changers, how to use siri text to speech, obama text to speech.

Robot Voice Generators: The Futuristic Frontier of Audio Creation

Pdf read aloud: free & paid options, alternatives to fakeyou text to speech.

All About Deepfake Voices

TikTok voice generator

Only available on iPhone and iPad

To access our catalog of 100,000+ audiobooks, you need to use an iOS device.

Coming to Android soon...

Join the waitlist

Enter your email and we will notify you as soon as Speechify Audiobooks is available for you.

You’ve been added to the waitlist. We will notify you as soon as Speechify Audiobooks is available for you.

- Subject List

- Take a Tour

- For Authors

- Subscriber Services

- Publications

- African American Studies

- African Studies

- American Literature

- Anthropology

- Architecture Planning and Preservation

- Art History

- Atlantic History

- Biblical Studies

- British and Irish Literature

- Childhood Studies

- Chinese Studies

- Cinema and Media Studies

- Communication

- Criminology

- Environmental Science

- Evolutionary Biology

- International Law

- International Relations

- Islamic Studies

- Jewish Studies

- Latin American Studies

- Latino Studies

Linguistics

- Literary and Critical Theory

- Medieval Studies

- Military History

- Political Science

- Public Health

- Renaissance and Reformation

- Social Work

- Urban Studies

- Victorian Literature

- Browse All Subjects

How to Subscribe

- Free Trials

In This Article Expand or collapse the "in this article" section Speech Synthesis

Introduction, textbooks, edited collections, surveys, and introductions.

- Journals and Conferences

- Formant Synthesis

- Concatenative Synthesis Based on Diphones

- Text Processing, Pronunciation Dictionaries, and Letter-to-Sound

- Fundamental Frequency Estimation

- Representing Prosody

- Predicting Prosody

- Mainstream Unit Selection

- Trainable Unit Selection and Hybrid Methods

- Source-Filter Signal Processing: Linear Prediction

- Abstracting away from the Vocal Tract Filter: The Spectral Envelope

- Avoiding Explicit Source-Filter Separation

- Statistical Parametric Speech Synthesis

- Subjective Tests

- Objective Measures

Related Articles Expand or collapse the "related articles" section about

About related articles close popup.

Lorem Ipsum Sit Dolor Amet

Vestibulum ante ipsum primis in faucibus orci luctus et ultrices posuere cubilia Curae; Aliquam ligula odio, euismod ut aliquam et, vestibulum nec risus. Nulla viverra, arcu et iaculis consequat, justo diam ornare tellus, semper ultrices tellus nunc eu tellus.

- Acoustic Phonetics

- Computational Linguistics

- Machine Translation

- Speech Perception

- Speech Production

- Voice and Voice Quality

Other Subject Areas

Forthcoming articles expand or collapse the "forthcoming articles" section.

- Cognitive Grammar

- Edward Sapir

- Teaching Pragmatics

- Find more forthcoming articles...

- Export Citations

- Share This Facebook LinkedIn Twitter

Speech Synthesis by Simon King LAST REVIEWED: 15 November 2022 LAST MODIFIED: 25 February 2016 DOI: 10.1093/obo/9780199772810-0024

Speech synthesis has a long history, going back to early attempts to generate speech- or singing-like sounds from musical instruments. But in the modern age, the field has been driven by one key application: Text-to-Speech (TTS), which means generating speech from text input. Almost universally, this complex problem is divided into two parts. The first problem is the linguistic processing of the text, and this happens in the front end of the system. The problem is hard because text clearly does not contain all the information necessary for reading out loud. So, just as human talkers use their knowledge and experience when reading out loud, machines must also bring additional information to bear on the problem; examples include rules regarding how to expand abbreviations into standard words, or a pronunciation dictionary that converts spelled forms into spoken forms. Many of the techniques currently used for this part of the problem were developed in the 1990s and have only advanced very slowly since then. In general, techniques used in the front end are designed to be applicable to almost any language, although the exact rules or model parameters will depend on the language in question. The output of the front end is a linguistic specification that contains information such as the phoneme sequence and the positions of prosodic phrase breaks. In contrast, the second part of the problem, which is to take the linguistic specification and generate a corresponding synthetic speech waveform, has received a great deal of attention and is where almost all of the exciting work has happened since around 2000. There is far more recent material available on the waveform generation part of the text-to-speech problem than there is on the text processing part. There are two main paradigms currently in use for waveform generation, both of which apply to any language. In concatenative synthesis, small snippets of prerecorded speech are carefully chosen from an inventory and rearranged to construct novel utterances. In statistical parametric synthesis, the waveform is converted into two sets of speech parameters: one set captures the vocal tract frequency response (or spectral envelope) and the other set represents the sound source, such as the fundamental frequency and the amount of aperiodic energy. Statistical models are learned from annotated training data and can then be used to generate the speech parameters for novel utterances, given the linguistic specification from the front end. A vocoder is used to convert those speech parameters back to an audible speech waveform.

Steady progress in synthesis since around 1990, and the especially rapid progress in the early 21st century, is a challenge for textbooks. Taylor 2009 provides the most up-to-date entry point to this field and is an excellent starting point for students at all levels. For a wider-ranging textbook that also provides coverage of Natural Language Processing and Automatic Speech Recognition, Jurafsky and Martin 2009 is also excellent. For those without an electrical engineering background, the chapter by Ellis giving “An Introduction to Signal Processing for Speech” in Hardcastle, et al. 2010 is essential background reading, since most other texts are aimed at readers with some previous knowledge of signal processing. Most of the advances in the field since around 2000 have been in the statistical parametric paradigm. No current textbook covers this subject in sufficient depth. King 2011 gives a short and simple introduction to some of the main concepts, and Taylor 2009 contains one relatively brief chapter. For more technical depth, it is necessary to venture beyond textbooks, and the tutorial article Tokuda, et al. 2013 is the best place to start, followed by the more technical article Zen, et al. 2009 . Some older books, such as Dutoit 1997 , still contain relevant material, especially in their treatment of the text processing part of the problem. Sproat’s comment that “text-analysis has not received anything like half the attention of the synthesis community” (p. 73) in his introduction to text processing in van Santen, et al. 1997 is still true, and Yarowsky’s chapter on homograph disambiguation in the same volume still represents a standard solution to that particular problem. Similarly, the modular system architecture described by Sproat and Olive in that volume is still the standard way of configuring a text-to-speech system.

Dutoit, Thierry. 1997. An introduction to text-to-speech synthesis . Norwell, MA: Kluwer Academic.

DOI: 10.1007/978-94-011-5730-8

Starting to get dated, but still contains useful material.

Hardcastle, W. J., J. Laver, and F. E. Gibbon. 2010. The handbook of phonetic sciences . Blackwell Handbooks in Linguistics. Oxford: Wiley-Blackwell.

DOI: 10.1002/9781444317251

A wealth of information, one highlight being the excellent chapter by Ellis introducing speech signal processing to readers with minimal technical background. The chapter on speech synthesis is too dated. Other titles in this series are worth consulting, such as the one on speech perception.

Jurafsky, D., and J. H. Martin. 2009. Speech and language processing: An introduction to natural language processing, computational linguistics, and speech recognition . 2d ed. Upper Saddle River, NJ: Prentice Hall.

A complete course in speech and language processing, very widely used for teaching at advanced undergraduate and graduate levels. The authors have a free online video lecture course covering the Natural Language Processing parts. A third edition of the book is expected.

King, S. 2011. An introduction to statistical parametric speech synthesis. Sadhana 36.5: 837–852.

DOI: 10.1007/s12046-011-0048-y

A gentle and nontechnical introduction to this topic, designed to be accessible to readers from any background. Should be read before attempting the more advanced material.

Taylor, P. 2009. Text-to-speech synthesis . Cambridge, UK: Cambridge Univ. Press.

DOI: 10.1017/CBO9780511816338

The most comprehensive and authoritative textbook ever written on the subject. The content is still up-to-date and highly relevant. Of course, developments since 2009—such as advanced techniques for HMM-based synthesis and the resurgence of Neural Networks—are not covered.

Tokuda, K., Y. Nankaku, T. Toda, H. Zen, J. Yamagishi, and K. Oura. 2013. Speech synthesis based on Hidden Markov Models. Proceedings of the IEEE 101.5: 1234–1252.

DOI: 10.1109/JPROC.2013.2251852

A tutorial article covering the main concepts of statistical parametric speech synthesis using Hidden Markov Models. Also touches on singing synthesis and controllable models.

van Santen, J. P. H., R. W. Sproat, J. P. Oliver, and J. Hirschberg, eds. 1997. Progress in speech synthesis . New York: Springer.

Covering most aspects of text-to-speech, but now dated. Material that remains relevant: Yarowsky on homograph disambiguation; Sproat’s introduction to the Linguistic Analysis section; Campbell and Black’s inclusion of prosody in the unit selection target cost, to minimize the need for subsequent signal processing (implementation details no longer relevant).

Zen, H., K. Tokuda, and A. W. Black. 2009. Statistical parametric speech synthesis. Speech Communication 51.11: 1039–1064.

DOI: 10.1016/j.specom.2009.04.004

Written before the resurgence of neural networks, this is an authoritative and technical introduction to HMM-based statistical parametric speech synthesis.

back to top

Users without a subscription are not able to see the full content on this page. Please subscribe or login .

Oxford Bibliographies Online is available by subscription and perpetual access to institutions. For more information or to contact an Oxford Sales Representative click here .

- About Linguistics »

- Meet the Editorial Board »

- Acceptability Judgments

- Acquisition, Second Language, and Bilingualism, Psycholin...

- Adpositions

- African Linguistics

- Afroasiatic Languages

- Algonquian Linguistics

- Altaic Languages

- Ambiguity, Lexical

- Analogy in Language and Linguistics

- Animal Communication

- Applicatives

- Applied Linguistics, Critical

- Arawak Languages

- Argument Structure

- Artificial Languages

- Australian Languages

- Austronesian Linguistics

- Auxiliaries

- Balkans, The Languages of the

- Baudouin de Courtenay, Jan

- Berber Languages and Linguistics

- Bilingualism and Multilingualism

- Biology of Language

- Borrowing, Structural

- Caddoan Languages

- Caucasian Languages

- Celtic Languages

- Celtic Mutations

- Chomsky, Noam

- Chumashan Languages

- Classifiers

- Clauses, Relative

- Clinical Linguistics

- Cognitive Linguistics

- Colonial Place Names

- Comparative Reconstruction in Linguistics

- Comparative-Historical Linguistics

- Complementation

- Complexity, Linguistic

- Compositionality

- Compounding

- Conditionals

- Conjunctions

- Connectionism

- Consonant Epenthesis

- Constructions, Verb-Particle

- Contrastive Analysis in Linguistics

- Conversation Analysis

- Conversation, Maxims of

- Conversational Implicature

- Cooperative Principle

- Coordination

- Creoles, Grammatical Categories in

- Critical Periods

- Cross-Language Speech Perception and Production

- Cyberpragmatics

- Default Semantics

- Definiteness

- Dementia and Language

- Dene (Athabaskan) Languages

- Dené-Yeniseian Hypothesis, The

- Dependencies

- Dependencies, Long Distance

- Derivational Morphology

- Determiners

- Dialectology

- Distinctive Features

- Dravidian Languages

- Endangered Languages

- English as a Lingua Franca

- English, Early Modern

- English, Old

- Eskimo-Aleut

- Euphemisms and Dysphemisms

- Evidentials

- Exemplar-Based Models in Linguistics

- Existential

- Existential Wh-Constructions

- Experimental Linguistics

- Fieldwork, Sociolinguistic

- Finite State Languages

- First Language Attrition

- Formulaic Language

- Francoprovençal

- French Grammars

- Gabelentz, Georg von der

- Genealogical Classification

- Generative Syntax

- Genetics and Language

- Grammar, Categorial

- Grammar, Construction

- Grammar, Descriptive

- Grammar, Functional Discourse

- Grammars, Phrase Structure

- Grammaticalization

- Harris, Zellig

- Heritage Languages

- History of Linguistics

- History of the English Language

- Hmong-Mien Languages

- Hokan Languages

- Humor in Language

- Hungarian Vowel Harmony

- Idiom and Phraseology

- Imperatives

- Indefiniteness

- Indo-European Etymology

- Inflected Infinitives

- Information Structure

- Interface Between Phonology and Phonetics

- Interjections

- Iroquoian Languages

- Isolates, Language

- Jakobson, Roman

- Japanese Word Accent

- Jones, Daniel

- Juncture and Boundary

- Khoisan Languages

- Kiowa-Tanoan Languages

- Kra-Dai Languages

- Labov, William

- Language Acquisition

- Language and Law

- Language Contact

- Language Documentation

- Language, Embodiment and

- Language for Specific Purposes/Specialized Communication

- Language, Gender, and Sexuality

- Language Geography

- Language Ideologies and Language Attitudes

- Language in Autism Spectrum Disorders

- Language Nests

- Language Revitalization

- Language Shift

- Language Standardization

- Language, Synesthesia and

- Languages of Africa

- Languages of the Americas, Indigenous

- Languages of the World

- Learnability

- Lexical Access, Cognitive Mechanisms for

- Lexical Semantics

- Lexical-Functional Grammar

- Lexicography

- Lexicography, Bilingual

- Linguistic Accommodation

- Linguistic Anthropology

- Linguistic Areas

- Linguistic Landscapes

- Linguistic Prescriptivism

- Linguistic Profiling and Language-Based Discrimination

- Linguistic Relativity

- Linguistics, Educational

- Listening, Second Language

- Literature and Linguistics

- Maintenance, Language

- Mande Languages

- Mass-Count Distinction

- Mathematical Linguistics

- Mayan Languages

- Mental Health Disorders, Language in

- Mental Lexicon, The

- Mesoamerican Languages

- Minority Languages

- Mixed Languages

- Mixe-Zoquean Languages

- Modification

- Mon-Khmer Languages

- Morphological Change

- Morphology, Blending in

- Morphology, Subtractive

- Munda Languages

- Muskogean Languages

- Nasals and Nasalization

- Niger-Congo Languages

- Non-Pama-Nyungan Languages

- Northeast Caucasian Languages

- Oceanic Languages

- Papuan Languages

- Penutian Languages

- Philosophy of Language

- Phonetics, Acoustic

- Phonetics, Articulatory

- Phonological Research, Psycholinguistic Methodology in

- Phonology, Computational

- Phonology, Early Child

- Policy and Planning, Language

- Politeness in Language

- Positive Discourse Analysis

- Possessives, Acquisition of

- Pragmatics, Acquisition of

- Pragmatics, Cognitive

- Pragmatics, Computational

- Pragmatics, Cross-Cultural

- Pragmatics, Developmental

- Pragmatics, Experimental

- Pragmatics, Game Theory in

- Pragmatics, Historical

- Pragmatics, Institutional

- Pragmatics, Second Language

- Prague Linguistic Circle, The

- Presupposition

- Psycholinguistics

- Quechuan and Aymaran Languages

- Reading, Second-Language

- Reciprocals

- Reduplication

- Reflexives and Reflexivity

- Register and Register Variation

- Relevance Theory

- Representation and Processing of Multi-Word Expressions in...

- Salish Languages

- Sapir, Edward

- Saussure, Ferdinand de

- Second Language Acquisition, Anaphora Resolution in

- Semantic Maps

- Semantic Roles

- Semantic-Pragmatic Change

- Semantics, Cognitive

- Sentence Processing in Monolingual and Bilingual Speakers

- Sign Language Linguistics

- Sociolinguistics

- Sociolinguistics, Variationist

- Sociopragmatics

- Sound Change

- South American Indian Languages

- Specific Language Impairment

- Speech, Deceptive

- Speech Synthesis

- Switch-Reference

- Syntactic Change

- Syntactic Knowledge, Children’s Acquisition of

- Tense, Aspect, and Mood

- Text Mining

- Tone Sandhi

- Transcription

- Transitivity and Voice

- Translanguaging

- Translation

- Trubetzkoy, Nikolai

- Tucanoan Languages

- Tupian Languages

- Usage-Based Linguistics

- Uto-Aztecan Languages

- Valency Theory

- Verbs, Serial

- Vocabulary, Second Language

- Vowel Harmony

- Whitney, William Dwight

- Word Classes

- Word Formation in Japanese

- Word Recognition, Spoken

- Word Recognition, Visual

- Word Stress

- Writing, Second Language

- Writing Systems

- Zapotecan Languages

- Privacy Policy

- Cookie Policy

- Legal Notice

- Accessibility

Powered by:

- [66.249.64.20|185.148.24.167]

- 185.148.24.167

- Enroll & Pay

- Prospective Students

- Current Students

- Degree Programs

What is Speech Synthesis?

Speech synthesis, or text-to-speech, is a category of software or hardware that converts text to artificial speech. A text-to-speech system is one that reads text aloud through the computer's sound card or other speech synthesis device. Text that is selected for reading is analyzed by the software, restructured to a phonetic system, and read aloud. The computer looks at each word, calculates its pronunciation then says the word in its context (Cavanaugh, 2003).

How can speech synthesis help your students?

Speech synthesis has a wide range of components that can aid in the reading process. It assists in word decoding for improved reading comprehension (Montali & Lewandowski, 1996). The software gives voice to difficult words with which students struggle by reading either scanned-in documents or imported files (such as eBooks). In word processing, it will read back students' typed text for them to hear what they have written and then make revisions. The software provides a range in options for student control such as tone, pitch, speed of speech, and even gender of speaker. Highlighting features allow the student to highlight a word or passage as it is being read.

Who can benefit from speech synthesis?

According to O'Neill (1999), there are a wide range of users who may benefit from this software, including:

- Students with a reading, learning, and/or attention disorder

- Students who are struggling with reading

- Students who speak English as a second language

- Students with low vision or certain mobility problems

What are some speech synthesis programs?

eReader by CAST

The CAST eReader has the ability to read content from the Internet, word processing files, scanned-in text or typed-in text, and further enhances that text by adding spoken voice, visual highlighting, document navigation, page navigation, type and talk capabilities. eReader is available in both Macintosh and Windows versions.

40 Harvard Mills Square, Suite 3 Wakefield, MA 01880-3233 Tel: 781-245-2212 Fax: 781-245-5212 TTY: 781-245-9320 E-mail: [email protected]

ReadPlease 2003 This free software can be used as a simple word processor that reads what is typed.

ReadPlease ReadingBar ReadingBar (a toolbar for Internet Explorer) allows users to do much more than they were able to before: have web pages read aloud, create MP3 sound files, magnify web pages, make text-only versions of any web page, dictionary look-up, and even translate web pages to and from other languages. ReadingBar is not limited to reading and recording web pages - it is just as good at reading and recording text you see on your screen from any application. ReadingBar is often used to proofread documents and even to learn other languages.

ReadPlease Corporation 121 Cherry Ridge Road Thunder Bay, ON, Canada - P7G 1A7 Phone: 807-474-7702 Fax: 807-768-1285

Read & Write v.6 Software that provides both text reading and work processing support. Features include: speech, spell checking, homophones support, word prediction, dictionary, word wizard, and teacher's toolkit.

textHELP! Systems Ltd. Enkalon Business Centre, 25 Randalstown Road, Antrim Co. Antrim BT41 4LJ N. Ireland [email protected]

Kurweil 3000 Offers a variety of reading tools to assist students with reading difficulties. Tools include: dual highlighting, tools for decoding, study skills, and writing, test taking capabilities, web access and online books, human sounding speech, bilingual and foreign language benefits, and network access and monitoring.

Kurzweil Educational Systems, Inc. 14 Crosby Drive Bedford, MA 01730-1402 From the USA or Canada: 800-894-5374 From all other countries: 781-276-0600

Max's Sandbox In MaxWrite (the Word interface), students type and then hear "Petey" the parrot read their words. In addition, it is easy to add the student's voice to the document (if you have a microphone for your computer). It is a powerful tool for documenting student writing and reading and could even be used in creating a portfolio of student language skills. In addition, MaxWrite has more than 300 clip art images for students to use, or you can easily have students access your own collection of images (scans, digital photos, or clip art). Student work can be printed to the printer you designate and saved to the folder you determine (even network folders).

Publisher: eWord Development

Where can you find more information about speech synthesis?

Research Articles

MacArthur, Charles A. (1998). Word processing with

speech synthesis and word prediction: Effects on the

Descriptive Articles

Center for Applied Special Technology (CAST) Founded in 1984 as the Center for Applied Special Technology, CAST is a not-for-profit organization whose mission is to expand educational opportunities for individuals with disabilities through the development and innovative uses of technology. CAST advances Universal Design for Learning (UDL), producing innovative concepts, educational methods, and effective, inclusive learning technologies based on theoretical and applied research. To achieve this goal, CAST:

- Conducts applied research in UDL,

- Develops and releases products that expand opportunities for learning through UDL,

- Disseminates UDL concepts through public and professional channels.

LD Online LD OnLine is a collaboration between public broadcasting and the learning disabilities community. The site offers a wide range of articles and links to information on assistive technology such as speech synthesis.

What is Speech Synthesis? A Detailed Guide

Aug 24, 2022 13 mins read

Have you ever wondered how those little voice-enabled devices like Amazon’s Alexa or Google Home work? The answer is speech synthesis! Speech synthesis is the artificial production of human speech that sounds almost like a human voice and is more precise with pitch, speech, and tone. Automation and AI-based system designed for this purpose is called a text-to-speech synthesizer and can be implemented in software or hardware.

The people in the business are fully into audio technology to automate management tasks, internal business operations, and product promotions. The super quality and cheaper audio technology are taking everyone with awe and amazement. If you’re a product marketer or content strategist, you might be wondering how you can use text-to-speech synthesis to your advantage.

Speech Synthesis for Translations of Different Languages

One of the benefits of using text to speech in translation is that it can help improve translation accuracy . It is because the synthesized speech can be controlled more precisely than human speech, making it easier to produce an accurate rendition of the original text. It saves you ample time while saving you the labor of manual work that may have a chance of being error-prone. The speech synthesis translator does not need to spend time recording themselves speaking the translated text. It can be a significant time-saving for long or complex texts.

If you’re looking for a way to improve your translation work, consider using TTS synthesis software. It can help you produce more accurate translations and save you time in the process!

If you’re considering using a text-to-speech tool for translation work, there are a few things to keep in mind:

- Choosing a high-quality speech synthesizer is essential to avoid potential errors in the synthesis process.

- You’ll need to create a script for the synthesizer that includes all the necessary pronunciations for the words and phrases in the text.

- You’ll need to test the synthesized speech to ensure it sounds natural and intelligible.

Text to Speech Synthesis for Visually Impaired People

With speech synthesis, you can not only convert text into spoken words but also control how the words are spoken. This means you can change the pitch, speed, and tone of voice. TTS is used in many applications, websites, audio newspapers, and audio blogs .

They are great for helping people who are blind or have low vision or for people who want to listen to a book instead of reading it.

Text to Speech Synthesis for Video Content Creation

With speech synthesis, you can create engaging videos that sound natural and are easy to understand. Let’s face it; not everyone is a great speaker. But with speech synthesis, anyone can create videos that sound professional and are easy to follow.

All you need to do is type out your script. Then, the program will convert your text into spoken words . You can preview the audio to make sure it sounds like you want it to. Then, just record your video and add the audio file.

It’s that simple! With speech synthesis, anyone can create high-quality videos that sound great and are easy to understand. So if you’re looking for a way to take your YouTube channel, Instagram, or TikTok account to the next level, give speech-to-text tools a try! Boost your TikTok views with engaging audio content produced effortlessly through these innovative tools.

What Uses Does Speech Synthesis Have?

The text-to-speech tool has come a long way since its early days in the 1950s. It is now used in various applications, from helping those with speech impairments to creating realistic-sounding computer-generated characters in movies, video games, podcasts, and audio blogs.

Here are some of the most common uses for text-to-speech today:

1. Assistive Technology for Those with Speech Impairments

One of the most important uses of TTS is to help those with speech impairments. Various assistive technologies, including text-to-speech (TTS) software, communication aids, and mobile apps, use speech synthesis to convert text into speech.

People with a wide range of speech impairments, including those with dysarthria (a motor speech disorder), mutism (an inability to speak), and aphasia (a language disorder), use audio tools. Nonverbal people with difficulty speaking due to temporary conditions, such as laryngitis, use TTS software.

It includes screen readers read aloud text from websites and other digital documents. Moreover, it includes navigational aids that help people with visual impairments get around.

2. Helping People with Speech Impairments Communicate

People with difficulty speaking due to a stroke or other condition can also benefit from speech synthesis. This can be a lifesaver for people who have trouble speaking but still want to be able to communicate with loved ones. Several apps and devices use this technology to help people communicate.

3. Navigation and Voice Commands—Enhancing GPS Navigation with Spoken Directions

Navigation systems and voice-activated assistants like Siri and Google Assistant are prime examples of TTS software. They convert text-based directions into speech, making it easier for drivers to stay focused on the road. The voice assistants offer voice commands for various tasks, such as sending a text message or setting a reminder. This technology benefits people unfamiliar with an area or who have trouble reading maps.

4. Educational Materials

Speech synthesizers are great to help in preparing educational materials , such as audiobooks, audio blogs and language-learning materials. Some visual learners or those who prefer to listen to material rather than read it. Now educational content creators can create materials for those with reading impairments, such as dyslexia .

After the pandemic, and so many educational programs sent online, you must give your students audio learning material to hear it out on the go. For some people, listening to material helps them focus, understand and memorize things better instead of just reading it.

5. Text-to-Speech for Language Learning

Another great use for text-to-speech is for language learning. Hearing the words spoken aloud can be a lot easier to learn how to pronounce them and remember their meaning. Several apps and software programs use text-to-speech to help people learn new languages.

6. Audio Books

Another widespread use for speech synthesis is in audiobooks. It allows people to listen to books instead of reading them. It can be great for commuters or anyone who wants to be able to multitask while they consume content .

7. Accessibility Features in Electronic Devices

Many electronic devices, such as smartphones, tablets, and computers, now have built-in accessibility features that use speech synthesis. These features are helpful for people with visual impairments or other disabilities that make it difficult to use traditional interfaces. For example, Apple’s iPhone has a built-in screen reader called VoiceOver that uses TTS to speak the names of icons and other elements on the screen.

8. Entertainment Applications

Various entertainment applications, such as video games and movies, use speech synthesizers. In video games, they help create realistic-sounding character dialogue. In movies, adding special effects, such as when a character’s voice is artificially generated or altered. It allows developers to create unique voices for their characters without having to hire actors to provide the voices. It can save time and money and allow for more creative freedom.

These are just some of the many uses for speech synthesis today. As the technology continues to develop, we can expect to see even more innovative and exciting applications for this fascinating technology.

9. Making Videos More Engaging with Lip Sync

Lip sync is a speech synthesizer often used in videos and animations. It allows the audio to match the movement of the lips, making it appear as though the character is speaking the words. Hence, they are used for both educational and entertainment purposes.

Related: Text to Speech and Branding: How Voice Technology Enhance your Brand?

10. Generating Speech from Text in Real-Time

Several tools also use text-to-speech synthesis to generate speech from the text, like live captioning or real-time translation. Audio technology is becoming increasingly important as we move towards a more globalized world.

How to Choose and Integrate Speech Synthesis?

With the increasing use of speech synthesizer systems, choosing and integrating the right system for a particular application is necessary. This can be difficult as many factors to consider, such as price, quality, performance, accuracy, portability, and platform support. This article will discuss some important factors to consider when choosing and integrating a speech synthesizer system.

- The quality of a speech synthesizer means its similarity to the human voice and its ability to be understood clearly. Speech synthesis systems were first developed to aid the blind by providing a means of communicating with the outside world. The first systems were based on rule-based methods and simple concatenative synthesis . Over time, however, the quality of text-to-audio tools has improved dramatically. They are now used in various applications, including text-to-speech systems for the visually impaired, voice response systems for telephone services, children’s toys, and computer game characters.

- Another important factor to consider is the accuracy of the synthetic speech . The accuracy of synthetic speech means its ability to pronounce words and phrases correctly. Many text-to-audio tools use rule-based methods to generate synthetic speech, resulting in errors if the rules are not correctly applied. To avoid these errors, choosing a system that uses high-quality algorithms and has been tuned for the specific application is important.

- The performance of a speech synthesis system is another important factor to consider. The performance of synthetic speech means its ability to generate synthetic speech in real-time. Many TTS use pre-recorded speech units concatenated together to create synthetic speech. This can result in delays if the units are not properly aligned or if the system does not have enough resources to generate the synthetic speech in real-time. To avoid these delays, choosing a system that uses high-quality algorithms and has been tuned for the specific application is essential.

- The portability of a speech synthesis system is another essential factor to consider. The portability of synthetic speech means its ability to run on different platforms and devices. Many text-to-audio tools are designed for specific platforms and devices, limiting their portability. To avoid these limitations, choosing a system designed for portability and tested on different platforms and devices is important.

- The price of a speech synthesis system is another essential factor to consider. The price of synthetic speech is often judged by its quality and accuracy. Many text-to-audio tools are costly, so choosing a system that offers high quality and accuracy at a reasonable price is important.

The Bottom Line With technology

With the unstoppable revolution of technology, audio technology is about to bring the boom and multidimensional benefits for the people in business. You must use audio technology today to upgrade your game in the digital world.

Improve accessibility and drive user engagement with WebsiteVoice text-to-speech tool

Our solution, websitevoice.

Add voice to your website by using WebsiteVoice for free.

Share this post

Top articles.

Why Your Website Needs a Text-To-Speech Auto Reader?

9 Tips to Make a WordPress Website More Readable

11 Best WordPress Audio Player Plugins of 2022

10 Assistive Technology Tools to Help People with Disabilities in 2022 and Beyond

How to Make Your Website Accessible? Tips and Techniques for Website Creators

Most read from voice technology tutorials

22 apps for kids with reading issues.

Aug 10, 2021 18 mins read

What is an AI Audiobook Narration?

Jun 21, 2023 16 mins read

How AI Can Help in Creating Podcast?

Jan 18, 2024 13 mins read

We're a group of avid readers and podcast listeners who realized that sometimes it's difficult to read up on our favourite blogs, newsmedia and articles online when we're busy commuting, working, driving, doing chorse, and having our eyes and hands busy.

And so asked ourselves: wouldn't it be great if we can listen to these websites like a podcast, instead of reading? Thenext question also came up: how do people with learning disabilities and visual impairment are able to process information that are online in text?

Thus we created WebsiteVoice. The text-to-speech solution for bloggers and web content creators to allow their audience to tune in to theircontent for better user engagement, accessibility and growing more subscribers for their website.

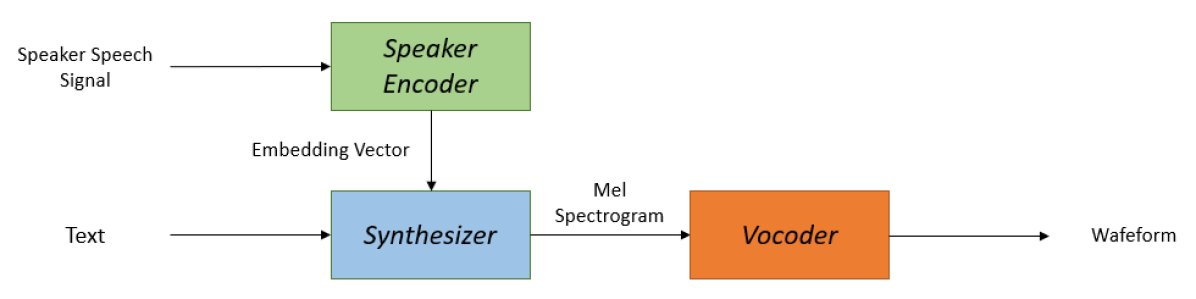

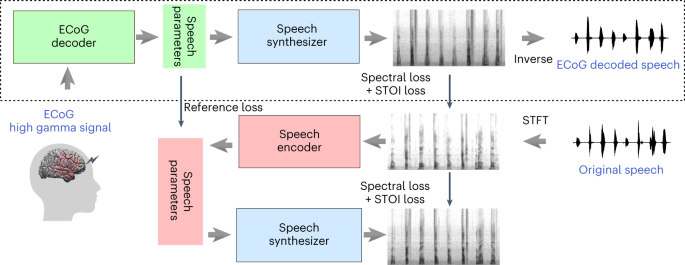

VOICE CLONING: A MULTI-SPEAKER TEXT-TO-SPEECH SYNTHESIS APPROACH BASED ON TRANSFER LEARNING

Deep learning models are becoming predominant in many fields of machine learning. Text-to-Speech (TTS), the process of synthesizing artificial speech from text, is no exception. To this end, a deep neural network is usually trained using a corpus of several hours of recorded speech from a single speaker. Trying to produce the voice of a speaker other than the one learned is expensive and requires large effort since it is necessary to record a new dataset and retrain the model. This is the main reason why the TTS models are usually single speaker. The proposed approach has the goal to overcome these limitations trying to obtain a system which is able to model a multi-speaker acoustic space. This allows the generation of speech audio similar to the voice of different target speakers, even if they were not observed during the training phase.

Index Terms — text-to-speech, deep learning, multi-speaker speech synthesis, speaker embedding, transfer learning

1 Introduction

Text-to-Speech (TTS) synthesis, the process of generating natural speech from text, remains a challenging task despite decades of investigation. Nowadays there are several TTS systems able to get impressive results in terms of synthesis of natural voices very close to human ones. Unfortunately, many of these systems learn to synthesize text only with a single voice. The goal of this work is to build a TTS system which can generate in a data efficient manner natural speech for a wide variety of speakers, not necessarily seen during the training phase. The activity that allows the creation of this type of models is called Voice Cloning and has many applications, such as restoring the ability to communicate naturally to users who have lost their voice or customizing digital assistants such as Siri.

Over time, there has been a significant interest in end-to-end TTS models trained directly from text-audio pairs; Tacotron 2 [ 1 ] used WaveNet [ 2 ] as a vocoder to invert spectrograms generated by sequence-to-sequence with attention [ 3 ] model architecture that encodes text and decodes spectrograms, obtaining a naturalness close to the human one. It only supported a single speaker. Gibiansky et al. [ 4 ] proposed a multi-speaker variation of Tacotron able to learn a low-dimensional speaker embedding for each training speaker. Deep Voice 3 [ 5 ] introduced a fully convolutional encoder-decoder architecture which supports thousands of speakers from LibriSpeech [ 6 ] . However, these systems only support synthesis of voices seen during training since they learn a fixed set of speaker embeddings. Voiceloop [ 7 ] proposed a novel architecture which can generate speech from voices unseen during training but requires tens of minutes of speech and transcripts of the target speaker. In recent extensions, only a few seconds of speech per speaker can be used to generate new speech in that speaker’s voice. Nachmani et al. [ 8 ] for example, extended Voiceloop to utilize a target speaker encoding network to predict speaker embedding directly from a spectrogram. This network is jointly trained with the synthesis network to ensure that embeddings predicted from utterances by the same speaker are closer than embeddings computed from different speakers. Jia et al. [ 9 ] proposed a speaker encoder model similar to [ 8 ] , except that they used a network independently-trained exploring transfer learning from a pre-trained speaker verification model towards the synthesis model.

This work is similar to [ 9 ] however introduces different architectures and uses a new transfer learning technique still based on a pre-trained speaker verification model but exploiting utterance embeddings rather than speaker embeddings. In addition, we use a different strategy to condition the speech synthesis with the voice of speakers not observed before and compared several neural architectures for the speaker encoder model. The paper is organized as follows: Section 2 describes the model architecture and its formal definition; Section 3 reports experiments and results done to evaluate the proposed solution; finally conclusions are reported in Section 4 .

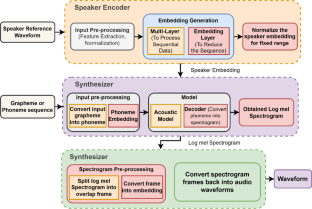

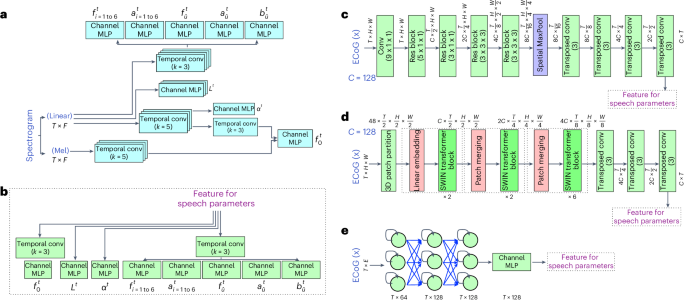

2 Model Architecture

Following [ 9 ] , the proposed system consists of three components: a speaker encoder , which computes a fixed-dimensional embedding vector from a few seconds of reference speech of a target speaker; a synthesizer , which predicts a mel spetrogram from an input text and an embedding vector; a neural vocoder , which infers time-domain waveforms from the mel spectrograms generated by the synthesizer. At inference time, the speaker encoder takes as input a short reference utterance of the target speaker and generates, according to its internal learned speaker characteristics space, an embedding vector. The synthesizer takes as input a phoneme (or grapheme) sequence and generates a mel spectrogram, conditioned by the speaker encoder embedding vector. Finally the vocoder takes the output of the synthesizer and generates the speech waveform. This is illustrated in Figure 1 .

2.1 Problem Definition

Consider a dataset of N speakers each of which has M utterances in the time-domain. Let’s denote the j-th utterance of the i-th speaker as u ij while the feature extraced from the j-th utterance of the i-th speaker as x ij ( 1 ≤ i ≤ N 1 𝑖 𝑁 1\leq i\leq N and 1 ≤ j ≤ M 1 𝑗 𝑀 1\leq j\leq M ). We chose as feature vector x ij the mel spectrogram.

The speaker encoder ℰ ℰ \mathcal{E} has the task to produce meaningful embedding vectors that should characterize the voices of the speakers. It computes the embedding vector e ij corresponding to the utterance u ij as:

where w E represents the encoder model parameters. Let’s define it utterance embedding . In addition to defining embedding at the utterance level, we can also define the speaker embedding :

In [ 9 ] , the synthesizer S 𝑆 S predicts x ij given c ij and t ij , the transcript of the utterance u ij :

where w S represents the synthesizer model parameters. In our approach, we propose to use the utterance embedding rather than the speaker embedding:

We will motivate this choice in Paragraph 2.4 .

Finally, the vocoder 𝒱 𝒱 \mathcal{V} generates u ij given x ^ i j subscript ^ x 𝑖 𝑗 \hat{\mathbf{\textbf{x}}}_{ij} . So we have:

where w V represents the vocoder model parameters.

This system could be trained in an end-to-end mode trying to optimize the following objective function:

where L V is a loss function in the time-domain. However, it requires to train the three models using the same dataset, moreover, the convergence of the combined model could be hard to reach. To overcome this drawback, the synthesizer can be trained independently to directly predict the mel spectrogram x ij of a target utterance u ij trying to optimize the following objective function:

where L S is a loss function in the time-frequency domain. It is necessary to have a pre-trained speaker encoder model available to compute the utterance embedding e ij .

The vocoder can be trained either directly on the mel spectrograms predicted by the synthesizer or on the groundtruth mel spectrograms:

where L V is a loss function in the time-domain. In the second case, a pre-trained synthesizer model is needed.

If the definition of the objective function was quite simple for both the synthesizer and the vocoder, unfortunately this is not the case for the speaker encoder. The encoder does not have labels to be trained on because its task is only to create the space of characteristics necessary to create the embedding vectors. The Generalized End-to-End (GE2E) [ 10 ] loss brings a solution to this problem and it allows the training of the speaker encoder independently. Consequently, we can define the following objective function:

where S represents a similarity matrix and L G is the GE2E loss function.

2.2 Speaker Encoder

The speaker encoder must be able to produce an embedding vector that meaningfully represents speaker characteristics in the transformed space starting from a target speaker’s utterance. Furthermore, the model should identify these characteristics using a short speech signal, regardless of its phonetic content and background noise. This can be achieved by training a neural network model on a text-independent speaker verification task that tries to optimize the GE2E loss so that embeddings of utterances from the same speaker have high cosine similarity, while those of utterances from different speakers are far apart in the embedding space.

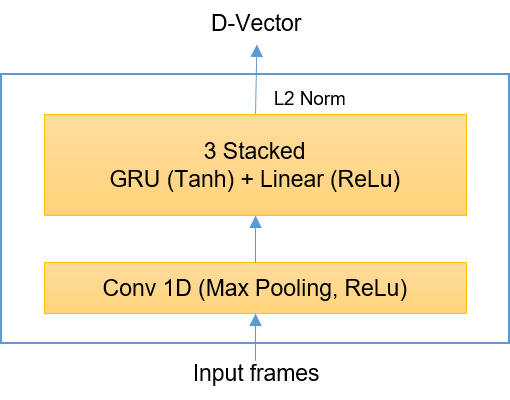

The network maps a sequence of mel spectrogram frames to a fixed-dimensional embedding vector, known as d-vector [ 11 , 12 ] . Input mel spectrograms are fed to a network consisting of one Conv1D [ 13 ] layer of 512 units followed by a stack of 3 GRU [ 14 ] layers of 512 units, each followed by a linear projection of 256 dimension. Following [ 9 ] , the final embedding dimension is 256 and it is created by L2-normalizing the output of the top layer at the final frame. This is shown in Figure 2 . We noticed that this architecture was the best among the various tried and tested, as we will see in Section 3 .

During the training phase, all the utterances are split into partial utterances that are 1.6 seconds long (160 frames). Also at inference time, the input utterance is split into segments of 1.6 seconds with 50% overlap and the model processes each segment individually. Following [ 9 , 10 ] , the final utterance-wise d-vector is generated by L2 normalizing the window-wise d-vectors and taking the element-wise average.

2.3 Synthesizer and Vocoder

The synthesizer component of the system is a sequence-to-sequence model with attention [ 1 , 3 ] which is trained on pairs of text derived token sequences and audio derived mel spectrogram sequences. Furthermore, the network is trained in a transfer learning configuration (see Paragraph 2.4 ), using an independently-trained speaker encoder to extract embedding vectors useful to condition the outcomes of this component. In view of reproducibility, the adopted vocoder component of the system is a Pytorch github implementation 1 1 1 https://github.com/fatchord/WaveRNN of the neural vocoder WaveRNN [ 15 ] . This model is not directly conditioned on the output of the speaker encoder but just on the input mel spectrogram. The multi-speaker vocoder is simply trained by using data from many speakers (see Section 3 ).

2.4 Transfer Learning Modality

The conditioning of the synthesizer via speaker encoder is the fundamental part that makes the system multi-speaker: the embedding vectors computed by the speaker encoder allow the conditioning of the mel spectrograms generated by the synthesizer so that they can incorporate the new speaker voice. In [ 9 ] , the embedding vectors are speaker embeddings obtained by Equation 2 . We used the utterance embeddings computed by Equation 1 . In fact, at inference time only one utterance of the target speaker is fed to the speaker encoder which therefore produces a single utterance-level d-vector. Thus, in this case, it is not possible to create an embedding at the speaker level since the average operation cannot be applied. This implies that only utterance embeddings can be used during the inference phase. In addition, an average mechanism could cause some loss in terms of accuracy. This is due to larger variations in pitch and voice quality often occurring in utterances of the same speaker while utterances have lower intra-variation. Following [ 9 ] , the embedding vectors computed by the speaker encoder are concatenated only with the synthesizer encoder output in order to condition the synthesis. However, we experimented with a new concatenation technique: first we passed the embedding through a single linear layer and then we applied the concatenation between the output of this layer and the synthesizer encoder one. The goal was to exploit the weights of the linear layer to make the embedding vector more meaningful, since the layer was trained together with the synthesizer. We noticed that this method achieved good convergence of training and was about 75% times faster than the former vector concatenation.

3 Experiments and Results

We used different publicly available datasets to train and evaluate the components of the system. For the speaker encoder, different neural network architectures were tested. Each of them was trained using a combination of three public sets: LibriTTS [ 16 ] train-other and dev-other; VoxCeleb [ 17 ] dev and VoxCeleb2 [ 18 ] dev. In this way, we obtained a number of speakers equal to 8,381 and a number of utterances equal to 1,419,192, not necessarily all clean and noiseless. Furthermore, transcripts were not required. The models were trained using Adam [ 19 ] as optimizer with an initial learning rate equal to 0.001. Moreover, we experimented with different learning rate decay strategies.

During the evaluation phase, we used a combination of the corresponding test sets of the training ones, obtaining a number of speakers equal to 191 and a number of utterances equal to 45,132. Both training and test sets have been sampled at 16 kHz and input mel spectrograms were computed from 25ms STFT analysis windows with a 10ms step and passed through a 40-channel mel-scale filterbank.

We separately trained the synthesizer and the vocoder using the same training set given by the combination of the two “clean” sets of LibriTTS, obtaining a number of speakers equal to 1,151, a number of utterances equal to 149,736 and a total number of hours equal to 245,14 of 22.05 kHz audio. We trained the synthesizer using the L1 loss [ 20 ] and Adam as optimizer. Moreover, the input texts were converted into phoneme sequences and target mel spectrogram features are computed on 50 ms signal windows, shifted by 12.5 ms and passed through an 80-channel mel-scale filterbank. The vocoder was trained using groundtruth waveforms rather than the synthesizer outputs.

3.1 Baseline System

We choose as baseline for our work the Corentin Jemine’s real-time voice cloning system [ 21 ] , a public re-implementation of the Google system [ 9 ] available on github 2 2 2 https://github.com/CorentinJ/Real-Time-Voice-Cloning . This system is composed out of three components: a recurrent speaker encoder consisting of 3 LSTM [ 22 ] layers and a final linear layer, each of which has 256 units; a sequence-to-sequence with attention synthesizer based on [ 1 ] and WaveRNN [ 15 ] as vocoder.

3.2 Speaker Encoder: Proposed System

To evaluate all the speaker encoder models and choose the best one, the Speaker Verification Equal Error Rate (SV-EER) was estimated by pairing each test utterance with each enrollment speaker. The models implemented are:

rec_conv network : 5 Conv1D layers, 1 GRU layer and a final linear layer;

rec_conv_2 network : 3 Conv1D layers, 2 GRU layers each followed by a linear projection layer;

gru network : 3 GRU layers each followed by a linear projection layer;

advanced_gru network : 1 Conv1D layer and 3 GRU layers each followed by a linear projection layer (Figure 2 );

lstm network : 1 Conv1D layer and 3 LSTM [ 22 ] layers each followed by a linear projection layer.

All layers have 512 units except the linear ones which have 256. Moreover, dropout rate of 0.2 was used after all the layers except before the first and after the last. All the models were trained using a batch size of 64 speakers and 10 utterances for each speaker. The results obtained are shown in Table 1 .

Name Step Time Train Loss SV-EER LR Decay rec_conv 0.33s 0.36 0.073 Reduce on Plateau rec_conv_2 0.45s 0.49 0.075 Reduce on Plateau gru 1,45s 0.33 0.054 Every 100,000 step advanced_gru 0.86s 0.14 0.040 Exponential lstm 1.08s 0.17 0.052 Exponential

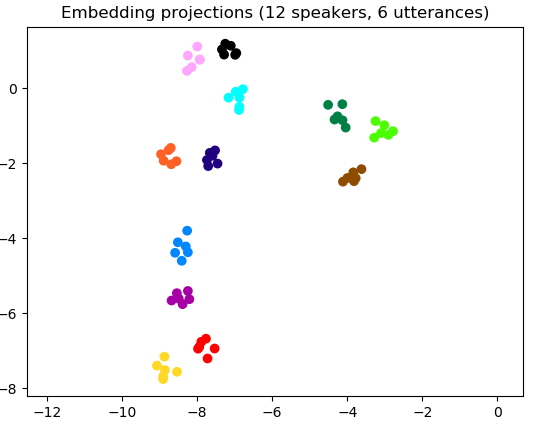

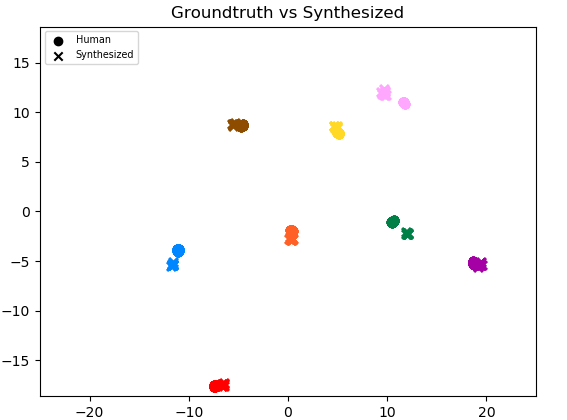

We designed the advanced gru network trying to combine the advantages of convolutional and gru networks. In fact, looking at the table, this architecture was much faster than the gru network during training, and obtained the best SV-EER on the test set. Figure 3 illustrates the projection in a two-dimensional space of the utterance embeddings computed by the advanced gru network on the basis of 6 utterances extracted from 12 speakers of the test set. In Figure 4 , the 12 speakers are 6 men and 6 women. The projections were made using UMAP [ 23 ] . Both the figures show that the model has created a space of internal features that is robust regarding the speakers, creating well-formed clusters of speakers based on their utterances and nicely separating male speakers from female ones.

The SV-EER obtained on the test set from the speaker encoder model of the proposed system is 0.040 vs the baseline one which is 0.049.

3.3 Similarity Evaluation

To assess how similar the waveforms generated by the system were from the original ones, we transformed the audio signals produced into utterance embeddings (using the speaker encoder advanced gru network) and then projected them in a two-dimensional space together with the utterance embeddings computed on the basis of the groundtruth audio. As test speakers, we randomly choose eight target speakers: four speakers (two male and two female) were extracted from the test-set-clean of LibriTTS [ 16 ] , three (two male and one female) from VCTK [ 24 ] and finally a female proprietary voice. For each speaker we randomly extracted 10 utterances and compared them with the utterances generated by the system calculating the cosine similarity. The speakers averaged values of cosine similarity between the generated and groundtruth utterance embeddings range from 0.56 to 0.76. Figure 5 shows that synthesized utterances tend to lie close to real speech from the same speaker in the embedding space.

3.4 Subjective Evaluation

Finally, we evaluated how the generated utterances were, subjectively speaking, similar in terms of speech timbre to the original ones. To do this, we gathered Mean Similarity Scores (MSS) based on a 5 points mean opinion score scale, where 1 stands for “very different” and 5 for “very similar”. Ten utterances of the proprietary female voice were cloned using both the proposed and the baseline system and then 12 subjects, most of them TTS experts, were asked to listen to the 20 samples, randomly mixed, and rate them. Participants were also provided with an original utterance as reference. The question asked was: “How do you rate the similarity of these samples with respect to the reference audio? Try to focus on vocal timbre and not on content, intonation or acoustic quality of the audio”. The results obtained are shown in Table 2 . Although not conclusive, this experiment highlights a subjective evidence of the goodness of the proposed approach, despite the significant variance of both systems: this is largely due to the low number of test participants.

System MSS baseline 2.59 ± 1.03 plus-or-minus 2.59 1.03 2.59\pm 1.03 proposed 3.17 ± 0.97 plus-or-minus 3.17 0.97 3.17\pm 0.97

4 Conclusions

In this work, our goal was to build a Voice Cloning system which could generate natural speech for a variety of target speakers in a data efficient manner. Our system combines an independently trained speaker encoder network with a sequence-to-sequence with attention architecture and a neural vocoder model. Using a transfer learning technique from a speaker-discriminative encoder model based on utterance embeddings rather than speaker embeddings, the synthesizer and the vocoder are able to generate good quality speech also for speakers not observed before. Despite the experiments showed a reasonable similarity with real speech and improvements over the baseline, the proposed system does not fully reach human-level naturalness in contrast to the single speaker results from [ 1 ] . Additionally, the system is not able to reproduce the speaker prosody of the target audio. These are consequences of the additional difficulty of generating speech for a variety of speakers given significantly less data per speaker unlike when training a model on a single speaker.

5 Acknowledgements

The authors thank Roberto Esposito, Corentin Jemine, Quan Wang, Ignacio Lopez Moreno, Skjalg Lepsøy, Alessandro Garbo and Jürgen Van de Walle for their helpful discussions and feedback.

- [1] J. Shen, R. Pang, R. J. Weiss, M. Schuster, N. Jaitly, Z. Yang, Z. Chen, Y. Zhang, Y. Wang, R. Skerrv-Ryan, R. A. Saurous, Y. Agiomvrgiannakis, and Y. Wu, “Natural tts synthesis by conditioning wavenet on mel spectrogram predictions,” in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) , 2018, pp. 4779–4783.

- [2] Aäron van den Oord, Sander Dieleman, Heiga Zen, Karen Simonyan, Oriol Vinyals, Alex Graves, Nal Kalchbrenner, Andrew W. Senior, and Koray Kavukcuoglu, “Wavenet: A generative model for raw audio,” CoRR , vol. abs/1609.03499, 2016.

- [3] Dzmitry Bahdanau, Kyunghyun Cho, and Yoshua Bengio, “Neural machine translation by jointly learning to align and translate,” CoRR , vol. abs/1409.0473, 2015.

- [4] Andrew Gibiansky, Sercan Arik, Gregory Diamos, John Miller, Kainan Peng, Wei Ping, Jonathan Raiman, and Yanqi Zhou, “Deep voice 2: Multi-speaker neural text-to-speech,” in Advances in Neural Information Processing Systems 30 , I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, Eds., pp. 2962–2970. Curran Associates, Inc., 2017.

- [5] Wei Ping, Kainan Peng, Andrew Gibiansky, Sercan O. Arik, Ajay Kannan, Sharan Narang, Jonathan Raiman, and John Miller, “Deep voice 3: 2000-speaker neural text-to-speech,” in International Conference on Learning Representations , 2018.

- [6] V. Panayotov, G. Chen, D. Povey, and S. Khudanpur, “Librispeech: An asr corpus based on public domain audio books,” in 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) , 2015, pp. 5206–5210.