The Science of People at Work

Workplace Psychology

How AI Writing Tools Are Helping Students Fake Their Homework

Creativity could be on the way out

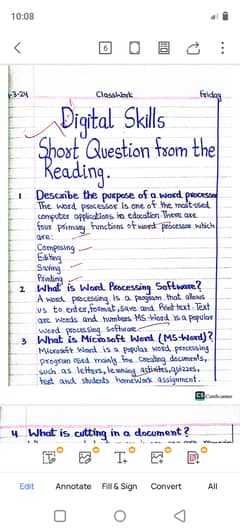

- The increasing use of AI writing tools could help students cheat.

- Teachers say software that helps generate text can be used to fake homework assignments.

- One teacher says content from programs that rewrite or paraphrase content sticks out like a "sore thumb" at the middle school level.

Cavan Images / Getty Images

Getting good grades in school may soon be about artificial intelligence (AI) as much as hard work.

Online software tools that help students write essays using AI have become so effective that some teachers worry the new technology is replacing creativity during homework assignments. Students are increasingly turning to these programs that can write entire paragraphs or essays with just a few prompts, often leaving teachers none the wiser.

"As far as I can tell, it is currently not that easy to detect AI writing," Vincent Conitzer, a professor of computer science at Carnegie Mellon University, told Lifewire in an email interview. "These systems do not straightforwardly plagiarize existing text that can then be found. I am also not aware of any features of the writing that obviously signal that it came from AI."

Homework Helpers

The use of AI writing tools by students is on the rise, anecdotes suggest. Conitzer said he’s heard one philosophy professor say he would shift away from the use of essays in his classes due to concern over AI-generated reports.

Tools based on Large Language Models (LLMs), such as GPT-3/X, have seen tremendous improvement over the last few years, Robert Weißgraeber, the managing director of AX Semantics, an AI-powered, natural language generation (NLG) software company, said in an email interview. Users enter a short phrase or paragraph, and the tool extends that phrase or section into a lengthy article.

Don't expect LLMs to replace real authors anytime soon, though, Weißgraeber said. GPT-3/X tools are just "stochastic parrots" that produce perfect-sounding text, "however when looked at in detail, they produce defects called 'hallucinations,' which means they are outputting things that cannot be deduced from the arguments built into the input, data, or the text itself. The perfect syntax and word choices can dazzle the reader, but when looked at closely, they actually produce semantic and pragmatic gibberish."

Catching AI Cheaters

AI-assisted writing programs are now so effective that it's hard to catch cheaters, experts say. Other than making students write in a supervised setting, perhaps the best way for teachers to avoid the use of AI writing is to come up with unusual topics that require common sense to write about, Conitzer said.

"For example, I just had GPT-3 write the beginning of two essays," he added. "The first was about whether free speech should sometimes be restricted to keep people safe, a generic essay topic about which you can find all kinds of writing online, and GPT-3 produced sensible text listing the pros and cons.

"The second was about what a teenager who was accidentally transported to the year 1000 but still has her phone in her pocket should do with her phone. GPT-3 recommended using it to call her friends and family and do research about the year 1000."

Erin Beers, a middle school language arts teacher in the Cincinnati area, told Lifewire in an email interview that content from programs that rewrite or paraphrase content sticks out like a "sore thumb" at the middle school level.

"I can usually spot fraudulent activity due to a student's use of complex sentence structure and an abundance of adjectives," Beers said. "Most 7th-grade writers simply don't write at that level."

Beers said she's against students using most AI writing programs, saying, "Anything that attempts to replicate creativity is likely limiting a writer's growth."

Krit of Studio OMG / Getty Images

Weißgraeber recommends teachers not be fooled by smooth-looking prose that may have been generated by AI. "Look at the argumentation chains," he added. "Are all statements grounded in correlating facts and data that are also listed?"

However, despairing teachers take note. There's at least one upside to students using AI tools, Conitzer contends.

"In principle, students could learn quite a few things from AI writing," he said. "It often produces clear and well-structured prose that could serve as a good example, though the style is usually generic. Students could also learn more about AI from it, including how it sometimes still fails miserably at commonsense reasoning and how it reflects the human writing it was trained on."

Ask Me Another

Real or Fake College Essay

Which is tougher, writing a college admissions essay or guessing which college admissions essay prompts are real? Ask Me Another is playing this game because two hosts and three producers are soon going to be out of work and looking for something to do. Maybe... grad school!?

Heard on The Penultimate Puzzles

How to Buy More Time on an Overdue Assignment

Last Updated: March 28, 2024

This article was co-authored by Alexander Ruiz, M.Ed. Alexander Ruiz is an Educational Consultant and the Educational Director of Link Educational Institute, a tutoring business based in Claremont, California that provides customizable educational plans, subject and test prep tutoring, and college application consulting. With over a decade and a half of experience in the education industry, Alexander coaches students to increase their self-awareness and emotional intelligence while building skills and working toward higher education. He holds a BA in Psychology from Florida International University and an MA in Education from Georgia Southern University. This article has been viewed 265,538 times.

Deadlines sneak up fast. If you’re short on time, you can always request an extension from your professor—your request may be based on real or fictionalized reasons. Alternatively, you could submit a corrupted file (a file your professor can’t open) and make the extension appear like an unintentional, happy accident.

Asking Your Teacher for an Extension

- If you're in college or graduate school, drop by your professor’s office hours.

- If you're in high school or middle school, ask to speak to your teacher after class or set up a time to meet with them.

- If you're making up an excuse, your professor might be able to see right through your lie. It might be better to skip the face-to-face meeting and email them instead. [1]

- If you are struggling with depression and/or anxiety, don’t just say “I am overwhelmed.” Instead, explain how your mental health is affecting your ability to complete the assignment. “I’ve been struggling with depression ever since midterms. I’ve learned that when I feel depressed, I have a very hard time focusing on my assignments. It has been very difficult for me to sit down and complete the paper.”

- “Due to my financial situation, I had to start working this semester. My work schedule and class schedule are very demanding. I am struggling to manage both.”

- "My parents are both working overtime right now. I have been watching my little siblings for them. I am having a hard time balancing school and my responsibilities at home."

- "I am training for a big competition. My practices are going way longer than expected and by the time I get home I am too exhausted to do my work." [2]

- “May I have the weekend to complete the assignment?”

- “Can I have three days to finish my paper?” [3]

- If they say “yes,” thank them profusely and work hard to meet your new deadline.

- If they say “no,” thank them for their time and start working on the assignment as soon as you can.

- If your teacher says “yes” but attaches a grade penalty, accept the grade penalty, thank them for the extension, and work diligently to meet your new deadline. [4]

Finding an Excuse

- If you have to print out your paper, experiencing “printer problems” may grant you a few extra hours to work on the assignment.

- If you typically store all of your work on a USB drive, tell your teacher the thumb drive was stolen or misplaced. They may give you a few days to search for the missing drive. [5]

- “I am taking the MCAT next month and have been studying for the test non-stop. As a result, the assignment for your class fell off my radar. May I have a few days to complete it?”

- “I am taking the SAT on Saturday and I really need to study for my Latin subject test. Can I have a few more days on my project?”

- “I have three papers due at the same time. I am struggling to devote attention to each assignment. May I please have an extension so I can produce a paper I am proud of?” [6]

- Be prepared for your professor to ask for proof or to look into your situation. [7]

Turning In a Corrupted File

- Professors and teachers are aware of this common trick. If you get caught, you may get a zero on the assignment and/or sent to the school's administrators. Before you consider this method, explore all of your other options and check your school's policies on the matter.

- You can copy and paste text from the internet, your rough draft, or even use an old paper.

- Name the document as your professor requested.

- Save the file to your desktop.

- Click Save.

- Navigate to Corrupt-A-File.net.

- Scroll down to “Select the file to corrupt” and select one of the following options: “From Your Device”, “From Dropbox”, or “From Google Drive”. If you saved the document on your desktop, click “From Your Device”.

- Locate the file and click Select or Open.

- Click Corrupt File. Once corrupted you will receive the following message: “Your file was dutifully corrupted”.

- Click on the download button (black, downward pointing arrow).

- Rename the document (if desired), change the location (if desired), and click Save.

- Right-click on the document’s icon, hover over “Open with” and select “Notepad”. A Notepad file will open. In addition to the filler text, you will see the document’s code (a jumble of letters, numbers, punctuation marks etc.).

- Delete a portion of the code. Do not delete it all!

- Press Ctrl + S and click Save. [8]

- Mac users will see a “Convert File” dialog box.

- Windows users will see the message “The document name or path is not valid”. [9]

- If your professor or teacher discovers you intentionally corrupted the file, you may get in serious trouble. Ask for an extension or simply submit what you have completed before you try this method. If you are doing online school, be sure to send an email explaining why the assignment wasn't turned in on time; you can even make up a lie about it. Tell them you were stressed and had too much work to do, so you forgot about it.

Community Q&A

- Your professor has the right to say “no” when you ask for an extension. Be prepared for this response.

Tips from our Readers

- Although students see lying as the best possible way to get an extension, it’s really not! Only lie as a last resort or when the teacher absolutely won’t offer an extension.

- Try not to lie to your professors if you can help it. You may be kicked out of school for violations of the academic honesty policy or have other consequences.

- Do not submit several corrupted files to the same professor. They will catch on.

References

- ↑ http://www.complex.com/pop-culture/2013/09/how-to-get-an-extenstion-on-a-paper/ask-in-person

- ↑ http://www.complex.com/pop-culture/2013/09/how-to-get-an-extenstion-on-a-paper/plan-ahead

- ↑ http://www.complex.com/pop-culture/2013/09/how-to-get-an-extenstion-on-a-paper/dont-ask-for-a-long-extension

- ↑ http://www.ivoryresearch.com/how-to-get-an-assignment-essay-coursework-extension/

- ↑ https://www.youtube.com/watch?v=EgC-_9ZE5WA

About This Article

If your assignment is overdue, you may be able to buy more time by asking for an extension. Talk to your teacher as soon as you can and go after class or during break when they’ll have time to listen to you. Explain specifically why you’ve fallen behind and ask if it’s possible to get an extension. For example, if you’ve been struggling with depression, you’ve had to work a job to help support your family, or you’ve had technical problems, your teacher might offer you some extra time to finish your assignment. Try not to take it personally if they say no, since the decision might be out of your teacher’s hands, and it might be unfair to other students. For more tips, including how to make a corrupted file to buy you time on your assignment, read on.

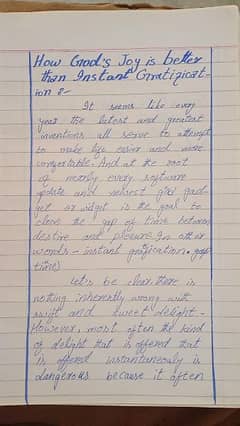

My fake homework

Quiz by Kathy Stanley

- Q1 (30 s): What is the name of Natalie's dog? Options: Burrito; Dude; Rufus; Hannah

- Q2 (30 s): Solve the following equation: 2x + 3 = 11. Options: x = 4; x = 2; x = 5; x = 3

- Q3 (30 s): Which property is this: x + (y + z) = (x + y) + z? Options: Identity property of addition; Commutative property of addition; Associative property of addition; Distributive property

Arizona alleged ‘fake electors’ who backed Trump in 2020 indicted by grand jury

While former President Trump wasn't named, he was described in the indictment as an unindicted co-conspirator.

Eleven Republicans have been indicted by a grand jury in Arizona and charged with conspiracy, fraud and forgery for falsely claiming that former President Trump had won the state in 2020 over then-Democratic nominee Joe Biden.

"I will not allow American democracy to be undermined," Arizona Attorney General Kris Mayes said in a Wednesday video announcing the indictments over the "fake elector scheme."

She added, "The investigators and attorneys assigned to this case took the time to thoroughly piece together the details of the events that began nearly four years ago. They followed the facts where they led, and I’m very proud of the work they’ve done today."

She added that the co-conspirators were "unwilling to accept" that Arizonans voted for President Biden in an election that was "free and fair" and "schemed to prevent the lawful transfer of the presidency."

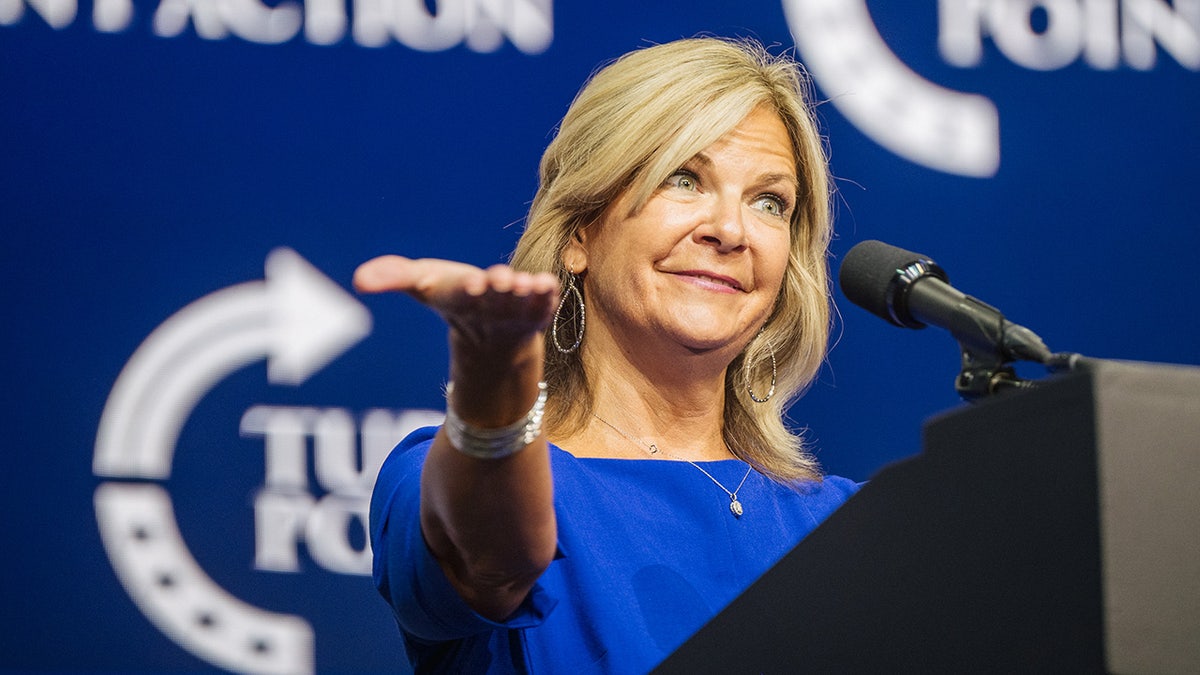

Former Arizona Republican Party Chairwoman Kelli Ward was among those charged Wednesday as a "fake elector" for Trump. (Brandon Bell/Getty Images)

The defendants include former chair of the Arizona Republican Party Kelli Ward, sitting state Sens. Jake Hoffman and Anthony Kern and an unindicted co-conspirator described as "a former president of the United States who spread false claims of election fraud following the 2020 election," a clear reference to Trump.

Former President Donald Trump was listed - without being named - as an unindicted co-conspirator. (Probe-Media for Fox News Digital)

In December 2020, the defendants wrote on a certificate sent to Congress that they were "duly elected and qualified" electors for Trump, claiming he had won the state.

Seven others were indicted but had their names redacted, pending charges being served.

Arizona State Sen. Anthony Kern was among the 11 alleged "fake electors" charged. (Rebecca Noble/Getty Images)

Some outlets reported that former White House chief of staff Mark Meadows and Rudy Giuliani were also unindicted co-conspirators along with Trump.

George Terwilliger, a lawyer representing Meadows, told Fox News he had not yet seen the indictment.

"If Mr. Meadows is named in this indictment, it is a blatantly political and politicized accusation and will be contested and defeated," he said.

Alleged "fake electors" have also been charged in Georgia, Michigan and Nevada.

Determinants of individuals’ belief in fake news: A scoping review

Kirill Bryanov

Laboratory for Social and Cognitive Informatics, National Research University Higher School of Economics, St. Petersburg, Russia

Victoria Vziatysheva

Associated data

All relevant data are available within the paper. Search protocol is described in the text, and Table 3 contains information about all studies included in the review.

Proliferation of misinformation in digital news environments can harm society in a number of ways, but its dangers are most acute when citizens believe that false news is factually accurate. A recent wave of empirical research focuses on factors that explain why people fall for the so-called fake news. In this scoping review, we summarize the results of experimental studies that test different predictors of individuals’ belief in misinformation.

The review is based on a synthetic analysis of 26 scholarly articles. The authors developed and applied a search protocol to two academic databases, Scopus and Web of Science. The sample included experimental studies that test factors influencing users’ ability to recognize fake news, their likelihood to trust it or intention to engage with such content. Relying on scoping review methodology, the authors then collated and summarized the available evidence.

The study identifies three broad groups of factors contributing to individuals’ belief in fake news. Firstly, message characteristics—such as belief consistency and presentation cues—can drive people’s belief in misinformation. Secondly, susceptibility to fake news can be determined by individual factors including people’s cognitive styles, predispositions, and differences in news and information literacy. Finally, accuracy-promoting interventions such as warnings or nudges priming individuals to think about information veracity can impact judgements about fake news credibility. Evidence suggests that inoculation-type interventions can be both scalable and effective. We note that study results could be partly driven by design choices such as selection of stimuli and outcome measurement.

Conclusions

We call for expanding the scope and diversifying designs of empirical investigations of people’s susceptibility to false information online. We recommend examining digital platforms beyond Facebook, using more diverse formats of stimulus material and adding a comparative angle to fake news research.

Introduction

Deception is not a new phenomenon in mass communication: people had been exposed to political propaganda, strategic misinformation, and rumors long before much of public communication migrated to digital spaces [ 1 ]. In the information ecosystem centered around social media, however, digital deception took on renewed urgency, with the 2016 U.S. presidential election marking the tipping point where the gravity of the issue became a widespread concern [ 2 , 3 ]. A growing body of work documents the detrimental effects of online misinformation on political discourse and people’s societally significant attitudes and beliefs. Exposure to false information has been linked to outcomes such as diminished trust in mainstream media [ 4 ], fostering the feelings of inefficacy, alienation, and cynicism toward political candidates [ 5 ], as well as creating false memories of fabricated policy-relevant events [ 6 ] and anchoring individuals’ perceptions of unfamiliar topics [ 7 ].

According to some estimates, the spread of politically charged digital deception in the buildup to and following the 2016 election became a mass phenomenon: for example, Allcott and Gentzkow [ 1 ] estimated that the average US adult could have read and remembered at least one fake news article in the months around the election (but see Allen et al. [ 8 ] for an opposing claim regarding the scale of the fake news issue). Scholarly reflections upon this new reality sparked a wave of research concerned with a specific brand of false information, labelled fake news and most commonly conceptualized as non-factual messages resembling legitimate news content and created with an intention to deceive [ 3 , 9 ]. One research avenue that has seen a major uptick in the volume of published work is concerned with uncovering the factors driving people’s ability to discern fake from legitimate news. Indeed, in order for deceitful messages to exert the hypothesized societal effects—such as catalyzing political polarization [ 10 ], distorting public opinion [ 11 ], and promoting inaccurate beliefs [ 12 ]—the recipients have to believe that the claims these messages present are true [ 13 ]. Furthermore, research shows that the more people find false information encountered on social media credible, the more likely they are to amplify it by sharing [ 14 ]. The factors and mechanisms underlying individuals’ judgements of fake news’ accuracy and credibility thus become a central concern for both theory and practice.

While message credibility has been a longstanding matter of interest for scholars of communication [ 15 ], the post-2016 wave of scholarship can be viewed as distinct on account of its focus on particular news formats, contents, and mechanisms of spread that have been prevalent amid the recent fake news crisis [ 16 ]. Furthermore, unlike previous studies of message credibility, the recent work is increasingly taking a turn towards developing and testing potential solutions to the problem of digital misinformation, particularly in the form of interventions aimed at improving people’s accuracy judgements.

Some scholars argue that the recent rise of fake news is a manifestation of a broader ongoing epistemological shift, where significant numbers of online information consumers move away from the standards of evidence-based reasoning and pursuit of objective truth toward “alternative facts” and partisan simplism—a malaise often labelled as the state of “post-truth” [ 17 , 18 ]. Lewandowsky and colleagues identify large-scale trends such as declining social capital, rising economic inequality and political polarization, diminishing trust in science, and an increasingly fragmented media landscape as the processes underlying the shift toward the “post-truth.” In order to narrow the scope of this report, we specifically focus on the news media component of the larger “post-truth” puzzle. This leads us to consider only the studies that explore the effects of misinformation packaged in news-like formats, perforce leaving out investigations dealing with other forms of online deception–for example, messages coming from political figures and parties [ 19 ] or rumors [ 20 ].

The apparently vast amount and heterogeneity of recent empirical research output addressing the antecedents to people’s belief in fake news calls for integrative work summarizing and mapping the newly generated findings. We are aware of a single review article published to date synthesizing empirical findings on the factors of individuals’ susceptibility to believing fake news in political contexts, a narrative summary of a subset of relevant evidence [ 21 ]. In order to systematically survey the available literature in a way that permits both transparency and sufficient conceptual breadth, we employ a scoping review methodology, most commonly used in medical and public health research. This method prescribes specifying a research question, search strategy, and criteria for inclusion and exclusion, along with the general logic of charting and arranging the data, thus allowing for a transparent, replicable synthesis [ 22 ]. Because it is well-suited for identifying diverse subsets of evidence pertaining to a broad research question [ 23 ], scoping review methodology is particularly relevant to our study’s objectives. We begin our investigation with articulating the following research questions:

- RQ1: What factors have been found to predict individuals’ belief in fake news and their capacity to discern between false and real news?

- RQ2: What interventions have been found to reduce individuals’ belief in fake news and boost their capacity to discern between false and real news?

In the following sections, we specify our methodology and describe the findings using an inductively developed framework organized around groups of factors and dependent variables extracted from the data. Specifically, we approached the analysis without a preconceived categorization of the factors in mind. Following our assessment of the studies included in the sample, we divided them into three groups based on whether the antecedents of belief in fake news that they focus on 1) reside within the individual or 2) are related to the features of the message, source, or information environment or 3) represent interventions specifically designed to tackle the problem of online misinformation. We conclude with a discussion of the state of play in the research area under review, identify strengths and gaps in existing scholarship, and offer potential avenues for further advancing this body of knowledge.

Materials and methods

Our research pipeline has been developed in accordance with PRISMA guidelines for systematic scoping reviews [ 24 ] and contains the following steps: a) development of a review protocol; b) identification of the relevant studies; c) extraction and charting of the data from selected studies, elaboration of the emerging themes; d) collation and summarization of the results; e) assessment of the strengths and limitations of the body of literature, identification of potential paths for addressing the existing gaps and theory advancement.

Search strategy and protocol development

At the outset, we defined the target population of texts as English-language scholarly articles published in peer-reviewed journals between January 1, 2016 and November 1, 2020 and using experimental methodology to investigate the factors underlying individuals’ belief in false news. We selected this time frame with the intention to specifically capture the research output that emerged in response to the “post-truth” turn in the public and scholarly discourse that many observers link to the political events of 2016, most notably Donald Trump’s ascent to U.S. presidency [ 17 ]. Because we were primarily interested in causal evidence for the role of various antecedents to fake news credibility perceptions, we decided to focus on experimental studies. Our definition of experiment has been purposefully lax, since we acknowledged the possibility that not all relevant studies could employ rigorous experimental design with random assignment and a control group. For example, this would likely be the case for studies testing factors that are more easily measured than manipulated, such as individual psychological predispositions, as predictors of fake news susceptibility. We therefore included investigations where researchers varied at least one of the elements of news exposure: Either a hypothesized factor driving belief in fake news (both between or within subjects), or veracity of news used as a stimulus (within-subjects). Consequently, the studies included in our review presented both causal and correlational evidence.
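The eligibility criteria described above can be read as a simple screening predicate. The following is a minimal sketch in Python, not the authors' actual tooling: the `Record` fields, the `is_eligible` helper, and the choice to treat both date bounds as inclusive are assumptions made for illustration only.

```python
from dataclasses import dataclass
from datetime import date

# Publication window stated in the text; inclusive bounds are an assumption.
WINDOW_START = date(2016, 1, 1)
WINDOW_END = date(2020, 11, 1)


@dataclass
class Record:
    """Hypothetical bibliographic record; field names are illustrative."""
    title: str
    language: str
    peer_reviewed: bool
    published: date
    # True if the study varied a hypothesized factor or the veracity of the
    # news stimulus (the paper's deliberately lax notion of "experiment").
    varies_factor_or_veracity: bool


def is_eligible(record: Record) -> bool:
    """Return True if the record meets every stated inclusion criterion."""
    return (
        record.language == "English"
        and record.peer_reviewed
        and WINDOW_START <= record.published <= WINDOW_END
        and record.varies_factor_or_veracity
    )


if __name__ == "__main__":
    example = Record("Example study", "English", True, date(2019, 3, 15), True)
    print(is_eligible(example))
```

Encoding the criteria as one conjunction keeps the screening rule auditable: a record is excluded as soon as any single criterion fails.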

Upon the initial screening of relevant texts already known to the authors or discovered through cross-referencing, it became apparent that proposed remedies and interventions enhancing news accuracy judgements should also be included in the scope of the review. In many cases practical solutions are presented alongside fake news believability factors, while in several instances testing such interventions is the reports’ primary concern. We began by developing a string of search terms informed by the language found in the titles of the already known relevant studies [ 14 , 25 – 27 ], then enhanced it with plausible synonymous terms drawn from the online service Thesaurus.com. As the initial version of this report went into peer review, we received reviewer feedback suggesting that some of the relevant studies, particularly on the topic of inoculation-based interventions, were left out. We modified our search query accordingly, adding three further inoculation-related terms. The final query looked as follows:

- (belie* OR discern* OR identif* OR credib* OR evaluat* OR assess* OR rating OR rate OR suspic* OR "thinking" OR accura* OR recogn* OR susceptib* OR malleab* OR trust* OR resist* OR immun* or innocul*) AND (false* OR fake OR disinform* OR misinform*).
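As an illustration only, the title query above can be approximated offline with regular expressions, for example to re-screen an exported list of titles. The sketch below is not the procedure used in this review: the function names are hypothetical, and real database engines may treat wildcards, stemming, and quoted phrases differently from this simple translation (a trailing `*` becomes "any further word characters").

```python
import re

# Wildcard terms copied from the query in the text; everything else is illustrative.
BELIEF_TERMS = [
    "belie*", "discern*", "identif*", "credib*", "evaluat*", "assess*",
    "rating", "rate", "suspic*", "thinking", "accura*", "recogn*",
    "susceptib*", "malleab*", "trust*", "resist*", "immun*", "innocul*",
]
FALSITY_TERMS = ["false*", "fake", "disinform*", "misinform*"]


def compile_terms(terms):
    """Build one case-insensitive regex that matches any of the wildcard terms."""
    parts = []
    for term in terms:
        if term.endswith("*"):
            # Trailing wildcard: the stem followed by any word characters.
            parts.append(re.escape(term[:-1]) + r"\w*")
        else:
            # No wildcard: match the whole word only.
            parts.append(re.escape(term))
    return re.compile(r"\b(?:" + "|".join(parts) + r")\b", re.IGNORECASE)


BELIEF_RE = compile_terms(BELIEF_TERMS)
FALSITY_RE = compile_terms(FALSITY_TERMS)


def title_matches(title):
    """Return True if the title has at least one term from each group (the AND)."""
    return bool(BELIEF_RE.search(title)) and bool(FALSITY_RE.search(title))
```

A title such as "Who believes fake news?" satisfies both groups, whereas "Automated detection of fake news" matches only the second group and is not returned.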

Based on our understanding that the relevant studies should fall within the scope of such disciplines as media and communication studies, political science, psychology, cognitive science, and information sciences, we identified two citation databases, Scopus and Web of Science, as the target corpora of scholarly texts. Web of Science and Scopus are consistently ranked among leading academic databases providing citation indexing [ 28 , 29 ]. Norris and Oppenheim [ 30 ] argue that in terms of record processing quality and depth of coverage these databases provide valid instruments for evaluating scholarly contributions in social sciences. Another possible alternative is Google Scholar, which also provides citation indexing and is often considered the largest academic database [ 31 ]. Yet, according to some appraisals, this database lacks quality control [ 32 ], transparency, and can contribute to parts of relevant evidence being overlooked when used in systematic reviews [ 33 ]. Thus, for the purposes of this paper, we chose WoS and Scopus as sources of data.

Relevance screening and inclusion/exclusion criteria

Using title search, our queries resulted in 1622 and 1074 publications in Scopus and Web of Science, respectively. The study selection process is shown in Fig 1 .

We began the search with crude title screening performed by the authors (KB and VV) on each database independently. At this stage, we mainly excluded obviously irrelevant articles (e.g., research reports mentioning false-positive biochemical test results) and those whose titles unambiguously indicated that the item was outside of our original scope, such as work in the field of machine learning on automated fake news detection. Both authors’ results were then cross-checked, and disagreements were resolved. This stage narrowed our selection down to 109 potentially relevant Scopus articles and 76 WoS articles. Having removed duplicate items present in both databases, we arrived at a list of 117 unique articles retained for abstract review.
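The de-duplication step can be sketched as follows. This is a minimal illustration under the assumption that duplicates are matched on a normalized title; the text does not state which matching rule was applied, and the function names are hypothetical.

```python
def normalize(title):
    """Lowercase a title and keep only letters and digits."""
    return "".join(ch for ch in title.lower() if ch.isalnum())


def merge_unique(scopus_titles, wos_titles):
    """Combine both lists, keeping the first occurrence of each normalized title."""
    seen = set()
    unique = []
    for title in list(scopus_titles) + list(wos_titles):
        key = normalize(title)
        if key not in seen:
            seen.add(key)
            unique.append(title)
    return unique


def implied_overlap(n_first, n_second, n_unique):
    """Records present in both sources, by inclusion-exclusion."""
    return n_first + n_second - n_unique
```

If only cross-database duplicates were removed, the counts reported above (109, 76, and 117) imply that `implied_overlap(109, 76, 117)`, that is 68 articles, appeared in both databases.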

At the abstract screening stage, we excluded items that could be identified as utilizing non-experimental research designs. Furthermore, at this stage we determined that all articles that fit our intended scope include at least one of the following outcome variables: 1) perceived credibility, believability, or accuracy of false news messages, or 2) a measure of the capacity to discern false from authentic news. Screening potentially eligible abstracts suggested that studies not addressing one of these two outcomes do not answer the research questions at the center of our study. Seventy articles were thus removed, leaving us with 45 articles for full-text review.

The remaining articles were read in full by the authors independently, and disagreements on whether specific items fit the inclusion criteria were resolved, resulting in the final sample of 26 articles (see Table 1 for the full list of included studies). Since our primary focus is on perceptions of false media content and corresponding interventions designed to improve news delivery and consumption practices, we only included the experiments that utilized a news-like format of the stimulus material. As a result, we forwent investigations focusing on online rumors, individual politicians’ social media posts, and other stimuli that were not meant to represent content produced by a news organization. We did not limit the range of platforms where the news articles were presented to participants, since many studies simulated the processes of news selection and consumption in high-choice environments such as social media feeds. We then charted the evidence contained therein according to a categorization based on the outcome and independent variables that the included studies investigate.

* Note: In study design statements, all factors are between-subjects unless stated otherwise.

Outcome variables

Having arranged the available evidence along a number of ad-hoc dimensions, including the primary independent variables/correlates and focal outcome variables, we opted for a presentation strategy that opens with a classification of study dependent variables. Our analysis revealed that the body of scholarly literature under review is characterized by a significant heterogeneity of outcome variables. The concepts central to our synthesis are operationalized and measured in a variety of ways across studies, which presents a major hindrance to comparability of their results. In addition, in the absence of established terminology these variables are often labelled differently even when they represent similar constructs.

In addition to several variations of the dependent variables that we used as one of the inclusion criteria, we discovered a range of additional DVs relevant to the issue of online misinformation that the studies under review explored. The resulting classification is presented in Table 2 below.

Note: A single study could yield several observations if it considered multiple outcome variables.

As visible from Table 2 , the majority of studies in our sample measured the degree to which participants identified news messages or headlines as credible, believable or accurate. This strategy was utilized in experiments that both exposed individuals to made-up messages only, and those where stimulus material included a combination of real and fake items. Studies of the former type examined the effects of message characteristics or presentation cues on perceived credibility of misinformation, while the latter stimulus format also enabled scholars to examine the factors driving the accuracy of people’s identification of news as real or fake. In most instances, these synthetic “media truth discernment” scores were constructed post-hoc by matching participants’ credibility responses to the known “ground truth” of messages that they were asked to assess. These individual discernment scores could then be matched with the respondent’s or message’s features to infer the sources of systematic variation in the aggregate judgement accuracy.
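To make the post-hoc construction concrete, the sketch below computes two simple discernment measures from one participant's responses and the known ground truth. It assumes one common operationalization (mean rating of real items minus mean rating of fake items, plus a plain hit rate for binary judgements); individual studies in the sample may define their scores differently, and the function names are hypothetical.

```python
from statistics import mean


def discernment_score(ratings, is_real):
    """Mean credibility rating of real items minus mean rating of fake items."""
    real = [r for r, truth in zip(ratings, is_real) if truth]
    fake = [r for r, truth in zip(ratings, is_real) if not truth]
    if not real or not fake:
        raise ValueError("need at least one real and one fake item")
    return mean(real) - mean(fake)


def detection_accuracy(judged_real, is_real):
    """Share of items whose binary real/fake judgement matches the ground truth."""
    if len(judged_real) != len(is_real) or not is_real:
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(j == t for j, t in zip(judged_real, is_real))
    return hits / len(is_real)
```

A positive `discernment_score` means that the participant rated real items as more credible than fake ones; a value near zero means the two were rated alike.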

Looking at credibility perceptions of real and false news separately also enabled scholars to determine whether the effects of factors or interventions were symmetric for both message types. In a media environment where the overwhelming majority of news is real after all [ 27 ], it is essential to ensure both that fake news is dismissed, and high-quality content is trusted.

Another outcome that several studies in our sample investigated is the self-reported likelihood to share the message on social media. Given that social platforms like Facebook are widely believed to be responsible for the rapid spread of deceitful political content in recent years [ 2 ], the determinants of sharing behavior are central to developing effective measures for limiting the reach of fake news. Moreover, in at least one study [ 34 ] researchers explicitly used sharing intent as a proxy for a news accuracy judgement in order to estimate perceived accuracy without priming participants’ thinking about veracity of information. This approach appears promising given that this as well as other studies reported sizable correlations between perceived accuracy and sharing intent [ 35 – 37 ], yet it is obviously limited as a host of considerations beyond credibility can inform the decision to share a news item on social media.

Having extracted and classified the dependent variables in the reviewed studies, we proceed to mapping our observations against the factors and correlates that were theorized to exert effects on them (see Table 3 ).

Note: Only outcome variables with more than one observation are included in the table.

A single study could yield several observations if it considered multiple independent and/or outcome variables.

We observed that the experimental studies in our sample measure or manipulate three types of factors hypothesized to influence individuals’ belief in fake news. The first category encompasses variables related to the news message, the way it is presented, or the features of the information environment where exposure to information occurs. In other words, these tests seek to answer the question: What kinds of fake news are people more likely to fall for? The second category takes a different approach and examines respondents’ individual traits predictive of their susceptibility to disinformation. Put simply, these tests address the broad question of who falls for fake news. Finally, the effects of measures specifically designed to combat the spread of fake news constitute a qualitatively distinct group. Granted, this is a necessarily simplified categorization, as factors do not always easily lend themselves to inclusion in one of these baskets. For example, the effect of a pro-attitudinal message can be seen as a combination of a message-level feature (e.g., conservative-friendly wording of the headline) and an individual-level predisposition (recipient embracing politically conservative views). For presentation purposes, we base our narrative synthesis of the reviewed evidence on the following categorization: 1) Factors residing entirely outside of the individual recipient (message features, presentation cues, information environment); 2) Recipient’s individual features; 3) Interventions. For each category, we discuss theoretical frameworks that the authors employ and specific study designs.

A fundamental question at the core of many investigations that we reviewed is whether people are generally predisposed to believe fake news that they encounter online. Previous research suggests that individuals go about evaluating the veracity of falsehoods similarly to how they process true information [ 38 ]. Generally, most individuals tend to accept information that others communicate to them as accurate, provided that there are no salient markers suggesting otherwise [ 39 ].

Informed by these established notions, some of the authors whose work we reviewed expect to find the effects of “truth bias,” a tendency to accept all incoming claims at face value, including false ones. This, however, does not seem to be the case. No study under review reported the majority of respondents trusting most fake messages or perceiving false and real messages as equally credible. If anything, in some cases a “deception bias” emerges, where individuals’ credibility judgements are biased in the direction of rating both real and false news as fake. For example, Luo et al. [ 40 ] found that across two experiments where stimuli consisted of equal numbers of real and fake headlines participants were more likely to rate all headlines as fake, resulting in just 44.6% and 40% of headlines marked as real across two studies. Yet, it is possible that this effect is a product of the experimental setting where individuals are alerted to the possibility that some of the news is fake and prompted to scrutinize each message more thoroughly than they would while leisurely browsing their newsfeed at home.

The reviewed evidence of individuals’ overall credibility perceptions of fake news as compared to real news, as well as of people’s ability to tell one from another, is somewhat contradictory. Several studies that examined participants’ accuracy in discerning real from fake news report estimates that are either below or indistinguishable from random chance: Moravec et al. [ 41 ] report a mean detection rate of 43.9%, with only 17% of participants performing better than chance; in Luo et al. [ 40 ], detection accuracy is slightly better than chance (53.5%) in study 1 and statistically indistinguishable from chance in study 2 (49.2%). Encouragingly, the majority of other studies where respondents were exposed to both real and fake news items provide evidence suggesting that people’s average capacity to tell one from another is considerably greater than chance. In all studies reported in Pennycook and Rand [ 25 ], average perceived credibility of real headlines is above 2.5 on a four-point scale from 1 to 4, while average credibility of fake headlines is below 1.6. A similar distance, about one point on a four-point scale, marks the difference between real and fake news’ perceived credibility in experiments reported in Bronstein et al. [ 42 ]. In Bago et al. [ 43 ], participants rated less than 40% of fake headlines and more than 60% of real headlines as accurate. In Jones-Jang et al. [ 44 ], respondents correctly identified fake news in 6.35 attempts out of 10 on average.
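One way to judge whether a single participant's detection rate exceeds chance is a one-sided exact binomial calculation, sketched below. This is offered as an illustration under the assumption of binary real/fake judgements with a guessing rate of 0.5; the reviewed studies may have used other tests, and the function name is hypothetical.

```python
from math import comb


def p_at_least(k, n, p=0.5):
    """Exact probability of k or more correct answers out of n under guessing rate p."""
    if not 0 <= k <= n:
        raise ValueError("k must lie between 0 and n")
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))
```

For instance, `p_at_least(7, 10)` is 176/1024, or about 0.17, so seven correct answers out of ten would not by itself distinguish a participant from a guesser at conventional significance thresholds.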

Following the aggregate-level assessment, we proceed to describing three main groups of factors that researchers identify as sources of variation in perceived credibility of fake news.

Message-level and environmental factors

When apparent signs of authenticity or fakeness of a news item are not immediately available, individuals can rely on certain message characteristics when making a credibility judgement. Two major message-level factors stand out in this cluster of evidence as most frequently tested (see Table 3 ). The first is alignment of the message source, topic, or content with the respondent’s prior beliefs and ideological predispositions; the second is social endorsement cues. Theoretical expectations within this approach are largely shaped by dual-process models of learning and information processing [ 58 , 59 ] borrowed from the field of psychology and adapted for online information environments. These theories emphasize how people’s information processing can occur through either the more conscious, analytic route or the intuitive, heuristic route. The general assumption traceable in nearly every theoretical argument is that consumers of digital news routinely face information overload and have to resort to fast and economical heuristic modes of processing [ 60 ], which leads to reliance on cues embedded in messages or the way they are presented. For example, some studies that examine the influence of online social heuristics on evaluations of fake news’ credibility build on Sundar’s [ 61 ] concept of bandwagon cues, or indicators of collective endorsement of online content as a sign of its quality. More generally, these studies continue the line of research investigating how perceived social consensus on certain issues, gauged from online information environments, contributes to opinion formation (e.g., Lewandowsky et al. [ 62 ]).

Exploring the interaction between message topic and bandwagon heuristics on perceived credibility of fake news headlines, Luo et al. [ 40 ] find that a high number of likes associated with the post modestly increases (by 0.34 points on a 7-point scale) perceived credibility of both real and fake news compared to few likes. Notably, this effect is observed for health and science headlines, but not for political ones. In contrast, Kluck et al. [ 35 ] fail to find an effect of the numeric indicator of Facebook post endorsement on perceived credibility. This discrepancy could be explained by differences in the design of these two studies: whereas in Luo et al. participants were exposed to multiple headlines, both real and fake, Kluck et al. assessed perceived credibility of just one made-up news story. This may have led to the unique properties of this single news story contributing to the observed result. Kluck et al. further reveal that negative comments questioning the stimulus post’s authenticity do dampen both perceived credibility (by 0.21 standard deviations) and sharing intent. In a rare investigation of news evaluation on Instagram, Mena et al. [ 46 ] demonstrate that trusted endorsements by celebrities do increase credibility of a made-up non-political news post, while bandwagon endorsements do not. Again, this study relies on one fabricated news post as a stimulus. These discrepant results of social influence studies suggest that the likelihood of detecting such effects may be contingent on specific study design choices, particularly the format, veracity, and sampling of stimulus messages. Generalizability and comparability of the results generated in experiments that use only one message as a stimulus should be enhanced by replications that employ stimulus sampling techniques [ 63 ].

Following one of the most influential paradigms in political communication research (the motivated reasoning account, which postulates that people are more likely to pursue, consume, endorse and otherwise favor information that matches their preexisting beliefs or comes from an ideologically aligned source), most studies in our sample measure the ideological or political concordance of the experimental messages and most commonly use it in statistical models as a covariate or hypothesized moderator. Where they are reported, the pattern of direct effects of ideological concordance largely conforms to expectations, as people tend to rate congenial messages as more credible. In Bago et al. [ 43 ], headline political concordance increased the likelihood of participants rating it as accurate (b = 0.21), which was still meager compared to the positive effect of the headline’s actual veracity (b = 1.56). In Kim, Moravec and Dennis [ 50 ], headline political concordance was a significant predictor of believability (b = 0.585 in study 1; b = 0.153 in study 2), but the magnitude of this effect was surpassed by that of low source ratings by experts (b = −0.784 in study 1; b = −0.365 in study 2). In turn, increased believability heightened the reported intent to read, like, and share the story. In the same study, both expert and user ratings of the source displayed alongside the message influenced its perceived believability in both directions. According to the results of the study by Kim and Dennis [ 14 ], increased relevance and pro-attitudinal directionality of the statement contained in the headline predicted increased believability and sharing intent. Similarly, Moravec et al. [ 41 ] argued that the confirmatory nature of the headline is the single most powerful predictor of belief in false but not true news headlines. Tsang [ 55 ] found sizable effects of the respondents’ stance on the Hong Kong extradition bill on perceived fakeness of a news story covering the topic, in line with the motivated reasoning mechanism.

At the same time, the expectation that individuals will use the ideological leaning of the source as a credibility cue when faced with ambiguous messages lacking other credibility indicators was not supported by data. Relying on the data collected from almost 4000 Amazon Mechanical Turk workers, Clayton et al. [ 45 ] failed to detect the hypothesized influence of motivated reasoning, induced by the right or left-leaning mainstream news source label, on belief in a false statement presented in a news report.

Several studies tested the effects of factors beyond social endorsement and directional cues. Schaewitz et al. [ 13 ] looked at the effects of such message characteristics as source credibility, content inconsistencies, subjectivity, sensationalism, and the presence of manipulated images on message and source credibility appraisals, and found no association between these factors and focal outcome variables—against the background of the significant influence of personal-level factors such as the need for cognition. As already mentioned, Luo et al. [ 40 ] found that fake news detection accuracy can also vary by the topic, with respondents recording the highest accuracy rates in the context of political news—a finding that could be explained by users’ greater familiarity and knowledge of politics compared to science and health.

One study under review investigated the possibility that news credibility perceptions can be influenced not by the features of specific messages, but by characteristics of a broader information environment, for example, the prevalence of certain types of discourse. Testing the effects of exposure to the widespread elite rhetoric about “fake news,” van Duyn and Collier [ 26 ] discovered evidence that it can dampen believability of all news, damaging people’s ability to identify legitimate content in addition to reducing general media trust. These effects were sizable, with primed participants ascribing real articles on average 0.47 credibility points less, on a 3-point scale, than those who had not been exposed to politicians’ tweets about fake news.

As this brief overview demonstrates, the message-level approaches to fake news susceptibility consider a patchwork of diverse factors, whose effects may vary depending on the measurement instruments, context, and operationalization of independent and outcome variables. Compared to studies of individual-level factors, scholars espousing this paradigm tend to rely on more diverse experimental stimuli. In addition to headlines, they often employ story leads and full news reports, while the stimulus news stories cover a broader range of topics than just politics. At the same time, out of ten studies attributed to this category, five used either one or two variations of a single stimulus news post. This constitutes an apparent limitation to the generalizability of their findings. To generate evidence generalizable beyond specific messages and topics, future studies in this domain should rely on more diverse sets of stimuli.

Individual-level factors

This strain of research recognizes the differences in people’s individual cognitive styles, predispositions, and conditions as the main source of variation in fake news credibility judgements. Theoretically, these studies also largely rely on dual-process approaches to human cognition [ 64 , 65 ]. Scholars embracing this approach explain some people’s tendency to fall for fake news by their reliance, either innate or momentary, on less analytical and more reflexive modes of thinking [ 37 , 42 ]. Generally, they tend to ascribe fake news susceptibility to lack of reasoning rather than to directionally motivated reasoning.

Pennycook and Rand [ 25 ] employ the established measure of analytical thinking, the Cognitive Reflection Test, to demonstrate that respondents who are more prone to override intuitive thinking with further reflection are also better at discerning false from real news. This effect holds regardless of whether the headlines are ideologically concordant or discordant with individuals’ views. Importantly, the authors also find that headline plausibility (understood as the extent to which it contains a statement that sounds outrageous or patently false to an average person) moderates the observed effect, suggesting that more analytical individuals can use extreme implausibility as a cue indicating news’ fakeness.

In a 2020 study [ 37 ], Pennycook and Rand replicated the relationship between CRT and fake news discernment, in addition to testing novel measures—pseudo-profound bullshit receptivity (the tendency to ascribe profound meaning to randomly generated phrases) and a tendency to overclaim one’s level of knowledge—as potential correlates of respondents’ likelihood to accept claims contained in false headlines. Pearson’s r ranged from 0.30 to 0.39 in study 1 and from 0.20 to 0.26 in study 2 (all significant at p<0.001 in both studies), indicating modestly sized yet significant correlations. All three measures were correlated with perceived accuracy of fake news headlines as well as with each other, based on which the authors speculated that these measures are all connected to a common underlying trait that manifests as the propensity to uncritically accept various claims of low epistemic value. The researchers labelled this trait reflexive open-mindedness , as opposed to reflective open-mindedness observed in more analytical individuals. In a similar vein, Bronstein et al. [ 42 ] added cognitive tendencies such as delusion-like ideation, dogmatism, and religious fundamentalism to the list of individual-level traits weakly associated with heightened belief in fake news, while analytical and open-minded thinking slightly decreased this belief.

Schaewitz et al. [ 13 ] linked the classic concept from credibility research, need for cognition, to the tendency to rate down credibility (in some models but not others) and accuracy of non-political fake news. This concept overlaps with analytical thinking from Pennycook and Rand’s experiments, yet it is distinct in that it captures the self-reported pleasure from (and not just the proneness to) performing cognitively effortful tasks.

Much like the studies reviewed above, experiments by Martel et al. [ 48 ] and Bago et al. [ 43 ] challenged the motivated reasoning argument as applied to fake news detection, focusing instead on the classical reasoning explanation: the more analytic the reasoning, the higher the likelihood to accurately detect false headlines. In contrast to the above accounts, both studies investigate the momentary conditions, rather than stable cognitive features, as sources of variation in fake news detection accuracy. In Martel et al. [ 48 ], increased emotionality (as both the current mental state at the time of task completion and the induced mode of information processing) was strongly associated with the increased belief in fake news, with induced emotional processing resulting in a 10% increase in believability of false headlines. Fernández-López and Perea [ 49 ] reached similar conclusions about the role of emotion drawing on a sample of Spanish residents.

Bago et al. [ 43 ] relied on the two-response approach to test the effects of the increased time for deliberation on perceived accuracy of real and false headlines. Compared to the first response, given under time constraints and additional cognitive load, the final response to the same news items for which participants had no time limit and no additional cognitive task indicated significantly lower perceived accuracy of fake (but not real) headlines, both ideologically concordant and discordant. The effect of heightened deliberation (b = 0.36) was larger than the effect of headline political concordance (b = -0.21). These findings lend additional support to the argument that decision conditions favoring more measured, analytical modes of cognitive processing are also more likely to yield higher rates of fake news discernment.

Pennycook et al. [ 47 ] provide evidence supporting the existence of the illusory truth effect (the increased likelihood to view already seen statements as true, regardless of their actual veracity) in the context of fake news. In their experiments, a single exposure to either a fake or real news headline slightly yet consistently (by 0.09 or 0.11 points on a 4-point scale) increased the likelihood to rate it as true on the second encounter, regardless of political concordance, and this effect persisted even after a week.

It is not always how individuals process messages, but how competent they are about the information environment, that affects their ability to resist misinformation. Amazeen and Bucy [ 57 ] introduce a measure of procedural news knowledge (PNK), or working knowledge of how news media organizations operate, as a predictor of the ability to identify fake news and other online messages that can be viewed as deliberately deceptive (such as native advertising). In their analysis, a one standard deviation decrease in PNK increased perceived accuracy of fabricated news headlines by 0.19 standard deviations. Interestingly, Jones-Jang et al. [ 44 ] find a significant correlation between information literacy (but not media and news literacies) and identification of fake news stories.

Taken together, the evidence reviewed in this section provides robust support to the idea that analytic processing is associated with more accurate discernment of fake news. Yet, it has to be noted that the generalizability of these findings could be constrained by the stimulus selection strategy that many of these studies share. All experiments reviewed above, excluding Schaewitz et al. [ 13 ] and Fernández-López and Perea [ 49 ], rely on stimulus material constructed from equal shares of real mainstream news headlines and actual fake news headlines sourced from fact-checking websites like Snopes.com. As these statements are intensely political and often blatantly untrue, the sheer implausibility of some of the headlines can offer a “fakeness” cue easily picked up by more analytical (or simply politically knowledgeable) individuals, a proposition tested by Pennycook and Rand [ 25 ]. While these stimuli preserve the authenticity of the information environment around the 2016 U.S. presidential election, it is unclear what these findings can tell us about the reasons behind people’s belief in fake news that are less egregiously “fake” and therefore do not carry a conspicuous mark of falsehood.

Accuracy-promoting interventions

The normative foundation of much of the research investigating the reasons behind people’s vulnerability to misinformation is the need to develop measures limiting its negative effects on individuals and society. Two major approaches to countering fake news and its negative effects can be distinguished in the literature under review. The first approach, often labelled inoculation, is aimed at preemptively alerting individuals to the dangers of online deception and equipping them with the tools to combat it [ 44 , 56 ]. The second manifests in tackling specific questionable news stories or sources by labelling them in a way that triggers increased scrutiny by information consumers [ 51 , 54 ]. The key difference between the two is that inoculation-based strategies are designed to work preemptively, while labels and flags are most commonly presented to information consumers alongside the message itself.

Some of the most promising inoculation interventions are those designed to enhance various aspects of media and information literacy. Recent studies demonstrated that preventive techniques—like exposing people to anti-conspiracy arguments [ 66 ] or explaining deception strategies [ 67 ]—can help neutralize harmful effects of misinformation before the exposure. Grounded in the idea that the lack of adequate knowledge and skills among news consumers makes people less critical and, thus, more susceptible to fake news [ 68 ], such measures aim at making online deception-related considerations salient in the minds of large swaths of users, as well as at equipping them with basic techniques that help spot false news.

In a cross-national study that involved respondents from the United States and India, Guess et al. [ 52 ] find that exposing users to a set of simple guidelines for detecting misinformation modelled after similar Facebook guidelines (e.g., “Be skeptical of headlines,” “Watch for unusual formatting”) improves fake news discernment rate by 26% in the U.S. sample and by 19% in the Indian sample, regardless of whether the headlines are politically concordant or discordant. These effects persist several weeks post-exposure. Interestingly, it might be that the effect is caused not so much by participants heeding the instructions as by simply priming them to think about accuracy. When testing the effects of accuracy priming in the context of COVID-19 misinformation, Pennycook et al. [ 34 ] reveal that inattention to accuracy considerations is rampant: people asked whether they would share false stories appear to rarely consider their veracity unless prompted to do so. Yet, asking them to rate the accuracy of a single unrelated headline before going into the task dramatically improved accuracy and reduced the likelihood to share false stories: the difference in sharing likelihood of true relative to false headlines was 2.8 times higher in the treatment group than in the control group.

On a more general note, the latter finding could suggest that the results of all experiments that include false news discernment tasks could be biased in the direction of more accuracy simply by virtue of priming participants to think about news’ veracity, compared to their usual state of mind when browsing online news. Lutzke et al. [ 36 ] reach similar results when they prime critical thinking in the context of climate change news, resulting in diminished trust and sharing intentions for falsehoods even among climate change doubters.

A study by Roozenbeek and van der Linden [ 56 ] demonstrated the capacity of a scalable inoculation intervention in the format of a choice-based online game to confer resistance against several common misinformation strategies. Over an average of 15 minutes of gameplay, users were tasked with choosing the most efficient ways of misinforming the audience in a series of hypothetical scenarios. Post-gameplay credibility scores of fake news items embedded in the game were significantly lower than pre-test scores in a one-way repeated measures analysis: F(5, 13559) = 980.65, Wilks’ Λ = 0.73, p < 0.001, η² = 0.27. These findings were replicated in a between-subjects design with a control group in Basol et al. [ 69 ], although this study was not included in our sample based on formal criteria.
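The three statistics reported for the game study are not independent, so they can be checked against one another. The sketch below assumes the test is a single-root multivariate test, in which case the F statistic is an exact function of Wilks' Λ, F = ((1 − Λ) / Λ) · (df_error / df_effect), and the multivariate effect size is η² = 1 − Λ. That assumption and the function name are ours, not something stated in the source.

```python
def wilks_lambda_from_f(f_stat: float, df_effect: int, df_error: int) -> float:
    """Invert F = ((1 - L) / L) * (df_error / df_effect) to recover Wilks' lambda.

    Valid only under the single-root assumption stated in the lead-in.
    """
    return 1.0 / (1.0 + f_stat * df_effect / df_error)


# Values as reported in the text: F(5, 13559) = 980.65
lam = wilks_lambda_from_f(980.65, df_effect=5, df_error=13559)
eta_sq = 1.0 - lam  # multivariate eta squared when there is a single root

print(round(lam, 2), round(eta_sq, 2))  # prints: 0.73 0.27
```

Under that assumption the recovered values match the reported Λ = 0.73 and η² = 0.27 after rounding, so the figures in the paragraph above are internally consistent.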

Fact-checking is arguably the most publicly visible type of real-world measure used to combat online misinformation. Studies in our sample present mixed evidence of the effectiveness of fact-checking interventions in reducing the credibility of misinformation. Using different formats of fact-checking warnings before exposing participants to a set of verifiably fake news stories, Morris et al. [ 53 ] demonstrated that the effects of such measures can be limited and contingent on respondents’ ideology (liberals tend to be more responsive to fact-checking warnings than conservatives). Encouragingly, Clayton et al. [ 51 ] found that labels indicating that a particular false story has been either disputed or rated false do decrease belief in that story, regardless of partisanship. The “Disputed” tag placed next to the story headline decreased believability by 10%, while the “Rated false” tag decreased it by 13%. At the same time, in line with van Duyn and Collier [ 26 ], they showed that general warnings that are not specific to particular messages are less effective and can reduce belief in real news. Finally, Garrett and Poulsen [ 54 ], comparing the effects of three types of Facebook flags (fact-checking warning; peer warning; humorous label), found that only self-identification of the source as humorous reduces both belief and sharing intent. The discrepant conclusions that these three studies reach are unsurprising given differences in the format and meaning of the warnings that they test.

In sum, findings in this section suggest that general warnings and the non-specific rhetoric of “fake news” should be employed with caution so as to avoid outcomes opposite to those desired. Recent advances in scholarship on the backfire effect of misinformation corrections have called into question the empirical soundness of this phenomenon [ 70 , 71 ]. However, multiple earlier studies across several issue contexts have documented specific instances where attitude-challenging corrections were linked to compounding misperceptions rather than rectifying them [ 72 , 73 ]. Designers of accuracy-promoting interventions should at least be aware of the possibility that such effects could follow.

Overall, while the evidence of the effects of labelling and flagging specific social media messages and sources remains inconclusive, it appears that priming users to think of online news’ accuracy is a scalable and cheap way to improve the rates of fake news detection. Gamified inoculation strategies also hold potential to reach mass audiences while preemptively familiarizing users with the threat of online deception.

We have applied a scoping review methodology to map the existing evidence of the effects of various antecedents on people’s belief in false news, predominantly in the context of social media. The research landscape presents a complex picture, suggesting that the focal phenomenon is driven by the interplay of cognitive, psychological and environmental factors, as well as characteristics of a specific message.

Overall, the evidence under review speaks to the fact that people on average are not entirely gullible, and they can detect deceitful messages reasonably well. While there has been no evidence to support the notion of “truth bias,” i.e., people’s propensity to accept most incoming messages as true, the results of some studies in our sample suggested that under certain conditions the opposite—a scenario that can be labelled “deception bias”—can be at work. This is consistent with some recent theoretical and empirical accounts suggesting that a large share of online information consumers today approach news content with skepticism [ 74 , 75 ]. In this regard, the problem with fake news could be not only that people fall for it, but also that it erodes trust in legitimate news.

At the same time, given the scarcity of attention and cognitive resources, individuals often rely on simple rules of thumb to make efficient credibility judgements. Depending on many contextual variables, such heuristics can be triggered by bandwagon and celebrity endorsements, topic relevance, or presentation format. In many cases, messages’ concordance with prior beliefs remains a predictor of increased credibility perceptions.

There is also consistent evidence supporting the notion that certain cognitive styles and predilections are associated with the ability to discern real from fake headlines. The overarching concept of reflexive open-mindedness captures an array of related constructs that are predictive of the propensity to accept claims of questionable epistemological value, a category of which fake news is representative. Yet, while many of the studies focusing on individual-level factors demonstrate that the effects of cognitive styles and mental states are robust across both politically concordant and discordant headlines, the overall effects of belief consistency remain powerful. For example, in Pennycook and Rand [ 25 ] politically concordant items were rated as significantly more accurate than politically discordant items overall (this analysis was used as a manipulation check). This suggests that individuals may not necessarily be engaging in motivated reasoning, yet may still use belief consistency as a credibility cue.

The line of research concerned with accuracy-improving interventions reveals the limited effectiveness of general warnings and Facebook-style tags. Available evidence suggests that simple inoculation interventions embedded in news interfaces to prime critical thinking, together with exposure to news literacy guidelines, can induce more reliable improvements while avoiding normatively undesirable effects.

Conclusions and future research

The review highlighted a number of blind spots in the existing experimental research on fake news perceptions. Since this literature has to a large extent emerged as a response to particular societal developments, the scope of investigations and study design choices bear many contextual similarities. The sample is heavily skewed toward the U.S. news and news consumers, with the majority of studies using a limited set of politically charged falsehoods for stimulus material. While this approach enhances external validity of studies, it also limits the universe of experimental fake news to a rather narrow subset of this sprawling genre. Future studies should transcend the boundaries of the “fake news canon” and look beyond Snopes and Politifact for stimulus material in order to investigate the effects of already established factors on perceived credibility of misinformation that is not political or has not yet been debunked by major fact-checking organizations.

Similarly, the overwhelming majority of experiments under review seek to replicate the environment where many information consumers encountered fake news during and after the misinformation crisis of 2016, to which end they present stimulus news items in the format of Facebook posts. As a result, there is currently a paucity of studies looking at all other rapidly emerging venues for political speech and fake news propagation: Instagram, messenger services like WhatsApp, and video platforms like YouTube and TikTok.

The comparative aspect of fake news perceptions, too, is conspicuously understudied. The only truly comparative study in our sample [ 52 ] uncovered meaningful differences in effect sizes and decay time between U.S. and Indian samples. More comparative research is needed to specify whether the determinants of fake news credibility are robust across various national political and media systems.

Two methodological concerns also stand out. Firstly, a dominant approach to constructing experimental stimuli rests on the assumption that the bulk of news consumption on social media occurs on the level of headline exposure, i.e., users process news and make sharing decisions based largely on news headlines. While there are strong reasons to believe that this is true for some news consumers, others might engage with news content more thoroughly, which can yield effects that differ from those observed on the headline level. Future studies could benefit from accounting for this potential divergence. For example, researchers can borrow the logic of Arceneaux and Johnson [ 76 ] and introduce an element of choice, thus enabling comparisons between those who only skim headlines and those who prefer to click on articles to read.

Finally, the results of most existing fake news studies could be systematically biased by the mere presence of a credibility assessment task. As Kim and Dennis [ 14 ] argue, browsing social media feeds is normally associated with a hedonic mindset, which is less conducive to critical assessment of information compared to a utilitarian mindset. This is corroborated by Pennycook et al. [ 34 ] who show that people who are not primed to think about accuracy are significantly more likely to share false news. A small credibility rating task produces massive accuracy improvement, underscoring the difference that a simple priming intervention can make. Asking respondents to rate credibility of treatment news items could work similarly, thus distorting the estimates compared to respondents’ “real” accuracy rates. In this light, future research should incorporate indirect measures of perceived fake and real news accuracy that could measure the focal construct without priming respondents to think about credibility and veracity of information.

Limitations

The necessary conceptual and temporal boundaries that constitute the framework of this review can also be viewed as its limitation. By focusing on a specific type of online misinformation (fake news), we intentionally excluded other variations of deceitful messages that can be influential in the public sphere, such as rumors, hoaxes, conspiracy theories, etc. This focus on the relatively recent species of misinformation led us to apply specific criteria to the stimulus material, as well as to limit the search to the period beginning in 2016. Since belief in both fake news and adjacent genres of misinformation could be driven by the same mechanisms, focusing on just fake news could result in leaving out some potentially relevant evidence.

Another limitation is related to our methodological criteria. We selected studies to review based on the experimental design. Yet, the evidence of how people interact with misinformation may also be generated from questionnaires, behavioral data analysis, or qualitative inquiry. For example, recent non-experimental studies reveal certain demographic characteristics, political attitudes or media use habits associated with increased susceptibility to fake news [ 77 , 78 ]. Finally, our focus on articles published in peer-reviewed scholarly journals means that potentially relevant evidence that appeared in formats more oriented toward practitioners and policymakers could be overlooked. Future systematic reviews can present a more comprehensive view of the research area by expanding their focus beyond the exclusively “news-like” online misinformation formats, relaxing methodological criteria, and diversifying the range of data sources.

Funding Statement