
How to Write a Great Hypothesis

Hypothesis Definition, Format, Examples, and Tips

Kendra Cherry, MS, is a psychosocial rehabilitation specialist, psychology educator, and author of the "Everything Psychology Book."


Amy Morin, LCSW, is a psychotherapist and international bestselling author. Her books, including "13 Things Mentally Strong People Don't Do," have been translated into more than 40 languages. Her TEDx talk, "The Secret of Becoming Mentally Strong," is one of the most viewed talks of all time.


Verywell / Alex Dos Diaz

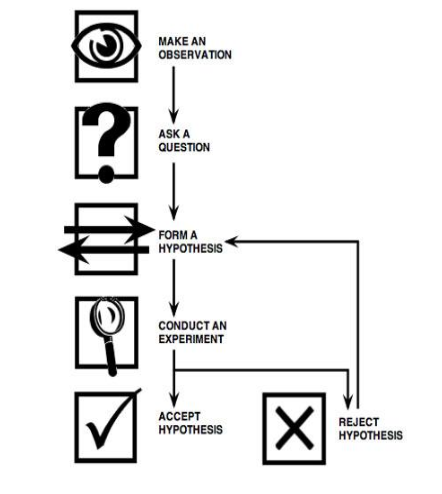


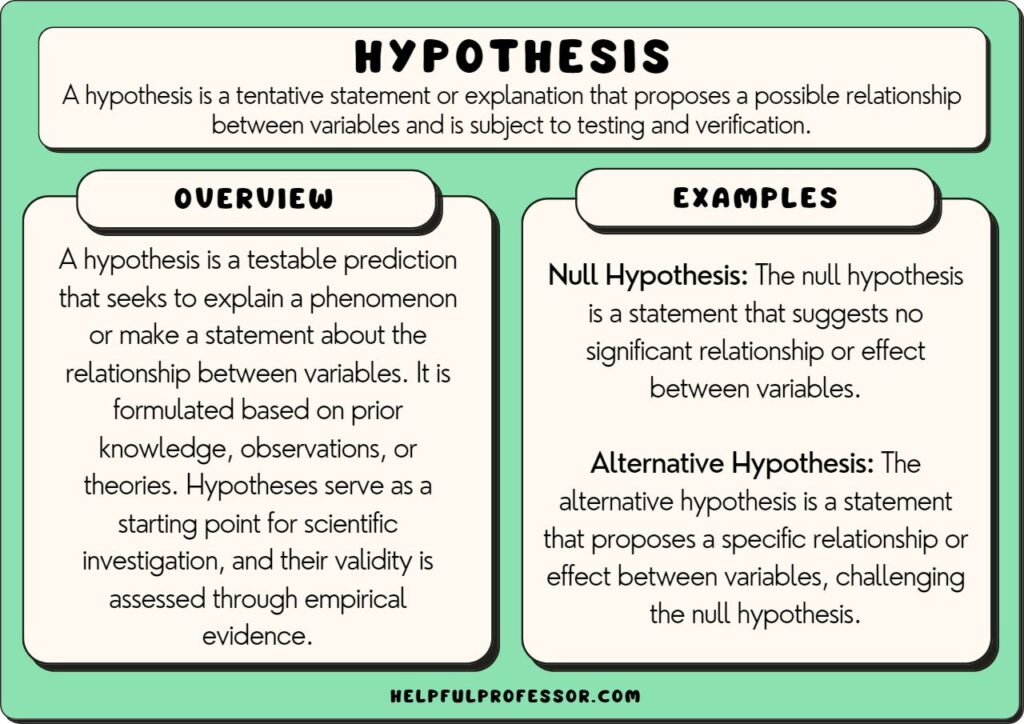

A hypothesis is a tentative statement about the relationship between two or more variables. It is a specific, testable prediction about what you expect to happen in a study. It is a preliminary answer to your question that helps guide the research process.

Consider a study designed to examine the relationship between sleep deprivation and test performance. The hypothesis might be: "This study is designed to assess the hypothesis that sleep-deprived people will perform worse on a test than individuals who are not sleep-deprived."

At a Glance

A hypothesis is crucial to scientific research because it offers a clear direction for what the researchers are looking to find. This allows them to design experiments to test their predictions and add to our scientific knowledge about the world. This article explores how a hypothesis is used in psychology research, how to write a good hypothesis, and the different types of hypotheses you might use.

The Hypothesis in the Scientific Method

In the scientific method, whether it involves research in psychology, biology, or some other area, a hypothesis represents what the researchers think will happen in an experiment. The scientific method involves the following steps:

- Forming a question

- Performing background research

- Creating a hypothesis

- Designing an experiment

- Collecting data

- Analyzing the results

- Drawing conclusions

- Communicating the results

The hypothesis is a prediction, but it involves more than a guess. Most of the time, the hypothesis begins with a question, which is then explored through background research. Only then do researchers begin to develop a testable hypothesis.

Unless you are creating an exploratory study, your hypothesis should always explain what you expect to happen.

In a study exploring the effects of a particular drug, the hypothesis might be that researchers expect the drug to have some type of effect on the symptoms of a specific illness. In psychology, the hypothesis might focus on how a certain aspect of the environment might influence a particular behavior.

Remember, a hypothesis does not have to be correct. While the hypothesis predicts what the researchers expect to see, the goal of the research is to determine whether this prediction is right or wrong. When conducting an experiment, researchers might explore numerous factors to determine which ones might contribute to the ultimate outcome.

In many cases, researchers may find that the results of an experiment do not support the original hypothesis. When writing up these results, the researchers might suggest other options that should be explored in future studies.

Researchers often draw a hypothesis from a specific theory or build on previous research. For example, prior research has shown that stress can impact the immune system. So a researcher might hypothesize: "People with high stress levels will be more likely to contract a common cold after being exposed to the virus than people who have low stress levels."

In other instances, researchers might look at commonly held beliefs or folk wisdom. "Birds of a feather flock together" is one example of a folk adage that a psychologist might try to investigate. The researcher might pose a specific hypothesis that "People tend to select romantic partners who are similar to them in interests and educational level."

Elements of a Good Hypothesis

So how do you write a good hypothesis? When trying to come up with a hypothesis for your research or experiments, ask yourself the following questions:

- Is your hypothesis based on your research on a topic?

- Can your hypothesis be tested?

- Does your hypothesis include independent and dependent variables?

Before you come up with a specific hypothesis, spend some time doing background research. Once you have completed a literature review, start thinking about potential questions you still have. Pay attention to the discussion section in the journal articles you read. Many authors will suggest questions that still need to be explored.

How to Formulate a Good Hypothesis

To form a hypothesis, you should take these steps:

- Collect as many observations about a topic or problem as you can.

- Evaluate these observations and look for possible causes of the problem.

- Create a list of possible explanations that you might want to explore.

- After you have developed some possible hypotheses, think of ways that you could confirm or disprove each hypothesis through experimentation. This is known as falsifiability.

In the scientific method, falsifiability is an important part of any valid hypothesis. In order to test a claim scientifically, it must be possible that the claim could be proven false.

Students sometimes take falsifiability to mean that a claim is false, which is not the case. Falsifiability means that if a claim were false, it would be possible to demonstrate that it is false.

One of the hallmarks of pseudoscience is that it makes claims that cannot be refuted or proven false.

The Importance of Operational Definitions

A variable is a factor or element that can be changed and manipulated in ways that are observable and measurable. However, the researcher must also define how the variable will be manipulated and measured in the study.

Operational definitions are specific definitions for all relevant factors in a study. This process helps make vague or ambiguous concepts detailed and measurable.

For example, a researcher might operationally define the variable "test anxiety" as the results of a self-report measure of anxiety experienced during an exam. A "study habits" variable might be defined by the amount of studying that actually occurs as measured by time.

These precise descriptions are important because many things can be measured in various ways. Clearly defining these variables and how they are measured helps ensure that other researchers can replicate your results.

Replicability

One of the basic principles of any type of scientific research is that the results must be replicable.

Replication means repeating an experiment in the same way to produce the same results. By clearly detailing the specifics of how the variables were measured and manipulated, other researchers can better understand the results and repeat the study if needed.

Some variables are more difficult than others to define. For example, how would you operationally define a variable such as aggression? For obvious ethical reasons, researchers cannot create a situation in which a person behaves aggressively toward others.

To measure this variable, the researcher must devise a measurement that assesses aggressive behavior without harming others. The researcher might utilize a simulated task to measure aggressiveness in this situation.

Hypothesis Checklist

- Does your hypothesis focus on something that you can actually test?

- Does your hypothesis include both an independent and dependent variable?

- Can you manipulate the variables?

- Can your hypothesis be tested without violating ethical standards?

Hypothesis Types

The hypothesis you use will depend on what you are investigating and hoping to find. Some of the main types of hypotheses that you might use include:

- Simple hypothesis: This type of hypothesis suggests there is a relationship between one independent variable and one dependent variable.

- Complex hypothesis: This type suggests a relationship between three or more variables, such as two independent variables and a dependent variable.

- Null hypothesis: This hypothesis suggests no relationship exists between two or more variables.

- Alternative hypothesis: This hypothesis states the opposite of the null hypothesis.

- Statistical hypothesis: This hypothesis uses statistical analysis to evaluate a representative population sample and then generalizes the findings to the larger group.

- Logical hypothesis: This hypothesis assumes a relationship between variables without collecting data or evidence.

Hypothesis Format

A hypothesis often follows a basic format of "If {this happens} then {this will happen}." One way to structure your hypothesis is to describe what will happen to the dependent variable if you change the independent variable.

The basic format might be: "If {these changes are made to a certain independent variable}, then we will observe {a change in a specific dependent variable}."

Hypotheses Examples

A few examples of simple hypotheses:

- "Students who eat breakfast will perform better on a math exam than students who do not eat breakfast."

- "Students who experience test anxiety before an English exam will get lower scores than students who do not experience test anxiety."

- "Motorists who talk on the phone while driving will be more likely to make errors on a driving course than those who do not talk on the phone."

- "Children who receive a new reading intervention will have higher reading scores than children who do not receive the intervention."

Examples of a complex hypothesis include:

- "People with high-sugar diets and sedentary activity levels are more likely to develop depression."

- "Younger people who are regularly exposed to green, outdoor areas have better subjective well-being than older adults who have limited exposure to green spaces."

Examples of a null hypothesis include:

- "There is no difference in anxiety levels between people who take St. John's wort supplements and those who do not."

- "There is no difference in scores on a memory recall task between children and adults."

- "There is no difference in aggression levels between children who play first-person shooter games and those who do not."

Examples of an alternative hypothesis:

- "People who take St. John's wort supplements will have less anxiety than those who do not."

- "Adults will perform better on a memory task than children."

- "Children who play first-person shooter games will show higher levels of aggression than children who do not."
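One way to see how a null and an alternative hypothesis are weighed against data is a permutation test. The sketch below is illustrative only: the anxiety scores are made up, and the function name `permutation_test` and its parameters are assumptions introduced for this example, not something the article prescribes. If the null hypothesis ("no difference between groups") were true, the group labels would be interchangeable, so the code shuffles the labels many times and counts how often a difference at least as large as the observed one appears by chance.

```python
import random
from statistics import mean


def permutation_test(group_a, group_b, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in group means.

    Returns the observed difference (mean of A minus mean of B) and the
    share of random relabelings whose absolute difference is at least
    as large as the observed one.
    """
    rng = random.Random(seed)
    observed = mean(group_a) - mean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # relabel participants at random
        diff = mean(pooled[:n_a]) - mean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    return observed, extreme / n_permutations


# Made-up anxiety scores, for illustration only (not real data).
supplement = [12, 15, 11, 14, 10, 13, 12, 9]
no_supplement = [16, 18, 15, 17, 14, 19, 16, 15]

observed_diff, p_value = permutation_test(supplement, no_supplement)
print(f"difference in means: {observed_diff:.2f}, p = {p_value:.4f}")
```

A small p-value means the data would be surprising if the null hypothesis were true. It does not prove the alternative hypothesis, and a large p-value does not prove the null.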

Collecting Data on Your Hypothesis

Once a researcher has formed a testable hypothesis, the next step is to select a research design and start collecting data. The research method depends largely on what the researcher is studying. There are two basic types of research methods: descriptive research and experimental research.

Descriptive Research Methods

Descriptive research methods such as case studies, naturalistic observations, and surveys are often used when conducting an experiment is difficult or impossible. These methods are best used to describe different aspects of a behavior or psychological phenomenon.

Once a researcher has collected data using descriptive methods, a correlational study can examine how the variables are related. This research method might be used to investigate a hypothesis that is difficult to test experimentally.

Experimental Research Methods

Experimental methods are used to demonstrate causal relationships between variables. In an experiment, the researcher systematically manipulates a variable of interest (known as the independent variable) and measures the effect on another variable (known as the dependent variable).

Unlike correlational studies, which can only be used to determine if there is a relationship between two variables, experimental methods can be used to determine the actual nature of the relationship—whether changes in one variable actually cause another to change.

The hypothesis is a critical part of any scientific exploration. It represents what researchers expect to find in a study or experiment. In situations where the hypothesis is unsupported by the research, the research still has value. Such research helps us better understand how different aspects of the natural world relate to one another. It also helps us develop new hypotheses that can then be tested in the future.




- Open access

- Published: 18 January 2024

Learning in hybrid classes: the role of off-task activities

Carli Ochs 1, Caroline Gahrmann 2 & Andreas Sonderegger 2, 3 (ORCID: orcid.org/0000-0003-0054-0544)

Scientific Reports volume 14, Article number: 1629 (2024)


- Human behaviour

Hybrid teaching (synchronous online and on-site teaching) offers many advantages (e.g., increased flexibility). However, previous research has suggested that students who join classes online suffer higher levels of distractibility, which might translate into students engaging in more off-task activities. This, in turn, can impair students’ learning performance. The following quasi-experimental field study investigated this specific link between teaching mode, engagement in off-task activities, and learning performance. We collected survey data from N = 690 students in six hybrid classes (N = 254 online, N = 436 on-site). Participants reported the amount of time they spent engaging in digital and non-digital off-task activities and responded to a quiz on the course material. Results revealed that online students spent more time engaging in off-task activities than on-site students. Further, results were consistent with our hypothesis that joining the class online is associated with lower learning performance via time spent on digital off-task activities.


Introduction

Since the start of the COVID-19 pandemic, hybrid teaching has risen to an unprecedented level. Hybrid teaching is a teaching format where students can synchronously follow the class on-site or online 1 . This was especially useful in the later stages of the pandemic because it allowed non-vaccinated or immunocompromised students to follow classes from the safety of their homes 2 , 3 .

Overall, hybrid classes have been argued to augment the accessibility of classes 1 . The flexibility of such a format allows people who live further away or have other commitments to follow the same lectures as students who can physically join the class 1 , 4 . The hybrid format is easy to set up: it often consists of a live stream of the on-site class with more or less opportunities for online students to interact (e.g., commenting using the chat function, unmuting the microphone and asking questions or answering questions of the teacher). Because of its reputed benefits and its low cost, there is a high probability that universities and other institutions in the education system will continue to employ hybrid teaching even once there is no longer need for social distancing.

However, a major concern with hybrid teaching is whether the quality of learning is the same for online and on-site students 5 , 6 . Previous research has argued that online students might face more distractions and consequently engage in more off-task activities, which may lead to lower learning performance 5 , 6 . Yet, little research has addressed this question empirically, and scientists have called for further research in this field 5 , 6 . Therefore, in the following study, we investigated student behavior and learning in hybrid teaching, specifically the effect of teaching mode (online vs. on-site) on learning performance mediated via off-task activities.

Hybrid teaching: the difference between online and on-site learning

Hybrid classes allow online and on-site students to simultaneously follow the same class. However, while the class content is the same for both student groups, the learning environment is not. Online and on-site students’ environments may vary in terms of various physical, social and technological aspects, which might influence students’ learning engagement, experience of boredom, and distractions 7 , 8 , 9 , 10 . In the following paragraphs, we demonstrate how differences between the online and on-site environments may lead online students to be more vulnerable to distractions.

First, students following classes online might be in an overall more distracting environment. On-site students are in a classroom (a supervised space dedicated to teaching and learning), while online students often follow their classes in a space that is cluttered, noisy and disturbing, and hence less favorable to learning 11 , 12 .

Second, the social environment of the two groups differs in terms of social control. On-site students are in a classroom where other students and the lecturer are physically present. This is not the case for online students. Indeed, students who were asked about their experience with online courses admitted that they miss the presence of peers and engage in more off-task activities because of a lack of social control 13 .

Third, computer-mediated communication may make it harder for online students to communicate with their teachers compared to on-site students 3 , 7 . For example, online students cannot discuss a matter face to face with a teacher after the course 7 . Furthermore, online students have reported feeling less comfortable asking questions due to slight delays caused by the online nature of the communication 3 . In addition, online students may face technical issues during class, such as internet connection and communication problems 7 , 14 , 15 . Both factors may lead online students to feel excluded or neglected 16 . Thus, students might feel disconnected from the class and, therefore, might be more vulnerable to distractions.

In summary, online students are likely to suffer from distraction due to an overall more distracting environment (cluttered, noisy and disturbing environment), reduced social control, and feelings of exclusion or neglect. All of these factors might impinge on students’ ability to concentrate and focus on learning content. Higher levels of distraction imply lower attention on the class.

Impact of off-task activities on learning

Research has shown that students' attention may fluctuate during a lesson 6 , 17 and that reduced attention may be associated with reduced learning performance 18 , 19 , 20 . This link has been established for various forms of attentional disengagement, such as mind wandering and engagement in digital as well as non-digital off-task activities 21 , 22 , 23 .

Mind wandering can be defined as “a situation in which executive control shifts away from a primary task to the processing of personal goals” 24 (p. 946). Mind wandering is prevalent in teaching and learning situations 12 , 25 , 26 , 27 . Various studies adopting different methodological approaches (e.g., using probes of attention in recorded video lectures or live courses, self-reports, diary studies etc.) have shown that the occurrence of mind wandering is negatively linked to academic performance 6 , 17 , 23 , 27 , 28 . Interestingly, more recent work has indicated that mind wandering has less of a negative impact than other indicators of attentional disengagement, such as engagement in digital off-task activities 29 .

Research on engagement in digital off-task activities (also referred to as multimedia multitasking) has primarily focused on different forms of digital off-task activities 22 , 30 , 31 , such as computer use in the classroom, e.g., 32 , 33 , 34 , smartphone use in the classroom 19 , 35 , 36 , 37 , 38 , 39 , 40 , or the use of specific content such as social media 41 , 42 , 43 , 44 , 45 . Overall, empirical evidence mostly suggests that students regularly engage in digital off-task activities and that this negatively affects their learning 29 , 32 , 34 , 35 , 39 .

While the impact of digital off-task activities in the classroom has been studied quite intensely, there has been a relative lack of research on the impact of non-digital off-task activities 46 . One non-digital off-task activity that has received some attention is doodling. Doodling is defined as absentmindedly drawing symbols, figures, and patterns that are unrelated to the primary task (e.g., listening to the lecture). Results on doodling and learning performance are mixed. Doodling has been shown to positively influence the retention of information in an auditory task 47 , 48 , 49 but to negatively influence retention and learning in visual tasks 21 , 50 . There is little empirical research addressing the consequences of other types of non-digital off-task activities. This could be related to the fact that the range of such activities is very broad and diverse. Moreover, specific non-digital off-task activities, such as ironing clothing, cleaning, cooking, and yoga, can only be carried out when participating in the lesson online. This last type of attentional disengagement might be particularly relevant in hybrid lessons, as online students have a wider variety of potential non-digital off-task activities they could engage in (e.g., cleaning or taking a walk). In this regard, one experimental lab study addressing the effect of doing laundry while following a recorded online lecture has indicated negative consequences on learning 46 .

Concerning hybrid teaching, previous research on attentional disengagement in hybrid classes and their effect on performance is scarce, and researchers have called for further research in this field 5 , 6 . One study on attentional disengagement and learning in the hybrid context examined how mind wandering affected learning performance between students watching a class live on-site versus watching a video of the class in a lab 6 . This study found that mind wandering decreased in the on-site class condition but increased in the lab condition. Students in the video-lecture showed non-significantly higher learning scores than in the on-site session. The authors suspected this was due to the students' sterile settings while they viewed the lesson (e.g., in a lab without phones or computers). Contrary to natural settings, students in the classroom were exposed to more digital distractions than those watching the lesson online. This may have confounded the results, as digital distractions also lead to attentional disengagement and have been linked to lower learning performance 35 , 40 .

In summary, mind wandering, and digital and non-digital off-task activities reflect attentional disengagement that can hinder learning. As mentioned in the preceding section, online students may be more distracted due to their learning environment and the lack of social control. There is little empirical evidence comparing digital and non-digital off-task activities in on-site and online classes and their effects on learning performance. Thus, we intend to fill this research gap by studying hybrid teaching and learning performance mediated by students' digital and non-digital off-task activities.

The present study

In the present quasi-experimental mixed-method field study, we investigated the mediating effect of attentional disengagement between teaching mode (online vs. on-site) and learning performance. Specifically, we assessed the digital and non-digital activities of students as well as their learning performance in classes that were taught in hybrid form (i.e., students following the same class either on-site in a lecture theatre or online in a location of their choice). We were particularly interested in these two forms of attentional disengagement for the following reasons. First, digital off-task activities have been shown to be worse for learning than mind wandering. Second, non-digital activities are of particular interest in hybrid classes as the possibility of activities for online students is vast.

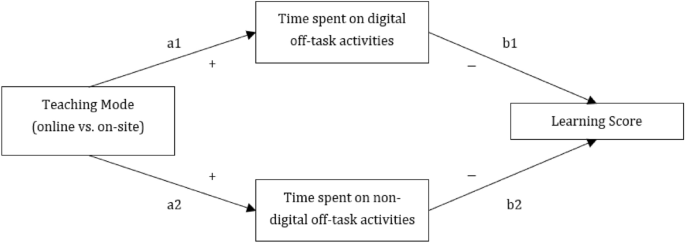

Based on our review of the literature, we put forward the following hypotheses (a depiction of our proposed model can be found in Fig. 1 ):

Proposed conceptual model.

H1: Teaching Mode is associated with time spent on digital off-task activities. Specifically, we expect students who participate online to spend more time on digital off-task activities than students who participate on-site.

H2: Teaching Mode is associated with time spent on non-digital off-task activities. Specifically, we expect students who participate online to spend more time on non-digital activities than students who participate on-site.

H3: Time spent on digital off-task activities mediates the relationship between teaching mode and learning score, such that online students spend more time on digital off-task activities, which, in turn, leads to a decreased learning score.

H4: Time spent on non-digital off-task activities mediates the relationship between teaching mode and learning score, such that online students spend more time on non-digital off-task activities, which, in turn, leads to a decreased learning score.

Teaching mode was coded in the following manner: 0 = on-site; 1 = online.

Participants

We collected 690 responses to our survey (556 participants identified themselves as female, 129 as male, and 5 as other). The ages of participants ranged between 18 and 64 (M = 21.46; SD = 4.42). Participants were recruited at the end of a ninety-minute course in six different psychology lectures (i.e., introduction to work and organizational psychology (N = 47), clinical psychology (N = 107), introduction to developmental psychology (N = 161), introduction to cognitive psychology (N = 188), research methods (N = 142), diagnostic tests (N = 45)) held in hybrid teaching mode by six different teachers. For each course, some participants (total N = 436) were following the class on-site, and some participants (total N = 254) were following the class online. The choice whether to participate online or on-site was at the student’s discretion. In total, 74% of the students from these classes took part in the survey. Detailed information on participation rates per class and teaching mode can be found in the supplementary materials (Table S1). Based on Schönbrodt and Perugini (2013), we consider the total sample size of 690 large enough to generate stable correlation estimates and to offer a solid foundation for the correlation-based statistical approaches used.

Observations of two independent raters indicated that the lectures did not differ considerably with regard to number of questions asked by the teacher ( M = 7.33, SD = 6.57), online student interactions ( M = 0.33, SD = 0.82), on-site student interactions ( M = 7.33, SD = 5.49), technical problems ( M = 0.25, SD = 0.61) and break duration ( M = 10.83, SD = 5.15).

Ethical approval

This study and its experimental protocols were approved by the Internal Review Board of the Department of Psychology at the University of Fribourg. All methods were carried out in accordance with relevant guidelines and regulations. Informed consent was obtained from all participants.

Self-report measures

Questions regarding digital off-task activities

Participants were asked a series of questions about their computer/tablet and smartphone use during the lecture. These questions can be found in Table 1. Second-order questions (e.g., 1a. How many notifications/messages did you receive on your computer or tablet during this lecture?) were only presented if participants answered yes to the first-order question (e.g., 1. Did you use your computer or tablet during this lecture?). Items 2.a and 3.c provided enough space to report up to five activities.

Questions regarding non-digital off-task activities

Participants were asked if they engaged in any off-task activity that was not performed on a digital device. If they did engage in such activities, they were asked to list the activities and estimate how much time they spent on each separate activity (cf. Table 2 ).

Context variables

To get a better understanding of the physical and mental context of participants, we asked them to rate their experience on five items using a 7-point Likert scale (1 = totally disagree, 7 = totally agree). The items measured their motivation ("During the class I felt motivated."), boredom ("During the class I felt bored."), and fatigue ("During the class I felt tired."), as well as how engaging they perceived their teacher to be ("The teacher kept the subject interesting.") and how distracting they rated their environment ("During this class my environment was distracting.").

Learning score

Six multiple-choice questions on the content of the specific topic of the class were asked to assess students' learning scores. For each question, four answer options were provided, of which either one or two were correct. An example of a question can be found in Table 3. The learning score was based on the number of answer options that participants responded to correctly. Each question had four answer options, allowing participants to score up to 4 points per question. Therefore, the maximum achievable score is 24 (the number of questions (6) multiplied by the number of answer options (4)).
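The scoring rule can be sketched in a few lines of Python. This is a minimal illustration under one stated assumption: an answer option counts as "correctly responded" when its ticked or unticked state matches the answer key, which is our reading of the description rather than a confirmed detail of the authors' procedure. The function names, the option labels "a" to "d", the answer key and the responses are all hypothetical.

```python
def score_question(selected, correct, options=("a", "b", "c", "d")):
    """Count the options whose ticked/unticked state matches the answer key."""
    selected, correct = set(selected), set(correct)
    return sum((opt in selected) == (opt in correct) for opt in options)


def learning_score(responses, answer_key):
    """Sum the per-question scores over all questions."""
    return sum(score_question(sel, key) for sel, key in zip(responses, answer_key))


# Hypothetical answer key: six questions with one or two correct options each.
answer_key = [{"a"}, {"b", "c"}, {"d"}, {"a", "d"}, {"c"}, {"b"}]

# Hypothetical responses of one participant.
responses = [{"a"}, {"b"}, {"d"}, {"a", "d"}, {"a"}, set()]

print(learning_score(responses, answer_key))   # 20
print(learning_score(answer_key, answer_key))  # 24, the maximum
```

Under this reading, a participant who ticks nothing still earns points for every incorrect option left unticked, so scores near zero are hard to obtain.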

We implemented the exact same procedure for the six classes from which we recruited participants. Before each class, lecturers were contacted and asked for permission to perform recruitment in their class. Once they had agreed, we collaborated with them to create six exam questions on the course material for the upcoming class session. In each class, two researchers attended the course both online and on-site. Researchers sat at the back of the classroom and connected to the digital collaboration platform used to stream the class online (Microsoft Teams), where they listened with one earphone to the online class. With the other ear, they listened to the on-site class. This allowed them to code for aspects such as interactions on-site (questions from students) as well as interactions online (e.g., in chat) or issues with connections or lag.

The classes ended approximately 15 min before the scheduled time. The two researchers came to the front of the class and asked students to participate in the study. Students were told that the survey was on the effects of teaching mode (online/ on-site) on their learning. As incentives, participants were told that there would be a quiz on course material that might help them to prepare for the end-of-term exam, and participants were also rewarded points toward course credits. Great emphasis was given (orally and in written form on the first page of the survey) to the anonymous nature of the study and it was stressed that students should answer as honestly as possible. After the participants had confirmed their informed consent, they answered the questionnaire and responded to the quiz on the course material.

Qualitative data analysis

Participants' responses regarding their digital and non-digital off-task activities were analyzed using inductive coding. Eleven categories were identified for digital off-task activities, and eleven were identified for non-digital off-task activities (category descriptions can be found in supplementary materials, Table S2). Activities were then coded into their respective categories by two coders. Inter-rater reliability was estimated using Cohen's kappa and proved satisfactory for digital off-task activities (κ = 0.93) as well as for non-digital off-task activities (κ = 0.97). The few differences in coding were then resolved through discussion.
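
For readers unfamiliar with Cohen's kappa, the following minimal Python sketch shows how the statistic corrects the observed agreement between two coders for the agreement expected by chance. It is an illustration only: the function name `cohens_kappa` and the toy category labels in the usage note are hypothetical, and the authors' own computation is not described in the paper.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who each assign one category per item."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)

    # Observed agreement: share of items both raters put in the same category.
    p_observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n

    # Chance agreement: product of the raters' marginal category proportions.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)

    if p_expected == 1.0:
        raise ValueError("kappa is undefined when both raters use a single category")
    return (p_observed - p_expected) / (1.0 - p_expected)
```

For example, `cohens_kappa(["chat", "chat", "eat", "eat"], ["chat", "chat", "eat", "chat"])` combines an observed agreement of 0.75 with a chance agreement of 0.50.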

Quantitative data analysis

We performed a single-level mediated linear regression analysis via the R package lavaan 51 to probe our hypotheses. To ensure robust statistical inference while accounting for the nested structure of the data, we treated classrooms as fixed effects. This approach effectively addresses the issue of violating independence assumptions within clusters, ensuring the accuracy of our results without the inherent unreliability that would have ensued from a multilevel model with a small number of units at Level 2. Moreover, to account for the different learning tests employed across classes, we centered students' learning scores around their respective class mean.
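
The class-mean centering mentioned above can be illustrated with a short Python sketch. The analysis itself was run in R with lavaan; the code below is not the authors' script, and the function name `center_within_class` and the example values are hypothetical.

```python
from collections import defaultdict
from statistics import fmean
from typing import Hashable, List, Sequence


def center_within_class(scores: Sequence[float],
                        class_ids: Sequence[Hashable]) -> List[float]:
    """Subtract each student's class mean from that student's learning score."""
    if len(scores) != len(class_ids):
        raise ValueError("scores and class_ids must have the same length")

    # Collect the scores belonging to each class.
    by_class = defaultdict(list)
    for score, class_id in zip(scores, class_ids):
        by_class[class_id].append(score)

    # One mean per class, then centre every score on its own class mean.
    class_means = {class_id: fmean(vals) for class_id, vals in by_class.items()}
    return [score - class_means[class_id]
            for score, class_id in zip(scores, class_ids)]
```

After centering, a score expresses how far a student lies above or below the average of their own class, so classes that used easier or harder quizzes become comparable.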

Preceding the analysis, we checked for potential violations of relevant inference assumptions by visually inspecting the residuals. Our inspections did not reveal any apparent violations of the linearity assumption. However, residuals were non-normally distributed, thus violating the normality assumption. We hence based our inference on bootstrapped confidence intervals (1,000 bootstrap samples) to ensure robust estimation.
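
As a rough illustration of bootstrapped confidence intervals, the sketch below implements a simple percentile bootstrap in Python. It assumes the percentile method and case resampling; the paper does not state which bootstrap variant lavaan was configured to use, and the function name `bootstrap_ci` and its parameters are hypothetical.

```python
import random
from statistics import fmean
from typing import Callable, Sequence, Tuple, TypeVar

T = TypeVar("T")


def bootstrap_ci(data: Sequence[T],
                 statistic: Callable[[Sequence[T]], float],
                 n_boot: int = 1000,
                 alpha: float = 0.05,
                 seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap confidence interval for an arbitrary statistic."""
    rng = random.Random(seed)  # fixed seed so the interval is reproducible
    n = len(data)

    # Resample the observations with replacement and recompute the statistic.
    estimates = sorted(statistic(rng.choices(data, k=n)) for _ in range(n_boot))

    # Read off the approximate alpha/2 and 1 - alpha/2 percentiles.
    lower = estimates[int((alpha / 2) * n_boot)]
    upper = estimates[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper
```

Because the interval is read from the empirical distribution of the resampled estimates, it does not rely on normally distributed residuals. To bootstrap a mediation estimate, `data` would hold one record per student and `statistic` would refit the model on each resample.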

Descriptive data

Table 4 presents an overview of participant enrollment by class and learning environment, alongside the corresponding average learning scores. The data show that, on the whole, mean learning scores per class tended to be higher for students attending on-site, with the exception of one class, where scores were higher for online participants.

Table 5 reveals that a higher proportion of online students engaged in non-digital and digital activities compared to students who joined the class on-site. On average, online students also spent more time engaging in these activities. A table with a detailed summary of the percentage of students engaging in each specific activity and the average time spent on it can be found in the supplementary materials (Table S3).

Hypotheses testing

Table 6 shows the means, standard deviations, intra-class coefficients and intercorrelations of the study variables. All hypotheses were tested by means of a single-level mediated linear regression model. Table 7 displays the results of this analysis.

We proposed a direct association between teaching mode and time spent on digital (H1) and non-digital (H2) off-task activities by students in hybrid classrooms. In line with H1, data analysis (see Table 7 ) revealed that teaching mode was associated with time spent on digital off-task activities, with students who participated online spending more time on digital off-task activities than students who participated on-site ( a1 = 3.76, 95% CI [0.99; 6.42]). Hypothesis 1 was thus supported. In line with H2, teaching mode was associated with time spent on non-digital off-task activities, with students who participated online spending more time on non-digital off-task activities than students who participated on-site ( a2 = 2.40, 95% CI [0.98; 3.75]). Hypothesis 2 was thus supported.

Test of indirect effects

We estimated the total effect of teaching mode on students' learning performance ( a1b1 + a2b2 = − 0.09, 95% CI [− 0.17; − 0.03]). This suggests that, on the learning performance scale ranging from 0 to 24 points, the expected difference in learning performance between a student attending online and one attending on-site is 0.09 points, with the online student being expected to score lower. Crucially, this total effect is attributed entirely to the indirect influences of teaching mode on learning outcomes through engagement in both digital and non-digital off-task activities. Specifically, we assumed time spent on digital (H3) and non-digital (H4) off-task activities to mediate the relationship between teaching mode and learning scores. Our results revealed an indirect effect of teaching mode via time spent on digital off-task activities on learning scores ( a1b1 = − 0.07, 95% CI [− 0.12; − 0.02]), nominally accounting for 77.78% of the total effect of teaching mode on learning performance detailed above. Hypothesis 3 was thus supported. However, our results did not reveal an indirect effect of teaching mode via time spent on non-digital off-task activities on learning scores ( a2b2 = − 0.02, 95% CI [− 0.09; 0.01]), nominally accounting for only 22.22% of the total effect of teaching mode on learning performance detailed above. Hypothesis 4 was thus not supported.
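
The percentages reported above follow directly from the point estimates, as the short Python sketch below shows. It only re-does the arithmetic on the values given in the text; the function names `share_of_total` and `ci_excludes_zero` are hypothetical, and the sketch does not re-estimate the model.

```python
def share_of_total(indirect: float, total: float) -> float:
    """Percentage of the total effect carried by one indirect path."""
    return 100.0 * indirect / total


def ci_excludes_zero(lower: float, upper: float) -> bool:
    """A bootstrapped interval supports an effect only if it excludes zero."""
    return lower > 0 or upper < 0


# Point estimates and 95% confidence intervals as reported in the text.
A1B1 = -0.07             # indirect effect via digital off-task activities
A2B2 = -0.02             # indirect effect via non-digital off-task activities
TOTAL = -0.09            # a1b1 + a2b2
CI_A1B1 = (-0.12, -0.02)
CI_A2B2 = (-0.09, 0.01)

digital_share = round(share_of_total(A1B1, TOTAL), 2)      # share for H3
non_digital_share = round(share_of_total(A2B2, TOTAL), 2)  # share for H4
h3_supported = ci_excludes_zero(*CI_A1B1)
h4_supported = ci_excludes_zero(*CI_A2B2)
```

The decision on each hypothesis rests on the confidence interval rather than on the share of the total effect: the interval for the digital path lies entirely below zero, whereas the interval for the non-digital path includes zero.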

Analysis of context variables

The analysis of the context variables revealed very small, non-significant differences between online and on-site ratings of student motivation, experienced boredom and fatigue, as well as the evaluation of the teacher’s engagement. The environment, however, was rated as more distracting by students taking the class online than by students participating on-site. A table summarizing the results can be found in the supplementary materials (see Table S4).

This study aimed to evaluate potential differences in levels of distractibility and learning performance between online and on-site students in hybrid classrooms. As predicted, we found that students following a class online spent more time on digital and non-digital off-task activities than on-site students. Results were consistent with our hypothesis that engagement in digital off-task activities mediates a detrimental effect of online teaching on learning. However, results were not consistent with our hypothesis that engagement in non-digital off-task activities mediates a detrimental effect of online teaching on learning.

The results regarding the effects of teaching mode on engagement in off-task activities corroborate the assumptions outlined in the introduction. The descriptive data revealed that online students not only engaged in more off-task activities overall, but also engaged in these activities for longer than on-site students. Some of the off-task activities were exclusively carried out by online students. This might be because some activities can only be carried out at home (e.g., cat care) while others might be considered inappropriate in class (e.g., watching a video). These examples indicate that online students might be confronted with more potential distractors and might feel less restricted in engaging in certain behaviors due to a lack of social control.

This pattern of results indicates that despite the important advantages of hybrid teaching (e.g., increased accessibility of teaching), online students are particularly vulnerable within this setting: they report a higher degree of distractibility that is linked with increased engagement in off-task activities. It is important to note that the teachers participating in this study did not implement any measures to optimize online students' learning experiences, such as regularly integrating online students into class discussions or using online quizzes and other tools to generate an interactive learning environment 1, 52, 53. The focus on on-site students and the absence of particular measures to improve online students’ experience observed in this study may be attributable to the particular context of the COVID-19 pandemic. These classes were held as a temporary solution, meaning that they were not originally conceived as hybrid classes and that teachers had not undergone training on how to ideally teach in such a format 54. Therefore, future research should address this research question in courses that are more geared towards integrating online students. Investigating hybrid teaching during the pandemic is nevertheless important, since the experiences gained during this period are bound to influence and shape future use and understanding of hybrid teaching 15, 54, 55, 56. For example, now that teachers and institutions have discovered how simple it is to live stream a class, they might do this more frequently without looking any further into the potential pitfalls of this format.

Regarding the effect of off-task activities on learning, the question arises why students' engagement in non-digital off-task activities did not show the same negative effects as the engagement in digital off-task activities. This might be related to the nature of the activities students engaged in. Most of the reported non-digital activities are manual tasks (e.g., eating and drinking, drawing), while digital activities mainly require verbal and visual resources (e.g., writing text messages and reading). Listening to a class and learning are tasks that require verbal resources 46 . Wickens’ Multiple Resource Theory 57 states that individuals can engage concurrently in tasks that require different resources, such as verbal and manual resources, without negative consequences on task performance. In contrast, concurrent engagement in tasks that require similar resources is linked to reduced task performance. In the context of learning, such effects have been shown already in research on doodling, where positive effects of absentminded drawing (a task requiring some visual resources) on learning were reported for auditory tasks 47 , 48 , 49 , while negative effects were observed for visual tasks 21 , 50 . In this study, verbal non-digital off-task activities (e.g., social interactions or writing and reading) were less common than manual ones. In contrast, reported digital activities relied largely on students' verbal and visual resources, interfering with class support.

Our findings have several practical and scientific implications. First, we found that students in hybrid lessons, especially online students, engage in off-task activities. This shows the need for interactive and engaging teaching to keep students focused on the class topic and away from off-task activities. Online students should be reminded to study in a distraction-free atmosphere because they are more susceptible to distraction. Second, this study also revealed a negative effect of digital off-task activities on learning performance. Therefore, it is important to consider measures such as warning students of the negative consequences of partaking in off-task activities as well as suggesting rules of conduct regarding digital devices during class. Third, concerning non-digital off-task activities, a negative effect on learning was not found, most likely because most of these activities did not interfere with the resources required for learning. However, for future research it might be interesting to conduct a more fine-grained analysis of the specific off-task activities. Lastly, while literature on how to conduct good hybrid teaching was available, it was not used in the examined classes. This suggests a gap between practice and research and should stimulate reflection on how to effectively communicate this knowledge to practitioners.

There are limitations to the present study. First, due to the quasi-experimental nature of the study design, participants self-selected their teaching mode (online vs. on-site). A random assignment of students to the two teaching modes was not possible because of ethical and legal constraints. In other studies, this problem was circumvented by simulating a course in the laboratory 6, 17. However, this implies that the occurrence of off-task activities cannot be observed in a naturalistic environment, which limits the ecological validity of such laboratory studies. Therefore, we are convinced that this first limitation regarding randomization is outweighed by gains in ecological validity. Second, the assessment of participants' engagement and time spent on off-task activities was based on self-reports. This might be problematic for two reasons: (1) a social desirability bias, (2) memory distortions. To reduce the risk of (1) a social desirability bias, we highlighted the importance of answering the survey honestly and correctly and stressed the anonymity of the data. With regard to potential (2) memory distortions, results of a meta-analysis indicated that self-report data only moderately correlated with objective measures of technology use 58. This meta-analysis, however, summarized data from studies comparing self-reports and objective technology use over multiple days and weeks, while this study recorded self-reports for the last 90 min. For such short time frames, previous research has indicated that self-report measures regarding technology use are reliable 59.

Other, more objective ways to assess students’ off-task activities in class would imply installing tracking software on students’ devices or using thought-probe techniques, all of which would reveal the nature of the study to the students. Because previous research has shown that knowledge about the objective of a study can influence participants’ behavior during the study 60 , it was important to us that the participants have no previous knowledge about our research objectives. Other researchers have made similar methodological choices and used self-reports for their studies in this context as well 35 , 59 , 61 , 62 . Third, we only recruited participants in psychology classes. For generalization purposes it would be ideal to conduct such a study in classes of other fields of study. Lastly, we note that teaching mode and off-task activities explained a relatively small portion of variance in students' learning scores overall. While learning performance represents a particularly important objective teaching outcome, it is endogenous to many contextual and student-specific factors 63 . Considering any one factor is therefore bound to explain relatively limited proportions of variance.

In conclusion, while hybrid teaching might be a useful tool to make classes more accessible, it does not guarantee equal opportunities in terms of learning performance. To ensure that students have an equal opportunity to achieve important learning objectives, instructors must be aware of the negative consequences of off-task activities, especially digital off-task activities, for students participating online. In addition, they must adapt to the specific requirements of hybrid teaching in order to create optimal learning conditions for both on-site and online students. At the same time, students need to be made aware of the importance of creating remote learning conditions that offer as few distractions as possible and enable concentrated and focused learning.

Taking these measures into account is extremely important. Indeed, the past pandemic has shown that individuals with special needs, such as those who are immunocompromised or have physical disabilities, and those with family obligations benefit from being able to participate in courses online. Therefore, it is an ethical and social necessity to create learning environments that do not disadvantage them and enable them to succeed in their studies.

Data availability

The dataset is available on OSF ( https://osf.io/q4vf5/?view_only=21662f508cf44f68be78794d55f83be2 ).

Raes, A., Detienne, L., Windey, I. & Depaepe, F. A systematic literature review on synchronous hybrid learning: Gaps identified. Learn. Environ. Res. 23 , 269–290 (2020).

Bower, M., Lee, M. J. W. & Dalgarno, B. Collaborative learning across physical and virtual worlds: Factors supporting and constraining learners in a blended reality environment. Brit. J. Educ. Technol. 48 , 407–430 (2017).

Weitze, C. L., Ørngreen, R. & Levinsen, K. The global classroom video conferencing model and first evaluations: european conference on e-learning. Proc. of the 12th European Conf. on E-Learning 2 , 503–510 (2013).

Bülow, M. W. Designing Synchronous Hybrid Learning Spaces: Challenges and Opportunities. in Hybrid Learning Spaces (eds. Gil, E., Mor, Y., Dimitriadis, Y. & Köppe, C.) 135–163 (Springer International Publishing, 2022). doi: https://doi.org/10.1007/978-3-030-88520-5_9 .

Schacter, D. L. & Szpunar, K. K. Enhancing attention and memory during video-recorded lectures. Scholarsh. Teach. Learn. Psychol. 1 , 60–71 (2015).

Wammes, J. D. & Smilek, D. Examining the influence of lecture format on degree of mind wandering. J. Appl. Res. Memory Cogn. 6 , 174–184 (2017).

Kelly, K. Building on students’ perspectives on moving to online learning during the COVID-19 pandemic. Can. J. Scholars. Teach. Learn. 13 , 5666 (2022).

Saurabh, M. K., Patel, T., Bharbhor, P., Patel, P. & Kumar, S. Students’ perception on online teaching and learning during COVID-19 pandemic in medical education. Maed. Bucur 16 , 439–444 (2021).

Weitze, C. L. Pedagogical innovation in teacher teams: ECEL 2015. Proc. of 14th European Conf. on e-Learning ECEL-2015 629–638 (2015).

Zahra, R. & Sheshasaayee, A. Challenges Identified for the Efficient Implementation of the Hybrid E- Learning Model During COVID-19. in 2021 IEEE International Conf. on Mobile Networks and Wireless Communications (ICMNWC) 1–5 (2021). doi: https://doi.org/10.1109/ICMNWC52512.2021.9688533 .

Gundabattini, E., Solomon, D. G. & Darius, P. S. H. Discomfort Experienced by Students While Attending Online Classes During the Pandemic Period. In Ergonomics for Design and Innovation (eds. Chakrabarti, D., Karmakar, S. & Salve, U. R.) 1787–1798 (Springer International Publishing, 2022). doi: https://doi.org/10.1007/978-3-030-94277-9_152 .

Szpunar, K., Moulton, S. & Schacter, D. Mind wandering and education: from the classroom to online learning. Front. Psychol. 4 , 56656 (2013).

Cowit, N. Q. & Barker, L. J. Student Perspectives on Distraction and Engagement in the Synchronous Remote Classroom. Digital Distractions in the College Classroom 243–266 (2022). doi: https://doi.org/10.4018/978-1-7998-9243-4.ch012 .

Chhetri, C. ‘I Lost Track of Things’: Student Experiences of Remote Learning in the Covid-19 Pandemic. in Proc. of the 21st Annual Conf. on Information Technology Education 314–319 (Association for Computing Machinery, 2020). doi: https://doi.org/10.1145/3368308.3415413 .

Fichten, C. et al. Digital tools faculty expected students to use during the COVID-19 pandemic in 2021: Problems and solutions for future hybrid and blended courses. J. Educ. Train. Stud. 9 , 24–30 (2021).

Huang, Y., Zhao, C., Shu, F. & Huang, J. Investigating and Analyzing Teaching Effect of Blended Synchronous Classroom. In 2017 International Conf. of Educational Innovation through Technology (EITT) 134–135 (2017). doi: https://doi.org/10.1109/EITT.2017.40 .

Risko, E. F., Anderson, N., Sarwal, A., Engelhardt, M. & Kingstone, A. Everyday attention: Variation in mind wandering and memory in a lecture. Appl. Cogn. Psychol. 26 , 234–242 (2012).

Altmann, E. M., Trafton, J. G. & Hambrick, D. Z. Momentary interruptions can derail the train of thought. J. Exp. Psychol. Gen. 143 , 215–226 (2014).

Anshari, M., Almunawar, M. N., Shahrill, M., Wicaksono, D. K. & Huda, M. Smartphones usage in the classrooms: Learning aid or interference?. Educ. Inf. Technol. 22 , 3063–3079 (2017).

Winter, J., Cotton, D., Gavin, J. & Yorke, J. D. Effective e-learning? Multi-tasking, distractions and boundary management by graduate students in an online environment. ALT-J 18 , 71–83 (2010).

Aellig, A., Cassady, S., Francis, C. & Toops, D. Do attention span and doodling relate to ability to learn content from an educational video. Epistimi 4 , 21–24 (2009).

Junco, R. In-class multitasking and academic performance. Comput. Hum. Behav. 28 , 2236–2243 (2012).

Wammes, J. D., Seli, P., Cheyne, J. A., Boucher, P. O. & Smilek, D. Mind wandering during lectures II: Relation to academic performance. Scholarsh. Teach. Learn. Psychol. 2 , 33–48 (2016).

Smallwood, J. & Schooler, J. W. The restless mind. Psychol. Bull. 132 , 946–958 (2006).

Pachai, A. A., Acai, A., LoGiudice, A. B. & Kim, J. A. The mind that wanders: Challenges and potential benefits of mind wandering in education. Scholarsh. Teach. Learn. Psychol. 2 , 134–146 (2016).

Schooler, J. W., Reichle, E. D. & Halpern, D. V. Zoning Out while Reading: Evidence for Dissociations between Experience and Metaconsciousness. In Thinking and Seeing: Visual Metacognition in Adults and Children 203–226 (MIT Press, 2004).

Unsworth, N., McMillan, B. D., Brewer, G. A. & Spillers, G. J. Everyday attention failures: An individual differences investigation. J. Exp. Psychol. Learn. Memory Cogn. 38 , 1765–1772 (2012).

Randall, J. G., Oswald, F. L. & Beier, M. E. Mind-wandering, cognition, and performance: A theory-driven meta-analysis of attention regulation. Psychol. Bull. 140 , 1411–1431 (2014).

Wammes, J. D. et al. Disengagement during lectures: Media multitasking and mind wandering in university classrooms. Comput. Educ. 132 , 76–89 (2019).

May, K. E. & Elder, A. D. Efficient, helpful, or distracting? A literature review of media multitasking in relation to academic performance. Int. J. Educ. Technol. High Educ. 15 , 13 (2018).

Parry, D. A. & le Roux, D. B. Off-Task Media Use in Lectures: Towards a Theory of Determinants. In ICT Education (eds. Kabanda, S., Suleman, H. & Gruner, S.) vol. 963 49–64 (Springer International Publishing, 2019).

Aaron, L. S. & Lipton, T. Digital distraction: shedding light on the 21st-century college classroom. J. Educ. Technol. Syst. 46 , 363–378 (2018).

Ravizza, S. M., Uitvlugt, M. G. & Fenn, K. M. Logged in and zoned out: How laptop internet use relates to classroom learning. Psychol. Sci. 28 , 171–180 (2017).

Sana, F., Weston, T. & Cepeda, N. J. Laptop multitasking hinders classroom learning for both users and nearby peers. Comput. Educ. 62 , 24–31 (2013).

Bjornsen, C. A. & Archer, K. J. Relations between college students’ cell phone use during class and grades. Scholarsh. Teach. Learn. Psychol. 1 , 326–336 (2015).

Chen, Q. & Yan, Z. Does multitasking with mobile phones affect learning? A review. Comput. Hum. Behav. 54 , 34–42 (2016).

Dietz, S. & Henrich, C. Texting as a distraction to learning in college students. Comput. Hum. Behav. 36 , 163–167 (2014).

Gingerich, A. C. & Lineweaver, T. T. OMG! texting in class = U Fail :(empirical evidence that text messaging during class disrupts comprehension. Teach. Psychol. 41 , 44–51 (2014).

Kuznekoff, J. H., Munz, S. & Titsworth, S. Mobile phones in the classroom: Examining the effects of texting, twitter, and message content on student learning. Commun. Educ. 64 , 344–365 (2015).

Mendoza, J. S., Pody, B. C., Lee, S., Kim, M. & McDonough, I. M. The effect of cellphones on attention and learning: The influences of time, distraction, and nomophobia. Comput. Hum. Behav. 86 , 52–60 (2018).

Barton, B. A., Adams, K. S., Browne, B. L. & Arrastia-Chisholm, M. C. The effects of social media usage on attention, motivation, and academic performance. Active Learn. High. Educ. 22 , 11–22 (2021).

Junco, R. & Cotten, S. R. No A 4 U: The relationship between multitasking and academic performance. Comput. Educ. 59 , 505–514 (2012).

Karpinski, A. C., Kirschner, P. A., Ozer, I., Mellott, J. A. & Ochwo, P. An exploration of social networking site use, multitasking, and academic performance among United States and European university students. Comput. Hum. Behav. 29 , 1182–1192 (2013).

Rosen, L. D., Mark Carrier, L. & Cheever, N. A. Facebook and texting made me do it: Media-induced task-switching while studying. Comput. Hum. Behav. 29 , 948–958 (2013).

Wood, E. et al. Examining the impact of off-task multi-tasking with technology on real-time classroom learning. Comput. Educ. 58 , 365–374 (2012).

Blasiman, R. N., Larabee, D. & Fabry, D. Distracted students: A comparison of multiple types of distractions on learning in online lectures. Scholarsh. Teach. Learn. Psychol. 4 , 222–230 (2018).

Andrade, J. What does doodling do?. Appl. Cogn. Psychol. 24 , 100–106 (2010).

Nayar, B. & Koul, S. The journey from recall to knowledge: A study of two factors – structured doodling and note-taking on a student’s recall ability. Int. J. Educ. Manag. 34 , 127–138 (2019).

Tadayon, M. & Afhami, R. Doodling effects on junior high school students’ learning. Int. J. Art Design Educ. 36 , 118–125 (2017).

Chan, E. The negative effect of doodling on visual recall task performance. Univ. Brit. Columbia’s Undergrad. J. Psychol. 1 , 89656 (2012).

Rosseel, Y. lavaan: An R package for structural equation modeling. J. Stat. Softw. 48 , 1–36 (2012).

Bower, M., Dalgarno, B., Kennedy, G. E., Lee, M. J. W. & Kenney, J. Design and implementation factors in blended synchronous learning environments: Outcomes from a cross-case analysis. Comput. Educ. 86 , 1–17 (2015).

Ørngreen, R., Levinsen, K., Jelsbak, V., Møller, K. L. & Bendsen, T. Simultaneous class-based and live video streamed teaching: Experiences and derived principles from the bachelor programme in biomedical laboratory analysis. In European Conf. on e-Learning 451–XVII (Academic Conferences International Limited, 2015).

Hodges, C. B., Moore, S., Lockee, B. B., Trust, T. & Bond, M. A. The Difference Between Emergency Remote Teaching and Online Learning (Educause, 2020).

Littlejohn, A. Seeking and sending signals: Remodelling teaching practice during the Covid-19 crisis. ACCESS Contemp. Issues Educ. 40 , 56–62 (2020).

Rapanta, C., Botturi, L., Goodyear, P., Guàrdia, L. & Koole, M. Online university teaching during and after the covid-19 crisis: Refocusing teacher presence and learning activity. Postdigit. Sci. Educ. 2 , 923–945 (2020).

Wickens, C. D. Multiple resources and mental workload. Hum. Factors 50 , 449–455 (2008).

Parry, D. A. et al. A systematic review and meta-analysis of discrepancies between logged and self-reported digital media use. Nat. Hum. Behav. 5 , 1–13. https://doi.org/10.1038/s41562-021-01117-5 (2021).

Ochs, C. & Sonderegger, A. What students do while you are teaching – computer and smartphone use in class and its implication on learning. In Human-Computer Interaction – INTERACT 2021 (eds. Ardito, C. et al.) 501–520 (Springer International Publishing, 2021). doi: https://doi.org/10.1007/978-3-030-85616-8_29 .

Ochs, C. & Sauer, J. Curtailing smartphone use: A field experiment evaluating two interventions. Behav. Inf. Technol. 23 , 1–19 (2021).

Leysens, J-L., le Roux, D. B. & Parry, D. A. Can I have your attention, please?: An empirical investigation of media multitasking during university lectures. In Proc. of the Annual Conf. of the South African Institute of Computer Scientists and Information Technologists on - SAICSIT ’16 1–10 (ACM Press, 2016). https://doi.org/10.1145/2987491.2987498 .

McCoy, B. Digital distractions in the classroom phase II: Student classroom use of digital devices for non-class related purposes. J. Media Educ. 7 , 5–32 (2016).

Kauffman, H. A review of predictive factors of student success in and satisfaction with online learning. Res. Learn. Technol. 23 , 99563 (2015).

Acknowledgements

The authors thank Cindy Niclasse, Noemie Stadelmann, Valentine Boillat and Viviane Gaillard for their support in data collection. They also want to thank Anne-Raphaëlle Caldara, Valérie Camos, Alain Chavaillaz, Viktoria Gochmann, Pascal Gygax and Chantal Martin Sölch for their support and for providing access to their classes.

Author information

Authors and affiliations.

Department of Psychology, Georgetown University, Washington, D.C., USA

University of Fribourg, Fribourg, Switzerland

Caroline Gahrmann & Andreas Sonderegger

Bern University of Applied Sciences, Bern, Switzerland

Andreas Sonderegger

Contributions

C.O.: Study concept and design, study supervision, manuscript writing, and interpretation of data. C.G.: data analysis and manuscript writing. A.S.: Study concept and design, manuscript writing, data analysis and interpretation of data.

Corresponding author

Correspondence to Andreas Sonderegger.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Ochs, C., Gahrmann, C. & Sonderegger, A. Learning in hybrid classes: the role of off-task activities. Sci Rep 14 , 1629 (2024). https://doi.org/10.1038/s41598-023-50962-z

Received : 19 October 2023

Accepted : 27 December 2023

Published : 18 January 2024

DOI : https://doi.org/10.1038/s41598-023-50962-z

10 learning hypothesis – Activity 8 H817

Rough notes

Very little academic literature on the theory of eLearning. It tends to be pragmatic and based on experience. Surprising considering the volume of literature on the subject.

What is a theory? “A theory can be described as a set of hypotheses that apply to all instances of a particular phenomenon, assisting in decision-making, philosophy of practice and effective implementation through practice.” Interestingly: “Theory can only be effectively communicated if a common set of terms is used and if their meaning is popularly adhered to.”

Terms defined: online learning, eLearning, learning object, LMS, interactive, pedagogy, and mixed-mode/blended/resource-based learning.

Ten hypotheses for learning (direct from the article):

1. eLearning is a means of implementing education that can be applied within varying education models (for example, face to face or distance education) and educational philosophies (for example behaviourism and constructivism).

2. eLearning enables unique forms of education that fits within the existing paradigms of face to face and distance education.

3. The choice of eLearning tools should reflect rather than determine the pedagogy of a course; how technology is used is more important than which technology is used.

4. eLearning advances primarily through the successful implementation of pedagogical innovation.

5. eLearning can be used in two major ways; the presentation of education content, and the facilitation of education processes.

6. eLearning tools are best made to operate within a carefully selected and optimally integrated course design model.

7. eLearning tools and techniques should be used only after consideration has been given to online vs offline trade-offs.

8. Effective eLearning practice considers the ways in which end-users will engage with the learning opportunities provided to them.

9. The overall aim of education, that is, the development of the learner in the context of a predetermined curriculum or set of learning objectives, does not change when eLearning is applied.

10. Only pedagogical advantages will provide a lasting rationale for implementing eLearning approaches.

In order for eLearning to develop, the theoretical underpinnings must be made explicit and available for critique. Ravenscroft (2001:150): “given that the pace of change of educational technology is unlikely to slow down, the need for relatively more stable and theoretically founded interaction models is becoming increasingly important.”

Activity questions

Q1 – With which hypotheses do you agree?

1 – eLearning is a means of implementing education that can be applied within varying education models (for example, face to face or distance education) and educational philosophies (for example behaviourism and constructivism).

As there is no one confined way of using eLearning, it fits into a broad array of forms and can be adapted to various educational philosophies. An example is Second Life: its use as a platform for learning fits many different ways of creating learning and engaging with students, from one-on-one conversations and presentations to simulations, group discussions and activities. It hosts regular “inworld” conferences to innovate in eLearning. The next one is March 9th to 12th 2016, if you fancy popping along.

Image from page promoting Second Life Education Conference

2 – eLearning enables unique forms of education that fits within the existing paradigms of face to face and distance education.

Similar to the previous point, learning is not a binary of “traditional” or “eLearning” but rather a continuum of learning processes that are more or less supported by technology. As an example of this, I really like this diagram that Phillippa Cleaves has put together, as it shows a myriad of ways technology can intertwine with “traditional” education:

Phillippa Cleaves Technology Continuum

3 – The choice of eLearning tools should reflect rather than determine the pedagogy of a course; how technology is used is more important than which technology is used.

This is the same for eLearning tools as with any other tool. Appropriate use of ANY tool is essential, even books, pen & paper, flip charts, whiteboards, etc. From a learning design perspective, I see the flow going something like: Overall learning goals > Specific learning goals > Appropriate interactions / learning processes > Appropriate tools to be used > Design of materials / activities

The selection of tools is, for the most part, the penultimate part of the learning design process.

8 – Effective eLearning practice considers the ways in which end-users will engage with the learning opportunities provided to them

This hypothesis is common sense for using any tool or resource. Having said that, practitioners should also consider and be open to unplanned ways resources may be used.

9 – The overall aim of education, that is, the development of the learner in the context of a predetermined curriculum or set of learning objectives, does not change when eLearning is applied

No, but the process of education may be expanded as learners use and master the toolset provided to support the curriculum.

Unsure about:

4 – eLearning advances primarily through the successful implementation of pedagogical innovation.

“Advances” is a very woolly term. I am sure eLearning has advanced without “successful implementation”, as the pace of the industry is quicker than the capacity to research and draw together results on pedagogical success. Innovation in eLearning, like all innovation, is often “push” driven off the back of companies developing new technologies / services rather than “pull” from practitioners.

5 – eLearning can be used in two major ways; the presentation of education content, and the facilitation of education processes.

eLearning provides a whole range of ways to support learning, from tools to design, through to delivery. A lot of the advances in the eLearning area are in the creation and sharing of curriculum materials such as OER. An example of that is Cloudworks, a collaboration between the Open University and JISC (Joint Information Systems Committee):

Cloudworks – a resource for learning practitioners to share ideas and resources

6 – eLearning tools are best made to operate within a carefully selected and optimally integrated course design model.

I am unsure of this, simply due to the creative nature of designing and developing learning. Without experimentation and play, new ways of using technologies, or even the development of new technologies, would not happen. An example of this is Twitter as a tool for learning. Although not originally conceived as a carefully selected and optimally integrated tool within a course design model, it works well for learners and practitioners in a myriad of ways. One example is #edchat, an award-winning weekly chat about education, developed on the Twitter platform. Another perspective is that of learning theories: constructivism and connectivism rely on social interaction, and this in itself can create innovation around which technologies work best for learners in their learning process. H817 itself is designed around a forum-focused VLE, yet students are self-organising to use Twitter as a collaboration platform.

7 – eLearning tools and techniques should be used only after consideration has been given to online vs offline trade-offs.

This hypothesis is very much driven by a classroom delivery perspective. Online learning, supported by self-study, is sometimes the only option for learners in rural or hard-to-reach areas. UNESCO and many others have done a great deal of work in this area to consider how to support learning in areas where even classroom learning is a challenge.

10 – Only pedagogical advantages will provide a lasting rationale for implementing eLearning approaches.

Unsure about this, as many tools, techniques and technologies get taken up without a deep consideration of the advantages. A challenge here is that it is assumed that tools will work well in all settings. The way eLearning is introduced and supported has a critical impact. A great example is the set of ideas in this paper: “Success and Failure of e-Learning Projects: Alignment of Vision and Reality, Change and Culture”. There are clearly advantages to eLearning, but there are as many, if not more, barriers.

Q2 – Consider hypothesis 4 that ‘elearning advances primarily through the successful implementation of pedagogical innovation’.

As I posted above: “Advances” is a very woolly term. I am sure eLearning has advanced without “successful implementation”, as the pace of the industry is quicker than the capacity to research and draw together results on pedagogical success. Innovation in eLearning, like all innovation, is often “push” driven off the back of companies developing new technologies / services rather than “pull” from practitioners.

References:

I tend to link rather than churn out lots of references here:

Nichols, M. (2003) ‘A theory for elearning’, Educational Technology & Society, vol. 6, no. 2, pp. 1–10; also available online at http://ifets.ieee.org/discussions/discuss_march2003.html (last accessed 29 January 2016).

Ravenscroft, A. (2001) ‘Designing E-learning Interactions in the 21st Century: revisiting and rethinking the role of theory’, European Journal of Education, vol. 36, no. 2, pp. 133–156.

Image Credit: FreeImage.com Author: Anna Maria lopez Lopez

About the author: Andi Roberts

Solutions for education

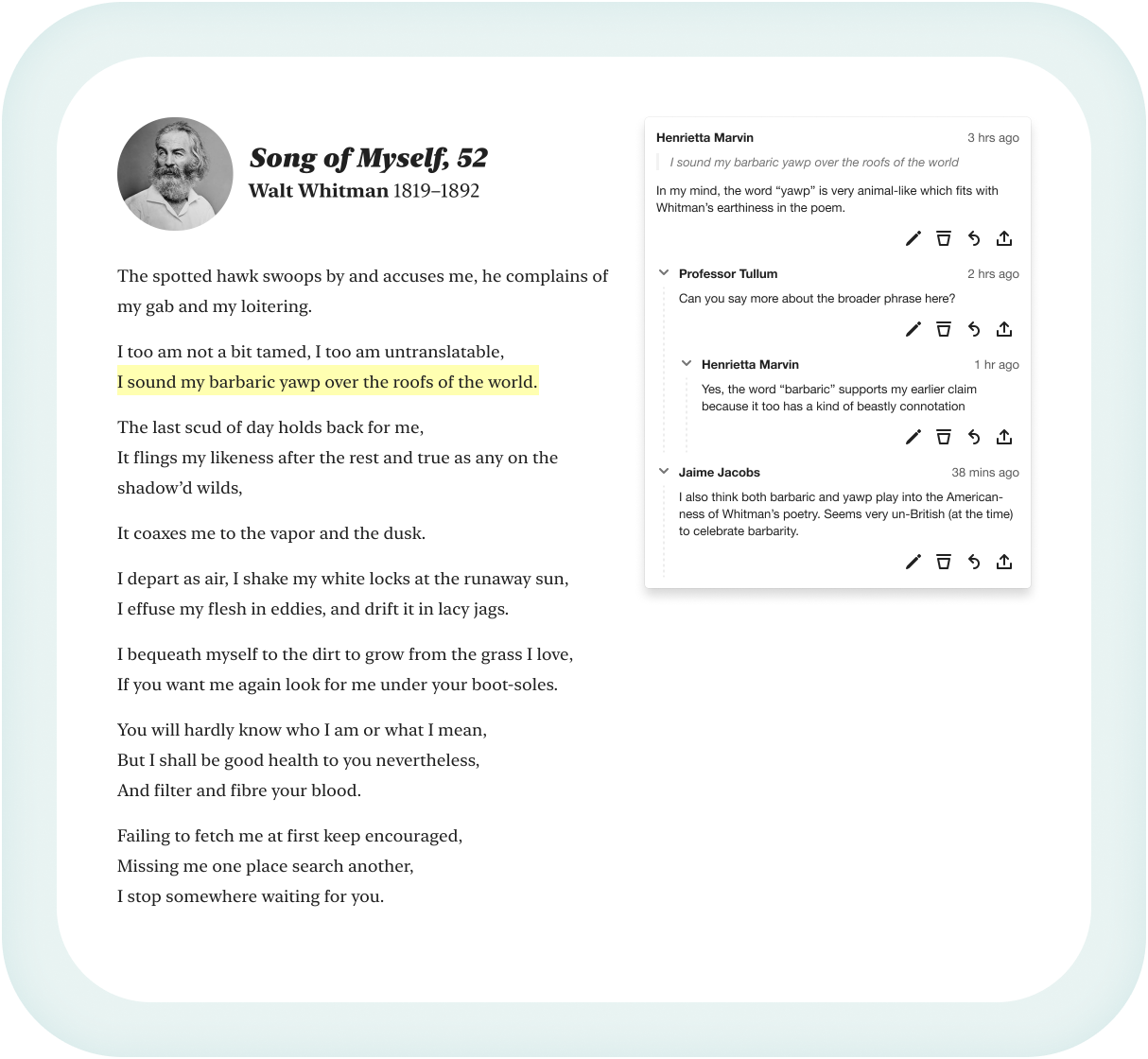

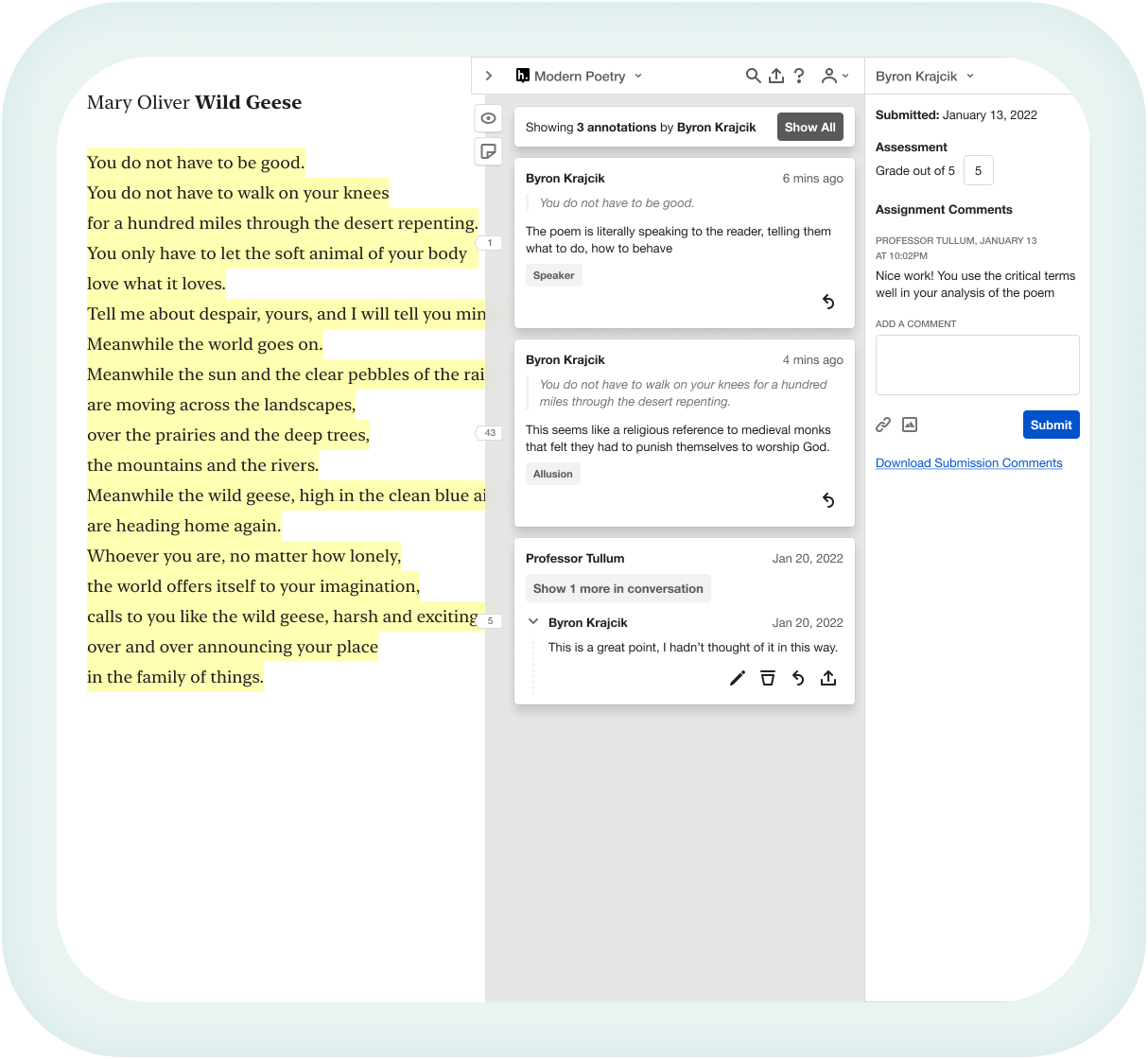

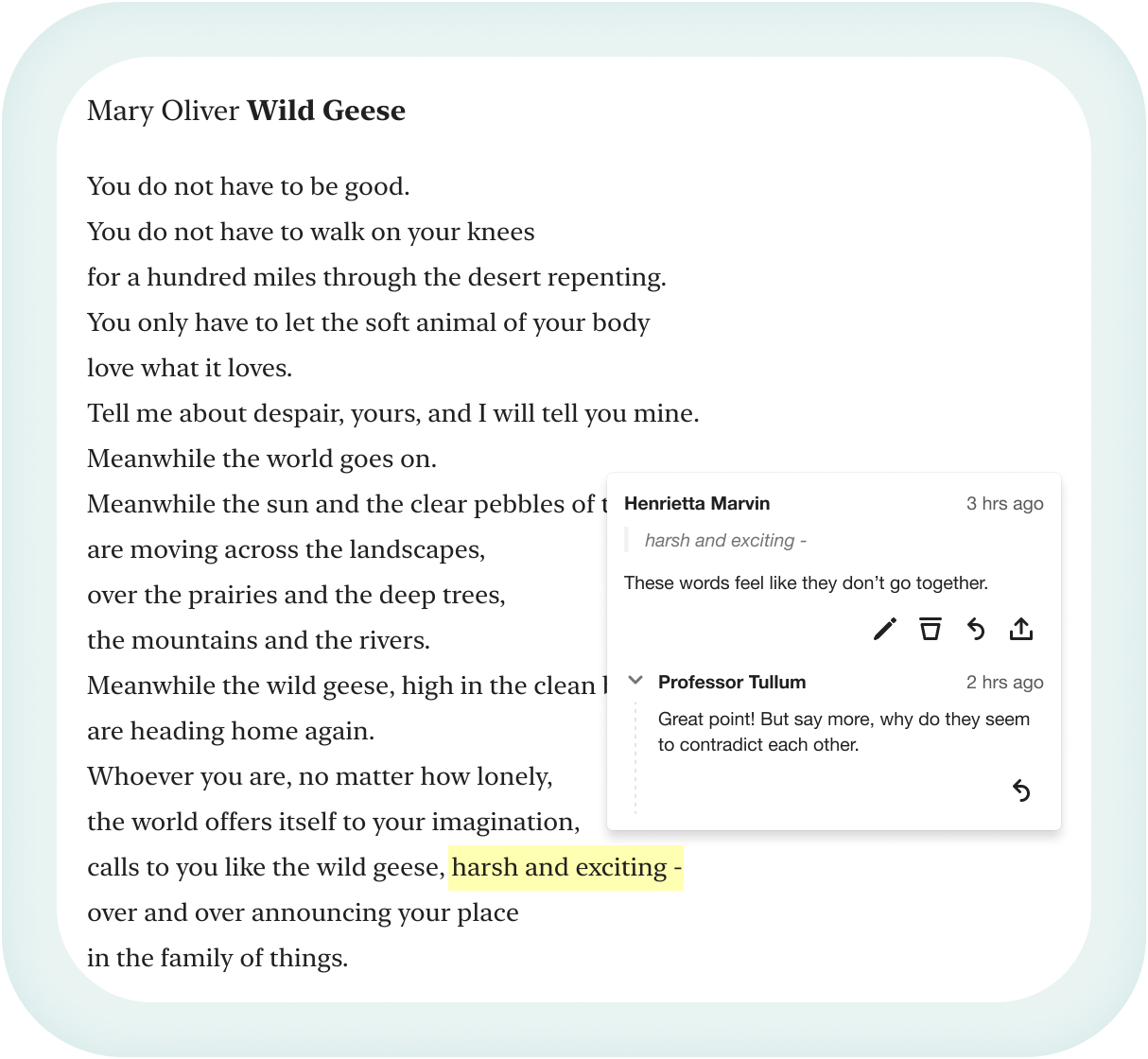

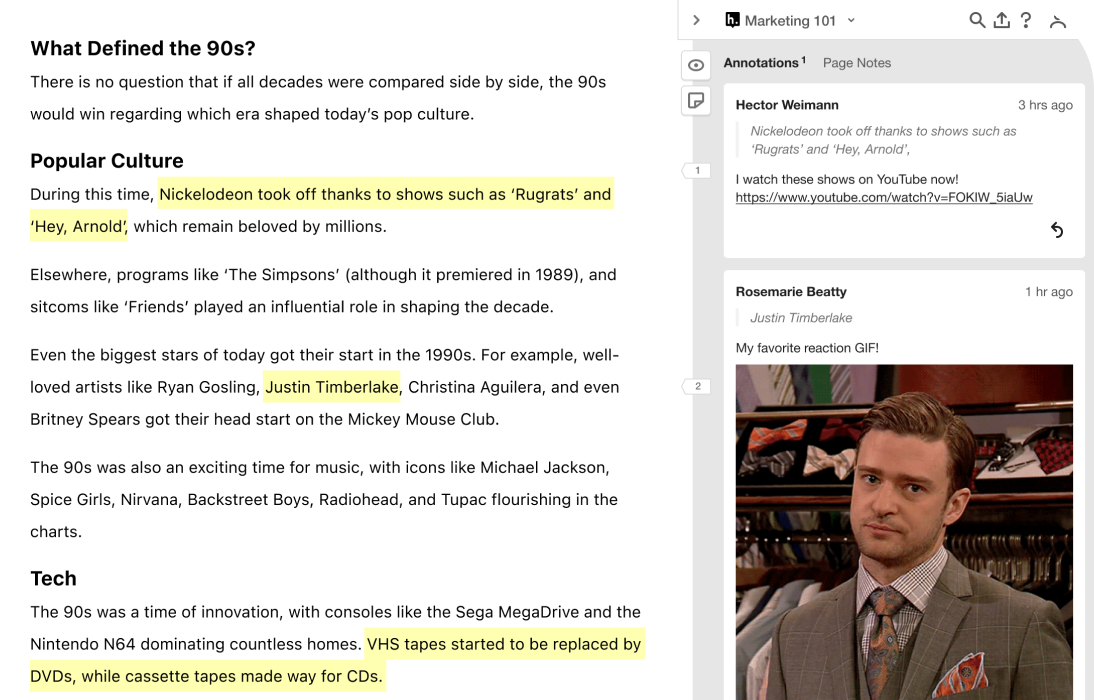

Keep students engaged and connected with social annotation.

Hypothesis turns any piece of digital content into a collaborative learning experience.

Make learning more active, visible, and social.

Social annotation enhances every course by adding a new, engaging dimension to learning. With Hypothesis, educators and students work together to improve reading proficiency and critical thinking skills.

Trusted by hundreds of institutions.

Hypothesis is a simple yet powerful pedagogical tool that integrates with your LMS and is effective for any class format.

Empower all types of learners.

Social annotation makes reading more active and engaging for in-person, online, and hybrid classes.

Add visual learning to text.

Hypothesis allows students to highlight readings and view comments from classmates to validate ideas and make new connections.

Spark creative discussions.

Students can annotate assignments with images, emojis, links, and other engaging formats.

Encourage everyone to have a voice.

Increase participation from students who otherwise feel uncomfortable sharing their thoughts in class.

Experience a transformation in your courses.

Educators use Hypothesis directly within their LMS, seeing immediate impact and results for both their students and the teaching experience.

Improve coursework completion and comprehension.

Social annotation holds students accountable and pushes them to read closely, thoroughly, and thoughtfully – proven to lead to a 2X increase in comprehension of materials.

Easy to grade, easy to see impact.

We seamlessly integrate into your LMS gradebook so you can keep track of your students' progress. Institutions have seen students' grades improve by 18% after adopting Hypothesis.

Retain more students in your courses.

Students love how engaging and interactive Hypothesis is. The result? A demonstrated 32% increase in class retention after adopting Hypothesis.

Hear from Faculty About the Impact of Hypothesis

Works seamlessly within your LMS.

Hypothesis is LTI Advantage compliant, offering deep integrations with leading learning management systems. Talk to our sales team for a demo in your LMS.

Brightspace

Blackboard Learn

Experience Hypothesis Today

Hypothesis promotes peer-to-peer education and fosters a sense of belonging in the classroom and beyond.

Try Hypothesis for Free.

Test out Hypothesis in our playground where you can experience the LMS integration.

YouTube Video Annotations.

Incorporate YouTube videos effortlessly into your lesson plans and students can annotate over them, creating a more engaging and interactive viewing experience.

Testimonials

Marcia Bailey, Associate Professor of Teaching/Instruction, Temple University: "Hypothesis is an excellent tool to engage students with the text and with each other. I used it almost weekly this semester."

Nancy Eberhardt, Ph.D., Distinguished Service Professor of Anthropology, Knox College: "Hypothesis gets students talking to each other about the reading outside of the classroom in a way that I have never been able to see happen before. They come to class much more ready to talk about the reading, and I have a sneak preview of the issues they found most compelling, as well as the ones they might have missed."

Candace Ward, Ph.D., Professor of English, Florida State University: "I’ve found Hypothesis invaluable in engaging students with assigned reading material…The students say very smart things; they engage with each other very civilly and respectfully, and they synthesize their annotations with other class discussions, projects, and readings."

Danitza Lopez, ESOL Instructor, Peralta Community College District: "We love Hypothesis! This handy tool allows us to go deeper than any other platform because we can directly have discussions about a particular word/sentence/paragraph within a text. We even use it for peer reviews, and it works super well. It is such an easy tool to use, too!"