Communication

iResearchNet


Quantitative Content Analysis

Quantitative content analysis is an empirical method used in the social sciences, primarily for analyzing recorded human communication in a quantitative, systematic, and intersubjective way. This material can include, for instance, newspaper articles, films, advertisements, interview transcripts, or observational protocols. Thus, quantitative content analysis can be applied not only to verbal material but also to visual material such as the evening news or television entertainment. Surveys, observations, and quantitative content analysis are the three main methods of data collection in empirical communication research, and quantitative content analysis is the most prominent of them in the field. In other disciplines, such as psychology or sociology, quantitative content analysis is not used as widely.

Quantitative And Qualitative Content Analysis

Ole R. Holsti (1969) defines quantitative content analysis as “any technique for making inferences by objectively and systematically identifying specified characteristics of messages.” Bernhard Berelson (1952) speaks of “a research technique for the objective, systematic and quantitative description of the manifest content of communication.” There has been much debate on this classical definition of quantitative content analysis: what does the word “manifest” mean, and is it possible to analyze latent structures of human communication beyond the surface of the manifest text, i.e., the “black marks on white”? In a more practical sense, the word “manifest” should be interpreted in terms of “making it manifest.” For example, if one were to look for irony in political commentaries, the construct of “irony” is not manifest in the sense of being directly identifiable from “black marks on white.” Whether or not there is irony in a commentary has to be interpreted. Thus, before a commentary is coded, it has to be precisely determined what words, phrases, key words, or arguments should serve as indicators for the category “irony.” In other words, this “latent” aspect of communication is made manifest by its definition.

With newspaper articles, the logic of quantitative content analysis can be described as a systematic form of "newspaper reading." Here, the reader, i.e., the person charged with coding, assigns numeric codes (taken from a category system) to certain elements of the articles (e.g., issues or politicians mentioned in them) by following a fixed plan (written down in a codebook). Of course, one analyzes not just one but many articles, reports, or commentaries from different newspapers. In this respect, quantitative content analysis differs from qualitative content analysis. While qualitative content analysis is typically limited to roughly 50 to 100 sample elements (e.g., newspaper reports, interview transcripts, or observational protocols), quantitative content analysis can deal with a huge number of sample elements. Qualitative content analysis works inductively, summarizing and classifying elements or parts of the text material and assigning labels or categories to them. Quantitative content analysis, by contrast, works deductively and measures quantitatively by assigning numeric codes to parts of the material, a process known as coding.
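To make the idea of a codebook more concrete, here is a minimal Python sketch of how coding decisions might be recorded as rows of a data matrix. The variables, category labels, and numeric codes in `CODEBOOK` are hypothetical. The human coder still makes every decision; the illustrative helper `record_coding` only checks that each numeric code is actually defined in the codebook.

```python
# Hypothetical codebook: each variable (category) lists the numeric codes a coder may assign.
CODEBOOK = {
    "issue": {1: "economy", 2: "environment", 3: "foreign affairs", 99: "other"},
    "politician": {1: "government", 2: "opposition", 99: "other"},
}


def record_coding(article_id: str, codes: dict[str, int]) -> dict[str, object]:
    """Return one row of the data matrix after checking every code against the codebook."""
    row: dict[str, object] = {"article_id": article_id}
    for variable, code in codes.items():
        if variable not in CODEBOOK:
            raise KeyError(f"unknown variable {variable!r}")
        if code not in CODEBOOK[variable]:
            raise ValueError(f"code {code} is not defined for variable {variable!r}")
        row[variable] = code
    return row


# Two hypothetical articles, each coded by a coder following the codebook.
data_matrix = [
    record_coding("A001", {"issue": 1, "politician": 2}),
    record_coding("A002", {"issue": 2, "politician": 1}),
]
```

Rejecting undefined codes at entry time is one simple way to keep the data matrix consistent with the category system.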

Fields Of Application

Originally, quantitative content analysis was linked to propaganda research, for instance, the analysis of propaganda material in World War II. Nowadays, quantitative content analysis is applied to many different forms of human communication, such as print and television coverage, public relations, entertainment, advertisements, photographs, pictures, or films. Subjects of analysis can include, for instance, national images in media coverage of foreign affairs, the role models presented in television advertisements, the kind and quality of arguments in company press releases, or the features of actors in modern Asian film. In theoretical terms, quantitative content analysis is applied in many different fields of research in communication science, for example, to analyze the kind of issues covered by the media in the context of agenda-setting. Similarly, quantitative content analysis is a key method in the cultivation approach, where it is called message system analysis. In both approaches, content analysis is combined with a survey in a field design. Further applications of quantitative content analysis include election studies, where it is used to examine the election campaigns themselves or media coverage of the candidates, parties, and campaigns, as well as studies of media coverage of, or public discourse on, social problems and issues such as racism, social movements, or collective violence.

Purposes Of Quantitative Content Analysis

Generally speaking, there are three major purposes, and thus three basic types, of quantitative content analysis. They follow an extended version of the popular "Lasswell formula," which asks who says what, to whom, why, to what extent, and with what effect. The first purpose is to make inferences about the antecedents of communication, for example, by examining the coverage of a liberal and a conservative newspaper (who). If there is a political bias in the commentaries or in the news reports (what), the bias would then be explained (why), e.g., by differences between the newspapers' editorial lines or political orientations.

The second purpose is merely to describe communication (what, to what extent). Here, different techniques can be applied (these are described below). The third purpose is to draw inferences about the effects of communication (to whom, with what effect). Agenda-setting and cultivation studies are good examples of this basic type of quantitative content analysis. Strictly speaking, however, such an inference is not possible when nothing but the message is examined. Before claiming, for instance, that mass media coverage influences people's thoughts and perceptions of the world, as in the cultivation approach, one should additionally carry out a survey. The reason for this can be explained by constructivism. From the constructivist point of view, quantitative content analysis is a reactive method, like surveys, since the message to be coded is not fixed in an objective sense: several people reading the same message may interpret it differently due to their individual schemata, beliefs, and attitudes. Thus, when making inferences about message effects, one should carry out not only a quantitative content analysis but also a reception study or an effects study.

Types Of Quantitative Content Analysis

There are different types of quantitative content analysis, i.e., different techniques for describing human communication. The first focuses on frequencies: one simply counts, e.g., the appearance of issues, persons, or arguments in newspaper coverage. Many studies using the agenda-setting approach are such simple frequency analyses, and most rely only on media archives. Thus, they do not examine the full content or text of, e.g., a newspaper article but focus only on its headline. This is a simplified version of quantitative content analysis.
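Once the material has been coded, a frequency analysis amounts to counting codes. The sketch below assumes a hypothetical list of issue labels, one per article (so the article is the coding unit), and uses Python's standard `collections.Counter`.

```python
from collections import Counter

# Hypothetical coded data: one issue label per newspaper article.
coded_issues = ["economy", "environment", "economy", "health", "economy", "environment"]

frequencies = Counter(coded_issues)
total = sum(frequencies.values())

# Absolute and relative frequency of each issue, most frequent first.
for issue, count in frequencies.most_common():
    print(f"{issue:<12} {count:>3}  {count / total:.0%}")
```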

The second technique focuses on tendencies. The example mentioned above of political bias in the commentaries of a liberal and a conservative newspaper illustrates this. In another example, the analysis would measure not only the number of articles on nuclear energy but also their viewpoint on the issue, e.g., by noting the advantages and disadvantages mentioned. If the advantages reported exceed the disadvantages, the article would be coded as "in favor" of nuclear energy; if the disadvantages exceed the advantages, it would be coded as showing "disapproval," i.e., a "negative tendency."
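The decision rule for tendencies can be written as a small function. This is only an illustrative sketch: the function name `code_tendency` is hypothetical. Coding equal counts as "ambivalent" is an assumption, because the text does not say how ties should be handled.

```python
def code_tendency(advantages: int, disadvantages: int) -> str:
    """Code an article's tendency toward nuclear energy from counts of mentioned pros and cons."""
    if advantages > disadvantages:
        return "in favor"
    if disadvantages > advantages:
        return "disapproval"
    # Assumption: equal counts are coded as ambivalent (not specified in the text).
    return "ambivalent"


print(code_tendency(advantages=3, disadvantages=1))
```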

The third technique focuses not only on tendencies but also on intensities. Here, one would not just code on an ordinal scale (using, e.g., "positive," "ambivalent," and "negative") but would use interval measurement (e.g., "strongly positive," "positive," "ambivalent," "negative," and "strongly negative").
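Because intensities are treated as interval measurement, averaging them across articles becomes meaningful. The following sketch assumes one possible numeric mapping of the five scale points (from -2 to +2) and hypothetical codings of commentaries in two newspapers.

```python
from statistics import mean

# Assumed numeric mapping of the five-point intensity scale.
INTENSITY = {
    "strongly negative": -2,
    "negative": -1,
    "ambivalent": 0,
    "positive": 1,
    "strongly positive": 2,
}

# Hypothetical codings of commentaries on nuclear energy, grouped by newspaper.
codings = {
    "Newspaper A": ["positive", "strongly positive", "ambivalent"],
    "Newspaper B": ["negative", "strongly negative", "negative"],
}

# Mean tendency per newspaper: the sign gives the direction, the size gives the intensity.
for paper, labels in codings.items():
    scores = [INTENSITY[label] for label in labels]
    print(f"{paper}: mean tendency {mean(scores):+.2f}")
```

A three-point coding of the same material would record the direction of each commentary but would lose the difference between "positive" and "strongly positive."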

Finally, there are also several techniques that answer the popular objection that quantitative content analysis "disassembles" communication. Critics of quantitative content analysis (e.g., Siegfried Kracauer) claim that it cannot examine relations between elements of communication, i.e., the semantic and syntactical structures of communication. They argue, for instance, that when arguments and persons in newspaper reports are counted separately, the fact that arguments are raised by persons is neglected; or that when statements are coded separately, the fact that one statement can refer to another, e.g., by supporting it, is not taken into account. This objection may apply to most agenda-setting studies, but it is not a justified objection against quantitative content analysis in general.

A good example of a technique that captures the semantic and syntactical structures of communication is the Semantische Struktur- und Inhaltsanalyse (Semantic Structure and Content Analysis) developed by Werner Früh. Without going into detail, the technique considers various elements of communication as well as the relations between them. For instance, it analyzes persons and roles mentioned in newspaper articles, but it also examines time aspects such as anteriority and so-called "modifications" such as persons' features or local specifications. In addition, it looks for so-called "k-relations," such as causal, intentional, or conditional relations, as well as for "R-relations" mentioned in, e.g., news reports. Thus, although newspaper articles are deconstructed into micro-propositions, the semantic structure and content analysis reconstructs all relations between these micro-propositions.

Most quantitative content analyses examine text or verbal material, i.e., transcribed or recorded human communication. Studies analyzing visual material such as films, television advertisements, or televised debates between presidential candidates are comparatively rare. There are three major reasons for this. First, copies of newspaper articles are easier to access than copies of the evening news, for instance. This is especially true in retrospective studies (which most quantitative content analyses are), where visual material is often no longer available. Second, visual material is more complex than verbal or text material. For example, television news provides information not only via the audio channel but also via the visual channel. Since verbal and visual information can deliver different messages, one has to code both streams of information, which is more expensive than coding print news alone. Finally, coding visual material such as the evening news requires more detailed coding instructions and more complex category definitions than a codebook for analyzing newspaper coverage.

A relatively recent development in quantitative content analysis is automatic, that is, computer-assisted, content analysis, in which a computer program counts keywords and, for example, searches for related words in the same paragraph. Before the coding process begins, all relevant keywords or phrases have to be listed in a so-called coding dictionary, the equivalent of the codebook in a conventional content analysis. While there has been some progress in this technique, it will be a while before the human coder becomes redundant.
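A dictionary-based coding step could look roughly like the following Python sketch. The categories and keywords in `CODING_DICTIONARY` are hypothetical, and matching is done on simple lowercase word tokens. A real coding dictionary would also need to handle inflected word forms and multi-word phrases.

```python
import re
from collections import Counter

# Hypothetical coding dictionary: each category is defined by a set of keywords.
CODING_DICTIONARY = {
    "nuclear_energy": {"nuclear", "reactor", "uranium"},
    "risk": {"accident", "radiation", "danger"},
}


def code_paragraph(paragraph: str) -> Counter:
    """Count dictionary keyword hits per category in one paragraph."""
    tokens = re.findall(r"[a-z]+", paragraph.lower())
    hits = Counter()
    for category, keywords in CODING_DICTIONARY.items():
        hits[category] = sum(1 for token in tokens if token in keywords)
    return hits


def co_occurs(paragraph: str, first: str, second: str) -> bool:
    """Return True if both categories are mentioned in the same paragraph."""
    hits = code_paragraph(paragraph)
    return hits[first] > 0 and hits[second] > 0


text = "The reactor accident raised fears of radiation."
print(code_paragraph(text), co_occurs(text, "nuclear_energy", "risk"))
```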

Current challenges for quantitative content analysis stem from the World Wide Web, where the content of a private weblog, arguments in an online chat, or the pictures in an online gallery can be subject to analysis. Compared to a content analysis of newspaper coverage, for example, a content analysis of online communication can be quite problematic. Here, the population from which a sample is drawn is not fixed but changes from day to day, or even more quickly. It is therefore important to store all communication relevant to a specific study. But even where this is possible, one seldom has a view of the complete population, since the World Wide Web, or the Internet as a whole, is not easy to grasp. Thus, most studies that analyze online communication work with samples that are more or less clearly defined.

Like any other method in the social sciences, quantitative content analysis has to meet certain standards of quantitative empirical research. The first criterion, intersubjectivity, calls for transparent research: all the details of a quantitative content analysis have to be described and explained so that it is clear exactly what has been done. The second criterion, systematics, requires that the coding rules and sampling criteria be applied invariantly to all material. The third criterion, reliability, calls for the codebook to be dependable. Different coders do not always agree on coding. For example, one coder may identify an argument in a newspaper article as argument 13 from the argument list in the codebook, while another coder chooses argument 15, so that the numeric codes assigned to that argument do not match. In other cases, the two coders may agree. Using all codings of both coders, one can divide twice the number of matching codings (e.g., 17 identical codings) by the sum of the number of codings of the first coder and the number of codings of the second coder (e.g., 20 codings each). The resulting ratio, known as the Holsti formula, is a simple reliability coefficient: R = 2M / (N1 + N2), where M is the number of matching codings and N1 and N2 are the numbers of codings by the first and second coder. In this example, R = (2 × 17) / (20 + 20) = 0.85. The Holsti coefficient ranges from 0 (no matching at all) to 1 (perfect matching). Another popular reliability coefficient, better known from index calculation, is Cronbach's alpha (α).
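The Holsti formula can be computed directly from two coders' codings. The sketch below assumes the two lists are aligned, so that position i holds both coders' codes for the same unit. The function name `holsti_reliability` is illustrative, and the data simply reproduce the worked example (20 codings each, 17 of them identical).

```python
def holsti_reliability(coder_a: list[int], coder_b: list[int]) -> float:
    """Holsti's coefficient R = 2M / (N1 + N2).

    Assumes both lists are aligned (same units in the same order), so M is
    the number of positions at which the two coders assigned identical codes.
    """
    matches = sum(1 for a, b in zip(coder_a, coder_b) if a == b)
    return 2 * matches / (len(coder_a) + len(coder_b))


# Worked example: 17 agreements on argument 13, 3 disagreements (13 vs. 15).
coder_a = [13] * 20
coder_b = [13] * 17 + [15] * 3
print(holsti_reliability(coder_a, coder_b))  # 0.85
```

When both coders code the same set of units, N1 = N2 and R reduces to simple percentage agreement, which is not corrected for agreement that would occur by chance.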

The fourth standard for a quantitative content analysis is validity. An instrument of empirical research (in content analysis, the codebook) can claim to be valid when it measures what it intends to measure. For instance, if the codebook contains a category "stereotype," then coders applying the coding rules for this category should code stereotypes, not political bias or irony. According to Klaus Krippendorff, there are different forms of validity. The type of validity in this example can be called face validity. Predictive validity and concurrent validity both refer to an external criterion measure for validating data obtained by a quantitative content analysis. Such a measure may be another quantitative content analysis or statistical data from governmental sources. With concurrent validity, the content analysis data and the criterion are collected at the same time. With predictive validity, the content analysis data are used to predict scores on a criterion measure obtained later.

Content Analysis As Research Practice

The research process using quantitative content analysis comprises six steps. It usually begins with theoretical considerations, a literature review, and the derivation of empirical hypotheses. In the second step, the sample material that is to be coded, i.e., examined with the codebook, is defined. In the third step, the coding units (e.g., articles or arguments) are specified. In the fourth step, the codebook with the category system is developed and pre-tested. The actual measurement, i.e., the process of coding, is the fifth step of a content analysis. The final step is data analysis and interpretation.

Most quantitative content analyses require multilevel sampling. For instance, an analysis of campaign coverage would involve choosing a limited number of national newspapers that represent diverse political standpoints (e.g., from liberal to conservative). On the next level, the time span to be analyzed is set (e.g., every day in the critical phase of the election campaign). On the level after that, the articles to be coded are determined (e.g., all articles on the front page). The resulting sample is usually called the unit of analysis. The coding unit, however, is the most important unit in quantitative content analysis, because it defines the level of measurement. For example, if the features of an article (e.g., its main issue) are examined, the single article is the coding unit; but if the attributes of an argument (e.g., the issues mentioned in it) are examined, then the single argument is the coding unit. In the first case, 100 articles (with 5 arguments per article) will lead to 100 codes; in the second case, the same articles will produce 500 codes. The choice of coding unit depends on the sample size as well as on the research question. If the study focuses on argumentation structures, the article will not be chosen as the coding unit. If the study focuses on political coverage in 10 newspapers over the last 50 years, the argument or statement will not be chosen as the coding unit, since otherwise a vast number of cases would have to be coded.
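The effect of the coding unit on the number of codes can be sketched with hypothetical data: 100 articles with 5 arguments each, as in the example in the text. The field names are illustrative only.

```python
# Hypothetical corpus: 100 articles, each containing 5 arguments.
articles = [
    {"article_id": i, "main_issue": "campaign", "arguments": [f"arg{i}.{j}" for j in range(5)]}
    for i in range(100)
]

# Coding unit = article: one row of codes per article.
article_rows = [{"article_id": a["article_id"], "main_issue": a["main_issue"]} for a in articles]

# Coding unit = argument: one row per argument, still linked to its article.
argument_rows = [
    {"article_id": a["article_id"], "argument": arg}
    for a in articles
    for arg in a["arguments"]
]

print(len(article_rows), len(argument_rows))  # 100 500
```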

References:

- Berelson, B. (1952). Content analysis in communication research. Glencoe, IL: Free Press.

- Früh, W. (1998). Inhaltsanalyse: Theorie und Praxis [Content analysis: Theory and practice], 4th edn. Constance: UVK.

- Holsti, O. R. (1969). Content analysis for the social sciences and humanities. Reading, MA: Addison-Wesley.

- Krippendorff, K. (2004). Content analysis: An introduction to its methodology, 2nd edn. Thousand Oaks, CA: Sage.

- Neuendorf, K. A. (2002). The content analysis guidebook. Thousand Oaks, CA: Sage.

- Popping, R. (2000). Computer-assisted text analysis. Thousand Oaks, CA: Sage.

- Riffe, D., Lacy, S., & Fico, F. G. (2005). Analyzing media messages: Using quantitative content analysis in research. Mahwah, NJ: LEA.

- Weber, R. P. (1990). Basic content analysis, 2nd edn. Newbury Park, London, and New Delhi: Sage.

Guide to Communication Research Methodologies: Quantitative, Qualitative and Rhetorical Research

Overview of Communication


Students interested in earning a graduate degree in communication should have at least some interest in understanding communication theories and/or conducting communication research. As students advance from undergraduate to graduate programs, an interesting change takes place — the student is no longer just a repository for knowledge. Rather, the student is expected to learn while also creating knowledge. This new knowledge is largely generated through the development and completion of research in communication studies. Before exploring the different methodologies used to conduct communication research, it is important to have a foundational understanding of the field of communication.

Defining communication is much harder than it sounds. Indeed, scholars have argued about it for years, typically disagreeing on the following points:

- Breadth: How many behaviors and actions should or should not be considered communication.

- Intentionality: Whether the definition includes an intention to communicate.

- Success: Whether someone was able to effectively communicate a message, or merely attempted to do so without the message being received or understood.

However, most definitions share five main components: sender, receiver, context/environment, medium, and message. Broadly speaking, communication research examines these components, asking questions about each of them and seeking to answer those questions.

As students seek to answer their own questions, they follow an approach similar to that of most other researchers. This approach proceeds in five steps: conceptualize; plan and design; implement a methodology; analyze and interpret; and reconceptualize.

- Conceptualize: In the conceptualization process, students develop their area of interest and determine whether their specific questions and hypotheses are worth investigating. If the research has already been done, or there is no practical reason to research the topic, students may need to find a different research topic.

- Plan and Design: During planning and design, students select their methods of evaluation and decide how they will define their variables in a measurable way.

- Implement a Methodology: When implementing a methodology, students collect the data and information they require. For example, if they have decided to conduct a survey study, this is the step in which they use their survey to collect data. If students chose to conduct a rhetorical criticism, this is when they would analyze their text.

- Analyze and Interpret: As students analyze and interpret their data or evidence, they transform the raw findings into meaningful insights. If they chose to conduct interviews, this would be the point in the process where they would evaluate the results of the interviews to find meaning as it relates to the communication phenomena of interest.

- Reconceptualize: During reconceptualization, students ask how their findings speak to a larger body of research: related studies that have already been completed, and research that should be carried out in the future to continue answering new questions.

This final step is crucial, and speaks to an important tenet of communication research: All research contributes to a better overall understanding of communication and moves the field forward by enabling the development of new theories.

In the field of communication, there are three main research methodologies: quantitative, qualitative, and rhetorical. As communication students progress in their careers, they will likely find themselves using one of these far more often than the others.

Quantitative research seeks to establish knowledge through the use of numbers and measurement. Within the overarching area of quantitative research, there are a variety of different methodologies. The most commonly used methodologies are experiments, surveys, content analysis, and meta-analysis. To better understand these research methods, you can explore the following examples:

Experiments: Experiments are an empirical form of research that enable the researcher to study communication in a controlled environment. For example, a researcher might know that there are typical responses people use when they are interrupted during a conversation. However, it might be unknown how the frequency of interruption affects which of those responses people use (e.g., do communicators respond differently when interrupted once every 10 minutes versus once per minute?). An experiment would allow a researcher to create these two environments to test a hypothesis or answer a specific research question. As you can imagine, it would be very time-consuming, and probably impossible, to observe and measure this in the real world. For that reason, an experiment would be perfect for this research inquiry.

Surveys: Surveys are often used to collect information from large groups of people using scales that have been tested for validity and reliability. A researcher might be curious about how a supervisor sharing personal information with his or her subordinate affects the way the subordinate perceives his or her supervisor. The researcher could create a survey in which respondents answer questions about a) the information their supervisors self-disclose and b) their perceptions of their supervisors. The data collected about these two variables could offer interesting insights about this communication. As you might guess, an experiment would not work in this case, because the researcher needs to assess a real relationship and needs insight into the mind of the respondent.

Content Analysis: Content analysis is used to count the number of occurrences of a phenomenon within a source of media (e.g., books, magazines, commercials, or movies). For example, a researcher might be interested in finding out whether people of certain races are underrepresented on television. They might explore this question by counting the number of times people of different races appear in prime-time television and comparing that to the actual proportions in society.
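The comparison described here can be expressed as a ratio of each group's share of on-screen appearances to its share of the population. The group labels, counts, and population shares below are hypothetical placeholders. The ratio is one simple way to summarize the comparison, not the only one.

```python
from collections import Counter


def representation_ratios(appearances: list[str], population_share: dict[str, float]) -> dict[str, float]:
    """Ratio of each group's share of TV appearances to its share of the population.

    A ratio below 1 suggests underrepresentation; above 1, overrepresentation.
    """
    counts = Counter(appearances)
    total = len(appearances)
    return {group: (counts[group] / total) / share for group, share in population_share.items()}


# Hypothetical coded appearances in prime-time programs and hypothetical population shares.
appearances = ["Group A"] * 80 + ["Group B"] * 12 + ["Group C"] * 8
population_share = {"Group A": 0.60, "Group B": 0.25, "Group C": 0.15}

ratios = representation_ratios(appearances, population_share)
for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}")
```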

Meta-Analysis: In this technique, a researcher takes a collection of quantitative studies and analyzes the data as a whole to get a better understanding of a communication phenomenon. For example, a researcher might be curious about how video games affect aggression. This researcher might find that many studies have been done on the topic, sometimes with conflicting results. In their meta-analysis, they could analyze the existing statistics as a whole to get a better understanding of the relationship between the two variables.
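One common way to combine correlations across studies is a fixed-effect, inverse-variance weighted average on Fisher's z scale. The sketch below assumes that each study reports a correlation r and a sample size n. The study values are hypothetical, and many meta-analyses use random-effects models instead.

```python
import math


def pooled_correlation(studies: list[tuple[float, int]]) -> float:
    """Fixed-effect pooled correlation from (r, n) pairs via Fisher's z transformation.

    Each study's z = atanh(r) has variance 1 / (n - 3), so it is weighted by n - 3.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for r, n in studies:
        weight = n - 3
        weighted_sum += weight * math.atanh(r)
        total_weight += weight
    # Back-transform the weighted mean z to the correlation scale.
    return math.tanh(weighted_sum / total_weight)


# Hypothetical studies of video game play and aggression: (correlation, sample size).
studies = [(0.20, 103), (0.05, 203), (-0.02, 53), (0.15, 153)]
print(f"pooled r = {pooled_correlation(studies):.3f}")
```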

Qualitative research is interested in exploring subjects’ perceptions and understandings as they relate to communication. Imagine two researchers who want to understand student perceptions of the basic communication course at a university. The first researcher, a quantitative researcher, might measure absences to understand student perception. The second researcher, a qualitative researcher, might interview students to find out what they like and dislike about a course. The former is based on hard numbers, while the latter is based on human experience and perception.

Qualitative researchers employ a variety of different methodologies. Some of the most popular are interviews, focus groups, and participant observation. To better understand these research methods, you can explore the following examples:

Interviews: An interview typically consists of a researcher having a discussion with a participant based on questions developed by the researcher. For example, a researcher might be interested in how parents exert power over the lives of their children while the children are away at college. The researcher could spend time having conversations with college students about this topic, transcribe the conversations, and then seek to find themes across the different discussions.

Focus Groups: A researcher using this method gathers a group of people with intimate knowledge of a communication phenomenon. For example, if a researcher wanted to understand the experience of couples who are childless by choice, he or she might choose to run a series of focus groups. This format is helpful because it allows participants to build on one another’s experiences, remembering information they may otherwise have forgotten. Focus groups also tend to produce useful information at a higher rate than interviews. That said, some issues are too sensitive for focus groups and lend themselves better to interviews.

Participant Observation: As the name indicates, this method involves the researcher watching participants in their natural environment. In some cases, the participants may not know they are being studied, as the researcher fully immerses himself or herself as a member of the environment. To illustrate participant observation, imagine a researcher curious about how humor is used in healthcare. This researcher might immerse himself or herself in a long-term care facility to observe how healthcare workers use humor when interacting with patients.

Rhetorical research (or rhetorical criticism) is a form of textual analysis wherein the researcher systematically analyzes, interprets, and critiques the persuasive power of messages within a text. This takes on many forms, but all of them involve similar steps: selecting a text, choosing a rhetorical method, analyzing the text, and writing the criticism.

To illustrate, a researcher could be interested in how mass media portrays “good degrees” to prospective college students. To understand this communication, a rhetorical researcher could take 30 articles on the topic from the last year and write a rhetorical essay about the criteria used and the core message argued by the media.

Likewise, a researcher could be interested in how women in management roles are portrayed on television. They could select a group of popular shows and analyze them as the text. This might result in a rhetorical essay about the behaviors displayed by these women and what the text says about women in management roles.

As a final example, one might be interested in how presidents use persuasion during the White House Correspondents' Dinner. A researcher could select several recent presidents and write a rhetorical essay about their speeches and how they employed persuasion in their delivery.

Taking a mixed methods approach results in a research study that uses two or more techniques discussed above. Often, researchers will pair two methods together in the same study examining the same phenomenon. Other times, researchers will use qualitative methods to develop quantitative research, such as a researcher who uses a focus group to discuss the validity of a survey before it is finalized.

The benefit of mixed methods is that it offers a richer picture of a communication phenomenon by gathering data and information in multiple ways. If we explore some of the earlier examples, we can see how mixed methods might result in a better understanding of the communication being studied.

Example 1: In surveys, we discussed a researcher interested in understanding how a supervisor sharing personal information with his or her subordinate affects the way the subordinate perceives his or her supervisor. While a survey could give us some insight into this communication, we could also add interviews with subordinates. Exploring their experiences intimately could give us a better understanding of how they navigate self-disclosure in a relationship based on power differences.

Example 2: In content analysis, we discussed measuring the representation of different races in prime-time television. While we can count the appearances of members of different races and compare that to the composition of the general population, that doesn’t tell us anything about their portrayal. Adding rhetorical criticism, we could talk about how underrepresented groups are portrayed in either a positive or negative light, supporting or defying commonly held stereotypes.

Example 3: In interviews, we saw a researcher who explored how parents could exert power over their college-age children who are away at school. After determining the tactics used by parents, this interview study could have a phase two. In this phase, the researcher could develop scales to measure each tactic and then use those scales to understand how the tactics affect other communication constructs. One could argue, for example, that student anxiety would increase as a parent exerts greater power over that student. A researcher could conduct a hierarchical regression to see how each power tactic affects the levels of anxiety experienced by students.

As you can see, each methodology has its own merits, and they often work well together. As students advance in their study of communication, it is worthwhile to learn various research methods. This allows them to study their interests in greater depth and breadth. Ultimately, they will be able to assemble stronger research studies and answer their questions about communication more effectively.


Quantitative Data Analysis: A Comprehensive Guide

By: Ofem Eteng Published: May 18, 2022


A healthcare giant successfully introduces the most effective drug dosage through rigorous statistical modeling, saving countless lives. A marketing team predicts consumer trends with uncanny accuracy, tailoring campaigns for maximum impact.


These trends and dosages are not just any numbers but are a result of meticulous quantitative data analysis. Quantitative data analysis offers a robust framework for understanding complex phenomena, evaluating hypotheses, and predicting future outcomes.

In this blog, we’ll walk through the concept of quantitative data analysis, the steps required, its advantages, and the methods and techniques that are used in this analysis. Read on!

What is Quantitative Data Analysis?

Quantitative data analysis is a systematic process of examining, interpreting, and drawing meaningful conclusions from numerical data. It involves the application of statistical methods, mathematical models, and computational techniques to understand patterns, relationships, and trends within datasets.

Quantitative data analysis methods typically work with algorithms, mathematical analysis tools, and software to gain insights from the data, answering questions such as how many, how often, and how much. Data for quantitative data analysis is usually collected from closed-ended surveys, questionnaires, polls, etc. The data can also be obtained from sales figures, email click-through rates, numbers of website visitors, and percentage revenue increases.

Quantitative Data Analysis vs Qualitative Data Analysis

When we talk about data, we usually think about the patterns, relationships, and connections within datasets; in short, about analyzing the data. When it comes to data analysis, there are broadly two types: Quantitative Data Analysis and Qualitative Data Analysis.

Quantitative data analysis revolves around numerical data and statistics, which suit things that can be counted or measured. In contrast, qualitative data analysis deals with descriptive and subjective information about things that can be observed but not measured.

Let us differentiate between Quantitative Data Analysis and Qualitative Data Analysis for a better understanding.

Data Preparation Steps for Quantitative Data Analysis

Quantitative data has to be gathered and cleaned before you can analyze it. Below are the steps to prepare data for quantitative analysis:

- Step 1: Data Collection

Before beginning the analysis process, you need data. Data can be collected through rigorous quantitative research, which includes methods such as interviews, focus groups, surveys, and questionnaires.

- Step 2: Data Cleaning

Once the data is collected, begin the data cleaning process by scanning through the entire dataset for duplicates, errors, and omissions. Keep a close eye on outliers (data points that are significantly different from the majority of the dataset), because they can skew your analysis results if they are not investigated and handled appropriately.

This data-cleaning process ensures data accuracy, consistency, and relevance before analysis.
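As a rough illustration of Step 2, here is a minimal Python sketch that uses only the standard library. It assumes the data arrives as a list of dictionaries; the `clean_records` function, the field names, and the sample records are all hypothetical. It applies the common 1.5 x IQR rule of thumb to flag outliers and returns them for review rather than deleting them automatically.

```python
import statistics

def clean_records(records, field):
    """Drop duplicate records and records missing `field`, then flag outliers.

    `records` is a list of dicts and `field` names the numeric column to check.
    Outliers are returned separately for review rather than silently deleted.
    """
    seen = set()
    cleaned = []
    for rec in records:
        fingerprint = tuple(sorted(rec.items()))  # hashable copy of the whole record
        if fingerprint in seen or rec.get(field) is None:
            continue  # skip exact duplicates and omissions
        seen.add(fingerprint)
        cleaned.append(rec)

    # 1.5 x IQR rule: values far outside the middle 50% of the data are flagged.
    values = [rec[field] for rec in cleaned]
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    outliers = [rec for rec in cleaned if not low <= rec[field] <= high]
    return cleaned, outliers

raw = [
    {"id": 1, "score": 10},
    {"id": 2, "score": 12},
    {"id": 2, "score": 12},    # exact duplicate
    {"id": 3, "score": None},  # omission
    {"id": 4, "score": 11},
    {"id": 5, "score": 13},
    {"id": 6, "score": 95},    # suspiciously large
]
cleaned, outliers = clean_records(raw, "score")
print([r["id"] for r in cleaned])   # [1, 2, 4, 5, 6]
print([r["id"] for r in outliers])  # [6]
```

Whether a flagged value is a typo to fix or a genuine extreme case to keep is a judgment the analyst still has to make.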

- Step 3: Data Analysis and Interpretation

Now that you have collected and cleaned your data, it is time to carry out the quantitative analysis. There are two methods of quantitative data analysis, which we will discuss in the next section.


Now that you are familiar with what quantitative data analysis is and how to prepare your data for analysis, the focus will shift to the purpose of this article, which is to describe the methods and techniques of quantitative data analysis.

Methods and Techniques of Quantitative Data Analysis

Broadly, quantitative data analysis employs two techniques to extract meaningful insights from datasets. The first is descriptive statistics, which summarizes and portrays essential features of a dataset, such as the mean, median, and standard deviation.

Inferential statistics, the second method, extrapolates from a sample dataset to make broader inferences and predictions about an entire population, using techniques such as hypothesis testing and regression analysis.

An in-depth explanation of both the methods is provided below:

- Descriptive Statistics

- Inferential Statistics

1) Descriptive Statistics

Descriptive statistics, as the name implies, are used to describe a dataset. They help you understand the details of your data by summarizing it and finding patterns in the specific data sample. They provide absolute numbers obtained from a sample but do not necessarily explain the rationale behind the numbers, and they are mostly used for analyzing single variables. The methods used in descriptive statistics include:

- Mean: This calculates the numerical average of a set of values.

- Median: This is used to get the midpoint of a set of values when the numbers are arranged in numerical order.

- Mode: This is used to find the most commonly occurring value in a dataset.

- Percentage: This is used to express how a value or group of respondents within the data relates to a larger group of respondents.

- Frequency: This indicates the number of times a value is found.

- Range: This is the difference between the highest and lowest values in a dataset.

- Standard Deviation: This indicates how dispersed a set of numbers is; in other words, it shows how close the numbers are to the mean.

- Skewness: This indicates how symmetrical a set of numbers is, showing whether they cluster into a smooth bell curve shape in the middle of the graph or skew towards the left or right.
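The measures above can be computed in a few lines of Python with the standard `statistics` module. This is a sketch rather than the only way to do it: `describe` is a hypothetical helper, the input list is made up, and the skewness line uses the adjusted Fisher-Pearson formula, which is one of several skewness definitions in use.

```python
import statistics
from collections import Counter

def describe(values):
    """Return common descriptive statistics for a list of numbers (needs 3+ values)."""
    n = len(values)
    mean = statistics.mean(values)
    counts = Counter(values)

    # Adjusted Fisher-Pearson sample skewness, the variant many statistics packages report.
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    skewness = (m3 / m2 ** 1.5) * (n * (n - 1)) ** 0.5 / (n - 2)

    return {
        "mean": mean,
        "median": statistics.median(values),
        "mode": statistics.multimode(values),                       # every most-common value
        "frequency": dict(counts),                                   # value -> count
        "percentage": {v: 100 * c / n for v, c in counts.items()},  # share of all values
        "range": max(values) - min(values),                          # highest minus lowest
        "std_dev": statistics.stdev(values),                         # sample SD (n - 1 denominator)
        "skewness": skewness,                                        # > 0 means a longer right tail
    }

stats = describe([2, 4, 4, 4, 5, 5, 7, 9])
# mean = 5, median = 4.5, mode = [4], range = 7, skewness is positive
print(stats)
```

The skewness formula divides by zero if every value is identical, so a real implementation would guard against that case.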

2) Inferential Statistics

In quantitative analysis, the aim is to turn raw numbers into meaningful insight. Descriptive statistics explain the details of a specific dataset using numbers, but they do not explain the motives behind those numbers; hence the need for further analysis using inferential statistics.

Inferential statistics aim to make predictions or highlight possible outcomes for a wider population, building on the sample data already summarized with descriptive statistics. They are used to generalize results, compare groups, show relationships between multiple variables, and test hypotheses that predict changes or differences.

There are various statistical analysis methods used within inferential statistics; a few are discussed below.

- Cross Tabulations: Cross tabulation, or crosstab, is used to show the relationship between two variables and is often used to compare results by demographic group. It arranges the data in a basic table, with the categories of one variable as rows and the categories of the other as columns, so that each cell counts the cases that fall into both categories. Crosstabs help reveal the nuances of a dataset and the factors that may influence a data point.

- Regression Analysis: Regression analysis estimates the relationship between a set of variables. It models how a dependent variable (the variable or outcome you want to measure or predict) relates to any number of independent variables (factors that may impact the dependent variable). The purpose of regression analysis is therefore to estimate how one or more variables might affect a dependent variable, in order to identify trends and patterns, make predictions, and forecast possible future trends. There are many types of regression analysis, and the model you choose will be determined by the type of data you have for the dependent variable. The types include linear regression, non-linear regression, binary logistic regression, and others.

- Monte Carlo Simulation: Monte Carlo simulation, also known as the Monte Carlo method, is a computerized technique that uses repeated random sampling to generate models of possible outcomes and their probability distributions. It considers a range of possible outcomes and then estimates how likely each outcome is to occur. Data analysts use it to perform advanced risk analyses that help forecast future events and inform decisions.

- Analysis of Variance (ANOVA): This is used to test whether two or more groups differ from each other. It compares the means of the groups, which allows the analysis of multiple groups at once.

- Factor Analysis: A large number of variables can be reduced to a smaller number of factors using the factor analysis technique. It works on the principle that multiple separate observable variables correlate with each other because they are all associated with an underlying construct. It helps reduce large datasets to a smaller, more manageable set of variables.

- Cohort Analysis: Cohort analysis is a subset of behavioral analytics. Rather than looking at all users as one unit, it breaks the data down into related groups, or cohorts, whose members usually share common characteristics or experiences within a defined period (for example, customers who signed up in the same month).

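- MaxDiff Analysis: MaxDiff (maximum difference scaling) is a quantitative data analysis method used to gauge customers' purchase preferences and determine which parameters rank higher than others in the purchase decision. Respondents are repeatedly shown small sets of items and asked to pick the most and least important one in each set.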

- Cluster Analysis: Cluster analysis is a technique used to identify structures within a dataset. It aims to sort data points into groups that are internally similar and externally different; that is, data points within a cluster look like each other and differ from data points in other clusters.

- Time Series Analysis: This is a statistical analytic technique used to identify trends and cycles over time. It is simply the measurement of the same variables at different times, like weekly and monthly email sign-ups, to uncover trends, seasonality, and cyclic patterns. By doing this, the data analyst can forecast how variables of interest may fluctuate in the future.

- SWOT analysis: This is a quantitative data analysis method that assigns numerical values to indicate the strengths, weaknesses, opportunities, and threats of an organization, product, or service, giving a clearer picture of the competition and fostering better business strategies.
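Two of the techniques above are easy to sketch in plain Python. The code below is illustrative only: `crosstab`, `monte_carlo_probability`, and the survey answers are hypothetical. The Monte Carlo part uses a toy dice problem whose exact answer (6/36) is known, so you can check that repeated random sampling converges on it.

```python
import random
from collections import Counter

def crosstab(row_values, col_values):
    """Cross tabulation: count how often each (row, column) category pair occurs."""
    counts = Counter(zip(row_values, col_values))
    columns = sorted(set(col_values))
    return {r: {c: counts[(r, c)] for c in columns} for r in sorted(set(row_values))}

def monte_carlo_probability(simulate, event, trials=100_000, seed=42):
    """Monte Carlo: estimate P(event) by running a random simulation many times."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    hits = sum(1 for _ in range(trials) if event(simulate(rng)))
    return hits / trials

def roll_two_dice(rng):
    """Toy model with a known answer: the total of two fair six-sided dice."""
    return rng.randint(1, 6) + rng.randint(1, 6)

# Hypothetical survey answers, one entry per respondent.
age_group = ["18-34", "18-34", "35+", "35+", "35+"]
bought = ["yes", "no", "yes", "yes", "no"]
table = crosstab(age_group, bought)
print(table)  # {'18-34': {'no': 1, 'yes': 1}, '35+': {'no': 1, 'yes': 2}}

# The exact answer is 6/36 (about 0.167); the estimate should land close to it.
p = monte_carlo_probability(roll_two_dice, lambda total: total >= 10)
print(round(p, 3))
```

In a real risk analysis, `roll_two_dice` would be replaced by a model of the uncertain quantities (costs, demand, durations), each drawn from an assumed distribution.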

How to Choose the Right Method for your Analysis?

Choosing between descriptive and inferential statistics can often be confusing. You should consider the following factors before choosing the right method for your quantitative data analysis:

1. Type of Data

The first consideration in data analysis is understanding the type of data you have. Different statistical methods have specific requirements based on these data types, and using the wrong method can render results meaningless. The choice of statistical method should align with the nature and distribution of your data to ensure meaningful and accurate analysis.

2. Your Research Questions

When deciding on statistical methods, it’s crucial to align them with your specific research questions and hypotheses. The nature of your questions will influence whether descriptive statistics alone, which reveal sample attributes, are sufficient or if you need both descriptive and inferential statistics to understand group differences or relationships between variables and make population inferences.

Pros and Cons of Quantitative Data Analysis

Pros

1. Objectivity and Generalizability:

- Quantitative data analysis offers objective, numerical measurements, minimizing bias and personal interpretation.

- Results can often be generalized to larger populations, making them applicable to broader contexts.

Example: A study using quantitative data analysis to measure student test scores can objectively compare performance across different schools and demographics, leading to generalizable insights about educational strategies.

2. Precision and Efficiency:

- Statistical methods provide precise numerical results, allowing for accurate comparisons and predictions.

- Large datasets can be analyzed efficiently with the help of computer software, saving time and resources.

Example: A marketing team can use quantitative data analysis to precisely track click-through rates and conversion rates on different ad campaigns, quickly identifying the most effective strategies for maximizing customer engagement.

3. Identification of Patterns and Relationships:

- Statistical techniques reveal hidden patterns and relationships between variables that might not be apparent through observation alone.

- This can lead to new insights and understanding of complex phenomena.

Example: A medical researcher can use quantitative analysis to pinpoint correlations between lifestyle factors and disease risk, aiding in the development of prevention strategies.

Cons

1. Limited Scope:

- Quantitative analysis focuses on quantifiable aspects of a phenomenon, potentially overlooking important qualitative nuances, such as emotions, motivations, or cultural contexts.

Example: A survey measuring customer satisfaction with numerical ratings might miss key insights about the underlying reasons for their satisfaction or dissatisfaction, which could be better captured through open-ended feedback.

2. Oversimplification:

- Reducing complex phenomena to numerical data can lead to oversimplification and a loss of richness in understanding.

Example: Analyzing employee productivity solely through quantitative metrics like hours worked or tasks completed might not account for factors like creativity, collaboration, or problem-solving skills, which are crucial for overall performance.

3. Potential for Misinterpretation:

- Statistical results can be misinterpreted if not analyzed carefully and with appropriate expertise.

- The choice of statistical methods and assumptions can significantly influence results.

This blog discusses the steps, methods, and techniques of quantitative data analysis. It also gives insights into the methods of data collection, the type of data one should work with, and the pros and cons of such analysis.


Ofem is a freelance writer specializing in data-related topics, with expertise in translating complex concepts and a focus on data science, analytics, and emerging technologies.


Quantitative Data Analysis 101

The lingo, methods and techniques, explained simply.

By: Derek Jansen (MBA) and Kerryn Warren (PhD) | December 2020

Quantitative data analysis is one of those things that often strikes fear in students. It’s totally understandable – quantitative analysis is a complex topic, full of daunting lingo, like medians, modes, correlation and regression. Suddenly we’re all wishing we’d paid a little more attention in math class…

The good news is that while quantitative data analysis is a mammoth topic, gaining a working understanding of the basics isn’t that hard, even for those of us who avoid numbers and math. In this post, we’ll break quantitative analysis down into simple, bite-sized chunks so you can approach your research with confidence.

Overview: Quantitative Data Analysis 101

- What (exactly) is quantitative data analysis?

- When to use quantitative analysis

- How quantitative analysis works

- The two “branches” of quantitative analysis

- Descriptive statistics 101

- Inferential statistics 101

- How to choose the right quantitative methods

- Recap & summary

What is quantitative data analysis?

Despite being a mouthful, quantitative data analysis simply means analysing data that is numbers-based – or data that can be easily “converted” into numbers without losing any meaning.

For example, category-based variables like gender, ethnicity, or native language could all be “converted” into numbers without losing meaning; English could equal 1, French 2, and so on.

This contrasts with qualitative data analysis, where the focus is on words, phrases and expressions that can’t be reduced to numbers. If you’re interested in learning about qualitative analysis, check out our post and video here.

What is quantitative analysis used for?

Quantitative analysis is generally used for three purposes.

- Firstly, it’s used to measure differences between groups. For example, the popularity of different clothing colours or brands.

- Secondly, it’s used to assess relationships between variables. For example, the relationship between weather temperature and voter turnout.

- And thirdly, it’s used to test hypotheses in a scientifically rigorous way. For example, a hypothesis about the impact of a certain vaccine.

Again, this contrasts with qualitative analysis, which can be used to analyse people’s perceptions and feelings about an event or situation. In other words, things that can’t be reduced to numbers.

How does quantitative analysis work?

Well, since quantitative data analysis is all about analysing numbers, it’s no surprise that it involves statistics. Statistical analysis methods form the engine that powers quantitative analysis, and these methods can vary from pretty basic calculations (for example, averages and medians) to more sophisticated analyses (for example, correlations and regressions).

Sounds like gibberish? Don’t worry. Importantly, you don’t need to be a statistician or math whiz to pull off a good quantitative analysis. We’ll break down all the technical mumbo jumbo in this post.

Need a helping hand?

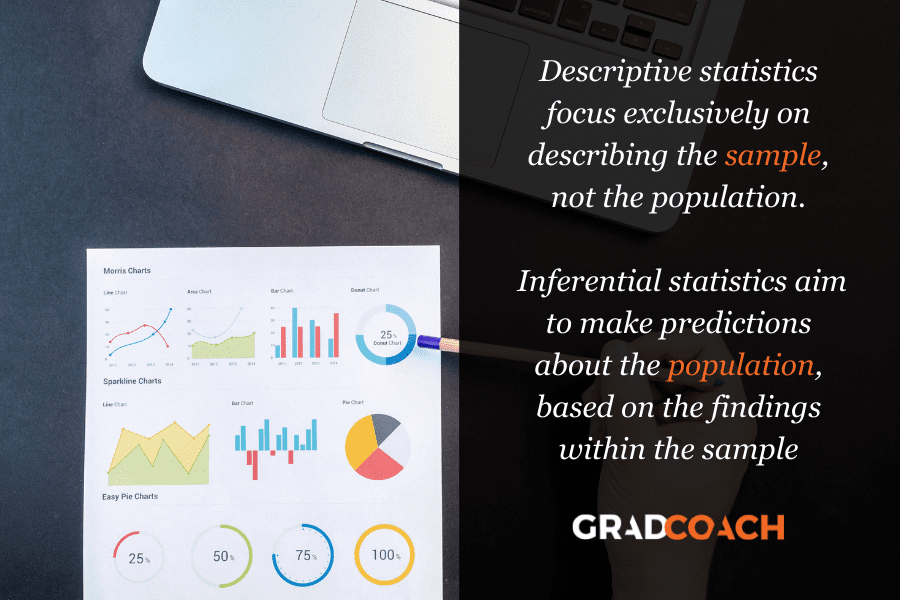

As I mentioned, quantitative analysis is powered by statistical analysis methods. There are two main “branches” of statistical methods that are used – descriptive statistics and inferential statistics. In your research, you might only use descriptive statistics, or you might use a mix of both, depending on what you’re trying to figure out. In other words, depending on your research questions, aims and objectives. I’ll explain how to choose your methods later.

So, what are descriptive and inferential statistics?

Well, before I can explain that, we need to take a quick detour to explain some lingo. To understand the difference between these two branches of statistics, you need to understand two important words. These words are population and sample.

First up, population. In statistics, the population is the entire group of people (or animals or organisations or whatever) that you’re interested in researching. For example, if you were interested in researching Tesla owners in the US, then the population would be all Tesla owners in the US.

However, it’s extremely unlikely that you’re going to be able to interview or survey every single Tesla owner in the US. Realistically, you’ll likely only get access to a few hundred, or maybe a few thousand owners using an online survey. This smaller group of accessible people whose data you actually collect is called your sample.

So, to recap – the population is the entire group of people you’re interested in, and the sample is the subset of the population that you can actually get access to. In other words, the population is the full chocolate cake, whereas the sample is a slice of that cake.

So, why is this sample-population thing important?

Well, descriptive statistics focus on describing the sample, while inferential statistics aim to make predictions about the population, based on the findings within the sample. In other words, we use one group of statistical methods – descriptive statistics – to investigate the slice of cake, and another group of methods – inferential statistics – to draw conclusions about the entire cake. There I go with the cake analogy again…

With that out of the way, let’s take a closer look at each of these branches in more detail.
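To make the slice-and-cake idea concrete, the following sketch draws a random sample from a made-up population, describes the sample with its mean, and then makes a simple inference about the population mean. The population values, the seed, and the `estimate_mean` helper are assumptions for illustration. The interval uses the normal approximation (mean ± 1.96 standard errors), which is only reasonable for fairly large samples.

```python
import math
import random
import statistics

def estimate_mean(sample, z=1.96):
    """Describe the sample mean and infer a rough 95% interval for the population mean."""
    mean = statistics.mean(sample)                          # descriptive: the slice
    se = statistics.stdev(sample) / math.sqrt(len(sample))  # standard error of the mean
    return mean, (mean - z * se, mean + z * se)             # inferential: the whole cake

rng = random.Random(7)
population = [rng.randint(18, 80) for _ in range(10_000)]  # made-up "population" of ages
sample = rng.sample(population, 300)                       # the part you can actually survey

sample_mean, (low, high) = estimate_mean(sample)
print(f"sample mean {sample_mean:.1f}, 95% CI {low:.1f} to {high:.1f}")
print(f"true population mean {statistics.mean(population):.1f}")
```

In real research the second print is impossible, since the population mean is exactly what you don't know; the simulation just lets you see how close the sample gets.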

Branch 1: Descriptive Statistics

Descriptive statistics serve a simple but critically important role in your research – to describe your data set – hence the name. In other words, they help you understand the details of your sample. Unlike inferential statistics (which we’ll get to soon), descriptive statistics don’t aim to make inferences or predictions about the entire population – they’re purely interested in the details of your specific sample.

When you’re writing up your analysis, descriptive statistics are the first set of stats you’ll cover, before moving on to inferential statistics. But, that said, depending on your research objectives and research questions, they may be the only type of statistics you use. We’ll explore that a little later.

So, what kind of statistics are usually covered in this section?

Some common statistics used in this branch include the following:

- Mean – this is simply the mathematical average of a range of numbers.

- Median – this is the midpoint in a range of numbers when the numbers are arranged in numerical order. If the data set contains an odd number of values, then the median is the number right in the middle of the set. If it contains an even number of values, then the median is the midpoint between the two middle numbers.

- Mode – this is simply the most commonly occurring number in the data set.

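- Standard deviation – this indicates how dispersed a range of numbers is; in other words, how close all the numbers are to the mean (the average). In cases where most of the numbers are quite close to the average, the standard deviation will be relatively low. Conversely, in cases where the numbers are scattered all over the place, the standard deviation will be relatively high.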

- Skewness. As the name suggests, skewness indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph, or do they skew to the left or right?
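Here is a small Python sketch of two of these ideas: the odd/even rule for the median, and how the standard deviation separates tightly clustered data from scattered data. The `median` function and both data sets are hypothetical; in practice, `statistics.median` already does this for you.

```python
import statistics

def median(values):
    """Median by hand: the middle value, or the midpoint of the two middle values."""
    ordered = sorted(values)
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]                       # odd count: the single middle value
    return (ordered[mid - 1] + ordered[mid]) / 2  # even count: average of the middle two

print(median([7, 1, 3]))     # 3
print(median([7, 1, 3, 5]))  # 4.0

# Two made-up data sets with the same mean (50) but very different spreads.
tight = [48, 49, 50, 51, 52]
scattered = [10, 30, 50, 70, 90]
print(statistics.stdev(tight))      # about 1.58: numbers close to the average
print(statistics.stdev(scattered))  # about 31.62: numbers all over the place
```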

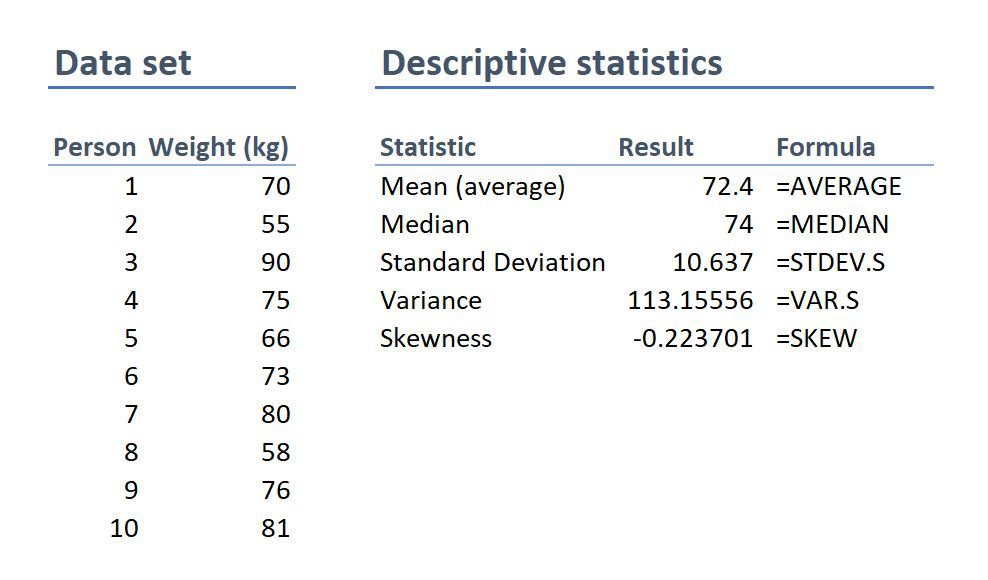

Feeling a bit confused? Let’s look at a practical example using a small data set.

On the left-hand side is the data set. This details the bodyweight of a sample of 10 people. On the right-hand side, we have the descriptive statistics. Let’s take a look at each of them.

First, we can see that the mean weight is 72.4 kilograms. In other words, the average weight across the sample is 72.4 kilograms. Straightforward.

Next, we can see that the median is very similar to the mean (the average). This suggests that this data set has a reasonably symmetrical distribution (in other words, a relatively smooth, centred distribution of weights, clustered towards the centre).

In terms of the mode, there is no mode in this data set. This is because each number is present only once and so there cannot be a “most common number”. If there were two people who were both 65 kilograms, for example, then the mode would be 65.

Next up is the standard deviation. A value of 10.6 indicates that there’s quite a wide spread of numbers. We can see this quite easily by looking at the numbers themselves, which range from 55 to 90, which is quite a stretch from the mean of 72.4.

And lastly, the skewness of -0.2 tells us that the data is very slightly negatively skewed. This makes sense since the mean and the median are slightly different.

As you can see, these descriptive statistics give us some useful insight into the data set. Of course, this is a very small data set (only 10 records), so we can’t read into these statistics too much. Also, keep in mind that this is not a list of all possible descriptive statistics – just the most common ones.

But why do all of these numbers matter?

While these descriptive statistics are all fairly basic, they’re important for a few reasons:

- Firstly, they help you get both a macro and micro-level view of your data. In other words, they help you understand both the big picture and the finer details.

- Secondly, they help you spot potential errors in the data – for example, if an average is way higher than you’d expect, or responses to a question are highly varied, this can act as a warning sign that you need to double-check the data.

- And lastly, these descriptive statistics help inform which inferential statistical techniques you can use, as those techniques depend on the skewness (in other words, the symmetry and normality) of the data.

Simply put, descriptive statistics are really important, even though the statistical techniques used are fairly basic. All too often at Grad Coach, we see students skimming over the descriptives in their eagerness to get to the more exciting inferential methods, and then landing up with some very flawed results.

Don’t be a sucker – give your descriptive statistics the love and attention they deserve!

Branch 2: Inferential Statistics

As I mentioned, while descriptive statistics are all about the details of your specific data set – your sample – inferential statistics aim to make inferences about the population. In other words, you’ll use inferential statistics to make predictions about what you’d expect to find in the full population.

What kind of predictions, you ask? Well, there are two common types of predictions that researchers try to make using inferential stats:

- Firstly, predictions about differences between groups – for example, height differences between children grouped by their favourite meal or gender.

- And secondly, relationships between variables – for example, the relationship between body weight and the number of hours a week a person does yoga.

In other words, inferential statistics (when done correctly) allow you to connect the dots and make predictions about what you expect to see in the real world population, based on what you observe in your sample data. For this reason, inferential statistics are used for hypothesis testing – in other words, to test hypotheses that predict changes or differences.

Of course, when you’re working with inferential statistics, the composition of your sample is really important. In other words, if your sample doesn’t accurately represent the population you’re researching, then your findings won’t necessarily be very useful.

For example, if your population of interest is a mix of 50% male and 50% female, but your sample is 80% male, you can’t make inferences about the population based on your sample, since it’s not representative. This area of statistics is called sampling, but we won’t go down that rabbit hole here (it’s a deep one!) – we’ll save that for another post.
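One common way to check whether a sample’s make-up matches a known population split is a chi-square goodness-of-fit test. The sketch below assumes a sample of 100 people (the example above only gives percentages) and computes the test statistic by hand; `chi_square_gof` is a hypothetical helper, and 3.841 is the standard 5% critical value for one degree of freedom.

```python
def chi_square_gof(observed, expected_share):
    """Chi-square goodness-of-fit statistic: sample counts vs population shares."""
    total = sum(observed.values())
    statistic = 0.0
    for group, count in observed.items():
        expected = total * expected_share[group]  # count we'd expect if representative
        statistic += (count - expected) ** 2 / expected
    return statistic

# Assumed: 100 respondents, 80 male and 20 female; the population is taken to be 50/50.
stat = chi_square_gof({"male": 80, "female": 20}, {"male": 0.5, "female": 0.5})
print(stat)  # 36.0
# With 2 groups there is 1 degree of freedom, and the 5% critical value is about 3.841,
# so a sample this lopsided is very unlikely to be a random draw from a 50/50 population.
print(stat > 3.841)  # True
```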

What statistics are usually used in this branch?

There are many, many different statistical analysis methods within the inferential branch and it’d be impossible for us to discuss them all here. So we’ll just take a look at some of the most common inferential statistical methods so that you have a solid starting point.

First up are t-tests. T-tests compare the means (the averages) of two groups of data to assess whether they’re statistically significantly different. In other words, is the gap between the two means bigger than you’d expect from random sampling variation alone?

This type of testing is very useful for understanding just how similar or different two groups of data are. For example, you might want to compare the mean blood pressure between two groups of people – one that has taken a new medication and one that hasn’t – to assess whether they are significantly different.
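As a sketch of what a t-test computes, the code below calculates Welch’s t statistic (a version of the t-test that doesn’t assume equal variances) for two made-up groups of blood pressure readings. The function name and the readings are hypothetical, and turning t into a p-value requires the t distribution, which the Python standard library doesn’t provide.

```python
import math
import statistics

def welch_t_test(a, b):
    """Welch's t statistic and degrees of freedom for two independent groups."""
    var_a = statistics.variance(a) / len(a)  # squared standard error, group a
    var_b = statistics.variance(b) / len(b)  # squared standard error, group b
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(var_a + var_b)
    # Welch-Satterthwaite approximation for the degrees of freedom
    df = (var_a + var_b) ** 2 / (var_a ** 2 / (len(a) - 1) + var_b ** 2 / (len(b) - 1))
    return t, df

# Made-up systolic blood pressure readings (mmHg)
medication = [120, 122, 118, 121, 119]
no_medication = [130, 132, 128, 131, 129]
t, df = welch_t_test(medication, no_medication)
print(t, df)  # -10.0 8.0
```

If SciPy is installed, `scipy.stats.ttest_ind(medication, no_medication, equal_var=False)` runs the same Welch test and also returns the p-value.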

Kicking things up a level, we have ANOVA, which stands for “analysis of variance”. This test is similar to a t-test in that it compares the means of various groups, but ANOVA allows you to analyse multiple groups, not just two. So it’s basically a t-test on steroids…
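The F statistic behind a one-way ANOVA compares how far the group means sit from the overall mean against how much the values vary within each group. Here is a minimal standard-library sketch with a hypothetical `one_way_anova` helper and toy data:

```python
import statistics

def one_way_anova(*groups):
    """One-way ANOVA: F statistic and its (between, within) degrees of freedom."""
    all_values = [x for group in groups for x in group]
    grand_mean = statistics.mean(all_values)
    group_means = [statistics.mean(group) for group in groups]
    k, n = len(groups), len(all_values)

    # Spread of group means around the grand mean vs. spread inside each group
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    f = (ss_between / (k - 1)) / (ss_within / (n - k))
    return f, (k - 1, n - k)

f, df = one_way_anova([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(f, df)  # 27.0 (2, 6)
```

A large F means the groups differ more than within-group noise would suggest; `scipy.stats.f_oneway` performs the same test and also reports a p-value.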

Next, we have correlation analysis. This type of analysis assesses the relationship between two variables. In other words, if one variable increases, does the other variable also increase, decrease or stay the same? For example, if the average temperature goes up, do average ice cream sales increase too? We’d intuitively expect some sort of relationship between these two variables, but correlation analysis allows us to measure that relationship scientifically.
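Correlation analysis usually reports Pearson’s r, which runs from -1 (a perfect negative relationship) through 0 (no linear relationship) to +1 (a perfect positive relationship). The sketch below computes it by hand for made-up temperature and ice cream sales figures; `pearson_r` is a hypothetical helper, and on Python 3.10+ `statistics.correlation` gives the same result.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))  # co-movement
    sxx = sum((a - mean_x) ** 2 for a in x)                        # spread of x
    syy = sum((b - mean_y) ** 2 for b in y)                        # spread of y
    return sxy / math.sqrt(sxx * syy)

# Made-up daily figures, not real data
temperature = [18, 21, 24, 27, 30, 33]  # degrees Celsius
ice_cream_sales = [120, 135, 160, 170, 195, 210]
r = pearson_r(temperature, ice_cream_sales)
print(round(r, 3))  # close to +1: a strong positive correlation
```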

Lastly, we have regression analysis. This is quite similar to correlation in that it assesses the relationship between variables, but it goes a step further by modelling how one variable changes as the others change, which lets you make predictions. On its own, however, regression doesn’t prove cause and effect: does the one variable actually cause the other one to move, or do they just happen to move together thanks to another force? Answering that depends on your research design; just because two variables correlate doesn’t necessarily mean that one causes the other.
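A simple linear regression fits a straight line through the data, which can then be used for prediction. This least-squares sketch reuses made-up temperature and sales figures; `fit_line` and `r_squared` are hypothetical helpers (on Python 3.10+, `statistics.linear_regression` returns the same slope and intercept). A good fit describes an association, not proof of causation.

```python
def fit_line(x, y):
    """Ordinary least squares with one predictor: returns (slope, intercept)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    slope = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sum(
        (a - mean_x) ** 2 for a in x
    )
    intercept = mean_y - slope * mean_x  # the line passes through (mean_x, mean_y)
    return slope, intercept

def r_squared(x, y, slope, intercept):
    """Share of the variation in y that the fitted line accounts for."""
    mean_y = sum(y) / len(y)
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return 1 - ss_res / ss_tot

temperature = [18, 21, 24, 27, 30, 33]
ice_cream_sales = [120, 135, 160, 170, 195, 210]
slope, intercept = fit_line(temperature, ice_cream_sales)
predicted = intercept + slope * 35  # forecast for a hypothetical 35-degree day
print(round(slope, 2), round(intercept, 2), round(predicted, 1))
```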

Stats overload…

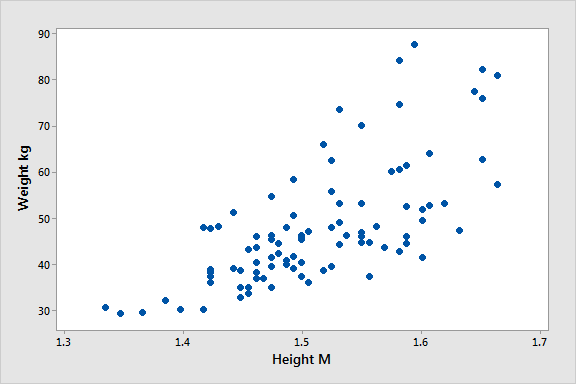

I hear you. To make this all a little more tangible, let’s take a look at an example of a correlation in action.

Here’s a scatter plot demonstrating the correlation (relationship) between weight and height. Intuitively, we’d expect there to be some relationship between these two variables, which is what we see in this scatter plot. In other words, the results tend to cluster together in a diagonal line from bottom left to top right.

As I mentioned, these are just a handful of inferential techniques – there are many, many more. Importantly, each statistical method has its own assumptions and limitations.

For example, some methods only work with normally distributed (parametric) data, while other methods are designed specifically for non-parametric data. And that’s exactly why descriptive statistics are so important – they’re the first step to knowing which inferential techniques you can and can’t use.

How to choose the right analysis method

To choose the right statistical methods, you need to think about two important factors:

- The type of quantitative data you have (specifically, level of measurement and the shape of the data). And,

- Your research questions and hypotheses

Let’s take a closer look at each of these.

Factor 1 – Data type

The first thing you need to consider is the type of data you’ve collected (or the type of data you will collect). By data types, I’m referring to the four levels of measurement – namely, nominal, ordinal, interval and ratio.

Why does this matter?

Well, because different statistical methods and techniques require different types of data. This is one of the “assumptions” I mentioned earlier – every method has its assumptions regarding the type of data.

For example, some techniques work with categorical data (for example, yes/no type questions, or gender or ethnicity), while others work with continuous numerical data (for example, age, weight or income) – and, of course, some work with multiple data types.

If you try to use a statistical method that doesn’t support the data type you have, your results will be largely meaningless. So, make sure that you have a clear understanding of what types of data you’ve collected (or will collect). Once you have this, you can then check which statistical methods support your data types.

If you haven’t collected your data yet, you can work in reverse and look at which statistical method would give you the most useful insights, and then design your data collection strategy to collect the correct data types.

Another important factor to consider is the shape of your data. Specifically, does it have a normal distribution (in other words, is it a bell-shaped curve, centred in the middle) or is it very skewed to the left or the right? Again, different statistical techniques work for different shapes of data – some are designed for symmetrical data while others are designed for skewed data.

This is another reminder of why descriptive statistics are so important – they tell you all about the shape of your data.
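One descriptive statistic that captures shape is skewness. The sketch below computes the uncorrected moment coefficient g1 (third central moment divided by the second central moment raised to the power 1.5) using only the standard library. The data are hypothetical. SciPy's `scipy.stats.skew` computes this same coefficient by default, while some statistics packages report a bias-adjusted version, so their numbers may differ slightly for small samples.

```python
from statistics import fmean


def skewness(values):
    """Sample skewness as the moment coefficient g1 = m3 / m2**1.5.

    About 0 for symmetrical data, positive when there is a long tail to the
    right, negative when there is a long tail to the left.
    """
    n = len(values)
    centre = fmean(values)
    m2 = sum((x - centre) ** 2 for x in values) / n  # second central moment
    m3 = sum((x - centre) ** 3 for x in values) / n  # third central moment
    if m2 == 0:
        raise ValueError("skewness is undefined when all values are equal")
    return m3 / m2 ** 1.5


# Hypothetical data: a symmetrical set and an income-like set with one large value.
symmetrical = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 1, 2, 2, 3, 10]

print(round(skewness(symmetrical), 3))   # 0 for perfectly symmetrical data
print(round(skewness(right_skewed), 3))  # positive: long right tail
```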

Factor 2 – Your research questions

The next thing you need to consider is your specific research questions, as well as your hypotheses (if you have some). The nature of your research questions and research hypotheses will heavily influence which statistical methods and techniques you should use.

If you're only interested in understanding the attributes of your sample (as opposed to the entire population), then descriptive statistics are probably all you need. For example, you may simply want to report the means (averages) and medians (middle values) of variables in a group of people.
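For instance, describing a sample can be as simple as the following sketch with Python's built-in `statistics` module. The ages are made-up illustrative data, not results from any study.

```python
from statistics import mean, median

# Hypothetical ages of eight survey respondents (illustrative only).
ages = [23, 25, 25, 29, 31, 34, 38, 67]

average_age = mean(ages)    # sum of values divided by their count
middle_age = median(ages)   # middle value of the sorted data (the average of
                            # the two middle values when the count is even)

print("mean:", average_age)
print("median:", middle_age)
```

Here the mean (34) sits above the median (30) because the one older respondent pulls the average upwards, a small example of how skew shows up in descriptive statistics.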

On the other hand, if you aim to understand differences between groups or relationships between variables and to infer or predict outcomes in the population, then you’ll likely need both descriptive statistics and inferential statistics.
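As a taste of inferential statistics, the sketch below computes Welch's t statistic, a variant of the t-test for comparing two group means that does not assume equal variances. The function name `welch_t` and the scores are hypothetical. Turning t into a p-value requires the t distribution, which is omitted here; in practice you would use a library, for example SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)`, which runs Welch's test and also returns the p-value.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic for the difference between two independent group means.

    Unlike the classic Student's t-test, it does not assume that the two
    groups have equal variances.
    """
    # Squared standard error of each mean: sample variance (n - 1 denominator) / n.
    se2_a = variance(group_a) / len(group_a)
    se2_b = variance(group_b) / len(group_b)
    return (mean(group_a) - mean(group_b)) / sqrt(se2_a + se2_b)


# Hypothetical job-satisfaction scores for two small groups.
group_a = [7, 8, 6, 9, 8]
group_b = [5, 6, 6, 4, 5]
print(round(welch_t(group_a, group_b), 2))
```

A larger absolute t means the gap between the group means is large relative to the variability within the groups; whether it is statistically significant depends on the p-value.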

So, it's really important to get very clear about your research aims and research questions, as well as your hypotheses, before you start looking at which statistical techniques to use.

Never shoehorn a specific statistical technique into your research just because you like it or have some experience with it. Your choice of methods must align with all the factors we’ve covered here.
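To tie the two factors together, here is a deliberately simplified sketch that maps a type of research question and a level of measurement to a common starting-point technique. The function `suggest_method`, its question labels and the lookup table are hypothetical. The rank-based alternatives (Mann-Whitney U, Kruskal-Wallis, Spearman) are standard non-parametric counterparts, but real choices also depend on sample size, independence of observations and each method's other assumptions.

```python
# Hypothetical rule-of-thumb table, not an exhaustive or authoritative guide.
# Each entry: (choice for numeric, roughly normal data, rank-based alternative).
METHODS = {
    "describe": ("mean and standard deviation", "median and interquartile range"),
    "compare_2_groups": ("t-test", "Mann-Whitney U test"),
    "compare_3plus_groups": ("one-way ANOVA", "Kruskal-Wallis test"),
    "relationship": ("Pearson correlation or linear regression", "Spearman rank correlation"),
}
NUMERIC_LEVELS = {"interval", "ratio"}


def suggest_method(question, level, roughly_normal=True):
    """Suggest a starting-point technique from the research question and data type.

    question: one of the keys of METHODS
    level: "nominal", "ordinal", "interval" or "ratio" (of the outcome variable)
    roughly_normal: whether numeric data look approximately bell-shaped
    """
    if question not in METHODS:
        raise ValueError(f"unknown question type: {question!r}")
    if level == "nominal":
        # Categorical data: summarise with counts, test associations with chi-square.
        return "frequency counts and mode" if question == "describe" else "chi-square test"
    parametric_choice, rank_based_choice = METHODS[question]
    if level in NUMERIC_LEVELS and roughly_normal:
        return parametric_choice
    return rank_based_choice  # ordinal or clearly skewed numeric data


print(suggest_method("compare_2_groups", "ratio"))                        # t-test
print(suggest_method("compare_2_groups", "ratio", roughly_normal=False))  # Mann-Whitney U test
```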

Time to recap…

You’re still with me? That’s impressive. We’ve covered a lot of ground here, so let’s recap on the key points:

- Quantitative data analysis is all about analysing number-based data (which includes categorical and numerical data) using various statistical techniques.

- The two main branches of statistics are descriptive statistics and inferential statistics. Descriptives describe your sample, whereas inferentials make predictions about what you'll find in the population.

- Common descriptive statistical methods include mean (average), median, standard deviation and skewness.

- Common inferential statistical methods include t-tests, ANOVA, correlation and regression analysis.

- To choose the right statistical methods and techniques, you need to consider the type of data you're working with, as well as your research questions and hypotheses.

Psst… there’s more (for free)

This post is part of our dissertation mini-course, which covers everything you need to get started with your dissertation, thesis or research project.
