- Open access

- Published: 26 February 2024

A Framework for the Interoperability of Cloud Platforms: Towards FAIR Data in SAFE Environments

- Robert L. Grossman ORCID: orcid.org/0000-0003-3741-5739 1 ,

- Rebecca R. Boyles 2 ,

- Brandi N. Davis-Dusenbery 3 ,

- Amanda Haddock 4 ,

- Allison P. Heath 5 ,

- Brian D. O’Connor 6 ,

- Adam C. Resnick 5 ,

- Deanne M. Taylor ORCID: orcid.org/0000-0002-3302-4610 5 , 7 &

- Stan Ahalt ORCID: orcid.org/0000-0002-8395-1279 8

Scientific Data volume 11, Article number: 241 (2024)

- Computational platforms and environments

As the number of cloud platforms supporting scientific research grows, there is an increasing need to support interoperability between two or more cloud platforms. A well-accepted core concept is to make data in cloud platforms Findable, Accessible, Interoperable and Reusable (FAIR). We introduce a companion concept that applies to cloud-based computing environments that we call a Secure and Authorized FAIR Environment (SAFE). SAFE environments require data and platform governance structures and are designed to support the interoperability of sensitive or controlled access data, such as biomedical data. A SAFE environment is a cloud platform that has been approved through a defined data and platform governance process as authorized to hold data from another cloud platform and exposes appropriate APIs for the two platforms to interoperate.

As the number of cloud platforms supporting scientific research grows 1 , there is an increasing need to support cross-platform interoperability. By a cloud platform, we mean a software platform in a public or private cloud 2 for managing and analyzing data and other authorized functions. With interoperability between cloud platforms, data does not have to be replicated in multiple cloud platforms but can be managed by one cloud platform and analyzed by researchers in another cloud platform. A common use case is to use specialized tools in another cloud platform that are unavailable in the cloud platform hosting the data. Interoperability also enables cross-platform functionality, allowing researchers analyzing data in one cloud platform to obtain the necessary amount of data required to power a statistical analysis, to validate an analysis using data from another cloud platform, or to bring together multiple data types for an integrated analysis when the data is distributed across two or more cloud platforms. In this paper, we are especially concerned with frameworks that are designed to support the interoperability of sensitive or controlled access data, such as biomedical data or qualitative research data.

There have been several attempts to provide frameworks for interoperating cloud platforms for biomedical data, including those by the GA4GH organization 3 and by the European Open Science Cloud (EOSC) Interoperability Task Force of the FAIR Working Group 4. A key idea in these frameworks is to make data in cloud platforms findable, accessible, interoperable and reusable (FAIR) 5.

The authors have developed several cloud platforms operated by different organizations and were part of a working group, one of whose goals was to increase the interoperability between these cloud platforms. The challenge is that even when a dataset is FAIR and in a cloud platform (referred to here as Cloud Platform A), in general the governance structure put in place by the organization sponsoring Cloud Platform A (called the Project Sponsor below) requires that sensitive data remain in the platform and only be accessed by users within the platform. Therefore, even if a user is authorized to analyze the data, there is no simple way for the user to analyze it in any other cloud platform (referred to here as Cloud Platform B); the only option is the single cloud platform operated by the organization (Cloud Platform A).

There are several reasons for this lack of interoperability between cloud platforms hosting sensitive data: First, as just mentioned, for many cloud platforms, it is against policy to remove data from the cloud platform; instead, data must be analyzed within the cloud platform.

Second, to manage the security and compliance of the data, there is often only a single cloud platform that has the right to distribute controlled access data; other cloud platforms may contain a copy of the data, but by policy cannot distribute it.

Third, a typical clause in a data access agreement requires that if the user elects not to use Cloud Platform A, the user’s organization is responsible for assessing and attesting to the security and compliance of Cloud Platform B. This can be difficult and time consuming unless there is a pre-existing relationship.

Fourth, once a Sponsor has approved a single cloud platform as authorized to host data and to analyze the hosted data, there may be a perception of increased risk to the Sponsor in allowing other third party platforms to be used to host or to analyze the data. Because of this increased risk, there has been limited interoperability of cloud platforms for controlled access data.

The consensus from the working group was that interoperability of data and an acceleration of research outcomes could be achieved if standard interoperating principles and interfaces could describe which platforms had the right to distribute a dataset and which cloud platforms could be used to analyze data.

In this note, we introduce a companion concept to FAIR that applies to cloud-based computing environments that we call a Secure and Authorized FAIR Environment (SAFE). The goal of the SAFE framework is to address the four issues described above that today limit the interoperability between cloud platforms. The cloud-based framework consisting of FAIR data in SAFE environments is intended to apply to research data that has restrictions on its access, its distribution, or both. Some examples are: biomedical data 3, 6, including EHR data, clinical/phenotype data, genomics data, and imaging data; social science data 7; and administrative data 8. We emphasize that the environment itself is not FAIR in the sense of ref. 5, but rather that a SAFE environment contains FAIR data and is designed to be part of a framework to support the interoperability of FAIR data between two or more data platforms.

Also, SAFE cloud platforms are designed to support platform governance decisions about whether data in one cloud platform may be linked or transferred to another cloud platform, either for direct use by researchers or for redistribution. As we will argue below, SAFE is designed to support decisions between two or more cloud platforms to interoperate in the sense that data may be moved between them, but is neither designed nor intended to be a security or compliance level describing a single cloud platform.

The proposed SAFE framework provides a way for a Sponsor to “extend its boundary” to selected third party platforms that can be used to analyze the data by authorized users. In this way, researchers can use the platform and tools that they are most comfortable with.

In order to discuss the complexities of an interoperability framework across cloud based resources, in the next section, we first define some important concepts from data and platform governance.

Distinguishing Data and Platform Governance

We assume that data is generated by research projects and that there is an organization that is responsible for the project. We call this organization the Project Sponsor. This can be any type of organization, including a government agency, an academic research center, a not-for-profit organization, or a commercial organization.

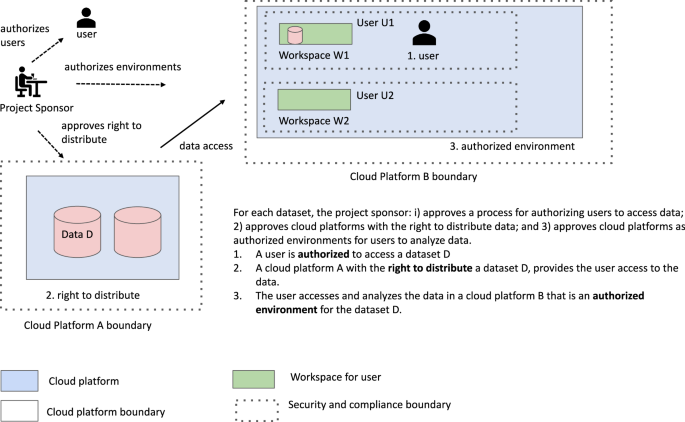

In the framework that we are proposing here, the Project Sponsor sets up and operates frameworks for (1) data governance and (2) platform governance. The Project Sponsor is ultimately responsible for the security and compliance of the data and of the cloud platform. Data governance includes: approving datasets to be distributed by cloud platforms, authorizing users to access data, and related activities. Platform governance includes: approving cloud platforms as having the right to distribute datasets to other platforms and to users and approving cloud platforms as authorized environments so that the cloud platforms can be used by users to access, analyze, and explore datasets.

By controlled access data, we mean data that is considered sensitive enough that agreements for the acceptable use of the data must be signed: one between the organization providing the data (the Data Contributor) and the Project Sponsor, and another between the researchers accessing the data (whom we call Users in the framework) and the Project Sponsor. Controlled access data arises, for example, when research participants contribute data for research purposes through a consent process, and a researcher signs an agreement to follow all the terms and conditions required by the consent agreements of the research participants or by an Institutional Review Board (IRB) that approves an exemption so that consents are not required.

Commonly used terms that are needed to describe SAFE are contained in Table 1. Table 2 describes the roles and responsibilities of the Project Sponsor, Platform Operator, and User.

As is usual, we use the term authorized user to mean someone who has applied for and been approved for access to controlled-access data. See Table 1 for a summary of definitions used in this paper.

One of the distinguishing features of our interoperability framework is that we formalize the concept of an authorized environment. An authorized environment is a cloud platform workspace or computing / analysis environment that is approved for the use or analysis of controlled access data.

Using the concepts of authorized user and authorized environment, we provide a framework enabling the interoperability between two or more cloud platforms.

SAFE Environments

Below we describe some suggested processes for authorizing environments, including having their security and compliance reviewed by the appropriate official or committee determined by the platform governance process. We also argue that the environments should have APIs so that they are findable, accessible and interoperable, enabling other cloud platforms to interoperate with them. As mentioned above, we use the acronym SAFE for Secure and Authorized FAIR Environments to describe these types of environments. In other words, a SAFE environment is a cloud platform that has been approved through a platform governance process as an authorized environment and exposes an API enabling other cloud platforms to interact with it (Fig. 1).

Fig. 1: An overview of supporting FAIR data in SAFE environments.

In this paper, we make the case that SAFE environments are a natural complement to FAIR data and that establishing a trust relationship between a cloud platform with FAIR data and a cloud platform that is a SAFE environment for analyzing data is a good basis for interoperability. Examples of the functionality to be exposed by the API and proposed identifiers are discussed below. Importantly, our focus is to provide a framework for attestation and approvals to support interoperability. The exact requirements for approvals depend on the needs of a particular Project Sponsor and are out of scope for this manuscript.

Of course, a cloud platform can include both FAIR data and a SAFE environment for analyzing data. The issue of interoperability between cloud platforms arises when a researcher using a cloud platform that is a SAFE environment for analyzing data needs to access data from another cloud platform that contains data of interest.

We emphasize that the framework applies to all types of controlled-access data (e.g., clinical, genomic, imaging, and environmental) and that decisions about authorized users and authorized platforms depend upon the sensitivity of the data, with more conditions for data access and use as the sensitivity of the data increases.

The SAFE framework that we are implementing uses the following identifiers:

SAFE assumes that cloud platforms have a globally unique identifier (GUID) identifying them, which we call an authorized platform identifier (APID) .

SAFE assumes that cloud platforms form networks consisting of two or more cloud platforms, each of which we call an authorized platform network (APN). Authorized platform networks have a globally unique identifier, which we call an authorized platform network identifier (APNI). As an example, cloud platforms in an authorized platform network can sign a common set of agreements or otherwise agree to interoperate. A particular cloud platform can interoperate with all or selected cloud platforms in an authorized platform network.

SAFE assumes that geographic regions are identified by a globally unique identifier, which we call an Authorized Region ID (ARID). For example, the entire world may be an authorized region, or a single country may be the only authorized region. SAFE assumes that datasets that limit their distribution and analysis to specified regions identify these regions in their metadata.
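The three identifiers above can be modeled as simple records. The following is a minimal sketch, assuming that UUIDs are used as the globally unique identifiers; the class names, field names, and the `join` helper are illustrative and are not part of the SAFE proposal.

```python
import uuid
from dataclasses import dataclass, field
from typing import Set


def new_guid() -> str:
    """Return a random UUID string (using UUIDs as GUIDs is an assumption)."""
    return str(uuid.uuid4())


@dataclass(frozen=True)
class AuthorizedRegion:
    arid: str  # Authorized Region ID (ARID)
    name: str  # human-readable label, e.g. a country name


@dataclass(frozen=True)
class AuthorizedPlatformNetwork:
    apni: str  # authorized platform network identifier (APNI)
    name: str


@dataclass
class CloudPlatform:
    apid: str  # authorized platform identifier (APID)
    name: str
    apnis: Set[str] = field(default_factory=set)  # networks this platform belongs to

    def join(self, network: AuthorizedPlatformNetwork) -> None:
        """Record membership in an authorized platform network."""
        self.apnis.add(network.apni)


# Hypothetical example values.
network = AuthorizedPlatformNetwork(apni=new_guid(), name="example-network")
platform = CloudPlatform(apid=new_guid(), name="example-platform")
platform.join(network)
```

A platform may belong to several networks, which is why membership is held as a set of APNIs rather than a single value.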

To implement SAFE, we propose that a cloud environment support an API that exposes metadata with the following information:

Authorized Platform Identifier (APID)

A list of the Authorized Platform Network Identifiers (APNIs) that it belongs to.

A particular authorized platform network must also agree to a protocol for securely exchanging the APID and the list of APNIs that a platform belongs to, such as the Transport Layer Security (TLS) protocol.
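As an illustration of the platform metadata described above, the sketch below builds and validates a JSON document carrying an APID and a list of APNIs. The JSON layout and the key names `apid` and `apnis` are assumptions made for this example; the source does not specify a wire format. In practice the document would be exchanged over a TLS-protected channel, which is not shown here.

```python
import json
from typing import FrozenSet, Iterable, Tuple


def build_platform_metadata(apid: str, apnis: Iterable[str]) -> str:
    """Serialize the platform's APID and its APNIs as a JSON document."""
    return json.dumps({"apid": apid, "apnis": sorted(set(apnis))})


def parse_platform_metadata(payload: str) -> Tuple[str, FrozenSet[str]]:
    """Parse and validate a metadata document received from another platform."""
    doc = json.loads(payload)
    if not isinstance(doc, dict):
        raise ValueError("metadata must be a JSON object")
    apid = doc.get("apid")
    apnis = doc.get("apnis")
    if not isinstance(apid, str) or not apid:
        raise ValueError("missing or invalid 'apid'")
    if not isinstance(apnis, list) or not all(isinstance(x, str) for x in apnis):
        raise ValueError("missing or invalid 'apnis'")
    return apid, frozenset(apnis)


# Hypothetical identifiers, for illustration only.
payload = build_platform_metadata("platform-b", ["network-1", "network-2"])
apid, apnis = parse_platform_metadata(payload)
```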

In addition, cloud platforms that host data that can be accessed and analyzed in other cloud platforms should associate with each dataset metadata that specifies: a) whether the data can be removed from the platform (i.e., whether the platform has the right to distribute the data); b) a list of authorized platform networks that have been approved as authorized environments to access and analyze the data; and c) an optional list of authorized region IDs (ARIDs) describing any regional restrictions on where the data may be accessed and analyzed.
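The dataset metadata in items a) to c) can be checked mechanically. The following is a minimal sketch under stated assumptions: the record layout, the field names, and the function `may_analyze_in` are hypothetical, and a missing region list is interpreted as "no regional restriction".

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Optional


@dataclass(frozen=True)
class DatasetMetadata:
    dataset_id: str
    distributable: bool                                # (a) may the data leave the hosting platform?
    authorized_apnis: FrozenSet[str]                   # (b) networks approved as authorized environments
    authorized_arids: Optional[FrozenSet[str]] = None  # (c) optional regional restrictions


def may_analyze_in(meta: DatasetMetadata,
                   platform_apnis: Iterable[str],
                   platform_arid: str) -> bool:
    """Return True if the dataset may be analyzed in the requesting platform."""
    if not meta.distributable:
        return False
    # The requesting platform must belong to at least one approved network.
    if not (meta.authorized_apnis & frozenset(platform_apnis)):
        return False
    # Assumption: no region list means no regional restriction.
    if meta.authorized_arids is not None and platform_arid not in meta.authorized_arids:
        return False
    return True


# Hypothetical dataset restricted to one network and one region.
meta = DatasetMetadata(
    dataset_id="dataset-1",
    distributable=True,
    authorized_apnis=frozenset({"network-1"}),
    authorized_arids=frozenset({"region-us"}),
)
allowed = may_analyze_in(meta, ["network-1", "network-9"], "region-us")
```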

Platform Governance

Examples of platform governance frameworks.

An example of a process for authorization of an environment is provided by the process used by the NIH Data Access Committees (DACs) through the dbGaP system 9 for sharing genomics data 10. Currently, if an NIH DAC approves a user’s access to data, and if the user specifies in the data access request (DAR) application that a cloud platform will be used for analysis, then the user’s designated IT Director takes responsibility for the cloud platform as an authorized environment for the user’s analysis of controlled access data, and a designated official at the user’s institution (the Signing Official) takes the overarching responsibility on behalf of the researcher’s institution.

As another example, the platform governance process may follow the “NIST SP 800-53 Security and Privacy Controls for Information Systems and Organizations” framework developed by the US National Institute of Standards and Technology (NIST) 11. This framework has policies, procedures, and controls at three levels (Low, Moderate and High), and each organization designates a person who can approve an environment by issuing what is called an Authority to Operate (ATO). More specifically, in this example, the platform governance process may require the following to approve a cloud platform as an authorized environment for hosting controlled access data: (1) a potential cloud platform implement the policies, procedures and controls specified by NIST SP 800-53 at the Moderate level; (2) a potential cloud platform have an independent assessment by a third party to ensure that the policies, controls and procedures are appropriately implemented and documented; (3) an appropriate official or committee evaluate the assessment and, if it is acceptable, approve the environment as an authorized environment by issuing an Authority to Operate (ATO) or following another agreed-to process; (4) yearly penetration tests be performed by an independent third party and reviewed by the appropriate committee or official.
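The example requirements above amount to a checklist that a designated official could evaluate before issuing an ATO. The sketch below encodes that checklist; the record fields, the 365-day window used for "yearly", and the choice to accept a High baseline as satisfying the Moderate requirement are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Optional

# Ordering of the three NIST SP 800-53 baseline levels named in the text.
BASELINE_RANK = {"low": 0, "moderate": 1, "high": 2}


@dataclass(frozen=True)
class EnvironmentReview:
    controls_baseline: str                 # requirement (1): "low", "moderate", or "high"
    independent_assessment_passed: bool    # requirement (2)
    last_penetration_test: Optional[date]  # requirement (4)


def meets_example_requirements(review: EnvironmentReview, today: date) -> bool:
    """Check requirements (1), (2) and (4); step (3) is the official's decision."""
    baseline_ok = (
        BASELINE_RANK.get(review.controls_baseline, -1) >= BASELINE_RANK["moderate"]
    )
    pen_test_ok = (
        review.last_penetration_test is not None
        and timedelta(0) <= today - review.last_penetration_test <= timedelta(days=365)
    )
    return baseline_ok and review.independent_assessment_passed and pen_test_ok


# Hypothetical review record.
review = EnvironmentReview("moderate", True, date(2024, 1, 15))
ready_for_ato = meets_example_requirements(review, today=date(2024, 6, 1))
```

A Project Sponsor using a different security and compliance framework would substitute its own checklist; only the overall shape (requirements checked, then an approval issued) carries over.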

Many US government agencies follow NIST SP 800-53, and a designated government official issues an Authority to Operate (ATO) when appropriate after the evaluation of a system 11 . In the example above, we are using the term “authority to operate” to refer to a more general process in which any organization decides to evaluate a cloud platform using any security and compliance framework and has completed all the steps necessary so that the cloud platform can be used. In the example, an organization, which may or may not be a government organization, uses the NIST SP 800-53 security and compliance framework and designates an individual within the organization with the role and responsibility to check that (1), (2) and (4) have been accomplished and issues an ATO when this is the case.

The right to distribute controlled access data

In general, when a user or a cloud platform is granted access to controlled access data, the user or platform does not have the right to redistribute the data to other users, even if the other user has signed the appropriate Data Access Agreements. Instead, to ensure there is the necessary security and compliance in place, any user accessing data as an authorized user must access the data from a platform approved for this purpose. We refer to platforms with the ability to share controlled access data in this way as having the right to distribute the authorized data.

One of the core ideas of SAFE is that data which has been approved for hosting in a cloud platform can be accessed and transferred to another cloud platform in the case that: the first cloud platform has the right to distribute the data and the second cloud platform is recognized as an authorized environment for the data following an approved process, such as described in the next section. There remains the possibility that the cloud platform requesting access to the data is in fact an imposter and not the authorized environment it appears to be. For this reason, as part of SAFE, we recommend that the cloud platform with the right to distribute data verify through a chain of trust that the requesting platform is indeed the intended authorized environment.
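The source does not prescribe a mechanism for this verification. As a simplified stand-in for a chain of trust, the sketch below uses an HMAC challenge-response with a pre-shared key: the distributing platform issues a random challenge, and the requesting platform proves knowledge of the key bound to its APID. The pre-shared key, the message layout, and all function names are assumptions; a production system would more likely rely on certificates or signed tokens.

```python
import hashlib
import hmac
import secrets


def new_challenge() -> bytes:
    """Distributing platform: generate a fresh random challenge."""
    return secrets.token_bytes(32)


def sign_challenge(shared_key: bytes, apid: str, challenge: bytes) -> str:
    """Requesting platform: bind its APID to the challenge with an HMAC."""
    message = apid.encode("utf-8") + b"|" + challenge
    return hmac.new(shared_key, message, hashlib.sha256).hexdigest()


def verify_response(shared_key: bytes, apid: str, challenge: bytes, response: str) -> bool:
    """Distributing platform: recompute the HMAC and compare in constant time."""
    expected = sign_challenge(shared_key, apid, challenge)
    return hmac.compare_digest(expected, response)


# Hypothetical key agreed within an authorized platform network.
key = secrets.token_bytes(32)
challenge = new_challenge()
response = sign_challenge(key, "platform-b", challenge)
is_genuine = verify_response(key, "platform-b", challenge, response)
```

Using a fresh challenge for each request means a captured response cannot simply be replayed later.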

Basis for approving authorized environments

The guiding principle of SAFE is that research outcomes are accelerated by supporting interoperability of data across authorized environments. While the specific requirements may vary by project and project sponsor, in order to align with this principle, it is critical that Project Sponsors define requirements transparently and support interoperability when the requirements are met.

Above we provided examples of approaches and requirements project sponsors may use in approving an Authorized Environment. As mentioned above, NIST SP 800-53 provides a basis for authorizing an environment, but there are many frameworks for evaluating the security and compliance of a system that may be used. As an example, the organization evaluating the cloud platform may choose to use a framework such as NIST SP 800-171 12 , or may choose another process for approving a cloud platform as an authorized environment rather than issuing an ATO.

For example, both the Genomic Data Commons 6 and the AnVIL system 13 follow NIST SP 800-53 at the Moderate Level and the four steps described above. The authorizing official for the Genomic Data Commons is a government official at the US National Cancer Institute, while the authorizing official for AnVIL is an organizational official associated with the Platform Operator.

Two or more cloud platforms can interoperate when both the Sponsors and Operators each agree to: (1) use the same framework and process for evaluating cloud platforms as authorized environments; (2) each authorize one or more cloud platforms as authorized environments for particular datasets; (3) each agree to a common protocol or process for determining when a given cloud platform is following (1) and (2). Sometimes, this situation is described as the platforms having a trust relationship between them.

Basis for approving the right to distribute datasets

For each dataset, a data governance responsibility is to determine the right of a cloud based data repository to distribute data to an authorized user in an authorized environment. To reduce risk of privacy and confidentiality breach, the data governance process may choose to limit the number of data repositories that can distribute a particular controlled access dataset and to impose additional security and compliance requirements on those cloud based data repositories that have the right to distribute particular sensitive controlled-access datasets. These risks of course must be balanced with the imperative to accelerate research and improve patient outcomes which underlies the motivations of many study participants.

Interoperability

SAFE is focused on the specific aspect of interoperability of whether data hosted in one cloud platform can be analyzed in another cloud platform.

With the concepts of an authorized user, an authorized environment, and the right to distribute, interoperability is achieved when two or more cloud platforms have the right to distribute data to an authorized user in a cloud based authorized environment.

This suggests a general principle for interoperability: the data governance process for a dataset should authorize users, the platform governance process for a dataset should authorize cloud platform environments, and two or more cloud platforms can interoperate by trusting these authorizations.
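The principle can be read as a conjunction of three independent authorizations for the cross-platform case. The following minimal sketch represents each governance decision as a set of approved pairs; the set-based representation and all names are assumptions made for illustration.

```python
from typing import Set, Tuple

Pair = Tuple[str, str]  # (subject, dataset_id)


def can_analyze(user: str, dataset: str, source: str, destination: str,
                authorized_users: Set[Pair],
                distributors: Set[Pair],
                authorized_environments: Set[Pair]) -> bool:
    """Decide whether `user` may analyze `dataset`, hosted on `source`, in `destination`."""
    user_ok = (user, dataset) in authorized_users                   # data governance
    source_ok = (source, dataset) in distributors                   # right to distribute
    destination_ok = (destination, dataset) in authorized_environments  # platform governance
    return user_ok and source_ok and destination_ok


# Hypothetical governance decisions.
authorized_users = {("alice", "dataset-1")}
distributors = {("platform-a", "dataset-1")}
authorized_environments = {("platform-b", "dataset-1")}

decision = can_analyze("alice", "dataset-1", "platform-a", "platform-b",
                       authorized_users, distributors, authorized_environments)
```

Each platform only needs to trust the three sets; it does not need to repeat the reviews that produced them.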

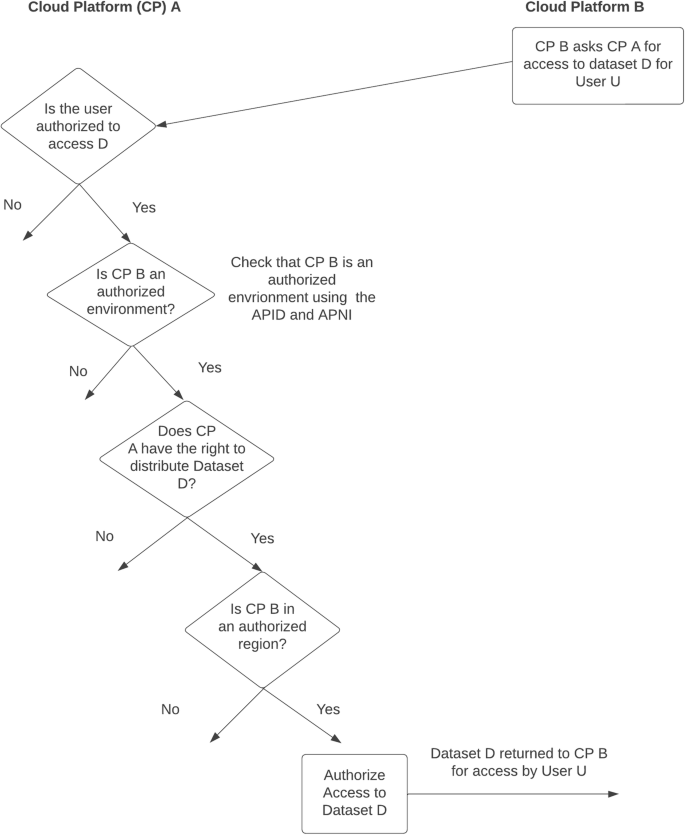

Figure 2 summarizes some of the key decisions enabling two cloud platforms to interoperate using the SAFE framework.

Fig. 2: Some of the key decisions for interoperating two cloud platforms using the SAFE framework.

Towards FAIR Data in SAFE Environments

Today there are a growing number of cloud platforms that hold biomedical data of interest to the research community, a growing number of cloud-based analysis tools for analyzing biomedical data, and a growing challenge for researchers to access the data they need, since often the analysis of data takes place in a different cloud platform than the cloud platform that hosts the data of interest.

We have presented the concept of cloud-based authorized environments that are called SAFE environments, which are secure and authorized environments that are appropriate for the analysis of sensitive biomedical data. The role of platform governance is to identify the properties required for a cloud platform to be an authorized environment for a particular dataset and to approve a cloud based platform that holds controlled access data to distribute the data to specific authorized platforms.

By standardizing the properties required to be a SAFE environment and agreeing to the principle that the data governance process for a dataset should authorize users and the platform governance process should authorize cloud platform environments, all that is required for two or more cloud platforms to interoperate is for the cloud platforms to trust these authorizations. We can shorten this principle to: “authorize the users, authorize the cloud platforms, and trust the authorizations.” This is the core basis for interoperability in the SAFE framework. See Table 3 for a summary.

This principle came out of the NIH NCPI Community and Governance Working Group and is the basis for the interoperability of the data platforms in this group. We are currently implementing APID, APNI and ARID identifiers as described above, as well as the dataset metadata describing whether a dataset can be redistributed or transferred to other data platforms for analysis.

References

1. Navale, V. & Bourne, P. E. Cloud computing applications for biomedical science: A perspective. PLOS Comput. Biol. 14 (no. 6), e1006144, https://doi.org/10.1371/journal.pcbi.1006144 (2018).

2. Mell, P. M. & Grance, T. The NIST definition of cloud computing, National Institute of Standards and Technology, Gaithersburg, MD, NIST SP 800–145, https://doi.org/10.6028/NIST.SP.800-145 (2011).

3. Rehm, H. L. et al. GA4GH: International policies and standards for data sharing across genomic research and healthcare, Cell Genomics, vol. 1, no. 2, p. 100029, https://doi.org/10.1016/j.xgen.2021.100029 (Nov. 2021).

4. Achieving interoperability in EOSC: The Interoperability Framework | EOSCSecretariat. [Online]. Available: https://www.eoscsecretariat.eu/news-opinion/achieving-interoperability-eosc-interoperability-framework (Accessed: Jul. 30, 2021).

5. Wilkinson, M. D. et al. The FAIR Guiding Principles for scientific data management and stewardship, Sci. Data, vol. 3, no. 1, p. 160018, https://doi.org/10.1038/sdata.2016.18 (Dec. 2016).

6. Heath, A. P. et al. The NCI Genomic Data Commons, Nat. Genet., pp. 1–6, https://doi.org/10.1038/s41588-021-00791-5 (Feb. 2021).

7. ICPSR Data Excellence Research Impact. [Online]. Available: https://www.icpsr.umich.edu/web/pages/ (Accessed: Nov. 20, 2023).

8. Lane, J. Building an Infrastructure to Support the Use of Government Administrative Data for Program Performance and Social Science Research. Ann. Am. Acad. Pol. Soc. Sci. 675 (no. 1), 240–252, https://doi.org/10.1177/0002716217746652 (2018).

9. Mailman, M. D. et al. The NCBI dbGaP database of genotypes and phenotypes. Nat. Genet. 39 (no. 10), 1181–1186 (2007).

10. Paltoo, D. N. et al. Data use under the NIH GWAS Data Sharing Policy and future directions, Nat. Genet., vol. 46, no. 9, pp. 934–938, https://doi.org/10.1038/ng.3062 (Sep. 2014).

11. Dempsey, K., Witte, G. & Rike, D. Summary of NIST SP 800-53 Revision 4, Security and Privacy Controls for Federal Information Systems and Organizations, National Institute of Standards and Technology, Gaithersburg, MD, NIST CSWP 02192014, https://doi.org/10.6028/NIST.CSWP.02192014 (Feb. 2014).

12. Ross, R., Pillitteri, V., Dempsey, K., Riddle, M. & Guissanie, G. Protecting Controlled Unclassified Information in Nonfederal Systems and Organizations, National Institute of Standards and Technology, NIST Special Publication (SP) 800-171 Rev. 2, https://doi.org/10.6028/NIST.SP.800-171r2 (Feb. 2020).

13. Schatz, M. C. et al. Inverting the model of genomics data sharing with the NHGRI Genomic Data Science Analysis, Visualization, and Informatics Lab-space (AnVIL), https://doi.org/10.1101/2021.04.22.436044 (Apr. 2021).

Acknowledgements

This document captures discussions of the NIH Cloud-Based Platform Interoperability (NCPI) Community/Governance Working Group that have occurred over the past 24 months, and we want to acknowledge the contributions of this working group. This working group included personnel from federal agencies, health systems, industry, universities, and patient advocacy groups. However, this document does not represent any official decisions or endorsement of potential policy changes and is not an official work product of the NCPI Working Group. Rather, it is a summary of some of the working group discussions and is an opinion of the authors. Research reported in this publication was supported in part by the following grants and contracts: the NIH Common Fund under Award Number U2CHL138346, which is administered by the National Heart, Lung, and Blood Institute of the National Institutes of Health; the National Heart, Lung, and Blood Institute, National Institutes of Health, Department of Health and Human Services under the Agreement No. OT3 HL142478-01 and OT3 HL147154-01S1; National Cancer Institute, National Institutes of Health, Department of Health and Human Services under Contract No. HHSN261201400008C; and ID/IQ Agreement No. 17X146 under Contract No. HHSN261201500003I. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Author information

Authors and affiliations.

Center for Translational Data Science, University of Chicago, Chicago, IL, USA

Robert L. Grossman

RTI International, Research Triangle Park, Triangle Park, NC, USA

Rebecca R. Boyles

Velsera, Charlestown, MA, USA

Brandi N. Davis-Dusenbery

Dragon Master Initiative, Kechi, KS, USA

Amanda Haddock

Children’s Hospital of Philadelphia, Philadelphia, PA, USA

Allison P. Heath, Adam C. Resnick & Deanne M. Taylor

Nimbus Informatics, Carrboro, NC, USA

Brian D. O’Connor

University of Pennsylvania Perelman School of Medicine, Philadelphia, PA, USA

Deanne M. Taylor

University of North Carolina, Chapel Hill, Chapel Hill, NC, USA

Stan Ahalt

Contributions

All the authors contributed to the drafting and review of the manuscript.

Corresponding author

Correspondence to Robert L. Grossman.

Ethics declarations

Competing interests.

One of the authors (BND-D) is an employee of a for-profit company (Velsera).

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Manuscript with marked changes

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

About this article

Cite this article.

Grossman, R.L., Boyles, R.R., Davis-Dusenbery, B.N. et al. A Framework for the Interoperability of Cloud Platforms: Towards FAIR Data in SAFE Environments. Sci Data 11 , 241 (2024). https://doi.org/10.1038/s41597-024-03041-5

Received : 10 August 2023

Accepted : 03 February 2024

Published : 26 February 2024

DOI : https://doi.org/10.1038/s41597-024-03041-5

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Sign up for the Nature Briefing: AI and Robotics newsletter — what matters in AI and robotics research, free to your inbox weekly.

Subscribe to the PwC Newsletter

Join the community, add a new evaluation result row, cloud computing.

84 papers with code • 0 benchmarks • 0 datasets

Benchmarks Add a Result

Latest papers, privacy-preserving deep learning using deformable operators for secure task learning.

To address these challenges, we propose a novel Privacy-Preserving framework that uses a set of deformable operators for secure task learning.

IMPaCT: Interval MDP Parallel Construction for Controller Synthesis of Large-Scale Stochastic Systems

kiguli/impact • 7 Jan 2024

This paper is concerned with developing a software tool, called IMPaCT, for the parallelized verification and controller synthesis of large-scale stochastic systems using interval Markov chains (IMCs) and interval Markov decision processes (IMDPs), respectively.

LiPar: A Lightweight Parallel Learning Model for Practical In-Vehicle Network Intrusion Detection

Through experiments, we prove that LiPar has great detection performance, running efficiency, and lightweight model size, which can be well adapted to the in-vehicle environment practically and protect the in-vehicle CAN bus security.

CloudEval-YAML: A Practical Benchmark for Cloud Configuration Generation

alibaba/cloudeval-yaml • 10 Nov 2023

We develop the CloudEval-YAML benchmark with practicality in mind: the dataset consists of hand-written problems with unit tests targeting practical scenarios.

Deep learning based Image Compression for Microscopy Images: An Empirical Study

In the end, we hope the present study could shed light on the potential of deep learning based image compression and the impact of image compression on downstream deep learning based image analysis models.

MLatom 3: Platform for machine learning-enhanced computational chemistry simulations and workflows

dralgroup/mlatom • 31 Oct 2023

MLatom 3 is a program package designed to leverage the power of ML to enhance typical computational chemistry simulations and to create complex workflows.

Federated learning compression designed for lightweight communications

Federated Learning (FL) is a promising distributed method for edge-level machine learning, particularly for privacy-sensitive applications such as those in military and medical domains, where client data cannot be shared or transferred to a cloud computing server.

Recoverable Privacy-Preserving Image Classification through Noise-like Adversarial Examples

Extensive experiments demonstrate that 1) the classification accuracy of the classifier trained in the plaintext domain remains the same in both the ciphertext and plaintext domains; 2) the encrypted images can be recovered into their original form with an average PSNR of up to 51+ dB for the SVHN dataset and 48+ dB for the VGGFace2 dataset; 3) our system exhibits satisfactory generalization capability on the encryption, decryption and classification tasks across datasets that are different from the training one; and 4) a high-level of security is achieved against three potential threat models.

ADGym: Design Choices for Deep Anomaly Detection

Deep learning (DL) techniques have recently found success in anomaly detection (AD) across various fields such as finance, medical services, and cloud computing.

FastAiAlloc: A real-time multi-resources allocation framework proposal based on predictive model and multiple optimization strategies

marcosd3souza/fast-ai-alloc • Future Generation Computer Systems 2023

To integrate these steps, this work proposes a framework based on the following strategies, widely used in the literature: Genetic Algorithms (GA), Particle Swarm Optimization (PSO) and Linear Programming, besides our Heuristic approach.

- Open access

- Published: 13 June 2023

A survey of Kubernetes scheduling algorithms

- Khaldoun Senjab 1 ,

- Sohail Abbas 1 ,

- Naveed Ahmed 1 &

- Atta ur Rehman Khan 2

Journal of Cloud Computing volume 12, Article number: 87 (2023)

9918 Accesses

6 Citations

As cloud services expand, the need to improve the performance of data center infrastructure becomes more important. High-performance computing, advanced networking solutions, and resource optimization strategies can help data centers maintain the speed and efficiency necessary to provide high-quality cloud services. Running containerized applications is one such optimization strategy, offering benefits such as improved portability, enhanced security, better resource utilization, faster deployment and scaling, and improved integration and interoperability. These benefits can help organizations improve their application deployment and management, enabling them to respond more quickly and effectively to dynamic business needs. Kubernetes is a container orchestration system designed to automate the deployment, scaling, and management of containerized applications. One of its key features is the ability to schedule the deployment and execution of containers across a cluster of nodes using a scheduling algorithm. This algorithm determines the best placement of containers on the available nodes in the cluster. In this paper, we provide a comprehensive review of various scheduling algorithms in the context of Kubernetes. We characterize and group them into four sub-categories: generic scheduling, multi-objective optimization-based scheduling, AI-focused scheduling, and autoscaling enabled scheduling, and identify gaps and issues that require further research.

Introduction

Kubernetes is an open-source platform for automating the deployment, scaling, and management of containerized applications. It allows developers to focus on building and deploying their applications without worrying about the underlying infrastructure. Kubernetes uses a declarative approach to managing applications, where users specify desired application states, and the system maintains them. It also provides robust tools for monitoring and managing applications, including self-healing mechanisms for automatic failure detection and recovery. Overall, Kubernetes offers a powerful and flexible solution for managing containerized applications in production environments.

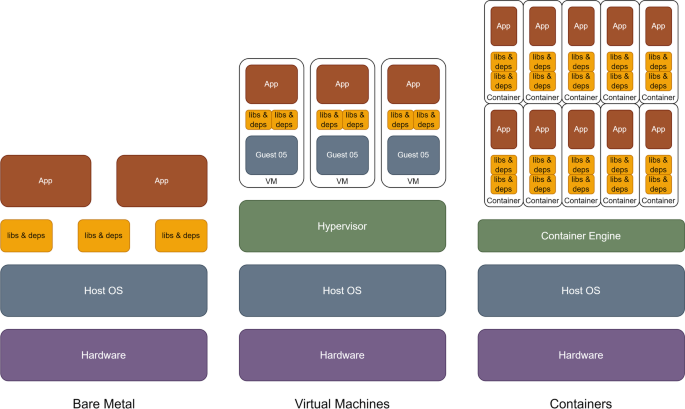

Kubernetes is well-suited for microservice-based web applications, where each component can be run in its own container. Containers are lightweight and can be easily created and destroyed, providing faster and more efficient resource utilization than virtual machines, as shown in Fig. 1 . Kubernetes automates the deployment, scaling, and management of containers across a cluster of machines, making resource utilization more efficient and flexible. This simplifies the process of building and maintaining complex applications.

Comparison between different types of application deployments

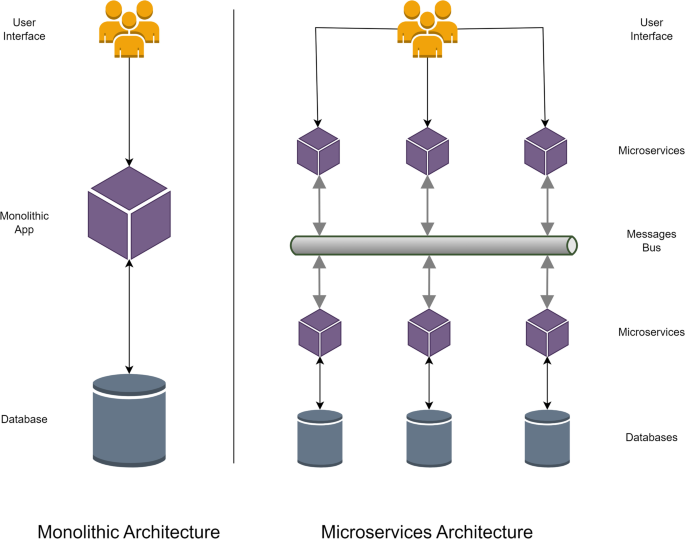

Microservice-based architecture involves dividing an application into small, independent modules called microservices (Fig. 2). Each microservice is responsible for a specific aspect of the application, and they communicate through a message bus. This architecture offers several benefits, such as the ability to automate deployment, scaling, and management. Because each microservice is independent and can be managed and updated separately, it is easier to make changes without affecting the entire system. Additionally, microservices can be written in different languages and can run on different servers, providing greater flexibility in the development process.

Comparison between different application architectures

Kubernetes can quickly adapt to various types of demand intensities. For example, if a web application has few visitors at a given time, it can be scaled down to a few pods using minimal resources to reduce costs. However, if the application becomes extremely popular and receives a large number of visitors simultaneously, it can be scaled up to be serviced by a large number of pods, making it capable of handling almost any level of demand.
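
The scale-up and scale-down behaviour described above can be illustrated with the replica rule documented for the Kubernetes Horizontal Pod Autoscaler, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). The following is a minimal sketch under stated assumptions: the function name `desired_replicas` and the default bounds are hypothetical, and the real autoscaler also applies tolerances and stabilization windows that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 100) -> int:
    """Return the replica count suggested by the HPA-style ratio rule."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    # Scale the current replica count by how far the observed metric
    # is from its target, rounding up so capacity is never undershot.
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds (hypothetical defaults).
    return max(min_replicas, min(max_replicas, raw))
```

For instance, four pods observed at 90% average CPU against a 60% target would be scaled to six pods, while ten lightly loaded pods would be scaled down.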

Kubernetes has been adopted by many organizations across a diverse range of applications and has gained trust as a leading option for managing and deploying containerized applications. In recent applications, Kubernetes is proving to be an invaluable resource for IT infrastructure because it provides a sustainable path towards serverless computing, which eases challenges in IT administration [ 1 ]. Serverless computing provides end-to-end security enhancements but also introduces new infrastructure and security challenges, as discussed in [ 1 ].

As the computing paradigm moves towards edge and fog computing, Kubernetes is proving to be a versatile solution that provides seamless network management between cloud and edge nodes [ 2 , 3 , 4 ]. Kubernetes faces multiple challenges when deployed in an IoT environment, including optimizing network traffic distribution [ 2 ], optimizing flow routing policies [ 3 ], and distributing the computational resources of edge devices [ 4 ].

As can be seen from the diverse range of applications, and challenges associated with Kubernetes, it is imperative to study proposed algorithms in the related area to identify the state-of-the-art and future research directions. Numerous studies have focused on the development of new algorithms for Kubernetes. The main motivation for this survey is to provide a comprehensive overview of the state-of-the-art in the field of Kubernetes scheduling algorithms. By reviewing the existing literature and identifying the key theories, methods, and findings from previous studies, we aim to provide a critical evaluation of the strengths and limitations of existing approaches. We also hope to identify gaps and open questions in the existing literature, and to offer suggestions for future research directions. Overall, our goal is to contribute to the advancement of knowledge in the field and to provide a useful resource for researchers and practitioners working with Kubernetes scheduling algorithms.

To the best of the authors’ knowledge, most related surveys do not specifically address the topic at hand. They mostly target container orchestration in general (including Kubernetes), such as [ 5 , 6 , 7 , 8 ]. These surveys cover Kubernetes in breadth without examining scheduling in depth, and some do not focus on Kubernetes at all. For example, some concentrate on scheduling in the cloud [ 9 ] and its associated concerns [ 10 ]. Others target big data applications in data center networks [ 11 ] or fog computing environments [ 12 ]. The authors found two closely related and well-organized surveys, [ 13 ] and [ 14 ], that address Kubernetes scheduling in depth. However, our work differs from these two surveys in its taxonomy: they target different aspects and objectives of scheduling, whereas we categorize the literature into four sub-categories: generic scheduling, multi-objective optimization-based scheduling, AI-focused scheduling, and autoscaling-enabled scheduling. We thereby focus specifically on a wide range of schemes related to multi-objective optimization and AI, in addition to general scheduling and autoscaling. We believe our categorization is more fine-grained and novel than that of the existing surveys.

In this paper, the literature has been divided into four sub-categories: generic scheduling, multi-objective optimization-based scheduling, AI-focused scheduling, and autoscaling-enabled scheduling. The literature pertaining to each sub-category is analyzed and summarized based on six parameters outlined in the Literature review section.

Our main contributions are as follows:

- A comprehensive review of the literature on Kubernetes scheduling algorithms targeting four sub-categories: generic scheduling, multi-objective optimization-based scheduling, AI-focused scheduling, and autoscaling-enabled scheduling.

- A critical evaluation of the strengths and limitations of existing approaches.

- Identification of gaps and open questions in the existing literature.

The remainder of this paper is organized as follows. In the Search methodology section, we describe the methodology used to conduct the survey. In the Literature review section, we present the literature review along with the results of our survey, including a critical evaluation of the strengths and limitations of existing approaches. A taxonomy of the identified research papers based on the literature review is presented as well. In the Discussion, challenges & future suggestions section, we discuss the implications of our findings and suggest future research directions. Finally, in the Conclusions section, we summarize the key contributions of the survey and provide our conclusions.

Search methodology

This section presents our search methodology for identifying relevant studies that are included in this review.

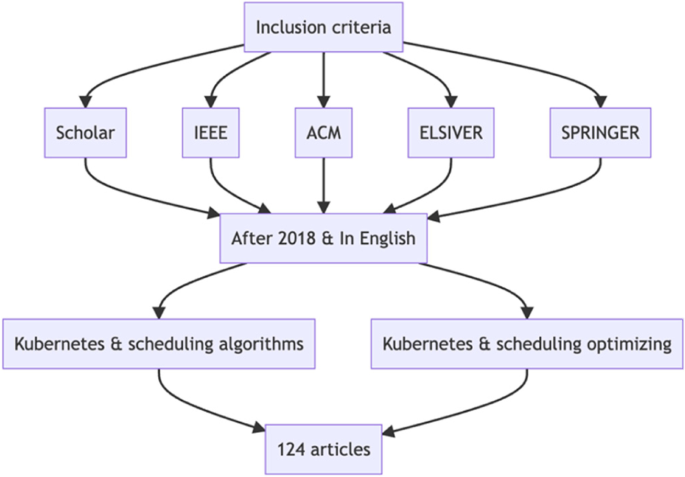

To identify relevant studies for our review, we conducted a comprehensive search of the literature using the following databases: IEEE, ACM, Elsevier, Springer, and Google Scholar. We used the following search terms: "Kubernetes," "scheduling algorithms," and "scheduling optimizing." We limited our search to studies published in the last 5 years and written in English.

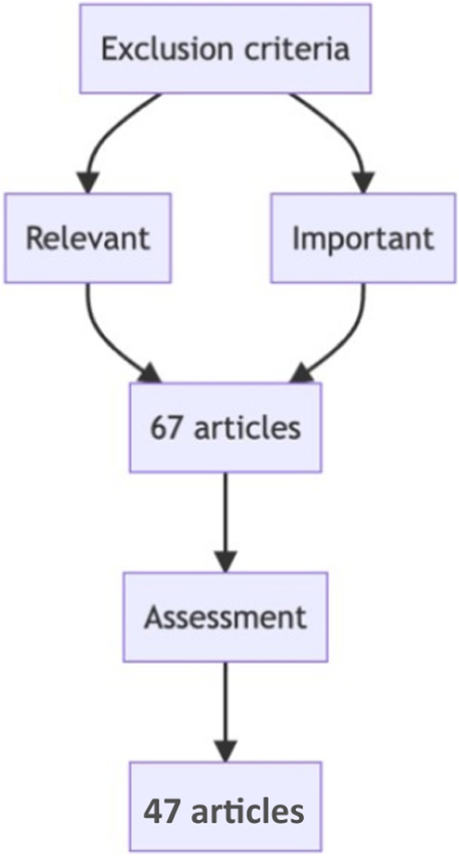

We initially identified a total of 124 studies from the database searches (see Fig. 3). We then reviewed the abstracts of these studies to identify those that were relevant to our review. We excluded studies that did not focus on Kubernetes scheduling algorithms, as well as those that were not original research or review articles. After this initial screening, we were left with 67 studies (see Fig. 4).

Inclusion criteria

Exclusion criteria

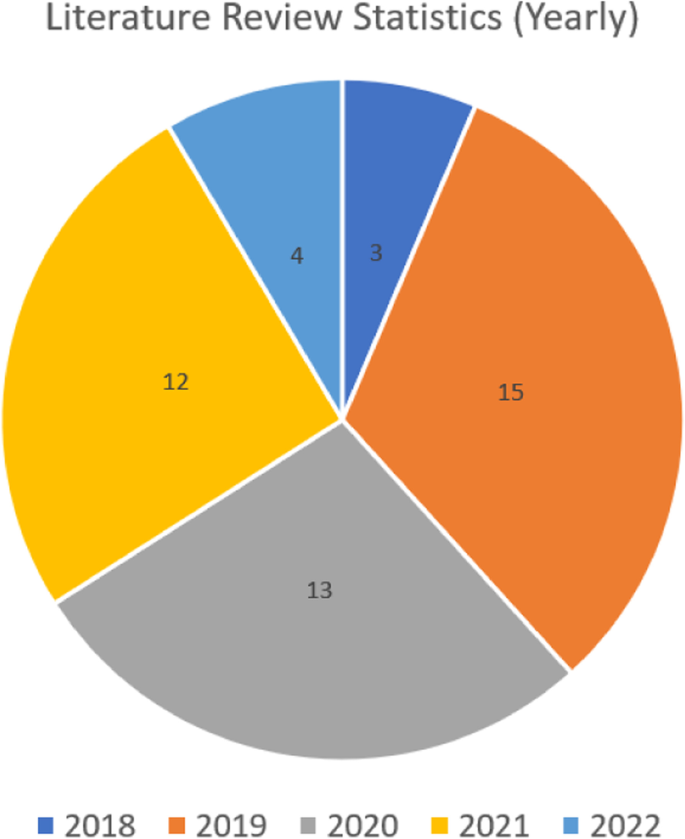

We then reviewed the full texts of the remaining studies to determine their eligibility for inclusion in our review. We excluded studies that did not meet our inclusion criteria, which required a study to: (1) focus on optimizing Kubernetes scheduling algorithms, (2) provide original research or a critical evaluation of existing approaches, and (3) be written in English and published in the last 5 years. After this final screening, we included 47 studies in our review (see Fig. 4). The yearly distribution of papers is shown in Fig. 5.

Detailed statistics showing the yearly breakdown of analyzed studies

We also searched the reference lists of the included studies to identify any additional relevant studies that were not captured in our database searches. We did not identify any additional studies through this process. Therefore, our review includes 47 studies on Kubernetes scheduling algorithms published in the last 5 years. These studies represent a diverse range of research methods, including surveys, experiments, and simulations.

Literature review

This section has been organized into four sub-categories, i.e., generic scheduling, multi-objective optimization-based scheduling, AI focused scheduling, and autoscaling enabled scheduling. A distribution of analyzed research papers in each category can be seen in Fig. 6 . The literature in each sub-category is analyzed and then summarized based on six parameters given below:

- Methodology/Algorithms

- Experiments

- Applications

- Limitations

Detailed statistics for each category in terms of analyzed studies

Scheduling in Kubernetes

The field of Kubernetes scheduling algorithms has attracted significant attention from researchers and practitioners in recent years. A growing body of literature has explored the potential benefits and challenges of using different scheduling algorithms to optimize the performance of a Kubernetes cluster. In this section, we present a review of the key theories, methods, and findings from previous studies in this area.

One key theme in the literature is the need for efficient and effective scheduling of workloads in a Kubernetes environment. Many studies have emphasized the limitations of traditional scheduling approaches, which often struggle to handle the complex and dynamic nature of workloads in a Kubernetes cluster. As a result, there has been increasing interest in the use of advanced scheduling algorithms to enable efficient and effective allocation of computing resources within the cluster.

Another key theme in the literature is the potential benefits of advanced scheduling algorithms for Kubernetes. Many studies have highlighted the potential for these algorithms to improve resource utilization, reduce latency, and enhance the overall performance of the cluster. Additionally, advanced scheduling algorithms have the potential to support the development of new applications and services within the Kubernetes environment, such as real-time analytics, machine learning, and deep learning (see the AI focused scheduling section).

Despite these potential benefits, the literature also identifies several challenges and limitations of Kubernetes scheduling algorithms. One key challenge is the need to address the evolving nature of workloads and applications within the cluster. Therefore, various authors have focused on improving the autoscaling feature in Kubernetes scheduling to allow for automatic adjustment of the resources allocated to pods based on the current demand; a more detailed discussion can be found in the Autoscaling-enabled Scheduling section. Other challenges include the need to manage and coordinate multiple scheduling algorithms, and to ensure the stability and performance of the overall system.

Overall, the literature suggests that advanced scheduling algorithms offer a promising solution to the challenges posed by the complex and dynamic nature of workloads in a Kubernetes cluster. However, further research is needed to address the limitations and challenges of these algorithms, and to explore their potential applications and benefits.
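
To make the notion of a scheduling algorithm concrete, the default Kubernetes scheduler is documented as working in two phases: filtering out nodes that cannot host a pod, then scoring the remaining nodes and picking the highest-scoring one. The following is a minimal sketch of that two-phase idea, not the actual kube-scheduler code. The `Node` and `Pod` classes, the `fits`, `score`, and `schedule` functions, and the "most remaining headroom" scoring rule are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    cpu_capacity: float   # CPU cores
    mem_capacity: float   # memory in GiB
    cpu_used: float = 0.0
    mem_used: float = 0.0


@dataclass
class Pod:
    name: str
    cpu: float
    mem: float


def fits(node: Node, pod: Pod) -> bool:
    """Filtering phase: the node must have room for the pod's requests."""
    return (node.cpu_used + pod.cpu <= node.cpu_capacity
            and node.mem_used + pod.mem <= node.mem_capacity)


def score(node: Node, pod: Pod) -> float:
    """Scoring phase (hypothetical rule): prefer the node that would keep
    the largest average fraction of CPU and memory free after placement."""
    cpu_left = (node.cpu_capacity - node.cpu_used - pod.cpu) / node.cpu_capacity
    mem_left = (node.mem_capacity - node.mem_used - pod.mem) / node.mem_capacity
    return (cpu_left + mem_left) / 2


def schedule(pod: Pod, nodes: List[Node]) -> Optional[str]:
    """Bind the pod to the best feasible node, or return None if none fits."""
    candidates = [n for n in nodes if fits(n, pod)]
    if not candidates:
        return None
    best = max(candidates, key=lambda n: score(n, pod))
    best.cpu_used += pod.cpu
    best.mem_used += pod.mem
    return best.name
```

Many of the proposals reviewed in this section can be read as replacing or extending one of these two phases, for example by adding network latency, GPU topology, or disk I/O to the scoring rule.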

In Santos et al. [ 15 ], the authors propose a network-aware scheduling approach for container-based applications in smart city deployments. Their approach is implemented as an extension to the default scheduling mechanism of Kubernetes, an open-source orchestrator for the automatic management and deployment of microservices. Using container-based smart city applications, the authors evaluate the proposed approach and compare it with the default Kubernetes scheduling mechanism. They find that the proposed solution reduces network latency by almost 80% compared to the default mechanism.

In Chung et al. [ 16 ], the authors propose a new cluster scheduler called Stratus that is specialized for orchestrating batch job execution on virtual clusters in public Infrastructure-as-a-Service (IaaS) platforms. Stratus focuses on minimizing dollar costs by aggressively packing tasks onto machines based on runtime estimates; that is, to save money, it keeps allocated machines either mostly full or empty so that empty ones can be released. Using workload traces from TwoSigma and Google, the authors show that Stratus reduces cost by 17–44% compared to the benchmark virtual cluster scheduling approaches.

In Le et al. [ 17 ], the authors propose a new scheduling algorithm called AlloX for optimizing job performance in shared clusters that use interchangeable resources such as CPUs, GPUs, and other accelerators. AlloX transforms the scheduling problem into a min-cost bipartite matching problem and provides dynamic fair allocation over time. The authors demonstrate theoretically and empirically that AlloX performs better than existing solutions in the presence of interchangeable resources, and they show that it can reduce the average job completion time significantly while providing fairness and preventing starvation.
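
The min-cost bipartite matching idea can be illustrated on a toy instance. The following is a minimal sketch, not AlloX's actual formulation: it assumes a hypothetical cost matrix where `cost[i][j]` is the cost (for example, estimated completion time) of running job `i` on resource slot `j`, and it finds the cheapest one-to-one assignment by brute force, which is only practical for a handful of jobs. The function name `min_cost_matching` is an assumption for illustration.

```python
from itertools import permutations
from typing import List, Sequence, Tuple


def min_cost_matching(cost: Sequence[Sequence[float]]) -> Tuple[float, List[int]]:
    """Assign each job to a distinct slot so that the total cost is minimal.

    Returns (total_cost, assignment) where assignment[i] is the slot of job i.
    Requires at least as many slots as jobs.
    """
    if not cost:
        return 0.0, []
    n_jobs, n_slots = len(cost), len(cost[0])
    if n_jobs > n_slots:
        raise ValueError("need at least as many slots as jobs")
    best_total, best_assignment = float("inf"), []
    # Enumerate every way of giving the jobs distinct slots.
    for slots in permutations(range(n_slots), n_jobs):
        total = sum(cost[job][slot] for job, slot in enumerate(slots))
        if total < best_total:
            best_total, best_assignment = total, list(slots)
    return best_total, best_assignment
```

A production scheduler would replace the brute-force loop with a polynomial-time assignment algorithm such as the Hungarian method; the exhaustive search is used here only to keep the sketch dependency-free.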

In Zhong et al. [ 18 ], the authors propose a heterogeneous task allocation strategy for cost-efficient container orchestration in Kubernetes-based cloud computing infrastructures with elastic compute resources. The proposed strategy has three main features: support for heterogeneous job configurations, cluster size adjustment through autoscaling algorithms, and a rescheduling mechanism to shut down underutilized VM instances and reallocate relevant jobs without losing task progress. The authors evaluate their approach using the Australian National Cloud Infrastructure (Nectar) and show that it can reduce overall cost by 23–32% compared to the default Kubernetes framework.

In Thinakaran et al. [ 19 ], the authors create Kube-Knots by combining their proposed GPU-aware resource orchestration layer, Knots, with the Kubernetes container orchestrator. Through dynamic container orchestration, Kube-Knots harvests unused computing cycles, enabling the co-location of batch and latency-critical applications and increasing overall resource utilization. When used to schedule datacenter-scale workloads on a ten-node GPU cluster, the proposed scheduling strategies increase average and 99th percentile cluster-wide GPU utilization by up to 80% for HPC workloads. In addition, the proposed schedulers reduce cluster-wide energy consumption by an average of 33% for three separate workloads and improve the average task completion times of deep learning workloads by up to 36% compared to state-of-the-art schedulers.

In Townend et al. [ 20 ], the authors propose a holistic scheduling system for Kubernetes that replaces the default scheduler and considers both software and hardware models to improve data center efficiency. The authors claim that by introducing hardware modeling into a software-based solution, an intelligent scheduler can make significant improvements in data center efficiency. In their initial deployment, the authors observed power consumption reductions of 10–20%.

In the work by Menouer [ 21 ], the author describes KCSS, a new Kubernetes container scheduling strategy. KCSS aims to improve performance in terms of makespan and power consumption by scheduling user-submitted containers as efficiently as possible. For each newly submitted container, KCSS selects the best node using a multi-criteria decision analysis technique that considers several factors related to the cloud infrastructure and the user's requirements. The author implements KCSS in the Go programming language and shows that it outperforms alternative container scheduling methods in a variety of scenarios.

In Song et al. [ 22 ], the authors present a topology-based GPU scheduling framework for Kubernetes. The framework is based on the traditional Kubernetes GPU scheduling algorithm, but introduces the concept of a GPU cluster topology, which is represented as a GPU cluster resource access cost tree. This allows for more efficient scheduling of different GPU resource application scenarios. The proposed framework has been used in production at Tencent and has reportedly improved the resource utilization of GPU clusters by about 10%.
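
Multi-criteria node selection of this kind can be sketched with a weighted sum over normalized criteria, which is one of the simplest multi-criteria decision analysis techniques. The summary above does not state which technique KCSS uses, so the following is an illustrative assumption rather than the KCSS method. The function names and the criteria (`cpu_free`, `power_w`) are hypothetical.

```python
from typing import Dict, List, Tuple


def normalize(values: List[float], higher_is_better: bool) -> List[float]:
    """Min-max normalize to [0, 1] so that 1 is always the preferred end."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [1.0] * len(values)
    if higher_is_better:
        return [(v - lo) / (hi - lo) for v in values]
    return [(hi - v) / (hi - lo) for v in values]


def rank_nodes(nodes: Dict[str, Dict[str, float]],
               criteria: Dict[str, Tuple[float, bool]]) -> List[str]:
    """Rank node names from best to worst.

    nodes maps a node name to its value for each criterion.
    criteria maps a criterion to (weight, higher_is_better).
    """
    names = list(nodes)
    totals = {name: 0.0 for name in names}
    for criterion, (weight, higher_is_better) in criteria.items():
        scores = normalize([nodes[n][criterion] for n in names], higher_is_better)
        for name, s in zip(names, scores):
            totals[name] += weight * s
    return sorted(names, key=lambda n: totals[n], reverse=True)
```

With equal weights on free CPU (higher is better) and power draw (lower is better), a node that is moderately good on both criteria can outrank nodes that are best on one and worst on the other.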

In Ogbuachi et al. [ 23 ], the authors propose an improved design for Kubernetes scheduling that takes into account physical, operational, and network parameters in addition to software states in order to enable better orchestration and management of edge computing applications. They compare the proposed design to the default Kubernetes scheduler and show that it offers improved fault tolerance and dynamic orchestration capabilities.

In the work by Beltre et al. [ 24 ], the authors outline a scheduling policy for Kubernetes clusters that uses fairness measures including dominant resource fairness, resource demand, and average waiting time. KubeSphere, a policy-driven meta-scheduler created by the authors, schedules tasks according to each user's overall resource requirements and current consumption. According to the experimental findings, the proposed policy increased fairness in a multi-tenant cluster.
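
Dominant resource fairness (DRF), one of the fairness measures mentioned above, can be sketched as follows. A user's dominant share is the largest fraction of any single resource that the user holds, and the allocator repeatedly gives the next task to the user with the smallest dominant share. This is a simplified illustration of the general DRF idea, not the KubeSphere policy, which also considers resource demand and waiting time. The function name `drf_allocate`, and the choice to drop a user whose next task no longer fits and keep serving the others, are assumptions of this sketch.

```python
from typing import Dict


def drf_allocate(capacity: Dict[str, float],
                 demands: Dict[str, Dict[str, float]]) -> Dict[str, int]:
    """Return how many tasks each user is granted.

    capacity maps resource name to total amount.
    demands maps user to the per-task demand for every resource.
    """
    used = {r: 0.0 for r in capacity}
    tasks = {u: 0 for u in demands}
    active = set(demands)

    def dominant_share(user: str) -> float:
        # Largest fraction of any one resource currently held by the user.
        return max(tasks[user] * demands[user][r] / capacity[r] for r in capacity)

    while active:
        # Serve the active user with the smallest dominant share
        # (ties are broken by user name for determinism).
        user = min(sorted(active), key=dominant_share)
        need = demands[user]
        if all(used[r] + need[r] <= capacity[r] for r in capacity):
            for r in capacity:
                used[r] += need[r]
            tasks[user] += 1
        else:
            # The next task of this user does not fit; stop serving the user.
            active.discard(user)
    return tasks
```

In a cluster with 9 CPUs and 18 GB of memory, a memory-heavy user (1 CPU, 4 GB per task) and a CPU-heavy user (3 CPUs, 1 GB per task) end up with three and two tasks respectively, so that both hold two thirds of their dominant resource.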

In Haja et al. [ 25 ], the authors propose a custom Kubernetes scheduler that takes into account delay constraints and edge reliability when making scheduling decisions. The authors argue that this type of scheduler is necessary for edge infrastructure, where applications are often delay-sensitive, and the infrastructure is prone to failures. The authors demonstrate their Kubernetes extension and release the solution as open source.

In Wojciechowski et al. [ 26 ], the authors propose a method for scheduling Kubernetes pods that takes advantage of dynamic network measurements gathered by the Istio service mesh. According to the authors, this fully automated approach can save up to 50% of inter-node bandwidth and reduce application response time by up to 37%, which is crucial for the adoption of Kubernetes in 5G use cases.

In Cai et al. [ 27 ], the authors propose a feedback control method for elastic container provisioning in Kubernetes-based systems. The method uses a combination of a varying-processing-rate queuing model and a linear model to improve the accuracy of output errors. The authors compare their approach with several existing algorithms on a real Kubernetes cluster and find that it obtains the lowest percentage of service level agreement (SLA) violation and the second lowest cost.

In Ahmed et al. [ 28 ], the authors present KubCG, a dynamic scheduling framework for Kubernetes that manages the deployment of Docker containers in a heterogeneous cluster with CPU and GPU resources. The platform takes into account the Kubernetes Pod timeline and historical data about container execution to optimize the deployment of new containers. In the experiments the authors conducted to validate their algorithm, KubCG reduced job completion time by up to 64%.

In Ungureanu et al. [ 29 ], the authors propose a hybrid shared-state scheduling framework for Kubernetes that combines the advantages of centralized and distributed scheduling. The framework uses distributed scheduling agents to delegate most tasks, and a scheduling correction function to process unprioritized and unscheduled tasks. Scheduling decisions are made based on the entire cluster state and are then synchronized and updated by the master-state agent. The authors performed experiments to test the behavior of their proposed scheduler and found that it performed well in different scenarios, including failover and recovery. They also found that other centralized scheduling frameworks may not perform well in situations like collocation interference or priority preemption.

In Yang et al. [ 30 ], the authors present the design and implementation of KubeHICE, a performance-aware container orchestrator for heterogeneous-ISA architectures in cloud-edge platforms. KubeHICE extends Kubernetes with two functional approaches, AIM (Automatic Instruction Set Architecture Matching) and PAS (Performance-Aware Scheduling), to handle heterogeneous ISA and schedule containers according to the computing capabilities of cluster nodes. The authors performed experiments to evaluate KubeHICE and found that it added no additional overhead to container orchestration and was effective in performance estimation and resource scheduling. They also demonstrated the advantages of KubeHICE in several real-world scenarios, showing for example a 40% increase in CPU utilization when eliminating heterogeneity.

In Li et al. [ 31 ], the authors propose two dynamic scheduling algorithms, Balanced-Disk-IO-Priority (BDI) and Balanced-CPU-Disk-IO-Priority (BCDI), to address the issue of Kubernetes' scheduler not taking the disk I/O load of nodes into account. BDI is designed to improve the disk I/O balance between nodes, while BCDI is designed to solve the issue of load imbalance of CPU and disk I/O on a single node. The authors perform experiments to evaluate the algorithms and find that they are more effective than the Kubernetes default scheduling algorithms.

In Fan et al. [ 32 ], the authors propose an algorithm for optimizing the scheduling of pods in the Serverless framework on the Kubernetes platform. The authors argue that the default Kubernetes scheduler, which operates on a pod-by-pod basis, is not well-suited for the rapid deployment and running of pods in the Serverless framework. To address this issue, the authors propose an algorithm that uses simultaneous scheduling of pods to improve the efficiency of resource scheduling in the Serverless framework. Through preliminary testing, the authors found that their algorithm was able to greatly reduce the delay in pod startup while maintaining a balanced use of node resources.

In Bestari et al. [ 33 ], the authors propose a scheduler for distributed deep learning training in Kubeflow that combines features from existing works, including autoscaling and gang scheduling. The proposed scheduler includes modifications to increase the efficiency of the training process, and weights are used to determine the priority of jobs. The authors evaluate the proposed scheduler using a set of TensorFlow jobs and find that it improves training speed by over 26% compared to the default Kubernetes scheduler.
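
Gang scheduling, mentioned above, means that all workers of a distributed job are placed together or not at all, so that a partially placed training job never holds resources while waiting for its remaining workers. The following is a minimal sketch of that all-or-nothing rule only. It does not reproduce the scheduler of Bestari et al., and the function name `gang_schedule` and the first-fit node choice are assumptions for illustration.

```python
from typing import Dict, List, Optional


def gang_schedule(free_gpus: Dict[str, int], workers_needed: int,
                  gpus_per_worker: int) -> Optional[List[str]]:
    """Return one node name per worker, or None if the whole gang cannot fit.

    free_gpus maps node name to its number of free GPUs and is not modified.
    """
    remaining = dict(free_gpus)  # tentative copy; caller's state stays untouched
    placement: List[str] = []
    for _ in range(workers_needed):
        # First fit over nodes in name order (hypothetical choice).
        node = next((n for n in sorted(remaining)
                     if remaining[n] >= gpus_per_worker), None)
        if node is None:
            return None  # not every worker fits, so place none of them
        remaining[node] -= gpus_per_worker
        placement.append(node)
    return placement
```

A caller would commit the returned placement only when it is not `None`, and otherwise leave the job queued until enough GPUs are free.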

In Dua et al. [ 34 ], the authors present an alternative algorithm for load balancing in distributed computing environments. The algorithm uses task migration to balance the workload among processors of different capabilities and configurations. The authors define labels to classify tasks into different categories and configure clusters dedicated to specific types of tasks.

The above-mentioned schemes are summarized in Table 1 .

Scheduling using multi-objective optimization

Multi-objective optimization scheduling takes into account multiple objectives or criteria when deciding how to allocate resources and schedule containers on nodes in the cluster. This approach is particularly useful in complex distributed systems where there are multiple competing objectives that need to be balanced to achieve the best overall performance. In a multi-objective optimization scheduling approach, the scheduler considers multiple objectives simultaneously, such as minimizing response time, maximizing resource utilization, and reducing energy consumption. The scheduler uses optimization algorithms to find the optimal solution that balances these objectives.

Multi-objective optimization scheduling can help improve the overall performance and efficiency of Kubernetes clusters by taking into account multiple objectives when allocating resources and scheduling containers. This approach can result in better resource utilization, improved application performance, reduced energy consumption, and lower costs.

Some examples of multi-objective optimization scheduling algorithms used in Kubernetes include genetic algorithms, Ant Colony Optimization, and particle swarm optimization. These algorithms can help optimize different objectives, such as response time, resource utilization, energy consumption, and other factors, to achieve the best overall performance and efficiency in the Kubernetes cluster.
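
When objectives compete, there is often no single best placement but a set of trade-offs. One common way to express this is Pareto dominance: a candidate is kept only if no other candidate is at least as good on every objective and strictly better on at least one. The following is a minimal sketch that assumes every objective is to be minimized (an objective to be maximized, such as utilization, can be negated first). The function names and the candidate data are hypothetical.

```python
from typing import Dict, List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if a is no worse than b everywhere and strictly better somewhere."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def pareto_front(candidates: Dict[str, Sequence[float]]) -> List[str]:
    """Return the names of the non-dominated candidates, sorted by name."""
    return sorted(
        name for name, objectives in candidates.items()
        if not any(dominates(other, objectives)
                   for other_name, other in candidates.items()
                   if other_name != name)
    )
```

Metaheuristics such as genetic algorithms, ant colony optimization, and particle swarm optimization can be viewed as ways of searching for good candidates when the placement space is too large to enumerate, after which a rule like this, or a weighted sum, selects among them.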

In this section, multi-objective scheduling proposals are discussed.

In Kaur et al. [ 35 ], the authors propose a new controller for managing containers on edge-cloud nodes in Industrial Internet of Things (IIoT) systems. The controller, called Kubernetes-based energy and interference driven scheduler (KEIDS), is based on Google Kubernetes and is designed to minimize energy utilization and interference in IIoT systems. KEIDS uses integer linear programming to formulate the task scheduling problem as a multi-objective optimization problem, taking into account factors such as energy consumption, carbon emissions, and interference from other applications. The authors evaluate KEIDS using real-time data from Google compute clusters and find that it outperforms existing state-of-the-art schemes.

In Lin et al. [ 36 ], the authors propose a multi-objective optimization model for container-based microservice scheduling in cloud architectures. They present an ant colony algorithm for solving the scheduling problem, which takes into account factors such as computing and storage resource utilization, the number of microservice requests, and the failure rate of physical nodes. The authors evaluate the proposed algorithm using experiments and compare its performance to other related algorithms. They find that the proposed algorithm achieves better results in terms of cluster service reliability, cluster load balancing, and network transmission overhead.

In Wei-guo et al. [ 37 ], the authors propose an improved scheduling algorithm for Kubernetes by combining ant colony optimization and particle swarm optimization to better balance task assignments and reduce resource costs. The authors implemented the algorithm in Java and tested it using the CloudSim tool, showing that it outperformed the original scheduling algorithm.

In the work by Oleghe [ 38 ], the author discusses container placement and migration in edge servers, as well as the scheduling models created for this purpose. According to the author, most scheduling models rely on heuristic algorithms and use multi-objective optimization models or graph network models. The study also points out the lack of work on container scheduling models that take distributed edge computing activities into account and predicts that future studies in this field will concentrate on scheduling containers for mobile edge nodes.

In Carvalho et al. [ 39 ], the authors propose an extension to the Kubernetes scheduler that uses Quality of Experience (QoE) measurements to make the Service Level Objectives (SLOs) used in cloud management more accurate. The authors assess the proposed architecture using the QoE metric from the ITU P.1203 standard, in the context of video streaming services that are co-located with other services. According to the findings, resource rescheduling increases average QoE by 135%, while the proposed scheduler increases it by 50% when compared to other schedulers.

The above-mentioned schemes are summarized in Table 2 .

AI focused scheduling

Many large companies have recently started to provide AI-based services. For this purpose, they have installed machine/deep learning clusters composed of tens to thousands of CPUs and GPUs for training their deep learning models in a distributed manner. Different machine learning frameworks are used, such as MXNet [ 40 ], TensorFlow [ 41 ], and Petuum [ 42 ]. Training a deep learning model is usually very resource hungry and time consuming. In such a setting, efficient scheduling is crucial in order to fully utilize the expensive deep learning cluster and expedite the model training process. Different strategies have been used to schedule tasks in this arena. For example, general-purpose schedulers have been customized to handle distributed deep learning tasks, as in [ 43 ] and [ 44 ]; however, they allocate resources statically and do not adjust them under different load conditions, which leads to poor resource utilization. Others have proposed allocating resources dynamically after carefully analyzing the workloads, as in [ 45 ] and [ 46 ].

In this section, deep learning focused schedulers are surveyed.

In Peng et al. [ 46 ], the authors propose a customized job scheduler for deep learning clusters called Optimus. The goal of Optimus is to minimize the time required for deep learning training jobs, which are resource-intensive and time-consuming. Optimus employs performance models to precisely estimate training speed as a function of resource allocation and online fitting to anticipate model convergence during training. These models inform how Optimus dynamically organizes tasks and distributes resources to reduce job completion time. The authors put Optimus into practice on a deep learning cluster and evaluate its efficiency in comparison to other cluster schedulers. They discover that Optimus beats conventional schedulers in terms of job completion time and makespan by roughly 139% and 63%, respectively.
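One plausible way to turn a speed model into an allocation decision is a greedy loop that repeatedly gives the next worker to the job whose estimated remaining time drops the most. The sketch below assumes this greedy rule and a hypothetical diminishing-returns speed model with made-up coefficients `a` and `b`; it is meant to illustrate model-driven allocation in general, not to reproduce Optimus.

```python
from typing import Dict


def speed(workers: int, a: float, b: float) -> float:
    """Hypothetical training speed (steps/s) with diminishing returns."""
    return workers / (a + b * workers)


def remaining_time(job: Dict[str, float], workers: int) -> float:
    """Estimated seconds to finish the job with the given worker count."""
    return job["steps_left"] / speed(workers, job["a"], job["b"])


def allocate(jobs: Dict[str, Dict[str, float]], total_workers: int) -> Dict[str, int]:
    """Greedy allocation by largest marginal reduction in remaining time."""
    if total_workers < len(jobs):
        raise ValueError("need at least one worker per job")
    alloc = {name: 1 for name in jobs}  # every job starts with one worker
    free = total_workers - len(jobs)
    while free > 0:
        best_name, best_gain = None, 0.0
        for name in sorted(jobs):
            gain = (remaining_time(jobs[name], alloc[name])
                    - remaining_time(jobs[name], alloc[name] + 1))
            if gain > best_gain:
                best_name, best_gain = name, gain
        if best_name is None:  # no job benefits from another worker
            break
        alloc[best_name] += 1
        free -= 1
    return alloc


JOBS = {
    "short": {"steps_left": 100.0, "a": 1.0, "b": 0.1},
    "long": {"steps_left": 1000.0, "a": 1.0, "b": 0.1},
}
allocation = allocate(JOBS, total_workers=5)
```

In a real system the coefficients would be refitted online as training progresses, so the allocation would be recomputed periodically rather than once.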

In Mao et al. [ 47 ], the authors propose using modern machine learning techniques to develop highly efficient policies for scheduling data processing jobs on distributed compute clusters. They present their system, called Decima, which uses reinforcement learning (RL) and neural networks to learn workload-specific scheduling algorithms. Decima is designed to be scalable and able to handle complex job dependency graphs. The authors report that their prototype integration with Spark on a 25-node cluster improved average job completion time by at least 21% over existing hand-tuned scheduling heuristics, with up to 2 × improvement during periods of high cluster load.

In Chaudhary et al. [ 48 ], the authors present Gandivafair, a distributed fair-share scheduler for GPU clusters used for deep learning training. The scheduler provides performance isolation between users and is designed to balance the competing demands of fairness and efficiency. According to the authors, Gandivafair is the first scheduler to distribute GPU time fairly among all active users despite cluster heterogeneity. The authors demonstrate that Gandivafair delivers both fairness and efficiency under realistic multi-user workloads by evaluating a prototype implementation on a heterogeneous 200-GPU cluster.
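A classic way to share a resource in proportion to per-user tickets is stride scheduling, sketched below for a single GPU time-sliced among users. The ticket values and user names are hypothetical, and the sketch ignores gang scheduling and heterogeneous GPUs; it illustrates proportional fair sharing in general and should not be read as the Gandivafair implementation.

```python
from collections import Counter
from typing import Dict, List


def stride_schedule(tickets: Dict[str, int], n_slots: int) -> List[str]:
    """Return which user runs in each time slot under stride scheduling."""
    big = 10_000.0
    # A user's stride is inversely proportional to its ticket count.
    stride = {user: big / t for user, t in tickets.items()}
    passes = dict(stride)  # each user's next "pass" value
    order = []
    for _ in range(n_slots):
        # Run the user with the smallest pass; ties break alphabetically.
        user = min(sorted(passes), key=lambda u: passes[u])
        order.append(user)
        passes[user] += stride[user]
    return order


TICKETS = {"alice": 2, "bob": 1, "carol": 1}
slots = stride_schedule(TICKETS, n_slots=8)
share = Counter(slots)
```

Over any sufficiently long window each user receives time slots in proportion to its tickets, which is the property a fair-share scheduler needs before it can start trading shares for efficiency.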

In Fu et al. [ 49 ], the authors propose a new container placement scheme called ProCon for scheduling jobs in a Kubernetes cluster. ProCon uses an estimation of future resource usage to balance resource contentions across the cluster and reduce the completion time and makespan of jobs. The authors demonstrate through experiments that ProCon decreases completion time by up to 53.3% for a specific job and enhances general performance by 23.0%. In addition, ProCon shows a makespan improvement of up to 37.4% in comparison to Kubernetes' built-in default scheduler.

In Peng et al. [ 50 ], the authors propose DL2, a deep learning-based scheduler for deep learning clusters that aims to improve global training job expedition by dynamically resizing resources allocated to jobs. The authors implement DL2 on Kubernetes and evaluate its performance against a fairness scheduler and an expert heuristic scheduler. The results show that DL2 outperforms the other schedulers in terms of average job completion time.

In Mao et al. [ 51 ], the authors propose a new container scheduler called SpeCon optimized for short-lived deep learning applications. SpeCon is designed to improve resource utilization and job completion times in a Kubernetes cluster by analyzing the progress of deep learning training processes and speculatively migrating slow-growing models to release resources for faster-growing ones. The authors conduct experiments that demonstrate that SpeCon improves individual job completion times by up to 41.5%, improves system-wide performance by 14.8%, and reduces makespan by 24.7%.

In Huang et al. [ 52 ], the authors propose RLSK, a deep reinforcement learning-based job scheduler for scheduling independent batch jobs across multiple federated cloud computing clusters. The authors implemented RLSK on Kubernetes and evaluated its performance through simulations, demonstrating that it can outperform conventional scheduling methods.

The work by Wang et al. [ 53 ] describes MLFS, a feature-based task scheduling system for machine learning clusters that handles both data-parallel and model-parallel jobs. To determine task priority for job queue ordering, MLFS first uses a heuristic scheduling method. The data from this method is then used to train a deep reinforcement learning model for job scheduling. In comparison to existing schedulers, the proposed system is shown to reduce job completion time by up to 53% and makespan by up to 52%, and to increase accuracy by up to 64%. The system is tested using real experiments and large-scale simulations based on real traces.

In Han et al. [ 54 ], the authors present KaiS, a learning-based scheduling framework for edge-cloud Kubernetes systems. KaiS uses a coordinated multi-agent actor-critic method for decentralized request dispatch and graph neural networks to model system state information. The experiments indicate that, compared to the baselines, KaiS can increase the average system throughput rate by 14.3% and decrease scheduling cost by 34.7%.

In Casquero et al. [ 55 ], the authors propose a custom scheduler that uses a Multi-Agent System (MAS) to distribute the scheduling task of the Kubernetes orchestrator among the processing nodes. According to the authors, this method is faster than the centralized scheduling strategy employed by the default Kubernetes scheduler.

In Yang et al. [ 56 ], the authors propose a method for optimizing Kubernetes' container scheduling algorithm by combining the grey system theory with the LSTM (Long Short-Term Memory) neural network prediction method. They perform experiments to evaluate their approach and find that it can reduce the resource fragmentation problem of working nodes in the cluster and increase the utilization of cluster resources.
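The grey-system half of such a predictor can be illustrated with the standard GM(1,1) model, which fits an exponential trend to a short positive series. The sketch below is a plain GM(1,1) forecaster under the assumption that this is the grey model in use; the LSTM component and the way the two predictions are combined are not shown, and the sample utilization values are made up.

```python
import math
from typing import List


def gm11_forecast(series: List[float], steps: int = 1) -> List[float]:
    """Fit a GM(1,1) grey model to a positive series and forecast `steps` values."""
    n = len(series)
    if n < 4:
        raise ValueError("GM(1,1) needs at least four observations")
    # Accumulated generating operation (running sum).
    acc = []
    total = 0.0
    for x in series:
        total += x
        acc.append(total)
    # Background values: means of consecutive accumulated points.
    z = [0.5 * (acc[k] + acc[k - 1]) for k in range(1, n)]
    y = series[1:]
    # Least-squares fit of y = -a * z + b (ordinary linear regression on z).
    m = len(z)
    sz, sy = sum(z), sum(y)
    szz = sum(v * v for v in z)
    szy = sum(v * w for v, w in zip(z, y))
    slope = (m * szy - sz * sy) / (m * szz - sz * sz)
    a = -slope
    b = (sy - slope * sz) / m
    if abs(a) < 1e-12:
        raise ValueError("development coefficient is ~0; series is nearly constant")

    def acc_hat(k: int) -> float:
        # Time response of the fitted model for the accumulated series.
        return (series[0] - b / a) * math.exp(-a * k) + b / a

    # Difference the accumulated predictions to return to the original scale.
    return [acc_hat(k) - acc_hat(k - 1) for k in range(n, n + steps)]


cpu_history = [10.0, 12.0, 14.4, 17.28, 20.736]  # grows by 20% per step
next_value = gm11_forecast(cpu_history)[0]
next_three = gm11_forecast(cpu_history, steps=3)
```

A scheduler could use such a short-horizon forecast of node utilization to avoid placing containers on nodes that are about to become saturated, which is one way prediction can reduce fragmentation.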

In Zhang et al. [ 57 ], the authors propose Zeus, a highly scalable cluster scheduling system for Kubernetes. The main feature of Zeus is that it schedules best-effort jobs based on actual server utilization. It can adaptively divide resources between the two classes of workloads and is meant to enable the safe colocation of best-effort jobs and latency-sensitive services. The authors test Zeus in a real-world setting and find that it can raise average CPU utilization from 15% to 60% without violating Service Level Objectives (SLOs).

In Liu et al. [ 58 ], the authors propose a scheduling strategy for deep learning tasks on Kubernetes that takes into account the tasks' resource usage characteristics. To improve task execution efficiency and load balancing, the proposed model, called FBSM, includes a GPU sniffer module and a balance-aware scheduler module. According to the authors' evaluation, the resulting system, KubFBS, speeds up the execution of deep learning tasks and improves the load balancing of the cluster.

In Rahali et al. [ 59 ], the authors propose a solution for resource allocation in a Kubernetes infrastructure hosting network services. The proposed solution aims to avoid resource shortages and protect the most critical functions. Given the random nature of the information involved, the authors use a statistical approach to model and solve the problem.

The above-mentioned schemes are summarized in Table 3 .

Autoscaling-enabled scheduling

Autoscaling is an important feature in Kubernetes scheduling because it automatically adjusts the resources allocated to pods based on current demand. It enables efficient resource utilization, improved performance, cost savings, and high availability of the application. Autoscaling and scheduling are related in that autoscaling can ensure that enough resources are always available to handle the scheduled tasks. For example, if the scheduler assigns a new task to a worker node that does not have enough resources to execute it, the autoscaler can add more resources to that node or spin up a new node to handle the task. In this way, autoscaling and scheduling work together to ensure that a distributed system can handle changing workloads and optimize resource utilization. Some of the schemes related to this category are surveyed below.

In Taherizadeh et al. [ 60 ], the authors propose a new dynamic multi-level (DM) autoscaling method for container-based cloud applications. The DM method uses both infrastructure- and application-level monitoring data to determine when to scale up or down, and its thresholds are dynamically adjusted based on workload conditions. The authors compare the performance of the DM method to seven existing autoscaling methods using synthetic and real-world workloads. They find that the DM method has better overall performance than the other methods, particularly in terms of response time and the number of instantiated containers. The DM method was implemented in the SWITCH system for time-critical cloud applications.
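As a concrete reference point for horizontal scaling, the Kubernetes documentation for the Horizontal Pod Autoscaler describes the target replica count as ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance of 1 (0.1 by default). The sketch below applies that rule; the function name, the clamping bounds, and the sample values are illustrative assumptions, and the real controller handles further cases (missing metrics, pods not yet ready, stabilization windows) that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Replica count suggested by the HPA-style proportional rule."""
    ratio = current_metric / target_metric
    # Within the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


scale_up = desired_replicas(4, current_metric=90.0, target_metric=60.0)
no_change = desired_replicas(4, current_metric=62.0, target_metric=60.0)
scale_down = desired_replicas(4, current_metric=30.0, target_metric=60.0)
capped = desired_replicas(8, current_metric=120.0, target_metric=60.0)
```

The rule is purely reactive, which is the limitation that the forecasting-based and multi-level approaches surveyed in this section try to address.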

In Rattihalli et al. [ 61 ], the authors propose a new resource management system called RUBAS that can dynamically adjust the allocation of containers running in a Kubernetes cluster. RUBAS incorporates container migration to improve upon the Kubernetes Vertical Pod Autoscaler (VPA) system non-disruptively. The authors evaluate RUBAS using multiple scientific benchmarks and compare its performance to Kubernetes VPA. They find that RUBAS improves CPU and memory utilization by 10% and reduces runtime by 15% with an overhead for each application ranging from 5–20%.

In Toka et al. [ 62 ], the authors present a Kubernetes scaling engine that uses machine learning forecast methods to make better autoscaling decisions for cloud-based applications. The engine's short-term evaluation loop allows it to adapt to changing request dynamics, and the authors introduce a compact management parameter that lets cloud tenants easily set their desired trade-off between resource over-provisioning and service level agreement (SLA) violations. The proposed engine is evaluated in simulations and with measurements on Web trace data, and the results show that it loses fewer requests while provisioning slightly more resources than the default Kubernetes baseline.
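The idea of proactive scaling with a single over-provisioning knob can be sketched as follows. A moving average stands in for the machine learning forecasters, and the `headroom` parameter, per-pod capacity, and request rates are hypothetical; the sketch only shows how a forecast and one trade-off parameter can be combined into a replica count, not how the proposed engine works internally.

```python
import math
from typing import List


def forecast_next(history: List[float], window: int = 3) -> float:
    """Placeholder forecaster: mean of the most recent observations."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def replicas_for(history: List[float], per_pod_rps: float,
                 headroom: float = 0.2, window: int = 3) -> int:
    """Replicas needed for the forecast load plus a safety margin.

    headroom: assumed over-provisioning fraction; higher values trade
    extra resources for a lower risk of dropped requests.
    """
    predicted = forecast_next(history, window)
    return max(1, math.ceil(predicted * (1.0 + headroom) / per_pod_rps))


request_rates = [100.0, 120.0, 140.0, 160.0]  # requests per second
with_margin = replicas_for(request_rates, per_pod_rps=50.0, headroom=0.2)
no_margin = replicas_for(request_rates, per_pod_rps=50.0, headroom=0.0)
```

Raising the margin moves the operating point toward more provisioned resources and fewer lost requests, which mirrors the direction of the trade-off reported above.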

In Balla et al. [ 63 ], the authors propose an adaptive autoscaler called Libra, which automatically detects the optimal resource set for a single pod and manages the horizontal scaling process. Libra is also able to adapt the resource definition for the pod and adjust the horizontal scaling process if the load or underlying virtualized environment changes. The authors evaluate Libra in simulations and show that it can reduce the average CPU and memory utilization by up to 48% and 39%, respectively, compared to the default Kubernetes autoscaler.

In another work by Toka et al. [ 64 ], the authors propose a Kubernetes scaling engine that uses multiple AI-based forecast methods to make autoscaling decisions that are better suited to handle the variability of incoming requests. The authors also introduce a compact management parameter to help application providers easily set their desired resource over-provisioning and SLA violation trade-off. The proposed engine is evaluated in simulations and with measurements on web traces, showing improved fitting of provisioned resources to service demand.