Operational Hypothesis

An operational hypothesis is a testable statement or prediction made in research that not only proposes a relationship between two or more variables but also defines those variables in operational terms, that is, how they will be measured or manipulated within the study. It forms the basis of an experiment that seeks to support or refute the assumed relationship, thus helping to drive scientific research.

The Core Components of an Operational Hypothesis

Understanding an operational hypothesis involves identifying its key components and how they interact.

The Variables

An operational hypothesis must contain two or more variables — factors that can be manipulated, controlled, or measured in an experiment.

The Proposed Relationship

Beyond identifying the variables, an operational hypothesis specifies the type of relationship expected between them. This could be a correlation, a cause-and-effect relationship, or another type of association.

The Importance of Operationalizing Variables

Operationalizing variables — defining them in measurable terms — is a critical step in forming an operational hypothesis. This process ensures the variables are quantifiable, enhancing the reliability and validity of the research.

Constructing an Operational Hypothesis

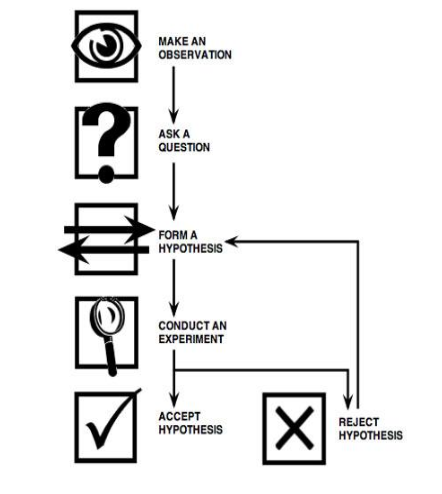

Creating an operational hypothesis is a fundamental step in the scientific method and research process. It involves generating a precise, testable statement that predicts the outcome of a study based on the research question. An operational hypothesis must clearly identify and define the variables under study and describe the expected relationship between them. The process of creating an operational hypothesis involves several key steps:

Steps to Construct an Operational Hypothesis

- Define the Research Question: Start by clearly identifying the research question. This question should highlight the key aspect or phenomenon that the study aims to investigate.

- Identify the Variables: Next, identify the key variables in your study. Variables are elements that you will measure, control, or manipulate in your research. There are typically two types of variables in a hypothesis: the independent variable (the cause) and the dependent variable (the effect).

- Operationalize the Variables: Once you’ve identified the variables, you must operationalize them. This involves defining your variables in such a way that they can be easily measured, manipulated, or controlled during the experiment.

- Predict the Relationship: The final step involves predicting the relationship between the variables. This could be an increase, decrease, or any other type of correlation between the independent and dependent variables.

By following these steps, you will create an operational hypothesis that provides a clear direction for your research, ensuring that your study is grounded in a testable prediction.
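The four steps above can be sketched as a small data structure. This is only an illustrative sketch: the class names, field names, and the tutoring values are hypothetical and are not part of any standard research tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalVariable:
    """A variable together with its operational definition."""
    name: str         # conceptual name, e.g. "tutoring"
    role: str         # "independent" or "dependent"
    measured_as: str  # how it is measured or manipulated in the study


@dataclass(frozen=True)
class OperationalHypothesis:
    research_question: str            # step 1
    independent: OperationalVariable  # steps 2 and 3
    dependent: OperationalVariable    # steps 2 and 3
    predicted_relationship: str       # step 4, e.g. "increase"

    def statement(self) -> str:
        """Render the hypothesis as a single testable sentence."""
        return (
            f"If {self.independent.name} ({self.independent.measured_as}) is applied, "
            f"then {self.dependent.name} ({self.dependent.measured_as}) "
            f"will {self.predicted_relationship}."
        )


# Hypothetical example values for a tutoring study.
hypothesis = OperationalHypothesis(
    research_question="Does tutoring improve test performance?",
    independent=OperationalVariable(
        "tutoring", "independent", "two one-hour sessions per week"),
    dependent=OperationalVariable(
        "test performance", "dependent", "standardized test score"),
    predicted_relationship="increase",
)
print(hypothesis.statement())
```

Keeping the operational definition next to each variable makes it harder to state a prediction without saying how the variable will be measured.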

Evaluating the Strength of an Operational Hypothesis

Not all operational hypotheses are created equal. The strength of an operational hypothesis can significantly influence the validity of a study. There are several key factors that contribute to the strength of an operational hypothesis:

- Clarity: A strong operational hypothesis is clear and unambiguous. It precisely defines all variables and the expected relationship between them.

- Testability: A key feature of an operational hypothesis is that it must be testable. That is, it should predict an outcome that can be observed and measured.

- Operationalization of Variables: The operationalization of variables contributes to the strength of an operational hypothesis. When variables are clearly defined in measurable terms, it enhances the reliability of the study.

- Alignment with Research: Finally, a strong operational hypothesis aligns closely with the research question and the overall goals of the study.

By carefully crafting and evaluating an operational hypothesis, researchers can ensure that their work provides valuable, valid, and actionable insights.

Examples of Operational Hypotheses

To illustrate the concept further, this section will provide examples of well-constructed operational hypotheses in various research fields.

The operational hypothesis is a fundamental component of scientific inquiry, guiding the research design and providing a clear framework for testing assumptions. By understanding how to construct and evaluate an operational hypothesis, we can ensure our research is both rigorous and meaningful.

Examples of Operational Hypothesis:

- In Education: An operational hypothesis in an educational study might be: “Students who receive tutoring (Independent Variable) will show a 20% improvement in standardized test scores (Dependent Variable) compared to students who did not receive tutoring.”

- In Psychology: In a psychological study, an operational hypothesis could be: “Individuals who meditate for 20 minutes each day (Independent Variable) will report a 15% decrease in self-reported stress levels (Dependent Variable) after eight weeks compared to those who do not meditate.”

- In Health Science: An operational hypothesis in a health science study might be: “Participants who drink eight glasses of water daily (Independent Variable) will show a 10% decrease in reported fatigue levels (Dependent Variable) after three weeks compared to those who drink four glasses of water daily.”

- In Environmental Science: In an environmental study, an operational hypothesis could be: “Cities that implement recycling programs (Independent Variable) will see a 25% reduction in landfill waste (Dependent Variable) after one year compared to cities without recycling programs.”
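Because each example above predicts a specific percentage change, the prediction can be compared directly with observed data. The sketch below uses the education example; the scores are made up for illustration, and `percent_difference` is a hypothetical helper name. A percentage difference alone does not show statistical significance, so a real study would pair it with an appropriate test.

```python
from statistics import mean


def percent_difference(treatment, control):
    """Percentage difference of the treatment mean relative to the control mean."""
    control_mean = mean(control)
    return (mean(treatment) - control_mean) / control_mean * 100


# Made-up standardized test scores, for illustration only.
tutored_scores = [75, 80, 85, 95]
untutored_scores = [60, 66, 70, 74]

observed = percent_difference(tutored_scores, untutored_scores)
predicted = 20.0  # the hypothesis predicts a 20% improvement

print(f"Observed improvement: {observed:.1f}%")
print("Meets the predicted improvement" if observed >= predicted
      else "Falls short of the predicted improvement")
```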

Operationalisation | A Guide with Examples, Pros & Cons

Published on 6 May 2022 by Pritha Bhandari. Revised on 10 October 2022.

Operationalisation means turning abstract concepts into measurable observations. Although some concepts, like height or age, are easily measured, others, like spirituality or anxiety, are not.

Through operationalisation, you can systematically collect data on processes and phenomena that aren’t directly observable.

For example, the concept of social anxiety can be operationalised using indicators such as:

- Self-rating scores on a social anxiety scale

- Number of recent behavioural incidents of avoidance of crowded places

- Intensity of physical anxiety symptoms in social situations

In quantitative research, it’s important to precisely define the variables that you want to study.

Without transparent and specific operational definitions, researchers may measure irrelevant concepts or inconsistently apply methods. Operationalisation reduces subjectivity and increases the reliability of your study.

Your choice of operational definition can sometimes affect your results. For example, an experimental intervention for social anxiety may reduce self-rating anxiety scores but not behavioural avoidance of crowded places. This means that your results are context-specific and may not generalise to different real-life settings.

Generally, abstract concepts can be operationalised in many different ways. These differences mean that you may actually measure slightly different aspects of a concept, so it’s important to be specific about what you are measuring.

If you test a hypothesis using multiple operationalisations of a concept, you can check whether your results depend on the type of measure that you use. If your results don’t vary when you use different measures, then they are said to be ‘robust’.
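A rough way to picture this robustness check is to compute the same effect under each operationalisation and see whether the direction agrees. The sketch below assumes hypothetical data for two social anxiety measures, and the function names are illustrative. Agreement in direction is a crude criterion; a fuller analysis would also compare effect sizes and their uncertainty.

```python
from statistics import mean


def effect(treatment, control):
    """Difference in means (treatment minus control) for one measure."""
    return mean(treatment) - mean(control)


def same_direction(effects):
    """True if every effect is strictly negative or every effect is strictly positive."""
    effects = list(effects)
    return all(e < 0 for e in effects) or all(e > 0 for e in effects)


# Hypothetical data: one (treatment, control) pair per operationalisation.
measures = {
    "self_rating_score": ([12, 14, 11, 13], [18, 17, 19, 16]),
    "avoidance_incidents": ([3, 4, 2, 3], [3, 2, 4, 3]),
}

effects = {name: effect(t, c) for name, (t, c) in measures.items()}
for name, value in effects.items():
    print(f"{name}: {value:+.2f}")
print("Same direction on all measures:", same_direction(effects.values()))
```

In this made-up data the intervention lowers self-rated anxiety but leaves avoidance unchanged, so the result would not count as robust across the two measures.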

There are three main steps for operationalisation:

- Identify the main concepts you are interested in studying.

- Choose a variable to represent each of the concepts.

- Select indicators for each of your variables.

Step 1: Identify the main concepts you are interested in studying

Based on your research interests and goals, define your topic and come up with an initial research question.

There are two main concepts in your research question:

- Sleep

- Social media behaviour

Step 2: Choose a variable to represent each of the concepts

Your main concepts may each have many variables, or properties, that you can measure.

For instance, are you going to measure the amount of sleep or the quality of sleep? And are you going to measure how often teenagers use social media, which social media they use, or when they use it?

- Alternate hypothesis: Lower quality of sleep is related to higher night-time social media use in teenagers.

- Null hypothesis: There is no relation between quality of sleep and night-time social media use in teenagers.

Step 3: Select indicators for each of your variables

To measure your variables, decide on indicators that can represent them numerically.

Sometimes these indicators will be obvious: for example, the amount of sleep is represented by the number of hours per night. But a variable like sleep quality is harder to measure.

You can come up with practical ideas for how to measure variables based on previously published studies. These may include established scales or questionnaires that you can distribute to your participants. If none are available that are appropriate for your sample, you can develop your own scales or questionnaires.

- To measure sleep quality, you give participants wristbands that track sleep phases.

- To measure night-time social media use, you create a questionnaire that asks participants to track how much time they spend using social media in bed.
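Once both variables have numeric indicators, the relationship stated in the alternate hypothesis can be examined with a correlation coefficient. The sketch below computes Pearson's r by hand. The data are hypothetical, and using the percentage of deep sleep as the wristband's sleep-quality indicator is an assumption made for illustration.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical indicator values for six participants.
minutes_on_social_media_in_bed = [10, 25, 40, 55, 70, 90]  # questionnaire
deep_sleep_percent = [24, 22, 19, 18, 15, 12]              # wristband (assumed indicator)

r = pearson_r(minutes_on_social_media_in_bed, deep_sleep_percent)
print(f"r = {r:.2f}")  # a negative r is in line with the alternate hypothesis
```

A strongly negative r in real data would be in line with the alternate hypothesis, but a correlation on its own does not show that social media use causes poorer sleep.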

After operationalising your concepts, it’s important to report your study variables and indicators when writing up your methodology section. You can evaluate how your choice of operationalisation may have affected your results or interpretations in the discussion section.

Operationalisation makes it possible to consistently measure variables across different contexts.

Scientific research is based on observable and measurable findings. Operational definitions break down intangible concepts into recordable characteristics.

Objectivity

A standardised approach for collecting data leaves little room for subjective or biased personal interpretations of observations.

Reliability

A good operationalisation can be used consistently by other researchers. If other people measure the same thing using your operational definition, they should all get the same results.

Operational definitions of concepts can sometimes be problematic.

Underdetermination

Many concepts vary across different time periods and social settings.

For example, poverty is a worldwide phenomenon, but the exact income level that determines poverty can differ significantly across countries.

Reductiveness

Operational definitions can easily miss meaningful and subjective perceptions of concepts by trying to reduce complex concepts to numbers.

For example, asking consumers to rate their satisfaction with a service on a 5-point scale will tell you nothing about why they felt that way.

Lack of universality

Context-specific operationalisations help preserve real-life experiences, but make it hard to compare studies if the measures differ significantly.

For example, corruption can be operationalised in a wide range of ways (e.g., perceptions of corrupt business practices, or frequency of bribe requests from public officials), but the measures may not consistently reflect the same concept.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.

In scientific research, concepts are the abstract ideas or phenomena that are being studied (e.g., educational achievement). Variables are properties or characteristics of the concept (e.g., performance at school), while indicators are ways of measuring or quantifying variables (e.g., yearly grade reports).

The process of turning abstract concepts into measurable variables and indicators is called operationalisation.

Reliability and validity are both about how well a method measures something:

- Reliability refers to the consistency of a measure (whether the results can be reproduced under the same conditions).

- Validity refers to the accuracy of a measure (whether the results really do represent what they are supposed to measure).

If you are doing experimental research, you also have to consider the internal and external validity of your experiment.

Bhandari, P. (2022, October 10). Operationalisation | A Guide with Examples, Pros & Cons. Scribbr. Retrieved 22 April 2024, from https://www.scribbr.co.uk/thesis-dissertation/operationalisation/

- cognitive sophistication

- tolerance of diversity

- exposure to higher levels of math or science

- age (which is currently related to educational level in many countries)

- social class and other variables.

- For example, suppose you designed a treatment to help people stop smoking. Because you are really dedicated, you assigned the same individuals simultaneously to (1) a "stop smoking" nicotine patch; (2) a "quit buddy"; and (3) a discussion support group. Compared with a group in which no intervention at all occurred, your experimental group now smokes 10 fewer cigarettes per day.

- There is no relationship among two or more variables (EXAMPLE: the correlation between educational level and income is zero)

- Or that two or more populations or subpopulations are essentially the same (EXAMPLE: women and men have the same average science knowledge scores.)

- the difference between two and three children = one child.

- the difference between eight and nine children also = one child.

- the difference between completing ninth grade and tenth grade is one year of school

- the difference between completing junior and senior year of college is one year of school

- In addition to all the properties of nominal, ordinal, and interval variables, ratio variables also have a fixed/non-arbitrary zero point. Non arbitrary means that it is impossible to go below a score of zero for that variable. For example, any bottom score on IQ or aptitude tests is created by human beings and not nature. On the other hand, scientists believe they have isolated an "absolute zero." You can't get colder than that.

The Craft of Writing a Strong Hypothesis

Writing a hypothesis is one of the essential elements of a scientific research paper. It needs to be to the point, clearly communicating what your research is trying to accomplish. A blurry, drawn-out, or complexly-structured hypothesis can confuse your readers. Or worse, the editor and peer reviewers.

A captivating hypothesis is not too intricate. This blog will take you through the process so that, by the end of it, you have a better idea of how to convey your research paper's intent in just one sentence.

What is a Hypothesis?

The first step in your scientific endeavor, a hypothesis, is a strong, concise statement that forms the basis of your research. It is not the same as a thesis statement, which is a brief summary of your research paper.

The sole purpose of a hypothesis is to predict your paper's findings, data, and conclusion. It comes from a place of curiosity and intuition. When you write a hypothesis, you're essentially making an educated guess based on prior scientific knowledge and evidence, which is then supported or refuted through the scientific method.

The reason for undertaking research is to observe a specific phenomenon. A hypothesis, therefore, lays out what the said phenomenon is. And it does so through two variables, an independent and dependent variable.

The independent variable is the cause behind the observation, while the dependent variable is the effect of the cause. A good example of this is “mixing red and blue forms purple.” In this hypothesis, mixing red and blue is the independent variable as you're combining the two colors at your own will. The formation of purple is the dependent variable as, in this case, it is conditional to the independent variable.

Different Types of Hypotheses

Some would stand by the notion that there are only two types of hypotheses: a Null hypothesis and an Alternative hypothesis. While that may have some truth to it, it would be better to fully distinguish the most common forms as these terms come up so often, which might leave you out of context.

Apart from Null and Alternative, there are Complex, Simple, Directional, Non-Directional, Statistical, and Associative and Causal hypotheses. They don't necessarily have to be exclusive, as one hypothesis can tick many boxes, but knowing the distinctions between them will make it easier for you to construct your own.

1. Null hypothesis

A null hypothesis proposes no relationship between two variables. Denoted by H0, it is a negative statement like “Attending physiotherapy sessions does not affect athletes' on-field performance.” Here, the author claims physiotherapy sessions have no effect on on-field performance; any difference that is observed is attributed to chance.

2. Alternative hypothesis

Considered to be the opposite of a null hypothesis, an alternative hypothesis is denoted as H1 or Ha. It explicitly states that the independent variable affects the dependent variable. A good alternative hypothesis example is “Attending physiotherapy sessions improves athletes' on-field performance.” or “Water evaporates at 100 °C.” The alternative hypothesis further branches into directional and non-directional.

- Directional hypothesis: A hypothesis that states the result would be either positive or negative is called directional hypothesis. It accompanies H1 with either the ‘<' or ‘>' sign.

- Non-directional hypothesis: A non-directional hypothesis only claims an effect on the dependent variable. It does not clarify whether the result would be positive or negative. The sign for a non-directional hypothesis is ‘≠.'
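In symbols, the three cases can be written as follows. This is a sketch that assumes the comparison is between two group means, with \mu_{\text{treatment}} and \mu_{\text{control}} as illustrative names for them.

```latex
\begin{align*}
H_0 &: \mu_{\text{treatment}} = \mu_{\text{control}} \\
H_1 \text{ (non-directional)} &: \mu_{\text{treatment}} \neq \mu_{\text{control}} \\
H_1 \text{ (directional)} &: \mu_{\text{treatment}} > \mu_{\text{control}}
  \quad\text{or}\quad \mu_{\text{treatment}} < \mu_{\text{control}}
\end{align*}
```

A directional H1 corresponds to a one-tailed test, and a non-directional H1 to a two-tailed test.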

3. Simple hypothesis

A simple hypothesis is a statement made to reflect the relation between exactly two variables: one independent and one dependent. Consider the example, “Smoking is a prominent cause of lung cancer." The dependent variable, lung cancer, is dependent on the independent variable, smoking.

4. Complex hypothesis

In contrast to a simple hypothesis, a complex hypothesis implies a relationship between multiple independent and dependent variables. For instance, “Individuals who eat more fruits tend to have higher immunity, lower cholesterol, and higher metabolism.” The independent variable is eating more fruits, while the dependent variables are higher immunity, lower cholesterol, and higher metabolism.

5. Associative and causal hypothesis

Associative and causal hypotheses don't specify how many variables there will be. Instead, they define the type of relationship between the variables. In an associative hypothesis, a change in any one variable, dependent or independent, is accompanied by a change in the others. In a causal hypothesis, the independent variable directly affects the dependent variable.

6. Empirical hypothesis

Also referred to as the working hypothesis, an empirical hypothesis claims a theory's validation via experiments and observation. This way, the statement appears justifiable and different from a wild guess.

Say, the hypothesis is “Women who take iron tablets face a lesser risk of anemia than those who take vitamin B12.” This is an example of an empirical hypothesis where the researcher formulates the statement after assessing a group of women who take iron tablets and charting the findings.

7. Statistical hypothesis

The point of a statistical hypothesis is to test an already existing hypothesis by studying a population sample. A hypothesis like “44% of the Indian population belong in the age group of 22-27” leverages evidence to prove or disprove a particular statement.

Characteristics of a Good Hypothesis

Writing a hypothesis is essential as it can make or break your research for you. That includes your chances of getting published in a journal. So when you're designing one, keep an eye out for these pointers:

- A research hypothesis has to be simple yet clear to look justifiable enough.

- It has to be testable: your research would be rendered pointless if the hypothesis is too far removed from reality or cannot be tested with available technology.

- It has to be precise about the results —what you are trying to do and achieve through it should come out in your hypothesis.

- A research hypothesis should be self-explanatory, leaving no doubt in the reader's mind.

- If you are developing a relational hypothesis, you need to include the variables and establish an appropriate relationship among them.

- A hypothesis must keep and reflect the scope for further investigations and experiments.

Separating a Hypothesis from a Prediction

Outside of academia, hypothesis and prediction are often used interchangeably. In research writing, this is not only confusing but also incorrect. And although a hypothesis and prediction are guesses at their core, there are many differences between them.

A hypothesis is an educated guess or even a testable prediction validated through research. It aims to analyze the gathered evidence and facts to define a relationship between variables and put forth a logical explanation behind the nature of events.

Predictions are assumptions or expected outcomes made without any backing evidence. They are more fictionally inclined regardless of where they originate from.

For this reason, a hypothesis holds much more weight than a prediction. It sticks to the scientific method rather than pure guesswork. "Planets revolve around the Sun." is an example of a hypothesis, as it is based on previous knowledge and observed trends. Additionally, we can test it through the scientific method.

Whereas "COVID-19 will be eradicated by 2030." is a prediction. Even though it results from past trends, we can't prove or disprove it. So, the only way this gets validated is to wait and watch if COVID-19 cases end by 2030.

Finally, How to Write a Hypothesis

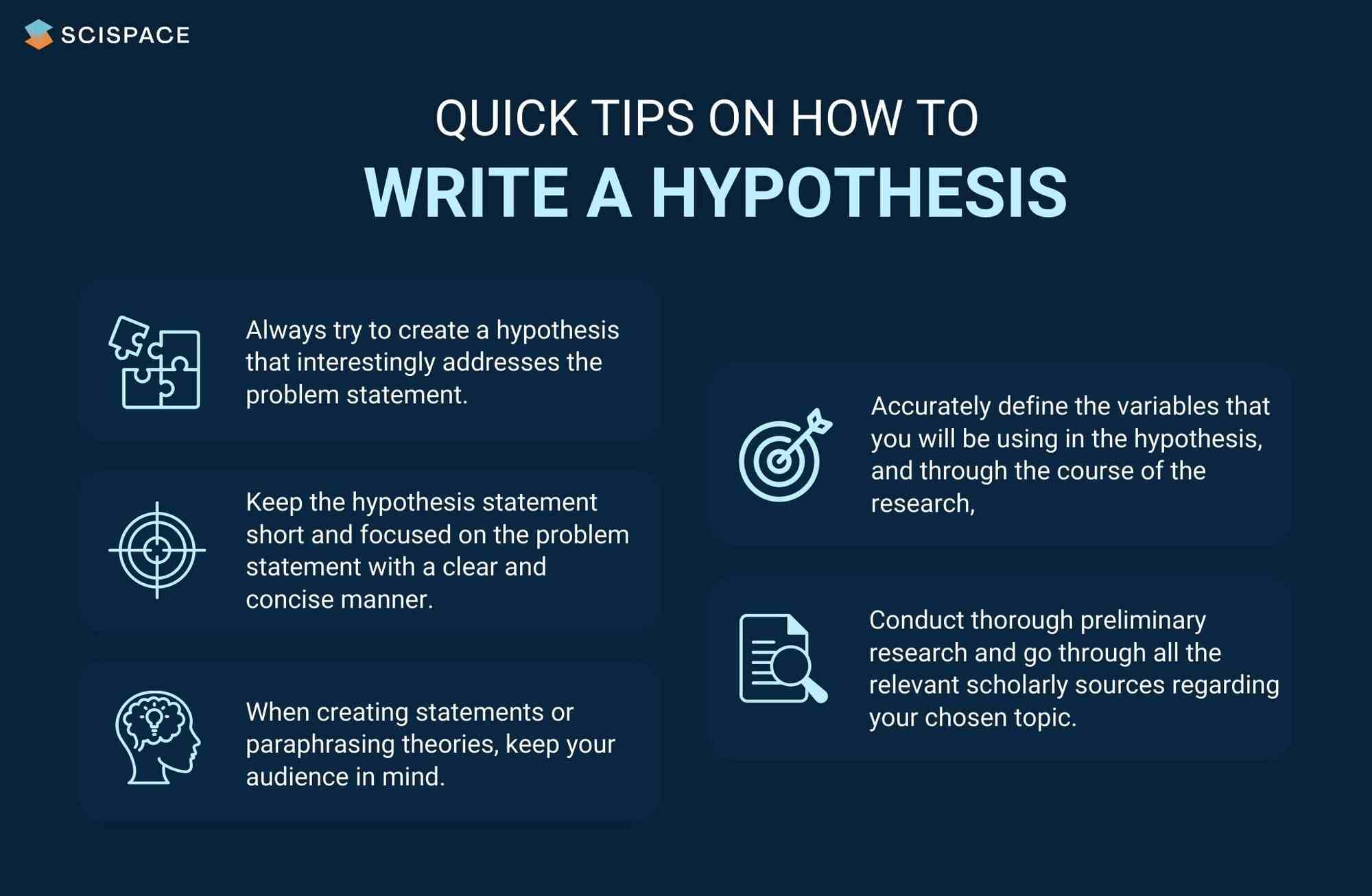

Quick tips on writing a hypothesis

1. Be clear about your research question

A hypothesis should instantly address the research question or the problem statement. To do so, you need to ask a question. Understand the constraints of your undertaken research topic and then formulate a simple and topic-centric problem. Only after that can you develop a hypothesis and further test for evidence.

2. Carry out a recce

Once you have your research's foundation laid out, it would be best to conduct preliminary research. Go through previous theories, academic papers, data, and experiments before you start curating your research hypothesis. It will give you an idea of your hypothesis's viability or originality.

Making use of references from relevant research papers helps draft a good research hypothesis. SciSpace Discover offers a repository of over 270 million research papers to browse through and gain a deeper understanding of related studies on a particular topic. Additionally, you can use SciSpace Copilot, your AI research assistant, to read any lengthy research paper and get a summarized version of it. A hypothesis can be formed after evaluating many such summarized research papers. Copilot also explains theories and equations, explains papers in simplified terms, and lets you highlight text or clip math equations and tables to get a clearer understanding of what is being said. This can improve the hypothesis by helping you identify potential research gaps.

3. Create a 3-dimensional hypothesis

Variables are an essential part of any reasonable hypothesis. So, identify your independent and dependent variable(s) and form a correlation between them. The ideal way to do this is to write the hypothetical assumption in the ‘if-then' form. If you use this form, make sure that you state the predefined relationship between the variables.

In another way, you can choose to present your hypothesis as a comparison between two variables. Here, you must specify the difference you expect to observe in the results.

4. Write the first draft

Now that everything is in place, it's time to write your hypothesis. For starters, create the first draft. In this version, write what you expect to find from your research.

Clearly separate your independent and dependent variables and the link between them. Don't fixate on syntax at this stage. The goal is to ensure your hypothesis addresses the issue.

5. Proof your hypothesis

After preparing the first draft of your hypothesis, you need to inspect it thoroughly. It should tick all the boxes, like being concise, straightforward, relevant, and accurate. Your final hypothesis has to be well-structured as well.

Research projects are an exciting and crucial part of being a scholar. And once you have your research question, you need a great hypothesis to begin conducting research. Thus, knowing how to write a hypothesis is very important.

Now that you have a firmer grasp on what a good hypothesis constitutes, the different kinds there are, and what process to follow, you will find it much easier to write your hypothesis, which ultimately helps your research.

Frequently Asked Questions (FAQs)

1. What is the definition of a hypothesis?

According to the Oxford dictionary, a hypothesis is defined as “An idea or explanation of something that is based on a few known facts, but that has not yet been proved to be true or correct”.

2. What is an example of hypothesis?

The hypothesis is a statement that proposes a relationship between two or more variables. An example: "If we increase the number of new users who join our platform by 25%, then we will see an increase in revenue."

3. What is an example of null hypothesis?

A null hypothesis is a statement that there is no relationship between two variables. The null hypothesis is written as H0. The null hypothesis states that there is no effect. For example, if you're studying whether or not a particular type of exercise increases strength, your null hypothesis will be "there is no difference in strength between people who exercise and people who don't."

4. What are the types of research?

• Fundamental research

• Applied research

• Qualitative research

• Quantitative research

• Mixed research

• Exploratory research

• Longitudinal research

• Cross-sectional research

• Field research

• Laboratory research

• Fixed research

• Flexible research

• Action research

• Policy research

• Classification research

• Comparative research

• Causal research

• Inductive research

• Deductive research

5. How to write a hypothesis?

• Your hypothesis should be able to predict the relationship and outcome.

• Avoid wordiness by keeping it simple and brief.

• Your hypothesis should contain observable and testable outcomes.

• Your hypothesis should be relevant to the research question.

6. What are the 2 types of hypothesis?

• Null hypotheses are used to test the claim that "there is no difference between two groups of data".

• Alternative hypotheses test the claim that "there is a difference between two data groups".

7. Difference between research question and research hypothesis?

A research question is a broad, open-ended question you will try to answer through your research. A hypothesis is a statement based on prior research or theory that you expect to be true due to your study. Example - Research question: What are the factors that influence the adoption of the new technology? Research hypothesis: There is a positive relationship between age, education and income level with the adoption of the new technology.

8. What is plural for hypothesis?

The plural of hypothesis is hypotheses. Here's an example of how it would be used in a statement, "Numerous well-considered hypotheses are presented in this part, and they are supported by tables and figures that are well-illustrated."

9. What is the red queen hypothesis?

The red queen hypothesis in evolutionary biology states that species must constantly evolve to avoid extinction because if they don't, they will be outcompeted by other species that are evolving. Leigh Van Valen first proposed it in 1973; since then, it has been tested and substantiated many times.

10. Who is known as the father of null hypothesis?

Sir Ronald Fisher is often called the father of the null hypothesis. His 1925 book, Statistical Methods for Research Workers, popularized significance testing, and he introduced the term "null hypothesis" in The Design of Experiments (1935).

11. When to reject null hypothesis?

To reject the null hypothesis, you need to find a statistically significant difference between your two populations. You can determine that by running statistical tests such as an independent-samples t-test or a dependent-samples (paired) t-test. You reject the null hypothesis if the p-value is less than the significance level you chose in advance, conventionally 0.05.
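The decision rule can be illustrated with a small worked example. To keep the sketch free of external libraries, it swaps the t-test for an exact two-sided permutation test on the difference in means. That is a different technique, but it produces a p-value that is compared with the significance level in the same way. The strength scores and the function name are hypothetical.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Two-sided exact permutation p-value for a difference in means."""
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    n_b = len(pooled) - n_a
    observed = abs(mean(group_a) - mean(group_b))
    total = sum(pooled)

    extreme = 0
    count = 0
    # Enumerate every way of relabelling n_a of the pooled values as group A.
    for indices in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in indices)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
        count += 1
    return extreme / count


# Hypothetical strength scores.
exercise = [52, 55, 58, 60, 63]
no_exercise = [41, 44, 45, 47, 49]

alpha = 0.05  # significance level chosen before looking at the data
p = permutation_p_value(exercise, no_exercise)
print(f"p = {p:.4f}")
print("Reject H0" if p < alpha else "Fail to reject H0")
```

Exhaustive enumeration is only practical for small samples; with larger groups a t-test or a randomly sampled permutation test would be the usual choice.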

The Research Hypothesis: Role and Construction

- First Online: 01 January 2012

- Phyllis G. Supino EdD 3

A hypothesis is a logical construct, interposed between a problem and its solution, which represents a proposed answer to a research question. It gives direction to the investigator’s thinking about the problem and, therefore, facilitates a solution. There are three primary modes of inference by which hypotheses are developed: deduction (reasoning from a general proposition to specific instances), induction (reasoning from specific instances to a general proposition), and abduction (formulation/acceptance on probation of a hypothesis to explain a surprising observation).

A research hypothesis should reflect an inference about variables; be stated as a grammatically complete, declarative sentence; be expressed simply and unambiguously; provide an adequate answer to the research problem; and be testable. Hypotheses can be classified as conceptual versus operational, single versus bi- or multivariable, causal or not causal, mechanistic versus nonmechanistic, and null or alternative. Hypotheses most commonly entail statements about “variables” which, in turn, can be classified according to their level of measurement (scaling characteristics) or according to their role in the hypothesis (independent, dependent, moderator, control, or intervening).

A hypothesis is rendered operational when its broadly (conceptually) stated variables are replaced by operational definitions of those variables. Hypotheses stated in this manner are called operational hypotheses, specific hypotheses, or predictions and facilitate testing.

Wrong hypotheses, rightly worked from, have produced more results than unguided observation

—Augustus De Morgan, 1872[ 1 ]—

De Morgan A, De Morgan S. A budget of paradoxes. London: Longmans Green; 1872.

Leedy Paul D. Practical research. Planning and design. 2nd ed. New York: Macmillan; 1960.

Bernard C. Introduction to the study of experimental medicine. New York: Dover; 1957.

Erren TC. The quest for questions—on the logical force of science. Med Hypotheses. 2004;62:635–40.

Peirce CS. Collected papers of Charles Sanders Peirce, vol. 7. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1966.

Aristotle. The complete works of Aristotle: the revised Oxford Translation. In: Barnes J, editor. vol. 2. Princeton/New Jersey: Princeton University Press; 1984.

Polit D, Beck CT. Conceptualizing a study to generate evidence for nursing. In: Polit D, Beck CT, editors. Nursing research: generating and assessing evidence for nursing practice. 8th ed. Philadelphia: Wolters Kluwer/Lippincott Williams and Wilkins; 2008. Chapter 4.

Jenicek M, Hitchcock DL. Evidence-based practice. Logic and critical thinking in medicine. Chicago: AMA Press; 2005.

Bacon F. The novum organon or a true guide to the interpretation of nature. A new translation by the Rev G.W. Kitchin. Oxford: The University Press; 1855.

Popper KR. Objective knowledge: an evolutionary approach (revised edition). New York: Oxford University Press; 1979.

Morgan AJ, Parker S. Translational mini-review series on vaccines: the Edward Jenner Museum and the history of vaccination. Clin Exp Immunol. 2007;147:389–94.

Pead PJ. Benjamin Jesty: new light in the dawn of vaccination. Lancet. 2003;362:2104–9.

Lee JA. The scientific endeavor: a primer on scientific principles and practice. San Francisco: Addison-Wesley Longman; 2000.

Allchin D. Lawson’s shoehorn, or should the philosophy of science be rated, ‘X’? Science and Education. 2003;12:315–29.

Lawson AE. What is the role of induction and deduction in reasoning and scientific inquiry? J Res Sci Teach. 2005;42:716–40.

Peirce CS. Collected papers of Charles Sanders Peirce, vol. 2. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1965.

Bonfantini MA, Proni G. To guess or not to guess? In: Eco U, Sebeok T, editors. The sign of three: Dupin, Holmes, Peirce. Bloomington: Indiana University Press; 1983. Chapter 5.

Peirce CS. Collected papers of Charles Sanders Peirce, vol. 5. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1965.

Flach PA, Kakas AC. Abductive and inductive reasoning: background issues. In: Flach PA, Kakas AC, editors. Abduction and induction. Essays on their relation and integration. The Netherlands: Klewer; 2000. Chapter 1.

Murray JF. Voltaire, Walpole and Pasteur: variations on the theme of discovery. Am J Respir Crit Care Med. 2005;172:423–6.

Danemark B, Ekstrom M, Jakobsen L, Karlsson JC. Methodological implications, generalization, scientific inference, models (Part II) In: explaining society. Critical realism in the social sciences. New York: Routledge; 2002.

Pasteur L. Inaugural lecture as professor and dean of the faculty of sciences. In: Peterson H, editor. A treasury of the world’s greatest speeches. Douai, France: University of Lille 7 Dec 1954.

Swineburne R. Simplicity as evidence for truth. Milwaukee: Marquette University Press; 1997.

Sakar S, editor. Logical empiricism at its peak: Schlick, Carnap and Neurath. New York: Garland; 1996.

Popper K. The logic of scientific discovery. New York: Basic Books; 1959. 1934, trans. 1959.

Caws P. The philosophy of science. Princeton: D. Van Nostrand Company; 1965.

Popper K. Conjectures and refutations. The growth of scientific knowledge. 4th ed. London: Routledge and Keegan Paul; 1972.

Feyerabend PK. Against method, outline of an anarchistic theory of knowledge. London, UK: Verso; 1978.

Smith PG. Popper: conjectures and refutations (Chapter IV). In: Theory and reality: an introduction to the philosophy of science. Chicago: University of Chicago Press; 2003.

Blystone RV, Blodgett K. WWW: the scientific method. CBE Life Sci Educ. 2006;5:7–11.

Kleinbaum DG, Kupper LL, Morgenstern H. Epidemiological research. Principles and quantitative methods. New York: Van Nostrand Reinhold; 1982.

Fortune AE, Reid WJ. Research in social work. 3rd ed. New York: Columbia University Press; 1999.

Kerlinger FN. Foundations of behavioral research. 1st ed. New York: Hold, Reinhart and Winston; 1970.

Hoskins CN, Mariano C. Research in nursing and health. Understanding and using quantitative and qualitative methods. New York: Springer; 2004.

Tuckman BW. Conducting educational research. New York: Harcourt, Brace, Jovanovich; 1972.

Wang C, Chiari PC, Weihrauch D, Krolikowski JG, Warltier DC, Kersten JR, Pratt Jr PF, Pagel PS. Gender-specificity of delayed preconditioning by isoflurane in rabbits: potential role of endothelial nitric oxide synthase. Anesth Analg. 2006;103:274–80.

Beyer ME, Slesak G, Nerz S, Kazmaier S, Hoffmeister HM. Effects of endothelin-1 and IRL 1620 on myocardial contractility and myocardial energy metabolism. J Cardiovasc Pharmacol. 1995;26(Suppl 3):S150–2.

Stone J, Sharpe M. Amnesia for childhood in patients with unexplained neurological symptoms. J Neurol Neurosurg Psychiatry. 2002;72:416–7.

Naughton BJ, Moran M, Ghaly Y, Michalakes C. Computer tomography scanning and delirium in elder patients. Acad Emerg Med. 1997;4:1107–10.

Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–72.

Stern JM, Simes RJ. Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ. 1997;315:640–5.

Stevens SS. On the theory of scales and measurement. Science. 1946;103:677–80.

Knapp TR. Treating ordinal scales as interval scales: an attempt to resolve the controversy. Nurs Res. 1990;39:121–3.

The Cochrane Collaboration. Open Learning Material. www.cochrane-net.org/openlearning/html/mod14-3.htm . Accessed 12 Oct 2009.

MacCorquodale K, Meehl PE. On a distinction between hypothetical constructs and intervening variables. Psychol Rev. 1948;55:95–107.

Baron RM, Kenny DA. The moderator-mediator variable distinction in social psychological research: conceptual, strategic and statistical considerations. J Pers Soc Psychol. 1986;51:1173–82.

Williamson GM, Schultz R. Activity restriction mediates the association between pain and depressed affect: a study of younger and older adult cancer patients. Psychol Aging. 1995;10:369–78.

Song M, Lee EO. Development of a functional capacity model for the elderly. Res Nurs Health. 1998;21:189–98.

MacKinnon DP. Introduction to statistical mediation analysis. New York: Routledge; 2008.

Author information

Authors and affiliations.

Department of Medicine, College of Medicine, SUNY Downstate Medical Center, 450 Clarkson Avenue, 1199, Brooklyn, NY, 11203, USA

Phyllis G. Supino EdD

Corresponding author

Correspondence to Phyllis G. Supino EdD .

Editor information

Editors and affiliations.

Cardiovascular Medicine, SUNY Downstate Medical Center, Clarkson Avenue, box 1199 450, Brooklyn, 11203, USA

Phyllis G. Supino

Cardiovascular Medicine, SUNY Downstate Medical Center, Clarkson Avenue 450, Brooklyn, 11203, USA

Jeffrey S. Borer

Copyright information

© 2012 Springer Science+Business Media, LLC

About this chapter

Supino, P.G. (2012). The Research Hypothesis: Role and Construction. In: Supino, P., Borer, J. (eds) Principles of Research Methodology. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-3360-6_3

DOI : https://doi.org/10.1007/978-1-4614-3360-6_3

Published : 18 April 2012

Publisher Name : Springer, New York, NY

Print ISBN : 978-1-4614-3359-0

Online ISBN : 978-1-4614-3360-6

eBook Packages : Medicine Medicine (R0)

10.3 Operational definitions

Learning objectives.

Learners will be able to…

- Define and give an example of indicators and attributes for a variable

- Apply the three components of an operational definition to a variable

- Distinguish between levels of measurement for a variable and how those differences relate to measurement

- Describe the purpose of composite measures like scales and indices

Conceptual definitions are like dictionary definitions. They tell you what a concept means by defining it using other concepts. Operationalization occurs after conceptualization and is the process by which researchers spell out precisely how a concept will be measured in their study. It involves identifying the specific research procedures we will use to gather data about our concepts. It entails identifying indicators that can identify when your variable is present or not, the magnitude of the variable, and so forth.

Operationalization works by identifying specific indicators that will be taken to represent the ideas we are interested in studying. Let’s look at an example. Each day, Gallup researchers poll 1,000 randomly selected Americans to ask them about their well-being. To measure well-being, Gallup asks these people to respond to questions covering six broad areas: physical health, emotional health, work environment, life evaluation, healthy behaviors, and access to basic necessities. Gallup uses these six factors as indicators of the concept that they are really interested in, which is well-being .

Identifying indicators can be even simpler than this example. Political party affiliation is another relatively easy concept for which to identify indicators. If you asked a person what party they voted for in the last national election (or gained access to their voting records), you would get a good indication of their party affiliation. Of course, some voters split tickets between multiple parties when they vote and others swing from party to party each election, so our indicator is not perfect. Indeed, if our study were about political identity as a key concept, operationalizing it solely in terms of who they voted for in the previous election leaves out a lot of information about identity that is relevant to that concept. Nevertheless, it’s a pretty good indicator of political party affiliation.

Choosing indicators is not an arbitrary process. Your conceptual definitions point you in the direction of relevant indicators and then you can identify appropriate indicators in a scholarly manner using theory and empirical evidence. Specifically, empirical work will give you some examples of how the important concepts in an area have been measured in the past and what sorts of indicators have been used. Often, it makes sense to use the same indicators as previous researchers; however, you may find that some previous measures have potential weaknesses that your own study may improve upon.

So far in this section, all of the examples of indicators deal with questions you might ask a research participant on a questionnaire for survey research. If you plan to collect data from other sources, such as through direct observation or the analysis of available records, think practically about what the design of your study might look like and how you can collect data on various indicators feasibly. If your study asks about whether participants regularly change the oil in their car, you will likely not observe them directly doing so. Instead, you would rely on a survey question that asks them the frequency with which they change their oil or ask to see their car maintenance records.

TRACK 1 (IF YOU ARE CREATING A RESEARCH PROPOSAL FOR THIS CLASS):

What indicators are commonly used to measure the variables in your research question?

- How can you feasibly collect data on these indicators?

- Are you planning to collect your own data using a questionnaire or interview? Or are you planning to analyze available data like client files or raw data shared from another researcher’s project?

Remember, you need raw data . Your research project cannot rely solely on the results reported by other researchers or the arguments you read in the literature. A literature review is only the first part of a research project, and your review of the literature should inform the indicators you end up choosing when you measure the variables in your research question.

TRACK 2 (IF YOU AREN’T CREATING A RESEARCH PROPOSAL FOR THIS CLASS):

You are interested in studying older adults’ social-emotional well-being. Specifically, you would like to research the impact on levels of older adult loneliness of an intervention that pairs older adults living in assisted living communities with university student volunteers for a weekly conversation.

- How could you feasibly collect data on these indicators?

- Would you collect your own data using a questionnaire or interview? Or would you analyze available data like client files or raw data shared from another researcher’s project?

Steps in the Operationalization Process

Unlike a conceptual definition, which is built from other concepts, an operational definition consists of the following components: (1) the variable being measured and its attributes, (2) the measure you will use, and (3) how you plan to interpret the data collected from that measure to draw conclusions about the variable you are measuring.

Step 1 of Operationalization: Specify variables and attributes

The first component, the variable, should be the easiest part. At this point in quantitative research, you should have a research question with identifiable variables. When social scientists measure concepts, they often use the language of variables and attributes . A variable refers to a quality or quantity that varies across people or situations. Attributes are the characteristics that make up a variable. For example, the variable hair color could contain attributes such as blonde, brown, black, red, gray, etc.

Levels of measurement

A variable’s attributes determine its level of measurement. There are four possible levels of measurement: nominal, ordinal, interval, and ratio. The first two levels of measurement are categorical , meaning their attributes are categories rather than numbers. The latter two levels of measurement are continuous , meaning their attributes are numbers within a range.

Nominal level of measurement

Hair color is an example of a nominal level of measurement. At the nominal level of measurement , attributes are categorical, and those categories cannot be mathematically ranked. In all nominal levels of measurement, there is no ranking order; the attributes are simply different. Gender and race are two additional variables measured at the nominal level. A variable that has only two possible attributes is called binary or dichotomous . If you are measuring whether an individual has received a specific service, this is a dichotomous variable, as the only two options are received or not received.

What attributes are contained in the variable hair color ? Brown, black, blonde, and red are common colors, but if we only list these attributes, many people may not fit into those categories. This means that our attributes were not exhaustive. Exhaustiveness means that every participant can find a choice for their attribute in the response options. It is up to the researcher to include the most comprehensive attribute choices relevant to their research questions. We may have to list a lot of colors before we can meet the criteria of exhaustiveness. Clearly, there is a point at which exhaustiveness has been reasonably met. If a person insists that their hair color is light burnt sienna , it is not your responsibility to list that as an option. Rather, that person would reasonably be described as brown-haired. Perhaps listing a category for other color would suffice to make our list of colors exhaustive.

What about a person who has multiple hair colors at the same time, such as red and black? They would fall into multiple attributes. This violates the rule of mutual exclusivity , in which a person cannot fall into two different attributes. Instead of listing all of the possible combinations of colors, perhaps you might include a multi-color attribute to describe people with more than one hair color.
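As a rough sketch of how these two rules play out when coding responses, the Python function below assigns every participant exactly one attribute. The attribute list and the function name `code_hair_color` are hypothetical choices made for this illustration; a real study would pick its categories based on its research question.

```python
# Hypothetical named attributes for the variable "hair color".
NAMED_COLORS = {"blonde", "brown", "black", "red", "gray"}


def code_hair_color(reported):
    """Return exactly one attribute for a participant's reported color(s).

    - "multi-color" keeps the attributes mutually exclusive: a person with
      red and black hair lands in one category, not two.
    - "other" keeps the attributes exhaustive: any color that is not named
      still has a place in the data record.
    """
    colors = {c.strip().lower() for c in reported}
    if not colors:
        raise ValueError("no hair color reported")
    if len(colors) > 1:
        return "multi-color"
    (only,) = colors
    return only if only in NAMED_COLORS else "other"


print(code_hair_color(["Brown"]))
print(code_hair_color(["red", "black"]))
print(code_hair_color(["teal"]))
```

Because the function always returns a single value, no response can fall into two attributes, and because of the "other" fallback, no response is left without one.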

Making sure researchers provide mutually exclusive and exhaustive attribute options is about making sure all people are represented in the data record. For many years, the attributes for gender were only male or female. Now, our understanding of gender has evolved to encompass more attributes that better reflect the diversity in the world. Children of parents from different races were often classified as one race or another, even if they identified with both. The option for bi-racial or multi-racial on a survey not only more accurately reflects the racial diversity in the real world but also validates and acknowledges people who identify in that manner. If we did not measure race in this way, we would leave empty the data record for people who identify as biracial or multiracial, impairing our search for truth.

Ordinal level of measurement

Unlike nominal-level measures, attributes at the ordinal level of measurement can be rank-ordered. For example, someone’s degree of satisfaction in their romantic relationship can be ordered by magnitude of satisfaction. That is, you could say you are not at all satisfied, a little satisfied, moderately satisfied, or highly satisfied. Even though these have a rank order to them (not at all satisfied is certainly worse than highly satisfied), we cannot calculate a mathematical distance between those attributes. We can simply say that one attribute of an ordinal-level variable is more or less than another attribute. A variable that is commonly measured at the ordinal level of measurement in social work is education (e.g., less than high school education, high school education or equivalent, some college, associate’s degree, college degree, graduate degree or higher). Just as with nominal level of measurement, ordinal-level attributes should also be exhaustive and mutually exclusive.

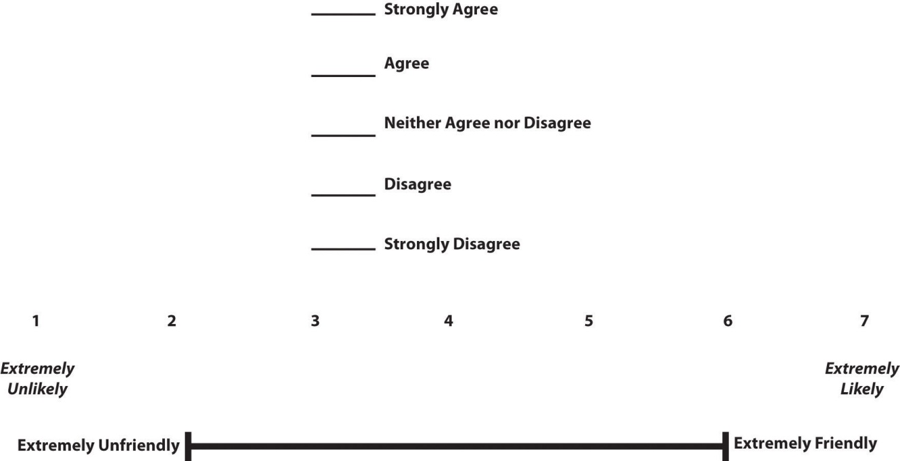

Rating scales for ordinal-level measurement

Rating scales make it clear that, at the ordinal level of measurement, we cannot specify exactly how far apart different individuals’ responses are. If you have ever taken a customer satisfaction survey or completed a course evaluation for school, you are familiar with rating scales such as, “On a scale of 1-5, with 1 being the lowest and 5 being the highest, how likely are you to recommend our company to other people?” Rating scales use numbers, but only as a shorthand, to indicate what attribute (highly likely, somewhat likely, etc.) the person feels describes them best. You wouldn’t say you are “2” likely to recommend the company, but you would say you are “not very likely” to recommend the company. In rating scales the difference between 2 = “not very likely” and 3 = “somewhat likely” is not quantifiable as a difference of 1. Likewise, we couldn’t say that it is the same as the difference between 3 = “somewhat likely” and 4 = “very likely.”

Rating scales can be unipolar rating scales where only one dimension is tested, such as frequency (e.g., Never, Rarely, Sometimes, Often, Always) or strength of satisfaction (e.g., Not at all, Somewhat, Very). The attributes on a unipolar rating scale are different magnitudes of the same concept.

There are also bipolar rating scales where there is a dichotomous spectrum, such as liking or disliking (Like very much, Like somewhat, Like slightly, Neither like nor dislike, Dislike slightly, Dislike somewhat, Dislike very much). The attributes on the ends of a bipolar scale are opposites of one another. Figure 10.1 shows several examples of bipolar rating scales.

Interval level of measurement

Interval measures are continuous, meaning their attributes are numbers rather than categories. Temperatures in Fahrenheit and Celsius are interval level, as are IQ scores and credit scores. Just like variables measured at the ordinal level, the attributes for variables measured at the interval level should be mutually exclusive and exhaustive, and are rank-ordered. In addition, they also have an equal distance between the attribute values.

The interval level of measurement allows us to examine “how much more” is one attribute when compared to another, which is not possible with nominal or ordinal measures. In other words, the unit of measurement allows us to compare the distance between attributes. The value of one unit of measurement (e.g., one degree Celsius, one IQ point) is always the same regardless of where in the range of values you look. The difference of 10 degrees between a temperature of 50 and 60 degrees Fahrenheit is the same as the difference between 60 and 70 degrees Fahrenheit.

We cannot, however, say with certainty what the ratio of one attribute is in comparison to another. For example, it would not make sense to say that a person with an IQ score of 140 has twice the IQ of a person with a score of 70. However, the difference between IQ scores of 80 and 100 is the same as the difference between IQ scores of 120 and 140.
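A short worked example with temperature may make the distinction concrete. The sketch below uses the standard Celsius-to-Fahrenheit conversion; the specific temperatures are arbitrary values chosen for illustration. Equal differences stay equal after changing units, but the apparent ratio changes, which is why ratios are not meaningful at the interval level.

```python
def c_to_f(celsius):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# Arbitrary example temperatures in Celsius.
low_c, mid_c, high_c = 10.0, 20.0, 30.0
low_f, mid_f, high_f = c_to_f(low_c), c_to_f(mid_c), c_to_f(high_c)

# Differences: equal steps in Celsius remain equal steps in Fahrenheit.
step_1_f = mid_f - low_f
step_2_f = high_f - mid_f
print("Fahrenheit steps:", step_1_f, step_2_f)

# Ratios: 20 C looks like "twice" 10 C, but the same two temperatures
# expressed in Fahrenheit give a different ratio, so neither is meaningful.
ratio_c = mid_c / low_c
ratio_f = mid_f / low_f
print("Ratio in Celsius:", ratio_c)
print("Ratio in Fahrenheit:", ratio_f)
```

The zero point on each scale is a convention rather than an absence of temperature, so the ratio depends entirely on which scale you happen to use.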

You may find research in which ordinal-level variables are treated as if they are interval measures for analysis. This can be a problem because as we’ve noted, there is no way to know whether the difference between a 3 and a 4 on a rating scale is the same as the difference between a 2 and a 3. Those numbers are just placeholders for categories.

Ratio level of measurement

The final level of measurement is the ratio level of measurement. Variables measured at the ratio level of measurement are continuous variables, just like with the interval scale. They, too, have equal intervals between each point. However, the ratio level of measurement has a true zero, which means that a value of zero on a ratio scale means that the variable you’re measuring is absent. For example, if you have no siblings, a value of 0 indicates this (unlike a temperature of 0 degrees Celsius or Fahrenheit, which does not mean there is no temperature). What is the advantage of having a “true zero”? It allows you to calculate ratios. For example, if you have three siblings, you can say that this is half the number of siblings as a person with six.

At the ratio level, the attribute values are mutually exclusive and exhaustive, can be rank-ordered, the distance between attributes is equal, and attributes have a true zero point. Thus, with these variables, we can say what the ratio of one attribute is in comparison to another. Examples of ratio-level variables include age and years of education. We know that a person who is 12 years old is twice as old as someone who is 6 years old. Height measured in meters and weight measured in kilograms are also good examples. So are counts of discrete objects or events such as the number of siblings one has or the number of questions a student answers correctly on an exam. Measuring interval and ratio data is relatively easy, as people either select or input a number for their answer. If you ask a person how many eggs they purchased last week, they can simply tell you they purchased a dozen eggs at the store, two at breakfast on Wednesday, or none at all.

The differences between each level of measurement are visualized in Table 10.2.

Levels of measurement = levels of specificity

We have spent time learning how to determine a variable’s level of measurement. Now what? How could we use this information to help us as we measure concepts and develop measurement tools? First, the types of statistical tests that we are able to use depend on level of measurement. With nominal-level measurement, for example, the only available measure of central tendency is the mode. With ordinal-level measurement, the median or mode can be used. Interval- and ratio-level measurement are typically considered the most desirable because they permit any indicators of central tendency to be computed (i.e., mean, median, or mode). Also, ratio-level measurement is the only level that allows meaningful statements about ratios of scores. The higher the level of measurement, the more options we have for the statistical tests we are able to conduct. This knowledge may help us decide what kind of data we need to gather, and how.
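The link between level of measurement and the available measures of central tendency can be sketched as a small lookup. This is an illustrative sketch only: the `PERMITTED` table restates the paragraph above, while the function name, the integer rank coding for ordinal data, and the sample values are assumptions made for the example.

```python
from statistics import mean, median, median_low, multimode

# Measures of central tendency permitted at each level, as described above.
PERMITTED = {
    "nominal": ("mode",),
    "ordinal": ("median", "mode"),
    "interval": ("mean", "median", "mode"),
    "ratio": ("mean", "median", "mode"),
}


def central_tendency(values, level):
    """Return only the summaries that are meaningful at the given level.

    Ordinal values are assumed to be integer rank codes (a hypothetical
    coding such as 1 = "not at all satisfied" up to 4 = "highly satisfied").
    """
    allowed = PERMITTED[level]
    summary = {"mode": multimode(values)}
    if "median" in allowed:
        # For ordinal data, median_low returns an actual category code
        # rather than averaging two neighboring codes.
        if level == "ordinal":
            summary["median"] = median_low(values)
        else:
            summary["median"] = median(values)
    if "mean" in allowed:
        summary["mean"] = mean(values)
    return summary


print(central_tendency(["brown", "black", "brown"], "nominal"))
print(central_tendency([1, 2, 2, 3], "ordinal"))
print(central_tendency([0, 1, 2, 5], "ratio"))
```

Using `median_low` for ordinal data is a design choice of this sketch: averaging two category codes would produce a value such as 2.5 that corresponds to no attribute.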

That said, we have to balance this knowledge with the understanding that sometimes, collecting data at a higher level of measurement could negatively impact our studies. For instance, sometimes providing answers in ranges may make prospective participants feel more comfortable responding to sensitive items. Imagine that you were interested in collecting information on topics such as income, number of sexual partners, number of times someone used illicit drugs, etc. You would have to think about the sensitivity of these items and determine if it would make more sense to collect some data at a lower level of measurement (e.g., nominal: asking if they are sexually active or not) versus a higher level such as ratio (e.g., their total number of sexual partners).

Finally, sometimes when analyzing data, researchers find a need to change a variable’s level of measurement. For example, a few years ago, a student was interested in studying the association between mental health and life satisfaction. This student used a variety of measures. One item asked about the number of mental health symptoms, reported as the actual number. When analyzing data, the student examined the mental health symptom variable and noticed that she had two groups: those with no symptoms or one symptom and those with many symptoms. Instead of using the ratio-level data (actual number of mental health symptoms), she collapsed her cases into two categories, few and many. She decided to use this variable in her analyses. It is important to note that you can move data from a higher level of measurement to a lower one; however, you cannot move data from a lower level to a higher one.
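The student's recoding can be sketched as follows. The function name, the cutoff parameter, and the symptom counts are hypothetical and chosen to match the "none or one" versus "many" split described above.

```python
def collapse_symptoms(count, cutoff=1):
    """Recode a ratio-level symptom count into two categories.

    Counts at or below the cutoff become "few"; anything above it becomes
    "many". The default cutoff of 1 mirrors the example in the text.
    """
    if count < 0:
        raise ValueError("a symptom count cannot be negative")
    return "few" if count <= cutoff else "many"


# Hypothetical symptom counts, invented for illustration.
symptom_counts = [0, 1, 7, 0, 9, 1, 12]
categories = [collapse_symptoms(n) for n in symptom_counts]
print(categories)
```

The recoding only works in one direction. Once a participant is stored as "many", nothing in the data distinguishes 7 symptoms from 12, which is why a lower level of measurement cannot be converted back to a higher one.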

- Check that the variables in your research question can vary…and that they are not constants or one of many potential attributes of a variable.

- Think about the attributes your variables have. Are they categorical or continuous? What level of measurement seems most appropriate?

Step 2 of Operationalization: Specify measures for each variable

Let’s pick a social work research question and walk through the process of operationalizing variables to see how specific we need to get. Suppose we hypothesize that residents of a psychiatric unit who are more depressed are less likely to be satisfied with care. Remember, this would be an inverse relationship—as levels of depression increase, satisfaction decreases. In this hypothesis, level of depression is the independent (or predictor) variable and satisfaction with care is the dependent (or outcome) variable.

How would you measure these key variables? What indicators would you look for? Some might say that levels of depression could be measured by observing a participant’s body language. They may also say that a depressed person will often express feelings of sadness or hopelessness. In addition, a satisfied person might be happy around service providers and often express gratitude. While these factors may indicate that the variables are present, they lack coherence. Unfortunately, what this “measure” is actually saying is that “I know depression and satisfaction when I see them.” In a research study, you need more precision for how you plan to measure your variables. Individual judgments are subjective, based on idiosyncratic experiences with depression and satisfaction. They couldn’t be replicated by another researcher. They also can’t be done consistently for a large group of people. Operationalization requires that you come up with a specific and rigorous measure for seeing who is depressed or satisfied.

Finding a good measure for your variable depends on the kind of variable it is. Measuring variables that are directly observable might involve things like taking someone’s blood pressure or marking attendance or participation in a group. To measure an indirectly observable variable like age, you would probably put a question on a survey that asked, “How old are you?” Measuring a variable like income might first require some more conceptualization, though. Are you interested in this person’s individual income or the income of their family unit? This might matter if your participant does not work or is dependent on other family members for income. Do you count income from social welfare programs? Are you interested in their income per month or per year? Even though indirect observables are relatively easy to measure, the measures you use must be clear in what they are asking, and operationalization is all about figuring out the specifics of how to measure what you want to know. For more complicated variables such as constructs, you will need compound measures that use multiple indicators to measure a single variable.

How you plan to collect your data also influences how you will measure your variables. For social work researchers using secondary data like client records as a data source, you are limited by what information is in the data sources you can access. If a partnering organization uses a given measurement for a mental health outcome, that is the one you will use in your study. Similarly, if you plan to study how long a client was housed after an intervention using client visit records, you are limited by how their caseworker recorded their housing status in the chart. One of the benefits of collecting your own data is being able to select the measures you feel best exemplify your understanding of the topic.

Composite measures

Depending on your research design, your measure may be something you put on a survey or pre/post-test that you give to your participants. For a variable like age or income, one well-worded item may suffice. Unfortunately, most variables in the social world are not so simple. Depression and satisfaction are multidimensional concepts. Relying on an indicator that is a single item on a questionnaire, such as a question that asks “Yes or no, are you depressed?”, does not capture the complexity of these constructs.

For more complex variables, researchers use scales and indices (sometimes called indexes) because they use multiple items to develop a composite (or total) score as a measure for a variable. As such, they are called composite measures. Composite measures provide a much greater understanding of concepts than a single item could.

It can be complex to delineate between multidimensional and unidimensional concepts. If satisfaction were a key variable in our study, we would need a theoretical framework and conceptual definition for it. Perhaps we come to view satisfaction as having two dimensions: a mental one and an emotional one. That means we would need to include indicators that measured both mental and emotional satisfaction as separate dimensions of satisfaction. However, if satisfaction is not a key variable in your theoretical framework, it may make sense to operationalize it as a unidimensional concept.

Although we won’t delve too deeply into the process of scale development, we will cover some important topics for you to understand how scales and indices developed by other researchers can be used in your project.

Measuring abstract concepts in concrete terms remains one of the most difficult tasks in empirical social science research.

A scale , XXXXXXXXXXXX .

The scales we discuss in this section are different from the “rating scales” discussed in the previous section. A rating scale is used to capture the respondents’ reactions to a given item on a questionnaire. For example, an ordinally scaled item captures a value ranging from “strongly disagree” to “strongly agree.” Attaching a rating scale to a statement or instrument is not scaling. Rather, scaling is the formal process of developing scale items, before rating scales can be attached to those items.

If creating your own scale sounds painful, don’t worry! For most constructs, you would likely be duplicating work that has already been done by other researchers. Developing and evaluating scales is the work of a branch of science called psychometrics. You do not need to create a scale for depression because scales such as the Patient Health Questionnaire (PHQ-9) [1], the Center for Epidemiologic Studies Depression Scale (CES-D) [2], and Beck’s Depression Inventory (BDI) [3] have been developed and refined over decades to measure variables like depression. Similarly, scales such as the Patient Satisfaction Questionnaire (PSQ-18) have been developed to measure satisfaction with medical care. As we will discuss in the next section, these scales have been shown to be reliable and valid. While you could create a new scale to measure depression or satisfaction, a rigorous study would pilot test and refine that new scale over time to make sure it measures the concept accurately and consistently before using it in other research. This high level of rigor is often unachievable in smaller research projects because of the cost and time involved in pilot testing and validating, so using existing scales is recommended.

Unfortunately, there is no good one-stop shop for psychometric scales. The Mental Measurements Yearbook provides a list of measures for social science variables, though it is incomplete and may not contain the full documentation for instruments in its database. It is available as a searchable database through many university libraries.

Perhaps an even better option is to look at the methods section of the articles in your literature review. The methods section of each article will detail how the researchers measured their variables, and often the results section is instructive for understanding more about measures. In a quantitative study, researchers may have used a scale to measure key variables and will provide a brief description of that scale, its name, and maybe a few example questions. If you need more information, look at the results section and tables discussing the scale to get a better idea of how the measure works.

Looking beyond the articles in your literature review, searching Google Scholar or other databases using queries like “depression scale” or “satisfaction scale” should also provide some relevant results. For example, searching for documentation for the Rosenberg Self-Esteem Scale, I found this report about useful measures for acceptance and commitment therapy which details measurements for mental health outcomes. If you find the name of the scale somewhere but cannot find the documentation (i.e., all items, response choices, and how to interpret the scale), a general web search with the name of the scale and “.pdf” may bring you to what you need. Or, to get professional help with finding information, ask a librarian!

Unfortunately, these approaches do not guarantee that you will be able to view the scale itself or get information on how it is interpreted. Many scales cost money to use and may require training to properly administer. You may also find scales that are related to your variable but would need to be slightly modified to match your study’s needs. You could adapt a scale to fit your study; however, changing even small parts of a scale can influence its accuracy and consistency. Pilot testing is always recommended for adapted scales, and researchers seeking to draw valid conclusions and publish their results should take this additional step.

Types of scales

Likert Scales

Although Likert scale is a term colloquially used to refer to almost any rating scale (e.g., a 0-to-10 life satisfaction scale), it has a much more precise meaning. In the 1930s, researcher Rensis Likert (pronounced LICK-ert) created a new approach for measuring people’s attitudes (Likert, 1932). [4] It involves presenting people with several statements, including both favorable and unfavorable statements, about some person, group, or idea. Respondents then express their approval or disapproval of each statement on a 5-point rating scale: Strongly Approve, Approve, Undecided, Disapprove, Strongly Disapprove. Numbers are assigned to each response and then summed across all items to produce a score representing the attitude toward the person, group, or idea. For items that are phrased in the opposite direction (e.g., negatively worded statements instead of positively worded statements), reverse coding is used so that the numerical scoring of those statements also runs in the opposite direction. This type of scale came to be called a Likert scale, as indicated in Table 10.3 below. Scales that use similar logic but do not have these exact characteristics are referred to as “Likert-type scales.”
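
To make the scoring arithmetic concrete, here is a minimal sketch in Python of summing Likert items with reverse coding. The numeric coding (1 to 5), the item names q1 to q3, and the function name likert_score are illustrative assumptions, not part of Likert's original procedure or of any published scale.

```python
# Hypothetical numeric coding for the 5-point rating scale (an assumption).
RESPONSE_VALUES = {
    "Strongly Disapprove": 1,
    "Disapprove": 2,
    "Undecided": 3,
    "Approve": 4,
    "Strongly Approve": 5,
}


def likert_score(responses, reverse_items):
    """Sum item scores, reverse coding the items named in reverse_items."""
    low = min(RESPONSE_VALUES.values())
    high = max(RESPONSE_VALUES.values())
    total = 0
    for item, answer in responses.items():
        value = RESPONSE_VALUES[answer]
        if item in reverse_items:
            # Reverse coding flips the scale: 1 <-> 5, 2 <-> 4, 3 stays 3.
            value = (low + high) - value
        total += value
    return total


# One hypothetical respondent answering three made-up items.
responses = {
    "q1": "Strongly Approve",  # favorable statement, scored 5
    "q2": "Disapprove",        # unfavorable statement, 2 is reverse-coded to 4
    "q3": "Undecided",         # scored 3
}
total = likert_score(responses, reverse_items={"q2"})
print(total)  # 5 + 4 + 3 = 12
```

Reverse coding is done before summing so that a higher total always points in the same direction, here a more favorable attitude.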

Semantic Differential Scales

Semantic differential scales are composite scales in which respondents are asked to indicate their opinions or feelings toward a single statement using different pairs of adjectives framed as polar opposites. Whereas in a Likert scale a participant is asked how much they approve or disapprove of a statement, in a semantic differential scale the participant is asked to indicate how they feel about a specific item using several pairs of opposites. This makes the semantic differential scale an excellent technique for measuring people’s feelings toward objects, events, or behaviors. Table 10.4 provides an example of a semantic differential scale that was created to assess participants’ feelings about this textbook.

Guttman Scales

A specialized scale for measuring unidimensional concepts was designed by Louis Guttman. A Guttman scale (also called a cumulative scale) uses a series of items arranged in increasing order of intensity (least intense to most intense) of the concept. This type of scale allows us to understand the intensity of beliefs or feelings. Each item in the Guttman scale below has a weight (not shown on the tool) that varies with the intensity of that item, and the weighted combination of the responses is used as an aggregate measure of an observation.

Table XX presents an example of a Guttman Scale. Notice how the items move from lower intensity to higher intensity. A researcher reviews the yes answers and creates a score for each participant.

Example Guttman Scale Items

- I often felt the material was not engaging Yes/No

- I was often thinking about other things in class Yes/No

- I was often working on other tasks during class Yes/No

- I will work to abolish research from the curriculum Yes/No
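
As a rough illustration of how the yes answers above might be turned into a score, the Python sketch below counts the "yes" responses and checks whether they follow the cumulative pattern a Guttman scale expects. The item weights mentioned earlier are not given in the text, so this sketch uses an unweighted count; the function names and the sample answers are hypothetical.

```python
def guttman_score(answers):
    """Count the "yes" answers; answers are ordered least to most intense."""
    return sum(1 for answer in answers if answer)


def follows_cumulative_pattern(answers):
    """Return True when no "yes" appears after a "no".

    On a cumulative scale, agreeing with a more intense item is expected to
    imply agreement with every less intense item before it.
    """
    seen_no = False
    for answer in answers:
        if not answer:
            seen_no = True
        elif seen_no:
            return False
    return True


# Hypothetical participants answering the four items above (True = Yes).
consistent = [True, True, False, False]
inconsistent = [True, False, True, False]

print(guttman_score(consistent), follows_cumulative_pattern(consistent))
print(guttman_score(inconsistent), follows_cumulative_pattern(inconsistent))
```

Both participants answer yes twice, but only the first pattern is cumulative, which is why a researcher would look at the pattern of answers and not only the count.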

An index is a composite score derived from aggregating measures of multiple indicators. At its most basic, an index sums up indicators. A well-known example of an index is the consumer price index (CPI), which is computed every month by the Bureau of Labor Statistics of the U.S. Department of Labor. The CPI is a measure of how much consumers have to pay for goods and services (in general) and is divided into eight major categories (food and beverages, housing, apparel, transportation, healthcare, recreation, education and communication, and “other goods and services”), which are further subdivided into more than 200 smaller items. Each month, government employees call all over the country to get the current prices of more than 80,000 items. Using a complicated weighting scheme that takes into account the location and probability of purchase for each item, analysts then combine these prices into an overall index score using a series of formulas and rules.

Another example of an index is the Duncan Socioeconomic Index (SEI). This index is used to quantify a person’s socioeconomic status (SES) and is a combination of three concepts: income, education, and occupation. Income is measured in dollars, education in years or degrees achieved, and occupation is classified into categories or levels by status. These very different measures are combined to create an overall SES index score. However, SES index measurement has generated a lot of controversy and disagreement among researchers.

The process of creating an index is similar to that of a scale. First, conceptualize the index and its constituent components. Though this appears simple, there may be a lot of disagreement on what components (concepts/constructs) should be included or excluded from an index. For instance, in the SES index, isn’t income correlated with education and occupation? And if so, should we include one component only or all three components? Reviewing the literature, using theories, and/or interviewing experts or key stakeholders may help resolve this issue. Second, operationalize and measure each component. For instance, how will you categorize occupations, particularly since some occupations may have changed with time (e.g., there were no Web developers before the Internet)? Third, create a rule or formula for calculating the index score. Again, this process may involve a lot of subjectivity, so validating the index score using existing or new data is important.
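
One possible rule for combining components measured in different units is to standardize each component and then take a weighted sum. The Python sketch below shows that idea only; it is not the Duncan SEI formula or any published index, and the component names, the three made-up respondents, and the equal weights are all assumptions for illustration.

```python
from statistics import mean, pstdev


def z_scores(values):
    """Standardize values to mean 0 and standard deviation 1."""
    center = mean(values)
    spread = pstdev(values)  # assumes the values are not all identical
    return [(value - center) / spread for value in values]


def index_scores(components, weights):
    """Combine standardized components into one weighted score per person."""
    standardized = {name: z_scores(vals) for name, vals in components.items()}
    n_people = len(next(iter(components.values())))
    return [
        sum(weights[name] * standardized[name][i] for name in components)
        for i in range(n_people)
    ]


# Hypothetical data for three respondents (not real survey results).
components = {
    "income_dollars": [20000, 40000, 60000],
    "education_years": [10, 12, 14],
    "occupation_level": [1, 2, 3],
}
# Equal weights are an arbitrary choice made for this sketch.
weights = {name: 1 / 3 for name in components}

scores = index_scores(components, weights)
print(scores)
```

Standardizing first keeps income, which is measured in tens of thousands of dollars, from dominating components measured on much smaller numeric ranges. The choice of weights is exactly the kind of subjective decision that should be justified and validated.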

Differences between scales and indices

Though indices and scales yield a single numerical score or value representing a concept of interest, they are different in many ways. First, indices often comprise components that are very different from each other (e.g., income, education, and occupation in the SES index) and are measured in different ways. Conversely, scales typically involve a set of similar items that use the same rating scale (such as a five-point Likert scale about customer satisfaction).

Second, indices often combine objectively measurable values such as prices or income, while scales are designed to assess subjective or judgmental constructs such as attitude, prejudice, or self-esteem. Some argue that the sophistication of the scaling methodology makes scales different from indices, while others suggest that indexing methodology can be equally sophisticated. Nevertheless, indices and scales are both essential tools in social science research.

Scales and indices seem like clean, convenient ways to measure different phenomena in social science, but as with much of research, we have to be mindful of the assumptions and biases underneath. What if the developers of a scale or an index were influenced by unconscious biases? Or what if it was validated using only White women as research participants? Is it going to be useful for other groups? It very well might be, but when using a scale or index on a group for whom it hasn’t been tested, it will be very important to evaluate the validity and reliability of the instrument, which we address in the rest of the chapter.

Finally, it’s important to note that while scales and indices are often made up of items measured at the nominal or ordinal level, the scores on the composite measure are typically treated as continuous variables.

Looking back to your work from the previous section, are your variables unidimensional or multidimensional?

- Describe the specific measures you will use (actual questions and response options you will use with participants) for each variable in your research question.

- If you are using a measure developed by another researcher but do not have all of the questions, response options, and instructions needed to implement it, put it on your to-do list to get them.