
26 Hypothesis and Variables – Meaning, Classification and Uses

C. Parvathi

INTRODUCTION

In this module we look at the meaning of a hypothesis, the steps involved in writing one, its characteristics and types, and the errors that can arise in testing it. Testing a hypothesis also requires identifying the variables involved, which helps research scholars justify the area of research and the design of the work undertaken by the investigator.

The hypothesis is usually considered the principal instrument in research. Its main function is to suggest new experiments and observations. In fact, many experiments are carried out with the deliberate objective of testing hypotheses. Decision-makers often face situations in which they test hypotheses on the basis of available information and then take decisions on the basis of such testing. In social science, where direct knowledge of population parameter(s) is rare, hypothesis testing is an often-used strategy for deciding whether sample data offer enough support for a hypothesis that a generalization can be made. Thus hypothesis testing enables us to make probability statements about population parameter(s). A hypothesis may not be proved absolutely, but in practice it is accepted if it has withstood critical testing. Before we explain how hypotheses are tested through the different tests meant for this purpose, it is appropriate to explain clearly the meaning of a hypothesis and the related concepts, for a better understanding of hypothesis testing techniques.

WHAT IS HYPOTHESIS?

Generally, when one talks about a hypothesis, one simply means a mere assumption or supposition to be proved or disproved. Thus a hypothesis may be defined as a proposition, or a set of propositions, set forth as an explanation for the occurrence of some specified group of phenomena, either asserted merely as a provisional conjecture to guide some investigation or accepted as highly probable in the light of established facts. A research hypothesis is a predictive statement, capable of being tested by scientific methods, that relates an independent variable to some dependent variable. For example, consider statements like the following:

“Students who receive counseling will show a greater increase in creativity than students not receiving counseling” or “automobile A is performing better than automobile B.”

Hypotheses such as these are capable of being objectively verified and tested. Each is a proposition which can be put to a test to determine its validity.

Here, we are examining the truth or otherwise of a hypothesis (a guess, claim or assumption) about some feature of one or more populations on the basis of samples drawn from those populations. Testing plays a major role in statistical investigation. Generally, a statistical hypothesis is a statement, conclusion or assumption about certain characteristics of a population, which is drawn on a logical basis and can be tested against sample evidence. Testing a hypothesis means deciding, for a valid reason, either to accept or to reject it. The test of significance enables a researcher to make that decision for a statistical hypothesis. For example, a manufacturing company producing bolts of different sizes claims that not more than 2 per cent of its bolts are defective. To verify whether the claim is true, we have to check it on the basis of a sample of bolts. Similarly, a company may want to verify whether advertising through print media is less effective than advertising through audio-visual media. There is a wide range of areas in business where we come across situations that call for accepting or rejecting a hypothesis. So it is important to know the logical basis of such decisions, which is provided by hypothesis testing, the objective of this chapter.

It is usual procedure to draw a sample from the population and compute from it an estimate of a population parameter, which is called a sample statistic. Estimates obtained in this way may or may not exactly match the true values, so taking the sample statistic as the estimate of the population parameter involves risk. It is therefore worthwhile to find out whether the difference between the estimated value of the parameter and its true value is significant or could have arisen due to fluctuations of sampling. For this reason, a hypothesis is formulated and then tested for validity.

Meaning of Hypothesis:

Hypothesis simply means a mere assumption to be proved or disproved. But for a researcher, a hypothesis is a formal question that he or she intends to resolve. It is a testable statement; hypotheses are generally derived either from theory or from direct observation of data.

Types of Hypothesis

Null hypothesis

Null hypothesis is the statement about the parameters, which is usually a hypothesis of no difference and is denoted by Ho.

Alternative Hypothesis

Any hypothesis, which is complementary to the null hypothesis, is called an alternative hypothesis, usually denoted by H1.

BASIC CONCEPTS ON TESTING OF HYPOTHESES

a) NULL HYPOTHESIS

In the context of statistical analysis, we often talk about null hypothesis and alternative hypothesis. If we are to compare method A with method B about its superiority and if we proceed on the assumption that both methods are equally good, then this assumption is termed as the null hypothesis. The null hypothesis is generally symbolized as Ho and the alternative hypothesis as Ha.

In the choice of null hypothesis, the following considerations are usually kept in view:

Alternative hypothesis is usually the one which one wishes to prove and the null hypothesis is the one which one wishes to disprove. Thus, a null hypothesis represents the hypothesis we are trying to reject, and the alternative hypothesis represents all other possibilities.

If the rejection of a certain hypothesis when it is actually true involves great risk, it is taken as the null hypothesis.

The null hypothesis should always be a specific hypothesis, i.e., it should not state that a parameter is about or approximately a certain value.

b) THE LEVEL OF SIGNIFICANCE

This is a very important concept in the context of hypothesis testing. It is always some percentage (usually 5%) which should be chosen with great care. In case we take the significance level at 5 percent, then this implies that Ho will be rejected when the sampling result (i.e., observed evidence) has a less than 0.05 probability of occurring if Ho is true. In other words, the 5 percent level of significance means that researcher is willing to take as much as 5 percent risk of rejecting the null hypothesis when it (Ho) happens to be true.
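The rule described above can be sketched in a few lines of Python. This is only an illustration, assuming the test statistic follows a standard normal distribution when Ho is true; the function names `two_sided_p_value` and `reject_null` are hypothetical and not part of the original text.

```python
from statistics import NormalDist


def two_sided_p_value(z_observed: float) -> float:
    """Probability, if Ho is true, of a statistic at least this far from zero.

    Assumes the statistic is standard normal under Ho (an assumption of this sketch).
    """
    return 2 * (1 - NormalDist().cdf(abs(z_observed)))


def reject_null(z_observed: float, alpha: float = 0.05) -> bool:
    """Reject Ho when the observed evidence has probability below alpha under Ho."""
    return two_sided_p_value(z_observed) < alpha
```

With this sketch, the same observed value can lead to rejection at the 5 percent level but not at the stricter 1 percent level, which is why the level has to be chosen before looking at the data.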

c) DECISION RULE OF TEST OF HYPOTHESIS

Given a hypothesis Ho and an alternative hypothesis Ha, we make a rule which is known as decision rule according to which we accept Ho (i.e., reject Ha) or reject Ho (i.e., accept Ha).

d) TYPE I ERROR AND TYPE II ERRORS

In the context of testing of hypotheses, there are basically two types of errors. We may reject Ho when Ho is true and we may accept Ho when Ho is not true. The former is known as Type I error and the latter as Type II error. In other words, Type I error means rejection of a hypothesis which should have been accepted and Type II error means accepting a hypothesis which should have been rejected. The probability of a Type I error is denoted by α (alpha), known as the α error and also called the level of significance of the test; the probability of a Type II error is denoted by β (beta), known as the β error.

e) TWO-TAILED AND ONE-TAILED TESTS

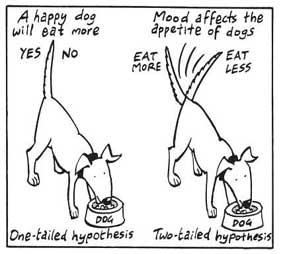

In the context of hypothesis testing, these two terms are quite important and must be clearly understood. A two-tailed test rejects the null hypothesis if, say, the sample mean is significantly higher or lower than the hypothesized value of the mean of the population. Such a test is appropriate when the null hypothesis is some specified value and the alternative hypothesis is a value not equal to the specified value of the null hypothesis. A one-tailed test, by contrast, rejects the null hypothesis only when the sample mean departs significantly from the hypothesized value in one specified direction (either higher or lower).
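The difference between the two kinds of test shows up in where the rejection region is placed. The sketch below assumes a standard normal test statistic and, for the one-tailed case, an upper-tailed alternative; `critical_values` is a hypothetical helper name.

```python
from statistics import NormalDist


def critical_values(alpha: float, two_tailed: bool = True) -> tuple:
    """Return the cut-off value(s) of a standard normal test statistic.

    Two-tailed: alpha is split equally between both tails.
    One-tailed (upper tail assumed here): all of alpha sits in one tail.
    """
    std_normal = NormalDist()
    if two_tailed:
        z = std_normal.inv_cdf(1 - alpha / 2)
        return (-z, z)
    return (std_normal.inv_cdf(1 - alpha),)
```

At the 5 percent level the two-tailed cut-offs are about ±1.96, while the one-tailed cut-off is about 1.645, so a one-tailed test rejects more readily in the direction it specifies.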

ERRORS IN TESTING OF HYPOTHESIS

In the procedure of testing of hypothesis, a decision is taken about the acceptance or rejection of null hypothesis. The possible decisions can be written in a tabular form.

There is always some possibility of committing the following two types of errors in taking such a decision:

Type I Error: Reject the null hypothesis Ho when it is true.

Type II Error: Accept the null hypothesis Ho when it is false.

Now, we write α = Probability of committing Type I error

And β = Probability of committing Type II error

The complement of the Type II error probability is called the power of the test and is given by (1 - β), and the size of the Type I error (α) is also called the level of significance. The level of significance is the amount of risk that can be readily tolerated in making a decision about Ho. Usually the value of α is chosen depending upon the desired degree of precision, and it typically varies between 0.05 (for moderate precision) and 0.01 (for high precision).
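To make α, β and power concrete, the following sketch computes β for a simple case that is an assumption of this example rather than something stated in the text: an upper-tailed test of a mean with known population standard deviation, where the true mean `mu1` is larger than the hypothesized mean `mu0`. The function names are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def type_ii_error(mu0: float, mu1: float, sigma: float, n: int,
                  alpha: float = 0.05) -> float:
    """Beta for an upper-tailed z-test of Ho: mean = mu0 when the true mean is mu1."""
    se = sigma / sqrt(n)  # standard error of the sample mean
    # Sample means above this cut-off lead to rejection of Ho.
    cutoff = mu0 + NormalDist().inv_cdf(1 - alpha) * se
    # Beta: chance the sample mean stays below the cut-off although mu1 is true.
    return NormalDist(mu1, se).cdf(cutoff)


def power(mu0: float, mu1: float, sigma: float, n: int,
          alpha: float = 0.05) -> float:
    """Power of the test, i.e. 1 - beta."""
    return 1 - type_ii_error(mu0, mu1, sigma, n, alpha)
```

Under these assumptions, lowering α raises β for a fixed sample size, while enlarging the sample lowers β, which is the trade-off behind the choice of significance level.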

PROCEDURE FOR HYPOTHESIS TESTING

In hypothesis testing the main question is: whether to accept the null hypothesis or not. Procedure for hypothesis testing refers to all those steps that we undertake for making a choice between the two actions i.e., rejection and acceptance of a null hypothesis.

The various steps involved in hypothesis testing are stated below:

(i) Selection of Variables

DEPENDENT VARIABLE

The variable that depends on other factors is called the dependent variable. Such variables are expected to change as a result of an experimental manipulation of the independent variable or variables. The outcome variable measured in each subject, which may be influenced by manipulation of the independent variable, is termed the dependent variable.

INDEPENDENT VARIABLE

The variable that is stable and unaffected by other variables is called the independent variable. It refers to the condition of an experiment that is systematically manipulated by the investigator. In experimental research, an investigator manipulates one variable and measures the effect of that manipulation on another variable. For example, take a study in which the investigators want to determine how often an exercise must be done to increase strength. Here the frequency of exercise is the independent variable and strength is the dependent variable.

Check your progress

Fill in the blanks

- Hypothesis is usually considered as the principal instrument of _________________

- The null hypothesis is generally symbolized as _________

- The variable that depends on other factors is called the _____________ variable.

IDENTIFYING THE KEY VARIABLES FOR ANALYSIS

- The key variables provide focus when writing the Introduction section

- The key variables are the major terms to be used in methodology.

- The key variables are the terms to be operationally defined if an Operational Definition of Terms section is necessary.

- The key variables must be directly measured or manipulated for the research study to be valid

(ii) Making a formal statement

(iii) Selecting a significance level

(iv) Deciding the distribution to use

(v) Selecting a random sample and computing an appropriate value

(vi) Calculation of the probability; and

(vii) Comparing the probability
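The steps listed above can be walked through for the bolt manufacturer's claim mentioned earlier (not more than 2 per cent defective). The sketch below is an illustration only: the sample figures in the usage note are invented, the normal approximation to the binomial is an assumption, and `proportion_z_test` is a hypothetical name.

```python
from math import sqrt
from statistics import NormalDist


def proportion_z_test(defective: int, n: int, p0: float, alpha: float = 0.05):
    """Upper-tailed test of Ho: p <= p0 against Ha: p > p0.

    Uses the normal approximation to the binomial (assumed adequate for large n).
    Returns (z statistic, p-value, whether Ho is rejected).
    """
    p_hat = defective / n                  # step (v): sample statistic
    se = sqrt(p0 * (1 - p0) / n)           # standard error under Ho
    z = (p_hat - p0) / se
    p_value = 1 - NormalDist().cdf(z)      # step (vi): probability under Ho
    return z, p_value, p_value < alpha     # step (vii): compare with alpha
```

For instance, with a hypothetical sample of 500 bolts of which 18 are defective, `proportion_z_test(18, 500, 0.02)` gives a z value of about 2.56, so the claim would be rejected at the 5 percent level.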

FLOW DIAGRAM FOR HYPOTHESIS TESTING

TEST OF HYPOTHESIS

Statisticians have developed several tests of hypotheses (also known as the tests of significance) for the purpose of testing of hypotheses which can be classified as: (a) Parametric tests or standard test of hypothesis and (b) Non-parametric tests or distribution-free test of hypotheses.

Parametric tests usually assume certain properties of the parent population from which we draw samples. Assumptions such as that the observations come from a normal population, that the sample size is large, and assumptions about population parameters like the mean and variance must hold good before parametric tests can be used.

Non-parametric tests assume only nominal or ordinal data, whereas parametric tests require measurement equivalent to at least an interval scale.

IMPORTANT PARAMETRIC TESTS

The important parametric tests are:

(i) Z-test

Z-test is based on the normal probability distribution and is used for judging the significance of several statistical measures, particularly the mean.
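As a rough illustration, a two-tailed Z-test for a single mean can be written as follows. The sketch assumes the population standard deviation `sigma` is known; the function name and the data in the usage note are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, fmean


def z_test_mean(sample, mu0: float, sigma: float):
    """Two-tailed Z-test of Ho: population mean = mu0, with sigma assumed known.

    Returns (z statistic, p-value).
    """
    z = (fmean(sample) - mu0) / (sigma / sqrt(len(sample)))
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value
```

For the invented sample `[52, 48, 55, 51, 49, 53, 50, 54, 47, 51]` with `mu0 = 50` and `sigma = 2`, the statistic is about 1.58, which is inside ±1.96, so Ho would not be rejected at the 5 percent level.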

(ii) t-test

The t-test is based on the t-distribution and is considered an appropriate test for judging the significance of a sample mean, or for judging the significance of the difference between the means of two samples, in the case of small sample(s) when the population variance is not known (in which case we use the variance of the sample as an estimate of the population variance).
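A one-sample version of the statistic can be sketched as below. Python's standard library has no t-distribution, so this sketch only computes the statistic and its degrees of freedom; the critical value it is compared with is assumed to be read from a standard t table. The function name and data are hypothetical.

```python
from math import sqrt
from statistics import fmean, stdev


def one_sample_t(sample, mu0: float):
    """t statistic for Ho: population mean = mu0, with variance estimated from the sample.

    Returns (t statistic, degrees of freedom).
    """
    n = len(sample)
    # stdev() uses the n - 1 divisor, i.e. the sample estimate of the population value.
    t = (fmean(sample) - mu0) / (stdev(sample) / sqrt(n))
    return t, n - 1
```

For the invented sample `[52, 48, 55, 51, 49, 53, 50, 54, 47, 51]` and `mu0 = 50`, the statistic is about 1.22 with 9 degrees of freedom. The tabled two-tailed 5 percent critical value for 9 degrees of freedom is 2.262, so Ho would not be rejected.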

(iii) χ²-test

The χ²-test is based on the chi-square distribution and, as a parametric test, is used for comparing a sample variance to a theoretical population variance. It is also used as a test of goodness of fit and as a test of independence, in which case it is a non-parametric test.
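Both uses of the statistic can be sketched briefly. The function names and the example counts are hypothetical, and the critical value mentioned in the usage note is assumed to come from a standard chi-square table.

```python
from statistics import variance


def chi_square_goodness_of_fit(observed, expected) -> float:
    """Goodness-of-fit statistic: sum of (observed - expected)^2 / expected."""
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def chi_square_variance(sample, sigma0_squared: float) -> float:
    """Statistic for comparing a sample variance with a hypothesized population variance.

    Equals (n - 1) * s^2 / sigma0^2 and has n - 1 degrees of freedom.
    """
    return (len(sample) - 1) * variance(sample) / sigma0_squared
```

As an invented example, a die rolled 60 times with face counts `[8, 12, 9, 11, 6, 14]` against an expected 10 per face gives a statistic of 4.2. With 5 degrees of freedom the tabled 5 percent critical value is 11.070, so the hypothesis of a fair die would not be rejected.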

(iv) F-test

The F-test is based on the F-distribution and is used to compare the variances of two independent samples.
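The variance ratio itself is simple to compute. In this sketch the larger sample variance is placed in the numerator, a common convention that is an assumption here rather than something the text specifies; `f_ratio` is a hypothetical name, and the result would still have to be compared with a tabled F value.

```python
from statistics import variance


def f_ratio(sample_a, sample_b):
    """Ratio of the two sample variances, larger variance on top.

    Returns (F statistic, numerator degrees of freedom, denominator degrees of freedom).
    """
    var_a, var_b = variance(sample_a), variance(sample_b)
    if var_a >= var_b:
        return var_a / var_b, len(sample_a) - 1, len(sample_b) - 1
    return var_b / var_a, len(sample_b) - 1, len(sample_a) - 1
```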

LIMITATIONS OF THE TESTS OF HYPOTHESES

Limitations of test of hypothesis are as follows:

i) The tests should not be used in a mechanical fashion. It should be kept in view that testing is not decision- making itself; the tests are only useful aids for decision-making.

ii) Tests do not explain why a difference exists, for example between the means of two samples. They simply indicate whether the difference is due to fluctuations of sampling or to other reasons.

iii) Results of test of significance are based on probabilities which cannot be expressed with full certainty. When a test shows that a difference is statistically significant, then it simply suggests that the difference is probably not due to chance.

iv) Statistical inferences based on significance tests cannot be said to be entirely correct evidence concerning the truth of the hypotheses. This is especially so in the case of small samples, where the probability of drawing erroneous inferences is generally higher. For greater reliability, the sample size should be sufficiently enlarged.

To conclude, we have seen the meaning, steps, and characteristics of a hypothesis in detail. Framing and testing the hypothesis is a major part of research work: it allows the investigator to test ideas by scientific method(s) and to apply econometric models that establish a strong relationship between the theory and the analysis, which strengthens the findings of the study. In social science, therefore, framing the hypothesis occupies a significant place in proceeding with research work. The present e-module should be useful for project investigators, and the conclusions they draw can in turn help the government take decisions at the policy level.


Research Hypothesis In Psychology: Types, & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A research hypothesis (plural: hypotheses) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method.

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then confirm or disprove the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Predictions typically arise from a thorough knowledge of the research literature, curiosity about real-world problems or implications, and integrating this to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative hypothesis.

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured based on those changes.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).


In summary, a hypothesis is a precise, testable statement of what researchers expect to happen in a study and why. Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It states that the results are not due to chance and are significant in supporting the theory being investigated.

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states results are due to chance and are not significant in supporting the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.

Nondirectional Hypothesis

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable. It predicts in which direction the change will take place. (i.e., greater, smaller, less, more)

It specifies whether one variable is greater or lesser than another, rather than just indicating that there’s a difference without specifying its nature.

For example, “Exercise increases weight loss” is a directional hypothesis.

Falsifiability

The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and refutable.

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

However many confirming instances exist for a theory, it only takes one counter observation to falsify it. For example, the hypothesis that “all swans are white,” can be falsified by observing a black swan.

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject the null hypothesis.

If we reject the null hypothesis, this doesn’t mean that our alternative hypothesis is correct but does support the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.

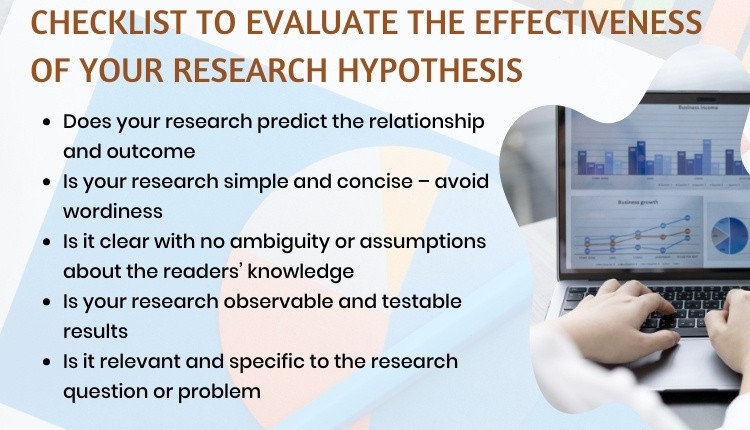

How to Write a Hypothesis

- Identify variables. The researcher manipulates the independent variable, and the dependent variable is the measured outcome.

- Operationalize the variables being investigated. Operationalization of a hypothesis refers to the process of making the variables physically measurable or testable, e.g. if you are about to study aggression, you might count the number of punches given by participants.

- Decide on a direction for your prediction. If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If there are limited or ambiguous findings in the literature regarding the effect of the independent variable on the dependent variable, write a non-directional (two-tailed) hypothesis.

- Make it testable. Ensure your hypothesis can be tested through experimentation or observation. It should be possible to prove it false (principle of falsifiability).

- Use clear and concise language. A strong hypothesis is concise (typically one to two sentences long) and formulated using clear and straightforward language, ensuring it’s easily understood and testable.

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV=Day, DV= Standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.
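One way to test this pair of hypotheses on recall scores from the same students is sketched below. The article does not name a test; this example swaps in an exact sign-flip permutation test on the paired differences, chosen because it needs no distributional assumptions and runs with the standard library. The function name and the scores in the usage note are invented for illustration.

```python
from itertools import product


def paired_permutation_p(monday, friday) -> float:
    """One-tailed exact p-value for 'Monday recall is higher than Friday recall'.

    Under the null hypothesis each student's Monday-minus-Friday difference
    is equally likely to be positive or negative, so every sign pattern is
    enumerated and the share with a total at least as large as the observed
    total is returned. Only practical for small groups (2**n patterns).
    """
    diffs = [m - f for m, f in zip(monday, friday)]
    observed = sum(diffs)
    totals = [
        sum(sign * d for sign, d in zip(signs, diffs))
        for signs in product((1, -1), repeat=len(diffs))
    ]
    return sum(t >= observed for t in totals) / len(totals)
```

With invented scores `monday = [14, 12, 15, 11, 13, 16, 12, 14]` and `friday = [11, 10, 13, 11, 10, 13, 11, 12]`, only 2 of the 256 sign patterns give a total as large as the observed one, so the p-value is 2/256 (about 0.008) and the null hypothesis would be rejected at the 5 percent level.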

More Examples

- Memory : Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology : Individuals who frequently engage in social media use will report higher levels of perceived social isolation compared to those who use it infrequently.

- Developmental Psychology : Children who engage in regular imaginative play have better problem-solving skills than those who don’t.

- Clinical Psychology : Cognitive-behavioral therapy will be more effective in reducing symptoms of anxiety over a 6-month period compared to traditional talk therapy.

- Cognitive Psychology : Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology : Patients who practice mindfulness meditation will experience lower levels of chronic pain compared to those who don’t meditate.

- Organizational Psychology : Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology : Rats rewarded with food after pressing a lever will press it more frequently than rats who receive no reward.

Principles of Research Methodology, pp 31–53

The Research Hypothesis: Role and Construction

- Phyllis G. Supino, EdD

- First Online: 01 January 2012


A hypothesis is a logical construct, interposed between a problem and its solution, which represents a proposed answer to a research question. It gives direction to the investigator’s thinking about the problem and, therefore, facilitates a solution. There are three primary modes of inference by which hypotheses are developed: deduction (reasoning from general propositions to specific instances), induction (reasoning from specific instances to a general proposition), and abduction (formulation/acceptance on probation of a hypothesis to explain a surprising observation).

A research hypothesis should reflect an inference about variables; be stated as a grammatically complete, declarative sentence; be expressed simply and unambiguously; provide an adequate answer to the research problem; and be testable. Hypotheses can be classified as conceptual versus operational, single versus bi- or multivariable, causal or not causal, mechanistic versus nonmechanistic, and null or alternative. Hypotheses most commonly entail statements about “variables” which, in turn, can be classified according to their level of measurement (scaling characteristics) or according to their role in the hypothesis (independent, dependent, moderator, control, or intervening).

A hypothesis is rendered operational when its broadly (conceptually) stated variables are replaced by operational definitions of those variables. Hypotheses stated in this manner are called operational hypotheses, specific hypotheses, or predictions and facilitate testing.


Wrong hypotheses, rightly worked from, have produced more results than unguided observation

—Augustus De Morgan, 1872[ 1 ]—


De Morgan A, De Morgan S. A budget of paradoxes. London: Longmans Green; 1872.


Leedy Paul D. Practical research. Planning and design. 2nd ed. New York: Macmillan; 1960.

Bernard C. Introduction to the study of experimental medicine. New York: Dover; 1957.

Erren TC. The quest for questions—on the logical force of science. Med Hypotheses. 2004;62:635–40.


Peirce CS. Collected papers of Charles Sanders Peirce, vol. 7. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1966.

Aristotle. The complete works of Aristotle: the revised Oxford Translation. In: Barnes J, editor. vol. 2. Princeton/New Jersey: Princeton University Press; 1984.

Polit D, Beck CT. Conceptualizing a study to generate evidence for nursing. In: Polit D, Beck CT, editors. Nursing research: generating and assessing evidence for nursing practice. 8th ed. Philadelphia: Wolters Kluwer/Lippincott Williams and Wilkins; 2008. Chapter 4.

Jenicek M, Hitchcock DL. Evidence-based practice. Logic and critical thinking in medicine. Chicago: AMA Press; 2005.

Bacon F. The novum organon or a true guide to the interpretation of nature. A new translation by the Rev G.W. Kitchin. Oxford: The University Press; 1855.

Popper KR. Objective knowledge: an evolutionary approach (revised edition). New York: Oxford University Press; 1979.

Morgan AJ, Parker S. Translational mini-review series on vaccines: the Edward Jenner Museum and the history of vaccination. Clin Exp Immunol. 2007;147:389–94.


Pead PJ. Benjamin Jesty: new light in the dawn of vaccination. Lancet. 2003;362:2104–9.

Lee JA. The scientific endeavor: a primer on scientific principles and practice. San Francisco: Addison-Wesley Longman; 2000.

Allchin D. Lawson’s shoehorn, or should the philosophy of science be rated, ‘X’? Science and Education. 2003;12:315–29.


Lawson AE. What is the role of induction and deduction in reasoning and scientific inquiry? J Res Sci Teach. 2005;42:716–40.

Peirce CS. Collected papers of Charles Sanders Peirce, vol. 2. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1965.

Bonfantini MA, Proni G. To guess or not to guess? In: Eco U, Sebeok T, editors. The sign of three: Dupin, Holmes, Peirce. Bloomington: Indiana University Press; 1983. Chapter 5.

Peirce CS. Collected papers of Charles Sanders Peirce, vol. 5. In: Hartshorne C, Weiss P, editors. Boston: The Belknap Press of Harvard University Press; 1965.

Flach PA, Kakas AC. Abductive and inductive reasoning: background issues. In: Flach PA, Kakas AC, editors. Abduction and induction. Essays on their relation and integration. The Netherlands: Klewer; 2000. Chapter 1.

Murray JF. Voltaire, Walpole and Pasteur: variations on the theme of discovery. Am J Respir Crit Care Med. 2005;172:423–6.

Danemark B, Ekstrom M, Jakobsen L, Karlsson JC. Methodological implications, generalization, scientific inference, models (Part II) In: explaining society. Critical realism in the social sciences. New York: Routledge; 2002.

Pasteur L. Inaugural lecture as professor and dean of the faculty of sciences. In: Peterson H, editor. A treasury of the world’s greatest speeches. Douai, France: University of Lille 7 Dec 1954.

Swineburne R. Simplicity as evidence for truth. Milwaukee: Marquette University Press; 1997.

Sakar S, editor. Logical empiricism at its peak: Schlick, Carnap and Neurath. New York: Garland; 1996.

Popper K. The logic of scientific discovery. New York: Basic Books; 1959. 1934, trans. 1959.

Caws P. The philosophy of science. Princeton: D. Van Nostrand Company; 1965.

Popper K. Conjectures and refutations. The growth of scientific knowledge. 4th ed. London: Routledge and Keegan Paul; 1972.

Feyerabend PK. Against method, outline of an anarchistic theory of knowledge. London, UK: Verso; 1978.

Smith PG. Popper: conjectures and refutations (Chapter IV). In: Theory and reality: an introduction to the philosophy of science. Chicago: University of Chicago Press; 2003.

Blystone RV, Blodgett K. WWW: the scientific method. CBE Life Sci Educ. 2006;5:7–11.

Kleinbaum DG, Kupper LL, Morgenstern H. Epidemiological research. Principles and quantitative methods. New York: Van Nostrand Reinhold; 1982.

Fortune AE, Reid WJ. Research in social work. 3rd ed. New York: Columbia University Press; 1999.

Kerlinger FN. Foundations of behavioral research. 1st ed. New York: Hold, Reinhart and Winston; 1970.

Hoskins CN, Mariano C. Research in nursing and health. Understanding and using quantitative and qualitative methods. New York: Springer; 2004.

Tuckman BW. Conducting educational research. New York: Harcourt, Brace, Jovanovich; 1972.

Wang C, Chiari PC, Weihrauch D, Krolikowski JG, Warltier DC, Kersten JR, Pratt Jr PF, Pagel PS. Gender-specificity of delayed preconditioning by isoflurane in rabbits: potential role of endothelial nitric oxide synthase. Anesth Analg. 2006;103:274–80.

Beyer ME, Slesak G, Nerz S, Kazmaier S, Hoffmeister HM. Effects of endothelin-1 and IRL 1620 on myocardial contractility and myocardial energy metabolism. J Cardiovasc Pharmacol. 1995;26(Suppl 3):S150–2.

PubMed CAS Google Scholar

Stone J, Sharpe M. Amnesia for childhood in patients with unexplained neurological symptoms. J Neurol Neurosurg Psychiatry. 2002;72:416–7.

Naughton BJ, Moran M, Ghaly Y, Michalakes C. Computer tomography scanning and delirium in elder patients. Acad Emerg Med. 1997;4:1107–10.

Easterbrook PJ, Berlin JA, Gopalan R, Matthews DR. Publication bias in clinical research. Lancet. 1991;337:867–72.

Stern JM, Simes RJ. Publication bias: evidence of delayed publication in a cohort study of clinical research projects. BMJ. 1997;315:640–5.

Stevens SS. On the theory of scales and measurement. Science. 1946;103:677–80.

Knapp TR. Treating ordinal scales as interval scales: an attempt to resolve the controversy. Nurs Res. 1990;39:121–3.

The Cochrane Collaboration. Open Learning Material. www.cochrane-net.org/openlearning/html/mod14-3.htm . Accessed 12 Oct 2009.

MacCorquodale K, Meehl PE. On a distinction between hypothetical constructs and intervening variables. Psychol Rev. 1948;55:95–107.

Baron RM, Kenny DA. The moderator-mediator variable distinction in social psychological research: conceptual, strategic and statistical considerations. J Pers Soc Psychol. 1986;51:1173–82.

Williamson GM, Schultz R. Activity restriction mediates the association between pain and depressed affect: a study of younger and older adult cancer patients. Psychol Aging. 1995;10:369–78.

Song M, Lee EO. Development of a functional capacity model for the elderly. Res Nurs Health. 1998;21:189–98.

MacKinnon DP. Introduction to statistical mediation analysis. New York: Routledge; 2008.

Download references

Author information

Authors and affiliations.

Department of Medicine, College of Medicine, SUNY Downstate Medical Center, 450 Clarkson Avenue, 1199, Brooklyn, NY, 11203, USA

Phyllis G. Supino EdD

Corresponding author

Correspondence to Phyllis G. Supino EdD .

Editor information

Editors and affiliations.

Cardiovascular Medicine, SUNY Downstate Medical Center, 450 Clarkson Avenue, Box 1199, Brooklyn, NY, 11203, USA

Phyllis G. Supino

Cardiovascular Medicine, SUNY Downstate Medical Center, 450 Clarkson Avenue, Brooklyn, NY, 11203, USA

Jeffrey S. Borer

Copyright information

© 2012 Springer Science+Business Media, LLC

About this chapter

Cite this chapter.

Supino, P.G. (2012). The Research Hypothesis: Role and Construction. In: Supino, P., Borer, J. (eds) Principles of Research Methodology. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-3360-6_3

DOI : https://doi.org/10.1007/978-1-4614-3360-6_3

Published : 18 April 2012

Publisher Name : Springer, New York, NY

Print ISBN : 978-1-4614-3359-0

Online ISBN : 978-1-4614-3360-6

eBook Packages : Medicine Medicine (R0)

Frequently asked questions

What is a hypothesis?

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess — it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Frequently asked questions: Methodology

Attrition refers to participants leaving a study. It always happens to some extent—for example, in randomized controlled trials for medical research.

Differential attrition occurs when attrition or dropout rates differ systematically between the intervention and the control group. As a result, the characteristics of the participants who drop out differ from the characteristics of those who stay in the study. Because of this, study results may be biased.
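As a rough illustration of the comparison described above, the sketch below computes the attrition rate in each arm of a trial and the gap between them. The function name and the enrolment counts are hypothetical, invented only for this example, and a gap in rates is only a first warning sign: it does not by itself show that the dropouts differ systematically from those who stayed.

```python
def attrition_rate(enrolled: int, completed: int) -> float:
    """Return the share of enrolled participants who left before the study ended."""
    if enrolled <= 0:
        raise ValueError("enrolled must be positive")
    if not 0 <= completed <= enrolled:
        raise ValueError("completed must be between 0 and enrolled")
    return (enrolled - completed) / enrolled


# Hypothetical counts for a two-arm trial (not taken from any real study).
intervention_rate = attrition_rate(enrolled=200, completed=150)
control_rate = attrition_rate(enrolled=200, completed=180)

# A large gap between the two arms suggests attrition may be differential.
rate_gap = intervention_rate - control_rate
print(f"intervention: {intervention_rate:.0%}, control: {control_rate:.0%}, gap: {rate_gap:.0%}")
```

How large a gap is worrying is a judgment call for the study at hand; the code makes no assumption about a threshold.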

Action research is conducted in order to solve a particular issue immediately, while case studies are often conducted over a longer period of time and focus more on observing and analyzing a particular ongoing phenomenon.

Action research is focused on solving a problem or informing individual and community-based knowledge in a way that impacts teaching, learning, and other related processes. It is less focused on contributing theoretical input, instead producing actionable input.

Action research is particularly popular with educators as a form of systematic inquiry because it prioritizes reflection and bridges the gap between theory and practice. Educators are able to simultaneously investigate an issue as they solve it, and the method is very iterative and flexible.

A cycle of inquiry is another name for action research. It is usually visualized in a spiral shape following a series of steps, such as “planning → acting → observing → reflecting.”

To make quantitative observations, you need to use instruments that are capable of measuring the quantity you want to observe. For example, you might use a ruler to measure the length of an object or a thermometer to measure its temperature.

Criterion validity and construct validity are both types of measurement validity. In other words, they both show you how accurately a method measures something.

While construct validity is the degree to which a test or other measurement method measures what it claims to measure, criterion validity is the degree to which a test can predictively (in the future) or concurrently (in the present) measure something.

Construct validity is often considered the overarching type of measurement validity. You need to have face validity, content validity, and criterion validity in order to achieve construct validity.

Convergent validity and discriminant validity are both subtypes of construct validity. Together, they help you evaluate whether a test measures the concept it was designed to measure.

- Convergent validity indicates whether a test that is designed to measure a particular construct correlates with other tests that assess the same or similar construct.

- Discriminant validity indicates whether two tests that should not be highly related to each other are indeed not related. This type of validity is also called divergent validity.

You need to assess both in order to demonstrate construct validity. Neither one alone is sufficient for establishing construct validity.

Content validity shows you how accurately a test or other measurement method taps into the various aspects of the specific construct you are researching.

In other words, it helps you answer the question: “does the test measure all aspects of the construct I want to measure?” If it does, then the test has high content validity.

The higher the content validity, the more accurate the measurement of the construct.

If the test fails to include parts of the construct, or irrelevant parts are included, the validity of the instrument is threatened, which brings your results into question.

Face validity and content validity are similar in that they both evaluate how suitable the content of a test is. The difference is that face validity is subjective, and assesses content at surface level.

When a test has strong face validity, anyone would agree that the test’s questions appear to measure what they are intended to measure.

For example, looking at a 4th grade math test consisting of problems in which students have to add and multiply, most people would agree that it has strong face validity (i.e., it looks like a math test).

On the other hand, content validity evaluates how well a test represents all the aspects of a topic. Assessing content validity is more systematic and relies on expert evaluation of each question, analyzing whether each one covers the aspects that the test was designed to cover.

A 4th grade math test would have high content validity if it covered all the skills taught in that grade. Experts (in this case, math teachers) would have to evaluate the content validity by comparing the test to the learning objectives.

Snowball sampling is a non-probability sampling method. Unlike probability sampling (which involves some form of random selection), the initial individuals selected to be studied are the ones who recruit new participants.

Because not every member of the target population has an equal chance of being recruited into the sample, selection in snowball sampling is non-random.

Snowball sampling is a non-probability sampling method, where there is not an equal chance for every member of the population to be included in the sample.

This means that you cannot use inferential statistics and make generalizations, which is often the goal of quantitative research. As such, a snowball sample is not representative of the target population and is usually a better fit for qualitative research.

Snowball sampling relies on the use of referrals. Here, the researcher recruits one or more initial participants, who then recruit the next ones.

Participants share similar characteristics and/or know each other. Because of this, not every member of the population has an equal chance of being included in the sample, giving rise to sampling bias.

Snowball sampling is best used in the following cases:

- If there is no sampling frame available (e.g., people with a rare disease)

- If the population of interest is hard to access or locate (e.g., people experiencing homelessness)

- If the research focuses on a sensitive topic (e.g., extramarital affairs)

The reproducibility and replicability of a study can be ensured by writing a transparent, detailed method section and using clear, unambiguous language.

Reproducibility and replicability are related terms.

- Reproducing research entails reanalyzing the existing data in the same manner.

- Replicating (or repeating) the research entails reconducting the entire analysis, including the collection of new data.

- A successful reproduction shows that the data analyses were conducted in a fair and honest manner.

- A successful replication shows that the reliability of the results is high.

Stratified sampling and quota sampling both involve dividing the population into subgroups and selecting units from each subgroup. The purpose in both cases is to select a representative sample and/or to allow comparisons between subgroups.

The main difference is that in stratified sampling, you draw a random sample from each subgroup (probability sampling). In quota sampling you select a predetermined number or proportion of units, in a non-random manner (non-probability sampling).
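The contrast can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the population, the function names `stratified_sample` and `quota_sample`, and the rule "take the first units encountered" as the non-random selection are all hypothetical choices made for this example.

```python
import random


def stratified_sample(population, stratum_of, per_stratum, seed=0):
    """Draw a simple random sample of per_stratum units from every stratum."""
    rng = random.Random(seed)  # fixed seed so the illustration is repeatable
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    return {name: rng.sample(units, per_stratum) for name, units in strata.items()}


def quota_sample(population, stratum_of, per_stratum):
    """Fill each quota with the first units encountered (a non-random rule)."""
    filled = {}
    for unit in population:
        group = filled.setdefault(stratum_of(unit), [])
        if len(group) < per_stratum:
            group.append(unit)
    return filled


# Hypothetical population of (id, study level) pairs.
population = [(i, "undergraduate" if i % 3 else "postgraduate") for i in range(30)]

stratified = stratified_sample(population, lambda u: u[1], per_stratum=4)
quota = quota_sample(population, lambda u: u[1], per_stratum=4)
```

Both functions return the same number of units per subgroup. The only difference is how the units inside each subgroup are chosen, which is exactly what separates probability from non-probability sampling here.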

Purposive and convenience sampling are both sampling methods that are typically used in qualitative data collection.

A convenience sample is drawn from a source that is conveniently accessible to the researcher. Convenience sampling does not distinguish characteristics among the participants. On the other hand, purposive sampling focuses on selecting participants possessing characteristics associated with the research study.

The findings of studies based on either convenience or purposive sampling can only be generalized to the (sub)population from which the sample is drawn, and not to the entire population.

Random sampling or probability sampling is based on random selection. This means that each unit has an equal chance (i.e., equal probability) of being included in the sample.

On the other hand, convenience sampling involves stopping whoever happens to be available, which means that not everyone has an equal chance of being selected; who ends up in the sample depends on the place, time, or day you are collecting your data.

Convenience sampling and quota sampling are both non-probability sampling methods. They both use non-random criteria like availability, geographical proximity, or expert knowledge to recruit study participants.

However, in convenience sampling, you continue to sample units or cases until you reach the required sample size.

In quota sampling, you first need to divide your population of interest into subgroups (strata) and estimate their proportions (quota) in the population. Then you can start your data collection, using convenience sampling to recruit participants, until the proportions in each subgroup coincide with the estimated proportions in the population.

A sampling frame is a list of every member in the entire population. It is important that the sampling frame is as complete as possible, so that your sample accurately reflects your population.

Stratified and cluster sampling may look similar, but bear in mind that groups created in cluster sampling are heterogeneous, so the individual characteristics in the cluster vary. In contrast, groups created in stratified sampling are homogeneous, as units share characteristics.

Relatedly, in cluster sampling you randomly select entire groups and include all units of each group in your sample. However, in stratified sampling, you select some units of all groups and include them in your sample. In this way, both methods can ensure that your sample is representative of the target population.
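A minimal sketch of one-stage cluster sampling, as described above, might look like the following. The school names, the pupil identifiers, and the function name `cluster_sample` are hypothetical and serve only to illustrate the idea of drawing whole groups at random and keeping every unit in them.

```python
import random


def cluster_sample(clusters, n_clusters, seed=0):
    """Randomly pick n_clusters whole clusters and keep every unit in each."""
    rng = random.Random(seed)  # fixed seed so the illustration is repeatable
    chosen = rng.sample(sorted(clusters), n_clusters)
    return {name: list(clusters[name]) for name in chosen}


# Hypothetical clusters: each school is a naturally occurring, mixed group.
schools = {
    "school_a": ["a1", "a2", "a3"],
    "school_b": ["b1", "b2"],
    "school_c": ["c1", "c2", "c3", "c4"],
    "school_d": ["d1", "d2", "d3"],
}

sample = cluster_sample(schools, n_clusters=2)
```

In a stratified design the loop would instead visit every group and draw only some units from each, so no group is left out of the sample.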

A systematic review is secondary research because it uses existing research. You don’t collect new data yourself.

The key difference between observational studies and experimental designs is that a well-done observational study does not influence the responses of participants, while experiments do have some sort of treatment condition applied to at least some participants by random assignment.

An observational study is a great choice for you if your research question is based purely on observations. If there are ethical, logistical, or practical concerns that prevent you from conducting a traditional experiment, an observational study may be a good choice. In an observational study, there is no interference or manipulation of the research subjects, as well as no control or treatment groups.

It’s often best to ask a variety of people to review your measurements. You can ask experts, such as other researchers, or laypeople, such as potential participants, to judge the face validity of tests.

While experts have a deep understanding of research methods, the people you’re studying can provide you with valuable insights you may have missed otherwise.

Face validity is important because it’s a simple first step to measuring the overall validity of a test or technique. It’s a relatively intuitive, quick, and easy way to start checking whether a new measure seems useful at first glance.

Good face validity means that anyone who reviews your measure says that it seems to be measuring what it’s supposed to. With poor face validity, someone reviewing your measure may be left confused about what you’re measuring and why you’re using this method.

Face validity is about whether a test appears to measure what it’s supposed to measure. This type of validity is concerned with whether a measure seems relevant and appropriate for what it’s assessing only on the surface.

Statistical analyses are often applied to test validity with data from your measures. You test convergent validity and discriminant validity with correlations to see if results from your test are positively or negatively related to those of other established tests.
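The correlation check can be sketched as follows. The scores are hypothetical values invented for this example, and `pearson_r` is a plain implementation of the Pearson correlation coefficient written here for illustration rather than taken from any particular statistics package.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


# Hypothetical scores for six participants.
new_test = [10, 12, 14, 16, 18, 20]
established_same_construct = [11, 13, 13, 17, 19, 21]
established_distinct_construct = [7, 3, 6, 5, 3, 7]

# Convergent validity: expect a strong positive correlation.
r_convergent = pearson_r(new_test, established_same_construct)
# Discriminant validity: expect a correlation close to zero.
r_discriminant = pearson_r(new_test, established_distinct_construct)
print(f"convergent r = {r_convergent:.2f}, discriminant r = {r_discriminant:.2f}")
```

With real data, the thresholds for "strong" and "close to zero" depend on the field and the sample size, so they are deliberately left out of the sketch.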

You can also use regression analyses to assess whether your measure is actually predictive of outcomes that you expect it to predict theoretically. A regression analysis that supports your expectations strengthens your claim of construct validity.

When designing or evaluating a measure, construct validity helps you ensure you’re actually measuring the construct you’re interested in. If you don’t have construct validity, you may inadvertently measure unrelated or distinct constructs and lose precision in your research.

Construct validity is often considered the overarching type of measurement validity, because it covers all of the other types. You need to have face validity, content validity, and criterion validity to achieve construct validity.

Construct validity is about how well a test measures the concept it was designed to evaluate. It’s one of four types of measurement validity, alongside face validity, content validity, and criterion validity.

There are two subtypes of construct validity.

- Convergent validity: The extent to which your measure corresponds to measures of related constructs

- Discriminant validity: The extent to which your measure is unrelated or negatively related to measures of distinct constructs

Naturalistic observation is a valuable tool because of its flexibility, external validity, and suitability for topics that can’t be studied in a lab setting.

The downsides of naturalistic observation include its lack of scientific control, ethical considerations, and potential for bias from observers and subjects.

Naturalistic observation is a qualitative research method where you record the behaviors of your research subjects in real world settings. You avoid interfering or influencing anything in a naturalistic observation.

You can think of naturalistic observation as “people watching” with a purpose.

A dependent variable is what changes as a result of the independent variable manipulation in experiments. It’s what you’re interested in measuring, and it “depends” on your independent variable.

In statistics, dependent variables are also called:

- Response variables (they respond to a change in another variable)

- Outcome variables (they represent the outcome you want to measure)

- Left-hand-side variables (they appear on the left-hand side of a regression equation)

An independent variable is the variable you manipulate, control, or vary in an experimental study to explore its effects. It’s called “independent” because it’s not influenced by any other variables in the study.

Independent variables are also called:

- Explanatory variables (they explain an event or outcome)

- Predictor variables (they can be used to predict the value of a dependent variable)

- Right-hand-side variables (they appear on the right-hand side of a regression equation)
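To make the left-hand-side and right-hand-side naming concrete, the sketch below fits a simple regression equation of the form y = a + b·x by ordinary least squares, with the dependent variable y on the left and the independent variable x on the right. The variable names and the data are hypothetical, and the scores were constructed to lie exactly on a line so that the fitted coefficients are easy to check by hand.

```python
def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x for one predictor."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("the independent variable must vary")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical data: hours studied is the independent (right-hand-side) variable,
# exam score is the dependent (left-hand-side) variable.
hours_studied = [1, 2, 3, 4, 5]
exam_score = [52, 54, 56, 58, 60]  # constructed as 50 + 2 * hours

intercept, slope = fit_line(hours_studied, exam_score)
print(f"exam_score = {intercept:.1f} + {slope:.1f} * hours_studied")
```

Real data rarely falls on a perfect line, so in practice the equation also carries an error term, and the coefficients are estimates rather than exact values.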

As a rule of thumb, questions related to thoughts, beliefs, and feelings work well in focus groups. Take your time formulating strong questions, paying special attention to phrasing. Be careful to avoid leading questions, which can bias your responses.

Overall, your focus group questions should be:

- Open-ended and flexible

- Impossible to answer with “yes” or “no” (questions that start with “why” or “how” are often best)

- Unambiguous, getting straight to the point while still stimulating discussion

- Unbiased and neutral

A structured interview is a data collection method that relies on asking questions in a set order to collect data on a topic. They are often quantitative in nature. Structured interviews are best used when:

- You already have a very clear understanding of your topic. Perhaps significant research has already been conducted, or you have done some prior research yourself, so you already possess a baseline for designing strong structured questions.

- You are constrained in terms of time or resources and need to analyze your data quickly and efficiently.

- Your research question depends on strong parity between participants, with environmental conditions held constant.

More flexible interview options include semi-structured interviews, unstructured interviews, and focus groups.

Social desirability bias is the tendency for interview participants to give responses that will be viewed favorably by the interviewer or other participants. It occurs in all types of interviews and surveys, but is most common in semi-structured interviews, unstructured interviews, and focus groups.

Social desirability bias can be mitigated by ensuring participants feel at ease and comfortable sharing their views. Make sure to pay attention to your own body language and any physical or verbal cues, such as nodding or widening your eyes.

This type of bias can also occur in observations if the participants know they’re being observed. They might alter their behavior accordingly.

The interviewer effect is a type of bias that emerges when a characteristic of an interviewer (race, age, gender identity, etc.) influences the responses given by the interviewee.

There is a risk of an interviewer effect in all types of interviews, but it can be mitigated by writing high-quality interview questions.

A semi-structured interview is a blend of structured and unstructured types of interviews. Semi-structured interviews are best used when:

- You have prior interview experience. Spontaneous questions are deceptively challenging, and it’s easy to accidentally ask a leading question or make a participant uncomfortable.

- Your research question is exploratory in nature. Participant answers can guide future research questions and help you develop a more robust knowledge base for future research.

An unstructured interview is the most flexible type of interview, but it is not always the best fit for your research topic.

Unstructured interviews are best used when:

- You are an experienced interviewer and have a very strong background in your research topic, since it is challenging to ask spontaneous, colloquial questions.

- Your research question is exploratory in nature. While you may have developed hypotheses, you are open to discovering new or shifting viewpoints through the interview process.

- You are seeking descriptive data, and are ready to ask questions that will deepen and contextualize your initial thoughts and hypotheses.

- Your research depends on forming connections with your participants and making them feel comfortable revealing deeper emotions, lived experiences, or thoughts.

The four most common types of interviews are:

- Structured interviews: The questions are predetermined in both topic and order.

- Semi-structured interviews: A few questions are predetermined, but other questions aren’t planned.

- Unstructured interviews: None of the questions are predetermined.

- Focus group interviews: The questions are presented to a group instead of one individual.

Deductive reasoning is commonly used in scientific research, and it’s especially associated with quantitative research.

In research, you might have come across something called the hypothetico-deductive method. It’s the scientific method of testing hypotheses to check whether your predictions are substantiated by real-world data.

Deductive reasoning is a logical approach where you progress from general ideas to specific conclusions. It’s often contrasted with inductive reasoning, where you start with specific observations and form general conclusions.

Deductive reasoning is also called deductive logic.

There are many different types of inductive reasoning that people use formally or informally.

Here are a few common types:

- Inductive generalization: You use observations about a sample to come to a conclusion about the population it came from.

- Statistical generalization: You use specific numbers about samples to make statements about populations.

- Causal reasoning: You make cause-and-effect links between different things.

- Sign reasoning: You make a conclusion about a correlational relationship between different things.

- Analogical reasoning: You make a conclusion about something based on its similarities to something else.

Inductive reasoning is a bottom-up approach, while deductive reasoning is top-down.

Inductive reasoning takes you from the specific to the general, while in deductive reasoning, you make inferences by going from general premises to specific conclusions.

In inductive research , you start by making observations or gathering data. Then, you take a broad scan of your data and search for patterns. Finally, you make general conclusions that you might incorporate into theories.

Inductive reasoning is a method of drawing conclusions by going from the specific to the general. It’s usually contrasted with deductive reasoning, where you proceed from general information to specific conclusions.

Inductive reasoning is also called inductive logic or bottom-up reasoning.

Triangulation can help:

- Reduce research bias that comes from using a single method, theory, or investigator

- Enhance validity by approaching the same topic with different tools

- Establish credibility by giving you a complete picture of the research problem

But triangulation can also pose problems:

- It’s time-consuming and labor-intensive, often involving an interdisciplinary team.

- Your results may be inconsistent or even contradictory.

There are four main types of triangulation:

- Data triangulation: Using data from different times, spaces, and people

- Investigator triangulation: Involving multiple researchers in collecting or analyzing data

- Theory triangulation: Using varying theoretical perspectives in your research

- Methodological triangulation: Using different methodologies to approach the same topic

Many academic fields use peer review, largely to determine whether a manuscript is suitable for publication. Peer review enhances the credibility of the published manuscript.

However, peer review is also common in non-academic settings. The United Nations, the European Union, and many individual nations use peer review to evaluate grant applications. It is also widely used in medical and health-related fields as a teaching or quality-of-care measure.

Peer assessment is often used in the classroom as a pedagogical tool. Both receiving feedback and providing it are thought to enhance the learning process, helping students think critically and collaboratively.

Peer review can stop obviously problematic, falsified, or otherwise untrustworthy research from being published. It also represents an excellent opportunity to get feedback from renowned experts in your field. It acts as a first defense, helping you ensure your argument is clear and that there are no gaps, vague terms, or unanswered questions for readers who weren’t involved in the research process.

Peer-reviewed articles are considered a highly credible source due to this stringent process they go through before publication.

In general, the peer review process includes the following steps:

- First, the author submits the manuscript to the editor.

- The editor then decides whether to:

- Reject the manuscript and send it back to the author, or

- Send it onward to the selected peer reviewer(s)

- Next, the peer review process occurs. The reviewer provides feedback, addressing any major or minor issues with the manuscript, and gives their advice regarding what edits should be made.

- Lastly, the edited manuscript is sent back to the author. They input the edits, and resubmit it to the editor for publication.

Exploratory research is often used when the issue you’re studying is new or when the data collection process is challenging for some reason.

You can use exploratory research if you have a general idea or a specific question that you want to study but there is no preexisting knowledge or paradigm with which to study it.

Exploratory research is a methodological approach that explores research questions that have not previously been studied in depth. It is often used when the issue you’re studying is new, or the data collection process is challenging in some way.

Explanatory research is used to investigate how or why a phenomenon occurs. Therefore, this type of research is often one of the first stages in the research process, serving as a jumping-off point for future research.

Exploratory research aims to explore the main aspects of an under-researched problem, while explanatory research aims to explain the causes and consequences of a well-defined problem.

Explanatory research is a research method used to investigate how or why something occurs when only a small amount of information is available pertaining to that topic. It can help you increase your understanding of a given topic.

Clean data are valid, accurate, complete, consistent, unique, and uniform. Dirty data include inconsistencies and errors.

Dirty data can come from any part of the research process, including poor research design, inappropriate measurement materials, or flawed data entry.

Data cleaning takes place between data collection and data analyses. But you can use some methods even before collecting data.

For clean data, you should start by designing measures that collect valid data. Data validation at the time of data entry or collection helps you minimize the amount of data cleaning you’ll need to do.

After data collection, you can use data standardization and data transformation to clean your data. You’ll also deal with any missing values, outliers, and duplicate values.

Every dataset requires different techniques to clean dirty data, but you need to address these issues in a systematic way. You focus on finding and resolving data points that don’t agree or fit with the rest of your dataset.

These data points might be missing, outlying, duplicated, incorrectly formatted, or irrelevant. You’ll start by screening and diagnosing your data. Then, you’ll often standardize your data and accept or remove individual data points to make your dataset consistent and valid.
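The screening steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layout `(participant_id, weight_kg)`, the function name `clean_weights`, and the outlier rule (more than 3 scaled median absolute deviations from the median) are all hypothetical choices, not something the text prescribes.

```python
import math
from statistics import median

def clean_weights(records):
    """Screen (participant_id, weight_kg) records.

    Returns (clean, flagged). Flagged rows are not silently dropped:
    they still need a decision to accept, correct, or remove them.
    Raises statistics.StatisticsError if no value can be parsed.
    """
    # 1. Duplicates: keep only the first record per participant.
    seen, deduped = set(), []
    for pid, raw in records:
        if pid not in seen:
            seen.add(pid)
            deduped.append((pid, raw))

    # 2. Standardize formats ("70 kg", " 72", 71) and catch missing values.
    parsed, flagged = [], []
    for pid, raw in deduped:
        text = str(raw).strip().lower().replace("kg", "").strip()
        try:
            value = float(text)
        except ValueError:
            value = math.nan
        if not math.isfinite(value):
            flagged.append((pid, raw, "missing or unparseable"))
        else:
            parsed.append((pid, value))

    # 3. Outliers: assumed rule, > 3 scaled MADs away from the median.
    values = [v for _, v in parsed]
    med = median(values)
    mad = median(abs(v - med) for v in values)
    clean = []
    for pid, v in parsed:
        if mad > 0 and abs(v - med) / (1.4826 * mad) > 3:
            flagged.append((pid, v, "possible outlier"))
        else:
            clean.append((pid, v))
    return clean, flagged
```

Calling `clean_weights([(1, "70 kg"), (2, 68.5), (2, 68.5), (3, ""), (6, 690)])` would keep participants 1 and 2 once each and flag participant 3 as missing; whether 690 is flagged depends on the spread of the remaining values, so the threshold should be tuned to your own data.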

Data cleaning is necessary for valid and appropriate analyses. Dirty data contain inconsistencies or errors, but cleaning your data helps you minimize or resolve these.

Without data cleaning, you could end up with a Type I or II error in your conclusion. These types of erroneous conclusions can be practically significant with important consequences, because they lead to misplaced investments or missed opportunities.

Data cleaning involves spotting and resolving potential data inconsistencies or errors to improve your data quality. An error is any value (e.g., recorded weight) that doesn’t reflect the true value (e.g., actual weight) of something that’s being measured.

In this process, you review, analyze, detect, modify, or remove “dirty” data to make your dataset “clean.” Data cleaning is also called data cleansing or data scrubbing.

Research misconduct means making up or falsifying data, manipulating data analyses, or misrepresenting results in research reports. It’s a form of academic fraud.

These actions are committed intentionally and can have serious consequences; research misconduct is not a simple mistake or a point of disagreement but a serious ethical failure.

Anonymity means you don’t know who the participants are, while confidentiality means you know who they are but remove identifying information from your research report. Both are important ethical considerations.

You can only guarantee anonymity by not collecting any personally identifying information—for example, names, phone numbers, email addresses, IP addresses, physical characteristics, photos, or videos.

You can keep data confidential by using aggregate information in your research report, so that you only refer to groups of participants rather than individuals.
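As a rough illustration of reporting aggregates rather than individuals, the sketch below summarizes scores per group and leaves names out of the output entirely. The field names (`name`, `group`, `score`), the function `aggregate_by_group`, and the `min_group_size` cutoff for suppressing very small groups are assumptions added for this example, not part of the guidance above.

```python
from collections import defaultdict

def aggregate_by_group(rows, min_group_size=3):
    """Return per-group counts and means; never return individual rows.

    Groups smaller than min_group_size are omitted (an assumed safeguard),
    because a mean over one or two people can still identify them.
    """
    scores_by_group = defaultdict(list)
    for row in rows:
        scores_by_group[row["group"]].append(row["score"])

    report = {}
    for group, scores in scores_by_group.items():
        if len(scores) >= min_group_size:
            report[group] = {"n": len(scores), "mean": sum(scores) / len(scores)}
    return report
```

The report refers only to groups, so identifying fields such as `name` stay in the protected raw data and never reach the research report.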

Research ethics matter for scientific integrity, human rights and dignity, and collaboration between science and society. These principles make sure that participation in studies is voluntary, informed, and safe.

Ethical considerations in research are a set of principles that guide your research designs and practices. These principles include voluntary participation, informed consent, anonymity, confidentiality, potential for harm, and results communication.

Scientists and researchers must always adhere to a certain code of conduct when collecting data from others.

These considerations protect the rights of research participants, enhance research validity, and maintain scientific integrity.

In multistage sampling, you can use probability or non-probability sampling methods.

For a probability sample, you have to conduct probability sampling at every stage.

You can mix it up by using simple random sampling, systematic sampling, or stratified sampling to select units at different stages, depending on what is applicable and relevant to your study.

Multistage sampling can simplify data collection when you have large, geographically spread samples, and you can obtain a probability sample without a complete sampling frame.

But multistage sampling may not lead to a representative sample, and larger samples are needed for multistage samples to achieve the statistical properties of simple random samples.

These are four of the most common mixed methods designs:

- Convergent parallel: Quantitative and qualitative data are collected at the same time and analyzed separately. After both analyses are complete, compare your results to draw overall conclusions.

- Embedded: Quantitative and qualitative data are collected at the same time, but within a larger quantitative or qualitative design. One type of data is secondary to the other.

- Explanatory sequential: Quantitative data is collected and analyzed first, followed by qualitative data. You can use this design if you think your qualitative data will explain and contextualize your quantitative findings.

- Exploratory sequential: Qualitative data is collected and analyzed first, followed by quantitative data. You can use this design if you think the quantitative data will confirm or validate your qualitative findings.

Triangulation in research means using multiple datasets, methods, theories and/or investigators to address a research question. It’s a research strategy that can help you enhance the validity and credibility of your findings.

Triangulation is mainly used in qualitative research, but it’s also commonly applied in quantitative research. Mixed methods research always uses triangulation.

In multistage sampling, or multistage cluster sampling, you draw a sample from a population using smaller and smaller groups at each stage.

This method is often used to collect data from a large, geographically spread group of people in national surveys, for example. You take advantage of hierarchical groupings (e.g., from state to city to neighborhood) to create a sample that’s less expensive and time-consuming to collect data from.
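A minimal sketch of this idea, assuming the population is stored as a hypothetical nested dictionary (`state -> city -> list of residents`) and that simple random sampling is used at every stage. The function name and parameters are illustrative only.

```python
import random

def multistage_sample(population, n_states, n_cities, n_people, seed=None):
    """Draw a three-stage sample: states, then cities, then residents.

    population: dict mapping state -> dict mapping city -> list of residents.
    Simple random sampling is applied at each stage, so the result is a
    probability sample (though not necessarily a representative one).
    """
    rng = random.Random(seed)
    sample = []
    # Stage 1: pick states.
    states = rng.sample(sorted(population), min(n_states, len(population)))
    for state in states:
        cities = population[state]
        # Stage 2: pick cities within each selected state.
        chosen = rng.sample(sorted(cities), min(n_cities, len(cities)))
        for city in chosen:
            residents = cities[city]
            # Stage 3: pick residents within each selected city.
            for person in rng.sample(residents, min(n_people, len(residents))):
                sample.append((state, city, person))
    return sample
```

Only the selected cities need a list of residents, which is why this approach works without a complete sampling frame for the whole population.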

No, the steepness or slope of the line isn’t related to the correlation coefficient value. The correlation coefficient only tells you how closely your data fit on a line, so two datasets with the same correlation coefficient can have very different slopes.

To find the slope of the line, you’ll need to perform a regression analysis.
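The difference between correlation and slope can be checked with a small example. The sketch below computes Pearson’s r and the least-squares slope by hand for two made-up datasets; the helper names `pearson_r` and `ols_slope` and the data values are invented for illustration.

```python
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def ols_slope(x, y):
    """Slope of the least-squares regression line of y on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

x = [1, 2, 3, 4, 5]
steep = [10, 20, 30, 40, 50]        # y = 10x
shallow = [0.5, 1.0, 1.5, 2.0, 2.5]  # y = 0.5x

# Both datasets fall exactly on a line, so both correlations are 1.0,
# yet the slopes are 10.0 and 0.5.
print(pearson_r(x, steep), ols_slope(x, steep))
print(pearson_r(x, shallow), ols_slope(x, shallow))
```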

Correlation coefficients always range between -1 and 1.

The sign of the coefficient tells you the direction of the relationship: a positive value means the variables change together in the same direction, while a negative value means they change together in opposite directions.

The absolute value of a number is equal to the number without its sign. The absolute value of a correlation coefficient tells you the magnitude of the correlation: the greater the absolute value, the stronger the correlation.

These are the assumptions your data must meet if you want to use Pearson’s r:

- Both variables are on an interval or ratio level of measurement

- Data from both variables follow normal distributions

- Your data have no outliers

- Your data are from a random or representative sample

- You expect a linear relationship between the two variables

Quantitative research designs can be divided into two main categories:

- Correlational and descriptive designs are used to investigate characteristics, averages, trends, and associations between variables.

- Experimental and quasi-experimental designs are used to test causal relationships.

Qualitative research designs tend to be more flexible. Common types of qualitative design include case study, ethnography, and grounded theory designs.

A well-planned research design helps ensure that your methods match your research aims, that you collect high-quality data from credible sources, and that you use the right kind of analysis to answer your questions. This allows you to draw valid, trustworthy conclusions.

The priorities of a research design can vary depending on the field, but you usually have to specify:

- Your research questions and/or hypotheses

- Your overall approach (e.g., qualitative or quantitative)

- The type of design you’re using (e.g., a survey, experiment, or case study)

- Your sampling methods or criteria for selecting subjects

- Your data collection methods (e.g., questionnaires, observations)

- Your data collection procedures (e.g., operationalization, timing and data management)

- Your data analysis methods (e.g., statistical tests or thematic analysis)

A research design is a strategy for answering your research question. It defines your overall approach and determines how you will collect and analyze data.

Questionnaires can be self-administered or researcher-administered.

Self-administered questionnaires can be delivered online or in paper-and-pen formats, in person or through mail. All questions are standardized so that all respondents receive the same questions with identical wording.

Researcher-administered questionnaires are interviews that take place by phone, in-person, or online between researchers and respondents. You can gain deeper insights by clarifying questions for respondents or asking follow-up questions.

You can organize the questions logically, with a clear progression from simple to complex, or randomly between respondents. A logical flow helps respondents process the questionnaire more easily and quickly, but it may lead to bias. Randomization can minimize the bias from order effects.
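Randomizing question order between respondents might look like the sketch below. Seeding the shuffle with a respondent ID is an assumption made here so that each respondent’s order can be reproduced later; the function name `question_order` is hypothetical.

```python
import random

def question_order(questions, respondent_id, randomize=True):
    """Return the order in which one respondent sees the questions.

    With randomize=False every respondent gets the same logical order.
    With randomize=True each respondent gets their own shuffled order,
    seeded by respondent_id so it can be reproduced during analysis.
    """
    order = list(questions)  # copy, so the master list stays untouched
    if randomize:
        random.Random(respondent_id).shuffle(order)
    return order
```

Every respondent still answers the same set of identically worded questions; only the sequence changes, which spreads any order effects across items instead of concentrating them on the same ones.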

Closed-ended, or restricted-choice, questions offer respondents a fixed set of choices to select from. These questions are easier to answer quickly.

Open-ended or long-form questions allow respondents to answer in their own words. Because there are no restrictions on their choices, respondents can answer in ways that researchers may not have otherwise considered.

A questionnaire is a data collection tool or instrument, while a survey is an overarching research method that involves collecting and analyzing data from people using questionnaires.

The third variable and directionality problems are two main reasons why correlation isn’t causation.

The third variable problem means that a confounding variable affects both variables to make them seem causally related when they are not.

The directionality problem is when two variables correlate and might actually have a causal relationship, but it’s impossible to conclude which variable causes changes in the other.
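The third variable problem can be illustrated with a small simulation. In the sketch below, a confounder `z` drives both `x` and `y`, which have no direct causal link to each other. The variable names, effect sizes, and noise levels are assumptions chosen for the example.

```python
import random
from math import sqrt

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)

def simulate_third_variable(n=5000, seed=1):
    """Return (raw, adjusted) correlations between x and y.

    x and y each equal the confounder z plus independent noise, so x does
    not cause y and y does not cause x. 'adjusted' is the correlation after
    removing z's contribution (known here, since the data are simulated).
    """
    rng = random.Random(seed)
    z = [rng.gauss(0, 1) for _ in range(n)]
    x = [zi + rng.gauss(0, 1) for zi in z]
    y = [zi + rng.gauss(0, 1) for zi in z]
    raw = pearson_r(x, y)
    x_res = [xi - zi for xi, zi in zip(x, z)]
    y_res = [yi - zi for yi, zi in zip(y, z)]
    adjusted = pearson_r(x_res, y_res)
    return raw, adjusted
```

In this setup the raw correlation should come out clearly positive while the adjusted one should sit near zero. With real data the confounder’s effect is not known in advance and would have to be estimated, for example with a regression.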

Correlation describes an association between variables: when one variable changes, so does the other. A correlation is a statistical indicator of the relationship between variables.

Causation means that changes in one variable bring about changes in the other (i.e., there is a cause-and-effect relationship between variables). The two variables are correlated with each other, and there’s also a causal link between them.

While causation and correlation can exist simultaneously, correlation does not imply causation. In other words, correlation is simply a relationship where A relates to B, but A doesn’t necessarily cause B to happen (or vice versa). Mistaking correlation for causation is a common error and can lead to the false cause fallacy.

Controlled experiments establish causality, whereas correlational studies only show associations between variables.

- In an experimental design, you manipulate an independent variable and measure its effect on a dependent variable. Other variables are controlled so they can’t impact the results.

- In a correlational design, you measure variables without manipulating any of them. You can test whether your variables change together, but you can’t be sure that one variable caused a change in another.

In general, correlational research is high in external validity while experimental research is high in internal validity.

A correlation is usually tested for two variables at a time, but you can test correlations between three or more variables.

A correlation coefficient is a single number that describes the strength and direction of the relationship between your variables.

Different types of correlation coefficients might be appropriate for your data based on their levels of measurement and distributions. The Pearson product-moment correlation coefficient (Pearson’s r) is commonly used to assess a linear relationship between two quantitative variables.
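For reference, Pearson’s r for n paired observations is commonly written as follows. The symbols are notation introduced here for the example: x_i and y_i are the paired values, and x̄ and ȳ are their sample means.

$$
r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2 \, \sum_{i=1}^{n} (y_i - \bar{y})^2}}
$$

The numerator captures how the two variables vary together, and the denominator rescales it by each variable’s own spread, which is why r always stays between -1 and 1.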

A correlational research design investigates relationships between two variables (or more) without the researcher controlling or manipulating any of them. It’s a non-experimental type of quantitative research.

A correlation reflects the strength and/or direction of the association between two or more variables.

- A positive correlation means that both variables change in the same direction.

- A negative correlation means that the variables change in opposite directions.

- A zero correlation means there’s no relationship between the variables.

Random error is almost always present in scientific studies, even in highly controlled settings. While you can’t eradicate it completely, you can reduce random error by taking repeated measurements, using a large sample, and controlling extraneous variables.
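The effect of repeated measurements can be seen in a short simulation. The sketch below assumes a hypothetical true value of 70 with normally distributed random error (standard deviation 2) and compares how much the average of 1 measurement versus 25 measurements varies from trial to trial.

```python
import random
from statistics import mean, stdev

def spread_of_means(n_measurements, true_value=70.0, noise_sd=2.0,
                    trials=2000, seed=0):
    """Standard deviation of the mean of n noisy measurements.

    Each trial averages n_measurements readings of true_value, each
    disturbed by independent random error. A smaller result means the
    averaged reading is more stable from one trial to the next.
    """
    rng = random.Random(seed)
    means = []
    for _ in range(trials):
        readings = [rng.gauss(true_value, noise_sd) for _ in range(n_measurements)]
        means.append(mean(readings))
    return stdev(means)

single = spread_of_means(1)     # spread when you measure once
repeated = spread_of_means(25)  # spread when you average 25 readings
```

Averaging does not remove random error, but `repeated` should come out much smaller than `single`, roughly by a factor of the square root of the number of measurements.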