
Manuals versus Reporting Guidelines

Two types of guidance documents are necessary for conducting systematic reviews and other evidence syntheses. They serve different purposes, and you need both to navigate the process from planning to publication.

- Handbooks or manuals

- Reporting guidelines

Handbooks and manuals provide practical methodological guidance for undertaking a systematic review. They contain detailed steps on how to plan, conduct, organize, and present your review. This is the best place to go if you have any questions about the best practices for any of the steps in the process.

Reporting guidelines help you report, transparently and accurately, the steps you performed when conducting your review in the manuscript you submit for publication.

From the Equator Network - What is a reporting guideline?

A reporting guideline is a simple, structured tool for health researchers to use while writing manuscripts. A reporting guideline provides a minimum list of information needed to ensure a manuscript can be:

- Understood by a reader,

- Replicated by a researcher,

- Used by a doctor to make a clinical decision, and

- Included in a systematic review.

- EQUATOR Network: Enhancing the QUAlity and Transparency Of health Research

Handbooks and Manuals

- Cochrane Handbook for Systematic Reviews of Interventions The Cochrane Handbook for Systematic Reviews of Interventions is the official document that describes in detail the process of preparing and maintaining Cochrane systematic reviews on the effects of healthcare interventions.

- JBI Manual for Evidence Synthesis: Scoping Reviews Comprehensive chapter from the Joanna Briggs Institute on how to conduct a scoping review.

- Scoping studies: Towards a Methodological Framework Arksey H, O'Malley L. Scoping studies: Towards a Methodological Framework. Int J Soc Res Methodol. 2005;8:19–32. doi: 10.1080/1364557032000119616

- AAFP Clinical Practice Guideline Manual This manual summarizes the processes used by the AAFP to produce high-quality, evidence-based guidelines.

- Finding What Works in Health Care: Standards for Systematic Reviews The National Academies Press (formerly IOM) standards address the entire systematic review process, from locating, screening, and selecting studies for the review, to synthesizing the findings and assessing the overall quality of the body of evidence, to producing the final review report.

- Methods Guide for Effectiveness and Comparative Effectiveness Reviews (AHRQ) This guide was developed to improve the transparency, consistency, and scientific rigor of those working on Comparative Effectiveness Reviews.

- Conduct Standards: Methodological Expectations of Campbell Collaboration Intervention Reviews (MECCIR) Guidelines for conducting a Campbell Systematic Review. The Campbell Collaboration is an international research network that produces systematic reviews of the effects of social interventions.

- Guidance for producing a Campbell evidence and gap map This guidance is intended for commissioners and producers of Campbell evidence and gaps maps (EGMs), and will be of use to others also producing evidence maps. The guidance provides an overview of the steps involved in producing a map.

- Cochrane Handbook - Chapter 16: Equity and specific populations

Systematic Review Reporting Guidelines

- Preferred Reporting Items for Systematic Reviews and Meta-Analyses 2020 (PRISMA Statement) The aim of the 2020 PRISMA Statement is to help authors improve the reporting of systematic reviews and meta-analyses. The focus of PRISMA is randomized trials, but it can also be used as a basis for reporting systematic reviews of other types of research, particularly evaluations of interventions. We highly encourage authors to review the PRISMA 2020 Elaboration & Explanation document.

- PRISMA for Diagnostic Test Accuracy The 27-item PRISMA diagnostic test accuracy checklist provides specific guidance for reporting of systematic reviews. The PRISMA diagnostic test accuracy guideline can facilitate the transparent reporting of reviews, and may assist in the evaluation of validity and applicability, enhance replicability of reviews, and make the results from systematic reviews of diagnostic test accuracy studies more useful.

- PRISMA for reviews including harms outcomes The PRISMA harms checklist contains four extension items that must be used in any systematic review addressing harms, irrespective of whether harms are analysed alone or in association with benefits.

- PRISMA for Scoping Reviews The PRISMA extension for scoping reviews was published in 2018. The checklist contains 20 essential reporting items and 2 optional items to include when completing a scoping review. Scoping reviews serve to synthesize evidence and assess the scope of literature on a topic. Among other objectives, scoping reviews help determine whether a systematic review of the literature is warranted.

- Reporting Standards: Campbell evidence and gap map This document provides detailed methodological expectations for the reporting of Campbell Collaboration evidence and gap maps (EGMs).

- Reporting Standards: Methodological Expectations of Campbell Collaboration Intervention Reviews (MECCIR) Guidelines for reporting a Campbell Systematic Review. The Campbell Collaboration is an international research network that produces systematic reviews of the effects of social interventions.

- The Equity Checklist for Systematic Review Authors This tool developed by the Campbell and Cochrane Equity Methods Group may help authors when considering equity in their review. It may also be helpful to use the PRISMA-Equity checklist for reporting.

- PRISMA Equity It provides guidance for reporting equity-focused systematic reviews in order to help reviewers identify, extract, and synthesize evidence on equity in systematic reviews. Health inequity is defined as unfair and avoidable differences in health.

- MOOSE (Meta-analyses Of Observational Studies in Epidemiology) Checklist Guidelines for meta-analyses of observational studies in epidemiology.

- Cochrane Handbook - Chapter 4: Searching for and selecting studies This chapter aims to provide review authors with background information on all aspects of searching for and selecting studies so that they can better understand the search and selection processes. All authors of systematic reviews should, however, identify an experienced medical/healthcare librarian or information specialist to collaborate with on the search process.

- PRISMA-S: an extension to the PRISMA Statement for Reporting Literature Searches in Systematic Reviews The checklist includes 16 reporting items, each of which is detailed with exemplar reporting and rationale.

- Searching for studies: a guide to information retrieval for Campbell systematic reviews This guide (a) identifies the key issues faced by reviewers when gathering information for a review, (b) proposes different approaches in order to guide the work of the reviewer during the information retrieval phase, and (c) provides examples that demonstrate these approaches.

Reporting Guidelines for Other Reviews

- EQUATOR Network: Systematic Reviews, Meta-Analysis, Reviews, Overview, HTA The EQUATOR network includes reporting guidelines for many review types.


- Last Updated: Mar 20, 2024 2:21 PM

- URL: https://guides.mclibrary.duke.edu/sysreview


Easy guide to conducting a systematic review

Affiliations.

- 1 Discipline of Child and Adolescent Health, University of Sydney, Sydney, New South Wales, Australia.

- 2 Department of Nephrology, The Children's Hospital at Westmead, Sydney, New South Wales, Australia.

- 3 Education Department, The Children's Hospital at Westmead, Sydney, New South Wales, Australia.

- PMID: 32364273

- DOI: 10.1111/jpc.14853

A systematic review is a type of study that synthesises research that has been conducted on a particular topic. Systematic reviews are considered to provide the highest level of evidence on the hierarchy of evidence pyramid. Systematic reviews are conducted following rigorous research methodology. To minimise bias, systematic reviews utilise a predefined search strategy to identify and appraise all available published literature on a specific topic. The meticulous nature of the systematic review research methodology differentiates a systematic review from a narrative review (literature review or authoritative review). This paper provides a brief step by step summary of how to conduct a systematic review, which may be of interest for clinicians and researchers.

Keywords: research; research design; systematic review.

© 2020 Paediatrics and Child Health Division (The Royal Australasian College of Physicians).


Systematic reviews


Review protocols

Finding existing systematic reviews, registering your protocol.


The plan for a systematic review is called a protocol and defines the steps that will be undertaken in the review.

The Cochrane Collaboration defines a protocol as the plan or set of steps to be followed in a study.

A protocol for a systematic review should describe the rationale for the review, the objectives, the methods that will be used to locate, select, and critically appraise studies, and to collect and analyse data from the included studies.

Higgins J, Thomas J, Chandler J, Cumpston M, Li T, Page MJ et al, editors. Cochrane handbook for systematic reviews of interventions, Cochrane; 2022.

A review protocol will include:

- Background to the study and the importance of the topic

- Objectives and scope

- Selection criteria for the studies

- Planned search strategy

- Planned data extraction

- Proposed method for synthesising the findings.

The protocol will help guide the process of the review and work as a point of reference to each part of the review.

PRISMA is considered the gold standard for reporting how you conducted your systematic review, and it's worth considering these elements now as you prepare your protocol. There is an extension to PRISMA specifically aimed at guiding the format of protocols, PRISMA-P. There is more information about PRISMA in the section of this guide, 'Writing the review'.

In the early stages of your planned project you'll need to check for any existing systematic reviews on the same topic. The section 'Before you build a search' in this guide demonstrates how to do this as part of the scoping searching process. You can also do some searching in the sources listed below for existing reviews. Note that you'll need to keep your eye out for existing systematic reviews as you progress your project and develop more sophisticated searches for your topic.

PubMed: A good place to check for health-related reviews. Use the 'systematic reviews' limit on the left hand side to help you find existing reviews. PubMed will also allow you to find the reviews from the Cochrane Library and the Joanna Briggs Institute, two well known producers of health related reviews (you could also search those two sites separately if you wish).

PROSPERO: International Prospective Register of Systematic Reviews (health-related). Search PROSPERO to find registered systematic review protocols, i.e. systematic review projects that are currently underway.

The Campbell Collaboration: Campbell systematic reviews follow structured guidelines and standards for summarizing the international research evidence on the effects of interventions in crime and justice, education, international development, and social welfare.

Environmental Evidence Library: contains a collection of Systematic Reviews (SRs) of evidence on the effectiveness of human interventions in environmental management and the environmental impacts of human activities.

EPPI-Centre: Evidence for Policy and Practice Information and Co-ordinating Centre. (Education and social policy, Health promotion and public health, International health).

You should also consult subject-specific or specialist databases that may be relevant to the topic area. It's worth searching the main databases that you're planning to use for your project for existing systematic reviews. Check the database options to see if you can limit to systematic reviews, or include 'systematic review' in your searches.
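As a rough illustration of the advice above, the sketch below builds a search string that combines topic terms with either a database limit or the phrase 'systematic review'. The function name and parameters are hypothetical, chosen only for this example. The `systematic[sb]` tag is PubMed's systematic review subset filter; other databases use different syntax, so check each database's help pages before reusing the string.

```python
def existing_review_query(topic_terms, use_pubmed_filter=True):
    """Build a boolean search string for finding existing systematic reviews.

    Hypothetical helper for illustration only; adapt the syntax to the
    database you are actually searching.
    """
    # Quote multi-word terms so they are searched as exact phrases.
    quoted = [f'"{term}"' if " " in term else term for term in topic_terms]
    topic = " AND ".join(quoted)
    if use_pubmed_filter:
        # systematic[sb] is PubMed's built-in systematic review filter.
        return f"({topic}) AND systematic[sb]"
    # Generic fallback for databases without a dedicated limit.
    return f'({topic}) AND "systematic review"'


if __name__ == "__main__":
    print(existing_review_query(["blended learning", "education"]))
    print(existing_review_query(["blended learning", "education"],
                                use_pubmed_filter=False))
```

Keeping the generated string alongside your notes also gives you a record of the scoping searches you ran, which is useful when you later write up the full search strategy.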

Registering the protocol for your planned project "promotes transparency, helps reduce potential for bias and serves to avoid unintended duplication of reviews". It's best to wait until your protocol is well developed and unlikely to change before registering it.

Stewart L, Moher D, Shekelle P. Why prospective registration of systematic reviews makes sense. Syst Rev. 2012;1(1):7.

PROSPERO is the main place for registering systematic reviews, rapid reviews and umbrella reviews that have a clear outcome related to human health. The PROSPERO webpage Accessing and completing the registration form has information about how to register your protocol, including information on which review types are eligible. Note that PROSPERO does not accept scoping reviews, and we would recommend registering these with the Open Science Framework.

Open Science Framework is another place that many review protocols are registered. It is a large, free platform dedicated to supporting many aspects of open science and is a good option for scoping reviews or reviews without a health outcome. The OSF Registries area is where protocols can be registered and searched. Information on how to register your protocol is available on the Welcome to Registrations page. Examples of registered protocols may be viewed by searching OSF Registries.

Another way to make your protocol available is to publish it in a journal that accepts protocols. Examples of such journals include Systematic Reviews, BMJ Open, Journal of Human Nutrition & Dietetics and more. To find potential journals, search on the topic of your review using a major database in your discipline and include the words 'systematic review protocol' in your search, e.g. you might search for 'blended learning education systematic review protocol'. The UQ Librarians can also assist you to identify potential journals.


- Last Updated: Mar 26, 2024 2:54 PM

- URL: https://guides.library.uq.edu.au/research-techniques/systematic-reviews


Systematic Reviews


Assembling Your Team

Steps of a systematic review, writing and publishing your protocol.


It is essential that Cochrane reviews be undertaken by more than one person. This ensures that tasks such as selection of studies for eligibility and data extraction can be performed by at least two people independently, increasing the likelihood that errors are detected.

- Cochrane Handbook version 5.1, 2011, section 2.3.4.1
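One way to make the benefit of independent dual screening concrete is to compare the two reviewers' decisions and list the records they disagree on. The sketch below is an illustration, not a method prescribed by the Cochrane Handbook passage quoted above: it assumes Cohen's kappa as the agreement statistic, and the function names and the include/exclude labels are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two screeners' decisions."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must judge the same non-empty set of records")
    n = len(rater_a)
    # Proportion of records on which the two screeners agree.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each screener's label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both screeners used one identical label throughout
    return (observed - expected) / (1 - expected)


def disagreements(rater_a, rater_b):
    """Indices of records that need discussion or a third reviewer."""
    return [i for i, (a, b) in enumerate(zip(rater_a, rater_b)) if a != b]


if __name__ == "__main__":
    # Made-up decisions for six records, for illustration only.
    reviewer_1 = ["include", "exclude", "exclude", "include", "exclude", "exclude"]
    reviewer_2 = ["include", "exclude", "include", "include", "exclude", "exclude"]
    print(round(cohens_kappa(reviewer_1, reviewer_2), 3))
    print(disagreements(reviewer_1, reviewer_2))
```

The list of disagreements is usually the more actionable output: those are the records the team resolves by discussion before moving to the next stage.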

The objective of organizing the review team is to pull together a group of researchers as well as key users and stakeholders who have the necessary skills and clinical content knowledge to produce a high-quality SR.

Standard 2.1 Establish a team with appropriate expertise and experience to conduct the systematic review

Required elements:

- Include expertise in the pertinent clinical content areas

- Include expertise in systematic review methods

- Include expertise in searching for relevant evidence

- Include expertise in quantitative methods

- Include other expertise as appropriate

- National Academies of Sciences, Engineering, and Medicine, Finding What Works in Health Care: Standards for Systematic Reviews, chapter 2, 2011.

See the further resources page for links to more in-depth resources on these steps.

- Usually this means deciding on an answerable question. The PICO framework can help you formulate a question that can be answered in the literature. PICO stands for: Patient or population, Intervention, Comparison or control, and Outcome.

- It is important to include team members who have clinical expertise related to the research topic. You also want to have at least one team member with expertise in systematic review methodology, one team member with expertise in evidence searching (e.g. a medical librarian), and a biostatistician if you intend to perform a meta-analysis on your findings.

- A protocol is critical for your process. It spells out your search plan and your inclusion and exclusion criteria for the evidence you will discover. Sticking to your previously published protocol increases transparency and reduces bias in the process of gathering evidence.

- You may need to do some scoping searches as you develop your protocol, in order to help refine your research question.

- Once your protocol is finalized, you can work with a medical librarian on search strategies for multiple literature databases.

- Evidence may exist beyond the published literature. Gray literature searching is necessary to correct for publication bias.

- At least two independent screeners review titles and abstracts first, then full text.

- Various quality checklists (especially for RCTs) exist. You may also want to read about Cochrane's methods and risk of bias tool.

- Data must be extracted in a structured, documented way for included studies.

- Meta-analyses statistically combine results from multiple studies to gain more power, potentially detecting a different effect, through a larger sample size than the individual studies. A biostatistician should be part of the research team if a meta-analysis is conducted.

- It may not be possible to perform a meta-analysis on the existing evidence. In this case, evidence can be synthesized narratively.

- PRISMA is a popular reporting standard required by many journals.

- Check to see if a specialized reporting standard exists for your subfield.
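The meta-analysis step described in the list above can be illustrated with a small calculation. The sketch below assumes a fixed-effect, inverse-variance model, which is only one of several pooling approaches and is not named in this guide; the function name and the example numbers are hypothetical. It shows why combining studies adds precision: the pooled standard error is smaller than that of any single study.

```python
import math


def pool_fixed_effect(effects, standard_errors):
    """Inverse-variance fixed-effect pooling of study effect estimates.

    Returns the pooled effect, its standard error, and an approximate
    95% confidence interval. Illustrative sketch only; a biostatistician
    should choose the model for a real review.
    """
    if len(effects) != len(standard_errors) or not effects:
        raise ValueError("Provide one standard error per effect estimate")
    # Each study is weighted by the inverse of its variance.
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


if __name__ == "__main__":
    # Made-up effect sizes and standard errors for two studies.
    pooled, pooled_se, ci = pool_fixed_effect([0.2, 0.4], [0.1, 0.1])
    print(f"pooled effect {pooled:.3f}, SE {pooled_se:.3f}, "
          f"95% CI {ci[0]:.3f} to {ci[1]:.3f}")
```

When studies differ substantially in populations or methods, a fixed-effect model may not be appropriate, which is one reason the guide recommends having a biostatistician on the team.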

What is a protocol?

- A protocol lays out your plan for the systematic review. It specifies the systematic review authors, the rationale and objectives for the review, the inclusion and exclusion criteria for study eligibility, the databases to be searched along with the search strategy, and the process for managing, screening, analyzing, and synthesizing the results.
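The elements listed above can be tracked as a simple checklist while a protocol is being drafted. The sketch below is one possible way to do that; the class name, field names, and example values are hypothetical and are not taken from PRISMA-P or any registry's form.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ProtocolDraft:
    """Working draft of a review protocol (illustrative field names)."""
    authors: list = field(default_factory=list)
    rationale: str = ""
    objectives: str = ""
    inclusion_criteria: list = field(default_factory=list)
    exclusion_criteria: list = field(default_factory=list)
    databases: list = field(default_factory=list)
    search_strategy: str = ""
    screening_process: str = ""
    synthesis_plan: str = ""


def missing_sections(draft):
    """Names of protocol sections that are still empty."""
    return [f.name for f in fields(draft) if not getattr(draft, f.name)]


if __name__ == "__main__":
    draft = ProtocolDraft(
        authors=["A. Reviewer", "B. Reviewer"],
        rationale="Placeholder rationale text",
        objectives="Placeholder objectives text",
        databases=["PubMed"],
    )
    print(missing_sections(draft))
```

A check like this only confirms that each section has some content. Whether the content is adequate is still a judgment for the review team.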

Why write a protocol?

- As with any other study, a systematic review needs a plan. The protocol provides the team with a road map for completion.

Why publish a protocol?

- A published protocol makes your plan public. This accountability mitigates bias that can result from changing the research topic, or study eligibility criteria, based on results that were discovered during the study.

- It also informs other researchers of your ongoing work, preventing possible duplication of efforts.

- For more, read this article: Why prospective registration of systematic reviews makes sense

Guidance on writing a protocol

- PRISMA-P is an extension of the PRISMA reporting standard for protocols

- The Cochrane Handbook part 1, chapter 4 has information on writing Cochrane protocols

Sharing/publishing protocols

- Systematic Reviews journal

- PROSPERO, a database of protocols (it's free to add yours)


- Last Updated: Apr 17, 2024 2:02 PM

- URL: https://guides.library.ucla.edu/systematicreviews

Systematic Review


Introduction to Systematic Review

- Introduction

- Types of literature reviews

- Other Libguides

- Systematic review as part of a dissertation

- Tutorials & Guidelines & Examples from non-Medical Disciplines

Depending on your learning style, please explore the resources in various formats on the tabs above.

For additional tutorials, visit the SR Workshop Videos from UNC at Chapel Hill outlining each stage of the systematic review process.

Know the difference! Systematic review vs. literature review

Types of literature reviews along with associated methodologies

JBI Manual for Evidence Synthesis. Find definitions and methodological guidance.

- Systematic Reviews - Chapters 1-7

- Mixed Methods Systematic Reviews - Chapter 8

- Diagnostic Test Accuracy Systematic Reviews - Chapter 9

- Umbrella Reviews - Chapter 10

- Scoping Reviews - Chapter 11

- Systematic Reviews of Measurement Properties - Chapter 12

Systematic reviews vs scoping reviews

Grant, M. J., & Booth, A. (2009). A typology of reviews: an analysis of 14 review types and associated methodologies. Health Information and Libraries Journal, 26(2), 91–108. https://doi.org/10.1111/j.1471-1842.2009.00848.x

Gough, D., Thomas, J., & Oliver, S. (2012). Clarifying differences between review designs and methods. Systematic Reviews, 1(28). https://doi.org/10.1186/2046-4053-1-28

Munn, Z., Peters, M., Stern, C., Tufanaru, C., McArthur, A., & Aromataris, E. (2018). Systematic review or scoping review? Guidance for authors when choosing between a systematic or scoping review approach. BMC medical research methodology, 18(1), 143. https://doi.org/10.1186/s12874-018-0611-x. Also, check out the Libguide from Weill Cornell Medicine for the differences between a systematic review and a scoping review and when to embark on either one of them.

Sutton, A., Clowes, M., Preston, L., & Booth, A. (2019). Meeting the review family: Exploring review types and associated information retrieval requirements. Health Information & Libraries Journal, 36(3), 202–222. https://doi.org/10.1111/hir.12276

Temple University. Review Types - This guide provides useful descriptions of some of the types of reviews listed in the above article.

UMD Health Sciences and Human Services Library. Review Types - Guide describing Literature Reviews, Scoping Reviews, and Rapid Reviews.

Whittemore, R., Chao, A., Jang, M., Minges, K. E., & Park, C. (2014). Methods for knowledge synthesis: An overview. Heart & Lung: The Journal of Acute and Critical Care, 43(5), 453–461. https://doi.org/10.1016/j.hrtlng.2014.05.014

Differences between a systematic review and other types of reviews

Armstrong, R., Hall, B. J., Doyle, J., & Waters, E. (2011). 'Scoping the scope' of a Cochrane review. Journal of Public Health, 33(1), 147–150. https://doi.org/10.1093/pubmed/fdr015

Kowalczyk, N., & Truluck, C. (2013). Literature reviews and systematic reviews: What is the difference? Radiologic Technology, 85(2), 219–222.

White, H., Albers, B., Gaarder, M., Kornør, H., Littell, J., Marshall, Z., Matthew, C., Pigott, T., Snilstveit, B., Waddington, H., & Welch, V. (2020). Guidance for producing a Campbell evidence and gap map. Campbell Systematic Reviews, 16(4), e1125. https://doi.org/10.1002/cl2.1125. Check also this comparison between evidence and gaps maps and systematic reviews.

Rapid Reviews Tutorials

Rapid Review Guidebook by the National Collaborating Centre of Methods and Tools (NCCMT)

Hamel, C., Michaud, A., Thuku, M., Skidmore, B., Stevens, A., Nussbaumer-Streit, B., & Garritty, C. (2021). Defining Rapid Reviews: a systematic scoping review and thematic analysis of definitions and defining characteristics of rapid reviews. Journal of clinical epidemiology, 129, 74–85. https://doi.org/10.1016/j.jclinepi.2020.09.041

- Müller, C., Lautenschläger, S., Meyer, G., & Stephan, A. (2017). Interventions to support people with dementia and their caregivers during the transition from home care to nursing home care: A systematic review. International Journal of Nursing Studies, 71, 139–152. https://doi.org/10.1016/j.ijnurstu.2017.03.013

- Bhui, K. S., Aslam, R. W., Palinski, A., McCabe, R., Johnson, M. R. D., Weich, S., … Szczepura, A. (2015). Interventions to improve therapeutic communications between Black and minority ethnic patients and professionals in psychiatric services: Systematic review. The British Journal of Psychiatry, 207(2), 95–103. https://doi.org/10.1192/bjp.bp.114.158899

- Rosen, L. J., Noach, M. B., Winickoff, J. P., & Hovell, M. F. (2012). Parental smoking cessation to protect young children: A systematic review and meta-analysis. Pediatrics, 129(1), 141–152. https://doi.org/10.1542/peds.2010-3209

Scoping Review

- Hyshka, E., Karekezi, K., Tan, B., Slater, L. G., Jahrig, J., & Wild, T. C. (2017). The role of consumer perspectives in estimating population need for substance use services: A scoping review. BMC Health Services Research, 17, 1-14. https://doi.org/10.1186/s12913-017-2153-z

- Olson, K., Hewit, J., Slater, L.G., Chambers, T., Hicks, D., Farmer, A., & ... Kolb, B. (2016). Assessing cognitive function in adults during or following chemotherapy: A scoping review. Supportive Care In Cancer, 24(7), 3223-3234. https://doi.org/10.1007/s00520-016-3215-1

- Pham, M. T., Rajić, A., Greig, J. D., Sargeant, J. M., Papadopoulos, A., & McEwen, S. A. (2014). A scoping review of scoping reviews: Advancing the approach and enhancing the consistency. Research Synthesis Methods, 5(4), 371–385. https://doi.org/10.1002/jrsm.1123

- Scoping Review Tutorial from UNC at Chapel Hill

Qualitative Systematic Review/Meta-Synthesis

- Lee, H., Tamminen, K. A., Clark, A. M., Slater, L., Spence, J. C., & Holt, N. L. (2015). A meta-study of qualitative research examining determinants of children's independent active free play. International Journal Of Behavioral Nutrition & Physical Activity, 12(5), 121-12. https://doi.org/10.1186/s12966-015-0165-9

Videos on systematic reviews

Systematic Reviews: What are they? Are they right for my research? - 47 min. video recording with a closed caption option.


- University of Toronto Libraries - very detailed with good tips on the sensitivity and specificity of searches.

- Monash University - includes an interactive case study tutorial.

- Dalhousie University Libraries - a comprehensive How-To Guide on conducting a systematic review.

Guidelines for a systematic review as part of the dissertation

- Guidelines for Systematic Reviews in the Context of Doctoral Education Background by University of Victoria (PDF)

- Can I conduct a Systematic Review as my Master’s dissertation or PhD thesis? Yes, It Depends! by Farhad (blog)

- What is a Systematic Review Dissertation Like? by the University of Edinburgh (50 min video)

Further readings on experiences of PhD students and doctoral programs with systematic reviews

Puljak, L., & Sapunar, D. (2017). Acceptance of a systematic review as a thesis: Survey of biomedical doctoral programs in Europe. Systematic Reviews, 6(1), 253. https://doi.org/10.1186/s13643-017-0653-x

Perry, A., & Hammond, N. (2002). Systematic reviews: The experiences of a PhD Student. Psychology Learning & Teaching, 2(1), 32–35. https://doi.org/10.2304/plat.2002.2.1.32

Daigneault, P.-M., Jacob, S., & Ouimet, M. (2014). Using systematic review methods within a Ph.D. dissertation in political science: Challenges and lessons learned from practice. International Journal of Social Research Methodology, 17(3), 267–283. https://doi.org/10.1080/13645579.2012.730704

UMD Doctor of Philosophy Degree Policies

Before you embark on a systematic review research project, check the UMD PhD Policies to make sure you are on the right path. Systematic reviews require a team of at least two reviewers and an information specialist or a librarian. Discuss with your advisor the authorship roles of the involved team members. Keep in mind that the UMD Doctor of Philosophy Degree Policies (scroll down to the section, Inclusion of one's own previously published materials in a dissertation) outline such cases, specifically the following:

"It is recognized that a graduate student may co-author work with faculty members and colleagues that should be included in a dissertation. In such an event, a letter should be sent to the Dean of the Graduate School certifying that the student's examining committee has determined that the student made a substantial contribution to that work. This letter should also note that the inclusion of the work has the approval of the dissertation advisor and the program chair or Graduate Director. The letter should be included with the dissertation at the time of submission. The format of such inclusions must conform to the standard dissertation format. A foreword to the dissertation, as approved by the Dissertation Committee, must state that the student made substantial contributions to the relevant aspects of the jointly authored work included in the dissertation."

- Cochrane Handbook for Systematic Reviews of Interventions - See Part 2: General methods for Cochrane reviews

- Systematic Searches - Yale library video tutorial series

- Using PubMed's Clinical Queries to Find Systematic Reviews - From the U.S. National Library of Medicine

- Systematic reviews and meta-analyses: A step-by-step guide - From the University of Edinburgh, Centre for Cognitive Ageing and Cognitive Epidemiology

Bioinformatics

- Mariano, D. C., Leite, C., Santos, L. H., Rocha, R. E., & de Melo-Minardi, R. C. (2017). A guide to performing systematic literature reviews in bioinformatics. arXiv preprint arXiv:1707.05813.

Environmental Sciences

Collaboration for Environmental Evidence. 2018. Guidelines and Standards for Evidence synthesis in Environmental Management. Version 5.0 (AS Pullin, GK Frampton, B Livoreil & G Petrokofsky, Eds) www.environmentalevidence.org/information-for-authors.

Pullin, A. S., & Stewart, G. B. (2006). Guidelines for systematic review in conservation and environmental management. Conservation Biology, 20(6), 1647–1656. https://doi.org/10.1111/j.1523-1739.2006.00485.x

Engineering Education

- Borrego, M., Foster, M. J., & Froyd, J. E. (2014). Systematic literature reviews in engineering education and other developing interdisciplinary fields. Journal of Engineering Education, 103(1), 45–76. https://doi.org/10.1002/jee.20038

Public Health

- Hannes, K., & Claes, L. (2007). Learn to read and write systematic reviews: The Belgian Campbell Group. Research on Social Work Practice, 17(6), 748–753. https://doi.org/10.1177/1049731507303106

- McLeroy, K. R., Northridge, M. E., Balcazar, H., Greenberg, M. R., & Landers, S. J. (2012). Reporting guidelines and the American Journal of Public Health’s adoption of preferred reporting items for systematic reviews and meta-analyses. American Journal of Public Health, 102(5), 780–784. https://doi.org/10.2105/AJPH.2011.300630

- Pollock, A., & Berge, E. (2018). How to do a systematic review. International Journal of Stroke, 13(2), 138–156. https://doi.org/10.1177/1747493017743796

- Institute of Medicine. (2011). Finding what works in health care: Standards for systematic reviews. https://doi.org/10.17226/13059

- Wanden-Berghe, C., & Sanz-Valero, J. (2012). Systematic reviews in nutrition: Standardized methodology. The British Journal of Nutrition, 107 Suppl 2, S3-7. https://doi.org/10.1017/S0007114512001432

Social Sciences

- Bronson, D., & Davis, T. (2012). Finding and evaluating evidence: Systematic reviews and evidence-based practice (Pocket guides to social work research methods). Oxford: Oxford University Press.

- Petticrew, M., & Roberts, H. (2006). Systematic reviews in the social sciences: A practical guide. Malden, MA: Blackwell Pub.

- Cornell University Library Guide - Systematic literature reviews in engineering: Example: Software Engineering

- Biolchini, J., Mian, P. G., Natali, A. C. C., & Travassos, G. H. (2005). Systematic review in software engineering. System Engineering and Computer Science Department COPPE/UFRJ, Technical Report ES, 679(05), 45.

- Biolchini, J. C., Mian, P. G., Natali, A. C. C., Conte, T. U., & Travassos, G. H. (2007). Scientific research ontology to support systematic review in software engineering. Advanced Engineering Informatics, 21(2), 133–151.

- Kitchenham, B. (2007). Guidelines for performing systematic literature reviews in software engineering. [Technical Report]. Keele, UK, Keele University, 33(2004), 1-26.

- Weidt, F., & Silva, R. (2016). Systematic literature review in computer science: A practical guide. Relatórios Técnicos do DCC/UFJF, 1.

- Academic Phrasebank - Get some inspiration and find some terms and phrases for writing your research paper

- Oxford English Dictionary - Use to locate word variants and proper spelling


- Last Updated: Apr 19, 2024 12:47 PM

- URL: https://lib.guides.umd.edu/SR

Conducting a Systematic Review: A Practical Guide

- Living reference work entry


- First Online: 13 January 2018


- Freya MacMillan 2 ,

- Kate A. McBride 3 ,

- Emma S. George 4 &

- Genevieve Z. Steiner 5


It can be challenging to conduct a systematic review with limited experience and skills in undertaking such a task. This chapter provides a practical guide to undertaking a systematic review, providing step-by-step instructions to guide the individual through the process from start to finish. The chapter begins with defining what a systematic review is, reviewing its various components, turning a research question into a search strategy, developing a systematic review protocol, followed by searching for relevant literature and managing citations. Next, the chapter focuses on documenting the characteristics of included studies and summarizing findings, extracting data, methods for assessing risk of bias and considering heterogeneity, and undertaking meta-analyses. Last, the chapter explores creating a narrative and interpreting findings. Practical tips and examples from existing literature are utilized throughout the chapter to assist readers in their learning. By the end of this chapter, the reader will have the knowledge to conduct their own systematic review.


Barbour RS. Checklists for improving rigour in qualitative research: a case of the tail wagging the dog? BMJ. 2001;322(7294):1115–7.


Butler A, Hall H, Copnell B. A guide to writing a qualitative systematic review protocol to enhance evidence-based practice in nursing and health care. Worldviews Evid-Based Nurs. 2016;13(3):241–9.

Cook DJ, Mulrow CD, Haynes RB. Systematic reviews: synthesis of best evidence for clinical decisions. Ann Intern Med. 1997;126(5):376–80.

Dixon-Woods M, Bonas S, Booth A, Jones DR, Miller T, Sutton AJ, … Young B. How can systematic reviews incorporate qualitative research? A critical perspective. Qual Res. 2006;6(1):27–44. https://doi.org/10.1177/1468794106058867 .

Greenhalgh T. How to read a paper: the basics of evidence-based medicine. 4th ed. Chichester/Hoboken: Wiley-Blackwell; 2010.


Hannes K, Lockwood C, Pearson A. A comparative analysis of three online appraisal instruments’ ability to assess validity in qualitative research. Qual Health Res. 2010;20(12):1736–43. https://doi.org/10.1177/1049732310378656 .

Higgins JPT, Green S. Cochrane handbook for systematic reviews of interventions (Version 5.1.0 [updated March 2011]). The Cochrane Collaboration; 2011. http://handbook-5-1.cochrane.org/

Higgins JPT, Altman DG, Gøtzsche PC, Jüni P, Moher D, Oxman AD, … Sterne JAC. The Cochrane Collaboration’s tool for assessing risk of bias in randomised trials. BMJ. 2011;343. https://doi.org/10.1136/bmj.d5928 .

Hillier S, Grimmer-Somers K, Merlin T, Middleton P, Salisbury J, Tooher R, Weston A. FORM: an Australian method for formulating and grading recommendations in evidence-based clinical guidelines. BMC Med Res Methodol. 2011;11:23. https://doi.org/10.1186/1471-2288-11-23 .

Humphreys DK, Panter J, Ogilvie D. Questioning the application of risk of bias tools in appraising evidence from natural experimental studies: critical reflections on Benton et al., IJBNPA 2016. Int J Behav Nutr Phys Act. 2017;14(1):49. https://doi.org/10.1186/s12966-017-0500-4 .

King R, Hooper B, Wood W. Using bibliographic software to appraise and code data in educational systematic review research. Med Teach. 2011;33(9):719–23. https://doi.org/10.3109/0142159x.2011.558138 .

Koelemay MJ, Vermeulen H. Quick guide to systematic reviews and meta-analysis. Eur J Vasc Endovasc Surg. 2016;51(2):309. https://doi.org/10.1016/j.ejvs.2015.11.010 .

Lucas PJ, Baird J, Arai L, Law C, Roberts HM. Worked examples of alternative methods for the synthesis of qualitative and quantitative research in systematic reviews. BMC Med Res Methodol. 2007;7:4–4. https://doi.org/10.1186/1471-2288-7-4 .

MacMillan F, Kirk A, Mutrie N, Matthews L, Robertson K, Saunders DH. A systematic review of physical activity and sedentary behavior intervention studies in youth with type 1 diabetes: study characteristics, intervention design, and efficacy. Pediatr Diabetes. 2014;15(3):175–89. https://doi.org/10.1111/pedi.12060 .

MacMillan F, Karamacoska D, El Masri A, McBride KA, Steiner GZ, Cook A, … George ES. A systematic review of health promotion intervention studies in the police force: study characteristics, intervention design and impacts on health. Occup Environ Med. 2017. https://doi.org/10.1136/oemed-2017-104430 .

Matthews L, Kirk A, MacMillan F, Mutrie N. Can physical activity interventions for adults with type 2 diabetes be translated into practice settings? A systematic review using the RE-AIM framework. Transl Behav Med. 2014;4(1):60–78. https://doi.org/10.1007/s13142-013-0235-y .

Moher D, Schulz KF, Altman DG. The CONSORT statement: revised recommendations for improving the quality of reports of parallel group randomized trials. BMC Med Res Methodol. 2001;1:2. https://doi.org/10.1186/1471-2288-1-2 .

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097. https://doi.org/10.1371/journal.pmed.1000097 .

Moher D, Shamseer L, Clarke M, Ghersi D, Liberati A, Petticrew M, et al. Preferred reporting items for systematic review and meta-analysis protocols (PRISMA-P) 2015 statement. Syst Rev. 2015;4:1. https://doi.org/10.1186/2046-4053-4-1 .

Mulrow CD, Cook DJ, Davidoff F. Systematic reviews: critical links in the great chain of evidence. Ann Intern Med. 1997;126(5):389–91.

Peters MDJ. Managing and coding references for systematic reviews and scoping reviews in EndNote. Med Ref Serv Q. 2017;36(1):19–31. https://doi.org/10.1080/02763869.2017.1259891 .

Steiner GZ, Mathersul DC, MacMillan F, Camfield DA, Klupp NL, Seto SW, … Chang DH. A systematic review of intervention studies examining nutritional and herbal therapies for mild cognitive impairment and dementia using neuroimaging methods: study characteristics and intervention efficacy. Evid Based Complement Alternat Med. 2017;2017:21. https://doi.org/10.1155/2017/6083629 .

Sterne JA, Hernán MA, Reeves BC, Savović J, Berkman ND, Viswanathan M, … Higgins JP. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ. 2016;355. https://doi.org/10.1136/bmj.i4919 .

Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care. 2007;19(6):349–57. https://doi.org/10.1093/intqhc/mzm042 .

Tong A, Palmer S, Craig JC, Strippoli GFM. A guide to reading and using systematic reviews of qualitative research. Nephrol Dial Transplant. 2016;31(6):897–903. https://doi.org/10.1093/ndt/gfu354 .

Uman LS. Systematic reviews and meta-analyses. J Can Acad Child Adolesc Psychiatry. 2011;20(1):57–9.


Author information

Authors and affiliations.

School of Science and Health and Translational Health Research Institute, Sydney, NSW, Australia

Freya MacMillan

Translational Health Research Institute, Western Sydney University, Sydney, NSW, Australia

Kate A. McBride

School of Science and Health, Western Sydney University, Sydney, NSW, Australia

Emma S. George

NICM, Western Sydney University, Sydney, NSW, Australia

Genevieve Z. Steiner


Corresponding author

Correspondence to Freya MacMillan.

Editor information

Editors and affiliations.

School of Science & Health, Western Sydney University, Locked Bag 1797, CA.02.35, Penrith, New South Wales, Australia

Pranee Liamputtong


© 2018 Springer Nature Singapore Pte Ltd.

About this entry

Cite this entry.

MacMillan, F., McBride, K.A., George, E.S., Steiner, G.Z. (2018). Conducting a Systematic Review: A Practical Guide. In: Liamputtong, P. (eds) Handbook of Research Methods in Health Social Sciences. Springer, Singapore. https://doi.org/10.1007/978-981-10-2779-6_113-1


DOI: https://doi.org/10.1007/978-981-10-2779-6_113-1

Received: 18 December 2017

Accepted: 02 January 2018

Published: 13 January 2018

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-2779-6

Online ISBN: 978-981-10-2779-6

eBook Packages: Springer Reference Social Sciences; Reference Module Humanities and Social Sciences; Reference Module Business, Economics and Social Sciences


Chapter history

DOI: https://doi.org/10.1007/978-981-10-2779-6_113-2

DOI: https://doi.org/10.1007/978-981-10-2779-6_113-1


- Systematic Review

- Open access

- Published: 24 April 2024

The immediate impacts of TV programs on preschoolers' executive functions and attention: a systematic review

- Sara Arian Namazi 1 &

- Saeid Sadeghi 1

BMC Psychology volume 12, Article number: 226 (2024)

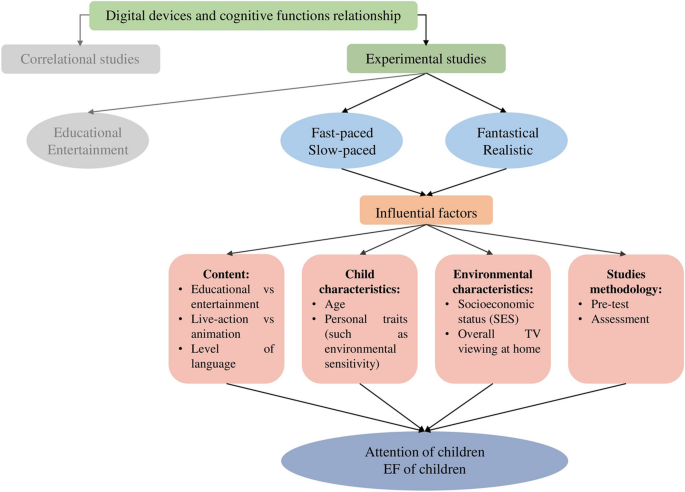

Previous research has presented varying perspectives on the potential effect of screen media use among preschoolers. In this study, we systematically reviewed experimental studies that investigated how pacing and fantasy features of TV programs affect children's attention and executive functions (EFs).

A systematic search was conducted across eight online databases to identify pertinent studies published until August 2023. We followed the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines.

Fifteen papers involving 1855 participants aged 2–7 years fulfilled all the inclusion criteria for this review and were entered into the narrative synthesis. Despite the challenge of reaching general conclusions and encountering conflicting outcomes, a nuanced analysis reveals distinct patterns within various subgroups. The impact of pacing on attention is discernible, particularly in bottom-up attention processes, although the nature of this effect remains contradictory. Conversely, consistent findings emerge regarding top-down attention, suggesting no impact. Moreover, a subgroup analysis of different EF components yields valuable insights, highlighting the negative effect of fantasy on inhibitory control within the EF framework.

The complexity of these outcomes highlights the need for further research, considering factors such as content, child-specific characteristics, environmental factors, and methodological approaches. These findings collectively emphasize the necessity of conducting more comprehensive and detailed research, especially in terms of the underlying mechanisms and their impact on brain function.


Introduction

In the last few decades, the advancement of technology has made digital devices a significant part of children's lives [ 1 ]. Children are now using digital devices at a younger age as devices are more readily available at home, school, and in society as a whole [ 2 , 3 , 4 ]. Studies have shown that excessive screen time is associated with obesity and sleep problems, as well as lowered social and motor development scores in young children [ 5 , 6 ]. In recent years, researchers have been studying the interaction between digital devices and children's cognitive development [ 7 ].

The term “digital devices” refers to devices that can create, generate, share, communicate, receive, store, display, or process information, including, but not limited to, laptops, tablets, desktops, televisions (TVs), mobile phones, and smartphones [ 8 ]. TV is one of the digital devices whose effects on children are well studied; in this context, the term refers to shows (e.g., live-action, puppets, …) and cartoons that children watch on TVs and other touchscreen devices [ 9 ]. The effects of TV content are determined by many factors, including fantastical content and the program's pacing [ 10 ]. Pacing refers to how fast audio and visual elements change [ 11 ]. Video pace can be assessed through filming techniques such as changes in camera perspective [ 12 ] or transitions between scenes [ 13 ]. Fantasy refers to phenomena that defy the laws of reality, such as Superman [ 14 ].

Recent studies have examined whether TV (the pace and fantasy events in the programs) affects children's cognitive development, particularly regarding attention and executive functions (EFs). Attention is a multifaceted cognitive mechanism characterized by the allocation of resources towards distinct stimuli or tasks, thereby facilitating heightened processing and perception of relevant information [ 15 , 16 ]. Attention is distinct from higher cognitive functions (e.g., executive functions): it operates between perception, memory, and higher cognitive functions, allowing information to flow from perception to memory and higher cognitive functions and vice versa [ 17 , 18 ]. Many models have been developed to explain attention, and some of these models include components that are related to EF. EFs encompass a spectrum of cognitive processes essential for solving goal-oriented problems. The term comprises diverse higher-order cognitive functions including reasoning, working memory, problem-solving, planning, inhibitory control, attention, multitasking, and flexibility [ 19 , 20 , 21 ]. These functions are often referred to as "cool" EF, as the underlying cognitive mechanisms operate with limited emotional arousal [ 22 ]. In contrast, "hot" EF involves emotion or motivation, such as tracking rewards or punishments [ 22 , 23 ]. Within this classification, EFs fall into two subsets: basic EFs, such as working memory, inhibition, attention control, and cognitive flexibility, and higher-order (higher-level) EFs, such as reasoning, problem-solving, and planning, which stem from the basic ones [ 20 ].

Due to the complexity of the topic, studies investigating the relationship between TV programs and attention or EF have adopted diverse assessment methods. In some studies, children's involvement in tasks during free play or direct testing has been used to measure attention [ 24 ]. Another substantial portion of these studies adopted the model of EF proposed by Miyake et al. [ 25 ], which divided EF into three components: inhibitory control (the ability of a person to inhibit dominant or automatic responses in favor of less prominent data), working memory (the capacity to hold and manipulate various sets of information) and flexibility (shifting attention) [ 10 , 26 , 27 ]. Alternatively, some studies have measured EF through two dimensions: "hot" and "cool" [ 13 , 14 ]. Another subset of related research has focused on higher-order EF tests, encompassing domains such as planning and problem-solving. Additionally, a few studies have measured EF in a very general way, with tasks that address different parts of EF (assessed through tasks involving color separation or completing puzzles as quickly as possible) [ 28 ].

As an illustration, Cooper et al. [ 12 ] investigated the influence of pacing on attention using a direct task and demonstrated a positive effect on performance in EF tasks. In another study by Lillard and Peterson [ 13 ], the impact of pacing on cool EF was investigated, revealing reduced performance in EF tasks after exposure to fast-paced programs. Regarding higher-order EFs, Rose et al. [ 29 ] concluded that exposure to a fast-paced TV program did not immediately affect children's problem-solving abilities. Moreover, Jiang et al. [ 26 ] evaluated EFs based on Miyake's model, indicating that fantastical events negatively affected inhibitory control and flexibility, whereas working memory remained unaffected.

A limited capacity model and the attention system are essential for explaining the mechanisms by which TV pacing affects children's cognitive performance. It has been proposed that fast-paced programs, which are characterized by rapid scene changes, capture attention in a bottom-up manner through orienting responses to those changes, primarily engaging sensory cortices rather than the prefrontal cortex [ 30 , 31 ]. In this way, fast-paced programs could overwhelm cognitive resources, in line with the "overstimulation hypothesis" [ 32 , 33 , 34 ]. This hypothesis posits that exposure to such programs may lead the mind to anticipate high levels of stimulation, which can reduce children's attention spans and influence their performance [ 31 , 32 ]. Furthermore, a study by Carey [ 35 ] revealed that young children hold expectations about the occurrence of events. Likewise, Kahneman [ 36 ] proposed the concept of a single pool of attentional resources and suggested that processing fantastical events overloads limited cognitive resources. Watching TV programs engages the bottom-up cognitive processing system. Consequently, the top-down cognitive processing system may be delayed in re-engaging in subsequent cognitive tasks after program viewing [ 14 ]. This suggests that exposure to fast-paced and fantastical TV programs has temporary effects on children's attention and executive functioning.

Research examining the immediate impact of these two features on children's attention and EF has yielded conflicting outcomes. Several studies indicate that fast-paced television programs have a negative effect on children's attention and EFs [ 13 , 28 , 37 , 38 ]. In contrast, some studies have shown positive results [ 12 , 39 ], while other studies found no significant impact [ 14 , 27 , 29 , 40 ]. Similar findings are observed for the fantasy feature. Some studies have shown that higher levels of fantastical content led to lower performance on cognitive tests [ 10 , 14 , 26 , 27 , 41 , 42 ], while contrary findings are also reported [ 39 , 43 ].

Therefore, it remains unclear how television content affects children's attention and EFs. It is thus necessary to identify gaps in prior research, which can lead to effective strategies for investigating the effects of TV programs. Previous reviews: (1) summarized the relationship between screen time and EF [ 44 ]; (2) adopted a comprehensive approach by combining diverse research methodologies, yet omitted some recent studies [ 24 ]; and (3) summarized the influence of media on self-regulation, although they emphasized several studies while overlooking a subset of investigations concerning the immediate impact of TV programs [ 45 ]. None of these reviews has specifically focused on the outcomes of experimental research. Experimental studies appear to be a more accurate method for investigating the effects of programs, because experiments allow certain variables to be controlled and an independent variable (such as the pace of the program or its fantasy content) to be manipulated. This review aims to explore the immediate impact of TV pacing and fantasy features on children's attention and EF, as well as the potential factors contributing to the variations in outcomes.

Search strategy

This systematic review follows the guidelines set by the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) protocol [ 46 ]. We searched eight online databases on 2 August 2023: APA PsycARTICLES, Cochrane Library, EBSCO (APA PsycINFO), Google Scholar (limited to the first three pages), Ovid, ProQuest, PubMed (MEDLINE), and Web of Science. The search was run on article abstracts, without date or language restrictions, using the following strategy: child* OR preschool* AND television OR TV OR cartoon AND executive function OR attention OR inhibit* OR flexibility OR working memory AND immediate* OR short-term OR pace OR fantasy. This strategy was tailored to suit the requirements of each database. Additionally, to account for any potentially overlooked studies, citation searching was conducted for the Lillard et al. [ 14 ] article on Google Scholar on 7 August 2023. However, only studies with relevant titles and abstracts were included in the review screening.
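The search strategy is reported as a flat string, so the grouping of its OR and AND operators is left implicit. As a minimal sketch, the snippet below assembles the same terms into four concept groups, ORs the terms within each group, and ANDs the groups together. The parenthesization and the quoting of multi-word phrases are assumptions about the intended logic, not something the text states, and the function names are hypothetical.

```python
# Concept groups taken from the search string reported in the text.
# Grouping them this way is an assumption about the intended precedence.
CONCEPTS = [
    ["child*", "preschool*"],
    ["television", "TV", "cartoon"],
    ["executive function", "attention", "inhibit*", "flexibility", "working memory"],
    ["immediate*", "short-term", "pace", "fantasy"],
]


def quote(term):
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(concepts):
    """OR the terms inside each concept group, then AND the groups together."""
    groups = ["(" + " OR ".join(quote(t) for t in group) + ")" for group in concepts]
    return " AND ".join(groups)


if __name__ == "__main__":
    print(build_query(CONCEPTS))
```

Each database has its own field tags and truncation rules, so a string built this way would still need to be adapted per database, as the text notes.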

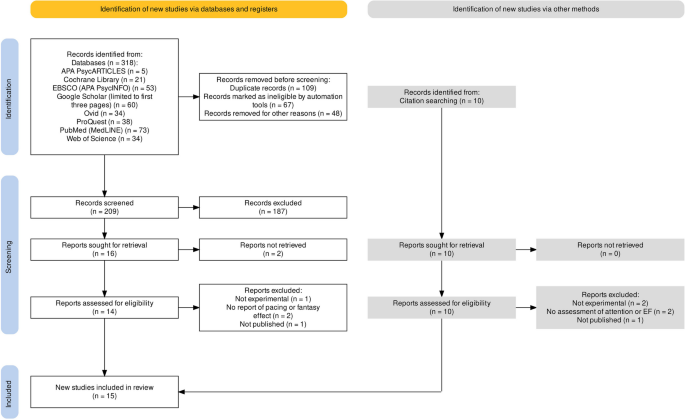

Study selection

The studies had to meet these criteria to be included in the review: (1) participants were children younger than seven years (preschool); (2) the study assessed the impact of TV programs on children's attention or EFs; (3) the independent variable was the exposure to a TV program (including cartoons and non-animated programs, while excluding advertisements), with immediate measurement of its impact on children's attention or EF; (4) the study measured the effect of pacing or fantasy features present in TV programs; (5) the study had an experimental design; and (6) the research was published as a journal article in English. Furthermore, any study where a participant had been diagnosed with a disorder was excluded from the review. The initial identification yielded 328 potentially relevant studies, from which 67 duplicates were eliminated using EndNote 20's automated tool [ 47 ]. Additionally, the manual review led to the elimination of 42 more duplicates, while six non-English studies were further removed. The remaining 203 studies were screened for title and abstract relevance. Subsequently, two screeners reviewed the full text and included 15 as eligible studies. Any conflict between screeners regarding eligibility was resolved through discussions. The PRISMA chart that summarizes these processes can be seen in Fig. 1.

Fig. 1. PRISMA flow diagram [ 48 ] showing the number of studies that were removed at each stage of the literature search
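The screening counts can be tallied step by step. The sketch below is illustrative only: it applies the three removals itemized in the paragraph above, in order, to the 328 records initially identified. The function and variable names are hypothetical. With only these itemized removals the running total is 213 rather than the 203 reported for title and abstract screening, so the flow diagram in Fig. 1 presumably accounts for removals that the paragraph does not list; that reading is an assumption.

```python
def screening_flow(identified, removals):
    """Return (stage, records remaining) pairs after applying each removal in order."""
    remaining = identified
    flow = []
    for stage, count in removals:
        if count > remaining:
            raise ValueError(f"cannot remove {count} records at stage {stage!r}")
        remaining -= count
        flow.append((stage, remaining))
    return flow


# Counts as itemized in the text; no other removals are assumed here.
REPORTED_REMOVALS = [
    ("automated duplicate removal (EndNote 20)", 67),
    ("manual duplicate removal", 42),
    ("non-English records", 6),
]

if __name__ == "__main__":
    for stage, remaining in screening_flow(328, REPORTED_REMOVALS):
        print(f"after {stage}: {remaining}")
```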

Data extraction and synthesis

The relevant data from the selected studies were extracted on a form by two reviewers, and any conflict was resolved through discussion. The data extraction form recorded the characteristics of each study: authors’ names, titles of manuscripts, publication dates, sample sizes, the mean and standard deviation of participant ages, the proportion of females within the sample, TV program name, features and length, the type of cognitive functions (EFs or attention) measured in the study along with their assessment methods, and the variables used for controlling or checking differences between groups. Additionally, eligible outcomes were as follows: the effect of fast and slow-paced TV programs, the effect of fantastical and realistic TV programs, and variable interactions. Data from the included studies were combined using narrative synthesis. This choice was driven by the conflicting results observed across the various studies. Although a single reviewer composed the narratives, all decisions were reached through discussions involving two reviewers.
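One way to picture the extraction form is as a record with one field per item listed above. The sketch below is a hypothetical illustration, not the authors' actual form: the class, the field names, and the example values are all invented, and the `disagreements` helper simply lists the fields on which two reviewers' records differ so that they can be resolved through discussion.

```python
from dataclasses import dataclass, field, fields, replace
from typing import List, Optional


@dataclass
class ExtractionRecord:
    """Hypothetical record mirroring the items on the data extraction form."""
    authors: str
    title: str
    publication_year: int
    sample_size: int
    mean_age_months: Optional[float]    # None when a study does not report it
    sd_age_months: Optional[float]
    proportion_female: Optional[float]
    program_name: str
    program_features: List[str]         # e.g. ["fast-paced", "fantastical"]
    program_length_min: float
    cognitive_function: str             # "attention" or "EF"
    assessment_method: str
    control_variables: List[str] = field(default_factory=list)


def disagreements(a, b):
    """Return the names of the fields on which two reviewers' records differ."""
    return [f.name for f in fields(a) if getattr(a, f.name) != getattr(b, f.name)]


if __name__ == "__main__":
    # Invented values for illustration only; not taken from any included study.
    reviewer_1 = ExtractionRecord(
        authors="Example et al.", title="Example study", publication_year=2020,
        sample_size=60, mean_age_months=55.0, sd_age_months=4.2,
        proportion_female=0.5, program_name="Example cartoon",
        program_features=["fast-paced"], program_length_min=9.0,
        cognitive_function="EF", assessment_method="direct task",
    )
    reviewer_2 = replace(reviewer_1, sample_size=61)
    print(disagreements(reviewer_1, reviewer_2))
```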

Quality assessment

Study quality was evaluated using the Downs and Black [ 49 ] checklist, which has 27 items. However, not all of these items apply to every type of study design. Following a similar approach to Uzundağ et al. [ 45 ], a subset of 21 relevant items was employed for the experimental studies. The results of the quality assessment can be found in Table 1.

A total of 1855 children aged between two and seven years participated in the 15 studies (49.43% female). Among these studies, seven exclusively investigated the impact of pacing, with four exploring its effects on attention and three on EF. Additionally, three studies examined the impact of pacing and fantasy, with only one focusing on attention, while five studies specifically concentrated on the fantasy effect on EF. The sample sizes varied from 20 to 279 participants, while the duration of video exposure ranged from 3.5 to 40 min. The mean age of participants, as reported in 13 studies, was 59.56 months (SD = 9.94). Notably, only seven studies involved a pre-test, eight studies controlled for the overall media exposure, and four considered socioeconomic status (SES).

Five of the conducted studies measured attention. As for EF, the studies explored a diverse range of EF components: inhibitory control was measured in five studies, cognitive flexibility in four, working memory in three, composite cool EF in three, and hot EF in two, with one study each dedicated to measuring planning, problem-solving, and general EF (motor EF). For assessment, attention was operationalized through either the observation of children's behavior during free play or direct task measurement. In all these studies, EF was directly assessed through various tasks.

Experimental investigations into the impact of TV program pacing on preschoolers' attention have yielded inconsistent outcomes. In the first two studies, fast-paced TV programs negatively affected children’s attention. Geist and Gibson [ 37 ] examined the effects of rapid TV program pacing on 62 children aged 4 to 6. Children exposed to a fast-paced program switched activities more often and spent less time on tasks during the post-viewing period than the control group. This pattern was interpreted as indicating a shortened attention span. However, it cannot be determined whether the negative impact was attributable to content, pacing, or an interplay of both. Furthermore, no pre-viewing attention test was included, which complicates the interpretation of the results. To address the pacing/content dilemma, Kostyrka-Allchorne et al. [ 38 ] adopted the methodology employed by Cooper et al. [ 12 ]. They created experimental videos with identical content, varying only in the number of edits (pace). In this study, 70 children aged 2 to 4.5 years were exposed to one of two 4-min edited videos featuring a narrator reading a children's story. The fast-paced group shifted attention between toys more frequently than the slow-paced group, although the groups did not differ in behavior before watching the videos. By addressing the pacing/content issue and including younger participants, this study provides useful insights, although its videos were notably shorter than typical children's program episodes.

In contrast to the studies mentioned above, the next two studies suggest that fast-paced TV programs may have no significant effect, or may even have a positive effect, on children's attention. Anderson et al. [ 40 ] exposed 4-year-old children to a 40-min fast-paced or slow-paced version of Sesame Street, while a control group listened to a parent reading a story. The findings failed to provide substantial support for immediate effects of TV program pacing on the behavior and attention of preschoolers. In a subsequent study, Cooper et al. [ 12 ] presented a 3.5-min video of a narrator reading a story to children aged 4 to 7. This investigation used edited versions of the video to create fast-paced and slow-paced versions with identical content. Using an attention networks task, the authors evaluated alerting, orienting, and executive control after viewing. The outcomes revealed that even a very brief exposure to programs can affect children's orienting networks and error rates. Moreover, a noteworthy interaction emerged between age and pacing: 4-year-olds displayed lower orienting scores in the fast-paced group than in the slow-paced one, while the reverse occurred for the 6-year-olds. In summary, these two studies held video content constant while manipulating pacing, thereby isolating the pacing effect. However, Anderson et al. [ 40 ] used TV programs with a slower pace than contemporary ones, and Cooper et al. [ 12 ] exposed children to programs for only 3.5 min, considerably shorter than the typical time children spend watching TV programs [ 14 ]. Refer to Table 1 for a concise overview of attention studies.
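An attention networks task yields one score per network, each computed as a difference between mean reaction times in two conditions. The sketch below assumes the conventional subtraction scheme for this task (no cue minus double cue for alerting, center cue minus spatial cue for orienting, incongruent minus congruent for executive control); the text does not state which scoring the cited study used, and the condition names and reaction times here are hypothetical.

```python
from statistics import mean


def ant_network_scores(rt):
    """Compute network scores from reaction times (ms) grouped by condition.

    `rt` maps a condition name to a list of correct-trial reaction times.
    The subtractions follow the conventional scheme and are an assumption here.
    """
    m = {condition: mean(values) for condition, values in rt.items()}
    return {
        "alerting": m["no_cue"] - m["double_cue"],
        "orienting": m["center_cue"] - m["spatial_cue"],
        "executive_control": m["incongruent"] - m["congruent"],
    }


if __name__ == "__main__":
    # Invented reaction times for one child, for illustration only.
    example = {
        "no_cue": [700, 720],
        "double_cue": [660, 680],
        "center_cue": [690, 710],
        "spatial_cue": [640, 660],
        "incongruent": [780, 800],
        "congruent": [700, 720],
    }
    print(ant_network_scores(example))
```

Under this scheme a larger orienting score means a larger benefit from a spatial cue, which is the quantity an age-by-pacing interaction on orienting would compare across groups.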

Regarding EF, research examining the influence of pacing has also produced inconsistent outcomes. Lillard and Peterson [ 13 ] explored the immediate impact of fast-paced TV content on the EF of 60 four-year-olds. In this study, participants were exposed to a 9-min cartoon episode (fast or slow-paced content) or were engaged in drawing (serving as the control condition). The results indicated that children who viewed the fast-paced cartoon performed notably worse on a post-viewing Cool and Hot EF assessment when compared to the other groups. This finding underscores the significant influence of pacing on children's EF. Additionally, Sanketh et al. [ 28 ] investigated the impact of a TV program's pacing on children's motor EF. Involving a sample of 279 four- to six-year-olds, the study began with a pre-viewing test to ensure developmental equivalence among participants. The findings revealed that children exposed to the fast-paced cartoon exhibited slower performance on motor EF tasks compared to their counterparts in the other two groups. This outcome suggested that ten minutes of viewing a fast-paced cartoon yielded an immediate negative impact on the motor EF of 4- to 6-year-old children. However, it's important to note that these two studies could not differentiate between the effects of pacing and content.

In contrast to these studies, Rose et al. [ 29 ] more recently examined the effects of TV program pacing on problem-solving abilities through ecologically valid research. In this study, each child was exposed to both fast and slow programs during two distinct sessions to ensure comparability and control over other variables. Notably, no significant differences in problem-solving performance emerged between the two pacing conditions. However, following exposure to the fast-paced program, both age groups demonstrated a non-significant increase in EF scores (p = 0.71). Additionally, the study by Rose et al. [ 29 ] aimed to ensure content parity between the fast and slow programs, leading to a smaller pacing difference compared to certain other studies. Refer to Table 2 for a concise overview of EF studies.

Continuing the exploration of the distinct impacts of TV program content, particularly in the context of fantasy, Lillard et al. [ 14 ] introduced a novel dimension to the discussion. The concept of "fantastical" versus "non-fantastical" (also termed "unrealistic" and "realistic", respectively) content emerged as a notable category within TV programming. This idea prompted three separate research studies, all aiming to disentangle the effects of pacing from fantasy on children's EF. To address this inquiry, all three studies employed a common approach, utilizing four TV programs that varied along two dimensions: fast and fantastical, fast and non-fantastical, slow and fantastical, or slow and non-fantastical. Of these three, only one study focused on attention.

Kostyrka-Allchorne et al. [ 39 ] conducted a study in 2019 with 187 children aged 3.5 to 5 years, exposing them to 5-min self-produced videos. Their findings indicated a significant interaction between pacing and fantasy, while neither factor displayed an individual effect. Notably, exposure to the fast-paced video led to quicker responses, but only when the story was non-fantastical. However, due to the brief length of the videos, it is uncertain whether the stimuli adequately challenged cognitive resources (see Table 1).

There is a more extensive body of literature on EF (all three mentioned studies) that accurately separates the effect of pace from fantasy. The outcomes of these studies indicated a lack of influence from pacing, while the impact of fantasy and the interplay between pacing and fantasy yielded conflicting results. Lillard et al. [ 14 ] conducted three distinct studies to test their hypotheses, building upon their prior research findings. Study 1 involved diverse videos with an extended duration (11 min) compared to the 2011 study [ 13 ], focusing on 4- and 6-year-olds. The findings indicated that children's Cool EF scores were notably lower in the two fast and fantastical conditions compared to the control group. Conversely, children in the slow and non-fantastical condition performed better in the Hot EF task. Study 2 aimed to discern whether solely fast and fantastical entertainment TV programs, as opposed to educational ones, influenced children's EF. The results indicated that even when designed with educational intent, watching a fast and fantastical TV program led to lower EF scores than reading a book. Additionally, the EF performance following exposure to the educational program was similar to that of the entertaining program. In the final study, Lillard et al. [ 14 ] aimed to differentiate the contributions of fantasy versus pacing (fast or slow). The analysis revealed that fantastical content had an impact on EF, whereas fast pacing did not show a similar effect. However, this particular study focused on a single age group without considering potential age-related nuances in the development of EF.

Moreover, Kostyrka-Allchorne et al.'s [ 39 ] findings indicated that children in two fantastical conditions had higher inhibitory control scores than those in the alternative condition, yet no discernible pacing effect was observed. Similarly, within the same investigative framework as Lillard et al.’s [ 14 ] Study 3, Fan et al. [ 27 ] explored the age-related influence on the impact of TV program features on the EF of children aged 4 to 7 years. Employing four 11-min cartoons for exposure, the study revealed that following fantastical TV program viewing, children's performance on subsequent EF tasks declined. Pacing, however, did not exert a comparable effect. The most significant interaction emerged between fantasy and age, indicating a heightened impact of fantasy on inhibitory control among younger children. Unlike the earlier studies, this study emphasized EF development and encompassed a broader age range of children. In summary, these three research studies reveal inconsistent results. To address the novelty aspect inherent in EF tests, Fan et al. [ 27 ] adopted parent questionnaires to account for pre-viewing EF levels. In contrast, the other two studies incorporated at least one task during the pre-viewing session to assess EF.

Expanding upon the findings of Lillard et al. [ 14 ], subsequent studies focused exclusively on the impact of fantasy, omitting the pacing feature. Out of the five studies, four of them collectively suggest that fantastical TV programs tend to exert a negative impact on children's EF.

Li et al. [ 42 ] undertook a comparative study to assess the effects of viewing versus interacting with fantastical or non-fantastical events on inhibitory control. Across two experimental studies, participants either played a video game or watched a video clip showcasing identical events from the game. The findings indicated that watching fantastical programs led to a reduction in inhibitory control, while interaction with them did not produce a similar effect. Moreover, children in the game condition perceived the fantastical events to be less fantastical. Notably, inhibitory control showed improvement after both watching and interacting with non-fantastical content. It is worth noting that while this study employed direct tasks to address pre-viewing EF levels, the number of fantastical events was not standardized and varied across programs and game conditions. To refine the understanding of the fantasy effect, Jiang et al. [ 26 ] introduced three levels of fantasy in their investigation. The findings revealed that working memory scores did not significantly differ across conditions. However, a nonlinear pattern emerged in the effects of fantasy on inhibitory control and cognitive flexibility, with children in the mid-fantasy group demonstrating comparatively poorer performance. Notably, the evidence for a moderating influence of gender on the relationship between fantastical events and EF was inconclusive. Continuing from the groundwork laid by Lillard et al. [ 14 ], Rhodes et al. [ 10 ] undertook a study investigating the impact of fantasy on 80 children aged 5 to 6 years. Employing two complete episodes of cartoons utilized by Lillard et al. [ 14 ], they revealed that children in the fantastical condition exhibited lower performance on inhibition, working memory, and cognitive flexibility tasks during the post-viewing session. Notably, the difference in planning tasks was not statistically significant. It is worth highlighting that although the cartoons were drawn from an earlier study, they were not matched in terms of pace and language factors, which might have influenced their effect on EF.

In a study aligned with the ones mentioned earlier, Li et al. [ 41 ] examined whether watching TV programs featuring fantastical events had a diminishing impact on the post-viewing EF of 4- to 6-year-olds. They exposed 90 children to Mickey Mouse Clubhouse (non-fantastical), Tom and Jerry (fantastical), or typical classroom activities (control). The outcomes indicated significantly lower scores on behavioral EF tasks for children in the fantastical condition compared to the other groups. Li et al. [ 41 ] also conducted supplementary experiments. The analysis of eye tracking data revealed more frequent and briefer eye fixations, while fNIRS data indicated elevated Coxy-Hb levels in the prefrontal cortex (PFC) of the fantastical group, aligning with models of limited cognitive resources. As in the preceding study, the two cartoons differed in a notable respect: Mickey Mouse Clubhouse constituted one episode with a single narrative, whereas Tom and Jerry comprised three distinct episodes with separate stories (episodic narratives). Moreover, the differentiation between fantastical events and comedic violence within Tom and Jerry remains unclear.

Conversely, a recent investigation by Wang and Moriguchi [ 43 ], adopting the methodology established by Li et al. [ 42 ], presented divergent outcomes. After exposure to fantastical content, 3- to 6.5-year-old children's cognitive flexibility and prefrontal activation were assessed. There were no observable alterations in performance or neural activity. In summary, the initial four studies, each exclusively focused on assessing the impact of fantasy, consistently suggest a negative effect. However, the most recent one and the investigation conducted by Kostyrka-Allchorne et al. [ 39 ] produced contrasting outcomes, with the former showing no discernible effect and the latter indicating a positive impact. It is essential to note that Wang and Moriguchi's [ 43 ] study covers a wide age range between 3 and 6.5 years and does not consider the potential effect of age. Additionally, the brief duration of exposure to fantasy content raises concerns, as it may not have allowed sufficient time for any potential effect to emerge. Despite drawing inspiration from the methodology used in Li et al.'s [ 42 ] study, the number of fantasy events in this recent study was not standardized.

As a result, the impact of exposure to fantastical TV programs on children's EF remains unclear, while the influence of pacing can be dismissed with greater confidence (see Table 2). For attention, the study results do not permit conclusions about either feature.

We conducted the current systematic review to gain a better understanding of how TV programs' pace and fantasy may impact children's attention and EF by synthesizing results from multiple experimental studies. The synthesis of the reviewed studies and their outcomes has highlighted variations in how pacing and fantasy influence attention and different aspects of EF. The discussion will now delve into the potential explanations for these observed effects.

Numerous studies have investigated the influence of pacing on children's attention. Anderson et al. [ 40 ] and Kostyrka-Allchorne et al. [ 39 ] found no significant effects on attention, while Geist and Gibson [ 37 ] and Kostyrka-Allchorne et al. [ 38 ] reported a negative impact. In contrast, Cooper et al. [ 12 ] observed positive results. To explain these results, it's crucial to look at the methodologies employed in attention measurement. Anderson et al. [ 40 ], Geist and Gibson [ 37 ], and Kostyrka-Allchorne et al. [ 38 ] used child observation during free play. In addition, Anderson et al. [ 40 ] used the Matching Familiar Figures task, while Cooper et al. [ 12 ] used the Attention Networks Task and Kostyrka-Allchorne et al. [ 39 ] the Continuous Performance Task (CPT).