
The Use of Self-Report Data in Psychology


In psychology, a self-report is any test, measure, or survey that relies on an individual's own report of their symptoms, behaviors, beliefs, or attitudes. Self-report data is typically gathered in paper-and-pencil or electronic format, or sometimes through an interview.

Self-reporting is commonly used in psychological studies because it can yield valuable and diagnostic information to a researcher or a clinician.

This article explores examples of how self-report data is used in psychology. It also covers the advantages and disadvantages of this approach.

Examples of Self-Reports

To understand how self-reports are used in psychology, it can be helpful to look at some examples. Many well-known assessments and inventories rely on self-reporting to collect data.

One of the most commonly used self-report tools for personality testing is the Minnesota Multiphasic Personality Inventory (MMPI). This inventory includes more than 500 questions focused on different areas, including behaviors, psychological health, interpersonal relationships, and attitudes. It is often used as a mental health assessment, but it is also used in legal cases, custody evaluations, and as a screening instrument for some careers.

The 16 Personality Factor (PF) Questionnaire

This personality inventory is often used as a diagnostic tool to help therapists plan treatment. It can be used to learn more about various individual characteristics, including empathy, openness, attitudes, attachment quality, and coping style.

Myers-Briggs Type Indicator (MBTI)

The MBTI is a popular personality measure that describes personality types in four categories: introversion or extraversion, sensing or intuiting, thinking or feeling, and judging or perceiving. A letter is taken from each category to describe a person's personality type, such as INTP or ESFJ.
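The way a type label is assembled from the four categories can be sketched in a few lines of Python. This is only an illustration of the letter-per-category idea described above: the function name `type_code` and its input format are hypothetical, and it is not the instrument's actual scoring procedure. By convention, intuiting is abbreviated "N" because "I" is already used for introversion.

```python
# Hypothetical illustration of combining one letter per category.
# This is NOT the official MBTI scoring method; names are assumptions.
DICHOTOMIES = [
    ("E", "I"),  # extraversion or introversion
    ("S", "N"),  # sensing or intuiting ("N" avoids clashing with "I")
    ("T", "F"),  # thinking or feeling
    ("J", "P"),  # judging or perceiving
]


def type_code(preferences):
    """Build a four-letter type code from one chosen letter per category."""
    if len(preferences) != len(DICHOTOMIES):
        raise ValueError("expected exactly one preference per category")
    letters = []
    for choice, pair in zip(preferences, DICHOTOMIES):
        if choice not in pair:
            raise ValueError(f"{choice!r} is not one of {pair}")
        letters.append(choice)
    return "".join(letters)


print(type_code(["I", "N", "T", "P"]))  # INTP
print(type_code(["E", "S", "F", "J"]))  # ESFJ
```

Because each of the four categories has two options, this scheme yields 16 possible type codes.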

Personality inventories and psychology assessments often utilize self-reporting for data collection. Examples include the MMPI, the 16PF Questionnaire, and the MBTI.

Advantages of Self-Report Data

One of the primary advantages of self-reporting is that it can be easy to obtain. It is also an important way that clinicians diagnose their patients—by asking questions. Those making the self-report are usually familiar with filling out questionnaires.

For research, it is inexpensive and can reach many more test subjects than could be analyzed by observation or other methods. It can be performed relatively quickly, so a researcher can obtain results in days or weeks rather than observing a population over the course of a longer time frame.

Self-reports can be made in private and can be anonymized to protect sensitive information and perhaps promote truthful responses.

Disadvantages of Self-Report Data

Collecting information through self-reporting has limitations. People are often biased when they report on their own experiences. For example, many individuals are either consciously or unconsciously influenced by "social desirability." That is, they are more likely to report experiences that are considered to be socially acceptable or preferred.

Self-reports are subject to these biases and limitations:

- Honesty: Subjects may give the more socially acceptable answer rather than being truthful.

- Introspective ability: The subjects may not be able to assess themselves accurately.

- Interpretation of questions: The wording of the questions may be confusing or have different meanings to different subjects.

- Rating scales: Rating something yes or no can be too restrictive, but numerical scales can also be inexact and subject to an individual's inclination to give an extreme or middle response to all questions.

- Response bias: Answers can be influenced by a subject's previous responses, by whether a question relates to a recent or significant experience, and by other factors.

- Sampling bias: The people who complete the questionnaire are the sort of people who will complete a questionnaire. Are they representative of the population you wish to study?
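As a rough illustration of the rating-scale issue in the list above, the sketch below counts how often one respondent picks the endpoints or the midpoint of a numerical scale. The function name `response_style`, the 1-to-5 scale, and the sample answers are all assumptions made for the example. A high share in either category only hints at a response tendency and would need further checking.

```python
def response_style(ratings, low=1, high=5):
    """Return the share of extreme and midpoint answers on a low..high scale.

    Hypothetical helper for illustration; the scale bounds are assumptions.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    midpoint = (low + high) / 2
    n = len(ratings)
    extreme = sum(1 for r in ratings if r in (low, high))
    middle = sum(1 for r in ratings if r == midpoint)
    return {"extreme": extreme / n, "midpoint": middle / n}


# Made-up answers from two respondents on a 1-to-5 scale.
print(response_style([1, 5, 5, 1, 5, 3]))  # mostly endpoint answers
print(response_style([3, 3, 3, 2, 3, 4]))  # mostly midpoint answers
```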

Self-Report Info With Other Data

Most experts in psychological research and diagnosis suggest that self-report data should not be used alone, as it tends to be biased. Research is best done when combining self-reporting with other information, such as an individual’s behavior or physiological data.

This “multi-modal” or “multi-method” assessment provides a more global, and therefore more likely accurate, picture of the subject.

The questionnaires used in research should be checked to see whether they produce consistent results over time. They should also be validated against another data source to show that responses measure what they claim to measure. Finally, questionnaire scores should discriminate clearly between controls and the test group.
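One common way to check consistency over time is to give the same questionnaire to the same people twice and correlate the two sets of scores (often called test-retest reliability). The sketch below computes a Pearson correlation with the standard library only. The scores are made up for illustration, and the choice of Pearson's r is an assumption, since the text does not name a specific statistic.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must vary for a correlation to be defined")
    return cov / sqrt(var_x * var_y)


# Hypothetical total scores for five people, measured two weeks apart.
time_1 = [12, 18, 9, 22, 15]
time_2 = [13, 17, 10, 21, 16]
print(round(pearson_r(time_1, time_2), 3))
```

A value close to 1 suggests that people keep roughly the same rank order across the two administrations. What counts as acceptable depends on the instrument and the interval between testings.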

How to Create a Self-Report Study

If you are creating a self-report tool for psychology research, there are a few key steps you should follow. First, decide what type of data you want to collect. This will determine the format of your questions and the type of scale you use.

Next, create a pool of questions that are clear and concise. The goal is to have several items that cover all the topics you wish to address. Finally, pilot your study with a small group to ensure it is valid and reliable.

When creating a self-report study, determine what information you need to collect and test the assessment with a group of individuals to determine if the instrument is reliable.
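When piloting an instrument, one widely used reliability check is Cronbach's alpha, which summarizes how consistently a set of items measures the same thing. The text does not prescribe this statistic, so treat the sketch below as one possible approach under that assumption. The function name `cronbach_alpha` and the pilot data are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a table with one row per respondent
    and one column per questionnaire item."""
    if not responses or len(responses) < 2:
        raise ValueError("need at least 2 respondents")
    n_items = len(responses[0])
    if n_items < 2:
        raise ValueError("need at least 2 items")
    if any(len(row) != n_items for row in responses):
        raise ValueError("every respondent must answer every item")
    # Sample variance of each item (column) and of the total scores (row sums).
    item_vars = [variance(col) for col in zip(*responses)]
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores must vary")
    return (n_items / (n_items - 1)) * (1 - sum(item_vars) / total_var)


# Made-up pilot data: five respondents, three items rated 1 to 5.
pilot = [
    [4, 5, 4],
    [2, 3, 2],
    [5, 5, 4],
    [1, 2, 1],
    [3, 3, 3],
]
print(round(cronbach_alpha(pilot), 2))
```

Alpha addresses only internal consistency. It does not show that the instrument is valid, so a pilot would usually pair it with other checks, such as comparing scores against another data source.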

Self-reporting can be a useful tool for collecting data. The benefits of self-report data include lower costs and the ability to collect data from a large number of people. However, self-report data can also be biased and prone to errors.


By Kristalyn Salters-Pedneault, PhD

Kristalyn Salters-Pedneault, PhD, is a clinical psychologist and associate professor of psychology at Eastern Connecticut State University.


5. Self-Report Measures

- Published: September 2007


Chapter 5 explores self-report (SR) measures in treatment research. It discusses types of SRs, quality of SRs (reliability, validity, sensitivity and specificity in classification, utility), selecting SR measures for outcome research, and response distortions.


In This Article Expand or collapse the "in this article" section Self-Report Tests, Measures, and Inventories in Clinical Psychology

Introduction, background information.

- Broadband Measures

- Internalizing Measures

- Stress and Trauma Measures

- Eating/Body Image Problems Measures

- Obsessive-Compulsive Measures

- Externalizing Measures

- Thought Dysfunction Measures

- Somatic Problems Measures

- Interpersonal Functioning Measures

Related Articles Expand or collapse the "related articles" section about

About related articles close popup.

Lorem Ipsum Sit Dolor Amet

Vestibulum ante ipsum primis in faucibus orci luctus et ultrices posuere cubilia Curae; Aliquam ligula odio, euismod ut aliquam et, vestibulum nec risus. Nulla viverra, arcu et iaculis consequat, justo diam ornare tellus, semper ultrices tellus nunc eu tellus.

- Diagnostic and Statistical Manual of Mental Disorders (DSM)

- Implicit Association Test (IAT)

- Personality and Health

- Personality Psychology

- Testing and Assessment

Other Subject Areas

Forthcoming articles expand or collapse the "forthcoming articles" section.

- Data Visualization

- Remote Work

- Workforce Training Evaluation

- Find more forthcoming articles...

- Export Citations

- Share This Facebook LinkedIn Twitter

Self-Report Tests, Measures, and Inventories in Clinical Psychology

By Anthony Tarescavage. Last modified: 29 November 2022. DOI: 10.1093/obo/9780199828340-0300

There are thousands of psychological tests that rely on test-takers’ reports of themselves to measure their standing on psychological constructs of interest. This annotated bibliography on self-report inventories delineates over fifty of these self-report measures. Specifically, this review includes some of the most relevant self-report assessments in the major measurement domains of personality and psychopathology. All can be administered in the traditional paper-and-pencil format. Pertinent background information on using and evaluating these tests is described next.

Ben-Porath 2012 and Costa and McCrae 2009 provide historical background information regarding the measurement of personality and psychopathology. Cronbach and Meehl 1955, Pedhazur and Schmelkin 1991a, Pedhazur and Schmelkin 1991b, Reynolds and Ramsay 2003, and Kane 2006 describe the major considerations for evaluating the reliability and validity of self-report tests. American Educational Research Association, et al. 2014 describes best practices and ethical guidelines for using psychological tests. Finally, Lee, et al. 2017 describes how to map self-report inventories onto modern dimensional models of personality and psychopathology.

American Educational Research Association, American Psychological Association, National Council on Measurement in Education, and Joint Committee on Standards for Educational and Psychological Testing. 2014. Standards for educational and psychological testing. Washington, DC: American Educational Research Association.

These standards provide information on the foundations of psychological testing, best practices in operations, and information on applying testing information.

Ben-Porath, Y. S. 2012. Self-report inventories: Assessing personality and psychopathology. In Handbook of psychology. Vol. 10, Assessment psychology. 2d ed. Edited by J. R. Graham and J. A. Naglieri, 622–644. Hoboken, NJ: John Wiley & Sons, Inc.

This comprehensive chapter discusses the origins of self-report measures of personality and psychopathology, criticisms of self-report inventories and responses to them, and the common threats to protocol validity (nonresponding, content-based invalid responding, over-reporting, and under-reporting).

Costa, P. T., and R. R. McCrae. 2009. The five-factor model and the NEO inventories. In Oxford handbook of personality assessment. Edited by J. N. Butcher, 299–322. New York: Oxford Univ. Press.

This chapter describes the most prominent model of personality, the five-factor model of personality. It also describes the NEO inventories that are most commonly used to measure this model.

Cronbach, L. J., and P. E. Meehl. 1955. Construct validity in psychological tests. Psychological Bulletin 52.4: 281–303.

DOI: 10.1037/h0040957

This seminal work provides background on one of the three primary areas of validity evidence—construct validity.

Kane, M. 2006. Content-related validity evidence in test development. In Handbook of test development. Edited by S. M. Downing and T. M. Haladyna, 131–153. Hillsdale, NJ: Lawrence Erlbaum.

This chapter provides background information on the third of three primary areas of validity evidence—content validity.

Lee, T. C., M. Sellbom, and C. J. Hopwood. 2017. Contemporary psychopathology assessment: Mapping major personality inventories onto empirical models of psychopathology. In Neuropsychological assessment in the age of evidence-based practice: Diagnostic and treatment evaluations. Edited by S. C. Bowden, 65–94. New York: Oxford Univ. Press.

This chapter discusses how self-report inventories can be used to assess contemporary dimensional models of psychopathology.

Pedhazur, E. J., and L. P. Schmelkin. 1991a. Criterion-related validation. In Measurement, design and analysis: An integrated approach. By E. J. Pedhazur and L. P. Schmelkin, 30–51. Hillsdale, NJ: Lawrence Erlbaum.

This chapter provides background information on the second of three primary areas of validity evidence—criterion validity.

Pedhazur, E. J., and L. P. Schmelkin. 1991b. Reliability. In Measurement, design and analysis: An integrated approach. By E. J. Pedhazur and L. P. Schmelkin, 81–117. Hillsdale, NJ: Lawrence Erlbaum and Associates.

This chapter provides background on evaluating the reliability of psychological tests.

Reynolds, C. R., and M. C. Ramsay. 2003. Bias in psychological assessment: An empirical review and recommendations. In Handbook of psychology. Vol. 10, Assessment psychology. Edited by J. R. Graham and J. A. Naglieri, 67–93. Hoboken, NJ: John Wiley & Sons, Inc.

This chapter provides an overview of research on bias in psychological testing, including a review of possible explanations for mean score differences across demographic groups.


Assessing psychological well-being: Self-report instruments for the NIH Toolbox


Objective: Psychological well-being (PWB) has a significant relationship with physical and mental health. As a part of the NIH Toolbox for the Assessment of Neurological and Behavioral Function, we developed self-report item banks and short forms to assess PWB. Study design and setting: Expert feedback and literature review informed the selection of PWB concepts and the development of item pools for positive affect, life satisfaction, and meaning and purpose. Items were tested with a community-dwelling US Internet panel sample of adults aged 18 and above (N = 552). Classical and item response theory (IRT) approaches were used to evaluate unidimensionality, fit of items to the overall measure, and calibrations of those items, including differential item functioning (DIF). Results: IRT-calibrated item banks were produced for positive affect (34 items), life satisfaction (16 items), and meaning and purpose (18 items). Their psychometric properties were supported based on the results of factor analysis, fit statistics, and DIF evaluation. All banks measured the concepts precisely (reliability ≥0.90) for more than 98% of participants. Conclusion: These adult scales and item banks for PWB provide the flexibility, efficiency, and precision necessary to promote future epidemiological, observational, and intervention research on the relationship of PWB with physical and mental health.


Salsman, J. M., J. S. Lai, H. C. Hendrie, Z. Butt, N. Zill, P. A. Pilkonis, C. Peterson, C. M. Stoney, P. Brouwers, and D. Cella. 2014. Assessing psychological well-being: Self-report instruments for the NIH Toolbox. Quality of Life Research. Published February 2014. ISSN: 0962-9343.

DOI: 10.1007/s11136-013-0452-3

Keywords: Life satisfaction; Meaning; Positive affect; Psychological assessment; Well-being

Funding information: This project was funded in whole or in part with federal funds from the Blueprint for Neuroscience Research and the Office of Behavioral and Social Sciences Research, National Institutes of Health, under Contract No. HHS-N-260-2006-00007-C. Preparation of this manuscript was supported in part by NIH grants KL2RR025740 from the National Center for Research Resources and 5K07CA158008-01A1 from the National Cancer Institute. The authors would like to thank the subdomain consultants, Felicia Huppert, Ph.D., Alice Carter, Ph.D., Marianne Brady, Ph.D., Dilip Jeste, MD, Colin Depp, Ph.D., and Bruce Cuthbert, Ph.D., and members of the NIH project team, Gitanjali Taneja, Ph.D., and Sarah Knox, Ph.D., who provided critical and constructive expertise during the development of the NIH Toolbox Emotion measurement battery.

A systematic review of the psychometric properties of self-report research utilization measures used in healthcare

Janet E Squires, Carole A Estabrooks, Hannah M O'Rourke, Petter Gustavsson, Christine V Newburn-Cook & Lars Wallin

Implementation Science volume 6, Article number: 83 (2011). Systematic review. Open access. Published: 27 July 2011


Background

In healthcare, a gap exists between what is known from research and what is practiced. Understanding this gap depends upon our ability to robustly measure research utilization.

Objectives

The objectives of this systematic review were: to identify self-report measures of research utilization used in healthcare, and to assess the psychometric properties (acceptability, reliability, and validity) of these measures.

Methods

We conducted a systematic review of literature reporting use or development of self-report research utilization measures. Our search included: multiple databases, ancestry searches, and a hand search. Acceptability was assessed by examining time to complete the measure and missing data rates. Our approach to reliability and validity assessment followed that outlined in the Standards for Educational and Psychological Testing.

Results

Of 42,770 titles screened, 97 original studies (108 articles) were included in this review. The 97 studies reported on the use or development of 60 unique self-report research utilization measures. Seven of the measures were assessed in more than one study. Study samples consisted of healthcare providers (92 studies) and healthcare decision makers (5 studies). No studies reported data on acceptability of the measures. Reliability was reported in 32 (33%) of the studies, representing 13 of the 60 measures. Internal consistency (Cronbach's Alpha) reliability was reported in 31 studies; values exceeded 0.70 in 29 studies. Test-retest reliability was reported in 3 studies with Pearson's r coefficients > 0.80. No validity information was reported for 12 of the 60 measures. The remaining 48 measures were classified into a three-level validity hierarchy according to the number of validity sources reported in 50% or more of the studies using the measure. Level one measures (n = 6) reported evidence from any three (out of four possible) Standards validity sources (which, in the case of single item measures, was all applicable validity sources). Level two measures (n = 16) had evidence from any two validity sources, and level three measures (n = 26) from only one validity source.
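For readers unfamiliar with the two reliability statistics named in these results, the Python sketch below shows how each is conventionally computed: Cronbach's alpha from a respondents-by-items score matrix, and Pearson's r between two administrations of the same measure. The function names and the small data sets are invented for illustration and do not come from any of the reviewed studies.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(scores):
    """Internal consistency for a list of respondents, each a list of k item scores."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of the respondents' total scores.
    total_var = variance([sum(row) for row in scores])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


def pearson_r(x, y):
    """Pearson correlation, e.g. between test and retest scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


# Made-up responses: five respondents, three items on a 1-5 scale.
responses = [[4, 5, 4], [2, 2, 3], [5, 5, 5], [3, 3, 2], [1, 2, 1]]
print(round(cronbach_alpha(responses), 2))

# Made-up total scores at two time points for the same five respondents.
print(round(pearson_r([13, 7, 15, 8, 4], [12, 8, 15, 9, 5]), 2))
```

The thresholds quoted in the results (alpha above 0.70, r above 0.80) are the values the reviewed studies reported; the sketch only shows where such numbers come from.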

Conclusions

This review reveals significant underdevelopment in the measurement of research utilization. Substantial methodological advances with respect to construct clarity, use of research utilization and related theory, use of measurement theory, and psychometric assessment are required. Also needed are improved reporting practices and the adoption of a more contemporary view of validity (i.e., the Standards) in future research utilization measurement studies.


Clinical and health services research produces vast amounts of new research every year. Despite increased access by healthcare providers and decision-makers to this knowledge, uptake into practice is slow [1, 2] and has resulted in a 'research-practice gap.'

Measuring research utilization

Recognition of, and a desire to narrow, the research-practice gap has led to the accumulation of a considerable body of knowledge on research utilization and related terms, such as knowledge translation, knowledge utilization, innovation adoption, innovation diffusion, and research implementation. Despite gains in the understanding of research utilization theoretically [3, 4], a large and rapidly expanding literature addressing the individual factors associated with research utilization [5, 6], and the implementation of clinical practice guidelines in various health disciplines [7, 8], little is known about how to robustly measure research utilization.

We located three theoretical papers explicitly addressing the measurement of knowledge utilization (of which research utilization is a component) [9–11], and one integrative review that examined the psychometric properties of self-report research utilization measures used in professions allied to medicine [12]. Within each of these papers, a need for conceptual clarity and pluralism in measurement was stressed. Weiss [11] also argued for specific foci (i.e., focus on specific studies, people, issues, or organizations) when measuring knowledge utilization. Shortly thereafter, Dunn [9] proposed a linear four-step process for measuring knowledge utilization: conceptualization (what is knowledge utilization and how it is defined and classified); methods (given a particular conceptualization, what methods are available to observe knowledge use); measures (what scales are available to measure knowledge use); and reliability and validity. Dunn specifically urged that greater emphasis be placed on step four (reliability and validity). A decade later, Rich [10] provided a comprehensive overview of issues influencing knowledge utilization across many disciplines. He emphasized the complexity of the measurement process, suggesting that knowledge utilization may not always be tied to a specific action, and that it may exist as more of an omnibus concept.

The only review of research utilization measures to date was conducted in 2003 by Estabrooks et al. [12]. The review was limited to self-report research utilization measures used in professions allied to medicine and to the specific data on validity that was extracted. That is, only data that was explicitly interpreted as validity by the original authors in the study reports was extracted as 'supporting validity evidence'. A total of 43 articles from three online databases (CINAHL, Medline, and Pubmed) comprised the final sample of articles included in the review. Two commonly used multi-item self-report measures (published in 16 papers) were identified: the Nurses Practice Questionnaire and the Research Utilization Questionnaire. An additional 16 published papers were identified that used single-item self-report questions to measure research utilization. Several problems with these research utilization measures were identified: lack of construct clarity of research utilization, lack of use of research utilization theories, lack of use of measurement theory, and finally, lack of standard psychometric assessment.

The four papers [9–12] discussed above point to a persistent and unresolved problem: an inability to robustly measure research utilization. This presents both an important and a practical challenge to researchers and decision-makers who rely on such measures to evaluate the uptake and effectiveness of research findings to improve patient and organizational outcomes. There are multiple reasons why we believe the measurement of research utilization is important. The most important reason relates to designing and evaluating the effectiveness of interventions to improve patient outcomes. Research utilization is commonly assumed to have a positive impact on patient outcomes by assisting with eliminating ineffective and potentially harmful practices, and implementing more effective (research-based) practices. However, we can only determine if patient outcomes are sensitive to varying levels of research utilization if we can first measure research utilization in a reliable and valid manner. If patient outcomes are sensitive to the use of research and we do not measure it, we, in essence, do the field more harm than good by ignoring a 'black box' of causal mechanisms that can influence research utilization. The causal mechanisms within this black box can, and should, be used to inform the design of interventions that aim to improve patient outcomes by increasing research utilization by care providers.

Study purpose and objectives

The study reported in this paper is a systematic review of the psychometric properties of self-report measures of research utilization used in healthcare. Specific objectives of this study were to: identify self-report measures of research utilization used in healthcare (i.e., used to measure research utilization by healthcare providers, healthcare decision makers, and in healthcare organizations); and assess the psychometric properties of these measures.

Study selection (inclusion and exclusion) criteria

Studies were included that met the following inclusion criteria: reported on the development or use of a self-report measure of research utilization; and the study population comprised one or more of the following groups: healthcare providers, healthcare decision makers, or healthcare organizations. We defined research utilization as the use of research-based (empirically derived) information. This information could be reported in a primary research article, review/synthesis report, or a protocol. Where the study involved the use of a protocol, we required the research basis for the protocol to be apparent in the article. We excluded articles that reported on adherence to clinical practice guidelines, the rationale being that clinical practice guidelines can be based on non-research evidence (e.g., expert opinion). We also excluded articles reporting on the use of one specific research-based practice if the overall purpose of the study was not to examine research utilization.

Search strategy for identification of studies

We searched 12 bibliographic databases; details of the search strategy are located in Additional File 1. We also hand searched the journal Implementation Science (a specialized journal in the research utilization field) and assessed the reference lists of all retrieved articles. The final set of included articles was restricted to those published in the English, Danish, Swedish, and Norwegian languages (the official languages of the research team). There were no restrictions based on when the study was undertaken or publication status.

Selection of Studies

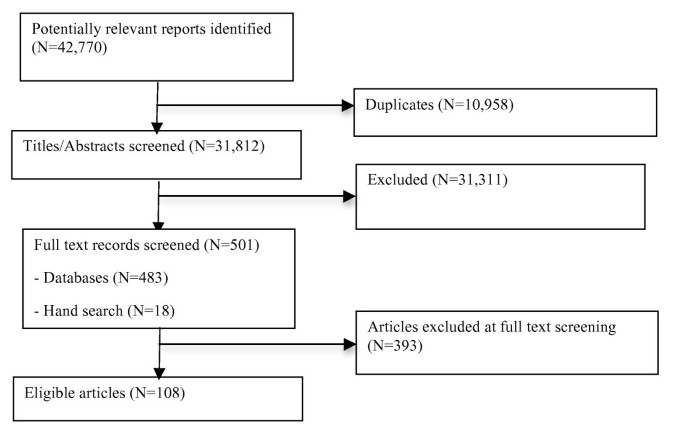

Two team members (JES and HMO) independently screened all titles and abstracts (n = 42,770). Full text copies were retrieved for 501 titles, which represented all titles identified as having potential relevance to our objectives or where there was insufficient information to make a decision as to relevance. A total of 108 articles (representing 97 original studies) comprised the final sample. Disagreements were resolved by consensus. When consensus could not be reached, a third senior member of the review team (CAE, LW) acted as an arbitrator and made the final decision (n = 9 articles). Figure 1 summarizes the results of the screening/selection process. A list of retrieved articles that were excluded can be found in Additional File 2.

Figure 1. Article screening and selection.

Data Extraction

Two reviewers (JES and HMO) performed data extraction: one reviewer extracted the data, which was then checked for accuracy by a second reviewer. We extracted data on: year of publication, study design, setting, sampling, subject characteristics, methods, the measure of research utilization used, substantive theory, measurement theory, responsiveness (the extent to which the measure can assess change over time), reliability (information on variances and standard deviations of measurement errors, item response theory test information functions, and reliability coefficients were extracted where they existed), reported statements of traditional validity (content validity, criterion validity, construct validity), and study findings reflective of the four sources of validity evidence (content, response processes, internal structure, and relations to other variables) outlined in the Standards for Educational and Psychological Testing (the Standards) [13]. Content evidence refers to the extent to which the items in a self-report measure adequately represent the content domain of the concept or construct of interest. Response processes evidence refers to how respondents interpret, process, and elaborate upon item content and whether this behaviour is in accordance with the concept or construct being measured. Internal structure evidence examines the relationships between the items on a self-report measure to evaluate its dimensionality. Relations to other variables evidence provides the fourth source of validity evidence. External variables may include measures of criteria that the concept or construct of interest is expected to predict, as well as relationships to other scales hypothesized to measure the same concepts or constructs, and variables measuring related or different concepts or constructs [13]. In the Standards, validity is a unitary construct in which multiple evidence sources contribute to construct validity. A higher number of validity sources indicates stronger construct validity. An overview of the Standards approach to reliability and validity assessment is in Additional File 3. All disagreements in data extraction were resolved by consensus.

There are no universal criteria to grade the quality of self-report measures. Therefore, in line with other recent measurement reviews [14, 15], we did not use restrictive criteria to rate the quality of each study. Instead, we focused on performing a comprehensive assessment of the psychometric properties of the scores obtained using the research utilization measures reported in each study. In performing this assessment, we adhered to the Standards, which are considered best practice in the field of psychometrics [16]. Accordingly, we extracted data on all study results that could be grouped according to the Standards' critical reliability information and four validity evidence sources. To assess relations to other variables, we identified a priori (based on commonly used research utilization theories and systematic reviews) established relationships between research utilization and other (external) variables (see Additional File 3). The external variables included: individual characteristics (e.g., attitude towards research use), contextual characteristics (e.g., role), organizational characteristics (e.g., hospital size), and interventions (e.g., use of reminders). All relationships between research use and external variables in the final set of included articles were then interpreted as supporting or refuting validity evidence. A relationship was coded as 'supporting validity evidence' if it was in the same direction and had the significance predicted, and as 'refuting validity evidence' if it was in the opposite direction or did not have the significance predicted.
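The coding rule for relations to other variables can be illustrated with a minimal Python sketch. The `Relationship` record, its field names, and the "+"/"-" direction labels are hypothetical conveniences introduced here; the review itself does not describe any software used for coding.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Relationship:
    """One reported relationship between research use and an external variable.

    Field names and the "+"/"-" labels are illustrative assumptions.
    """
    predicted_direction: str      # direction expected a priori, "+" or "-"
    predicted_significant: bool   # whether significance was expected
    observed_direction: str       # direction reported in the study
    observed_significant: bool    # whether the study reported significance


def code_validity_evidence(rel: Relationship) -> str:
    """Return 'supporting' only when both direction and significance match
    the prediction; any mismatch is coded as 'refuting'."""
    same_direction = rel.observed_direction == rel.predicted_direction
    same_significance = rel.observed_significant == rel.predicted_significant
    return "supporting" if same_direction and same_significance else "refuting"


# Hypothetical example: a positive, significant association was predicted
# between attitude towards research use and research utilization.
as_predicted = Relationship("+", True, "+", True)
not_significant = Relationship("+", True, "+", False)
opposite = Relationship("+", True, "-", True)
```

Under these assumptions, `code_validity_evidence(as_predicted)` yields `'supporting'`, while the other two examples yield `'refuting'`.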

Data Synthesis

The findings from the review are presented in narrative form. To synthesize the large volume of data extracted on validity, we developed a three-level hierarchy of self-report research utilization measures based on the number of validity sources reported in 50% or more of the studies for each measure. In the Standards , no one source of validity evidence is considered always superior to the other sources. Therefore, in our hierarchy, level one, two, and three measures provided evidence from any three, two, and one validity sources respectively. In the case of single-item measures, only three validity sources are applicable; internal structure validity evidence is not applicable as it assesses relationships between items. Therefore, a single-item measure within level one has evidence from all applicable validity sources.
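The hierarchy can be sketched in Python as follows. This is an illustration under stated assumptions rather than the review team's procedure: each study is represented as a set of the validity sources it reported, a measure with evidence from all four sources is assumed to fall in level one, and a measure with no source meeting the 50% threshold is left unclassified (`None`). All function and variable names are hypothetical.

```python
from typing import Optional

VALIDITY_SOURCES = (
    "content",
    "response_processes",
    "internal_structure",
    "relations_to_other_variables",
)


def sources_meeting_threshold(studies: list, single_item: bool = False,
                              threshold: float = 0.5) -> set:
    """Return the validity sources reported in at least `threshold` of studies.

    `studies` holds one set of source names per study of the measure.
    Internal structure is skipped for single-item measures.
    """
    applicable = [
        s for s in VALIDITY_SOURCES
        if not (single_item and s == "internal_structure")
    ]
    n_studies = len(studies)
    met = set()
    for source in applicable:
        n_reporting = sum(1 for study in studies if source in study)
        if n_studies and n_reporting / n_studies >= threshold:
            met.add(source)
    return met


def hierarchy_level(studies: list, single_item: bool = False) -> Optional[int]:
    """Map the count of qualifying sources to level 1, 2, or 3."""
    k = len(sources_meeting_threshold(studies, single_item))
    if k >= 3:   # assumption: four sources also count as level one
        return 1
    if k == 2:
        return 2
    if k == 1:
        return 3
    return None  # assumption: no qualifying source means unclassified


# Hypothetical measure assessed in two studies.
example_studies = [
    {"content", "relations_to_other_variables"},
    {"content"},
]
example_level = hierarchy_level(example_studies)
```

In this hypothetical example, content evidence appears in both studies and relations to other variables evidence in exactly half of them, so two sources meet the threshold and the measure falls in level two.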

Objective 1: Identification and characteristics of self-report research utilization measures used in healthcare

In total, 60 unique self-report research utilization measures were identified. We grouped them into 10 classes as follows:

1. Nurses Practice Questionnaire (n = 1 Measure)

2. Research Utilization Survey (n = 1 Measure)

3. Edmonton Research Orientation Survey (n = 1 Measure)

4. Knott and Wildavsky Standards (n = 1 Measure)

5. Other Specific Practices Indices (n = 4 Measures) (See Additional File 4)

6. Other General Research Utilization Indices (n = 10 Measures) (See Additional File 4)

7. Past, Present, Future Use (n = 1 Measure)

8. Parahoo's Measure (n = 1 Measure)

9. Estabrooks' Kinds of Research Utilization (n = 1 Measure)

10. Other Single-Item Measures (n = 39 Measures)

Table 1 provides a description of each class of measures. Classes one through six contain multiple-item measures, while classes seven through ten contain single-item measures; similar proportions of articles reported multi- and single-item measures (n = 51 and n = 59 respectively; two articles reported both multi- and single-item measures). Only seven measures were assessed in multiple studies: Nurses Practice Questionnaire; Research Utilization Survey; Edmonton Research Orientation Survey; a Specific Practice Index [17, 18]; Past, Present, Future Use; Parahoo's Measure; and Estabrooks' Kinds of Research Utilization. All study reports claimed to measure research utilization; however, 13 of the 60 measures identified were proxy measures of research utilization. That is, they measure variables related to using research (e.g., reading research articles) but not research utilization directly. The 13 proxy measures are: Nurses Practice Questionnaire, Research Utilization Questionnaire, Edmonton Research Orientation Survey, and the ten Other General Research Utilization Indices.
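The counts reported for the ten classes can be cross-checked with a short Python tally. The class names and per-class counts are taken from the list above; the data layout is hypothetical, and the sketch assumes that the "Research Utilization Questionnaire" named among the proxy measures corresponds to the Research Utilization Survey class.

```python
# (class name, number of measures, item format) in the order listed above.
CLASSES = [
    ("Nurses Practice Questionnaire", 1, "multi"),
    ("Research Utilization Survey", 1, "multi"),
    ("Edmonton Research Orientation Survey", 1, "multi"),
    ("Knott and Wildavsky Standards", 1, "multi"),
    ("Other Specific Practices Indices", 4, "multi"),
    ("Other General Research Utilization Indices", 10, "multi"),
    ("Past, Present, Future Use", 1, "single"),
    ("Parahoo's Measure", 1, "single"),
    ("Estabrooks' Kinds of Research Utilization", 1, "single"),
    ("Other Single-Item Measures", 39, "single"),
]

# Classes described as proxy measures (assumption: the Research Utilization
# Questionnaire is the measure in the Research Utilization Survey class).
PROXY_CLASSES = {
    "Nurses Practice Questionnaire",
    "Research Utilization Survey",
    "Edmonton Research Orientation Survey",
    "Other General Research Utilization Indices",
}

total_measures = sum(n for _, n, _ in CLASSES)
multi_item_measures = sum(n for _, n, fmt in CLASSES if fmt == "multi")
single_item_measures = sum(n for _, n, fmt in CLASSES if fmt == "single")
proxy_measures = sum(n for name, n, _ in CLASSES if name in PROXY_CLASSES)
```

The tally reproduces the 60 measures and 13 proxy measures stated in the text, with 18 multiple-item and 42 single-item measures.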