
2-Types of Sources

3. Fact or Opinion

Thinking about the reason an author created a source can be helpful because that reason dictated the kind of information he/she chose to include. Depending on that purpose, the author may have included factual, analytical, and objective information. Or it may have suited his/her purpose to include subjective, and therefore less factual and analytical, information. The author’s reason for producing the source also determined whether he/she included more than one perspective or just his/her own.

Authors typically want to do at least one of the following:

- Inform and educate

- Persuade

- Sell services or products

- Entertain

Combined Purposes

Sometimes authors have a combination of purposes, as when a marketer decides he can sell more smartphones with an informative sales video that also entertains us. The same is true when a singer writes and performs a song that entertains us but that she intends to make available for sale. Most scholarly writing also combines purposes.

In scholarly writing, authors certainly want to inform and educate their audiences. But they also want to persuade their audiences that what they are reporting and/or postulating is a true description of a situation, event, or phenomenon, or a valid argument for why their audience should take a particular action. In this blend of scholarly authors’ purposes, the intent to educate and inform is considered to outweigh the intent to persuade.

Why Intent Matters

An author’s intent usually affects how useful his/her information can be to your research project, depending on which information need you are trying to meet. For instance, when you’re looking for sources that will help you decide on an answer to your research question, or for evidence supporting that answer to share with your audience, you will want the author’s main purpose to have been to inform or educate his/her audience. That’s because, with that intent, he/she is likely to have used:

- Facts where possible.

- Multiple perspectives instead of just his/her own.

- Little subjective information.

- Seemingly unbiased, objective language, with citations showing where he/she got the information.

You want that kind of source when answering your research question or explaining your answer because all of those characteristics lend credibility to the argument you are making with your project. Both you and your audience will simply find the argument easier to believe, and will have more confidence in it, when you include those types of sources.

Sources whose authors intend only to persuade won’t meet your information need for an answer to your research question or for evidence with which to convince your audience. That’s because such authors don’t always confine themselves to facts. Instead, they often state their opinions without backing them up with evidence. If you used those sources, your readers would notice and would be less likely to believe your argument.

Fact vs. Opinion vs. Objective vs. Subjective

Need to brush up on the differences between fact, objective information, subjective information, and opinion?

Fact – Facts are useful to inform or make an argument.

- The United States was established in 1776.

- Acids have lower pH levels than alkaline solutions.

- Beethoven had a reputation as a virtuoso pianist.

Opinion – Opinions are useful to persuade, but careful readers and listeners will notice and demand evidence to back them up.

- That was a good movie.

- Strawberries taste better than blueberries.

- Timothee Chalamet is the sexiest actor alive.

- The death penalty is wrong.

- Beethoven’s reputation as a virtuoso pianist is overrated.

Objective – Objective information reflects a research finding or multiple perspectives that are not biased.

- “Several studies show that an active lifestyle reduces the risk of heart disease and diabetes.”

- “Studies from the Brown University Medical School show that twenty-somethings eat 25 percent more fast-food meals at this age than they did as teenagers.”

Subjective – Subjective information presents one person or organization’s perspective or interpretation. Subjective information can be meant to distort, or it can reflect educated and informed thinking. All opinions are subjective, but some are backed up with facts more than others.

- “The simple truth is this: As human beings, we were meant to move.”

- “In their thirties, women should stock up on calcium to ensure strong, dense bones and to ward off osteoporosis later in life.”*

*In this quote, it’s mostly the “should” that makes it subjective. The objective version of that quote would read something like: “Studies have shown that women who begin taking calcium in their 30s show stronger bone density and fewer repercussions of osteoporosis than women who did not take calcium at all.” But other data might show complications from taking calcium. That possibility is why drawing a conclusion that requires a “should” makes the statement subjective.

Choosing & Using Sources: A Guide to Academic Research Copyright © 2015 by Teaching & Learning, Ohio State University Libraries is licensed under a Creative Commons Attribution 4.0 International License , except where otherwise noted.



J Indian Assoc Pediatr Surg, v.24(1); Jan-Mar 2019

Formulation of Research Question – Stepwise Approach

Simmi K. Ratan

Department of Pediatric Surgery, Maulana Azad Medical College, New Delhi, India

1 Department of Community Medicine, North Delhi Municipal Corporation Medical College, New Delhi, India

2 Department of Pediatric Surgery, Batra Hospital and Research Centre, New Delhi, India

Formulating a research question (RQ) is essential before starting any research. An RQ aims to explore an existing uncertainty in an area of concern and points to a need for deliberate investigation, so it is important to formulate a good one. The present paper discusses a stepwise approach to formulating an RQ. The characteristics of a good RQ are summarized by the acronym “FINERMAPS”: feasible, interesting, novel, ethical, relevant, manageable, appropriate, potential value, publishability, and systematic. An RQ can take different forms depending on the aspect to be evaluated. Accordingly, RQs can be of different types, such as those concerning the existence of a phenomenon, description and classification, composition, relationship, comparison, and causality. To develop an RQ, one begins by identifying the subject of interest and doing preliminary research on it. The researcher then defines what still needs to be known about that subject and assesses the implied questions. After narrowing the focus and scope of the research subject, the researcher frames an RQ and then evaluates it. Moving from conception to formulation of an RQ is thus a very systematic process that has to be performed meticulously, because research guided by such a question can have a wide impact on social and health research by leading to policies that benefit larger populations.

INTRODUCTION

A good research question (RQ) forms the backbone of good research, which in turn is vital in unraveling mysteries of nature and giving insight into a problem.[ 1 , 2 , 3 , 4 ] An RQ identifies the problem to be studied and guides the choice of methodology. It also leads to the development of an appropriate hypothesis (Hs). An RQ thus explores an existing uncertainty in an area of concern and points to a need for deliberate investigation. A good RQ helps support a focused, arguable thesis and the construction of a logical argument. Formulating a good RQ is therefore one of the first critical steps in the research process, especially in social and health research, whose aim is the systematic generation of knowledge that can be used to promote, restore, maintain, and/or protect the health of individuals and populations.[ 1 , 3 , 4 ] Depending on its purpose, research can be classified as action, applied, basic, clinical, empirical, administrative, theoretical, qualitative, or quantitative.[ 2 ]

Research plays an important role in developing clinical practices and instituting new health policies. Because an important goal of research is to generate new claims, it needs a logical, scientific approach.[ 1 ]

CHARACTERISTICS OF GOOD RESEARCH QUESTION

“The most successful research topics are narrowly focused and carefully defined but are important parts of a broad-ranging, complex problem.”

A good RQ is an asset as it:

- Details the problem statement

- Further describes and refines the issue under study

- Adds focus to the problem statement

- Guides data collection and analysis

- Sets the context of the research.

When writing an RQ, it is also important to check whether it is relevant to the current time frame and conditions. An example is a question on the impact of the “odd-even” vehicle formula in decreasing the level of particulate air pollution in various districts of Delhi.

The characteristics of a good RQ are represented by the acronym FINERMAPS:[ 5 ]

- Feasible

- Interesting

- Novel

- Ethical

- Relevant

- Manageable

- Appropriate

- Potential value and publishability

- Systematic.

Feasible (F): The RQ should be within the investigator's ability to carry out. It should be backed by an appropriate number of subjects and an appropriate methodology, as well as the time and funds needed to reach conclusions. One needs to be realistic about the scope and scale of the project and must have access to the people, equipment, documents, statistics, and so on that it requires. One should be able to relate the concepts of the RQ to observations, phenomena, indicators, or variables that one can access, and should be confident that data collection and the rest of the project can be completed within the limited time and resources available. Sometimes an RQ appears feasible, but once fieldwork or the study begins, it proves otherwise. In this situation, it is important to write up the problems honestly and to reflect on what has been learned. Discussing the work with more experienced colleagues or the supervisor helps in developing a contingency plan that anticipates possible problems and identifies possible solutions.

Interesting (I): It is essential to have a genuine, well-grounded interest in one's RQ and to be able to explore it and support it with academic and intellectual debate. This interest will motivate one to keep going with the RQ.

Novel (N): The question should not simply copy questions investigated by other workers but should leave scope for new investigation. It may aim to confirm or refute established findings, establish new facts, or find new aspects of established facts, and it should show the researcher's imagination. Above all, the question has to be simple and clear. A complex question can hide unclear thinking and lead to a confused research process. A very elaborate RQ, or one that is not differentiated into distinct parts, may hide concepts that are contradictory or irrelevant, so the question needs to be clear and thought through. Having one key question with several subcomponents will guide your research.

Ethical (E): This is the foremost requirement of any RQ, and clearance from the appropriate authorities is mandatory before starting research on the question. Further, the RQ should be designed to minimize the risk of harm to participants, protect their privacy and maintain their confidentiality, and give participants the right to withdraw from the research. It should also help the researcher avoid deceptive practices.

Relevant (R): The question should be of academic and intellectual interest to people in the field you have chosen to study. It should preferably arise from issues raised in the current situation, the literature, or practice, and it should establish a clear purpose for the research in relation to the chosen field. Examples of relevant purposes include filling a gap in knowledge, analyzing academic assumptions or professional practice, monitoring a development in practice, comparing different approaches, and testing theories within a specific population.

Manageable (M): This is similar in essence to feasibility but mainly means that the resulting research can be managed by the researcher.

Appropriate (A): RQ should be appropriate logically and scientifically for the community and institution.

Potential value and publishability (P): The study should be able to make a significant health impact on clinical and community practice. Research should also aim for a significant economic impact by reducing unnecessary or excessive costs. Furthermore, the proposed study should exist within a clinical, consumer, or policy-making context that is amenable to evidence-based change. Above all, a good RQ must address a topic that has clear implications for resolving important dilemmas in health and health-care decisions made by one or more stakeholder groups.

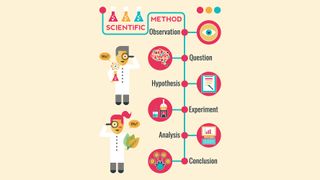

Systematic (S): Research is structured, with specified steps taken in a specified sequence in accordance with a well-defined set of rules, though this does not rule out creative thinking.

Example of RQ: Would the topical skin application of oil as a skin barrier reduce hypothermia in preterm infants? This question meets the criteria of a good RQ: it is feasible, interesting, novel, ethical, and relevant.

Types of research question

An RQ can take different forms depending on the aspect to be evaluated.[ 6 ] For example:

- Existence: This type is designed to confirm the existence of a particular phenomenon or to rule out rival explanations, for example, can neonates perceive pain?

- Description and classification: This type of question encompasses statements of uniqueness, for example, what are the characteristics and types of neuropathic bladder?

- Composition: This calls for breaking down a whole into its components, for example, what are the stages of reflux nephropathy?

- Relationship: This evaluates the relationship between variables, for example, the association between tumor rupture and recurrence rates in Wilms tumor.

- Descriptive–comparative: The researcher is expected to ensure that the groups are the same in all respects except the issue in question, for example, are germ cell tumors occurring in the gonads more aggressive than those occurring at extragonadal sites?

- Causality: Does deletion of p53 lead to a worse outcome in patients with neuroblastoma?

- Causality–comparative: Such questions frequently aim to compare the effects of two rival treatments, for example, does adding surgical resection to chemotherapy improve survival in children with neuroblastoma compared with chemotherapy alone?

- Causality–comparative interactions: Is the survival benefit of immunotherapy over chemotherapy in Stage IV S neuroblastoma greater in the setting of an adverse genetic profile than without it? (Does X cause more change in Y than Z does under certain conditions but not under others?)

How to develop a research question

- Begin by identifying a broader subject of interest that lends itself to investigation, for example, hormone levels among patients with hypospadias.

- Do preliminary research on the general topic to find out what research has already been done and what literature already exists.[ 7 ] Begin with “information gaps”: What do you already know about the problem? (e.g., studies reporting testosterone levels among patients with hypospadias)

- What do you still need to know? (e.g., levels of other reproductive hormones among patients with hypospadias)

- What are the implied questions? The need to know about a problem will lead to a few implied questions. Each general question should lead to more specific questions (e.g., how do hormone levels in isolated hypospadias differ from those in the normal population?)

- Narrow the scope and focus of the research (e.g., assessment of reproductive hormone levels among patients with isolated hypospadias and those with hypospadias and associated anomalies)

- Is the RQ clear? With so much research available on any given topic, RQs must be as clear as possible in order to help the writer direct his or her research.

- Is the RQ focused? RQs must be specific enough to be well covered in the space available.

- Is the RQ complex? RQs should not be answerable with a simple “yes” or “no” or by easily found facts. They should, instead, require both research and analysis on the part of the writer.

- Is the RQ of interest to the researcher and potentially useful to others? Is it a new issue or problem that needs to be solved, or does it attempt to shed light on a previously researched topic?

- Is the RQ researchable? Consider the available time frame and the required resources. Is the methodology to conduct the research feasible?

- Is the RQ measurable, and will the process produce data that can be supported or contradicted?

- Is the RQ too broad or too narrow?

- Create Hs: After formulating the RQ, consider where the research is likely to progress. What kind of argument is likely to be made or supported? What would it mean if the research disputed the planned argument? At this step, one is well on the way to having a focus for the research and constructing a thesis. An Hs consists of more specific predictions about the nature and direction of the relationship between two variables. It is a predictive statement about the outcome of the research, and it dictates the method and design of the research.[ 1 ]

- Understand the implications of your research: This is important for application, that is, whether the research fills a gap in knowledge and how its results have practical implications, for example, for developing health policies or improving educational policies.[ 1 , 8 ]

Brainstorm/Concept map for formulating research question

- First, identify what types of studies have been done in the past.

- Is there a unique area that is yet to be investigated or is there a particular question that may be worth replicating?

- Begin to narrow the topic by asking open-ended “how” and “why” questions

- Evaluate the question

- Develop a Hypothesis (Hs)

- Write down the RQ.

Writing down the research question

- State the question in your own words

- Write down the RQ as completely as possible (for example, evaluation of the reproductive hormonal profile in children presenting with isolated hypospadias).

- Divide your question into concepts, narrowing them to two or three (reproductive hormonal profile, isolated hypospadias, and the implied comparison with normal children or those with non-isolated hypospadias)

- Specify the population to be studied (children with isolated hypospadias)

- Refer to the exposure or intervention to be investigated, if any

- Reflect the outcome of interest (hormonal profile).
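The steps for writing down an RQ amount to a small checklist: a population, an outcome, and optionally an exposure or intervention and an implied comparison. As a hypothetical illustration only (the class and method names are invented here, not taken from the article), a Python sketch can hold those components and flag any required one that has been left blank, using the hypospadias example from the text:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ResearchQuestion:
    """Hypothetical container for the components of a written-down RQ."""

    population: str                      # who is studied
    outcome: str                         # outcome of interest
    intervention: Optional[str] = None   # exposure or intervention, if any
    comparison: Optional[str] = None     # implied comparison group, if any

    def missing_components(self) -> List[str]:
        """Return the names of required components that are empty."""
        missing = []
        if not self.population.strip():
            missing.append("population")
        if not self.outcome.strip():
            missing.append("outcome")
        return missing

    def summary(self) -> str:
        """Join the filled-in components into one line for review."""
        parts = [f"Population: {self.population}"]
        if self.intervention:
            parts.append(f"Intervention/exposure: {self.intervention}")
        if self.comparison:
            parts.append(f"Comparison: {self.comparison}")
        parts.append(f"Outcome: {self.outcome}")
        return "; ".join(parts)


rq = ResearchQuestion(
    population="children with isolated hypospadias",
    outcome="reproductive hormonal profile",
    comparison="normal children or those with non-isolated hypospadias (implied)",
)
print(rq.missing_components())  # []
print(rq.summary())
```

The sketch does not judge whether a question is good; it only makes a missing component visible before the RQ is evaluated against criteria such as FINERMAPS.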

Another example of a research question

Would the topical skin application of oil as a skin barrier reduce hypothermia in preterm infants? Apart from fulfilling the criteria of a good RQ (feasible, interesting, novel, ethical, and relevant), this question also specifies the intervention (topical skin application of oil), the rationale for the intervention (as a skin barrier), the population to be studied (preterm infants), and the outcome (reduction of hypothermia).

Other important points to heed while framing a research question

- Make reference to a population when a relationship is expected among a certain type of subjects

- RQs and Hs should be made as specific as possible

- Avoid words or terms that do not add to the meaning of RQs and Hs

- Stick to what will be studied, not implications

- Name the variables in the order in which they occur/will be measured

- Avoid the words “significant” and “prove”

- Avoid using two different terms to refer to the same variable.

Some other problems and their possible solutions are discussed in Table 1.

Table 1: Potential problems and solutions while framing a research question

GOING BEYOND FORMULATION OF RESEARCH QUESTION – THE PATH AHEAD

Once the RQ is formulated, an Hs can be developed. An Hs is the transformation of an RQ into an operational analog.[ 1 ] It is a statement of the prediction one makes about the phenomenon to be examined.[ 4 ] Often, for a case–control study, a null Hs is generated, which the data later either reject or fail to reject.

A strong Hs should have the following characteristics:

- Give insight into an RQ

- Be testable and measurable by the proposed experiments

- Have a logical basis

- Follow the most likely outcome, not the exceptional outcome.

EXAMPLES OF RESEARCH QUESTION AND HYPOTHESIS

Research question-1

- Does a reduced gap between the two segments of the esophagus in patients with esophageal atresia reduce the mortality and morbidity of such patients?

Hypothesis-1

- A reduced gap between the two segments of the esophagus in patients with esophageal atresia reduces the mortality and morbidity of such patients

- In pediatric patients with esophageal atresia, a gap of <2 cm between the two segments of the esophagus and proper mobilization of the proximal pouch reduce the morbidity and mortality among such patients.

Research question-2

- Does the application of mitomycin C improve the outcome in patients with corrosive esophageal strictures?

Hypothesis-2

In patients aged 2–9 years with corrosive esophageal strictures, 34 applications of mitomycin C at a dosage of 0.4 mg/ml for 5 min over a period of 6 months improve the outcome in terms of symptomatic and radiological relief. Some other examples of good and bad RQs are shown in Table 2.

Table 2: Examples of a few bad (left-hand column) and a few good (right-hand column) research questions

RESEARCH QUESTION AND STUDY DESIGN

The RQ determines the study design. For example, a question aimed at finding the incidence of a disease in a population will lead to a survey, whereas one aimed at finding risk factors for a disease will need a case–control or cohort study. An RQ may also culminate in a clinical trial,[ 9 , 10 ] for example, one on the effect of folic acid tablets administered in the periconceptional period in decreasing the incidence of neural tube defects. The Hs is framed accordingly.

Appropriate statistical calculations are performed to determine the sample size. The inclusion and exclusion criteria and the time frame of the research are carefully defined, as are the detailed subject information sheet and pro forma. The research is then set in motion. A few examples of research methodology guided by the RQ follow:

- Incidence of anorectal malformations among adolescent females (hospital-based survey)

- Risk factors for the development of spontaneous pneumoperitoneum in pediatric patients (case–control design and cohort study)

- Effect of technique of extramucosal ureteric reimplantation without the creation of submucosal tunnel for the preservation of upper tract in bladder exstrophy (clinical trial).
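The methodology paragraph says that appropriate statistical calculations determine the sample size but does not name a method. As one common example (an assumption here, not necessarily what the authors use), a descriptive survey that estimates a single proportion, such as an incidence, is often sized with Cochran's formula n = z² × p × (1 − p) / d², where p is the expected proportion, d the acceptable margin of error, and z the standard normal value for the chosen confidence level (1.96 for 95%). A minimal Python sketch, assuming simple random sampling from a large population:

```python
import math


def sample_size_proportion(p: float, margin: float, z: float = 1.96) -> int:
    """Minimum sample size to estimate a proportion p within +/- margin.

    Cochran's formula n = z^2 * p * (1 - p) / margin^2, rounded up.
    Illustrative only: assumes simple random sampling, a large population,
    and no adjustment for non-response.
    """
    if not 0 < p < 1:
        raise ValueError("p must be strictly between 0 and 1")
    if margin <= 0:
        raise ValueError("margin must be positive")
    n = (z ** 2) * p * (1 - p) / margin ** 2
    # Round up: a fractional participant is not possible, and rounding
    # down would give slightly less precision than requested.
    return math.ceil(n)


# With no prior estimate of p, 0.5 gives the largest (most conservative) n.
print(sample_size_proportion(0.5, 0.05))  # 385
```

Using p = 0.5 when no prior estimate exists maximizes p × (1 − p) and so gives the most conservative size; a real protocol would also account for finite population size, expected dropout, and any comparison between groups, which this sketch ignores.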

The results of the research are then available for wider application in health and social life.

CONCLUSION

A good RQ needs a thorough literature search and deep insight into the specific area or problem to be investigated. An RQ has to be focused yet simple. Research guided by such a question can have a wide impact on social and health research by leading to the formulation of policies that benefit larger populations.

Financial support and sponsorship

Conflicts of interest

There are no conflicts of interest.

REFERENCES

Research Basics


A good working definition of research might be:

Research is the deliberate, purposeful, and systematic gathering of data, information, facts, and/or opinions for the advancement of personal, societal, or overall human knowledge.

Based on this definition, we all do research all the time. Most of this research is casual. Asking friends what they think of different restaurants, looking up online reviews of various products, and learning more about celebrities are all forms of research.

Formal research includes the type of research most people think of when they hear the term “research”: scientists in white coats working in a fully equipped laboratory. But formal research is a much broader category than just this. Most people will never do laboratory research after graduating from college, but almost everybody will have to do some sort of formal research at some point in their careers.

So What Do We Mean By “Formal Research?”

Casual research is inward facing: it’s done to satisfy our own curiosity or meet our own needs, whether that’s choosing a reliable car or figuring out what to watch on TV. Formal research is outward facing. While it may satisfy our own curiosity, it’s primarily intended to be shared in order to achieve some purpose. That purpose could be anything: finding a cure for cancer, securing funding for a new business, improving some process at your workplace, proving the latest theory in quantum physics, or even just getting a good grade in your Humanities 200 class.

What sets formal research apart from casual research is the documentation of where you gathered your information. This is done in the form of “citations” and “bibliographies.” Citing sources is covered in the section “Citing Your Sources.”

Formal research also follows certain common patterns depending on what the research is trying to show or prove. These are covered in the section “Types of Research.”


- Last Updated: Dec 21, 2023 3:49 PM

- URL: https://guides.library.iit.edu/research_basics


Types of Evidence

Recognizing the type of evidence an argument uses is helpful for two reasons:

- This can help you avoid getting “lost” in the words; if you’re reading actively and recognizing what type of evidence you’re looking at, then you’re more likely to stay focused.

- Different types of evidence are often associated with specific types of assumptions or flaws, so if a question presents a classic evidence structure, you may be able to find the answer more quickly.

Common Evidence Types

Examples as evidence

- [Paola is the best athlete in the state.] After all, Paola has won medals in 8 different Olympic sports.

- Paola beat last year's decathlon state champion on Saturday, so [she is the best athlete in the state].

What others say

- [Paola is the best athlete in the state.] We know this because the most highly-acclaimed sports magazine has named her as such.

- Because the population voted Paola the Best Athlete in the state in a landslide, [it would be absurd to claim that anyone else is the best athlete in the state].

Using the past

- [Paola is the best athlete in the state.] She must be, since she won the state championships last year, two years ago, three years ago, and four years ago.

- [Paola is the best athlete in the state], because she won the most athletic awards. Look at Jude, who's currently the Best Chef in the State because he won the most cooking awards.

Generalizing from a Sample

- [Paola is the best athlete in the state], because she won every local tournament in every spring sport.

Common Rebuttal Structures

Counterexamples, alternate possibilities, other types of argument structures, conditional.

- Penguins win → Flyers make big mistake

- Flyers make big mistake → coach tired

- Friday → coach is not tired
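The three conditionals above can be chained mechanically. The sketch below is a minimal Python illustration, assuming the three lines are the argument’s premises and that the only inference rules are applying a conditional forward and using its contrapositive (if A → B, then not-B → not-A). The statement labels are shorthand invented for this example.

```python
from typing import List, Set, Tuple

# Premises from the example, written as (if, then) pairs.
# The labels are shorthand invented for this sketch.
RULES: List[Tuple[str, str]] = [
    ("penguins_win", "flyers_big_mistake"),
    ("flyers_big_mistake", "coach_tired"),
    ("friday", "not coach_tired"),
]


def negate(statement: str) -> str:
    """Return the negation of a statement label."""
    return statement[4:] if statement.startswith("not ") else "not " + statement


def derive(facts: Set[str]) -> Set[str]:
    """Apply every rule and its contrapositive until no new statement follows."""
    # A -> B is logically equivalent to not-B -> not-A.
    implications = RULES + [(negate(b), negate(a)) for a, b in RULES]
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premise, conclusion in implications:
            if premise in known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(derive({"friday"})))
```

Starting from “it is Friday,” the chain yields that the coach is not tired, so the Flyers do not make a big mistake, so the Penguins do not win. The rules license nothing in the reverse direction: starting from “the Penguins did not win,” `derive` adds no new conclusions, which is why reversing a conditional is a classic flaw.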

Causation based on correlation


Chapter 4 Theories in Scientific Research

As we know from previous chapters, science is knowledge represented as a collection of “theories” derived using the scientific method. In this chapter, we examine what a theory is, why we need theories in research, what the building blocks of a theory are, how to evaluate theories, and how to apply theories in research. We also present illustrative examples of five theories frequently used in social science research.

Theories are explanations of a natural or social behavior, event, or phenomenon. More formally, a scientific theory is a system of constructs (concepts) and propositions (relationships between those constructs) that collectively presents a logical, systematic, and coherent explanation of a phenomenon of interest within some assumptions and boundary conditions (Bacharach 1989). [1]

Theories should explain why things happen, rather than just describe or predict. Note that it is possible to predict events or behaviors using a set of predictors, without necessarily explaining why such events are taking place. For instance, market analysts predict fluctuations in the stock market based on market announcements, earnings reports of major companies, and new data from the Federal Reserve and other agencies, using previously observed correlations. Prediction requires only correlations. In contrast, explanations require causations, or an understanding of cause-effect relationships. Establishing causation requires three conditions: (1) correlation between two constructs, (2) temporal precedence (the cause must precede the effect in time), and (3) rejection of alternative hypotheses (through testing). Scientific theories are different from theological, philosophical, or other explanations in that scientific theories can be empirically tested using scientific methods.
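The gap between prediction and explanation can be seen in a small numeric sketch. Below, a hypothetical third variable `z` (say, daily temperature) drives both `x` (ice-cream sales) and `y` (swimming accidents). The data are invented for illustration. The two variables are perfectly correlated, so `x` predicts `y` well, yet neither causes the other; this is exactly the kind of alternative explanation that condition (3) requires us to rule out.

```python
import math
from typing import Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(xs, ys))
    # Root of the summed squared deviations; the 1/n factors cancel in the ratio.
    dev_x = math.sqrt(sum((a - mean_x) ** 2 for a in xs))
    dev_y = math.sqrt(sum((b - mean_y) ** 2 for b in ys))
    return cov / (dev_x * dev_y)


# Hypothetical data: z is a common cause of both x and y.
z = [1, 2, 3, 4, 5, 6]            # e.g., daily temperature
x = [2 * v + 1 for v in z]        # e.g., ice-cream sales, driven only by z
y = [3 * v - 2 for v in z]        # e.g., swimming accidents, driven only by z

r = pearson_r(x, y)
print(f"r(x, y) = {r:.3f}")
```

The printed correlation is 1.000, which is all that prediction needs. But `y` is computed from `z` alone, so intervening on `x` would leave `y` unchanged: the correlation supports prediction, not explanation.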

Explanations can be idiographic or nomothetic. Idiographic explanations are those that explain a single situation or event in idiosyncratic detail. For example, you did poorly on an exam because: (1) you forgot that you had an exam on that day, (2) you arrived late to the exam due to a traffic jam, (3) you panicked midway through the exam, (4) you had to work late the previous evening and could not study for the exam, or even (5) your dog ate your textbook. The explanations may be detailed, accurate, and valid, but they may not apply to other similar situations, even involving the same person, and are hence not generalizable. In contrast, nomothetic explanations seek to explain a class of situations or events rather than a specific situation or event. For example, students who do poorly on exams do so because they did not spend adequate time preparing for exams or because they suffer from nervousness, attention deficit, or some other medical disorder. Because nomothetic explanations are designed to be generalizable across situations, events, or people, they tend to be less precise, less complete, and less detailed. However, they explain economically, using only a few explanatory variables. Because theories are also intended to serve as generalized explanations for patterns of events, behaviors, or phenomena, theoretical explanations are generally nomothetic in nature.

While understanding theories, it is also important to understand what theory is not. Theory is not data, facts, typologies, taxonomies, or empirical findings. A collection of facts is not a theory, just as a pile of stones is not a house. Likewise, a collection of constructs (e.g., a typology of constructs) is not a theory, because theories must go well beyond constructs to include propositions, explanations, and boundary conditions. Data, facts, and findings operate at the empirical or observational level, while theories operate at a conceptual level and are based on logic rather than observations.

There are many benefits to using theories in research. First, theories provide the underlying logic of the occurrence of natural or social phenomena by explaining what the key drivers and key outcomes of the target phenomenon are and why, and what underlying processes are responsible for driving that phenomenon. Second, they aid in sense-making by helping us synthesize prior empirical findings within a theoretical framework and reconcile contradictory findings by discovering contingent factors influencing the relationship between two constructs in different studies. Third, theories provide guidance for future research by helping identify constructs and relationships that are worthy of further research. Fourth, theories can contribute to cumulative knowledge building by bridging gaps between other theories and by causing existing theories to be reevaluated in a new light.

However, theories can also have their own share of limitations. As simplified explanations of reality, theories may not always provide adequate explanations of the phenomenon of interest based on a limited set of constructs and relationships. Theories are designed to be simple and parsimonious explanations, while reality may be significantly more complex. Furthermore, theories may impose blinders or limit researchers’ “range of vision,” causing them to miss out on important concepts that are not defined by the theory.

Building Blocks of a Theory

David Whetten (1989) suggests that there are four building blocks of a theory: constructs, propositions, logic, and boundary conditions/assumptions. Constructs capture the “what” of theories (i.e., what concepts are important for explaining a phenomenon), propositions capture the “how” (i.e., how these concepts are related to each other), logic represents the “why” (i.e., why these concepts are related), and boundary conditions/assumptions examine the “who, when, and where” (i.e., under what circumstances these concepts and relationships will work). Though constructs and propositions were previously discussed in Chapter 2, we describe them again here for the sake of completeness.

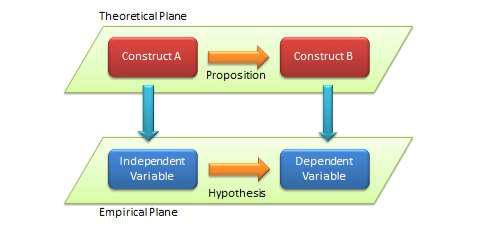

Constructs are abstract concepts specified at a high level of abstraction that are chosen specifically to explain the phenomenon of interest. Recall from Chapter 2 that constructs may be unidimensional (i.e., embody a single concept), such as weight or age, or multi-dimensional (i.e., embody multiple underlying concepts), such as personality or culture. While some constructs, such as age, education, and firm size, are easy to understand, others, such as creativity, prejudice, and organizational agility, may be more complex and abstruse, and still others, such as trust, attitude, and learning, may represent temporal tendencies rather than steady states. Nevertheless, all constructs must have a clear and unambiguous operational definition that specifies exactly how the construct will be measured and at what level of analysis (individual, group, organizational, etc.). Measurable representations of abstract constructs are called variables. For instance, intelligence quotient (IQ score) is a variable that is purported to measure an abstract construct called intelligence. As noted earlier, scientific research proceeds along two planes: a theoretical plane and an empirical plane. Constructs are conceptualized at the theoretical plane, while variables are operationalized and measured at the empirical (observational) plane. Furthermore, variables may be independent, dependent, mediating, or moderating, as discussed in Chapter 2. The distinction between constructs (conceptualized at the theoretical level) and variables (measured at the empirical level) is shown in Figure 4.1.

Figure 4.1. Distinction between theoretical and empirical concepts

Propositions are associations postulated between constructs based on deductive logic. Propositions are stated in declarative form and should ideally indicate a cause-effect relationship (e.g., if X occurs, then Y will follow). Note that propositions may be conjectural but MUST be testable, and should be rejected if they are not supported by empirical observations. However, like constructs, propositions are stated at the theoretical level, and they can only be tested by examining the corresponding relationship between measurable variables of those constructs. The empirical formulations of propositions, stated as relationships between variables, are called hypotheses. The distinction between propositions (formulated at the theoretical level) and hypotheses (tested at the empirical level) is depicted in Figure 4.1.

The third building block of a theory is the logic that provides the basis for justifying the propositions as postulated. Logic acts like a “glue” that connects the theoretical constructs and provides meaning and relevance to the relationships between these constructs. Logic also represents the “explanation” that lies at the core of a theory. Without logic, propositions will be ad hoc, arbitrary, and meaningless, and cannot be tied into a cohesive “system of propositions” that is the heart of any theory.

Finally, all theories are constrained by assumptions about values, time, and space, and by boundary conditions that govern where the theory can and cannot be applied. For example, many economic theories assume that human beings are rational (or boundedly rational) and employ utility maximization based on cost and benefit expectations as a way of understanding human behavior. In contrast, political science theories assume that people are more political than rational, and try to position themselves in their professional or personal environment in a way that maximizes their power and control over others. Given the nature of their underlying assumptions, economic and political theories are not directly comparable, and researchers should not use economic theories if their objective is to understand the power structure or its evolution in an organization. Likewise, theories may have implicit cultural assumptions (e.g., whether they apply to individualistic or collectivist cultures), temporal assumptions (e.g., whether they apply to early stages or later stages of human behavior), and spatial assumptions (e.g., whether they apply to certain localities but not to others). If a theory is to be properly used or tested, all of the implicit assumptions that form its boundaries must be properly understood. Unfortunately, theorists rarely state their implicit assumptions clearly, which leads to frequent misapplications of theories to problem situations in research.

Attributes of a Good Theory

Theories are simplified and often partial explanations of complex social reality. As such, there can be good explanations or poor explanations, and consequently, there can be good theories or poor theories. How can we evaluate the “goodness” of a given theory? Different criteria have been proposed by different researchers, the more important of which are listed below:

- Logical consistency: Are the theoretical constructs, propositions, boundary conditions, and assumptions logically consistent with each other? If some of these “building blocks” of a theory are inconsistent with each other (e.g., a theory assumes rationality, but some constructs represent non-rational concepts), then the theory is a poor theory.

- Explanatory power: How much does a given theory explain (or predict) reality? Good theories obviously explain the target phenomenon better than rival theories, as often measured by the variance explained (R-square) value in regression equations.

- Falsifiability: British philosopher Karl Popper argued in the 1930s that for theories to be valid, they must be falsifiable. Falsifiability ensures that the theory is potentially disprovable if empirical data do not match theoretical propositions, which allows for their empirical testing by researchers. In other words, theories cannot be theories unless they are empirically testable. Tautological statements, such as “a day with high temperatures is a hot day,” are not empirically testable because a hot day is defined (and measured) as a day with high temperatures, and hence, such statements cannot be viewed as theoretical propositions. Falsifiability requires the presence of rival explanations, ensures that the constructs are adequately measurable, and so forth. However, note that saying that a theory is falsifiable is not the same as saying that a theory should be falsified. If a theory is indeed falsified based on empirical evidence, then it was probably a poor theory to begin with!

- Parsimony: Parsimony examines how much of a phenomenon is explained with how few variables. The concept is attributed to 14th-century English logician Father William of Ockham and is hence called “Ockham’s razor” or “Occam’s razor”; it states that among competing explanations that sufficiently explain the observed evidence, the simplest theory (i.e., one that uses the smallest number of variables or makes the fewest assumptions) is the best. Explanation of a complex social phenomenon can always be increased by adding more and more constructs. However, such an approach defeats the purpose of having theories, which are intended to be “simplified” and generalizable explanations of reality. Parsimony relates to the degrees of freedom in a given theory. Parsimonious theories have higher degrees of freedom, which allow them to be more easily generalized to other contexts, settings, and populations.
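Explanatory power and parsimony can be weighed against each other numerically. The Python sketch below computes R-square for a one-predictor least-squares regression, then uses adjusted R-square, a standard correction that penalizes extra predictors, to compare two hypothetical models. The data and R-square values are invented, and adjusted R-square is only one of several possible parsimony criteria.

```python
from typing import Sequence


def r_squared_simple(xs: Sequence[float], ys: Sequence[float]) -> float:
    """R-square of an ordinary least-squares line y = a + b*x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


def residual_df(n: int, k: int) -> int:
    """Degrees of freedom left after estimating k slopes and one intercept."""
    return n - k - 1


def adjusted_r_squared(r2: float, n: int, k: int) -> float:
    """Adjusted R-square: shrinks R-square according to the number of predictors k."""
    return 1 - (1 - r2) * (n - 1) / residual_df(n, k)


# Explanatory power: share of variance in y explained by x (hypothetical data).
print(round(r_squared_simple([1, 2, 3, 4, 5], [2.1, 3.9, 6.2, 7.8, 10.1]), 3))

# Parsimony: a 2-predictor model vs. a 10-predictor model on n = 40 cases.
for k, r2 in [(2, 0.50), (10, 0.53)]:
    print(k, residual_df(40, k), round(adjusted_r_squared(r2, 40, k), 3))
```

Although the ten-predictor model explains slightly more raw variance (0.53 versus 0.50), its adjusted R-square is lower (about 0.37 versus 0.47) and it leaves 29 rather than 37 residual degrees of freedom, which is the sense in which the more parsimonious model is preferred.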

Approaches to Theorizing

How do researchers build theories? Steinfield and Fulk (1990) [2] recommend four such approaches. The first approach is to build theories inductively based on observed patterns of events or behaviors. Such an approach is often called “grounded theory building,” because the theory is grounded in empirical observations. This technique is heavily dependent on the observational and interpretive abilities of the researcher, and the resulting theory may be subjective and non-confirmable. Furthermore, observing certain patterns of events will not necessarily make a theory, unless the researcher is able to provide consistent explanations for the observed patterns. We will discuss the grounded theory approach in a later chapter on qualitative research.

The second approach to theory building is to conduct a bottom-up conceptual analysis to identify different sets of predictors relevant to the phenomenon of interest using a predefined framework. One such framework may be a simple input-process-output framework, where the researcher may look for different categories of inputs, such as individual, organizational, and/or technological factors potentially related to the phenomenon of interest (the output), and describe the underlying processes that link these factors to the target phenomenon. This is also an inductive approach that relies heavily on the inductive abilities of the researcher, and interpretation may be biased by the researcher’s prior knowledge of the phenomenon being studied.

The third approach to theorizing is to extend or modify existing theories to explain a new context, such as by extending theories of individual learning to explain organizational learning. While making such an extension, certain concepts, propositions, and/or boundary conditions of the old theory may be retained and others modified to fit the new context. This deductive approach leverages the rich inventory of social science theories developed by prior theoreticians, and is an efficient way of building new theories by building on existing ones.

The fourth approach is to apply existing theories in entirely new contexts by drawing upon the structural similarities between the two contexts. This approach relies on reasoning by analogy, and is probably the most creative way of theorizing using a deductive approach. For instance, Markus (1987) [3] used analogical similarities between a nuclear explosion and uncontrolled growth of networks or network-based businesses to propose a critical mass theory of network growth. Just as a nuclear explosion requires a critical mass of radioactive material to be sustained, Markus suggested that a network requires a critical mass of users to sustain its growth, and without such critical mass, users may leave the network, eventually causing its demise.
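The critical-mass idea can be illustrated with a simple threshold model of network membership. This is not Markus’s own formalization; it is a minimal Python sketch assuming that, in each round, a potential user is on the network only if the current number of users meets that person’s personal threshold, so users can both join and leave. The threshold values are hypothetical.

```python
from typing import List


def simulate_network(thresholds: List[int], initial_users: int, max_rounds: int = 50) -> List[int]:
    """Return the network size after each round until it stops changing.

    Each potential user is on the network in the next round only if the
    current network size is at least that user's threshold.
    """
    sizes = [initial_users]
    for _ in range(max_rounds):
        current = sizes[-1]
        nxt = sum(1 for t in thresholds if t <= current)
        sizes.append(nxt)
        if nxt == current:  # stable: nobody joins or leaves
            break
    return sizes


# Hypothetical population of 100: 40 people need at least 20 users,
# the other 60 need at least 30 users before they find the network worthwhile.
thresholds = [20] * 40 + [30] * 60

print(simulate_network(thresholds, initial_users=15))  # below critical mass
print(simulate_network(thresholds, initial_users=20))  # at critical mass
```

With 15 initial users the network empties out ([15, 0, 0]); with 20 it grows to 40 and then to all 100 users ([20, 40, 100, 100]). In this toy population the critical mass is 20 users, mirroring the analogy’s claim that growth is self-sustaining only past a threshold.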

Examples of Social Science Theories

In this section, we present brief overviews of a few illustrative theories from different social science disciplines. These theories explain different types of social behaviors, using a set of constructs, propositions, boundary conditions, assumptions, and underlying logic. Note that the following represents just a simplistic introduction to these theories; readers are advised to consult the original sources of these theories for more details and insights on each theory.

Agency Theory. Agency theory (also called principal-agent theory), a classic theory in the organizational economics literature, was originally proposed by Ross (1973) [4] to explain two-party relationships (such as those between an employer and its employees, between organizational executives and shareholders, and between buyers and sellers) whose goals are not congruent with each other. The goal of agency theory is to specify optimal contracts and the conditions under which such contracts may help minimize the effect of goal incongruence. The core assumptions of this theory are that human beings are self-interested individuals, boundedly rational, and risk-averse, and the theory can be applied at the individual or organizational level.

The two parties in this theory are the principal and the agent; the principal employs the agent to perform certain tasks on its behalf. While the principal’s goal is quick and effective completion of the assigned task, the agent’s goal may be working at its own pace, avoiding risks, and seeking self-interest (such as personal pay) over corporate interests; hence the goal incongruence. Compounding the problem may be information asymmetry caused by the principal’s inability to adequately observe the agent’s behavior or accurately evaluate the agent’s skill sets. Such asymmetry may lead to agency problems where the agent may not put forth the effort needed to get the task done (the moral hazard problem) or may misrepresent its expertise or skills to get the job but not perform as expected (the adverse selection problem). Typical contracts that are behavior-based, such as a monthly salary, cannot overcome these problems. Hence, agency theory recommends using outcome-based contracts, such as commissions or a fee payable upon task completion, or mixed contracts that combine behavior-based and outcome-based incentives. An employee stock option plan is an example of an outcome-based contract, while employee pay is a behavior-based contract. Agency theory also recommends tools that principals may employ to improve the efficacy of behavior-based contracts, such as investing in monitoring mechanisms (such as hiring supervisors) to counter the information asymmetry caused by moral hazard, designing renewable contracts contingent on the agent’s performance (performance assessment makes the contract partially outcome-based), or improving the structure of the assigned task to make it more programmable and therefore more observable.
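The moral hazard problem, and the case for outcome-based contracts, can be sketched numerically. The Python example below assumes a self-interested agent who picks whichever effort level maximizes pay minus the personal cost of effort, and a principal who cannot observe effort directly. The effort costs, output values, and commission rate are hypothetical, and output is treated as certain, which ignores the risk-sharing side of agency theory.

```python
from typing import Callable, Dict

# Hypothetical values: effort is costly to the agent, output is valuable to the principal.
EFFORT_COST: Dict[str, float] = {"low": 0.0, "high": 20.0}
OUTPUT: Dict[str, float] = {"low": 50.0, "high": 100.0}


def salary(effort: str) -> float:
    """Behavior-based contract: fixed pay regardless of what is produced."""
    return 60.0


def commission(effort: str, rate: float = 0.5) -> float:
    """Outcome-based contract: pay is a share of the output produced."""
    return rate * OUTPUT[effort]


def agent_choice(pay: Callable[[str], float]) -> str:
    """Effort level that maximizes the agent's pay minus its cost of effort."""
    return max(EFFORT_COST, key=lambda e: pay(e) - EFFORT_COST[e])


for contract in (salary, commission):
    effort = agent_choice(contract)
    principal_net = OUTPUT[effort] - contract(effort)
    print(contract.__name__, effort, principal_net)
```

Under the fixed salary the agent’s best response is low effort (net 60 versus 40), and the principal ends up paying more than the output is worth. Under the commission, high effort pays the agent more (net 30 versus 25), so the contract aligns the agent’s self-interest with the principal’s goal.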

Theory of Planned Behavior. Postulated by Ajzen (1991) [5], the theory of planned behavior (TPB) is a generalized theory of human behavior in the social psychology literature that can be used to study a wide range of individual behaviors. It presumes that individual behavior represents conscious reasoned choice, and is shaped by cognitive thinking and social pressures. The theory postulates that behaviors are based on one’s intention regarding that behavior, which in turn is a function of the person’s attitude toward the behavior, subjective norm regarding that behavior, and perception of control over that behavior (see Figure 4.2). Attitude is defined as the individual’s overall positive or negative feelings about performing the behavior in question, which may be assessed as a summation of one’s beliefs regarding the different consequences of that behavior, weighted by the desirability of those consequences.
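The belief-weighted definition of attitude amounts to a sum of products. The Python sketch below assumes hypothetical scales (belief strength from 0 to 1, desirability from -3 to +3) and an invented behavior, exercising regularly; actual TPB studies define their own questionnaire scales.

```python
from typing import Sequence


def attitude_score(beliefs: Sequence[float], evaluations: Sequence[float]) -> float:
    """Sum over consequences of belief strength times desirability of that consequence."""
    if len(beliefs) != len(evaluations):
        raise ValueError("each belief needs a matching evaluation")
    return sum(b * e for b, e in zip(beliefs, evaluations))


# Hypothetical consequences of "exercising regularly":
#   improves my health (very likely, very desirable)
#   takes up my time   (likely, undesirable)
#   makes me tired     (possible, mildly undesirable)
beliefs = [0.9, 0.8, 0.5]
evaluations = [3, -2, -1]
print(round(attitude_score(beliefs, evaluations), 2))
```

The result is slightly positive (0.6): the strongly valued health benefit outweighs the two likely drawbacks, so this hypothetical person holds a mildly favorable attitude toward exercising.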

Subjective norm refers to one’s perception of whether people important to that person expect the person to perform the intended behavior, and is represented as a weighted combination of the expected norms of different referent groups such as friends, colleagues, or supervisors at work. Behavioral control is one’s perception of internal or external controls constraining the behavior in question. Internal controls may include the person’s ability to perform the intended behavior (self-efficacy), while external control refers to the availability of external resources needed to perform that behavior (facilitating conditions). TPB also suggests that sometimes people may intend to perform a given behavior but lack the resources needed to do so, and therefore posits that behavioral control can have a direct effect on behavior, in addition to the indirect effect mediated by intention.
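The relationships among the TPB constructs can be sketched as a pair of linear equations, a form in which they are often estimated with regression or structural equation modeling. In the Python sketch below, every weight is a hypothetical number chosen for illustration, not an estimate from any study. The point is that perceived behavioral control reaches behavior twice: once through intention and once directly.

```python
from typing import Sequence

# Hypothetical path weights (in practice these are estimated from data).
W_ATTITUDE, W_NORM, W_CONTROL = 0.4, 0.3, 0.3   # drivers of intention
B_INTENTION, B_CONTROL = 0.6, 0.4               # drivers of behavior


def subjective_norm(expectations: Sequence[float], importance: Sequence[float]) -> float:
    """Weighted combination of what each referent group is expected to want."""
    return sum(x * w for x, w in zip(expectations, importance))


def intention(attitude: float, norm: float, control: float) -> float:
    """Intention as a weighted sum of attitude, subjective norm, and perceived control."""
    return W_ATTITUDE * attitude + W_NORM * norm + W_CONTROL * control


def behavior(attitude: float, norm: float, control: float) -> float:
    """Behavior depends on intention and, directly, on perceived control."""
    return B_INTENTION * intention(attitude, norm, control) + B_CONTROL * control


# Friends, colleagues, and supervisors (hypothetical expectations and importance weights).
norm = subjective_norm([0.8, 0.6, 0.9], [0.5, 0.2, 0.3])
print(round(behavior(0.7, norm, control=0.9), 3))  # ample resources
print(round(behavior(0.7, norm, control=0.2), 3))  # lacking resources
```

Holding attitude and norm fixed, each unit of perceived control adds 0.6 × 0.3 = 0.18 to behavior through intention plus 0.4 directly, for a total of 0.58. Dropping the control terms reduces the sketch to the earlier theory of reasoned action.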

TPB is an extension of an earlier theory called the theory of reasoned action, which included attitude and subjective norm as key drivers of intention, but not behavioral control. The latter construct was added by Ajzen in TPB to account for circumstances when people may have incomplete control over their own behaviors (such as not having high-speed Internet access for web surfing).

Figure 4.2. Theory of planned behavior

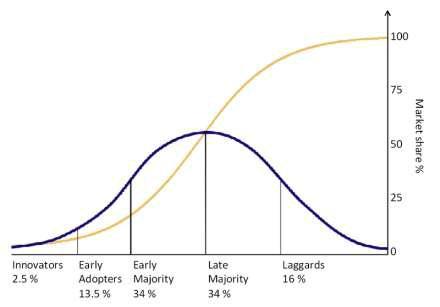

Innovation diffusion theory. Innovation diffusion theory (IDT) is a seminal theory in the communications literature that explains how innovations are adopted within a population of potential adopters. The concept was first studied by French sociologist Gabriel Tarde, but the theory was developed by Everett Rogers in 1962 based on observations of 508 diffusion studies. The four key elements in this theory are innovation, communication channels, time, and social system. Innovations may include new technologies, new practices, or new ideas, and adopters may be individuals or organizations. At the macro (population) level, IDT views innovation diffusion as a process of communication where people in a social system learn about a new innovation and its potential benefits through communication channels (such as mass media or prior adopters) and are persuaded to adopt it. Diffusion is a temporal process: it starts off slow among a few early adopters, then picks up speed as the innovation is adopted by the mainstream population, and finally slows down as the adopter population reaches saturation. The cumulative adoption pattern therefore follows an S-shaped curve, as shown in Figure 4.3, and the distribution of adopters over time follows a normal distribution. Not all adopters are identical; adopters can be classified into innovators, early adopters, early majority, late majority, and laggards based on the time of their adoption. The rate of diffusion also depends on characteristics of the social system, such as the presence of opinion leaders (experts whose opinions are valued by others) and change agents (people who influence others’ behaviors).
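Rogers’ adopter categories are conventionally defined by standard-deviation bands around the mean adoption time, and the S-shaped curve is the cumulative form of that bell-shaped distribution. The Python sketch below uses the standard library’s `statistics.NormalDist` (Python 3.8+) with a hypothetical mean adoption time of 10 years and a standard deviation of 2 years.

```python
from statistics import NormalDist

# Hypothetical population: mean adoption time 10 years, standard deviation 2 years.
MEAN, SD = 10.0, 2.0
adoption_time = NormalDist(MEAN, SD)


def adopter_category(t: float) -> str:
    """Classify an adopter by how many standard deviations from the mean they adopted."""
    z = (t - MEAN) / SD
    if z < -2:
        return "innovator"
    if z < -1:
        return "early adopter"
    if z < 0:
        return "early majority"
    if z < 1:
        return "late majority"
    return "laggard"


# The cumulative share of adopters over time traces the S-shaped curve.
for year in range(4, 17, 2):
    share = adoption_time.cdf(year)
    print(f"year {year:2d}: {share:6.1%} adopted; adopters now are {adopter_category(year)}")
```

Under an exact normal curve, the five bands hold about 2.3%, 13.6%, 34.1%, 34.1%, and 15.9% of adopters; Rogers rounds these to 2.5%, 13.5%, 34%, 34%, and 16%.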

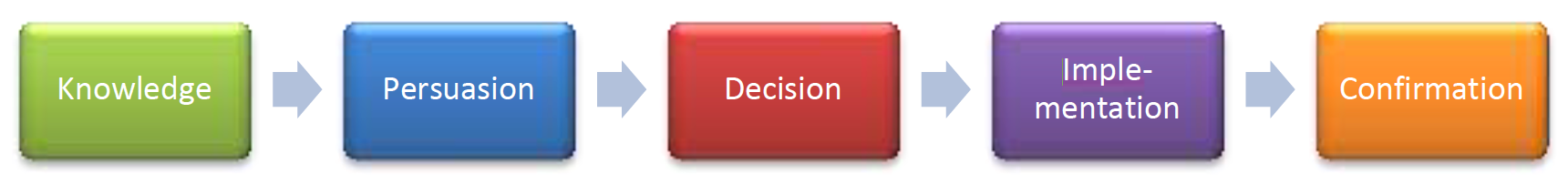

At the micro (adopter) level, Rogers (1995) [6] suggests that innovation adoption is a process consisting of five stages: (1) knowledge: when adopters first learn about an innovation from mass media or interpersonal channels, (2) persuasion: when they are persuaded by prior adopters to try the innovation, (3) decision: their decision to accept or reject the innovation, (4) implementation: their initial utilization of the innovation, and (5) confirmation: their decision to continue using it to its fullest potential (see Figure 4.4). Five innovation characteristics are presumed to shape adopters’ innovation adoption decisions: (1) relative advantage: the expected benefits of an innovation relative to prior innovations, (2) compatibility: the extent to which the innovation fits with the adopter’s work habits, beliefs, and values, (3) complexity: the extent to which the innovation is difficult to learn and use, (4) trialability: the extent to which the innovation can be tested on a trial basis, and (5) observability: the extent to which the results of using the innovation can be clearly observed. The last two characteristics have since been dropped from many innovation studies. Complexity is negatively correlated with innovation adoption, while the other four factors are positively correlated. Innovation adoption also depends on personal factors such as the adopter’s risk-taking propensity, education level, cosmopolitanism, and communication influence. Early adopters are venturesome, well educated, and rely more on mass media for information about the innovation, while later adopters rely more on interpersonal sources (such as friends and family) as their primary source of information.
IDT has been criticized for having a “pro-innovation bias,” that is, for presuming that all innovations are beneficial and will eventually be diffused across the entire population, and for not allowing inefficient innovations such as fads or fashions to die off quickly without being adopted by the entire population or being replaced by better innovations.

Figure 4.3. S-shaped diffusion curve

Figure 4.4. Innovation adoption process.

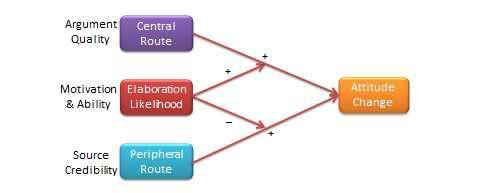

Elaboration Likelihood Model. Developed by Petty and Cacioppo (1986) [7], the elaboration likelihood model (ELM) is a dual-process theory of attitude formation or change in the psychology literature. It explains how individuals can be influenced to change their attitude toward a certain object, event, or behavior, and the relative efficacy of such change strategies. The ELM posits that one’s attitude may be shaped by two “routes” of influence, the central route and the peripheral route, which differ in the amount of thoughtful information processing or “elaboration” required of people (see Figure 4.5). The central route requires a person to think about issue-related arguments in an informational message and carefully scrutinize the merits and relevance of those arguments before forming an informed judgment about the target object. In the peripheral route, subjects rely on external “cues,” such as the number of prior users, endorsements from experts, or likeability of the endorser, rather than on the quality of arguments, in framing their attitude toward the target object. The latter route is less cognitively demanding, and the two routes of attitude change are typically operationalized in the ELM using the argument quality and peripheral cues constructs, respectively.

Figure 4.5. Elaboration likelihood model

Whether people will be influenced by the central or peripheral routes depends upon their ability and motivation to elaborate the central merits of an argument. This ability and motivation to elaborate is called elaboration likelihood. People in a state of high elaboration likelihood (high ability and high motivation) are more likely to thoughtfully process the information presented and are therefore more influenced by argument quality, while those in the low elaboration likelihood state are more influenced by peripheral cues. Elaboration likelihood is a situational characteristic and not a personal trait. For instance, a doctor may employ the central route for diagnosing and treating a medical ailment (by virtue of his or her expertise in the subject), but may rely on peripheral cues from auto mechanics to understand the problems with his or her car. As such, the theory has widespread implications for how to enact attitude change toward new products or ideas and even social change.
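The interplay of ability and motivation can be expressed as a simple decision rule. The ELM does not prescribe a formula, so the Python sketch below rests on stated assumptions: ability and motivation are scored from 0 to 1 for a given situation, elaboration likelihood is taken as the smaller of the two (because both must be high), and a hypothetical cutoff of 0.5 separates the routes.

```python
def elaboration_likelihood(ability: float, motivation: float) -> float:
    """Both ability and motivation must be high, so the weaker one limits elaboration."""
    return min(ability, motivation)


def dominant_route(ability: float, motivation: float, cutoff: float = 0.5) -> str:
    """Return which route is expected to dominate attitude change in this situation."""
    if elaboration_likelihood(ability, motivation) >= cutoff:
        return "central (argument quality)"
    return "peripheral (cues)"


# The same doctor in two situations (hypothetical scores).
print("diagnosing a patient:", dominant_route(ability=0.9, motivation=0.9))
print("car trouble:", dominant_route(ability=0.2, motivation=0.8))
```

Because the inputs describe a situation rather than a person, the same doctor lands on different routes, matching the point that elaboration likelihood is situational rather than a personal trait.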

General Deterrence Theory. Two utilitarian philosophers of the eighteenth century, Cesare Beccaria and Jeremy Bentham, formulated General Deterrence Theory (GDT) as both an explanation of crime and a method for reducing it. GDT examines why certain individuals engage in deviant, anti-social, or criminal behaviors. This theory holds that people are fundamentally rational (for both conforming and deviant behaviors), and that they freely choose deviant behaviors based on a rational cost-benefit calculation. Because people naturally choose utility-maximizing behaviors, deviant choices that engender personal gain or pleasure can be controlled by increasing the costs of such behaviors in the form of punishments (countermeasures) as well as increasing the probability of apprehension. Swiftness, severity, and certainty of punishments are the key constructs in GDT.
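The rational cost-benefit calculation at the heart of GDT can be sketched as an expected-value comparison. In the Python example below, certainty is the probability of apprehension and severity is the size of the punishment. Modeling swiftness as a discount on delayed punishment is our own assumption, as are all the numbers, including the 20% annual discount rate.

```python
def expected_punishment(certainty: float, severity: float,
                        delay_years: float = 0.0, discount_rate: float = 0.2) -> float:
    """Probability-weighted punishment, discounted the longer it is delayed.

    Treating swiftness as exponential discounting is an assumption of this sketch.
    """
    return certainty * severity / (1 + discount_rate) ** delay_years


def is_deterred(gain: float, certainty: float, severity: float, delay_years: float = 0.0) -> bool:
    """A rational actor refrains when expected punishment is at least the expected gain."""
    return expected_punishment(certainty, severity, delay_years) >= gain


GAIN, SEVERITY = 500.0, 2000.0  # hypothetical gain from the offense and size of the penalty
print(is_deterred(GAIN, certainty=0.1, severity=SEVERITY))                 # rarely caught
print(is_deterred(GAIN, certainty=0.3, severity=SEVERITY))                 # caught more often
print(is_deterred(GAIN, certainty=0.3, severity=SEVERITY, delay_years=5))  # punished slowly
```

The three calls print `False`, `True`, and `False`: raising certainty from 10% to 30% lifts the expected punishment from 200 to 600, enough to deter, but delaying that same punishment by five years shrinks its weight to about 241, and deterrence is lost.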

While classical positivist research in criminology seeks generalized causes of criminal behavior, such as poverty, lack of education, or psychological conditions, and recommends strategies to rehabilitate criminals, such as providing them with job training and medical treatment, GDT focuses on the criminal decision-making process and the situational factors that influence that process. Hence, a criminal’s personal situation (such as his or her personal values, affluence, and need for money) and the environmental context (such as how well protected the target is, how efficient the local police are, and how likely criminals are to be apprehended) play key roles in this decision-making process. The focus of GDT is not how to rehabilitate criminals and avert future criminal behaviors, but how to make criminal activities less attractive and thereby prevent crimes. To that end, GDT holds that the following measures are effective in preventing crimes: “target hardening,” such as installing deadbolts and building self-defense skills; legal deterrents, such as eliminating parole for certain crimes, “three strikes laws” (mandatory incarceration for three offenses, even if the offenses are minor and not worth imprisonment), and the death penalty; increasing the chances of apprehension through means such as neighborhood watch programs, special task forces on drug- or gang-related crimes, and increased police patrols; and educational programs, such as highly visible notices like “Trespassers will be prosecuted.” This theory has interesting implications not only for traditional crimes but also for contemporary white-collar crimes such as insider trading, software piracy, and illegal sharing of music.

[1] Bacharach, S. B. (1989). “Organizational Theories: Some Criteria for Evaluation,” Academy of Management Review (14:4), 496-515.

[2] Steinfield, C. W., and Fulk, J. (1990). “The Theory Imperative,” in Organizations and Communications Technology, J. Fulk and C. W. Steinfield (eds.), Newbury Park, CA: Sage Publications.

[3] Markus, M. L. (1987). “Toward a ‘Critical Mass’ Theory of Interactive Media: Universal Access, Interdependence, and Diffusion,” Communication Research (14:5), 491-511.

[4] Ross, S. A. (1973). “The Economic Theory of Agency: The Principal’s Problem,” American Economic Review (63:2), 134-139.

[5] Ajzen, I. (1991). “The Theory of Planned Behavior,” Organizational Behavior and Human Decision Processes (50), 179-211.

[6] Rogers, E. (1962). Diffusion of Innovations. New York: The Free Press. Other editions 1983, 1996, 2005.

[7] Petty, R. E., and Cacioppo, J. T. (1986). Communication and Persuasion: Central and Peripheral Routes to Attitude Change. New York: Springer-Verlag.

- Social Science Research: Principles, Methods, and Practices. Authored by: Anol Bhattacherjee. Provided by: University of South Florida. Located at: http://scholarcommons.usf.edu/oa_textbooks/3/. License: CC BY-NC-SA: Attribution-NonCommercial-ShareAlike


Fact-Checking Essentials

Why Fact-Check?

Fact-checking ensures that a story is as accurate and clear as possible before it is published or broadcast. It improves the accuracy and credibility of a publication or program and helps to eliminate errors. As a fact-checker, you must be detail-oriented and committed to making sure every fact in the story is accurate.

What Is a Fact?

A fact is a statement that can be verified; a statement of opinion is not a fact. As a fact-checker, you work with content that has already been written rather than researching new material. Therefore, you must read the document carefully and identify and extract all content that needs fact-checking.

How Do You Fact-Check?

The first step is to read through the entire document. Next, read the document again, this time highlighting, underlining, or otherwise marking all facts that can be verified, including phrasing and word choices such as “always” and “exactly.” The following are common places to start when fact-checking:

- Always ask yourself, “Who would know this?” to find the best resource.

- Always ask, “Does this make sense?”

- Check assertions about scientific theories and evidence. Sometimes, the easiest way to do this will be to contact scientists in the field (see below); other times, the information will be well-established in the literature.

- Confirm statistics.

- Check all proper names, titles, product names, place names, locations, etc.

- Check terms used. Are they commonplace and agreed upon in the scientific community? Do they need clarification?

- Check declarative statements, for example, “…this is a big deal,” “the area is huge,” “always,” “exactly,” etc. The reason it is a “big deal” (or how “huge” the area is) should be explained in the text. If it isn’t, find out why: Is it a big deal because of money, because of time, or in comparison with something else?

- Be particularly cautious of facts stated absolutely.

- Verify any numbers used in the article.
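Part of the marking pass described above can be mechanized. The Python sketch below is only an illustration under stated assumptions: it flags sentences that contain absolute wording or numbers so that a human checker can look at them first; it does not verify anything. The word list `ABSOLUTE_TERMS`, the function name `flag_sentences`, and the simple regular expressions are hypothetical choices, not part of this guide.

```python
import re

# Hypothetical watch list: the guide names "always" and "exactly";
# the other words are illustrative assumptions.
ABSOLUTE_TERMS = {"always", "never", "exactly", "all", "none", "every"}

# Integers, comma-grouped thousands, decimals, and percentages (e.g. 12%, 1,000, 3.5).
NUMBER_PATTERN = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?%?")

# Words made of letters and apostrophes, used to find absolute terms.
WORD_PATTERN = re.compile(r"[A-Za-z']+")


def flag_sentences(text):
    """Return (sentence, reasons) pairs for sentences a checker should look at first.

    This only flags candidates; every flagged claim still has to be verified
    against a primary or quality secondary source.
    """
    # Naive split: a sentence ends at ., !, or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    flagged = []
    for sentence in sentences:
        words = {w.lower() for w in WORD_PATTERN.findall(sentence)}
        reasons = []
        absolutes = sorted(words & ABSOLUTE_TERMS)
        if absolutes:
            reasons.append("absolute wording: " + ", ".join(absolutes))
        numbers = NUMBER_PATTERN.findall(sentence)
        if numbers:
            reasons.append("numbers: " + ", ".join(numbers))
        if reasons:
            flagged.append((sentence, reasons))
    return flagged


if __name__ == "__main__":
    sample = ("The area is huge. The magnet always arrives on time. "
              "Costs rose 12% in 2008.")
    for sentence, reasons in flag_sentences(sample):
        # Prints each flagged sentence followed by why it was flagged.
        print(sentence, "->", "; ".join(reasons))
```

In the sample, "The area is huge." is not flagged: vague declarative wording such as "huge" or "a big deal" is not on the watch list, so a careful human read is still required.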

When you begin the process of verifying the facts in the document, you must choose quality resources. Working with primary sources when available (including contacting a source via telephone, reading original publications, and accessing government Web sites) and quality secondary sources when necessary will help ensure the legitimacy, authenticity, and validity of the information at hand. Statements of common sense or common knowledge ("the sky is blue") do not need to be verified. A source's personal opinions or experiences, or the personal opinions of the writer, do not need verification. If a fact has you stumped, move on to the next one and come back to it later. You may not be able to confirm every fact in an article; if you can't, make sure you indicate that the fact has not been confirmed.

For additional information about working with statistics and scientific journals, refer to the "How to Deal with Statistics" and "Science Journal Resource List" tip sheets on the Science Literacy Project Web site.

Using the Internet to Fact-Check

Many facts can be easily verified, for example, a URL (does it work?) or the spelling of a famous name or place. For facts that need additional verification, the Internet can be a powerful tool, but it must be used with caution. In this age of electronic information, many studies and much primary research are available online. Whenever possible, find the primary source (for example, if the document cites a study, try to find the study itself). Reputable Web sites (.gov or government-run Web sites, .edu or university Web sites, publisher Web sites) are more reliable than Web sites run by nonprofessionals (Wikipedia, hobbyist Web sites, outdated or unmaintained Web sites). A note about Wikipedia and Google: Wikipedia should NEVER be your only source to confirm a fact. A Google search is a good place to start, but you must look at the results with a careful eye and select the best site for verifying the fact in question.

For example, which Web site would you use to check the following statement? "The first replacement magnet for Sector 3-4 of the Large Hadron Collider (LHC) underwent its final preparations before being lowered into the tunnel on 28 November." If you Google "LHC," the first result is a Wikipedia page (http://en.wikipedia.org/wiki/Large_Hadron_Collider). However, a better place to start is the LHC home page (http://lhc.web.cern.ch/lhc/), which is run by CERN, the European Organization for Nuclear Research. CERN issued a press release about Sector 3-4 on December 1, 2008. As of December 29, 2008, the Wikipedia LHC page did NOT include this updated information about Sector 3-4, making it a dead end for confirming the fact you are checking.

Government and university Web sites also tend to be updated on a regular basis, whereas hobbyist sites are often not updated at all. The International Astronomical Union (IAU) General Assembly introduced the category of dwarf planets and recategorized Pluto as a dwarf planet in August 2006; however, many Web pages continue to list Pluto as a planet. IAU.org and NASA.gov maintain up-to-date lists of Solar System objects. Other sites have not been updated to reflect the new definition of a planet [for example, as of January 22, 2009, The Solar System (http://www.solarviews.com/eng/solarsys.htm) listed Pluto as the ninth planet].

In 2004, Google launched Google Scholar. Google Scholar is "a free service that helps users search scholarly literature such as peer-reviewed papers, theses, books, preprints, abstracts and technical reports." It can be a powerful tool for fact-finding, but it should be used with the same caution as any other search engine. Not every search result is from a peer-reviewed, reputable source, so consider the content carefully. Google is only one of many search engines; science-specialized search engines can also be helpful (Google "Science Search Engines" or visit the Yahoo Science Directory at http://dir.yahoo.com/Science/).

Using the Internet at a Glance:

- Whenever possible, find the primary source of the fact.

- Use reputable Web sites rather than Web sites run by nonprofessionals.

- Do not use Wikipedia as your only source.

- When using Google, look at the results with a careful eye and select the best site for verifying the fact in question.

- Always cite the date you accessed a Web page.

- Useful Web tools: Google Scholar, SCIRUS, GoPubMed

Using E-mail and the Telephone to Fact-Check

It is often necessary to contact a source directly to verify facts in a document. Whether contacting a source via email or telephone, always remember that you are representing everyone involved, including authors, editors, and publishers. Be polite and professional. When contacting a source via email, NEVER send the actual script. Sources may become self-conscious about how they are portrayed in the article, so it is important to check facts without revealing more context than necessary. If possible, paraphrase the fact(s) into simple, concise statements that are easy to verify (bulleted lists work well). In addition to the requests for verification, your email should include deadlines for the information and instructions for indicating corrections.

As with email, when contacting a source via telephone, it is important not to read directly from the script. It is often helpful to have a separate list of statements and facts ready when you call the source, rather than working from the main document. Always introduce yourself and let the source know why you are calling (to verify facts for a publication, radio program, etc.). If a source becomes upset about how a direct quotation seems to portray them, be calm and courteous, but do not offer to change the article. Focus on the content of the statement (the fact you are trying to verify) rather than the context (how that fact fits into the script as a whole).

Although you are only verifying facts, not doing original research, it might be helpful to refer to the "How to Talk to a Scientist" tip sheet on the Science Literacy Project Web site.

Using E-mail and the Telephone at a Glance:

- Be polite and professional; you are representing everyone involved, not just yourself.

- Never send the actual script to a source.

- If possible, paraphrase the fact(s) into simple, concise statements that are easy to verify.

- Include the following in your email: requests for verification, deadlines, and instructions for corrections.

- If a source becomes upset about how a direct quotation seems to portray them, be calm and courteous, but do not offer to change the article. Focus on the content of the statement (the fact you are trying to verify) rather than the context (how that fact fits into the script as a whole).

Additional Fact-Check Resources

- Libraries/bookstores

- Subject databases (e.g., LexisNexis, available at many libraries)

- Dictionaries/encyclopedias

- Review articles/scholarly publications/press releases

- Overviews and information provided by the writer or producer

- Science Literacy Project Tip Sheets

- Science Literacy Project Science Journalism Resources

Implementing Changes

When verifications and corrections come back, it is up to the fact-checker to confirm whether each fact has been verified. Mark all facts that have been verified (check with the author to see how they want you to indicate this on the document). Always include detailed notes about how and when you verified a fact (Did you use a Web search? A telephone conversation with a researcher? When did you access the government Web site?). So what do you do if you find a discrepancy? When you find an inaccuracy, cross it out in the text and indicate what is accurate. Don’t just find a problem; solve it (for example, don't just indicate that a number is wrong; find the correct number). A fact-checker's comments should be few and concise. Remember, you are not adding information to the document; you are verifying what is already there.

Fact-checking is an essential part of science journalism, so it is important to stay organized and meet your deadlines. Your careful attention to detail and verification of facts will help get accurate information about sometimes complicated topics out to the public!


2.3: Fact or Opinion


Teaching & Learning and University Libraries