Research Design 101

Everything You Need To Get Started (With Examples)

By: Derek Jansen (MBA) | Reviewers: Eunice Rautenbach (DTech) & Kerryn Warren (PhD) | April 2023

Navigating the world of research can be daunting, especially if you’re a first-time researcher. One concept you’re bound to run into fairly early in your research journey is that of “research design”. Here, we’ll guide you through the basics using practical examples, so that you can approach your research with confidence.

Overview: Research Design 101

- What is research design?

- Research design types for quantitative studies

- Video explainer: quantitative research design

- Research design types for qualitative studies

- Video explainer: qualitative research design

- How to choose a research design

- Key takeaways

Research design refers to the overall plan, structure or strategy that guides a research project, from its conception to the final data analysis. A good research design serves as the blueprint for how you, as the researcher, will collect and analyse data while ensuring consistency, reliability and validity throughout your study.

Understanding different types of research designs is essential as it helps ensure that your approach is suitable given your research aims, objectives and questions, as well as the resources you have available to you. Without a clear big-picture view of how you’ll design your research, you run the risk of making misaligned choices in terms of your methodology – especially your sampling, data collection and data analysis decisions.

The problem with defining research design…

One of the reasons students struggle with a clear definition of research design is that the term is used very loosely across the internet, and even within academia.

Some sources claim that the three research design types are qualitative, quantitative and mixed methods, which isn’t quite accurate (these just refer to the type of data that you’ll collect and analyse). Other sources state that research design refers to the sum of all your design choices, suggesting it’s more like a research methodology. Others run off on other less common tangents. No wonder there’s confusion!

In this article, we’ll clear up the confusion. We’ll explain the most common research design types for both qualitative and quantitative research projects, whether that is for a full dissertation or thesis, or a smaller research paper or article.

Research Design: Quantitative Studies

Quantitative research involves collecting and analysing data in a numerical form. Broadly speaking, there are four types of quantitative research designs: descriptive, correlational, experimental, and quasi-experimental.

Descriptive Research Design

As the name suggests, descriptive research design focuses on describing existing conditions, behaviours, or characteristics by systematically gathering information without manipulating any variables. In other words, there is no intervention on the researcher’s part – only data collection.

For example, if you’re studying smartphone addiction among adolescents in your community, you could deploy a survey to a sample of teens asking them to rate their agreement with certain statements that relate to smartphone addiction. The collected data would then provide insight regarding how widespread the issue may be – in other words, it would describe the situation.

The key defining attribute of this type of research design is that it purely describes the situation. In other words, descriptive research design does not explore potential relationships between different variables or the causes that may underlie those relationships. Descriptive research is therefore useful for building an initial picture of a research problem by describing its characteristics, and it is often used as a precursor to other research design types.

Correlational Research Design

Correlational design is a popular choice for researchers aiming to identify and measure the relationship between two or more variables without manipulating them. In other words, this type of research design is useful when you want to know whether a change in one thing tends to be accompanied by a change in another thing.

For example, if you wanted to explore the relationship between exercise frequency and overall health, you could use a correlational design to help you achieve this. In this case, you might gather data on participants’ exercise habits, as well as records of their health indicators like blood pressure, heart rate, or body mass index. Thereafter, you’d use a statistical test to assess whether there’s a relationship between the two variables (exercise frequency and health).
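To make the "statistical test" step a little more concrete, here is a minimal sketch that calculates a Pearson correlation coefficient, which is one common way of quantifying a linear relationship between two numerical variables. The data values and the variable names (`exercise_sessions`, `resting_heart_rate`) are invented purely for illustration, and in a real study you'd also want a significance test from a statistics package.

```python
import math


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient for two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 values")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of cross-products and sums of squared deviations from each mean
    cross = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    ss_x = sum((x - mean_x) ** 2 for x in xs)
    ss_y = sum((y - mean_y) ** 2 for y in ys)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical data: weekly exercise sessions and resting heart rate (bpm)
exercise_sessions = [0, 1, 2, 3, 4, 5, 6]
resting_heart_rate = [78, 76, 74, 71, 70, 66, 65]

r = pearson_r(exercise_sessions, resting_heart_rate)
print(f"r = {r:.2f}")  # values near -1 or +1 indicate a strong linear relationship
```

A coefficient close to -1 here would suggest that more exercise tends to go with a lower resting heart rate in this made-up sample. It says nothing about whether one causes the other.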

As you can see, correlational research design is useful when you want to explore potential relationships between variables that cannot be manipulated or controlled for ethical, practical, or logistical reasons. It is particularly helpful in terms of developing predictions, and given that it doesn’t involve the manipulation of variables, it can be implemented at a large scale more easily than experimental designs (which we’ll look at next).

That said, it’s important to keep in mind that correlational research design has limitations – most notably that it cannot be used to establish causality. In other words, correlation does not equal causation. To establish causality, you’ll need to move into the realm of experimental design, coming up next…


Experimental Research Design

Experimental research design is used to determine if there is a causal relationship between two or more variables. With this type of research design, you, as the researcher, manipulate one variable (the independent variable) while holding other variables constant (control variables), and then measure the effect on the outcome of interest (the dependent variable). Doing so allows you to observe the effect of the independent variable on the dependent variable and draw conclusions about potential causality.

For example, if you wanted to measure if/how different types of fertiliser affect plant growth, you could set up several groups of plants, with each group receiving a different type of fertiliser, as well as one with no fertiliser at all. You could then measure how much each plant group grew (on average) over time and compare the results from the different groups to see which fertiliser was most effective.
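As a rough sketch of the comparison step in this example, the snippet below averages the growth in each group and compares it against the no-fertiliser (control) group. All measurements and group labels are hypothetical, and a real analysis would typically follow this up with a statistical test rather than relying on the raw averages alone.

```python
from statistics import mean

# Hypothetical growth measurements (cm) for each group of plants
growth_cm = {
    "no fertiliser": [4.1, 3.8, 4.4, 4.0],  # control group
    "fertiliser A": [5.9, 6.3, 6.1, 5.7],
    "fertiliser B": [5.0, 5.2, 4.7, 5.1],
}

# Average growth per group
group_means = {group: mean(values) for group, values in growth_cm.items()}
control_mean = group_means["no fertiliser"]

for group, group_mean in group_means.items():
    difference = group_mean - control_mean
    print(f"{group}: mean {group_mean:.2f} cm ({difference:+.2f} cm vs control)")

# Group with the highest average growth in this sample
best_group = max(group_means, key=group_means.get)
print(f"Highest average growth: {best_group}")
```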

Overall, experimental research design provides researchers with a powerful way to identify and measure causal relationships (and the direction of causality) between variables. However, developing a rigorous experimental design can be challenging as it’s not always easy to control all the variables in a study. This often results in smaller sample sizes, which can reduce the statistical power and generalisability of the results.

Moreover, experimental research design requires random assignment. This means that the researcher needs to assign participants to different groups or conditions in a way that each participant has an equal chance of being assigned to any group (note that this is not the same as random sampling). Doing so helps reduce the potential for bias and confounding variables. This need for random assignment can lead to ethics-related issues. For example, withholding a potentially beneficial medical treatment from a control group may be considered unethical in certain situations.
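To illustrate what random assignment can look like in practice, here is a small sketch that shuffles a list of already-recruited participants and deals them out across groups in turn. The function name `randomly_assign`, the participant codes and the group labels are all made up for this example, and the fixed seed is only there so the result can be reproduced.

```python
import random


def randomly_assign(participants, groups, seed=None):
    """Shuffle participants, then deal them into groups in turn (balanced group sizes)."""
    rng = random.Random(seed)
    shuffled = list(participants)  # copy so the original list is left untouched
    rng.shuffle(shuffled)
    assignment = {group: [] for group in groups}
    for index, participant in enumerate(shuffled):
        assignment[groups[index % len(groups)]].append(participant)
    return assignment


# Hypothetical participant codes for people who have already been recruited
participants = [f"P{n:02d}" for n in range(1, 13)]
assignment = randomly_assign(participants, ["treatment", "control"], seed=42)

for group, members in assignment.items():
    print(group, sorted(members))
```

Note that this only decides which group each recruited participant lands in. How those participants were selected from the wider population in the first place is a sampling question, which is a separate decision.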

Quasi-Experimental Research Design

Quasi-experimental research design is used when the research aims involve identifying causal relations, but one cannot (or doesn’t want to) randomly assign participants to different groups (for practical or ethical reasons). Instead, with a quasi-experimental research design, the researcher relies on existing groups or pre-existing conditions to form groups for comparison.

For example, if you were studying the effects of a new teaching method on student achievement in a particular school district, you may be unable to randomly assign students to either group and instead have to choose classes or schools that already use different teaching methods. This way, you still achieve separate groups, without having to assign participants to specific groups yourself.

Naturally, quasi-experimental research designs have limitations when compared to experimental designs. Given that participant assignment is not random, it’s more difficult to confidently establish causality between variables, and, as a researcher, you have less control over other variables that may impact findings.

All that said, quasi-experimental designs can still be valuable in research contexts where random assignment is not possible and can often be undertaken on a much larger scale than experimental research, thus increasing the statistical power of the results. What’s important is that you, as the researcher, understand the limitations of the design and conduct your quasi-experiment as rigorously as possible, paying careful attention to any potential confounding variables.

Research Design: Qualitative Studies

There are many different research design types when it comes to qualitative studies, but here we’ll narrow our focus to explore the “Big 4”. Specifically, we’ll look at phenomenological design, grounded theory design, ethnographic design, and case study design.

Phenomenological Research Design

Phenomenological design involves exploring the meaning of lived experiences and how they are perceived by individuals. This type of research design seeks to understand people’s perspectives, emotions, and behaviours in specific situations. Here, the aim for researchers is to uncover the essence of human experience without making any assumptions or imposing preconceived ideas on their subjects.

For example, you could adopt a phenomenological design to study why cancer survivors have such varied perceptions of their lives after overcoming their disease. This could be achieved by interviewing survivors and then analysing the data using a qualitative analysis method such as thematic analysis to identify commonalities and differences.

Phenomenological research design typically involves in-depth interviews or open-ended questionnaires to collect rich, detailed data about participants’ subjective experiences. This richness is one of the key strengths of phenomenological research design but, naturally, it also has limitations. These include potential biases in data collection and interpretation and the lack of generalisability of findings to broader populations.

Grounded Theory Research Design

Grounded theory (also referred to as “GT”) aims to develop theories by continuously and iteratively analysing and comparing data collected from a relatively large number of participants in a study. It takes an inductive (bottom-up) approach, with a focus on letting the data “speak for itself”, without being influenced by preexisting theories or the researcher’s preconceptions.

As an example, let’s assume your research aims involved understanding how people cope with chronic pain from a specific medical condition, with a view to developing a theory around this. In this case, grounded theory design would allow you to explore this concept thoroughly without preconceptions about what coping mechanisms might exist. You may find that some patients prefer cognitive-behavioural therapy (CBT) while others prefer to rely on herbal remedies. Based on multiple, iterative rounds of analysis, you could then develop a theory in this regard, derived directly from the data (as opposed to other preexisting theories and models).

Grounded theory typically involves collecting data through interviews or observations and then analysing it to identify patterns and themes that emerge from the data. These emerging ideas are then validated by collecting more data until a saturation point is reached (i.e., no new information can be squeezed from the data). From that base, a theory can then be developed.

As you can see, grounded theory is ideally suited to studies where the research aims involve theory generation, especially in under-researched areas. Keep in mind though that this type of research design can be quite time-intensive, given the need for multiple rounds of data collection and analysis.

Ethnographic Research Design

Ethnographic design involves observing and studying a culture-sharing group of people in their natural setting to gain insight into their behaviours, beliefs, and values. The focus here is on observing participants in their natural environment (as opposed to a controlled environment). This typically involves the researcher spending an extended period of time with the participants in their environment, carefully observing and taking field notes.

All of this is not to say that ethnographic research design relies purely on observation. On the contrary, this design typically also involves in-depth interviews to explore participants’ views, beliefs, etc. However, unobtrusive observation is a core component of the ethnographic approach.

As an example, an ethnographer may study how different communities celebrate traditional festivals or how individuals from different generations interact with technology differently. This may involve a lengthy period of observation, combined with in-depth interviews to further explore specific areas of interest that emerge as a result of the observations that the researcher has made.

As you can probably imagine, ethnographic research design has the ability to provide rich, contextually embedded insights into the socio-cultural dynamics of human behaviour within a natural, uncontrived setting. Naturally, however, it does come with its own set of challenges, including researcher bias (since the researcher can become quite immersed in the group), participant confidentiality and, predictably, ethical complexities. All of these need to be carefully managed if you choose to adopt this type of research design.

Case Study Design

With case study research design, you, as the researcher, investigate a single individual (or a single group of individuals) to gain an in-depth understanding of their experiences, behaviours or outcomes. Unlike other research designs that are aimed at larger sample sizes, case studies offer a deep dive into the specific circumstances surrounding a person, group of people, event or phenomenon, generally within a bounded setting or context.

As an example, a case study design could be used to explore the factors influencing the success of a specific small business. This would involve diving deeply into the organisation to explore and understand what makes it tick – from marketing to HR to finance. In terms of data collection, this could include interviews with staff and management, review of policy documents and financial statements, surveying customers, etc.

While the above example is focused squarely on one organisation, it’s worth noting that case study research designs can have different variations, including single-case, multiple-case and longitudinal designs. As the example shows, a single-case design involves intensely examining a single entity to understand its unique characteristics and complexities. Conversely, in a multiple-case design, multiple cases are compared and contrasted to identify patterns and commonalities. Lastly, in a longitudinal case design, a single case or multiple cases are studied over an extended period of time to understand how factors develop over time.

As you can see, a case study research design is particularly useful where a deep and contextualised understanding of a specific phenomenon or issue is desired. However, this strength is also its weakness. In other words, you can’t generalise the findings from a case study to the broader population. So, keep this in mind if you’re considering going the case study route.

How To Choose A Research Design

Having worked through all of these potential research designs, you’d be forgiven for feeling a little overwhelmed and wondering, “But how do I decide which research design to use?”. While we could write an entire post covering that alone, here are a few factors to consider that will help you choose a suitable research design for your study.

Data type: The first determining factor is naturally the type of data you plan to be collecting – i.e., qualitative or quantitative. This may sound obvious, but we have to be clear about this – don’t try to use a quantitative research design on qualitative data (or vice versa)!

Research aim(s) and question(s): As with all methodological decisions, your research aim and research questions will heavily influence your research design. For example, if your research aims involve developing a theory from qualitative data, grounded theory would be a strong option. Similarly, if your research aims involve identifying and measuring causal relationships between variables, one of the experimental designs would likely be a better option.

Time: It’s essential that you consider any time constraints you have, as this will impact the type of research design you can choose. For example, if you’ve only got a month to complete your project, a lengthy design such as ethnography wouldn’t be a good fit.

Resources: Take into account the resources realistically available to you, as these need to factor into your research design choice. For example, if you require highly specialised lab equipment to execute an experimental design, you need to be sure that you’ll have access to that before you make a decision.

Keep in mind that when it comes to research, it’s important to manage your risks and play as conservatively as possible. If your entire project relies on you achieving a huge sample, having access to niche equipment or holding interviews with very difficult-to-reach participants, you’re creating risks that could kill your project. So, be sure to think through your choices carefully and make sure that you have backup plans for any existential risks. Remember that a relatively simple methodology executed well will typically earn better marks than a highly complex methodology executed poorly.

Recap: Key Takeaways

We’ve covered a lot of ground here. Let’s recap by looking at the key takeaways:

- Research design refers to the overall plan, structure or strategy that guides a research project, from its conception to the final analysis of data.

- Research designs for quantitative studies include descriptive, correlational, experimental and quasi-experimental designs.

- Research designs for qualitative studies include phenomenological, grounded theory, ethnographic and case study designs.

- When choosing a research design, you need to consider a variety of factors, including the type of data you’ll be working with, your research aims and questions, your time and the resources available to you.


Research Design | Step-by-Step Guide with Examples

Published on 5 May 2022 by Shona McCombes. Revised on 20 March 2023.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall aims and approach

- The type of research design you’ll use

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research aims and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions


Step 1: Consider your aims and approach

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities – start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.

Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

It’s also possible to use a mixed methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by travelling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.


Step 2: Choose a type of research design

Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

Quantitative designs can be split into four main types. Experimental and quasi-experimental designs allow you to test cause-and-effect relationships, while descriptive and correlational designs allow you to measure variables and describe relationships between them.

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).

Experiments are the strongest way to test cause-and-effect relationships without the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re often also more difficult and expensive to implement.

Types of qualitative research designs

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analysing the data.

Step 3: Identify your population and sampling method

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study – plants, animals, organisations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region, or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalise your results to the population as a whole.

Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
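To show what probability sampling can look like once you have a complete list of the population (a sampling frame), here is a brief sketch of two probability methods: a simple random sample and a proportional stratified sample. The function names, the student codes and the "faculty" strata are hypothetical and exist only for this illustration.

```python
import random


def simple_random_sample(population, n, seed=None):
    """Every unit in the sampling frame has the same chance of selection."""
    return random.Random(seed).sample(population, n)


def proportional_stratified_sample(population, stratum_of, fraction, seed=None):
    """Randomly sample the same fraction from each stratum (subgroup)."""
    rng = random.Random(seed)
    strata = {}
    for unit in population:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # at least one unit per stratum
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical sampling frame: 60 science students and 40 arts students
students = [(f"S{n:03d}", "science") for n in range(60)]
students += [(f"A{n:03d}", "arts") for n in range(40)]

srs = simple_random_sample(students, 10, seed=1)
stratified = proportional_stratified_sample(students, lambda s: s[1], 0.10, seed=1)

print(len(srs), "students in the simple random sample")
print(sum(1 for s in stratified if s[1] == "science"), "science students in the stratified sample")
print(sum(1 for s in stratified if s[1] == "arts"), "arts students in the stratified sample")
```

The simple random sample may over- or under-represent a faculty by chance, whereas the stratified version guarantees each faculty appears in proportion to its size. Non-probability approaches, such as surveying whoever is easiest to reach, skip the random selection step entirely, which is why their results are harder to generalise.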

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalise to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Step 4: Choose your data collection methods

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviours, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.

Observation methods

Observations allow you to collect data unobtrusively, observing characteristics, behaviours, or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.

Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers already collected – for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

Step 5: Plan your data collection procedures

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are reliable and valid.

Operationalisation

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalisation means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in – for example, questionnaires or inventories whose reliability and validity have already been established.
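As a small illustration of operationalisation, the sketch below turns an abstract concept ("satisfaction") into a number by scoring agreement with a handful of survey statements on a 5-point scale. The item names (`q1` to `q3`), the response labels and the choice to reverse-score `q3` are all assumptions made for this example, not an established instrument.

```python
# Hypothetical 5-point agreement scale
LIKERT = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}

# Assumed here: q3 is negatively worded, so agreement signals LOW satisfaction
REVERSE_SCORED = {"q3"}


def satisfaction_score(responses):
    """Average the item scores (1 to 5), flipping any reverse-scored items."""
    scores = []
    for item, answer in responses.items():
        value = LIKERT[answer]
        if item in REVERSE_SCORED:
            value = 6 - value  # maps 1<->5 and 2<->4, leaves 3 unchanged
        scores.append(value)
    return sum(scores) / len(scores)


# One participant's (made-up) answers
responses = {"q1": "agree", "q2": "strongly agree", "q3": "disagree"}
score = satisfaction_score(responses)
print(f"Satisfaction score: {score:.2f} out of 5")
```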

Reliability and validity

Reliability means your results can be consistently reproduced, while validity means that you’re actually measuring the concept you’re interested in.

For valid and reliable results, your measurement materials should be thoroughly researched and carefully designed. Plan your procedures to make sure you carry out the same steps in the same way for each participant.

If you’re developing a new questionnaire or other instrument to measure a specific concept, running a pilot study allows you to check its validity and reliability in advance.
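One check that researchers often run on pilot data is Cronbach's alpha, a measure of internal consistency (how closely a set of questionnaire items move together). This is only one facet of reliability, and it isn't prescribed above; the sketch below simply shows how it could be calculated, using invented pilot responses.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of items, each a list of scores aligned by respondent."""
    k = len(item_scores)
    if k < 2:
        raise ValueError("need at least two items")
    # Each respondent's total score across all items
    totals = [sum(respondent) for respondent in zip(*item_scores)]
    sum_item_variances = sum(variance(item) for item in item_scores)
    total_variance = variance(totals)
    return (k / (k - 1)) * (1 - sum_item_variances / total_variance)


# Hypothetical pilot data: 3 questionnaire items answered by 5 respondents
pilot_items = [
    [4, 5, 3, 4, 2],  # item 1
    [4, 4, 3, 5, 2],  # item 2
    [5, 5, 2, 4, 1],  # item 3
]

alpha = cronbach_alpha(pilot_items)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Higher values indicate that the items are more consistent with one another. What counts as "high enough" depends on your field, so check the conventions in your discipline before relying on a specific cut-off.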

Sampling procedures

As well as choosing an appropriate sampling method, you need a concrete plan for how you’ll actually contact and recruit your selected sample.

That means making decisions about things like:

- How many participants do you need for an adequate sample size?

- What inclusion and exclusion criteria will you use to identify eligible participants?

- How will you contact your sample – by mail, online, by phone, or in person?

If you’re using a probability sampling method, it’s important that everyone who is randomly selected actually participates in the study. How will you ensure a high response rate?

If you’re using a non-probability method, how will you avoid bias and ensure a representative sample?
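For the sample size question, one widely used starting point when you're estimating a proportion (for example, the share of students who agree with a statement) is Cochran's formula, optionally adjusted for a small, known population. The sketch below is an illustration under those assumptions only; the function name and default values are made up, and studies that test hypotheses usually need a power analysis instead.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, confidence=0.95,
                               expected_proportion=0.5, population_size=None):
    """Cochran's sample size for estimating a proportion.

    expected_proportion=0.5 is the most conservative (largest sample) assumption.
    """
    # z-score for a two-sided confidence level (about 1.96 for 95%)
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = expected_proportion
    n = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    if population_size is not None:
        # Finite population correction for small, known populations
        n = n / (1 + (n - 1) / population_size)
    return math.ceil(n)


print(sample_size_for_proportion(0.05))                        # large population
print(sample_size_for_proportion(0.05, population_size=1000))  # population of 1,000
```

Keep in mind that this is the number of completed responses you need, so you'd have to contact more people than this to allow for non-response.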

Data management

It’s also important to create a data management plan for organising and storing your data.

Will you need to transcribe interviews or perform data entry for observations? You should anonymise and safeguard any sensitive data, and make sure it’s backed up regularly.

Keeping your data well organised will save time when it comes to analysing them. It can also help other researchers validate and add to your findings.
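As one possible way of handling the anonymisation step, the sketch below swaps participants' names for random participant codes and returns the name-to-code key separately, so that the key can be stored apart from the research data. Strictly speaking this is pseudonymisation, since anyone holding the key can re-identify people. The field names and records are invented, and your institution's ethics requirements may call for something stricter.

```python
import random


def pseudonymise(records, id_field="name", seed=None):
    """Replace the identifying field with a participant code.

    Returns (cleaned_records, key). Store the key separately and securely.
    """
    rng = random.Random(seed)
    identifiers = sorted({record[id_field] for record in records})
    rng.shuffle(identifiers)  # so codes don't reveal alphabetical order
    key = {identifier: f"P{index:03d}"
           for index, identifier in enumerate(identifiers, start=1)}
    cleaned = []
    for record in records:
        cleaned_record = {k: v for k, v in record.items() if k != id_field}
        cleaned_record["participant_code"] = key[record[id_field]]
        cleaned.append(cleaned_record)
    return cleaned, key


# Hypothetical interview records
records = [
    {"name": "Alice Example", "session": 1, "notes": "first interview"},
    {"name": "Bob Example", "session": 1, "notes": "first interview"},
    {"name": "Alice Example", "session": 2, "notes": "follow-up"},
]

cleaned, key = pseudonymise(records, seed=7)
for row in cleaned:
    print(row)
```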

Step 6: Decide on your data analysis strategies

On their own, raw data can’t answer your research question. The last step of designing your research is planning how you’ll analyse the data.

Quantitative data analysis

In quantitative research, you’ll most likely use some form of statistical analysis. With statistics, you can summarise your sample data, make estimates, and test hypotheses.

Using descriptive statistics, you can summarise your sample data in terms of:

- The distribution of the data (e.g., the frequency of each score on a test)

- The central tendency of the data (e.g., the mean to describe the average score)

- The variability of the data (e.g., the standard deviation to describe how spread out the scores are)

The specific calculations you can do depend on the level of measurement of your variables.
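The three kinds of descriptive summary listed above can be sketched with Python's standard library. The test scores are made up for illustration, and the function name is an assumption.

```python
from collections import Counter
from statistics import mean, median, stdev


def summarise(scores):
    """Return the distribution, central tendency and variability of a list of scores."""
    return {
        "frequencies": dict(Counter(scores)),  # distribution
        "mean": mean(scores),                  # central tendency
        "median": median(scores),              # central tendency, robust to outliers
        "std_dev": stdev(scores),              # variability (sample standard deviation)
    }


# Hypothetical test scores for five participants.
scores = [55, 60, 60, 70, 85]
summary = summarise(scores)
for name, value in summary.items():
    print(name, value)
```

The mean and standard deviation only make sense for interval or ratio data; for ordinal data you would typically report the median instead.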

Using inferential statistics, you can:

- Make estimates about the population based on your sample data.

- Test hypotheses about a relationship between variables.

Regression and correlation tests look for associations between two or more variables, while comparison tests (such as t tests and ANOVAs) look for differences in the outcomes of different groups.

Your choice of statistical test depends on various aspects of your research design, including the types of variables you’re dealing with and the distribution of your data.
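As an example of a comparison test, the sketch below computes the test statistic and degrees of freedom for Welch's t test, a variant of the t test that does not assume equal variances in the two groups. The function name and the data are hypothetical; turning the statistic into a p value requires the t distribution, which a statistics package would normally provide.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Return (t statistic, degrees of freedom) for Welch's two-sample t test."""
    # Squared standard error contributed by each group.
    se_a = variance(group_a) / len(group_a)
    se_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (se_a + se_b) ** 2 / (
        se_a ** 2 / (len(group_a) - 1) + se_b ** 2 / (len(group_b) - 1)
    )
    return t, df


# Hypothetical outcome scores for a control group and a treatment group.
control = [1, 2, 3, 4, 5]
treatment = [2, 3, 4, 5, 6]
t_stat, dof = welch_t(control, treatment)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```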

Qualitative data analysis

In qualitative research, your data will usually be very dense with information and ideas. Instead of summing it up in numbers, you’ll need to comb through the data in detail, interpret its meanings, identify patterns, and extract the parts that are most relevant to your research question.

Two of the most common approaches to doing this are thematic analysis and discourse analysis.

There are many other ways of analysing qualitative data depending on the aims of your research. To get a sense of potential approaches, try reading some qualitative research papers in your field.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.
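A simple random sample like the one in the student example could be drawn as follows. This is a sketch: the sampling frame of 5,000 student IDs and the function name are invented, and fixing the seed is only there so the draw can be reproduced and documented.

```python
import random


def draw_simple_random_sample(sampling_frame, sample_size, seed=None):
    """Select `sample_size` units without replacement, each with equal probability."""
    if sample_size > len(sampling_frame):
        raise ValueError("sample cannot be larger than the sampling frame")
    rng = random.Random(seed)
    return rng.sample(sampling_frame, sample_size)


# Hypothetical sampling frame: IDs for 5,000 enrolled students.
students = [f"student_{i:04d}" for i in range(1, 5001)]
sample = draw_simple_random_sample(students, 100, seed=42)
print(sample[:5])
```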

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.
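To illustrate operationalisation, the sketch below turns answers to a hypothetical three-item self-rating scale into a single score. The item names, the 1-5 response scale, and the choice of which item is reverse-worded are all assumptions made for the example.

```python
from statistics import mean


def scale_score(responses, reverse_items=(), scale_min=1, scale_max=5):
    """Average item ratings into one score, flipping reverse-worded items first."""
    adjusted = []
    for item, value in responses.items():
        if not scale_min <= value <= scale_max:
            raise ValueError(f"{item}: rating {value} is outside the scale")
        if item in reverse_items:
            # On a 1-5 scale, 1 becomes 5, 2 becomes 4, and so on.
            value = scale_min + scale_max - value
        adjusted.append(value)
    return mean(adjusted)


# Hypothetical items: q2 is worded positively ("I feel at ease in crowds"),
# so a low rating on q2 indicates higher social anxiety.
answers = {"q1": 5, "q2": 1, "q3": 4}
score = scale_score(answers, reverse_items={"q2"})
print(round(score, 2))
```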

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

McCombes, S. (2023, March 20). Research Design | Step-by-Step Guide with Examples. Scribbr. Retrieved 29 April 2024, from https://www.scribbr.co.uk/research-methods/research-design/


Research Design: What it is, Elements & Types

Can you imagine doing research without a plan? Probably not. When we discuss a strategy to collect, study, and evaluate data, we talk about research design. This design addresses problems and creates a consistent and logical model for data analysis. Let’s learn more about it.

What is Research Design?

Research design is the framework of research methods and techniques chosen by a researcher to conduct a study. The design allows researchers to sharpen the research methods suitable for the subject matter and set up their studies for success.

The design of a research study specifies the type of research (experimental, survey, correlational, semi-experimental, review) and its sub-type (for example, experimental design, research problem, descriptive case study).

A research design covers three main components:

- Data collection

- Measurement

- Data Analysis

The research problem an organization faces will determine the design, not vice-versa. The design phase of a study determines which tools to use and how they are used.

The Process of Research Design

The research design process is a systematic and structured approach to conducting research. The process is essential to ensure that the study is valid, reliable, and produces meaningful results.

- Consider your aims and approaches: Determine the research questions and objectives, and identify the theoretical framework and methodology for the study.

- Choose a type of Research Design: Select the appropriate research design, such as experimental, correlational, survey, case study, or ethnographic, based on the research questions and objectives.

- Identify your population and sampling method: Determine the target population and sample size, and choose the sampling method, such as random, stratified random sampling, or convenience sampling.

- Choose your data collection methods: Decide on the data collection methods, such as surveys, interviews, observations, or experiments, and select the appropriate instruments or tools for collecting data.

- Plan your data collection procedures: Develop a plan for data collection, including the timeframe, location, and personnel involved, and ensure ethical considerations.

- Decide on your data analysis strategies: Select the appropriate data analysis techniques, such as statistical analysis, content analysis, or discourse analysis, and plan how to interpret the results.

The process of research design is a critical step in conducting research. By following the steps of research design, researchers can ensure that their study is well-planned, ethical, and rigorous.
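The sampling step above mentions stratified random sampling. A minimal sketch of proportional allocation follows; the function name, the two strata, and their sizes are hypothetical, and because each stratum's share is rounded, the final sample can differ slightly from the requested size when the proportions do not divide evenly.

```python
import random
from collections import defaultdict


def stratified_sample(units, stratum_of, total_sample_size, seed=None):
    """Draw a random sample from each stratum in proportion to its size."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in units:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        # Proportional allocation, rounded to a whole number of units.
        share = round(total_sample_size * len(members) / len(units))
        sample.extend(rng.sample(members, min(share, len(members))))
    return sample


# Hypothetical population: 60 undergraduates and 40 postgraduates.
population = [("undergraduate", i) for i in range(60)]
population += [("postgraduate", i) for i in range(40)]
sample = stratified_sample(population, lambda unit: unit[0], 10, seed=1)
print(sample)
```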

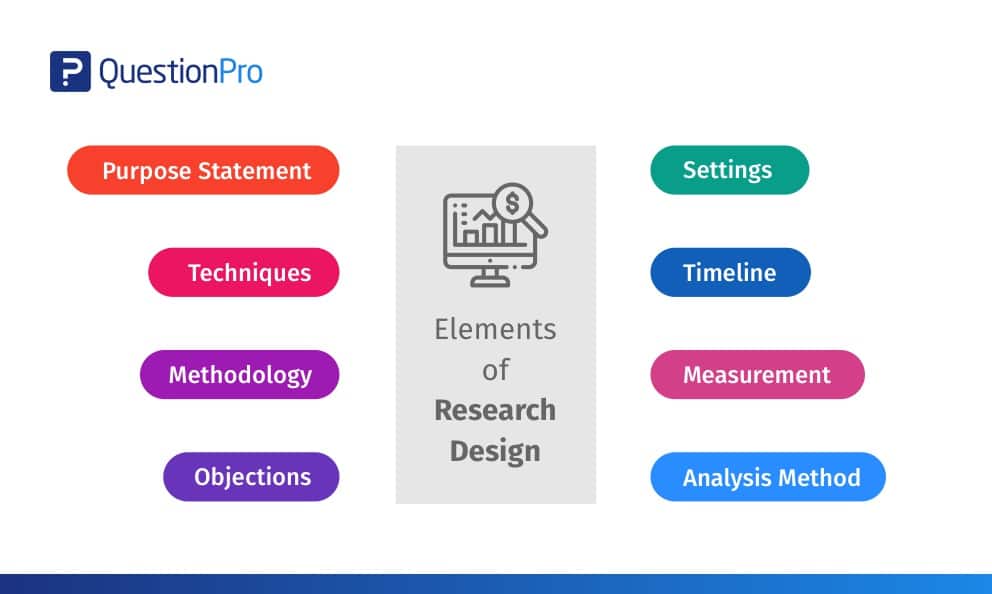

Research Design Elements

Impactful research keeps bias in the data to a minimum and increases trust in the accuracy of the collected data. In experimental research, a design that produces the smallest margin of error is generally considered the desired outcome. The essential elements are:

- Accurate purpose statement

- Techniques to be implemented for collecting and analyzing research

- The method applied for analyzing collected details

- Type of research methodology

- Probable objections to research

- Settings for the research study

- Measurement of analysis

Characteristics of Research Design

A proper design sets your study up for success. Successful research studies provide insights that are accurate and unbiased. You’ll need to create a survey that meets all of the main characteristics of a design. There are four key characteristics:

- Neutrality: When you set up your study, you may have to make assumptions about the data you expect to collect. The results projected in the research should be neutral and free from research bias. Gather opinions on the final evaluated scores and conclusions from multiple individuals, and check whether they agree with the results.

- Reliability: With regularly conducted research, the researcher expects similar results every time. You’ll only be able to reach the desired results if your design is reliable. Your plan should indicate how to form research questions to ensure the standard of results.

- Validity: There are multiple measuring tools available. However, the only correct measuring tools are those which help a researcher in gauging results according to the objective of the research. The questionnaire developed from this design will then be valid.

- Generalization: The outcome of your design should apply to a population and not just a restricted sample. A generalized method implies that your survey can be conducted on any part of a population with similar accuracy.

These factors affect how respondents answer the research questions, so a good design should balance all four characteristics.

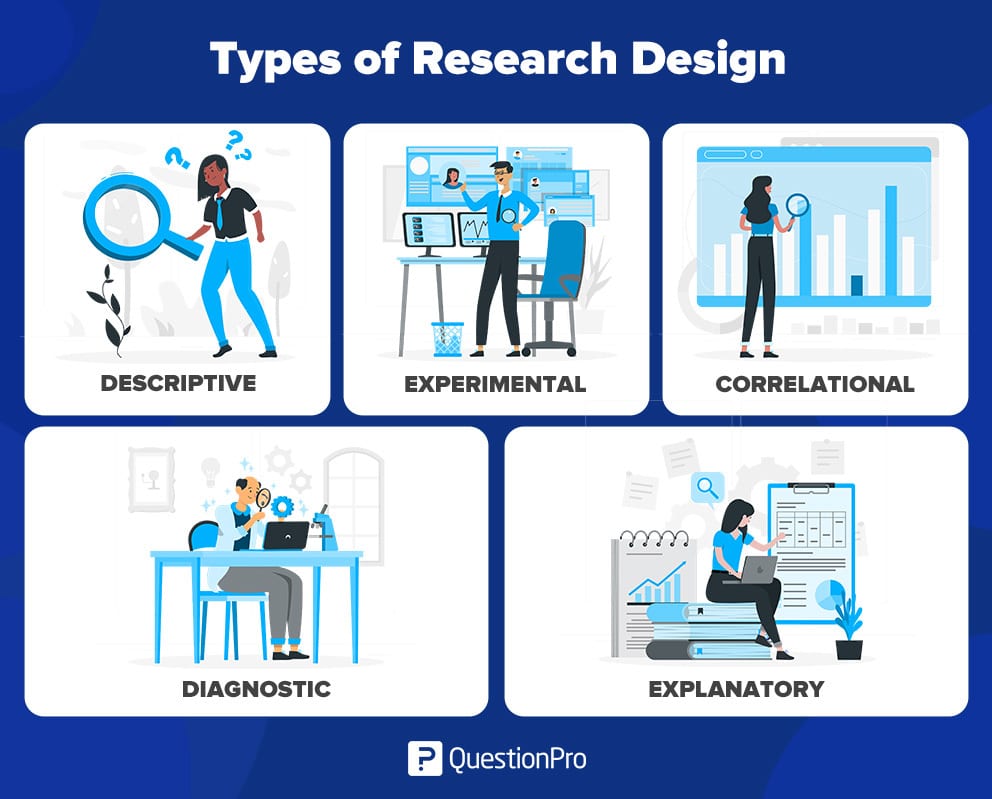

Research Design Types

A researcher must clearly understand the various types to select which model to implement for a study. Like the research itself, the design of your analysis can be broadly classified into quantitative and qualitative.

Qualitative research

Qualitative research explores meanings, experiences, and motivations using non-numerical data such as interviews, observations, and open-ended responses, rather than mathematical calculations. Researchers rely on qualitative observation research methods to understand “why” a particular phenomenon exists and “what” respondents have to say about it.

Quantitative research

Quantitative research is for cases where statistical conclusions are essential for collecting actionable insights. It measures variables numerically and uses mathematical calculations and statistical methods to test relationships between them. Numbers provide a clear perspective for making critical business decisions. Quantitative research methods are valuable for the growth of any organization. Insights drawn from complex numerical data and analysis prove to be highly effective when making decisions about the business’s future.

Qualitative Research vs Quantitative Research

In summary, qualitative research is more exploratory and focuses on understanding the subjective experiences of individuals, while quantitative research is more focused on objective data and statistical analysis.

You can further break down the types of research design into five categories:

1. Descriptive: In a descriptive design, a researcher is solely interested in describing the situation or case under study. It is a theory-based design method created by gathering, analyzing, and presenting collected data. This allows a researcher to provide insights into the why and how of research. Descriptive design helps others better understand the need for the research. If the problem statement is not clear, you can conduct exploratory research.

2. Experimental: Experimental research establishes a relationship between the cause and effect of a situation. It is a causal research design where one observes the impact caused by the independent variable on the dependent variable. For example, one monitors the influence of an independent variable such as a price on a dependent variable such as customer satisfaction or brand loyalty. It is an efficient research method as it contributes to solving a problem.

The independent variable is manipulated to monitor the change it produces in the dependent variable. The social sciences often use this design to observe human behavior by comparing two groups. Researchers can have participants change their actions and study how the people around them react to understand social psychology better.

3. Correlational research: Correlational research is a non-experimental research technique. It helps researchers establish whether a relationship exists between two variables. The researcher manipulates neither variable and makes no assumption about causation; statistical analysis techniques calculate the strength of the relationship between them. This type of research requires measurements of two different variables.

A correlation coefficient quantifies the relationship between two variables, and its value ranges between -1 and +1. A coefficient close to +1 indicates a strong positive relationship between the variables, a coefficient close to -1 indicates a strong negative relationship, and a coefficient near 0 indicates little or no linear relationship.
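The most widely used correlation coefficient is Pearson's r. The sketch below computes it from first principles; the function name and the price and satisfaction figures are hypothetical and only illustrate how the sign of the coefficient behaves.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient between two equally long lists of numbers."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares of deviations from the means.
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cross / sqrt(ss_x * ss_y)


# Hypothetical data: as price rises, reported satisfaction falls.
price = [10, 12, 14, 16]
satisfaction = [8, 7, 5, 4]
r = pearson_r(price, satisfaction)
print(f"r = {r:.2f}")
```

A strong coefficient describes how closely the two variables move together; on its own it says nothing about which variable, if either, causes the other.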

4. Diagnostic research: In diagnostic design, the researcher is looking to evaluate the underlying cause of a specific topic or phenomenon. This method helps one learn more about the factors that create troublesome situations.

This design has three parts of the research:

- Inception of the issue

- Diagnosis of the issue

- Solution for the issue

5. Explanatory research: Explanatory design uses a researcher’s ideas and thoughts on a subject to further explore their theories. The study explains unexplored aspects of a subject and details the research questions’ what, how, and why.

Benefits of Research Design

There are several benefits of having a well-designed research plan, including:

- Clarity of research objectives: Research design provides a clear understanding of the research objectives and the desired outcomes.

- Increased validity and reliability: Research design helps to minimize the risk of bias and to control extraneous variables, which supports the validity and reliability of results.

- Improved data collection: Research design helps to ensure that the proper data is collected and data is collected systematically and consistently.

- Better data analysis: Research design helps ensure that the collected data can be analyzed effectively, providing meaningful insights and conclusions.

- Improved communication: A well-designed research plan helps ensure the results are communicated clearly and persuasively within the research team and to external stakeholders.

- Efficient use of resources: By reducing the risk of waste and maximizing the impact of the research, research design helps to ensure that resources are used efficiently.

A well-designed research plan is essential for successful research, providing clear and meaningful insights and ensuring that resources are used efficiently.

QuestionPro offers a comprehensive solution for researchers looking to conduct research. With its user-friendly interface, robust data collection and analysis tools, and the ability to integrate results from multiple sources, QuestionPro provides a versatile platform for designing and executing research projects.

Our robust suite of research tools provides you with all you need to derive research results. Our online survey platform includes custom point-and-click logic and advanced question types. Uncover the insights that matter the most.


Organizing Your Social Sciences Research Paper

- Types of Research Designs


Introduction

Before beginning your paper, you need to decide how you plan to design the study.

The research design refers to the overall strategy and analytical approach that you have chosen in order to integrate, in a coherent and logical way, the different components of the study, thus ensuring that the research problem will be thoroughly investigated. It constitutes the blueprint for the collection, measurement, and interpretation of information and data. Note that the research problem determines the type of design you choose, not the other way around!

De Vaus, D. A. Research Design in Social Research. London: SAGE, 2001; Trochim, William M.K. Research Methods Knowledge Base. 2006.

General Structure and Writing Style

The function of a research design is to ensure that the evidence obtained enables you to effectively address the research problem logically and as unambiguously as possible. In social sciences research, obtaining information relevant to the research problem generally entails specifying the type of evidence needed to test the underlying assumptions of a theory, to evaluate a program, or to accurately describe and assess meaning related to an observable phenomenon.

With this in mind, a common mistake made by researchers is that they begin their investigations before they have thought critically about what information is required to address the research problem. Without attending to these design issues beforehand, the overall research problem will not be adequately addressed and any conclusions drawn will run the risk of being weak and unconvincing. As a consequence, the overall validity of the study will be undermined.

The length and complexity of describing the research design in your paper can vary considerably, but any well-developed description will achieve the following:

- Identify the research problem clearly and justify its selection, particularly in relation to any valid alternative designs that could have been used,

- Review and synthesize previously published literature associated with the research problem,

- Clearly and explicitly specify hypotheses [i.e., research questions] central to the problem,

- Effectively describe the information and/or data which will be necessary for an adequate testing of the hypotheses and explain how such information and/or data will be obtained, and

- Describe the methods of analysis to be applied to the data in determining whether the hypotheses are supported or refuted.

The research design is usually incorporated into the introduction of your paper. You can obtain an overall sense of what to do by reviewing studies that have utilized the same research design [e.g., using a case study approach]. This can help you develop an outline to follow for your own paper.

NOTE: Use the SAGE Research Methods Online and Cases and the SAGE Research Methods Videos databases to search for scholarly resources on how to apply specific research designs and methods. The Research Methods Online database contains links to more than 175,000 pages of SAGE publisher's book, journal, and reference content on quantitative, qualitative, and mixed research methodologies. Also included is a collection of case studies of social research projects that can be used to help you better understand abstract or complex methodological concepts. The Research Methods Videos database contains hours of tutorials, interviews, video case studies, and mini-documentaries covering the entire research process.

Creswell, John W. and J. David Creswell. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 5th edition. Thousand Oaks, CA: Sage, 2018; De Vaus, D. A. Research Design in Social Research. London: SAGE, 2001; Gorard, Stephen. Research Design: Creating Robust Approaches for the Social Sciences. Thousand Oaks, CA: Sage, 2013; Leedy, Paul D. and Jeanne Ellis Ormrod. Practical Research: Planning and Design. Tenth edition. Boston, MA: Pearson, 2013; Vogt, W. Paul, Dianna C. Gardner, and Lynne M. Haeffele. When to Use What Research Design. New York: Guilford, 2012.

Action Research Design

Definition and Purpose

The essentials of action research design follow a characteristic cycle whereby initially an exploratory stance is adopted, where an understanding of a problem is developed and plans are made for some form of interventionary strategy. Then the intervention is carried out [the "action" in action research] during which time, pertinent observations are collected in various forms. The new interventional strategies are carried out, and this cyclic process repeats, continuing until a sufficient understanding of [or a valid implementation solution for] the problem is achieved. The protocol is iterative or cyclical in nature and is intended to foster deeper understanding of a given situation, starting with conceptualizing and particularizing the problem and moving through several interventions and evaluations.

What do these studies tell you?

- This is a collaborative and adaptive research design that lends itself to use in work or community situations.

- Design focuses on pragmatic and solution-driven research outcomes rather than testing theories.

- When practitioners use action research, it has the potential to increase the amount they learn consciously from their experience; the action research cycle can be regarded as a learning cycle.

- Action research studies often have direct and obvious relevance to improving practice and advocating for change.

- There are no hidden controls or preemption of direction by the researcher.

What these studies don't tell you?

- It is harder to do than conducting conventional research because the researcher takes on responsibilities of advocating for change as well as for researching the topic.

- Action research is much harder to write up because it is less likely that you can use a standard format to report your findings effectively [i.e., data is often in the form of stories or observation].

- Personal over-involvement of the researcher may bias research results.

- The cyclic nature of action research to achieve its twin outcomes of action [e.g. change] and research [e.g. understanding] is time-consuming and complex to conduct.

- Advocating for change usually requires buy-in from study participants.

Coghlan, David and Mary Brydon-Miller. The Sage Encyclopedia of Action Research. Thousand Oaks, CA: Sage, 2014; Efron, Sara Efrat and Ruth Ravid. Action Research in Education: A Practical Guide. New York: Guilford, 2013; Gall, Meredith. Educational Research: An Introduction. Chapter 18, Action Research. 8th ed. Boston, MA: Pearson/Allyn and Bacon, 2007; Gorard, Stephen. Research Design: Creating Robust Approaches for the Social Sciences. Thousand Oaks, CA: Sage, 2013; Kemmis, Stephen and Robin McTaggart. “Participatory Action Research.” In Handbook of Qualitative Research. Norman Denzin and Yvonna S. Lincoln, eds. 2nd ed. (Thousand Oaks, CA: SAGE, 2000), pp. 567-605; McNiff, Jean. Writing and Doing Action Research. London: Sage, 2014; Reason, Peter and Hilary Bradbury. Handbook of Action Research: Participative Inquiry and Practice. Thousand Oaks, CA: SAGE, 2001.

Case Study Design

A case study is an in-depth study of a particular research problem rather than a sweeping statistical survey or comprehensive comparative inquiry. It is often used to narrow down a very broad field of research into one or a few easily researchable examples. The case study research design is also useful for testing whether a specific theory and model actually applies to phenomena in the real world. It is a useful design when not much is known about an issue or phenomenon.

What do these studies tell you?

- Approach excels at bringing us to an understanding of a complex issue through detailed contextual analysis of a limited number of events or conditions and their relationships.

- A researcher using a case study design can apply a variety of methodologies and rely on a variety of sources to investigate a research problem.

- Design can extend experience or add strength to what is already known through previous research.

- Social scientists, in particular, make wide use of this research design to examine contemporary real-life situations and provide the basis for the application of concepts and theories and the extension of methodologies.

- The design can provide detailed descriptions of specific and rare cases.

What these studies don't tell you?

- A single or small number of cases offers little basis for establishing reliability or for generalizing the findings to a wider population of people, places, or things.

- Intense exposure to the study of a case may bias a researcher's interpretation of the findings.

- Design does not facilitate assessment of cause and effect relationships.

- Vital information may be missing, making the case hard to interpret.

- The case may not be representative or typical of the larger problem being investigated.

- If a case is selected because it represents a very unusual or unique phenomenon or problem for study, then your interpretation of the findings can only apply to that particular case.

Case Studies. Writing@CSU. Colorado State University; Anastas, Jeane W. Research Design for Social Work and the Human Services. Chapter 4, Flexible Methods: Case Study Design. 2nd ed. New York: Columbia University Press, 1999; Gerring, John. “What Is a Case Study and What Is It Good for?” American Political Science Review 98 (May 2004): 341-354; Greenhalgh, Trisha, editor. Case Study Evaluation: Past, Present and Future Challenges. Bingley, UK: Emerald Group Publishing, 2015; Mills, Albert J., Gabrielle Durepos, and Elden Wiebe, editors. Encyclopedia of Case Study Research. Thousand Oaks, CA: SAGE Publications, 2010; Stake, Robert E. The Art of Case Study Research. Thousand Oaks, CA: SAGE, 1995; Yin, Robert K. Case Study Research: Design and Methods. Applied Social Research Methods Series, no. 5. 3rd ed. Thousand Oaks, CA: SAGE, 2003.

Causal Design

Causality studies may be thought of as understanding a phenomenon in terms of conditional statements in the form, “If X, then Y.” This type of research is used to measure what impact a specific change will have on existing norms and assumptions. Most social scientists seek causal explanations that reflect tests of hypotheses. Causal effect (nomothetic perspective) occurs when variation in one phenomenon, an independent variable, leads to or results, on average, in variation in another phenomenon, the dependent variable.

Conditions necessary for determining causality:

- Empirical association -- a valid conclusion is based on finding an association between the independent variable and the dependent variable.

- Appropriate time order -- to conclude that causation was involved, one must see that cases were exposed to variation in the independent variable before variation in the dependent variable.

- Nonspuriousness -- a relationship between two variables that is not due to variation in a third variable.

What do these studies tell you?

- Causality research designs assist researchers in understanding why the world works the way it does through the process of proving a causal link between variables and by the process of eliminating other possibilities.

- Replication is possible.

- There is greater confidence the study has internal validity due to the systematic subject selection and equity of groups being compared.

What these studies don't tell you?

- Not all relationships are causal! The possibility always exists that, by sheer coincidence, two unrelated events appear to be related [e.g., Punxsutawney Phil could accurately predict the duration of winter for five consecutive years but, the fact remains, he's just a big, furry rodent].

- Conclusions about causal relationships are difficult to determine due to a variety of extraneous and confounding variables that exist in a social environment. This means causality can only be inferred, never proven.

- If two variables are correlated, the cause must come before the effect. However, even though two variables might be causally related, it can sometimes be difficult to determine which variable comes first and, therefore, to establish which variable is the actual cause and which is the actual effect.

Beach, Derek and Rasmus Brun Pedersen. Causal Case Study Methods: Foundations and Guidelines for Comparing, Matching, and Tracing. Ann Arbor, MI: University of Michigan Press, 2016; Bachman, Ronet. The Practice of Research in Criminology and Criminal Justice. Chapter 5, Causation and Research Designs. 3rd ed. Thousand Oaks, CA: Pine Forge Press, 2007; Brewer, Ernest W. and Jennifer Kuhn. “Causal-Comparative Design.” In Encyclopedia of Research Design. Neil J. Salkind, editor. (Thousand Oaks, CA: Sage, 2010), pp. 125-132; Causal Research Design: Experimentation. Anonymous SlideShare Presentation; Gall, Meredith. Educational Research: An Introduction. Chapter 11, Nonexperimental Research: Correlational Designs. 8th ed. Boston, MA: Pearson/Allyn and Bacon, 2007; Trochim, William M.K. Research Methods Knowledge Base. 2006.

Cohort Design

Often used in the medical sciences, but also found in the applied social sciences, a cohort study generally refers to a study conducted over a period of time involving members of a population which the subject or representative member comes from, and who are united by some commonality or similarity. Using a quantitative framework, a cohort study makes note of statistical occurrence within a specialized subgroup, united by same or similar characteristics that are relevant to the research problem being investigated, rather than studying statistical occurrence within the general population. Using a qualitative framework, cohort studies generally gather data using methods of observation. Cohorts can be either "open" or "closed."

- Open Cohort Studies [dynamic populations, such as the population of Los Angeles] involve a population that is defined just by the state of being a part of the study in question (and being monitored for the outcome). Dates of entry into and exit from the study are individually defined; therefore, the size of the study population is not constant. In open cohort studies, researchers can only calculate rate-based data, such as incidence rates and variants thereof.

- Closed Cohort Studies [static populations, such as patients entered into a clinical trial] involve participants who enter into the study at one defining point in time and where it is presumed that no new participants can enter the cohort. Given this, the number of study participants remains constant (or can only decrease).

What do these studies tell you?

- The use of cohorts is often mandatory because a randomized control study may be unethical. For example, you cannot deliberately expose people to asbestos, you can only study its effects on those who have already been exposed. Research that measures risk factors often relies upon cohort designs.

- Because cohort studies measure potential causes before the outcome has occurred, they can demonstrate that these “causes” preceded the outcome, thereby avoiding the debate as to which is the cause and which is the effect.

- Cohort analysis is highly flexible and can provide insight into effects over time in relation to a variety of different types of changes [e.g., social, cultural, political, economic].

- Either original data or secondary data can be used in this design.

- In cases where a comparative analysis of two cohorts is made [e.g., comparing a group that has been exposed to asbestos with one that has not], a researcher cannot control for all other factors that might differ between the two groups. These factors are known as confounding variables.

- Cohort studies can end up taking a long time to complete if the researcher must wait for the conditions of interest to develop within the group. This also increases the chance that key variables change during the course of the study, potentially impacting the validity of the findings.

- Due to the lack of randomization in the cohort design, its internal validity is lower than that of study designs where the researcher randomly assigns participants.
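The distinction between rate-based measures in open cohorts and simple proportions in closed cohorts can be illustrated with a small calculation. The sketch below is only an illustration under stated assumptions: the `Participant` record, the function names, and all follow-up values are hypothetical and do not come from any study cited here. It computes an incidence rate per person-year for an open cohort, where follow-up time differs by person, and a cumulative incidence for a closed cohort, where everyone enters at the same point.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    # Hypothetical record layout, assumed for illustration only.
    entry_year: float   # when the person joined the cohort
    exit_year: float    # when follow-up ended (outcome, dropout, or study end)
    had_event: bool     # whether the outcome occurred during follow-up


def incidence_rate(cohort):
    """Events per person-year; suited to open cohorts with varying follow-up."""
    person_years = sum(p.exit_year - p.entry_year for p in cohort)
    events = sum(p.had_event for p in cohort)
    return events / person_years


def cumulative_incidence(cohort):
    """Proportion of the cohort that developed the outcome; suited to closed cohorts."""
    return sum(p.had_event for p in cohort) / len(cohort)


# Open cohort: people enter and leave at individually defined dates (made-up data).
open_cohort = [
    Participant(2000, 2010, False),
    Participant(2003, 2008, True),
    Participant(2005, 2010, False),
    Participant(2001, 2006, True),
]

# Closed cohort: everyone enters at the same defining point in time (made-up data).
closed_cohort = [
    Participant(2000, 2010, False),
    Participant(2000, 2004, True),
    Participant(2000, 2010, False),
    Participant(2000, 2007, True),
]

print(incidence_rate(open_cohort))          # 2 events over 25 person-years
print(cumulative_incidence(closed_cohort))  # 2 of 4 participants
```

The open cohort is summarized per unit of follow-up time because its size is not constant, whereas the closed cohort has a fixed denominator and can be summarized as a proportion.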

Healy P, Devane D. “Methodological Considerations in Cohort Study Designs.” Nurse Researcher 18 (2011): 32-36; Glenn, Norval D, editor. Cohort Analysis . 2nd edition. Thousand Oaks, CA: Sage, 2005; Levin, Kate Ann. Study Design IV: Cohort Studies. Evidence-Based Dentistry 7 (2003): 51–52; Payne, Geoff. “Cohort Study.” In The SAGE Dictionary of Social Research Methods . Victor Jupp, editor. (Thousand Oaks, CA: Sage, 2006), pp. 31-33; Study Design 101. Himmelfarb Health Sciences Library. George Washington University, November 2011; Cohort Study. Wikipedia.

Cross-Sectional Design

Cross-sectional research designs have three distinctive features: no time dimension; a reliance on existing differences rather than change following intervention; and the selection of groups based on existing differences rather than random allocation. The cross-sectional design can only measure differences between or from among a variety of people, subjects, or phenomena rather than a process of change. As such, researchers using this design can only employ a relatively passive approach to making causal inferences based on findings.

- Cross-sectional studies provide a clear 'snapshot' of the outcome and the characteristics associated with it, at a specific point in time.

- Unlike an experimental design, where there is an active intervention by the researcher to produce and measure change or to create differences, cross-sectional designs focus on studying and drawing inferences from existing differences between people, subjects, or phenomena.

- Entails collecting data at and concerning one point in time. While longitudinal studies involve taking multiple measures over an extended period of time, cross-sectional research is focused on finding relationships between variables at one moment in time.

- Groups identified for study are purposely selected based upon existing differences in the sample rather than seeking random sampling.

- Cross-sectional studies are capable of using data from a large number of subjects and, unlike observational studies, are not geographically bound.

- Can estimate prevalence of an outcome of interest because the sample is usually taken from the whole population.

- Because cross-sectional designs generally use survey techniques to gather data, they are relatively inexpensive and take up little time to conduct.

- Finding people, subjects, or phenomena to study that are very similar except in one specific variable can be difficult.

- Results are static and time bound and, therefore, give no indication of a sequence of events or reveal historical or temporal contexts.

- Studies cannot be utilized to establish cause and effect relationships.

- This design only provides a snapshot of analysis so there is always the possibility that a study could have differing results if another time-frame had been chosen.

- There is no follow-up to the findings.
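The idea of estimating prevalence from a single snapshot, and comparing groups that already differ, can be sketched in a few lines. This is a minimal illustration, not a recommended analysis: the function name `prevalence_by_group`, the group labels, and the survey records are all hypothetical.

```python
from collections import defaultdict


def prevalence_by_group(records):
    """Return the share of respondents with the outcome in each existing group.

    `records` is an iterable of (group, has_outcome) pairs collected at one
    point in time; this record format is an assumption made for the example.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [cases, total respondents]
    for group, has_outcome in records:
        counts[group][0] += int(has_outcome)
        counts[group][1] += 1
    return {group: cases / total for group, (cases, total) in counts.items()}


# Made-up survey responses: (existing group, reports a chronic cough?)
survey = [
    ("smoker", True), ("smoker", True), ("smoker", False), ("smoker", True),
    ("non-smoker", False), ("non-smoker", True),
    ("non-smoker", False), ("non-smoker", False),
]

print(prevalence_by_group(survey))  # {'smoker': 0.75, 'non-smoker': 0.25}
```

The output describes a difference between groups at one moment only; because nothing is known about what came first, it cannot by itself show that group membership caused the outcome.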

Bethlehem, Jelke. "7: Cross-sectional Research." In Research Methodology in the Social, Behavioural and Life Sciences . Herman J Adèr and Gideon J Mellenbergh, editors. (London, England: Sage, 1999), pp. 110-43; Bourque, Linda B. “Cross-Sectional Design.” In The SAGE Encyclopedia of Social Science Research Methods . Michael S. Lewis-Beck, Alan Bryman, and Tim Futing Liao. (Thousand Oaks, CA: 2004), pp. 230-231; Hall, John. “Cross-Sectional Survey Design.” In Encyclopedia of Survey Research Methods . Paul J. Lavrakas, ed. (Thousand Oaks, CA: Sage, 2008), pp. 173-174; Helen Barratt, Maria Kirwan. Cross-Sectional Studies: Design Application, Strengths and Weaknesses of Cross-Sectional Studies. Healthknowledge, 2009. Cross-Sectional Study. Wikipedia.

Descriptive Design

Descriptive research designs help provide answers to the questions of who, what, when, where, and how associated with a particular research problem; a descriptive study cannot conclusively ascertain answers to why. Descriptive research is used to obtain information concerning the current status of the phenomena and to describe "what exists" with respect to variables or conditions in a situation.

- The subject is observed in a completely natural and unchanged environment. True experiments, whilst giving analyzable data, often adversely influence the normal behavior of the subject [a.k.a., the Heisenberg effect, whereby measurements of certain systems cannot be made without affecting the systems].

- Descriptive research is often used as a precursor to more quantitative research designs, with the general overview giving some valuable pointers as to what variables are worth testing quantitatively.

- If their limitations are understood, descriptive studies can be a useful tool in developing a more focused study.

- Descriptive studies can yield rich data that lead to important recommendations in practice.

- Approach collects a large amount of data for detailed analysis.

- The results from descriptive research cannot be used to discover a definitive answer or to disprove a hypothesis.

- Because descriptive designs often utilize observational methods [as opposed to quantitative methods], the results cannot be replicated.

- The descriptive function of research is heavily dependent on instrumentation for measurement and observation.

Anastas, Jeane W. Research Design for Social Work and the Human Services . Chapter 5, Flexible Methods: Descriptive Research. 2nd ed. New York: Columbia University Press, 1999; Given, Lisa M. "Descriptive Research." In Encyclopedia of Measurement and Statistics . Neil J. Salkind and Kristin Rasmussen, editors. (Thousand Oaks, CA: Sage, 2007), pp. 251-254; McNabb, Connie. Descriptive Research Methodologies. Powerpoint Presentation; Shuttleworth, Martyn. Descriptive Research Design, September 26, 2008; Erickson, G. Scott. "Descriptive Research Design." In New Methods of Market Research and Analysis . (Northampton, MA: Edward Elgar Publishing, 2017), pp. 51-77; Sahin, Sagufta, and Jayanta Mete. "A Brief Study on Descriptive Research: Its Nature and Application in Social Science." International Journal of Research and Analysis in Humanities 1 (2021): 11; K. Swatzell and P. Jennings. “Descriptive Research: The Nuts and Bolts.” Journal of the American Academy of Physician Assistants 20 (2007), pp. 55-56; Kane, E. Doing Your Own Research: Basic Descriptive Research in the Social Sciences and Humanities . London: Marion Boyars, 1985.

Experimental Design

An experimental design is a blueprint of the procedure that enables the researcher to maintain control over all factors that may affect the result of an experiment. In doing this, the researcher attempts to determine or predict what may occur. Experimental research is often used where there is time priority in a causal relationship (cause precedes effect), there is consistency in a causal relationship (a cause will always lead to the same effect), and the magnitude of the correlation is great. The classic experimental design specifies an experimental group and a control group. The independent variable is administered to the experimental group and not to the control group, and both groups are measured on the same dependent variable. Subsequent experimental designs have used more groups and more measurements over longer periods. True experiments must have control, randomization, and manipulation.

- Experimental research allows the researcher to control the situation. In so doing, it allows researchers to answer the question, “What causes something to occur?”

- Permits the researcher to identify cause and effect relationships between variables and to distinguish placebo effects from treatment effects.

- Experimental research designs support the ability to limit alternative explanations and to infer direct causal relationships in the study.

- Approach provides the highest level of evidence for single studies.

- The design is artificial, and results may not generalize well to the real world.

- The artificial settings of experiments may alter the behaviors or responses of participants.

- Experimental designs can be costly if special equipment or facilities are needed.

- Some research problems cannot be studied using an experiment because of ethical or technical reasons.

- Difficult to apply ethnographic and other qualitative methods to experimentally designed studies.
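The classic two-group design described above (random assignment, manipulation of the independent variable, and measurement of both groups on the same dependent variable) can be sketched as follows. This is a minimal sketch under stated assumptions: the function names `randomly_assign` and `difference_in_means`, the participant IDs, and the scores are hypothetical, and a real analysis would normally add a significance test.

```python
import random
from statistics import mean


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them into experimental and control groups."""
    rng = random.Random(seed)      # a seed makes the assignment reproducible
    shuffled = list(participants)  # copy so the caller's list is left untouched
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def difference_in_means(experimental_scores, control_scores):
    """Compare both groups on the same dependent variable."""
    return mean(experimental_scores) - mean(control_scores)


# Hypothetical participant IDs; chance, not the researcher, decides the groups.
participants = list(range(1, 21))
experimental, control = randomly_assign(participants, seed=42)
print(len(experimental), len(control))  # 10 10

# Made-up post-test scores on the dependent variable for each group.
print(difference_in_means([5, 6, 7], [4, 5, 6]))  # 1
```

Because chance alone decides who receives the independent variable, pre-existing differences between participants are expected to balance out across the two groups, which is what allows a difference in outcomes to be attributed to the manipulation.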

Anastas, Jeane W. Research Design for Social Work and the Human Services . Chapter 7, Flexible Methods: Experimental Research. 2nd ed. New York: Columbia University Press, 1999; Chapter 2: Research Design, Experimental Designs. School of Psychology, University of New England, 2000; Chow, Siu L. "Experimental Design." In Encyclopedia of Research Design . Neil J. Salkind, editor. (Thousand Oaks, CA: Sage, 2010), pp. 448-453; "Experimental Design." In Social Research Methods . Nicholas Walliman, editor. (London, England: Sage, 2006), pp. 101-110; Experimental Research. Research Methods by Dummies. Department of Psychology. California State University, Fresno, 2006; Kirk, Roger E. Experimental Design: Procedures for the Behavioral Sciences . 4th edition. Thousand Oaks, CA: Sage, 2013; Trochim, William M.K. Experimental Design. Research Methods Knowledge Base. 2006; Rasool, Shafqat. Experimental Research. Slideshare presentation.

Exploratory Design

An exploratory design is conducted about a research problem when there are few or no earlier studies to refer to or rely upon to predict an outcome. The focus is on gaining insights and familiarity for later investigation, and the design is typically undertaken when research problems are in a preliminary stage of investigation. Exploratory designs are often used to establish an understanding of how best to proceed in studying an issue or what methodology would effectively apply to gathering information about the issue.

Exploratory research is intended to produce the following possible insights:

- Familiarity with basic details, settings, and concerns.

- Well-grounded picture of the situation being developed.

- Generation of new ideas and assumptions.

- Development of tentative theories or hypotheses.

- Determination about whether a study is feasible in the future.

- Issues get refined for more systematic investigation and formulation of new research questions.

- Direction for future research and techniques get developed.

- Design is a useful approach for gaining background information on a particular topic.

- Exploratory research is flexible and can address research questions of all types (what, why, how).

- Provides an opportunity to define new terms and clarify existing concepts.

- Exploratory research is often used to generate formal hypotheses and develop more precise research problems.

- In the policy arena or applied to practice, exploratory studies help establish research priorities and where resources should be allocated.

- Exploratory research generally utilizes small sample sizes and, thus, findings are typically not generalizable to the population at large.

- The exploratory nature of the research inhibits the ability to draw definitive conclusions from the findings; such studies provide insight but not definitive answers.

- The research process underpinning exploratory studies is flexible but often unstructured, leading to only tentative results that have limited value to decision-makers.

- Design lacks rigorous standards applied to methods of data gathering and analysis because one of the areas for exploration could be to determine what method or methodologies could best fit the research problem.

Cuthill, Michael. “Exploratory Research: Citizen Participation, Local Government, and Sustainable Development in Australia.” Sustainable Development 10 (2002): 79-89; Streb, Christoph K. "Exploratory Case Study." In Encyclopedia of Case Study Research . Albert J. Mills, Gabrielle Durepos and Eiden Wiebe, editors. (Thousand Oaks, CA: Sage, 2010), pp. 372-374; Taylor, P. J., G. Catalano, and D.R.F. Walker. “Exploratory Analysis of the World City Network.” Urban Studies 39 (December 2002): 2377-2394; Exploratory Research. Wikipedia.

Field Research Design