Understanding Hypothesis Tests: Significance Levels (Alpha) and P values in Statistics

Topics: Hypothesis Testing , Statistics

What do significance levels and P values mean in hypothesis tests? What is statistical significance anyway? In this post, I’ll continue to focus on concepts and graphs to help you gain a more intuitive understanding of how hypothesis tests work in statistics.

To bring it to life, I’ll add the significance level and P value to the graph in my previous post in order to perform a graphical version of the 1 sample t-test. It’s easier to understand when you can see what statistical significance truly means!

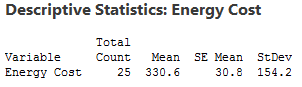

Here’s where we left off in my last post. We want to determine whether our sample mean (330.6) indicates that this year's average energy cost is significantly different from last year’s average energy cost of $260.

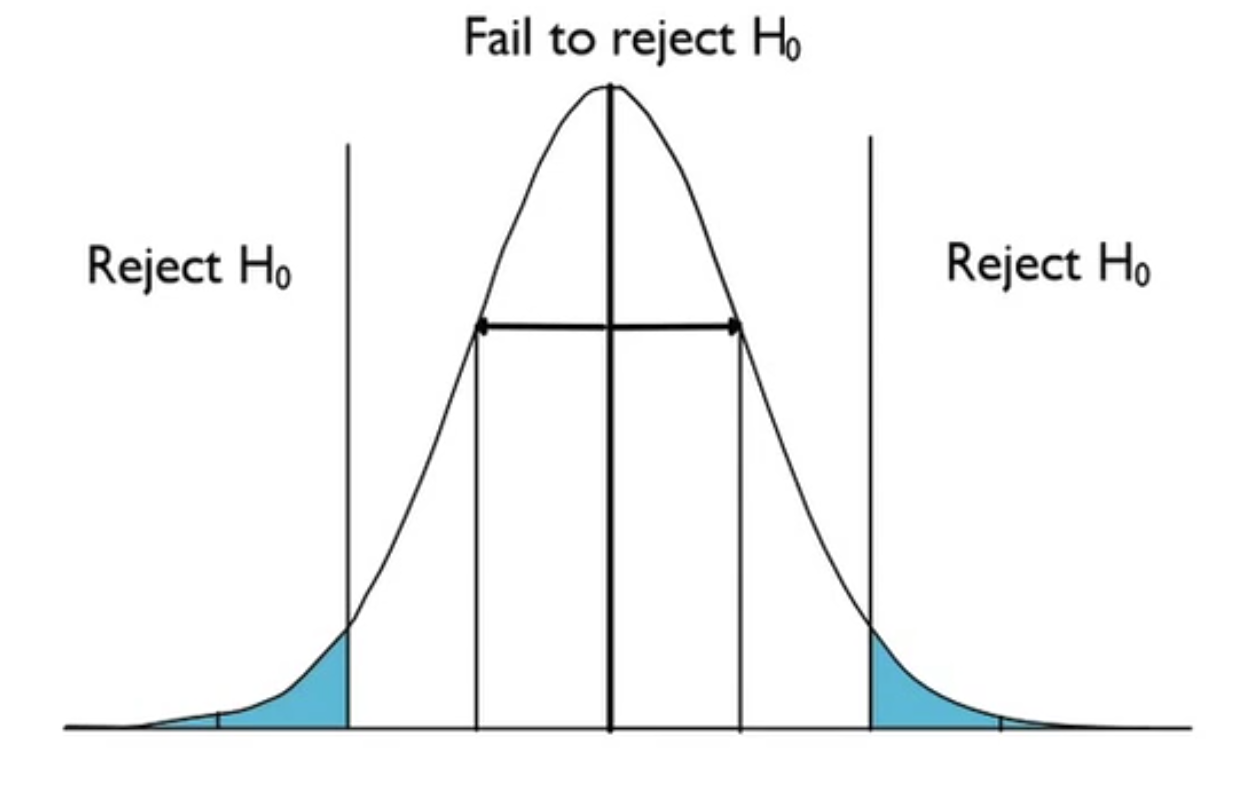

The probability distribution plot above shows the distribution of sample means we’d obtain under the assumption that the null hypothesis is true (population mean = 260) and we repeatedly drew a large number of random samples.

I left you with a question: where do we draw the line for statistical significance on the graph? Now we'll add in the significance level and the P value, which are the decision-making tools we'll need.

We'll use these tools to test the following hypotheses:

- Null hypothesis: The population mean equals the hypothesized mean (260).

- Alternative hypothesis: The population mean differs from the hypothesized mean (260).

What Is the Significance Level (Alpha)?

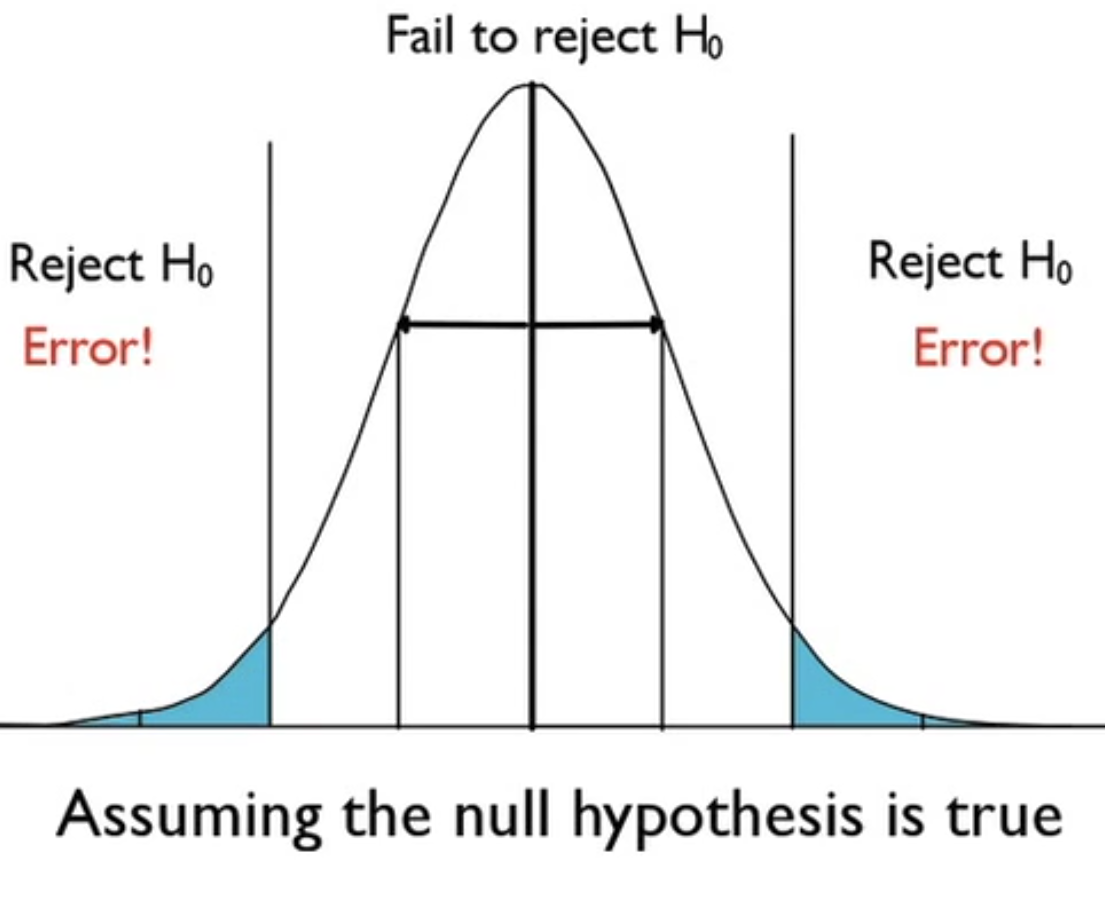

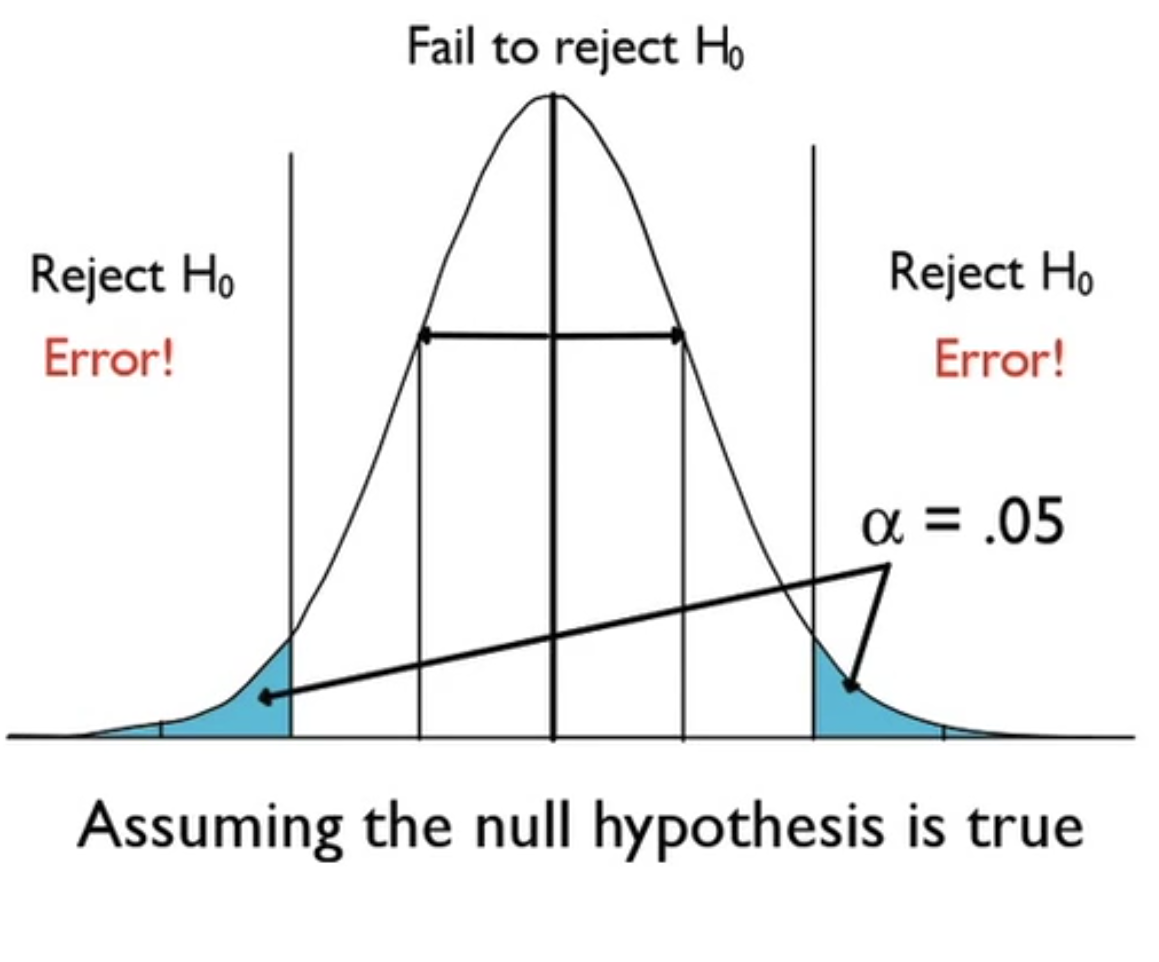

The significance level, also denoted as alpha or α, is the probability of rejecting the null hypothesis when it is true. For example, a significance level of 0.05 indicates a 5% risk of concluding that a difference exists when there is no actual difference.

These types of definitions can be hard to understand because of their technical nature. A picture makes the concepts much easier to comprehend!

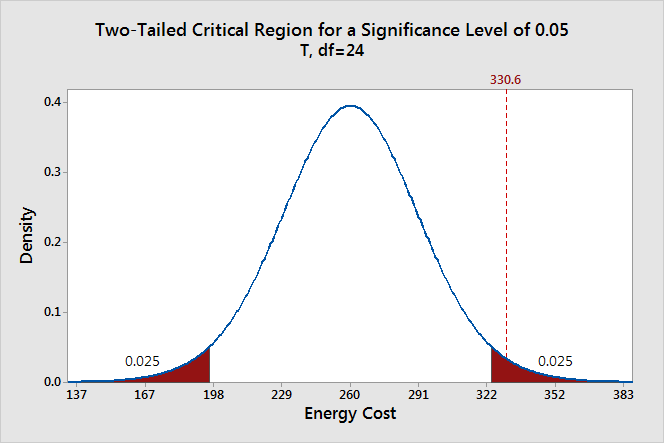

The significance level determines how far out from the null hypothesis value we'll draw that line on the graph. To graph a significance level of 0.05, we need to shade the 5% of the distribution that is furthest away from the null hypothesis.

In the graph above, the two shaded areas are equidistant from the null hypothesis value and each area has a probability of 0.025, for a total of 0.05. In statistics, we call these shaded areas the critical region for a two-tailed test. If the population mean is 260, we’d expect to obtain a sample mean that falls in the critical region 5% of the time. The critical region defines how far away our sample statistic must be from the null hypothesis value before we can say it is unusual enough to reject the null hypothesis.

Our sample mean (330.6) falls within the critical region, which indicates it is statistically significant at the 0.05 level.

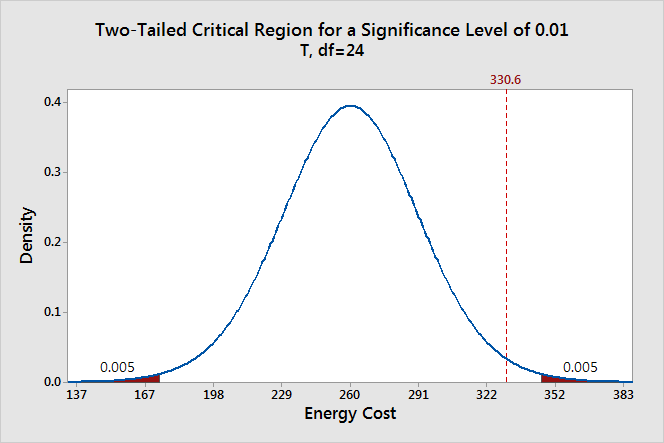

We can also see if it is statistically significant using the other common significance level of 0.01.

The two shaded areas each have a probability of 0.005, which adds up to a total probability of 0.01. This time our sample mean does not fall within the critical region and we fail to reject the null hypothesis. This comparison shows why you need to choose your significance level before you begin your study. It protects you from choosing a significance level because it conveniently gives you significant results!
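The two critical regions can also be sketched numerically. The snippet below is a minimal illustration under stated assumptions, not the calculation behind the graphs: it uses a normal approximation from Python's standard library instead of the t-distribution used by the 1 sample t-test, and the standard error of 30.8 is an assumed value chosen for illustration, because the text does not state one. The function names are hypothetical.

```python
from statistics import NormalDist


def critical_region(null_mean, std_error, alpha):
    """Return the (lower, upper) cutoffs of a two-tailed critical region.

    Sample means at or beyond either cutoff fall in the critical region.
    Normal approximation only; a t-test would use the t-distribution.
    """
    # Each tail holds alpha / 2 of the total probability.
    z = NormalDist().inv_cdf(1 - alpha / 2)
    return null_mean - z * std_error, null_mean + z * std_error


def in_critical_region(sample_mean, null_mean, std_error, alpha):
    """True when the sample mean lies in either shaded tail."""
    lower, upper = critical_region(null_mean, std_error, alpha)
    return sample_mean <= lower or sample_mean >= upper


NULL_MEAN = 260.0    # from the text
SAMPLE_MEAN = 330.6  # from the text
STD_ERROR = 30.8     # assumption: not given in the text

for alpha in (0.05, 0.01):
    lower, upper = critical_region(NULL_MEAN, STD_ERROR, alpha)
    flag = in_critical_region(SAMPLE_MEAN, NULL_MEAN, STD_ERROR, alpha)
    print(f"alpha={alpha}: cutoffs=({lower:.1f}, {upper:.1f}), significant={flag}")
```

Under these assumptions the sample mean lands inside the critical region at 0.05 but outside it at 0.01, which mirrors the two graphs.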

Thanks to the graph, we were able to determine that our results are statistically significant at the 0.05 level without using a P value. However, when you use the numeric output produced by statistical software, you’ll need to compare the P value to your significance level to make this determination.

What Are P values?

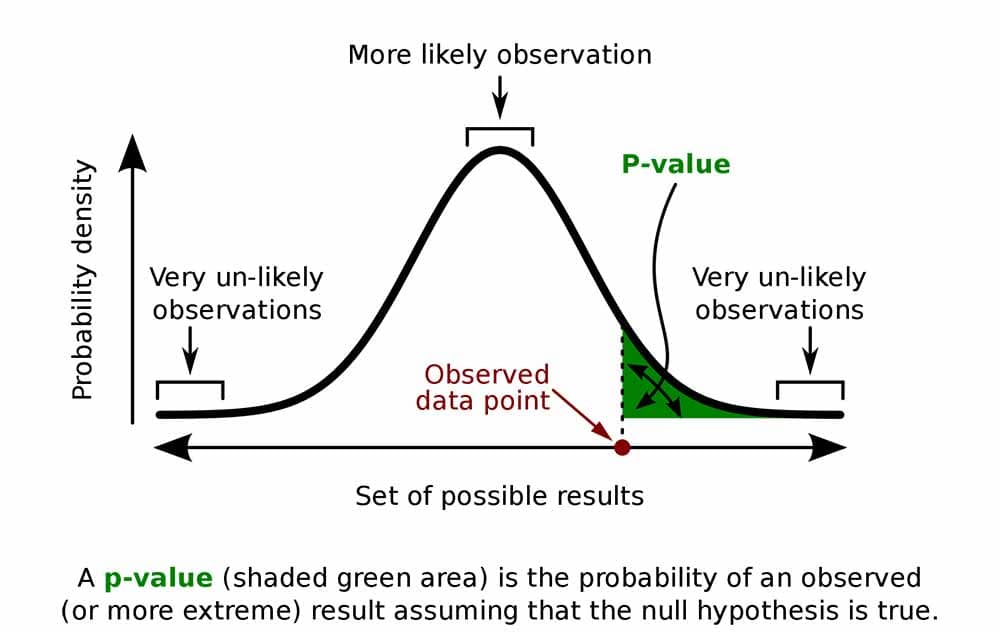

A P value is the probability of obtaining an effect at least as extreme as the one in your sample data, assuming that the null hypothesis is true.

This definition of P values, while technically correct, is a bit convoluted. It’s easier to understand with a graph!

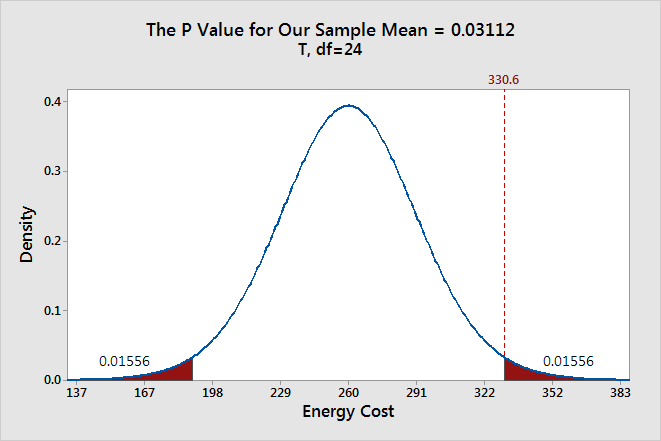

To graph the P value for our example data set, we need to determine the distance between the sample mean and the null hypothesis value (330.6 - 260 = 70.6). Next, we can graph the probability of obtaining a sample mean that is at least as extreme in both tails of the distribution (260 +/- 70.6).

In the graph above, the two shaded areas each have a probability of 0.01556, for a total probability of 0.03112. This probability represents the likelihood of obtaining a sample mean that is at least as extreme as our sample mean in both tails of the distribution if the population mean is 260. That’s our P value!

When a P value is less than or equal to the significance level, you reject the null hypothesis. If we take the P value for our example and compare it to the common significance levels, it matches the previous graphical results. The P value of 0.03112 is statistically significant at an alpha level of 0.05, but not at the 0.01 level.
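As a rough sketch of the same steps in code: take the distance between the sample mean and the null value, find the probability of landing at least that far out in both tails, then compare the result with alpha. This example assumes a normal approximation and an illustrative standard error of 30.8 (neither comes from the text), so it will not reproduce the 0.03112 from the t-test exactly. The function names are hypothetical.

```python
from statistics import NormalDist


def two_tailed_p_value(sample_mean, null_mean, std_error):
    """Probability of a sample mean at least this far from null_mean,
    in either direction, if the null hypothesis is true.
    Normal approximation, not the t-distribution."""
    z = abs(sample_mean - null_mean) / std_error
    return 2 * (1 - NormalDist().cdf(z))


def is_significant(p_value, alpha):
    """Decision rule from the text: reject when p <= alpha."""
    return p_value <= alpha


# std_error=30.8 is an assumed value for illustration only.
approx_p = two_tailed_p_value(330.6, 260.0, std_error=30.8)
print(f"approximate P value: {approx_p:.4f}")

# The P value reported in the text, checked against both alpha levels.
reported_p = 0.03112
for alpha in (0.05, 0.01):
    print(f"alpha={alpha}: significant={is_significant(reported_p, alpha)}")
```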

If we stick to a significance level of 0.05, we can conclude that the average energy cost for the population differs from 260. Because the sample mean (330.6) lies above 260, the data point to a higher average cost.

A common mistake is to interpret the P value as the probability that the null hypothesis is true. To understand why this interpretation is incorrect, please read my blog post How to Correctly Interpret P Values.

Discussion about Statistically Significant Results

A hypothesis test evaluates two mutually exclusive statements about a population to determine which statement is best supported by the sample data. A test result is statistically significant when the sample statistic is unusual enough relative to the null hypothesis that we can reject the null hypothesis for the entire population. “Unusual enough” in a hypothesis test is defined by:

- The assumption that the null hypothesis is true—the graphs are centered on the null hypothesis value.

- The significance level—how far out do we draw the line for the critical region?

- Our sample statistic—does it fall in the critical region?

Keep in mind that there is no magic significance level that distinguishes between the studies that have a true effect and those that don’t with 100% accuracy. The common alpha values of 0.05 and 0.01 are simply based on tradition. For a significance level of 0.05, expect to obtain sample means in the critical region 5% of the time when the null hypothesis is true. In these cases, you won’t know that the null hypothesis is true but you’ll reject it because the sample mean falls in the critical region. That’s why the significance level is also referred to as an error rate!

This type of error doesn’t imply that the experimenter did anything wrong or require any other unusual explanation. The graphs show that when the null hypothesis is true, it is possible to obtain these unusual sample means for no reason other than random sampling error. It’s just luck of the draw.
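A small simulation can make the "luck of the draw" point concrete. The sketch below repeatedly draws random samples from a population where the null hypothesis really is true and counts how often a two-tailed test rejects it anyway. The population standard deviation (150), sample size (25), trial count, and seed are all assumptions chosen for illustration, and the test is a z-test with known sigma rather than the t-test from the post.

```python
import random
from statistics import NormalDist, fmean


def type_i_error_rate(alpha, trials=2000, n=25, mu=260.0, sigma=150.0, seed=0):
    """Fraction of samples that reject a TRUE null hypothesis.

    Every sample is drawn from a population whose mean really is mu,
    so each rejection is a Type I error caused by sampling error alone.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-tailed cutoff
    std_error = sigma / n ** 0.5
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(mu, sigma) for _ in range(n)]
        z = (fmean(sample) - mu) / std_error
        if abs(z) >= z_crit:
            rejections += 1
    return rejections / trials


rate = type_i_error_rate(alpha=0.05)
print(f"share of true nulls rejected at alpha=0.05: {rate:.3f}")
```

The observed share should sit close to the chosen alpha, which is the sense in which the significance level acts as an error rate.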

Significance levels and P values are important tools that help you quantify and control this type of error in a hypothesis test. Using these tools to decide when to reject the null hypothesis increases your chance of making the correct decision.

If you like this post, you might want to read the other posts in this series that use the same graphical framework:

- Previous: Why We Need to Use Hypothesis Tests

- Next: Confidence Intervals and Confidence Levels

If you'd like to see how I made these graphs, please read: How to Create a Graphical Version of the 1-sample t-Test.


© 2023 Minitab, LLC. All Rights Reserved.


Hypothesis Testing | A Step-by-Step Guide with Easy Examples

Published on November 8, 2019 by Rebecca Bevans. Revised on June 22, 2023.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is most often used by scientists to test specific predictions, called hypotheses, that arise from theories.

There are 5 main steps in hypothesis testing:

- State your research hypothesis as a null hypothesis (H0) and an alternate hypothesis (Ha or H1).

- Collect data in a way designed to test the hypothesis.

- Perform an appropriate statistical test.

- Decide whether to reject or fail to reject your null hypothesis.

- Present the findings in your results and discussion section.

Though the specific details might vary, the procedure you will use when testing a hypothesis will always follow some version of these steps.

Table of contents

- Step 1: State your null and alternate hypothesis

- Step 2: Collect data

- Step 3: Perform a statistical test

- Step 4: Decide whether to reject or fail to reject your null hypothesis

- Step 5: Present your findings

- Frequently asked questions about hypothesis testing

After developing your initial research hypothesis (the prediction that you want to investigate), it is important to restate it as a null (H0) and alternate (Ha) hypothesis so that you can test it mathematically.

The alternate hypothesis is usually your initial hypothesis that predicts a relationship between variables. The null hypothesis is a prediction of no relationship between the variables you are interested in.

- H0: Men are, on average, not taller than women.

- Ha: Men are, on average, taller than women.

For a statistical test to be valid, it is important to perform sampling and collect data in a way that is designed to test your hypothesis. If your data are not representative, then you cannot make statistical inferences about the population you are interested in.

There are a variety of statistical tests available, but they are all based on the comparison of within-group variance (how spread out the data is within a category) versus between-group variance (how different the categories are from one another).

If the between-group variance is large enough that there is little or no overlap between groups, then your statistical test will reflect that by showing a low p-value. This means that differences this large would be unlikely to arise by chance alone if the null hypothesis were true.

Alternatively, if there is high within-group variance and low between-group variance, then your statistical test will reflect that with a high p-value. This means that a difference of the size you measured could plausibly arise by chance alone.

Your choice of statistical test will be based on the type of variables and the level of measurement of your collected data.
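One common way to express this comparison is the F ratio from a one-way ANOVA, which divides between-group variance by within-group variance. The sketch below is one illustration of the idea rather than the only test built on it; the height values are made-up numbers, and `f_ratio` is a hypothetical helper name.

```python
from statistics import fmean


def f_ratio(groups):
    """One-way ANOVA F statistic:
    between-group variance divided by within-group variance."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = fmean([x for g in groups for x in g])

    # How far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (fmean(g) - grand_mean) ** 2 for g in groups)
    # How spread out the values are inside each group.
    ss_within = sum((x - fmean(g)) ** 2 for g in groups for x in g)

    return (ss_between / (k - 1)) / (ss_within / (n_total - k))


# Hypothetical heights in cm, invented for illustration.
well_separated = [[160, 162, 164, 166], [176, 178, 180, 182]]
overlapping = [[160, 170, 180, 190], [164, 174, 184, 194]]

print("well separated groups:", round(f_ratio(well_separated), 3))
print("overlapping groups:   ", round(f_ratio(overlapping), 3))
```

A large ratio corresponds to a low p-value and a small ratio to a high one. Converting the ratio into an actual p-value needs the F distribution, which the Python standard library does not provide, so that step is left out here.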

For the height example, a statistical test comparing the two groups would give you:

- an estimate of the difference in average height between the two groups.

- a p-value showing how likely you are to see this difference if the null hypothesis of no difference is true.

Based on the outcome of your statistical test, you will have to decide whether to reject or fail to reject your null hypothesis.

In most cases you will use the p-value generated by your statistical test to guide your decision. And in most cases, your predetermined level of significance for rejecting the null hypothesis will be 0.05 – that is, when there is a less than 5% chance that you would see these results if the null hypothesis were true.

In some cases, researchers choose a more conservative level of significance, such as 0.01 (1%). This minimizes the risk of incorrectly rejecting the null hypothesis (Type I error).

The results of hypothesis testing will be presented in the results and discussion sections of your research paper, dissertation or thesis.

In the results section you should give a brief summary of the data and a summary of the results of your statistical test (for example, the estimated difference between group means and associated p-value). In the discussion, you can discuss whether your initial hypothesis was supported by your results or not.

In the formal language of hypothesis testing, we talk about rejecting or failing to reject the null hypothesis. You will probably be asked to do this in your statistics assignments.

However, when presenting research results in academic papers we rarely talk this way. Instead, we go back to our alternate hypothesis (in this case, the hypothesis that men are on average taller than women) and state whether the result of our test did or did not support the alternate hypothesis.

If your null hypothesis was rejected, this result is interpreted as “supported the alternate hypothesis.”

These are superficial differences; you can see that they mean the same thing.

You might notice that we don’t say that we reject or fail to reject the alternate hypothesis. This is because hypothesis testing is not designed to prove or disprove anything. It is only designed to test whether a pattern we measure could have arisen spuriously, or by chance.

If we reject the null hypothesis based on our research (i.e., we find that it is unlikely that the pattern arose by chance), then we can say our test lends support to our hypothesis. But if the pattern does not pass our decision rule, meaning that it could have arisen by chance, then we say the test is inconsistent with our hypothesis.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses, by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess — it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations and statistical analysis of data).

Null and alternative hypotheses are used in statistical hypothesis testing. The null hypothesis of a test always predicts no effect or no relationship between variables, while the alternative hypothesis states your research prediction of an effect or relationship.

Cite this Scribbr article

Bevans, R. (2023, June 22). Hypothesis Testing | A Step-by-Step Guide with Easy Examples. Scribbr. Retrieved April 3, 2024, from https://www.scribbr.com/statistics/hypothesis-testing/

Hypothesis Testing (cont...)

Hypothesis testing, the null and alternative hypothesis.

In order to undertake hypothesis testing you need to express your research hypothesis as a null and alternative hypothesis. The null hypothesis and alternative hypothesis are statements regarding the differences or effects that occur in the population. You will use your sample to test which statement (i.e., the null hypothesis or alternative hypothesis) is most likely (although technically, you test the evidence against the null hypothesis). So, with respect to our teaching example, the null and alternative hypothesis will reflect statements about all statistics students on graduate management courses.

The null hypothesis is essentially the "devil's advocate" position. That is, it assumes that whatever you are trying to prove did not happen ( hint: it usually states that something equals zero). For example, the two different teaching methods did not result in different exam performances (i.e., zero difference). Another example might be that there is no relationship between anxiety and athletic performance (i.e., the slope is zero). The alternative hypothesis states the opposite and is usually the hypothesis you are trying to prove (e.g., the two different teaching methods did result in different exam performances). Initially, you can state these hypotheses in more general terms (e.g., using terms like "effect", "relationship", etc.), as shown below for the teaching methods example:

How you want to "summarize" the exam performances will determine how you write a more specific null and alternative hypothesis. For example, you could compare the mean exam performance of each group (i.e., the "seminar" group and the "lectures-only" group). This is what we will demonstrate here, but other options include comparing the distributions or medians, amongst other things. As such, we can state:

Now that you have identified the null and alternative hypotheses, you need to find evidence and develop a strategy for declaring your "support" for either the null or alternative hypothesis. We can do this using some statistical theory and some arbitrary cut-off points. Both these issues are dealt with next.

Significance levels

The level of statistical significance is often expressed as the so-called p-value. Depending on the statistical test you have chosen, you will calculate a probability (i.e., the p-value) of observing your sample results (or more extreme) given that the null hypothesis is true. Another way of phrasing this is to consider the probability that a difference in a mean score (or other statistic) could have arisen based on the assumption that there really is no difference. Let us consider this statement with respect to our example where we are interested in the difference in mean exam performance between two different teaching methods. If there really is no difference between the two teaching methods in the population (i.e., given that the null hypothesis is true), how likely would it be to see a difference in the mean exam performance between the two teaching methods as large as (or larger than) that which has been observed in your sample?

So, you might get a p-value such as 0.03 (i.e., p = .03). This means that there is a 3% chance of finding a difference as large as (or larger than) the one in your study given that the null hypothesis is true. However, you want to know whether this is "statistically significant". Typically, if there was a 5% or less chance (5 times in 100 or less) of observing a difference in the mean exam performance between the two teaching methods (or whatever statistic you are using) as large as the one in your sample, given that the null hypothesis is true, you would reject the null hypothesis and accept the alternative hypothesis. Alternatively, if the chance was greater than 5% (more than 5 times in 100), you would fail to reject the null hypothesis and would not accept the alternative hypothesis. As such, in this example where p = .03, we would reject the null hypothesis and accept the alternative hypothesis. We reject it because, with p = .03 (i.e., less than a 5% chance), a difference this large would occur too rarely under the null hypothesis for us to be confident that chance alone, rather than the two teaching methods, produced the difference in exam performance.

Whilst there is relatively little justification why a significance level of 0.05 is used rather than 0.01 or 0.10, for example, it is widely used in academic research. However, if you want to be particularly confident in your results, you can set a more stringent level of 0.01 (a 1% chance or less; 1 in 100 chance or less).
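The cut-off logic described above fits in a few lines. The sketch below applies the p = .03 example to the three significance levels mentioned in the text; `decide` is a hypothetical helper name, and it follows the "5% or less" wording by treating a p-value equal to the cut-off as significant.

```python
def decide(p_value, alpha=0.05):
    """Apply the cut-off rule: reject the null when p is at or below alpha."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must lie between 0 and 1")
    if p_value <= alpha:
        return "reject the null hypothesis"
    return "fail to reject the null hypothesis"


p = 0.03  # the example p-value from the text
for alpha in (0.10, 0.05, 0.01):
    print(f"alpha={alpha:.2f}: {decide(p, alpha)}")
```

The same p-value leads to different decisions at 0.05 and 0.01, which is one reason the cut-off should be fixed before the data are analysed.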

One- and two-tailed predictions

When considering whether we reject the null hypothesis and accept the alternative hypothesis, we need to consider the direction of the alternative hypothesis statement. For example, the alternative hypothesis that was stated earlier is:

The alternative hypothesis tells us two things. First, what predictions did we make about the effect of the independent variable(s) on the dependent variable(s)? Second, what was the predicted direction of this effect? Let's use our example to highlight these two points.

Sarah predicted that her teaching method (independent variable: teaching method), whereby she not only required her students to attend lectures, but also seminars, would have a positive effect on (that is, would increase) students' performance (dependent variable: exam marks). If an alternative hypothesis has a direction (and this is how you want to test it), the hypothesis is one-tailed. That is, it predicts the direction of the effect. If the alternative hypothesis had stated that the effect was expected to be negative, this would also be a one-tailed hypothesis.

Alternatively, a two-tailed prediction means that we do not make a choice over the direction that the effect of the experiment takes. Rather, it simply implies that the effect could be negative or positive. If Sarah had made a two-tailed prediction, the alternative hypothesis might have been:

In other words, we simply take out the word "positive", which implies the direction of our effect. In our example, making a two-tailed prediction may seem strange. After all, it would be logical to expect that "extra" tuition (going to seminar classes as well as lectures) would either have a positive effect on students' performance or no effect at all, but certainly not a negative effect. However, this is just our opinion (and hope) and certainly does not mean that we will get the effect we expect. Generally speaking, making a one-tailed prediction (and testing for it this way) is frowned upon as it usually reflects the hope of a researcher rather than any certainty that it will happen. Notable exceptions to this rule are when there is only one possible way in which a change could occur. This can happen, for example, when biological activity/presence is measured. That is, a protein might be "dormant" and the stimulus you are using can only possibly "wake it up" (i.e., it cannot possibly reduce the activity of a "dormant" protein). In addition, for some statistical tests, one-tailed tests are not possible.
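To see what the choice of tails does to the p-value, the sketch below converts a single test statistic into one-tailed and two-tailed p-values. It assumes a z statistic (standard normal reference distribution), and the value z = 1.8 is a made-up number for illustration; neither comes from the teaching example.

```python
from statistics import NormalDist


def p_value_from_z(z, tail="two"):
    """p-value for a z statistic under a standard normal null distribution.

    tail="right": alternative predicts a positive effect
    tail="left":  alternative predicts a negative effect
    tail="two":   alternative predicts an effect in either direction
    """
    std_normal = NormalDist()
    if tail == "two":
        return 2 * (1 - std_normal.cdf(abs(z)))
    if tail == "right":
        return 1 - std_normal.cdf(z)
    if tail == "left":
        return std_normal.cdf(z)
    raise ValueError("tail must be 'two', 'right' or 'left'")


z = 1.8  # hypothetical test statistic
for tail in ("right", "two", "left"):
    print(f"{tail:>5}-tailed p = {p_value_from_z(z, tail):.4f}")
```

For a positive statistic, the two-tailed p-value is twice the right-tailed one, so a result can clear the 0.05 cut-off in a one-tailed test yet miss it in a two-tailed test. This is part of why one-tailed predictions attract scrutiny.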

Rejecting or failing to reject the null hypothesis

Let's return finally to the question of whether we reject or fail to reject the null hypothesis.

If our statistical analysis shows that the p-value is below the significance level we have set (e.g., either 0.05 or 0.01), we reject the null hypothesis and accept the alternative hypothesis. Alternatively, if the p-value is above the significance level, we fail to reject the null hypothesis and cannot accept the alternative hypothesis. You should note that you cannot accept the null hypothesis, but only find evidence against it.

International Encyclopedia of Statistical Science pp 1318–1321

Significance Testing: An Overview

- Elena Kulinskaya 2 ,

- Stephan Morgenthaler 3 &

- Robert G. Staudte 4

- Reference work entry

- First Online: 01 January 2014

Introduction

A significance test is a statistical procedure for testing a hypothesis based on experimental or observational data. Let, for example, \(\bar{X}_{1}\) and \(\bar{X}_{2}\) be the average scores obtained in two groups of randomly selected subjects and let \(\mu_{1}\) and \(\mu_{2}\) denote the corresponding population averages. The observed averages can be used to test the null hypothesis \(\mu_{1} = \mu_{2}\), which expresses the idea that both populations have equal average scores. A significant result occurs if \(\bar{X}_{1}\) and \(\bar{X}_{2}\) are very different from each other, because this contradicts or falsifies the null hypothesis. If the two group averages are similar to each other, the null hypothesis is not contradicted by the data. What exact values of the difference \(\bar{X}_{1} - \bar{X}_{2}\) of the group averages are judged as significant depends on various elements. The variation of the scores between the subjects, for example, must be taken into account. This...

References and Further Reading

Berger JO (2003) Could Fisher, Jeffreys and Neyman have agreed on testing? Stat Sci 18(1):1–32, With discussion

Google Scholar

Dollinger MB, Kulinskaya E, Staudte RG (1996) Fuzzy hypothesis tests and confidence intervals. In: Dowe DL, Korb KB, Oliver JJ (eds) Information, statistics and induction in science. World Scientific, Singapore, pp 119–128

Fisher RA (1990) Statistical methods, experimental design and scientific inference. Oxford University Press, Oxford. Reprints of Fisher’s main books, first printed in 1925, 1935 and 1956, respectively. The 14th edition of the first book was printed in 1973

Geyer C, Meeden G (2006) Fuzzy confidence intervals and p-values (with discussion). Stat Sci 20:258–387

MathSciNet Google Scholar

Hubbard R, Bayarri MJ (2003) Confusion over measures of evidence ( p ’s) versus errors ( a ’s) in classical statistical testing. Am Stat 57(3):171–182, with discussion

Kass RE, Raftery AE (1995) Bayes factors. J Am Stat Assoc 90: 773–795

MATH Google Scholar

Krantz D (1999) The null hypothesis testing controversy in psychology. J Am Stat Assoc 94(448):1372–1381

Kulinskaya E, Morgenthaler S, Staudte RG (2008) Meta analysis: a guide to calibrating and combining statistical evidence. Wiley, Chichester, www.wiley.com/go/meta_analysis

Lavine M, Schervish M (1999) Bayes factors: what they are and what they are not. Am Stat 53:119–122

Lehmann EL (1986) Testing statistical hypotheses, 2nd edn. Wiley, New York

Lehmann EL (1993) The Fisher, Neyman-Pearson theories of testing hypotheses: one theory or two? J Am Stat Assoc 88:1242–1249

Neyman J (1935) On the problem of confidence intervals. Ann Math Stat 6:111–116

Neyman J (1950) First course in probability and statistics. Henry Holt, New York

Neyman J, Pearson ES (1928) On the use and interpretation of certain test criteria for purposes of statistical inference. Biometrika 20A:175–240 and 263–294

Neyman J, Pearson ES (1933) On the problem of the most efficient tests of statistical hypotheses. Philos Trans R Soc A 231:289–337

Welch BL (1938) The significance of the difference between two means when the variances are unequal. Biometrika 29:350–361

Wellek S (2003) Testing statistical hypotheses of equivalence. Chapman & Hall/CRC Press, New York

Download references

Author information

Authors and affiliations.

University of East Anglia, Norwich, UK

Elena Kulinskaya

École Polytechnique Fédérale de Lausanne, Lausanne, Switzerland

Stephan Morgenthaler

La Trobe University, Melbourne, VIC, Australia

Robert G. Staudte

Editor information

Editors and affiliations.

Department of Statistics and Informatics, Faculty of Economics, University of Kragujevac, City of Kragujevac, Serbia

Miodrag Lovric

Copyright information

© 2011 Springer-Verlag Berlin Heidelberg

About this entry

Cite this entry.

Kulinskaya, E., Morgenthaler, S., Staudte, R.G. (2011). Significance Testing: An Overview. In: Lovric, M. (eds) International Encyclopedia of Statistical Science. Springer, Berlin, Heidelberg. https://doi.org/10.1007/978-3-642-04898-2_514

DOI : https://doi.org/10.1007/978-3-642-04898-2_514

Published : 02 December 2014

Publisher Name : Springer, Berlin, Heidelberg

Print ISBN : 978-3-642-04897-5

Online ISBN : 978-3-642-04898-2

eBook Packages : Mathematics and Statistics Reference Module Computer Science and Engineering

Level of Significance & Hypothesis Testing

In hypothesis testing, the level of significance is the threshold probability you set for rejecting the null hypothesis; it caps the risk of rejecting a null hypothesis that is actually true. This blog post will explore what hypothesis testing is and why understanding significance levels is important for your data science projects. In addition, you can test your knowledge of the level of significance towards the end of the post with the help of a quiz. These questions can help you test your understanding and prepare for data science / statistics interviews. Before we look into what the level of significance is, let’s quickly review what hypothesis testing is.

What is Hypothesis testing and how is it related to significance level?

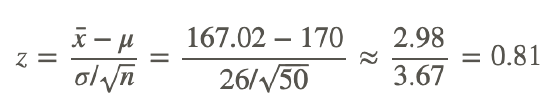

Hypothesis testing can be defined as tests performed to evaluate whether a claim or theory about something is true or otherwise. In order to perform hypothesis tests, the following steps need to be taken:

- Hypothesis formulation: Formulate the null and alternate hypothesis

- Data collection: Gather the sample of data

- Statistical tests: Determine the statistical test and test statistic. The test can be a z-test or a t-test, depending on the sample size and/or whether the population variance is known.

- Set the level of significance

- Calculate the p-value

- Draw conclusions: Based on the value of p-value and significance level, reject the null hypothesis or otherwise.

A detailed explanation is provided in one of my related posts titled hypothesis testing explained with examples.

What is the level of significance?

The level of significance is defined as the threshold value against which the p-value is compared to decide whether to reject or fail to reject the null hypothesis. It determines whether the outcome of hypothesis testing is statistically significant. The significance level is also called the alpha level.

Another way of looking at the level of significance is as the probability of making a Type I error. A Type I error occurs when you reject a null hypothesis that is actually true. This scenario is also termed a “false positive”. Take the example of a person accused of committing a crime. The null hypothesis is that the person is not guilty. A Type I error happens when you mistakenly reject that null hypothesis: the innocent person is convicted.

The level of significance can take values such as 0.1, 0.05, and 0.01. The most common value is 0.05. The lower the significance level, the lower the chance of a Type I error, because the evidence against the null hypothesis must be stronger before you can reject it. However, a lower significance level increases the chance of making a Type II error, that is, failing to reject a null hypothesis that is false. You may want to read more about these errors in this post: Type I errors and Type II errors in hypothesis testing
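The trade-off between the two error types can be sketched for a simple case. The example below assumes a right-tailed z-test and a hypothetical true effect of 2.5 standard errors above the null value; both are assumptions made for illustration, and `type_ii_error_rate` is a hypothetical helper name.

```python
from statistics import NormalDist


def type_ii_error_rate(alpha, standardized_effect):
    """Probability of failing to reject a false null (beta)
    for a right-tailed z-test.

    standardized_effect = (true mean - null mean) / standard error.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)  # rejection cutoff under the null
    # Under the alternative, the z statistic is centered on the effect,
    # so we fail to reject whenever it lands below the cutoff.
    return std_normal.cdf(z_crit - standardized_effect)


EFFECT = 2.5  # assumption: true effect in standard-error units
for alpha in (0.10, 0.05, 0.01):
    beta = type_ii_error_rate(alpha, EFFECT)
    print(f"alpha={alpha:.2f} -> Type II error rate={beta:.3f}, power={1 - beta:.3f}")
```

With the effect size and sample held fixed, each step down in alpha raises the Type II error rate, which is the trade-off described above.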

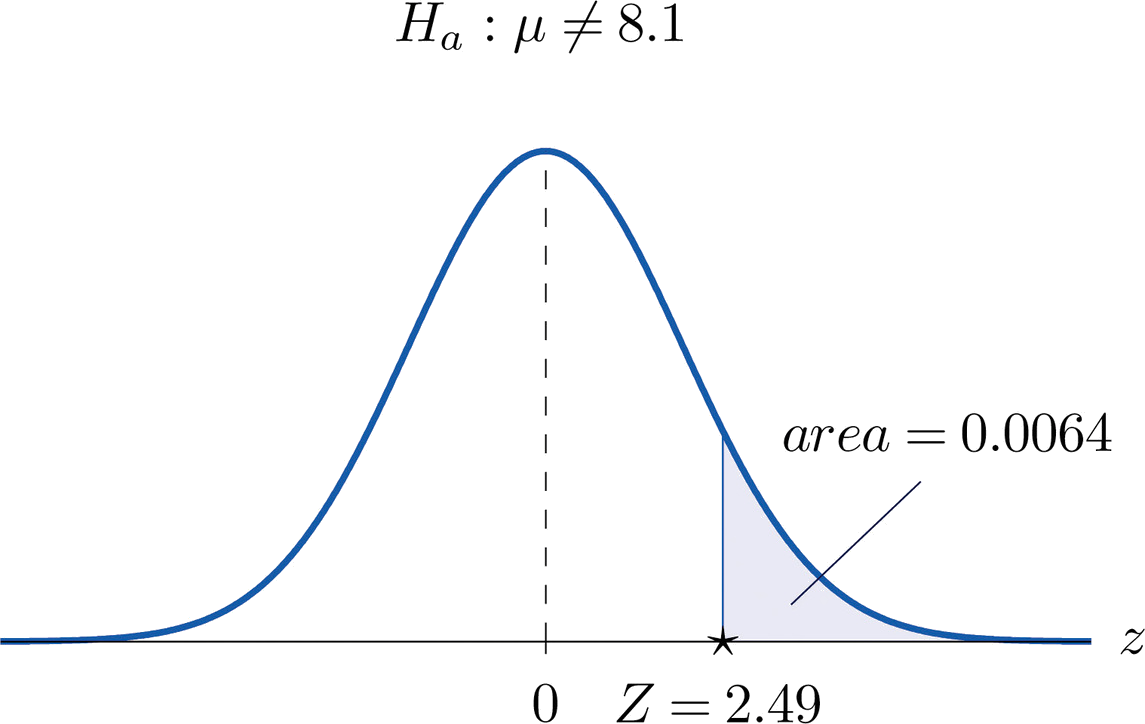

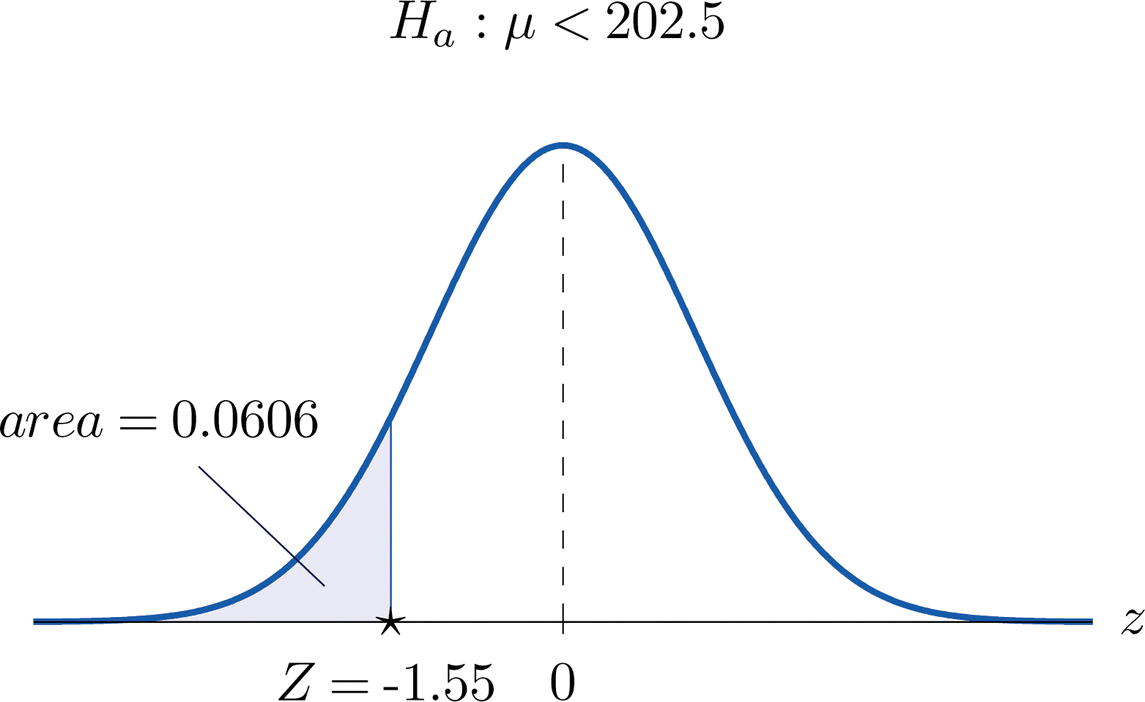

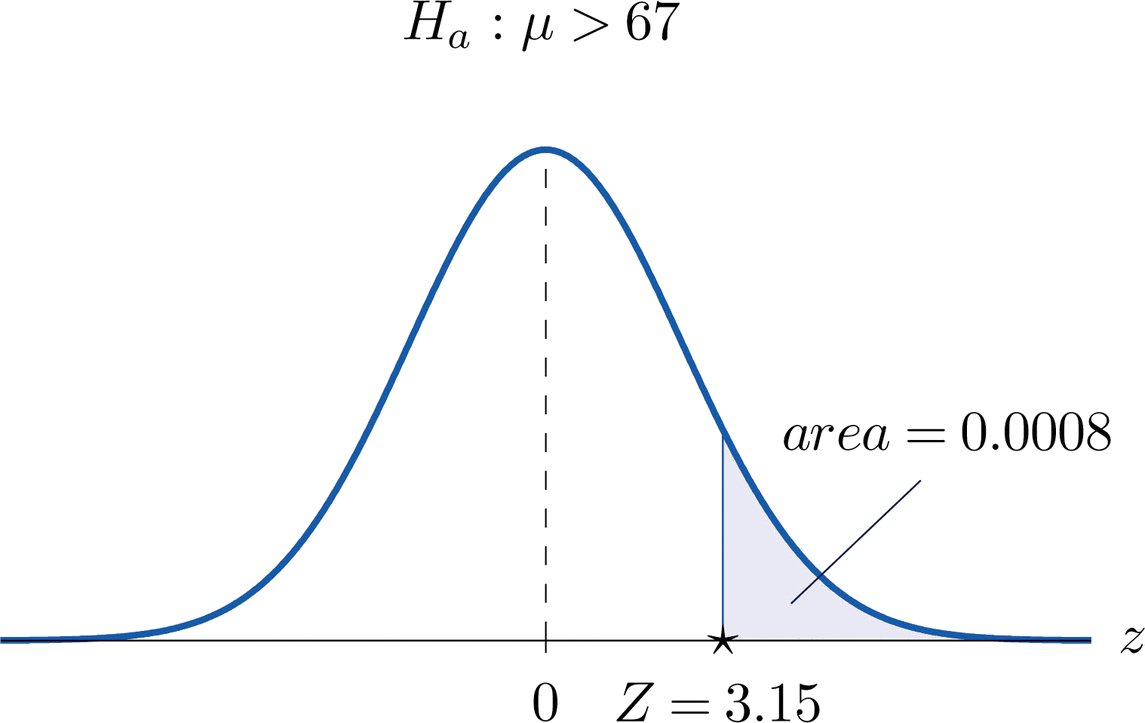

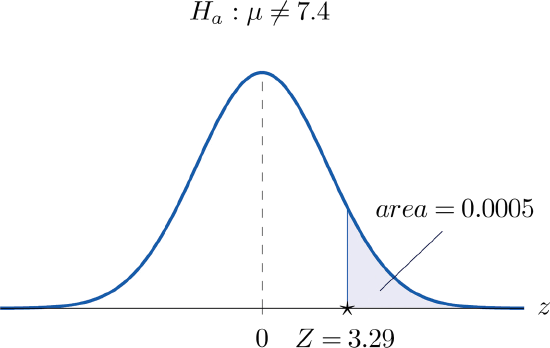

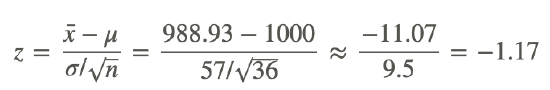

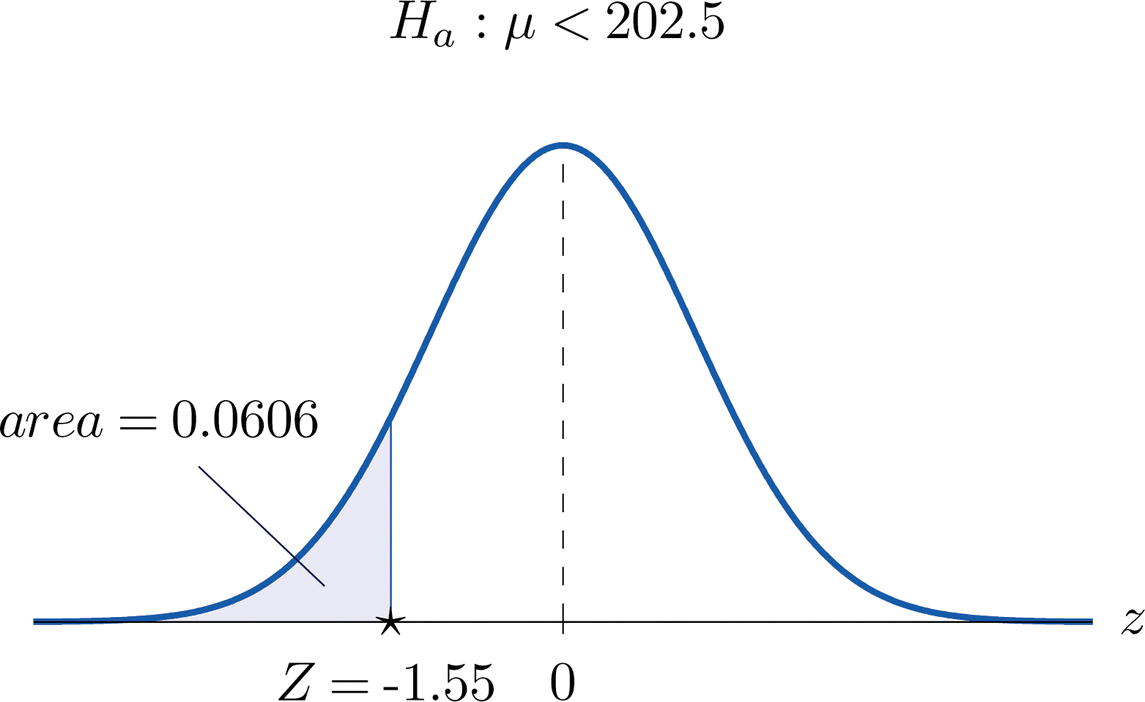

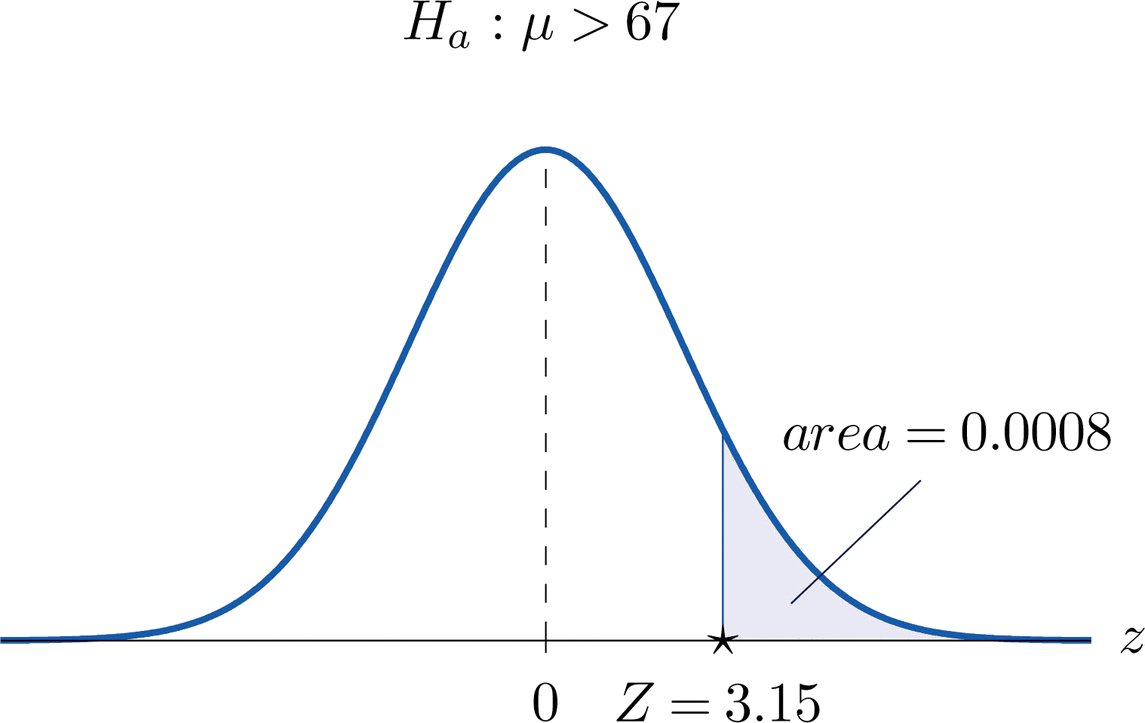

The outcome of a hypothesis test is evaluated with the help of a p-value. If the p-value is less than the level of significance, the outcome is statistically significant and we reject the null hypothesis. If the p-value is greater than the level of significance, the outcome is not statistically significant and we fail to reject the null hypothesis. The same is represented in the picture below for a right-tailed test. I will be posting details on different types of tailed tests in future posts.

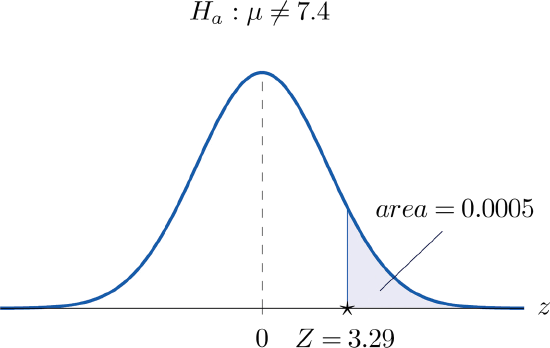

The picture below represents the concept for two-tailed hypothesis test:

For example, let’s say that a school principal wants to find out whether 2 hours of extra coaching after school helps students do better in their exams. The hypotheses would be as follows:

- Null hypothesis : Students perform no differently after receiving 2 hours of extra coaching after school.

- Alternate hypothesis : Students perform better when they get 2 hours of extra coaching after school.

This example uses a level of significance of 0.05; simply put, the evidence of a difference in performance must be strong enough that a result this extreme would occur no more than 5% of the time if extra coaching made no difference.

Now, let’s say that we conduct this experiment with 100 students and measure their exam scores. The test statistic is computed to be z = -0.50 (p-value = 0.62). Since the p-value is greater than 0.05, we fail to reject the null hypothesis. There is not enough evidence to show that extra coaching makes a difference in the performance of students.
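As a rough check of the numbers in this example, the sketch below recomputes the p-value from the z statistic using only the Python standard library. It assumes a standard normal test statistic and a two-tailed test, which is the reading that reproduces the quoted 0.62; the function name `two_tailed_p_value` is made up for illustration.

```python
from statistics import NormalDist


def two_tailed_p_value(z: float) -> float:
    """Probability of a z statistic at least as extreme as `z`
    (in either direction) when the null hypothesis is true."""
    return 2.0 * NormalDist().cdf(-abs(z))


alpha = 0.05          # significance level chosen before the test
z = -0.50             # test statistic from the coaching example
p_value = two_tailed_p_value(z)
decision = "reject H0" if p_value <= alpha else "fail to reject H0"

print(f"z = {z:.2f}, p = {p_value:.2f}, decision: {decision}")
```

A right-tailed test, which would match the one-sided alternate hypothesis more closely, gives a larger p-value (about 0.69) and leads to the same decision.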

While performing hypothesis tests or experiments, it is important to keep the level of significance in mind.

Why does one need a level of significance?

In hypothesis tests, without a threshold for deciding whether results are statistically significant enough to reject the null hypothesis, it would be hard to judge findings consistently. This is why we set a level of significance before performing hypothesis tests and experiments.

Since hypothesis testing helps us make decisions about our data, setting a level of significance fixes how often we are willing to be misled by chance. If you set your level of significance at 0.05, for example, it means that when the null hypothesis is true there is only a five percent chance that random sampling error alone produces a difference between groups (assuming two groups are tested) large enough to be declared significant. Even so, if we found a significant difference in the performance of students based on whether they take extra coaching, we would still need to consider other factors that could have contributed to the difference.

This is why hypothesis testing and the level of significance go hand in hand: the hypothesis test tells us whether our data fall in the range that counts as statistically significant, whereas the level of significance sets how often random sampling error alone would place the data in that range when the null hypothesis is true.

How is the level of significance used in hypothesis testing?

The level of significance, along with the test statistic and p-value, forms a key part of hypothesis testing. The conclusion you draw depends on whether you reject or fail to reject the null hypothesis, given your findings. Before going into rejection vs non-rejection, let’s understand the terms better.

If the test statistic falls within the critical region, you reject the null hypothesis. This means that your findings are statistically significant and support the alternate hypothesis. The p-value tells you how likely it is to obtain an outcome at least this extreme if, in fact, the null hypothesis were true. If the p-value is less than or equal to the level of significance, you reject the null hypothesis. This means that the outcome was statistically significant at that level and in favor of the alternate hypothesis.
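The two rejection criteria in this paragraph (test statistic inside the critical region, p-value at or below alpha) are two views of the same rule. The sketch below illustrates this for a right-tailed z-test; the choice of a z-test and the function names are assumptions made for illustration only.

```python
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()


def reject_by_critical_region(z: float, alpha: float) -> bool:
    # Right-tailed test: the critical region is everything at or beyond
    # the (1 - alpha) quantile of the null distribution.
    critical_value = STANDARD_NORMAL.inv_cdf(1.0 - alpha)
    return z >= critical_value


def reject_by_p_value(z: float, alpha: float) -> bool:
    # Right-tailed p-value: area under the null curve to the right of z.
    p_value = 1.0 - STANDARD_NORMAL.cdf(z)
    return p_value <= alpha


for z in (0.5, 1.5, 1.7, 2.5):
    print(z, reject_by_critical_region(z, 0.05), reject_by_p_value(z, 0.05))
```

Both functions agree for every z value in the loop: with alpha = 0.05 the critical value is about 1.645, so 1.7 and 2.5 lead to rejection while 0.5 and 1.5 do not.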

If, on the other hand, the p-value is greater than the significance level (alpha), then you fail to reject the null hypothesis. These findings are not statistically significant enough to reject the null hypothesis. The same is represented in the diagram below:

Level of Significance – Quiz / Interview Questions

Here are some practice questions that can help you test your knowledge and prepare for interviews.

#1. The p-value less than the level of significance would mean which of the following?

#2. Level of significance is also called ________

#3. The p-value of 0.03 is statistically significant for a significance level of 0.01

#4. Which of the following looks to be an inappropriate level of significance?

#5. The statistically significant outcome of hypothesis testing would mean which of the following?

#6. Which one of the following is considered the most popular choice of significance level?

#7. Which of the following will result in greater Type I error?

#8. Which of the following will result in greater Type II error?


Hypothesis testing is an important statistical concept that helps us determine whether a claim about a population is supported by the data. The test statistic, level of significance, and p-value all work together to help you make decisions about your data. If our hypothesis tests show enough evidence to reject the null hypothesis, then the findings are statistically significant. This post gave you ideas for how you can use hypothesis testing in your experiments by understanding what it means to reject or fail to reject the null hypothesis.

Ajitesh Kumar


Significance level and power of a hypothesis test.

- Selecting an Appropriate Significance Level

- Cautions about Significance Level

- Power of a Hypothesis Test

1. Significance Level

Before we begin, you should first understand what is meant by statistical significance. When you calculate a test statistic in a hypothesis test, you can calculate the p-value. The p-value is the probability that you would have obtained a statistic at least as extreme (as large, or as small) as the one you got if the null hypothesis is true. It's a conditional probability.

Sometimes you’re willing to attribute whatever difference you found between your statistic and your hypothesized parameter to chance. If you’re willing to write off the difference this way, you fail to reject the null hypothesis.

If you’re not, meaning it's just too far away from the mean to attribute to chance, then you’re going to reject the null hypothesis in favor of the alternative.

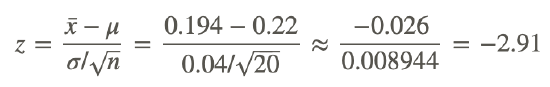

This is what it might look like for a two-tailed test.

The hypothesized mean is right in the center of the normal distribution. Anything that is considered to be too far away--something like two standard deviations or more away--would lead you to reject the null hypothesis. For anything you might attribute to chance, within the two standard deviations, you would fail to reject the null hypothesis. Again, this is assuming that the null hypothesis is true.

However, think about this. All of this curve assumes that the null hypothesis is true, but you make a decision to reject the null hypothesis anyway if the statistic you got is far away, because that would rarely happen by chance. But it's still technically the wrong thing to do if the null hypothesis is true. How often we're comfortable making this error is called the significance level.

Rejecting the null hypothesis in error, in other words, rejecting the null hypothesis when it is, in fact, true, is called a Type I Error. The probability of making it is the significance level, alpha.

Fortunately, you get to choose how big you want this error to be. You could have defined three standard deviations from the mean on either side as "too far away". Or, for instance, you could say you only want to be wrong 1% of the time, or 5% of the time, meaning that you are rejecting the null hypothesis in error that often.

When you choose how big you want alpha to be, you do it before you start the tests. You do it this way to reduce bias because if you already ran the tests, you could choose an alpha level that would automatically make your result seem more significant than it is. You don't want to bias your results that way.

Take a look back at this visual here.

The alpha, in this case, is 0.05. If you recall, the 68-95-99.7 rule says that about 95% of the values will fall within two standard deviations of the mean, meaning that about 5% of the values will fall outside of those two standard deviations. You will therefore reject the null hypothesis 5% of the time when it is true; the most extreme 5% of cases are the ones you will not be willing to attribute to chance variation from the hypothesized mean.
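The "two standard deviations" figure is a rounded version of the exact cutoff. A short sketch with the standard library's `NormalDist` shows both the area within two standard deviations and the cutoff that leaves exactly 5% in the two tails combined (variable names are illustrative):

```python
from statistics import NormalDist

std_normal = NormalDist()

# Area of the standard normal curve within two standard deviations of the mean.
within_two_sd = std_normal.cdf(2.0) - std_normal.cdf(-2.0)

# Cutoff that leaves 2.5% in each tail, i.e. 5% in total (two-tailed alpha = 0.05).
cutoff_for_5_percent = std_normal.inv_cdf(1.0 - 0.05 / 2.0)

print(f"{within_two_sd:.4f}")         # 0.9545
print(f"{cutoff_for_5_percent:.2f}")  # 1.96
```

So two standard deviations actually cover slightly more than 95% of the curve, and a two-tailed alpha of 0.05 corresponds to about 1.96 standard deviations.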

The level of significance will also depend on the type of experiment that you're doing.

If you want to be really cautious and not reject the null hypothesis in error very much, you'll choose a low significance level, like 0.01. This means that only the most extreme 1% of cases will have the null hypothesis rejected.

If you don't believe a Type I Error is going to be that bad, you might allow the significance level to be something higher, like 0.05 or 0.10. Those still seem like low numbers. However, think about what that means. This means that one out of every 20, or one out of every ten samples of that particular size will have the null hypothesis rejected even when it's true. Are you willing to make that mistake one out of every 20 times or once every ten times? Or are you only willing to make that mistake one out of every 100 times? Setting this value to something really low reduces the probability that you make that error.
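The "one out of every 20 samples" claim can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: it draws samples from a population where the null hypothesis really is true (mean 0, known standard deviation 1), runs a two-tailed z-test on each, and counts how often the null is rejected. The sample size, number of trials, and seed are arbitrary choices.

```python
import random
from statistics import NormalDist, fmean


def type_i_error_rate(alpha: float, n: int = 30, trials: int = 5000, seed: int = 0) -> float:
    """Fraction of samples, drawn with H0 true, in which H0 is rejected."""
    rng = random.Random(seed)
    critical_value = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # two-tailed cutoff
    rejections = 0
    for _ in range(trials):
        # H0 is true here: the population mean really is 0.
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = fmean(sample) * n ** 0.5  # (mean - 0) / (sigma / sqrt(n)) with sigma = 1
        if abs(z) >= critical_value:
            rejections += 1
    return rejections / trials


for alpha in (0.10, 0.05, 0.01):
    print(alpha, type_i_error_rate(alpha))
```

The printed rejection rates should land close to each alpha, which is the sense in which the significance level is the long-run rate of Type I errors.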

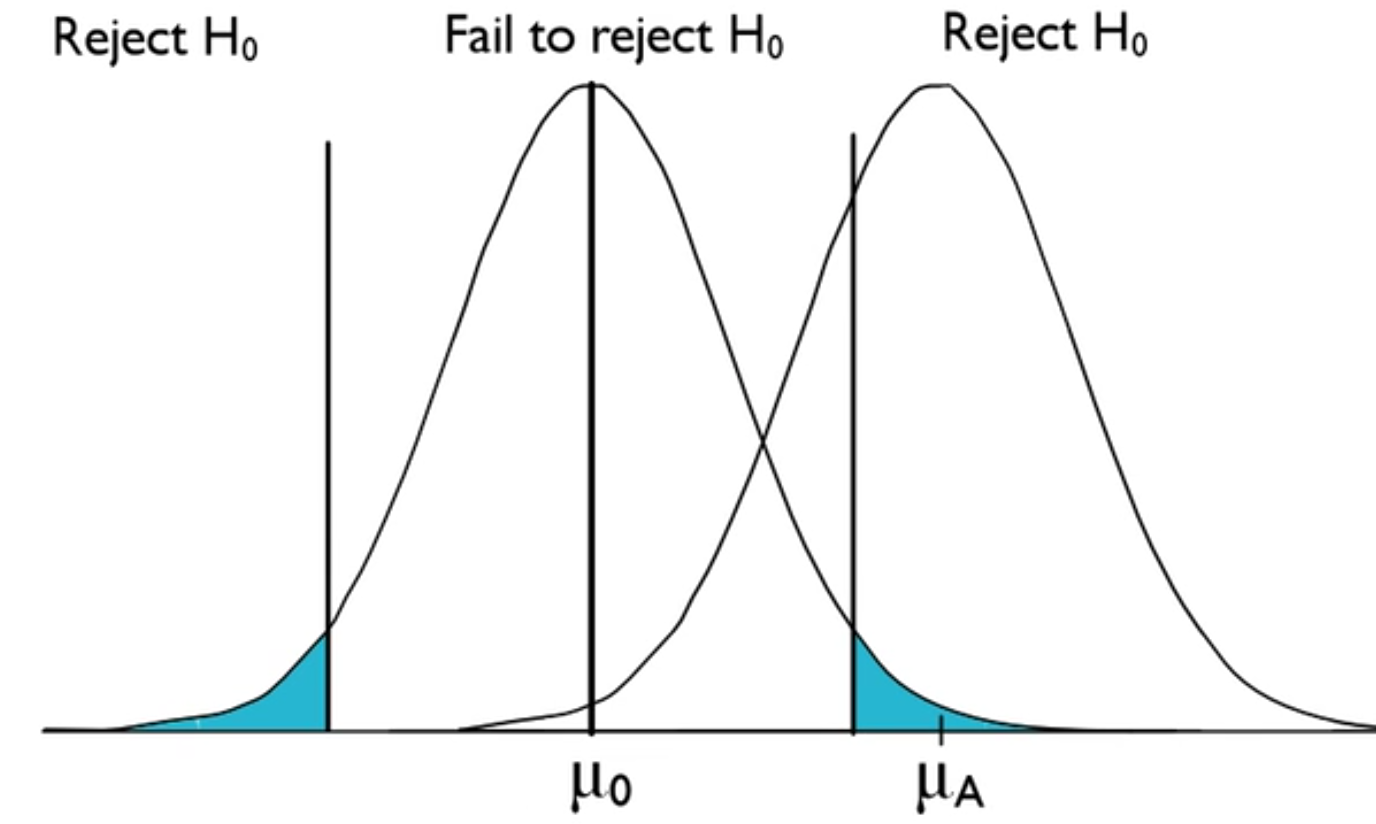

It is important to note that you don't want the significance level to be too low. The problem with setting it really low is that as you lower the probability of a Type I Error, you actually increase the probability of a Type II Error.

A Type II Error is failing to reject the null hypothesis when a difference does exist. This reduces the power or the sensitivity of your significance test, meaning that you will not be able to detect very real differences from the null hypothesis when they actually exist if your alpha level is set too low.

2. Power of a Hypothesis Test

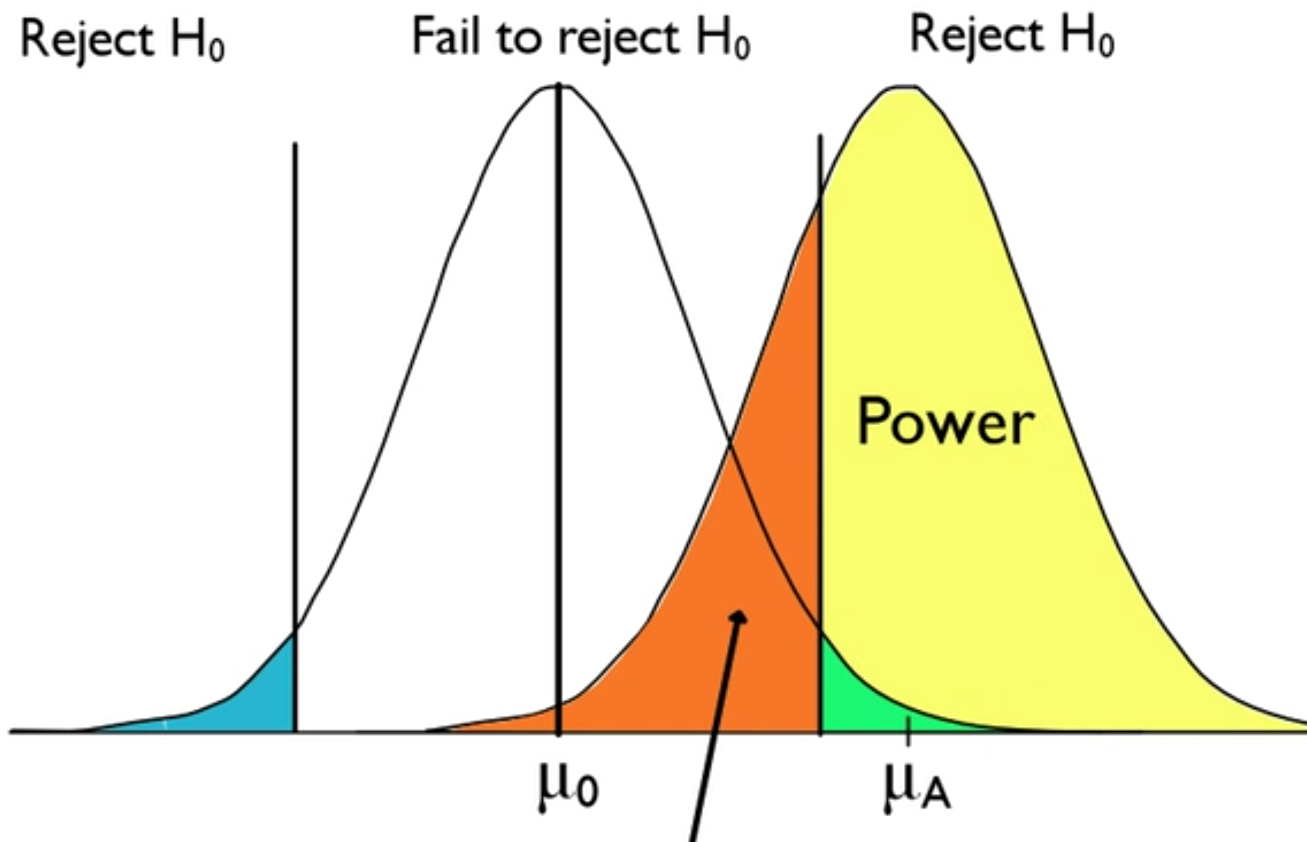

You might wonder, what is power? Power is the ability of a hypothesis test to detect a difference that is present.

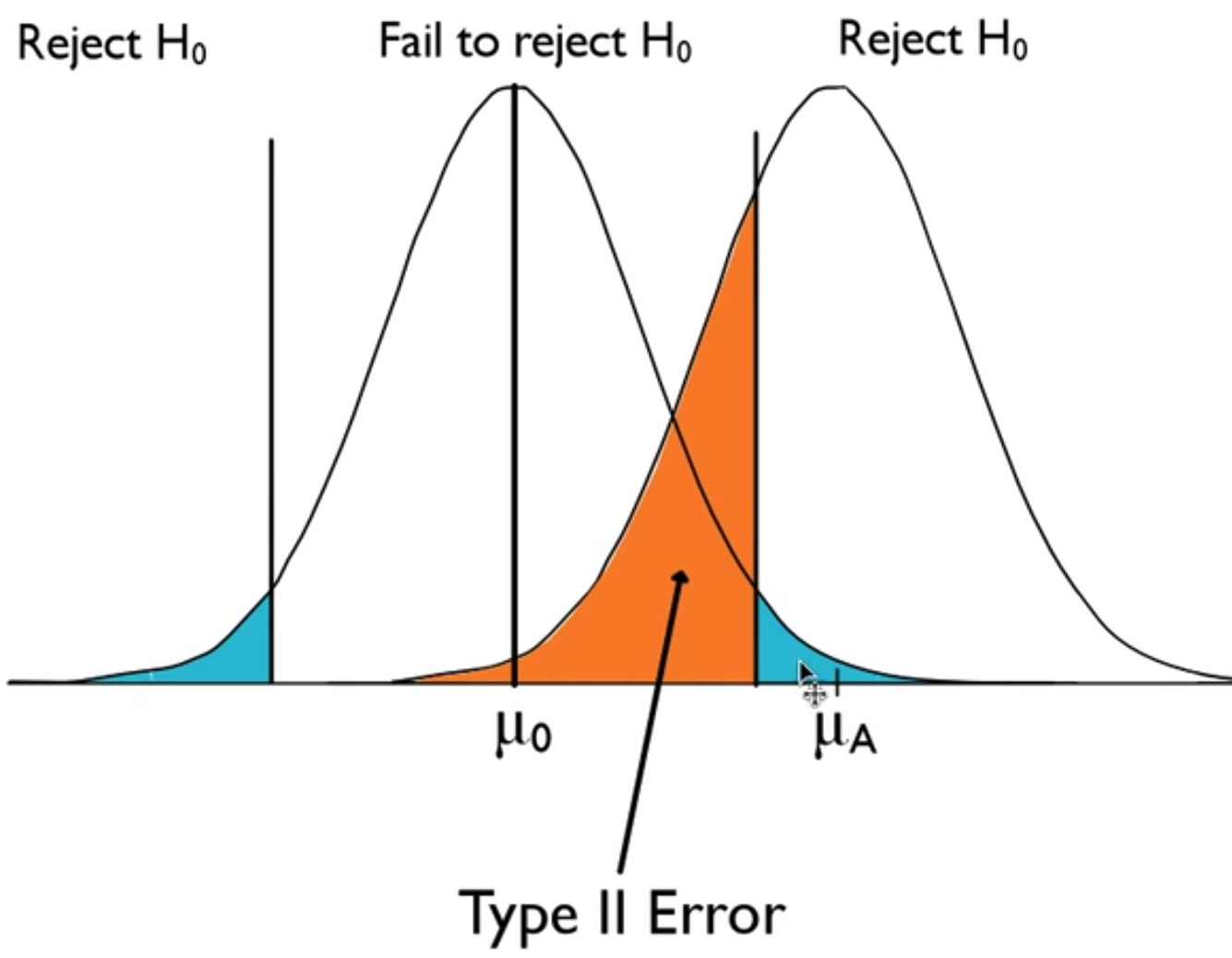

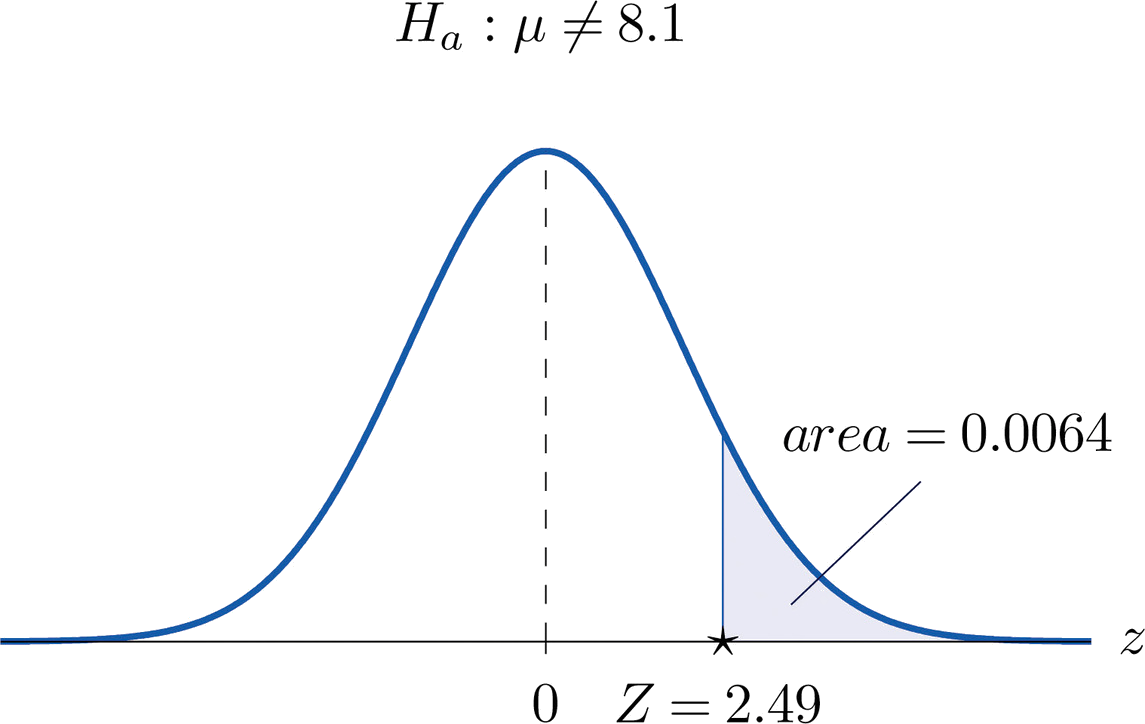

Consider the curves below. Note that μ0 is the hypothesized mean and μA is the actual mean. The actual mean is different from the hypothesized mean; therefore, you should reject the null hypothesis. What you end up with is a curve identical in shape to the original normal curve, but centered at μA instead of μ0.

If you take a look at the curve below, it illustrates the way the data is actually behaving, versus the way you thought it should behave based on the null hypothesis. This line in the sand still exists, which means that because we should reject the null hypothesis, this area in orange is a mistake.

Failing to reject the null hypothesis is wrong, if this is actually the mean, which is different from the null hypothesis' mean. This is a type II error.

Now, the area in yellow on the other side, where you are correctly rejecting the null hypothesis when a difference is present, is called power of a hypothesis test . Power is the probability of rejecting the null hypothesis correctly, rejecting when the null hypothesis is false, which is a correct decision.
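To make the picture concrete, here is a minimal sketch of a power calculation for a right-tailed z-test with a known standard deviation. All numbers (hypothesized mean 100, actual mean 105, standard deviation 15, sample size 36) and the function name are hypothetical, chosen only to illustrate how the "line in the sand" and the two curves interact.

```python
from statistics import NormalDist


def power_right_tailed_z(mu_0: float, mu_a: float, sigma: float, n: int, alpha: float) -> float:
    """Probability of rejecting H0 (mean = mu_0) when the true mean is mu_a."""
    standard_error = sigma / n ** 0.5
    # The cutoff on the sample-mean scale is set using the null curve.
    cutoff = mu_0 + NormalDist().inv_cdf(1.0 - alpha) * standard_error
    # Power is the area of the actual curve (centered at mu_a) beyond the cutoff.
    return 1.0 - NormalDist(mu_a, standard_error).cdf(cutoff)


for alpha in (0.10, 0.05, 0.01):
    power = power_right_tailed_z(mu_0=100.0, mu_a=105.0, sigma=15.0, n=36, alpha=alpha)
    print(f"alpha = {alpha:.2f}  power = {power:.3f}  type II error = {1.0 - power:.3f}")
```

Lowering alpha moves the cutoff further from the hypothesized mean, which shrinks the power and enlarges the Type II error area. When the actual mean equals the hypothesized mean, the same function returns alpha, the Type I error rate.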

Summary

The probability of a Type I error is a value that you get to choose in a hypothesis test. It is called the significance level and is denoted with the Greek letter alpha. Choosing a big significance level allows you to reject the null hypothesis more often, though the problem is that you will more often reject the null hypothesis in error: when the difference really doesn't exist, you say that a difference does exist. However, if you choose a really small one, you reject the null hypothesis less often, and you will more often fail to reject the null hypothesis in error. There's no foolproof method here. Usually, you want to keep your significance levels low, such as 0.05 or 0.01. Note that 0.05 is the default choice for most hypothesis tests. Good luck!

Source: Adapted from Sophia tutorial by Jonathan Osters.

Power of a Hypothesis Test: The probability that we reject the null hypothesis (correctly) when a difference truly does exist.


© 2024 SOPHIA Learning, LLC. SOPHIA is a registered trademark of SOPHIA Learning, LLC.

P-Value And Statistical Significance: What It Is & Why It Matters

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


The p-value in statistics quantifies the evidence against a null hypothesis. A low p-value suggests data is inconsistent with the null, potentially favoring an alternative hypothesis. Common significance thresholds are 0.05 or 0.01.

Hypothesis testing

When you perform a statistical test, a p-value helps you determine the significance of your results in relation to the null hypothesis.

The null hypothesis (H0) states no relationship exists between the two variables being studied (one variable does not affect the other). It states the results are due to chance and are not significant in supporting the idea being investigated. Thus, the null hypothesis assumes that whatever you try to prove did not happen.

The alternative hypothesis (Ha or H1) is the one you would believe if the null hypothesis is concluded to be untrue.

The alternative hypothesis states that the independent variable affected the dependent variable, and the results are significant in supporting the theory being investigated (i.e., the results are not due to random chance).

What a p-value tells you

A p-value, or probability value, is a number describing how likely it is that data at least as extreme as yours would have occurred by random chance if the null hypothesis were true.

The level of statistical significance is often expressed as a p-value between 0 and 1.

The smaller the p-value, the less compatible the results are with the null hypothesis, and the stronger the evidence that you should reject it.

Remember, a p-value doesn’t tell you if the null hypothesis is true or false. It just tells you how likely you’d see the data you observed (or more extreme data) if the null hypothesis was true. It’s a piece of evidence, not a definitive proof.

Example: Test Statistic and p-Value

Suppose you’re conducting a study to determine whether a new drug has an effect on pain relief compared to a placebo. If the new drug has no impact, your test statistic will tend to be close to the one predicted by the null hypothesis (no difference between the drug and placebo groups), and the resulting p-value will tend to be large. It can still be small on occasion, because random variation between samples means p-values under the null hypothesis can fall anywhere between 0 and 1. Conversely, if the new drug indeed reduces pain significantly, your test statistic will diverge further from what’s expected under the null hypothesis, and the p-value will decrease. The p-value will never reach zero because there’s always a slim possibility, though highly improbable, that the observed results occurred by random chance.

P-value interpretation

The significance level (alpha) is a set probability threshold (often 0.05), while the p-value is the probability you calculate based on your study or analysis.

A p-value less than or equal to a predetermined significance level (often 0.05 or 0.01) indicates a statistically significant result, meaning the observed data provide strong evidence against the null hypothesis.

This suggests the effect under study likely represents a real relationship rather than just random chance.

For instance, if you set α = 0.05, you would reject the null hypothesis if your p-value ≤ 0.05.

This indicates strong evidence against the null hypothesis, as data this extreme would occur less than 5% of the time if the null were correct.

Therefore, we reject the null hypothesis in favor of the alternative hypothesis.

Example: Statistical Significance

Upon analyzing the pain relief effects of the new drug compared to the placebo, the computed p-value is less than 0.01, which falls well below the predetermined alpha value of 0.05. Consequently, you conclude that there is a statistically significant difference in pain relief between the new drug and the placebo.

What does a p-value of 0.001 mean?

A p-value of 0.001 is highly statistically significant beyond the commonly used 0.05 threshold. It indicates strong evidence of a real effect or difference, rather than just random variation.

Specifically, a p-value of 0.001 means there is only a 0.1% chance of obtaining a result at least as extreme as the one observed, assuming the null hypothesis is correct.

Such a small p-value provides strong evidence against the null hypothesis, leading to rejecting the null in favor of the alternative hypothesis.

A p-value greater than the significance level (typically p > 0.05) is not statistically significant and indicates that the data do not provide sufficient evidence against the null hypothesis.

This means we retain (fail to reject) the null hypothesis. You should note that you cannot accept the null hypothesis; we can only reject it or fail to reject it.

Note : when the p-value is above your threshold of significance, it does not mean that there is a 95% probability that the alternative hypothesis is true.


How do you calculate the p-value ?

Most statistical software packages like R, SPSS, and others automatically calculate your p-value. This is the easiest and most common way.

Online resources and tables are available to estimate the p-value based on your test statistic and degrees of freedom.

These tables help you understand how often you would expect to see your test statistic under the null hypothesis.

Understanding the Statistical Test:

Different statistical tests are designed to answer specific research questions or hypotheses. Each test has its own underlying assumptions and characteristics.

For example, you might use a t-test to compare means, a chi-squared test for categorical data, or a correlation test to measure the strength of a relationship between variables.

Be aware that the number of independent variables you include in your analysis can influence the magnitude of the test statistic needed to produce the same p-value.

This factor is particularly important to consider when comparing results across different analyses.

Example: Choosing a Statistical Test

If you’re comparing the effectiveness of just two different drugs in pain relief, a two-sample t-test is a suitable choice for comparing these two groups. However, when you’re examining the impact of three or more drugs, it’s more appropriate to employ an Analysis of Variance ( ANOVA) . Utilizing multiple pairwise comparisons in such cases can lead to artificially low p-values and an overestimation of the significance of differences between the drug groups.
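The risk of running many pairwise comparisons can be approximated with a back-of-the-envelope calculation. The sketch below assumes the comparisons are independent, which is a simplification (pairwise tests that share a group are correlated), so the numbers are indicative only; the function name is illustrative.

```python
from math import comb


def familywise_error_rate(alpha: float, n_groups: int) -> float:
    """Approximate chance of at least one false positive across all
    pairwise comparisons, assuming independent tests and H0 true throughout."""
    n_comparisons = comb(n_groups, 2)  # number of distinct pairs of groups
    return 1.0 - (1.0 - alpha) ** n_comparisons


for n_groups in (2, 3, 5):
    rate = familywise_error_rate(0.05, n_groups)
    print(f"{n_groups} groups: {comb(n_groups, 2)} comparisons, error rate = {rate:.3f}")
```

With three drugs there are three pairwise tests, and the chance of at least one false positive at α = 0.05 rises to roughly 14% under this approximation, which is why a single ANOVA is preferred as a first step.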

How to report

A statistically significant result cannot prove that a research hypothesis is correct (which implies 100% certainty).

Instead, we may state our results “provide support for” or “give evidence for” our research hypothesis (as there is still a possibility that results this extreme occurred by chance while the null hypothesis was correct; with α = 0.05, this happens up to 5% of the time when the null is true).

Example: Reporting the results

In our comparison of the pain relief effects of the new drug and the placebo, we observed that participants in the drug group experienced a significant reduction in pain ( M = 3.5; SD = 0.8) compared to those in the placebo group ( M = 5.2; SD = 0.7), resulting in an average difference of 1.7 points on the pain scale (t(98) = -9.36; p < 0.001).

The 6th edition of the APA style manual (American Psychological Association, 2010) states the following on the topic of reporting p-values:

“When reporting p values, report exact p values (e.g., p = .031) to two or three decimal places. However, report p values less than .001 as p < .001.

The tradition of reporting p values in the form p < .10, p < .05, p < .01, and so forth, was appropriate in a time when only limited tables of critical values were available.” (p. 114)

- Do not use 0 before the decimal point for the statistical value p as it cannot exceed 1. In other words, write p = .001 instead of p = 0.001.

- Please pay attention to issues of italics ( p is always italicized) and spacing (either side of the = sign).

- p = .000 (as outputted by some statistical packages such as SPSS) is impossible and should be written as p < .001.

- The opposite of significant is “nonsignificant,” not “insignificant.”
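The reporting rules above are easy to apply mechanically. The helper below is a small sketch, not an official APA tool; the name `format_p_apa` is made up, and it always uses three decimal places although the manual allows two or three.

```python
def format_p_apa(p: float) -> str:
    """Format a p-value following the reporting rules listed above."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if p < 0.001:
        # Covers values that software would display as p = .000
        return "p < .001"
    text = f"{p:.3f}"
    if text.startswith("0"):
        text = text[1:]  # drop the leading zero: 0.031 becomes .031
    return f"p = {text}"


print(format_p_apa(0.031))   # p = .031
print(format_p_apa(0.0004))  # p < .001
```

Italicizing p is left to whatever tool renders the final text.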

Why is the p-value not enough?

A lower p-value is sometimes interpreted as meaning there is a stronger relationship between two variables.

However, statistical significance only means that data at least as extreme as those observed would be unlikely (occurring less than 5% of the time) if the null hypothesis were true.

To understand the strength of the difference between the two groups (control vs. experimental) a researcher needs to calculate the effect size .

When do you reject the null hypothesis?

In statistical hypothesis testing, you reject the null hypothesis when the p-value is less than or equal to the significance level (α) you set before conducting your test. The significance level is the probability of rejecting the null hypothesis when it is true. Commonly used significance levels are 0.01, 0.05, and 0.10.

Remember, rejecting the null hypothesis doesn’t prove the alternative hypothesis; it just suggests that the alternative hypothesis may be plausible given the observed data.

The p-value is conditional upon the null hypothesis being true but is unrelated to the truth or falsity of the alternative hypothesis.

What does p-value of 0.05 mean?

If your p-value is less than or equal to 0.05 (the significance level), you would conclude that your result is statistically significant. This means the evidence is strong enough to reject the null hypothesis in favor of the alternative hypothesis.

Are all p-values below 0.05 considered statistically significant?

No. The threshold of 0.05 is commonly used, but it’s just a convention; whether a p-value counts as statistically significant depends on the significance level chosen before the test. A p-value of 0.03, for example, is significant at α = 0.05 but not at α = 0.01. The choice of threshold depends on the study design and context.

A p-value below 0.05 means there is evidence against the null hypothesis, suggesting a real effect. However, it’s essential to consider the context and other factors when interpreting results.

Researchers also look at effect size and confidence intervals to determine the practical significance and reliability of findings.

How does sample size affect the interpretation of p-values?

Sample size can impact the interpretation of p-values. A larger sample size provides more reliable and precise estimates of the population, leading to narrower confidence intervals.

With a larger sample, even small differences between groups or effects can become statistically significant, yielding lower p-values. In contrast, smaller sample sizes may not have enough statistical power to detect smaller effects, resulting in higher p-values.
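A quick calculation shows how the same observed difference yields very different p-values at different sample sizes. The sketch assumes a two-tailed one-sample z-test with a known standard deviation; the difference of 2 points and standard deviation of 10 are hypothetical values chosen for illustration.

```python
from statistics import NormalDist


def two_tailed_p(mean_difference: float, sigma: float, n: int) -> float:
    """Two-tailed p-value for an observed mean difference from the
    hypothesized mean, given a known standard deviation and sample size."""
    z = mean_difference / (sigma / n ** 0.5)  # standard error shrinks as n grows
    return 2.0 * NormalDist().cdf(-abs(z))


for n in (25, 100, 400):
    print(n, round(two_tailed_p(mean_difference=2.0, sigma=10.0, n=n), 4))
```

The observed difference is the same in every row; only the sample size changes. With 25 observations the result is not significant at α = 0.05, while with 100 or 400 it is.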

Therefore, a larger sample size increases the chances of finding statistically significant results when there is a genuine effect, making the findings more trustworthy and robust.

Can a non-significant p-value indicate that there is no effect or difference in the data?

No, a non-significant p-value does not necessarily indicate that there is no effect or difference in the data. It means that the observed data do not provide strong enough evidence to reject the null hypothesis.

There could still be a real effect or difference, but it might be smaller or more variable than the study was able to detect.

Other factors like sample size, study design, and measurement precision can influence the p-value. It’s important to consider the entire body of evidence and not rely solely on p-values when interpreting research findings.

Can P values be exactly zero?

While a p-value can be extremely small, it cannot technically be exactly zero. When a p-value is reported as p = 0.000, the actual p-value is too small for the software to display. This is often interpreted as strong evidence against the null hypothesis. For p-values less than 0.001, report as p < .001.

Further Information

- P-values and significance tests (Khan Academy)

- Hypothesis testing and p-values (Khan Academy)

- Wasserstein, R. L., Schirm, A. L., & Lazar, N. A. (2019). Moving to a world beyond “p < 0.05”.

- Criticism of using the “p < 0.05”.

- Publication manual of the American Psychological Association

- Statistics for Psychology Book Download

Bland, J. M., & Altman, D. G. (1994). One and two sided tests of significance: Authors’ reply. BMJ: British Medical Journal , 309 (6958), 874.

Goodman, S. N., & Royall, R. (1988). Evidence and scientific research. American Journal of Public Health , 78 (12), 1568-1574.

Goodman, S. (2008, July). A dirty dozen: twelve p-value misconceptions . In Seminars in hematology (Vol. 45, No. 3, pp. 135-140). WB Saunders.

Lang, J. M., Rothman, K. J., & Cann, C. I. (1998). That confounded P-value. Epidemiology (Cambridge, Mass.) , 9 (1), 7-8.


- PMC5635437.1 ; 2015 Aug 25

- PMC5635437.2 ; 2016 Jul 13

- ➤ PMC5635437.3; 2016 Oct 10

Null hypothesis significance testing: a short tutorial

Cyril Pernet

1 Centre for Clinical Brain Sciences (CCBS), Neuroimaging Sciences, The University of Edinburgh, Edinburgh, UK

Version Changes

Revised. Amendments from Version 2

This v3 includes minor changes that reflect the 3rd reviewers' comments - in particular the theoretical vs. practical difference between Fisher and Neyman-Pearson. Additional information and reference is also included regarding the interpretation of p-value for low powered studies.

Peer Review Summary

Although thoroughly criticized, null hypothesis significance testing (NHST) remains the statistical method of choice used to provide evidence for an effect, in biological, biomedical and social sciences. In this short tutorial, I first summarize the concepts behind the method, distinguishing test of significance (Fisher) and test of acceptance (Neyman-Pearson) and point to common interpretation errors regarding the p-value. I then present the related concepts of confidence intervals and again point to common interpretation errors. Finally, I discuss what should be reported in which context. The goal is to clarify concepts to avoid interpretation errors and propose reporting practices.

The Null Hypothesis Significance Testing framework

NHST is a method of statistical inference by which an experimental factor is tested against a hypothesis of no effect or no relationship based on a given observation. The method is a combination of the concepts of significance testing developed by Fisher in 1925 and of acceptance based on critical rejection regions developed by Neyman & Pearson in 1928 . In the following I am first presenting each approach, highlighting the key differences and common misconceptions that result from their combination into the NHST framework (for a more mathematical comparison, along with the Bayesian method, see Christensen, 2005 ). I next present the related concept of confidence intervals. I finish by discussing practical aspects in using NHST and reporting practice.

Fisher, significance testing, and the p-value

The method developed by Fisher (Fisher, 1934; Fisher, 1955; Fisher, 1959) allows one to compute the probability of observing a result at least as extreme as a test statistic (e.g. t value), assuming the null hypothesis of no effect is true. This probability or p-value reflects (1) the conditional probability of achieving the observed outcome or larger: p(Obs≥t|H0), and (2) is therefore a cumulative probability rather than a point estimate. It is equal to the area under the null probability distribution curve from the observed test statistic to the tail of the null distribution (Turkheimer et al., 2004). The approach proposed is one of ‘proof by contradiction’ (Christensen, 2005): we pose the null model and test if data conform to it.

In practice, it is recommended to set a level of significance (a theoretical p-value) that acts as a reference point to identify significant results, that is to identify results that differ from the null-hypothesis of no effect. Fisher recommended using p=0.05 to judge whether an effect is significant or not as it is roughly two standard deviations away from the mean for the normal distribution ( Fisher, 1934 page 45: ‘The value for which p=.05, or 1 in 20, is 1.96 or nearly 2; it is convenient to take this point as a limit in judging whether a deviation is to be considered significant or not’). A key aspect of Fishers’ theory is that only the null-hypothesis is tested, and therefore p-values are meant to be used in a graded manner to decide whether the evidence is worth additional investigation and/or replication ( Fisher, 1971 page 13: ‘it is open to the experimenter to be more or less exacting in respect of the smallness of the probability he would require […]’ and ‘no isolated experiment, however significant in itself, can suffice for the experimental demonstration of any natural phenomenon’). How small the level of significance is, is thus left to researchers.

What is not a p-value? Common mistakes

The p-value is not an indication of the strength or magnitude of an effect. Any interpretation of the p-value in relation to the effect under study (strength, reliability, probability) is wrong, since p-values are conditioned on H0. In addition, while p-values are uniformly distributed when there is no effect (if all the assumptions of the test are met), their distribution otherwise depends on both the population effect size and the number of participants, making it impossible to infer the strength of an effect from them.
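
A small simulation can make this dependence concrete. The sketch below is illustrative only: it assumes normally distributed data with a known standard deviation of 1, a two-sided z-test of H0: mean = 0, and hypothetical settings (an effect of 0.5 and sample sizes of 20 and 80) that do not come from the text.

```python
import random
from statistics import NormalDist

def simulate_p_values(effect, n, n_sims=2000, seed=1):
    """Two-sided z-test p-values for samples of size n drawn from N(effect, 1)."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    p_values = []
    for _ in range(n_sims):
        sample_mean = sum(rng.gauss(effect, 1.0) for _ in range(n)) / n
        z = sample_mean * n ** 0.5  # standard error is 1/sqrt(n) because sigma = 1
        p_values.append(2.0 * (1.0 - std_normal.cdf(abs(z))))
    return p_values

def share_below(p_values, threshold=0.05):
    """Proportion of simulated p-values at or below the threshold."""
    return sum(p <= threshold for p in p_values) / len(p_values)

null_ps = simulate_p_values(effect=0.0, n=20)   # no effect
small_n = simulate_p_values(effect=0.5, n=20)   # same effect, fewer participants
large_n = simulate_p_values(effect=0.5, n=80)   # same effect, more participants
print(share_below(null_ps), share_below(small_n), share_below(large_n))
```

With no effect, the p-values spread roughly evenly between 0 and 1, so close to 5% fall below 0.05. With the same true effect, the larger sample produces far more small p-values, which is why a small p-value by itself says little about the size of the effect.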

Similarly, 1-p is not the probability of replicating an effect. Often, a small value of p is considered to mean a strong likelihood of getting the same results on another try, but again this cannot be obtained because the p-value is not informative about the effect itself (Miller, 2009). Because the p-value depends on the number of subjects, it can only be used in high-powered studies to interpret results. In low-powered studies (typically with a small number of subjects), the p-value has a large variance across repeated samples, making it unreliable for estimating replication (Halsey et al., 2015).

A (small) p-value is not an indication favouring a given hypothesis. Because a low p-value only indicates a misfit of the null hypothesis to the data, it cannot be taken as evidence in favour of a specific alternative hypothesis more than any other possible alternatives such as measurement error and selection bias (Gelman, 2013). Some authors have even argued that the more (a priori) implausible the alternative hypothesis, the greater the chance that a finding is a false alarm (Krzywinski & Altman, 2013; Nuzzo, 2014).

The p-value is not the probability of the null hypothesis being true, p(H0) (Krzywinski & Altman, 2013). This common misconception arises from a confusion between the probability of an observation given the null, p(Obs≥t|H0), and the probability of the null given an observation, p(H0|Obs≥t), which is then taken as an indication for p(H0) (see Nickerson, 2000).
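
The gap between the two conditional probabilities can be illustrated with Bayes' rule. The sketch below is a simplification: it conditions on a result being declared significant (p ≤ alpha) rather than on an exact p-value, and the prior probabilities and the power of 0.8 are hypothetical values chosen for illustration.

```python
def prob_null_given_significant(prior_h0, alpha, power):
    """p(H0 | significant result) by Bayes' rule.

    prior_h0: assumed prior probability that H0 is true
    alpha:    p(significant | H0)
    power:    p(significant | H1)
    """
    false_alarms = alpha * prior_h0
    true_hits = power * (1.0 - prior_h0)
    return false_alarms / (false_alarms + true_hits)

# Same alpha and power, two different assumed priors for H0
for prior in (0.5, 0.9):
    print(prior, round(prob_null_given_significant(prior, alpha=0.05, power=0.8), 3))
```

Under these assumptions, the probability that the null is true after a significant result is neither 0.05 nor fixed: it rises sharply when the alternative is implausible a priori (a prior of 0.9 for H0), in line with the false-alarm argument above.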

Neyman-Pearson, hypothesis testing, and the α-value

Neyman & Pearson (1933) proposed a framework of statistical inference for applied decision making and quality control. In this framework, two hypotheses are proposed: the null hypothesis of no effect and the alternative hypothesis of an effect, along with a control of the long-run probabilities of making errors. The first key concept in this approach is the establishment of an alternative hypothesis along with an a priori effect size. This differs markedly from Fisher, who proposed a general approach for scientific inference conditioned on the null hypothesis only. The second key concept is the control of error rates. Neyman & Pearson (1928) introduced the notion of critical intervals, therefore dichotomizing the space of possible observations into correct vs. incorrect zones. This dichotomization allows distinguishing correct results (rejecting H0 when there is an effect and not rejecting H0 when there is no effect) from errors (rejecting H0 when there is no effect, the Type I error, and not rejecting H0 when there is an effect, the Type II error). In this context, alpha is the probability of committing a Type I error in the long run. Similarly, beta is the probability of committing a Type II error in the long run.

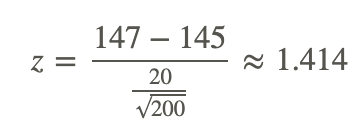

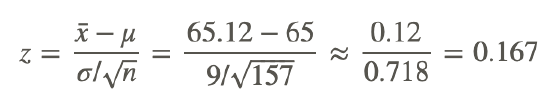

The (theoretical) difference in terms of hypothesis testing between Fisher and Neyman-Pearson is illustrated in Figure 1. In the first case, we choose a level of significance for observed data of 5%, and compute the p-value. If the p-value is below the level of significance, it is used to reject H0. In the second case, we set a critical interval based on the a priori effect size and error rates. If an observed statistic value is below or above the critical values (the bounds of the confidence region), it is deemed significantly different from H0. In the NHST framework, the level of significance is (in practice) assimilated to the alpha level, which appears as a simple decision rule: if the p-value is less than or equal to alpha, the null is rejected. It is, however, a common mistake to assimilate these two concepts. The level of significance set for a given sample is not the same as the frequency of acceptance alpha found on repeated sampling, because alpha (a point estimate) is meant to reflect the long-run probability whilst the p-value (a cumulative estimate) reflects the current probability (Fisher, 1955; Hubbard & Bayarri, 2003).

The figure was prepared with G-power for a one-sided one-sample t-test, with a sample size of 32 subjects, an effect size of 0.45, an error rate alpha=0.049, and power (1-beta)=0.80. In Fisher’s procedure, only the nil-hypothesis is posed, and the observed p-value is compared to an a priori level of significance. If the observed p-value is below this level (here p=0.05), one rejects H0. In Neyman-Pearson’s procedure, the null and alternative hypotheses are specified along with an a priori level of acceptance. If the observed statistic falls outside the acceptance region (here [-∞, +1.69]), that is, inside the critical region, one rejects H0.
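
The design described for the figure can be approximated with a short calculation. This is a rough sketch, not a reproduction of the G-power output: it replaces the noncentral t distribution with a normal approximation, so the critical value and power come out close to, but not identical to, the t-based values. The function name is hypothetical.

```python
from statistics import NormalDist

def one_sided_power_normal_approx(effect_size, n, alpha):
    """Approximate critical value and power of a one-sided one-sample test.

    Uses a normal approximation; an exact calculation for a t-test
    would use the noncentral t distribution instead.
    """
    std_normal = NormalDist()
    critical_z = std_normal.inv_cdf(1.0 - alpha)  # lower bound of the rejection region
    noncentrality = effect_size * n ** 0.5        # expected statistic under H1
    power = 1.0 - std_normal.cdf(critical_z - noncentrality)
    return critical_z, power

critical_z, power = one_sided_power_normal_approx(effect_size=0.45, n=32, alpha=0.049)
beta = 1.0 - power  # long-run Type II error rate
print(round(critical_z, 2), round(power, 2), round(beta, 2))
```

Under the approximation, the critical value is roughly 1.65 and the power is about 0.8 (a Type II error rate near 0.2), which is consistent with a design in which an effect of 0.45 is detected about four times out of five.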

Acceptance or rejection of H0?

The acceptance level α can also be viewed as the maximum probability that a test statistic falls into the rejection region when the null hypothesis is true (Johnson, 2013). Therefore, one can only reject the null hypothesis if the test statistic falls into the critical region(s), or fail to reject this hypothesis. In the latter case, all we can say is that no significant effect was observed, but one cannot conclude that the null hypothesis is true. This is another common mistake in using NHST: there is a profound difference between accepting the null hypothesis and simply failing to reject it (Killeen, 2005). By failing to reject, we simply continue to assume that H0 is true, which implies that one cannot argue against a theory from a non-significant result (absence of evidence is not evidence of absence). To accept the null hypothesis, tests of equivalence (Walker & Nowacki, 2011) or Bayesian approaches (Dienes, 2014; Kruschke, 2011) must be used.
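
One common form of equivalence test is the two one-sided tests (TOST) procedure. The sketch below is a simplified z-test version with a known standard error; the function name, the equivalence margins, and the example numbers are all hypothetical, and a real analysis would typically use t-tests and a margin justified on substantive grounds.

```python
from statistics import NormalDist

def tost_z(sample_mean, standard_error, margin, alpha=0.05):
    """Two one-sided z-tests of H0: |true mean| >= margin.

    Returns the TOST p-value (the larger of the two one-sided p-values)
    and whether equivalence within +/- margin is declared at level alpha.
    """
    std_normal = NormalDist()
    # Test 1: H0 mean <= -margin against H1 mean > -margin
    p_lower = 1.0 - std_normal.cdf((sample_mean + margin) / standard_error)
    # Test 2: H0 mean >= +margin against H1 mean < +margin
    p_upper = std_normal.cdf((sample_mean - margin) / standard_error)
    p_tost = max(p_lower, p_upper)
    return p_tost, p_tost <= alpha

wide = tost_z(sample_mean=0.1, standard_error=0.2, margin=0.5)
narrow = tost_z(sample_mean=0.1, standard_error=0.2, margin=0.3)
print(wide, narrow)
```

Here the null hypothesis is that the effect is at least as large as the margin, so rejecting it supports equivalence. The same data support equivalence within ±0.5 but not within ±0.3, which shows that the conclusion depends on the margin chosen in advance.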

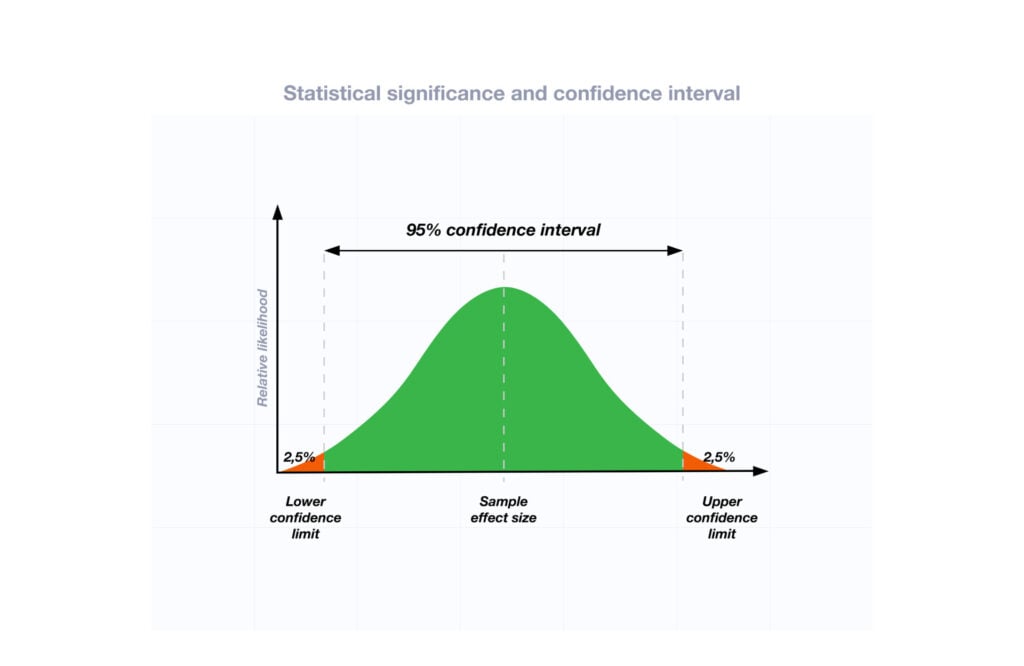

Confidence intervals

Confidence intervals (CI) are constructs that fail to cover the true value at a rate of alpha, the Type I error rate (Morey & Rouder, 2011), and therefore indicate whether observed values can be rejected by a (two-tailed) test with a given alpha. CI have been advocated as alternatives to p-values because they (i) allow judging statistical significance and (ii) provide estimates of effect size. Assuming the CI (a)symmetry and width are correct (but see Wilcox, 2012), they also give some indication about the likelihood that a similar value can be observed in future studies. For future studies of the same sample size, a 95% CI gives about an 83% chance of replication success (Cumming & Maillardet, 2006). If sample sizes differ between studies, however, CI do not guarantee any a priori coverage.
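
The figure of about 83% can be recovered with a short calculation in an idealized case. The sketch assumes normally distributed data, equal sample sizes, and a known standard deviation; under those assumptions the difference between two independent sample means has a standard deviation of √2 standard errors, so a replication mean falls inside the original 95% CI less often than 95% of the time. The function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def capture_probability(confidence=0.95):
    """Chance that a replication mean lands inside the original CI,
    assuming normal data, equal sample sizes, and a known standard deviation."""
    std_normal = NormalDist()
    z = std_normal.inv_cdf(0.5 + confidence / 2.0)  # CI half-width in standard errors
    # The original mean and the replication mean are both noisy, so their
    # difference has standard deviation sqrt(2) standard errors.
    return 2.0 * std_normal.cdf(z / sqrt(2.0)) - 1.0

print(round(capture_probability(0.95), 3))
```

Under these assumptions the result is about 0.83, in agreement with the value cited above.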

Although CI provide more information, they are no less subject to interpretation errors (see Savalei & Dunn, 2015 for a review). The most common mistake is to interpret a CI as the probability that a parameter (e.g. the population mean) will fall in that interval X% of the time. The correct interpretation is that, for repeated measurements with the same sample sizes, taken from the same population, X% of the time the CI obtained will contain the true parameter value (Tan & Tan, 2010). The alpha value has the same interpretation as when testing against H0, i.e. we accept that 1-alpha CI are wrong alpha percent of the time in the long run. This implies that CI do not allow strong statements to be made about the parameter of interest (e.g. the mean difference) or about H1 (Hoekstra et al., 2014). To make a statement about the probability of a parameter of interest (e.g. the probability of the mean), Bayesian intervals must be used.
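
The long-run interpretation can be checked by simulation. The sketch below is illustrative only: it assumes normal data with a known standard deviation (so a z-interval is used), and the true mean, standard deviation, sample size, and seed are arbitrary hypothetical values.

```python
import random
from statistics import NormalDist

def coverage_rate(true_mean=10.0, sigma=2.0, n=25, confidence=0.95,
                  n_sims=4000, seed=7):
    """Fraction of simulated z-intervals (known sigma) that contain the true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    half_width = z * sigma / n ** 0.5
    hits = 0
    for _ in range(n_sims):
        sample_mean = sum(rng.gauss(true_mean, sigma) for _ in range(n)) / n
        if sample_mean - half_width <= true_mean <= sample_mean + half_width:
            hits += 1
    return hits / n_sims

print(coverage_rate())
```

The true mean never moves; it is the interval that changes from sample to sample. Close to 95% of the simulated intervals contain the fixed true value, and any single interval either contains it or does not.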

The (correct) use of NHST

NHST has always been criticized, and yet is still used every day in scientific reports (Nickerson, 2000). One question to ask oneself is: what is the goal of the scientific experiment at hand? If the goal is to establish a discrepancy with the null hypothesis and/or establish a pattern of order, then NHST is a good tool, because both require ruling out equivalence (Frick, 1996; Walker & Nowacki, 2011). If the goal is to test the presence of an effect and/or establish some quantitative values related to an effect, then NHST is not the method of choice, since testing is conditioned on H0.

While a Bayesian analysis is suited to estimating the probability that a hypothesis is correct, like NHST, it does not prove a theory by itself, but adds to its plausibility (Lindley, 2000). No matter what testing procedure is used and how strong the results are, Fisher (1959, p13) reminds us that ‘[…] no isolated experiment, however significant in itself, can suffice for the experimental demonstration of any natural phenomenon’. Similarly, the recent statement of the American Statistical Association (Wasserstein & Lazar, 2016) makes it clear that conclusions should be based on the researcher’s understanding of the problem in context, along with all summary data and tests, and that no single value (be it a p-value, a Bayes factor, or anything else) can be used to support or invalidate a theory.

What to report and how?

Considering that quantitative reports will always have more information content than binary (significant or not) reports, we can always argue that raw and/or normalized effect sizes, confidence intervals, or Bayes factors must be reported. Reporting everything can, however, hinder the communication of the main result(s), and we should aim at giving only the information needed, at least in the core of a manuscript. Here I propose to adopt optimal reporting in the results section to keep the message clear, but to provide detailed supplementary material. When the hypothesis is about the presence/absence or order of an effect, and provided that a study has sufficient power, NHST is appropriate and it is sufficient to report in the text the actual p-value, since it conveys the information needed to rule out equivalence. When the hypothesis and/or the discussion involve some quantitative value, and because p-values do not inform on the effect, it is essential to report effect sizes (Lakens, 2013), preferably accompanied by confidence or credible intervals. The reasoning is simply that one cannot predict and/or discuss quantities without accounting for variability. For the reader to understand and fully appreciate the results, nothing else is needed.

Because scientific progress is obtained by cumulating evidence (Rosenthal, 1991), scientists should also consider the secondary use of the data. With today’s electronic articles, there is no reason not to include all derived data: means, standard deviations, effect sizes, CI, and Bayes factors should always be included as supplementary tables (or, even better, raw data should be shared as well). It is also essential to report the context in which tests were performed, that is, to report all of the tests performed (all t, F, p values), because of the increased Type I error rate due to selective reporting (multiple comparisons and p-hacking problems; Ioannidis, 2005). Providing all of this information allows other researchers (i) to directly and effectively compare their results in quantitative terms (replication of effects beyond significance; Open Science Collaboration, 2015), (ii) to compute power for future studies (Lakens & Evers, 2014), and (iii) to aggregate results for meta-analyses whilst minimizing publication bias (van Assen et al., 2014).
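
To see why the full set of tests matters, consider how quickly the chance of at least one false positive grows with the number of tests. The sketch below assumes independent tests, each run at the same alpha, with every null hypothesis true; these are simplifying assumptions for illustration, and the function name is hypothetical.

```python
def familywise_error_rate(n_tests, alpha=0.05):
    """Probability of at least one Type I error across independent tests
    when every null hypothesis is true."""
    return 1.0 - (1.0 - alpha) ** n_tests

for m in (1, 5, 20):
    print(m, round(familywise_error_rate(m), 3))
```

Under these assumptions, 20 tests at alpha=0.05 give roughly a 64% chance of at least one significant result by chance alone, which a reader cannot account for if only the significant tests are reported.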

[version 3; referees: 1 approved

Funding Statement

The author(s) declared that no grants were involved in supporting this work.

- Christensen R: Testing Fisher, Neyman, Pearson, and Bayes. The American Statistician. 2005; 59(2):121–126. doi:10.1198/000313005X20871

- Cumming G, Maillardet R: Confidence intervals and replication: Where will the next mean fall? Psychological Methods. 2006; 11(3):217–227. doi:10.1037/1082-989X.11.3.217

- Dienes Z: Using Bayes to get the most out of non-significant results. Front Psychol. 2014; 5:781. doi:10.3389/fpsyg.2014.00781