Ethical Issues in Research: Perceptions of Researchers, Research Ethics Board Members and Research Ethics Experts

Marie-Josée Drolet

1 Department of Occupational Therapy (OT), Université du Québec à Trois-Rivières (UQTR), Trois-Rivières (Québec), Canada

Eugénie Rose-Derouin

2 Bachelor OT program, Université du Québec à Trois-Rivières (UQTR), Trois-Rivières (Québec), Canada

Julie-Claude Leblanc

Mélanie Ruest, Bryn Williams-Jones

3 Department of Social and Preventive Medicine, School of Public Health, Université de Montréal, Montréal (Québec), Canada

In the context of academic research, a diversity of ethical issues arises, conditioned by the different roles of members within these institutions. Previous studies on this topic addressed mainly the perceptions of researchers. However, to our knowledge, no studies have explored the transversal ethical issues from a wider spectrum, including other members of academic institutions such as research ethics board (REB) members and research ethics experts. The present study used a descriptive phenomenological approach to document the ethical issues experienced by a heterogeneous group of Canadian researchers, REB members, and research ethics experts. Data collection involved socio-demographic questionnaires and individual semi-structured interviews. Following the triangulation of different perspectives (researchers, REB members and ethics experts), emerging ethical issues were synthesized in ten units of meaning: (1) research integrity, (2) conflicts of interest, (3) respect for research participants, (4) lack of supervision and power imbalances, (5) individualism and performance, (6) inadequate ethical guidance, (7) social injustices, (8) distributive injustices, (9) epistemic injustices, and (10) ethical distress. This study highlighted several problematic elements that can support the identification of future solutions to resolve transversal ethical issues in research that affect the heterogeneous members of the academic community.

Introduction

Research includes a set of activities in which researchers use various structured methods to contribute to the development of knowledge, whether this knowledge is theoretical, fundamental, or applied (Drolet & Ruest, accepted). University research is carried out in a highly competitive environment that is characterized by ever-increasing demands (e.g., on time, productivity), insufficient access to research funds, and a market economy that values productivity and speed, often to the detriment of quality or rigour. This research context creates a perfect recipe for breaches in research ethics, like research misbehaviour or misconduct (i.e., conduct that is ethically questionable or unacceptable because it contravenes the accepted norms of responsible conduct of research or compromises the respect of core ethical values that are widely held by the research community) (Drolet & Girard, 2020; Sieber, 2004). Problematic ethics and integrity issues – e.g., conflicts of interest, falsification of data, non-respect of participants’ rights, and plagiarism, to name but a few – have the potential to both undermine the credibility of research and lead to negative consequences for many stakeholders, including researchers, research assistants and personnel, research participants, academic institutions, and society as a whole (Drolet & Girard, 2020). It is thus evident that the academic community should be able to identify these different ethical issues in order to evaluate the nature of the risks that they pose (and for whom), and then work towards their prevention or management (e.g., education, enhanced policies and procedures, risk mitigation strategies).

In this article, we define an “ethical issue” as any situation that may compromise, in whole or in part, the respect of at least one moral value (Swisher et al., 2005) that is considered socially legitimate and should thus be respected. In general, ethical issues occur at three key moments or stages of the research process: (1) research design (i.e., conception, project planning), (2) research conduct (i.e., data collection, data analysis) and (3) knowledge translation or communication (e.g., publications of results, conferences, press releases) (Drolet & Ruest, accepted). According to Sieber (2004), ethical issues in research can be classified into five categories, related to: (a) communication with participants and the community, (b) acquisition and use of research data, (c) external influence on research, (d) risks and benefits of the research, and (e) selection and use of research theories and methods. Many of these issues are related to breaches of research ethics norms, misbehaviour or research misconduct. Bruhn et al. (2002) developed a typology of misbehaviour and misconduct in academia that can be used to judge the seriousness of different cases. This typology takes into consideration two axes of reflection: (a) the origin of the situation (i.e., is it the researcher’s own fault or due to the organizational context?), and (b) the scope and severity (i.e., is this the first instance or a recurrent behaviour? What is the nature of the situation? What are the consequences, for whom, for how many people, and for which organizations?).

A previous detailed review of the international literature on ethical issues in research revealed several interesting findings (Beauchemin et al., 2021). Indeed, the current literature is dominated by descriptive ethics, i.e., the sharing by researchers from various disciplines of the ethical issues they have personally experienced. While such anecdotal documentation is relevant, it is insufficient because it does not provide a global view of the situation. Among the reviewed literature, empirical studies were in the minority (Table 1) – only about one fifth of the sample (n = 19) presented empirical research findings on ethical issues in research. The first of these studies was conducted almost 50 years ago (Hunt et al., 1984), with the remainder conducted in the 1990s. Eight studies were conducted in the United States (n = 8), five in Canada (n = 5), three in England (n = 3), two in Sweden (n = 2) and one in Ghana (n = 1).

Table 1. Summary of empirical studies on ethical issues in research, by year of publication

Further, the majority of studies in our sample (n = 12) collected the perceptions of a homogeneous group of participants, usually researchers (n = 14) and sometimes health professionals (n = 6). A minority of studies (n = 7) triangulated the perceptions of diverse research stakeholders (i.e., researchers and research participants, or students). To our knowledge, only one study has examined perceptions of ethical issues in research by research ethics board members (REB; Institutional Review Boards [IRB] in the USA), and none to date have documented the perceptions of research ethics experts. Finally, nine studies (n = 9) adopted a qualitative design, seven studies (n = 7) a quantitative design, and three (n = 3) a mixed-methods design.

More studies using empirical research methods are needed to better identify broader trends, to enrich discussions on the values that should govern responsible conduct of research in the academic community, and to evaluate the means by which these values can be supported in practice (Bahn, 2012; Beauchemin et al., 2021; Bruhn et al., 2002; Henderson et al., 2013; Resnik & Elliot, 2016; Sieber, 2004). To this end, we conducted an empirical qualitative study to document the perceptions and experiences of a heterogeneous group of Canadian researchers, REB members, and research ethics experts, to answer the following broad question: What are the ethical issues in research?

Research Methods

Research design

A qualitative research approach involving individual semi-structured interviews was used to systematically document ethical issues (De Poy & Gitlin, 2010; Hammell et al., 2000). Specifically, a descriptive phenomenological approach inspired by the philosophy of Husserl was used (Husserl, 1970, 1999), as it is recommended for documenting the perceptions of ethical issues raised by various practices (Hunt & Carnevale, 2011).

Ethical considerations

The principal investigator obtained ethics approval for this project from the Research Ethics Board of the Université du Québec à Trois-Rivières (UQTR). All members of the research team signed a confidentiality agreement, and research participants signed the consent form after reading an information letter explaining the nature of the research project.

Sampling and recruitment

As indicated above, three types of participants were sought: (1) researchers from different academic disciplines conducting research (i.e., theoretical, fundamental or empirical) in Canadian universities; (2) REB members working in Canadian organizations responsible for the ethical review, oversight or regulation of research; and (3) research ethics experts, i.e., academics or ethicists who teach research ethics, conduct research in research ethics, or are scholars who have acquired a specialization in research ethics. To be included in the study, participants had to work in Canada, speak and understand English or French, and be willing to participate in the study. Thomas and Pollio (2002) recommend recruiting between six and twelve participants (for a homogeneous sample) to ensure data saturation; for our heterogeneous sample, we therefore aimed to recruit approximately twelve participants. In our experience of using this method in related projects in professional ethics, data saturation is usually achieved with 10 to 15 participants (Drolet & Goulet, 2018; Drolet & Girard, 2020; Drolet et al., 2020). From experience, larger samples only serve to increase the degree of data saturation, especially in heterogeneous samples (Drolet et al., 2017, 2019; Drolet & Maclure, 2016).

Purposive sampling facilitated the identification of participants relevant to documenting the phenomenon in question (Fortin, 2010). To ensure a rich and as complete as possible representation of perceptions, we sought participants with varied and complementary characteristics with regard to the social roles they occupy in research practice (Drolet & Girard, 2020). A triangulation of sources was used for the recruitment (Bogdan & Biklen, 2006). The websites of Canadian universities and Canadian health institution REBs, as well as those of major Canadian granting agencies (i.e., the Canadian Institutes of Health Research, the Natural Sciences and Engineering Research Council of Canada, the Social Sciences and Humanities Research Council of Canada, and the Fonds de recherche du Québec), were searched to identify individuals who might be interested in participating in the study. Further, people known by the research team for their knowledge of and sensitivity to ethical issues in research were asked to participate. Research participants were also asked to suggest other individuals who met the study criteria.

Data Collection

Two tools were used for data collection: (a) a socio-demographic questionnaire, and (b) a semi-structured individual interview guide. English and French versions of these two documents were made available, depending on participant preferences. In addition, although the interview guides contained the same questions, these were adapted to participants’ specific roles (i.e., researcher, REB member, research ethics expert). When contacted by email by the research assistant, participants were asked to confirm under which role they wished to participate (because some participants might have multiple, overlapping responsibilities) and they were sent the appropriate interview guide.

The interview guides each had two parts: an introduction and a section on ethical issues. The introduction consisted of general questions to put the participant at ease (e.g., “Tell me what a typical day at work is like for you”). The section on ethical issues was designed to capture the participant’s perceptions through questions such as: “Tell me three stories you have experienced at work that involve an ethical issue?” and “Do you feel that your organization is doing enough to address, manage, and resolve ethical issues in your work?”. Although some interviews were conducted in person, the majority were conducted by videoconference to promote accessibility and because of the COVID-19 pandemic. Interviews were digitally recorded so that they could be transcribed verbatim in full. They lasted between 40 and 120 min, with an average of 90 min. Research assistants conducted and transcribed the interviews.

Data Analysis

The socio-demographic questionnaires were subjected to simple descriptive statistical analyses (i.e., means and totals), and the semi-structured interviews were subjected to qualitative analysis. The steps proposed by Giorgi (1997) for a Husserlian phenomenological reduction of the data were used. After the interviews had been collected, recorded, and transcribed, all transcripts were analyzed by at least two analysts: a research assistant (2nd author of this article) and the principal investigator (1st author) or a postdoctoral fellow (3rd author). Repeated reading of each transcript allowed the first analyst to write a synopsis, i.e., an initial extraction of units of meaning. The second analyst then read the synopses, commenting on and improving them where necessary. Agreement between analysts allowed the final drafting of the interview synopses, which were then analyzed by three analysts to generate and organize the units of meaning that emerged from the qualitative data.

Participants

Sixteen individuals (n = 16) participated in the study, of whom nine (9) identified as female and seven (7) as male (Table 2). Participants ranged in age from 22 to 72 years, with a mean age of 47.5 years. Participants had between one (1) and 26 years of experience in the research setting, with an average of 14.3 years of experience. Participants held a variety of roles, including: REB member (n = 11), researcher (n = 10), research ethics expert (n = 4), and research assistant (n = 1). As mentioned previously, seven (7) participants held more than one role, i.e., REB member, research ethics expert, and researcher. The majority (87.5%) of participants were working in Quebec, with the remainder working in other Canadian provinces. Although all participants considered themselves to be francophone, one quarter (n = 4) identified themselves as belonging to a cultural minority group.

Table 2. Description of participants

With respect to their academic background, most participants (n = 9) had a PhD, three (3) had a post-doctorate, two (2) had a master’s degree, and two (2) had a bachelor’s degree. Participants came from a variety of disciplines: nine (9) had a specialty in the humanities or social sciences, four (4) in the health sciences and three (3) in the natural sciences. In terms of their knowledge of ethics, five (5) participants reported having taken one university course entirely dedicated to ethics, four (4) reported having taken several university courses entirely dedicated to ethics, three (3) had a university degree dedicated to ethics, while two (2) only had a few hours or days of training in ethics and two (2) reported having no knowledge of ethics.

Ethical issues

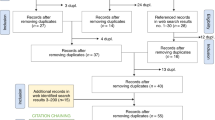

As Fig. 1 illustrates, ten units of meaning emerge from the data analysis, namely: (1) research integrity, (2) conflicts of interest, (3) respect for research participants, (4) lack of supervision and power imbalances, (5) individualism and performance, (6) inadequate ethical guidance, (7) social injustices, (8) distributive injustices, (9) epistemic injustices, and (10) ethical distress. To illustrate the results, excerpts from verbatim interviews are presented in the following sub-sections. Most of the excerpts have been translated into English as the majority of interviews were conducted with French-speaking participants.

Fig. 1. Ethical issues in research according to the participants

Research Integrity

The research environment is highly competitive and performance-based. Several participants, in particular researchers and research ethics experts, felt that this environment can lead both researchers and research teams to engage in unethical behaviour that reflects a lack of research integrity. For example, as some participants indicated, competition for grants and scientific publications is sometimes so intense that researchers falsify research results or plagiarize from colleagues to achieve their goals.

Some people will lie or exaggerate their research findings in order to get funding. Then, you see it afterwards, you realize: “ah well, it didn’t work, but they exaggerated what they found and what they did” (participant 14).

Another problem in research is the identification of authors when there is a publication. Very often, there are authors who don’t even know what the publication is about and that their name is on it. (…) The time that it surprised me the most was just a few months ago when I saw someone I knew who applied for a teaching position. He got it. I was super happy for him. Then I looked at his publications and … there was one that caught my attention much more than the others, because I was in it and I didn’t know what that publication was. I was the second author of a publication that I had never read (participant 14).

I saw a colleague who had plagiarized another colleague. [When the colleague] found out about it, he complained. So, plagiarism is a serious [ethical breach]. I would also say that there is a certain amount of competition in the university faculties, especially for grants (…). There are people who want to win at all costs or get as much as possible. They are not necessarily going to consider their colleagues. They don’t have much of a collegial spirit (participant 10).

These examples of research misbehaviour or misconduct are sometimes due to or associated with situations of conflicts of interest, which may be poorly managed by certain researchers or research teams, as noted by many participants.

Conflicts of interest

The actors and institutions involved in research have diverse interests, like all humans and institutions. As noted in Chapter 7 of the Canadian Tri-Council Policy Statement: Ethical Conduct for Research Involving Humans (TCPS2, 2018),

“researchers and research students hold trust relationships, either directly or indirectly, with participants, research sponsors, institutions, their professional bodies and society. These trust relationships can be put at risk by conflicts of interest that may compromise independence, objectivity or ethical duties of loyalty. Although the potential for such conflicts has always existed, pressures on researchers (i.e., to delay or withhold dissemination of research outcomes or to use inappropriate recruitment strategies) heighten concerns that conflicts of interest may affect ethical behaviour” (p. 92).

The sources of these conflicts are varied and can include interpersonal conflicts, financial partnerships, third-party pressures, academic or economic interests, a researcher holding multiple roles within an institution, or any other incentive that may compromise a researcher’s independence, integrity, and neutrality (TCPS2, 2018). While it is not possible to eliminate all conflicts of interest, it is important to manage them properly and to avoid temptations to behave unethically.

Ethical temptations correspond to situations in which people are tempted to prioritize their own interests to the detriment of the ethical goods that should, in their own context, govern their actions (Swisher et al., 2005). In the case of researchers, this refers to situations that undermine independence, integrity, neutrality, or even the set of principles that govern research ethics (TCPS2, 2018) or the responsible conduct of research. According to study participants, these types of ethical issues frequently occur in research. Many participants, especially researchers and REB members, reported that conflicts of interest can arise when members of an organization make decisions to obtain large financial rewards or to increase their academic profile, often at the expense of the interests of members of their research team, research participants, or even the populations affected by their research.

A company that puts money into making its drug work wants its drug to work. So, homeopathy is a good example, because there are not really any consequences of homeopathy, there are not very many side effects, because there are no effects at all. So, it’s not dangerous, but it’s not a good treatment either. But some people will want to make it work. And that’s a big issue when you’re sitting at a table and there are eight researchers, and there are two or three who are like that, and then there are four others who are neutral, and I say to myself, this is not science. I think that this is a very big ethical issue (participant 14).

There are also times in some research where there will be more links with pharmaceutical companies. Obviously, there are then large amounts of money that will be very interesting for the health-care institutions because they still receive money for clinical trials. They’re still getting some compensation because it’s time consuming for the people involved and all that. The pharmaceutical companies have money, so they will compensate, and that is sometimes interesting for the institutions, and since we are a bit caught up in this, in the sense that we have no choice but to accept it. (…) It may not be the best research in the world, there may be a lot of side effects due to the drugs, but it’s good to accept it, we’re going to be part of the clinical trial (participant 3).

It is integrity, what we believe should be done or said. Often by the pressure of the environment, integrity is in tension with the pressures of the environment, so it takes resistance, it takes courage in research. (…) There were all the debates there about the problems of research that was funded and then the companies kept control over what was written. That was really troubling for a lot of researchers (participant 5).

Further, these situations sometimes have negative consequences for research participants as reported by some participants.

Respect for research participants

Many research projects, whether they are psychosocial or biomedical in nature, involve human participants. Relationships between the members of research teams and their research participants raise ethical issues that can be complex. Research projects must always be designed to respect the rights and interests of research participants, and not just those of researchers. However, participants in our study – i.e., REB members, researchers, and research ethics experts – noted that some research teams seem to put their own interests ahead of those of research participants. They also emphasized the importance of ensuring the respect, well-being, and safety of research participants. The ethical issues related to this unit of meaning are: respect for free, informed and ongoing consent of research participants; respect for and the well-being of participants; data protection and confidentiality; over-solicitation of participants; ownership of the data collected on participants; the sometimes high cost of scientific innovations and their accessibility; balance between the social benefits of research and the risks to participants (particularly in terms of safety); balance between collective well-being (development of knowledge) and the individual rights of participants; exploitation of participants; paternalism when working with populations in vulnerable situations; and the social acceptability of certain types of research. The following excerpts present some of these issues.

Where it disturbs me ethically is in the medical field – because it’s more in the medical field that we’re going to see this – when consent forms are presented to patients to solicit them as participants, and then [these forms] have an average of 40 pages. That annoys me. When they say that it has to be easy to understand and all that, adapted to the language, and then the hyper-technical language plus there are 40 pages to read, I don’t understand how you’re going to get informed consent after reading 40 pages. (…) For me, it doesn’t work. I read them to evaluate them and I have a certain level of education and experience in ethics, and there are times when I don’t understand anything (participant 2).

There is a lot of pressure from researchers who want to recruit research participants (…). The idea that when you enter a health care institution, you become a potential research participant, when you say “yes to a research, you check yes to all research”, then everyone can ask you. I think that researchers really have this fantasy of saying to themselves: “as soon as people walk through the door of our institution, they become potential participants with whom we can communicate and get them involved in all projects”. There’s a kind of idea that, yes, it can be done, but it has to be somewhat supervised to avoid over-solicitation (…). Researchers are very interested in facilitating recruitment and making it more fluid, but perhaps to the detriment of confidentiality, privacy, and respect; sometimes that’s what it is, to think about what type of data you’re going to have in your bank of potential participants? Is it just name and phone number or are you getting into more sensitive information? (participant 9).

In addition, one participant reported that their university does not provide the resources required to respect the confidentiality of research participants.

The issue is as follows: researchers, of course, commit to protecting data with passwords and all that, but we realize that in practice, it is more difficult. It is not always as protected as one might think, because professor-researchers will run out of space. Will the universities make rooms available to researchers, places where they can store these things, especially when they have paper documentation, and is there indeed a guarantee of confidentiality? Some researchers have told me: “Listen; there are even filing cabinets in the corridors”. So, that certainly poses a concrete challenge. How do we go about challenging the administrative authorities? Tell them it’s all very well to have an ethics committee, but you have to help us, you also have to make sure that the necessary infrastructures are in place so that what we are proposing is really put into practice (participant 4).

If the relationships with research participants are likely to raise ethical issues, so too are the relationships with students, notably research assistants. On this topic, several participants discussed the lack of supervision or recognition offered to research assistants by researchers as well as the power imbalances between members of the research team.

Lack of Supervision and Power Imbalances

Many research teams are composed not only of researchers, but also of students who work as research assistants. The relationship between research assistants and other members of research teams can sometimes be problematic and raise ethical issues, particularly because of the inevitable power asymmetries. In the context of this study, several participants – including a research assistant, REB members, and researchers – discussed the lack of supervision or recognition of the work carried out by students, psychological pressure, and the more or less well-founded promises that are sometimes made to students. Participants also mentioned the exploitation of students by certain research teams, which manifest when students are inadequately paid, i.e., not reflective of the number of hours actually worked, not a fair wage, or even a wage at all.

[As a research assistant], it was more of a feeling of distress that I felt then because I didn’t know what to do. (…) I was supposed to get coaching or be supported, but I didn’t get anything in the end. It was like, “fix it by yourself”. (…) All research assistants were supposed to be supervised, but in practice they were not (participant 1).

Very often, we have a master’s or doctoral student that we put on a subject and we consider that the project will be well done, while the student is learning. So, it happens that the student will do a lot of work and then we realize that the work is poorly done, and it is not necessarily the student’s fault. He wasn’t necessarily well supervised. There are directors who have 25 students, and they just don’t supervise them (participant 14).

I think it’s really the power relationship. I thought to myself, how I saw my doctorate, the beginning of my research career, I really wanted to be in that laboratory, but they are the ones who are going to accept me or not, so what do I do to be accepted? I finally accept their conditions [which was to work for free]. If these are the conditions that are required to enter this lab, I want to go there. So, what do I do, well I accepted. It doesn’t make sense, but I tell myself that I’m still privileged, because I don’t have so many financial worries, one more reason to work for free, even though it doesn’t make sense (participant 1).

In research, we have research assistants. (…). The fact of using people… so that’s it, you have to take into account where they are, respect them, but at the same time they have to show that they are there for the research. In English, we say “carry” or take care of people. With research assistants, this is often a problem that I have observed: for grant machines, the person is the last to be found there. Researchers, who will take, use student data, without giving them the recognition for it (participant 5).

The problem at our university is that they reserve funding for Canadian students. The doctoral clientele in my field is mostly foreign students. So, our students are poorly funded. I saw one student end up in the shelter, in a situation of poverty. It ended very badly for him because he lacked financial resources. Once you get into that dynamic, it’s very hard to get out. I was made aware of it because the director at the time had taken him under her wing and wanted to try to find a way to get him out of it. So, most of my students didn’t get funded (participant 16).

There I wrote “manipulation”, but it’s kind of all promises all the time. I, for example, was promised a lot of advancement, like when I got into the lab as a graduate student, it was said that I had an interest in [this particular area of research]. I think there are a lot of graduate students who must have gone through that, but it is like, “Well, your CV has to be really good, if you want to do a lot of things and big things. If you do this, if you do this research contract, the next year you could be the coordinator of this part of the lab and supervise this person, get more contracts, be paid more. Let’s say: you’ll be invited to go to this conference, this big event”. They were always dangling something, but you have to do that first to get there. But now, when you’ve done that, you have to do this business. It’s like a bit of manipulation, I think. That was very hard to know who is telling the truth and who is not (participant 1).

These ethical issues have significant negative consequences for students. Indeed, they sometimes find themselves at the mercy of researchers, for whom they work, struggling to be recognized and included as authors of an article, for example, or to receive the salary that they are due. For their part, researchers also sometimes find themselves trapped in research structures that can negatively affect their well-being. As many participants reported, researchers work in organizations that set very high productivity standards and in highly competitive contexts, all within a general culture characterized by individualism.

Individualism and performance

Participants, especially researchers, discussed the culture of individualism and performance that characterizes the academic environment. In glorifying excellence, some universities value performance and productivity, often at the expense of psychological well-being and work-life balance (i.e., work overload and burnout). Participants noted that there are ethical silences in their organizations on this issue, and that the culture of individualism and performance is not challenged for fear of retribution or simply to survive, i.e., to perform as expected. Participants felt that this culture can have a significant negative impact on the quality of the research conducted, as research teams try to maximize the quantity of their work (instead of quality) in a highly competitive context, which is then exacerbated by a lack of resources and support, and where everything must be done too quickly.

The work-life balance with the professional ethics related to work in a context where you have too much and you have to do a lot, it is difficult to balance all that and there is a lot of pressure to perform. If you don’t produce enough, that’s it; after that, you can’t get any more funds, so that puts pressure on you to do more and more and more (participant 3).

There is a culture, I don’t know where it comes from, and that is extremely bureaucratic. If you dare to raise something, you’re going to have many, many problems. They’re going to make you understand it. So, I don’t talk. It is better: your life will be easier. I think there are times when you have to talk (…) because there are going to be irreparable consequences. (…) I’m not talking about a climate of terror, because that’s exaggerated, it’s not true, people are not afraid. But people close their office door and say nothing because it’s going to make their work impossible and they’re not going to lose their job, they’re not going to lose money, but researchers need time to be focused, so they close their office door and say nothing (participant 16).

Researchers must produce more and more, yet they feel little support regarding how to do so ethically and how much exactly they are expected to produce. As this participant reports, the expectation is an unspoken rule: more is always better.

It’s sometimes the lack of a clear line on what the expectations are as a researcher, like, “ah, we don’t have any specific expectations, but produce, produce, produce, produce.” So, in that context, it’s hard to be able to put the line precisely: “have I done enough for my work?” (participant 3).

Inadequate ethical guidance

While the productivity expectation is not clear, some participants – including researchers, research ethics experts, and REB members – also felt that the ethical expectations of some REBs were unclear. The issue of the inadequate ethical guidance of research includes the administrative mechanisms to ensure that research projects respect the principles of research ethics. According to those participants, the forms required for both researchers and REB members are increasingly long and numerous, and one participant noted that the standards to be met are sometimes outdated and disconnected from the reality of the field. Multicentre ethics review (by several REBs) was also critiqued by a participant as an inefficient method that encumbers the processes for reviewing research projects. Bureaucratization imposes an ever-increasing number of forms and ethics guidelines that actually hinder researchers’ ethical reflection on the issues at stake, leading the ethics review process to be perceived as purely bureaucratic in nature.

The ethical dimension and the ethical review of projects have become increasingly bureaucratized. (…) When I first started working (…) it was less bureaucratic, less strict then. I would say [there are now] tons of forms to fill out. Of course, we can’t do without it, it’s one of the ways of marking out ethics and ensuring that there are ethical considerations in research, but I wonder if it hasn’t become too bureaucratized, so that it’s become a kind of technical reflex to fill out these forms, and I don’t know if people really do ethical reflection as such anymore (participant 10).

The fundamental structural issue, I would say, is the mismatch between the normative requirements and the real risks posed by the research, i.e., we have many, many requirements to meet; we have very long forms to fill out but the research projects we evaluate often pose few risks (participant 8).

People [in vulnerable situations] were previously unable to participate because of overly strict research ethics rules that were to protect them, but in the end [these rules] did not protect them. There was a perverse effect, because in the end there was very little research done with these people and that’s why we have very few results, very little evidence [to support practices with these populations] so it didn’t improve the quality of services. (…) We all understand that we have to be careful with that, but when the research is not too risky, we say to ourselves that it would be good because for once a researcher who is interested in that population, because it is not a very popular population, it would be interesting to have results, but often we are blocked by the norms, and then we can’t accept [the project] (participant 2).

Moreover, as one participant noted, accessing ethics training can be a challenge.

There is no course on research ethics. […] Then, I find that it’s boring because you go through university and you come to do your research and you know how to do quantitative and qualitative research, but all the research ethics, where do you get this? I don’t really know (participant 13).

Yet, such training could provide relevant tools to resolve, to some extent, the ethical issues that commonly arise in research. That said, and as noted by many participants, many ethical issues in research are related to social injustices over which research actors have little influence.

Social injustices

For many participants, notably researchers, the issues that concern social injustices are those related to power asymmetries, stigma, or issues of equity, diversity, and inclusion, i.e., social injustices related to people’s identities (Blais & Drolet, 2022). Participants reported experiencing or witnessing discrimination from peers, administration, or lab managers. Such oppression is sometimes intersectional and related to a person’s age, cultural background, gender or social status.

I have my African colleague who was quite successful when he arrived but had a backlash from colleagues in the department. I think it’s unconscious, nobody is overtly racist. But I have a young person right now who is the same, who has the same success, who got exactly the same early career award and I don’t see the same backlash. He’s just as happy with what he’s doing. It’s normal, they’re young and they have a lot of success starting out. So, I think there is discrimination. Is it because he is African? Is it because he is black? I think it’s on a subconscious level (participant 16).

Social injustices were experienced or reported by many participants, and included issues related to difficulties in obtaining grants or disseminating research results in one’s native language (i.e., even when there is official bilingualism) or being considered credible and fundable in research when a researcher is a woman.

If you do international research, there are things you can’t talk about (…). It is really a barrier to research to not be able to (…) address this question [i.e. the question of inequalities between men and women]. Women’s inequality is going to be addressed [but not within the country where the research takes place as if this inequality exists elsewhere but not here]. There are a lot of women working on inequality issues, doing work and it’s funny because I was talking to a young woman who works at Cairo University and she said to me: “Listen, I saw what you had written, you’re right. I’m willing to work on this but guarantee me a position at your university with a ticket to go”. So yes, there are still many barriers [for women in research] (participant 16).

Because of the varied contextual characteristics that intervene in their occurrence, these social injustices are also related to distributive injustices, as discussed by many participants.

Distributive injustices

Although there are several views of distributive justice, a classical definition, such as that of Aristotle (2012), describes distributive justice as consisting in distributing honours, wealth, and other social resources or benefits among the members of a community in proportion to their alleged merit. Justice, then, is about determining an equitable distribution of common goods. Contemporary theories of distributive justice are numerous and varied. Indeed, many authors (e.g., Fraser, 2011; Mills, 2017; Sen, 2011; Young, 2011) have, since Rawls (1971), proposed different visions of how social burdens and benefits should be shared within a community to ensure equal respect, fairness, and distribution. In our study, what emerges from participants’ narratives is a definite concern for this type of justice. Women researchers, francophone researchers, early career researchers or researchers belonging to racialized groups all discussed inequities in the distribution of research grants and awards, and the extra work they need to do to somehow prove their worth. These inequities are related to how granting agencies determine which projects will be funded.

These situations make me work 2–3 times harder to prove myself and to show people in power that I have a place as a woman in research (participant 12).

Number one: it’s conservative thinking. The older ones control what comes in. So, the younger people have to adapt or they don’t get funded (participant 14).

Whether it is discrimination against stigmatized or marginalized populations or interest in certain hot topics, granting agencies judge research projects according to criteria that are sometimes questionable, according to those participants. Faced with difficulties in obtaining funding for their projects, several strategies – some of which are unethical – are used by researchers in order to cope with these situations.

Sometimes there are subjects that everyone goes to, such as nanotechnology (…), artificial intelligence or (…) the therapeutic use of cannabis, which are very fashionable, and this is sometimes to the detriment of other research that is just as relevant, but which is (…), less sexy, less in the spirit of the time. (…) Sometimes this can lead to inequities in the funding of certain research sectors (participant 9).

When we use our funds, we get them given to us, we pretty much say what we think we’re going to do with them, but things change… So, when these things change, sometimes it’s an ethical decision, but by force of circumstances I’m obliged to change the project a little bit (…). Is it ethical to make these changes or should I just let the money go because I couldn’t use it the way I said I would? (participant 3).

Moreover, these distributive injustices are linked not only to social injustices, but also to epistemic injustices. Indeed, the way in which research honours and grants are distributed within the academic community depends on the epistemic authority of the researchers, which seems to vary notably according to their language of use, their age or their gender, but also to the research design used (inductive versus deductive), their decision to use (or not use) animals in research, or to conduct activist research.

Epistemic injustices

The philosopher Fricker (2007) conceptualized the notions of epistemic justice and injustice. Epistemic injustice refers to a form of social inequality that manifests itself in the access, recognition, and production of knowledge as well as the various forms of ignorance that arise (Godrie & Dos Santos, 2017). Addressing epistemic injustice necessitates acknowledging the iniquitous wrongs suffered by certain groups of socially stigmatized individuals who have been excluded from knowledge, thus limiting their abilities to interpret, understand, or be heard and account for their experiences. In this study, epistemic injustices were experienced or reported by some participants, notably those related to difficulties in obtaining grants or disseminating research results in one’s native language (i.e., even when there is official bilingualism) or being considered credible and fundable in research when a researcher is a woman or an early career researcher.

I have never sent a grant application to the federal government in English. I have always done it in French, even though I know that when you receive the review, you can see that reviewers didn’t understand anything because they are English-speaking. I didn’t want to get in the boat. It’s not my job to translate, because let’s be honest, I’m not as good in English as I am in French. So, I do them in my first language, which is the language I’m most used to. Then, technically at the administrative level, they are supposed to be able to do it, but they are not good in French. (…) Then, it’s a very big Canadian ethical issue, because basically there are technically two official languages, but Canada is not a bilingual country, it’s a country with two languages, either one or the other. (…) So I was not funded (participant 14).

Researchers who use inductive (or qualitative) methods observed that their projects are sometimes less well reviewed or understood, while research that adopts a hypothetical-deductive (or quantitative) or mixed methods design is better perceived, considered more credible and therefore more easily funded. Of course, regardless of whether a research project adopts an inductive, deductive or mixed-methods scientific design, or whether it deals with qualitative or quantitative data, it must respect a set of scientific criteria. A research project should achieve its objectives by using proven methods that, in the case of inductive research, are credible, reliable, and transferable or, in the case of deductive research, generalizable, objective, representative, and valid (Drolet & Ruest, accepted). Participants discussing these issues noted that researchers who adopt a qualitative design or those who question the relevance of animal experimentation or are not militant have sometimes been unfairly devalued in their epistemic authority.

There is a mini war between quantitative versus qualitative methods, which I think is silly because science is a method. If you apply the method well, it doesn’t matter what the field is, it’s done well and it’s perfect (participant 14).

There is also the issue of the place of animals in our lives, because for me, ethics is human ethics, but also animal ethics. Then, there is a great evolution in society on the role of the animal… with the new law that came out in Quebec on the fact that animals are sensitive beings. Then, with the rise of the vegan movement, [we must ask ourselves]: “Do animals still have a place in research?” That’s a big question and it also means that there are practices that need to evolve, but sometimes there’s a disconnection between what’s expected by research ethics boards versus what’s expected in the field (participant 15).

In research today, we have more and more research that is militant from an ideological point of view. And so, we have researchers, because they defend values that seem important to them, we’ll talk for example about the fight for equality and social justice. They have pressure to defend a form of moral truth and have the impression that everyone thinks like them or should do so, because they are defending a moral truth. This is something that we see more and more, namely the lack of distance between ideology and science (participant 8).

The combination or intersectionality of these inequities, which seems to be characterized by a lack of ethical support and guidance, is experienced in the highly competitive and individualistic context of research; it provides therefore the perfect recipe for researchers to experience ethical distress.

Ethical distress

The concept of “ethical distress” refers to situations in which people know what they should do to act ethically, but encounter barriers, generally of an organizational or systemic nature, limiting their power to act according to their moral or ethical values (Drolet & Ruest, 2021; Jameton, 1984; Swisher et al., 2005). People then run the risk of finding themselves in a situation where they do not act as their ethical conscience dictates, which in the long term has the potential for exhaustion and distress. The examples reported by participants in this study point to the fact that researchers in particular may be experiencing significant ethical distress. This distress takes place in a context of extreme competition and constant injunctions to perform, where administrative demands are increasingly numerous and complex to complete while, paradoxically, researchers lack the time to accomplish all their tasks and responsibilities. Added to these demands are a lack of resources (human, ethical, and financial), a lack of support and recognition, and interpersonal conflicts.

We are in an environment, an elite one, you are part of it, you know what it is: “publish or perish” is the motto. Grants, there is a high level of performance required, to do a lot, to publish, to supervise students, to supervise them well, so yes, it is clear that we are in an environment that is conducive to distress. (…). Overwork, definitely, can lead to distress and eventually to exhaustion. When you know that you should take the time to read the projects before sharing them, but you don’t have the time to do that because you have eight that came in the same day, and then you have others waiting… Then someone rings a bell and says: “ah but there, the protocol is a bit incomplete”. Oh yes, look at that, you’re right. You make up for it, but at the same time it’s a bit because we’re in a hurry, we don’t necessarily have the resources or are able to take the time to do things well from the start, we have to make up for it later. So yes, it can cause distress (participant 9).

My organization wanted me to apply in English, and I said no, and everyone in the administration wanted me to apply in English, and I always said no. Some people said: “Listen, I give you the choice”, then some people said: “Listen, I agree with you, but if you’re not [submitting] in English, you won’t be funded”. Then the fact that I am young too, because very often they will look at the CV, they will not look at the project: “ah, his CV is not impressive, we will not finance him”. This is complete nonsense. The person is capable of doing the project, the project is fabulous: we fund the project. So, that happened, organizational barriers: that happened a lot. I was not eligible for Quebec research funds (…). I had big organizational barriers unfortunately (participant 14).

At the time of my promotion, some colleagues were not happy with the type of research I was conducting. I learned – you learn this over time when you become friends with people after you enter the university – that someone was against me. He had another candidate in mind, and he was angry about the selection. I was under pressure for the first three years until my contract was renewed. I almost quit at one point, but another colleague told me, “No, stay, nothing will happen”. Nothing happened, but these issues kept me awake at night (participant 16).

This difficult context for many researchers affects not only the conduct of their own research, but also their participation in research. We faced this problem in our study, despite the use of multiple recruitment methods, including more than 200 emails – of which 191 were individual solicitations – sent to potential participants by the two research assistants. REB members and organizations overseeing or supporting research (n = 17) were also approached to see if some of their employees would consider participating. While it was relatively easy to recruit REB members and research ethics experts, our team received a high number of non-responses to emails (n = 175) and some refusals (n = 5), especially by researchers. The reasons given by those who replied were threefold: (a) fear of being easily identified should they take part in the research, (b) being overloaded and lacking time, and (c) the intrusive aspect of certain questions (i.e., “Have you experienced a burnout episode? If so, have you been followed up medically or psychologically?”). In light of these difficulties and concerns, some questions in the socio-demographic questionnaire were removed or modified. Talking about burnout in research remains a taboo for many researchers, which paradoxically can only contribute to the unresolved problem of unhealthy research environments.

Returning to the research question and objective

The question that prompted this research was: What are the ethical issues in research? The purpose of the study was to describe these issues from the perspective of researchers (from different disciplines), research ethics board (REB) members, and research ethics experts. The previous section provided a detailed portrait of the ethical issues experienced by different research stakeholders: these issues are numerous, diverse and were recounted by a range of stakeholders.

The results of the study are generally consistent with the literature. For example, as in our study, the literature discusses the lack of research integrity on the part of some researchers (Al-Hidabi et al., 2018; Swazey et al., 1993), the numerous conflicts of interest experienced in research (Williams-Jones et al., 2013), the issues of recruiting and obtaining the free and informed consent of research participants (Provencher et al., 2014; Keogh & Daly, 2009), the sometimes difficult relations between researchers and REBs (Drolet & Girard, 2020), the epistemological issues experienced in research (Drolet & Ruest, accepted; Sieber, 2004), as well as the harmful academic context in which researchers work, insofar as this is linked to a culture of performance and an overload of work in a context of accountability (Berg & Seeber, 2016; FQPPU, 2019) that is conducive to ethical distress and even burnout.

If the results of the study are generally in line with those of previous publications on the subject, our findings also bring new elements to the discussion while complementing those already documented. In particular, our results highlight the role of systemic injustices – be they social, distributive or epistemic – within the environments in which research is carried out, at least in Canada. To summarize, the results of our study point to the fact that the relationships between researchers and research participants are likely still to raise worrying ethical issues, despite widely accepted research ethics norms and institutionalized review processes. Further, the context in which research is carried out is not only conducive to breaches of ethical norms and instances of misbehaviour or misconduct, but also likely to be significantly detrimental to the health and well-being of researchers, as well as research assistants. Another element that our research also highlighted is the instrumentalization and even exploitation of students and research assistants, which is another important and worrying social injustice given the inevitable power imbalances between students and researchers.

Moreover, in a context in which ethical issues are often discussed from a micro perspective, our study helps shed light on both the micro- and macro-level ethical dimensions of research (Bronfenbrenner, 1979; Glaser, 1994). However, given that ethical issues in research are not only diverse, but also and above all complex, a broader perspective that encompasses the interplay between the micro and macro dimensions can enable a better understanding of these issues and thereby support the identification of the multiple factors that may be at their origin. Triangulating the perspectives of researchers with those of REB members and research ethics experts enabled us to bring these elements to light, and thus to step back from and critique the way that research is currently conducted. To this end, attention to socio-political elements such as the performance culture in academia or how research funds are distributed, and according to what explicit and implicit criteria, can contribute to identifying the sources of the ethical issues described above.

Contemporary culture characterized by social acceleration

The German sociologist and philosopher Rosa (2010) argues that late modernity – that is, the period between the 1980s and today – is characterized by a phenomenon of social acceleration that causes various forms of alienation in our relationship to time, space, actions, things, others and ourselves. Rosa distinguishes three types of acceleration: technical acceleration, the acceleration of social changes and the acceleration of the rhythm of life. According to Rosa, social acceleration is the main problem of late modernity, in that the invisible social norm of doing more and faster to supposedly save time operates unchallenged at all levels of individual and collective life, as well as organizational and social life. Although we all, researchers and non-researchers alike, perceive this unspoken pressure to be ever more productive, the process of social acceleration as a new invisible social norm is our blind spot, a kind of tyrant over which we have little control. This conceptualization of the contemporary culture can help us to understand the context in which research is conducted (like other professional practices). To this end, Berg & Seeber (2016) invite faculty researchers to slow down in order to better reflect and, in the process, take care of their health and their relationships with their colleagues and students. Many women professors encourage their fellow researchers, especially young women researchers, to learn to “say No” in order to protect their mental and physical health and to remain in their academic careers (Allaire & Descheneux, 2022). These authors also remind us of the relevance of Kahneman’s (2012) work which demonstrates that it takes time to think analytically, thoroughly, and logically. Conversely, thinking quickly exposes humans to cognitive and implicit biases that then lead to errors in thinking (e.g., in the analysis of one’s own research data or in the evaluation of grant applications or student curriculum vitae). The phenomenon of social acceleration, which pushes the researcher to think faster and faster, is likely to lead to unethical bad science that can potentially harm humankind. In sum, Rosa’s invitation to contemporary critical theorists to seriously consider the problem of social acceleration is particularly insightful to better understand the ethical issues of research. It provides a lens through which to view the toxic context in which research is conducted today, and one that was shared by the participants in our study.

As Clark & Sousa (2022) note, it is important that other criteria than the volume of researchers’ contributions be valued in research, notably quality. Ultimately, it is the value of the knowledge produced and its influence on the concrete lives of humans and other living beings that matters, not the quantity of publications. An interesting articulation of this view in research governance is seen in a change in practice by Australia’s national health research funder: they now restrict researchers to listing on their curriculum vitae only the top ten publications from the past ten years (rather than all of their publications), in order to evaluate the quality of contributions rather than their quantity. To create environments conducive to the development of quality research, it is important to challenge the phenomenon of social acceleration, which insidiously imposes a quantitative normativity that is both alienating and detrimental to the quality and ethical conduct of research. Based on our experience, we observe that the social norm of acceleration actively disfavours the conduct of empirical research on ethics in research. The fact is that researchers are so busy that it is almost impossible for them to find time to participate in such studies. Further, operating in highly competitive environments, while trying to respect the values and ethical principles of research, creates ethical paradoxes for members of the research community. According to Malherbe (1999), an ethical paradox is a situation where an individual is confronted by contradictory injunctions (i.e., do more, faster, and better). Eventually, ethical paradoxes lead individuals to situations of distress and burnout, or even to ethical failures (i.e., misbehaviour or misconduct) in the face of the impossibility of responding to contradictory injunctions.

Strengths and limitations of the study

The triangulation of perceptions and experiences of different actors involved in research is a strength of our study. While there are many studies on the experiences of researchers, rarely are members of REBs and experts in research ethics given the space to discuss their views of what the ethical issues are. Giving each of these stakeholders a voice and comparing their different points of view helped shed a different and complementary light on the ethical issues that occur in research. That said, it would have been helpful to also give more space to issues experienced by students or research assistants, as the relationships between researchers and research assistants are at times very worrying, as noted by a participant, and much work still needs to be done to eliminate the exploitative situations that seem to prevail in certain research settings. In addition, no Indigenous or gender diverse researchers participated in the study. Given the ethical issues and systemic injustices that many people from these groups face in Canada (Drolet & Goulet, 2018; Nicole & Drolet, in press), research that gives voice to these researchers would be relevant and contribute to knowledge development, and hopefully also to change in research culture.

Further, although most of the ethical issues discussed in this article may be transferable to the realities experienced by researchers in other countries, the epistemic injustice reported by Francophone researchers who persist in doing research in French in Canada – which is an officially bilingual country but in practice is predominantly English – is likely specific to the Canadian reality. In addition, and as mentioned above, recruitment proved exceedingly difficult, particularly amongst researchers. Despite this difficulty, we obtained data saturation for all but two themes – i.e., exploitation of students and ethical issues of research that uses animals. It follows that further empirical research is needed to improve our understanding of these specific issues, as they may diverge to some extent from those documented here and will likely vary across countries and academic research contexts.

Conclusions

This study, which gave voice to researchers, REB members, and ethics experts, reveals that the ethical issues in research are related to several problematic elements, such as power imbalances and authority relations. Researchers and research assistants are subject to external pressures that give rise to integrity issues, among other ethical issues. Moreover, the current context of social acceleration influences the definition of the performance indicators valued in academic institutions and has led their members to face several ethical issues, including social, distributive, and epistemic injustices, at different steps of the research process. In this study, ten categories of ethical issues were identified, described and illustrated: (1) research integrity, (2) conflicts of interest, (3) respect for research participants, (4) lack of supervision and power imbalances, (5) individualism and performance, (6) inadequate ethical guidance, (7) social injustices, (8) distributive injustices, (9) epistemic injustices, and (10) ethical distress. The triangulation of the perspectives of different members (i.e., researchers from different disciplines, REB members, research ethics experts, and one research assistant) involved in the research process made it possible to lift the veil on some of these ethical issues. Further, it enabled the identification of additional ethical issues, especially systemic injustices experienced in research. To our knowledge, this is the first time that these injustices (social, distributive, and epistemic injustices) have been clearly identified.

Finally, this study brought to the fore several problematic elements that are important to address if the research community is to develop and implement the solutions needed to resolve the diverse and transversal ethical issues that arise in research institutions. A good starting point is the rejection of the corollary norms of “publish or perish” and “do more, faster, and better” and their replacement with “publish quality instead of quantity”, which necessarily entails “do less, slower, and better”. It is also important to pay more attention to the systemic injustices within which researchers work, because these have the potential to significantly harm the academic careers of many researchers, including women researchers, early career researchers, and those belonging to racialized groups, as well as the health, well-being, and respect of students and research participants.

Acknowledgements

The team warmly thanks the participants who took part in the research and who made this study possible. Marie-Josée Drolet thanks the five research assistants who participated in the data collection and analysis: Julie-Claude Leblanc, Élie Beauchemin, Pénéloppe Bernier, Louis-Pierre Côté, and Eugénie Rose-Derouin, all students at the Université du Québec à Trois-Rivières (UQTR), two of whom were active in the writing of this article. MJ Drolet and Bryn Williams-Jones also acknowledge the financial contribution of the Social Sciences and Humanities Research Council of Canada (SSHRC), which supported this research through a grant. We would also like to thank the reviewers of this article who helped us improve it, especially by clarifying and refining our ideas.

Competing Interests and Funding

As noted in the Acknowledgements, this research was supported financially by the Social Sciences and Humanities Research Council of Canada (SSHRC).

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

- Al-Hidabi, Abdulmalek, M. D., & The, P. L. (2018). Multiple Publications: The Main Reason for the Retraction of Papers in Computer Science. In K. Arai, S. Kapoor, & R. Bhatia (eds), Future of Information and Communication Conference (FICC): Advances in Information and Communication, Advances in Intelligent Systems and Computing (AISC), Springer, vol. 886, pp. 511–526

- Allaire, S., & Deschenaux, F. (2022). Récits de professeurs d’université à mi-carrière. Si c’était à refaire…. Presses de l’Université du Québec

- Aristotle. Aristotle’s Nicomachean Ethics. Chicago: The University of Chicago Press; 2012.

- Bahn S. Keeping Academic Field Researchers Safe: Ethical Safeguards. Journal of Academic Ethics. 2012; 10:83–91. doi: 10.1007/s10805-012-9159-2.

- Balk DE. Bereavement Research Using Control Groups: Ethical Obligations and Questions. Death Studies. 1995; 19:123–138. doi: 10.1080/07481189508252720.

- Beauchemin, É., Côté, L. P., Drolet, M. J., & Williams-Jones, B. (2021). Conceptualizing Ethical Issues in the Conduct of Research: Results from a Critical and Systematic Literature Review. Journal of Academic Ethics, Early Online. doi: 10.1007/s10805-021-09411-7

- Berg, M., & Seeber, B. K. (2016). The Slow Professor. University of Toronto Press

- Birchley G, Huxtable R, Murtagh M, Meulen RT, Flach P, Gooberman-Hill R. Smart homes, private homes? An empirical study of technology researchers’ perceptions of ethical issues in developing smart-home health technologies. BMC Medical Ethics. 2017; 18(23):1–13. doi: 10.1186/s12910-017-0183-z.

- Blais, J., & Drolet, M. J. (2022). Les injustices sociales vécues en camp de réfugiés: les comprendre pour mieux intervenir auprès de personnes ayant séjourné dans un camp de réfugiés. Recueil annuel belge d’ergothérapie, 14, 37–48

- Bogdan, R. C., & Biklen, S. K. (2006). Qualitative research in education: An introduction to theory and methods. Allyn & Bacon

- Bouffard C. Le développement des pratiques de la génétique médicale et la construction des normes bioéthiques. Anthropologie et Sociétés. 2000; 24(2):73–90. doi: 10.7202/015650ar.

- Bronfenbrenner, U. (1979). The Ecology of Human development. Experiments by nature and design. Harvard University Press

- Bruhn JG, Zajac G, Al-Kazemi AA, Prescott LD. Moral positions and academic conduct: Parameters of tolerance for ethics failure. Journal of Higher Education. 2002; 73(4):461–493. doi: 10.1353/jhe.2002.0033.

- Clark, A., & Sousa (2022). It’s time to end Canada’s obsession with research quantity. University Affairs/Affaires universitaires, February 14th. https://www.universityaffairs.ca/career-advice/effective-successfull-happy-academic/its-time-to-end-canadas-obsession-with-research-quantity/?utm_source=University+Affairs+e-newsletter&utm_campaign=276a847f70-EMAIL_CAMPAIGN_2022_02_16&utm_medium=email&utm_term=0_314bc2ee29-276a847f70-425259989

- Colnerud G. Ethical dilemmas in research in relation to ethical review: An empirical study. Research Ethics. 2015; 10(4):238–253. doi: 10.1177/1747016114552339.

- Davison J. Dilemmas in Research: Issues of Vulnerability and Disempowerment for the Social Workers/Researcher. Journal of Social Work Practice. 2004; 18(3):379–393. doi: 10.1080/0265053042000314447.

- DePoy E, Gitlin LN. Introduction to Research. St. Louis: Elsevier Mosby; 2010.

- Drolet, M. J., & Goulet, M. (2018). Travailler avec des patients autochtones du Canada ? Perceptions d’ergothérapeutes du Québec des enjeux éthiques de cette pratique. Recueil annuel belge francophone d’ergothérapie, 10, 25–56

- Drolet MJ, Girard K. Les enjeux éthiques de la recherche en ergothérapie: un portrait préoccupant. Revue canadienne de bioéthique. 2020; 3(3):21–40. doi: 10.7202/1073779ar.

- Drolet MJ, Girard K, Gaudet R. Les enjeux éthiques de l’enseignement en ergothérapie: des injustices au sein des départements universitaires. Revue canadienne de bioéthique. 2020; 3(1):22–36.

- Drolet MJ, Maclure J. Les enjeux éthiques de la pratique de l’ergothérapie: perceptions d’ergothérapeutes. Revue Approches inductives. 2016; 3(2):166–196. doi: 10.7202/1037918ar.

- Drolet MJ, Pinard C, Gaudet R. Les enjeux éthiques de la pratique privée: des ergothérapeutes du Québec lancent un cri d’alarme. Ethica – Revue interdisciplinaire de recherche en éthique. 2017; 21(2):173–209.

- Drolet MJ, Ruest M. De l’éthique à l’ergothérapie: un cadre théorique et une méthode pour soutenir la pratique professionnelle. Québec: Presses de l’Université du Québec; 2021.

- Drolet, M. J., & Ruest, M. (accepted). Quels sont les enjeux éthiques soulevés par la recherche scientifique? In M. Lalancette & J. Luckerhoff (dir). Initiation au travail intellectuel et à la recherche. Québec: Presses de l’Université du Québec, 18 p

- Drolet MJ, Sauvageau A, Baril N, Gaudet R. Les enjeux éthiques de la formation clinique en ergothérapie. Revue Approches inductives. 2019; 6(1):148–179. doi: 10.7202/1060048ar.

- Fédération québécoise des professeures et des professeurs d’université (FQPPU). Enquête nationale sur la surcharge administrative du corps professoral universitaire québécois. Principaux résultats et pistes d’action. Montréal: FQPPU; 2019.

- Fortin MH. Fondements et étapes du processus de recherche. Méthodes quantitatives et qualitatives. Montréal, QC: Chenelière éducation; 2010.

- Fraser DM. Ethical dilemmas and practical problems for the practitioner researcher. Educational Action Research. 1997; 5(1):161–171. doi: 10.1080/09650799700200014.

- Fraser, N. (2011). Qu’est-ce que la justice sociale? Reconnaissance et redistribution. La Découverte

- Fricker, M. (2007). Epistemic Injustice: Power and the Ethics of Knowing. Oxford University Press

- Giorgi A, et al. De la méthode phénoménologique utilisée comme mode de recherche qualitative en sciences humaines: théories, pratique et évaluation. In: Poupart J, Groulx LH, Deslauriers JP, et al., editors. La recherche qualitative: enjeux épistémologiques et méthodologiques. Boucherville, QC: Gaëtan Morin; 1997. pp. 341–364.

- Giorgini V, Mecca JT, Gibson C, Medeiros K, Mumford MD, Connelly S, Devenport LD. Researcher Perceptions of Ethical Guidelines and Codes of Conduct. Accountability in Research. 2016; 22(3):123–138. doi: 10.1080/08989621.2014.955607.

- Glaser, J. W. (1994). Three realms of ethics: Individual, institutional, societal. Theoretical model and case studies. Kansas City, Sheed & Ward

- Godrie B, Dos Santos M. Présentation: inégalités sociales, production des savoirs et de l’ignorance. Sociologie et sociétés. 2017; 49(1):7. doi: 10.7202/1042804ar.

- Hammell KW, Carpenter C, Dyck I. Using Qualitative Research: A Practical Introduction for Occupational and Physical Therapists. Edinburgh: Churchill Livingstone; 2000.

- Henderson M, Johnson NF, Auld G. Silences of ethical practice: dilemmas for researchers using social media. Educational Research and Evaluation. 2013; 19(6):546–560. doi: 10.1080/13803611.2013.805656.

- Husserl E. The crisis of European sciences and transcendental phenomenology. Evanston, IL: Northwestern University Press; 1970.

- Husserl E. The train of thoughts in the lectures. In: Polifroni EC, Welch M, editors. Perspectives on Philosophy of Science in Nursing. Philadelphia, PA: Lippincott; 1999.

- Hunt SD, Chonko LB, Wilcox JB. Ethical problems of marketing researchers. Journal of Marketing Research. 1984; 21:309–324. doi: 10.1177/002224378402100308.

- Hunt MR, Carnevale FA. Moral experience: A framework for bioethics research. Journal of Medical Ethics. 2011; 37(11):658–662. doi: 10.1136/jme.2010.039008.

- Jameton, A. (1984). Nursing practice: The ethical issues. Englewood Cliffs, Prentice-Hall

- Jarvis K. Dilemmas in International Research and the Value of Practical Wisdom. Developing World Bioethics. 2017; 17(1):50–58. doi: 10.1111/dewb.12121.

- Kahneman D. Système 1, système 2: les deux vitesses de la pensée. Paris: Flammarion; 2012.

- Keogh B, Daly L. The ethics of conducting research with mental health service users. British Journal of Nursing. 2009; 18(5):277–281. doi: 10.12968/bjon.2009.18.5.40539.

- Lierville AL, Grou C, Pelletier JF. Enjeux éthiques potentiels liés aux partenariats patients en psychiatrie: État de situation à l’Institut universitaire en santé mentale de Montréal. Santé mentale au Québec. 2015; 40(1):119–134. doi: 10.7202/1032386ar.

- Lynöe N, Sandlund M, Jacobsson L. Research ethics committees: A comparative study of assessment of ethical dilemmas. Scandinavian Journal of Public Health. 1999; 27(2):152–159. doi: 10.1177/14034948990270020401.

- Malherbe JF. Compromis, dilemmes et paradoxes en éthique clinique. Anjou: Éditions Fides; 1999.

- McGinn R. Discernment and denial: Nanotechnology researchers’ recognition of ethical responsibilities related to their work. NanoEthics. 2013; 7:93–105. doi: 10.1007/s11569-013-0174-6.

- Mills, C. W. (2017). Black Rights/White Wrongs: The Critique of Racial Liberalism. Oxford University Press

- Miyazaki AD, Taylor KA. Researcher interaction biases and business ethics research: Respondent reactions to researcher characteristics. Journal of Business Ethics. 2008; 81(4):779–795. doi: 10.1007/s10551-007-9547-5.

- Mondain N, Bologo E. L’intentionnalité du chercheur dans ses pratiques de production des connaissances: les enjeux soulevés par la construction des données en démographie et santé en Afrique. Cahiers de recherche sociologique. 2009; 48:175–204. doi: 10.7202/039772ar.

- Nicole, M., & Drolet, M. J. (in press). Fighting transphobia and cisgenderism in occupational therapy. Occupational Therapy Now

- Pope KS, Vetter VA. Ethical dilemmas encountered by members of the American Psychological Association: A national survey. The American Psychologist. 1992; 47(3):397–411. doi: 10.1037/0003-066X.47.3.397.

- Provencher V, Mortenson WB, Tanguay-Garneau L, Bélanger K, Dagenais M. Challenges and strategies pertaining to recruitment and retention of frail elderly in research studies: A systematic review. Archives of Gerontology and Geriatrics. 2014; 59(1):18–24. doi: 10.1016/j.archger.2014.03.006.

- Rawls, J. (1971). A Theory of Justice. Harvard University Press