Dissertations & Theses

Ph.D. Dissertations

Qian Huang (2022), Spatial and Age Disparities in COVID-19 Outcomes (Committee: Drs. Susan Cutter, Zhenlong Li, Kevin Bennett, Jan Eberth, Jerry Mitchell)

Grayson Morgan (2022), sUAS and Deep Learning for High-resolution Monitoring of Tidal Marshes in Coastal South Carolina (Committee: Drs. Susan Wang, Zhenlong Li, Michael Hodgson, Steve Schill)

Jiang Y. (2022), Quantifying Human Mobility Patterns During Disruptive Events with Big Data (Committee: Drs. Zhenlong Li, Susan Cutter, Michael Hodgson, Qunying Huang)

Huang, X. (2020), Remote Sensing and Social Sensing for Improved Flood Awareness and Exposure Analysis in the Big Data Era (Committee: Drs. Susan Wang, Zhenlong Li, Michael Hodgson, David Hitchcock)

Derakhshan, S. (2020), Spatio-Temporal Modeling of Earthquake Recovery (Committee: Drs. Susan Cutter, Cuizhen Wang, Zhenlong Li, Melanie Gall)

Martin, Y. (2019), Leveraging Geotagged Social Media to Monitor Spatial Behavior During Population Movements Triggered by Hurricanes (Committee: Drs. Susan L. Cutter, Zhenlong Li, Jerry T. Mitchell, Christopher T. Emrich)

Master Theses

Fulham A. (2023). Sentiment analysis of Swedish and Finnish Twitter users’ views toward NATO pre- and post- 2022 2nd Russian invasion of Ukraine (Committee: Drs. Zhenlong Li, Carl Dahlman, Robert Kopack)

Ning H. (2019). Prototyping A Social Media Flooding Photo Screening System Based On Deep Learning and Crowdsourcing (Committee: Drs. Zhenlong Li, Michael E. Hodgson, Cuizhen Wang)

Vayansky, I. R. (2018). An Evaluation of Geotagged Twitter Data during Hurricane Irma using Sentiment Analysis and Topic Modeling for Disaster Resilience (Committee: Drs. Sathish A. P. Kumar, Zhenlong Li, William Jones)

Pham, E. (2018). Analysis of Evacuation Behaviors and Departure Timing for October 2016’s Hurricane Matthew (Committee: Drs. Susan L. Cutter, Christopher Emrich, Zhenlong Li)

Campbell, R. (2018). Tweets About Tornado Warnings: A Spatiotemporal & Content Analysis (Committee: Drs. Susan L. Cutter, Zhenlong Li, Gregory Carbone)

Windsor M. (2017). A Web-based Decision Support Platform for Community Engagement in Water Resources Planning (Committee: Drs. Zhenlong Li, Jean Taylor Ellis)

Jiang Y. (2016). Urban Accessibility Measurement and Visualization—A Big Data Approach (Committee: Drs. Diansheng Guo, Zhenlong Li, Michael E. Hodgson)

Undergraduate Theses (Honors and Graduate with Distinction)

Finn Hagerty (2021), Tracking Population Movement using Geotagged Tweets to and from New York City and Los Angeles during the COVID-19 Pandemic (Committee: Drs. Zhenlong Li, Amir Karami)

Murph R. (2019), Steering Clear of Single-Occupancy Vehicles: Campus Transportation Demand Management Strategies for the University of South Carolina (Committee: Drs. Conor M. Harrison, Zhenlong Li)

17 Compelling Machine Learning Ph.D. Dissertations

Posted by Daniel Gutierrez, ODSC, on August 12, 2021 (Machine Learning, Modeling, Research)

Working in data science, I’m always looking for ways to keep current, and there are many good resources for this purpose: new book titles, blog articles, conference sessions, Meetups, webinars and podcasts, not to mention the gems floating around on social media. But to dig even deeper, I routinely look at what’s coming out of the world’s research labs, and one great way to keep a pulse on what the research community is working on is to monitor the flow of new machine learning Ph.D. dissertations. Admittedly, many theses are laser-focused and narrow, but in my experience you can learn an awful lot from them about new ways to solve difficult problems across a vast range of domains.

In this article, I present a number of hand-picked machine learning dissertations that I found compelling in terms of my own areas of interest and that align with problems I’m working on. I hope you’ll find several that match your own interests. Each dissertation may be challenging to consume, but the effort will reward you with hours of satisfying summer reading. Enjoy!

Please check out my previous data science dissertation round-up article.

1. Fitting Convex Sets to Data: Algorithms and Applications

This machine learning dissertation concerns the geometric problem of finding a convex set that best fits a given data set. This overarching question serves as an abstraction for data-analytical tasks arising in a range of scientific and engineering applications, and the thesis focuses on two specific instances. (i) A key challenge in solving inverse problems is ill-posedness due to a lack of measurements. A prominent family of methods for addressing this issue augments optimization-based approaches with a convex penalty function that induces a desired structure in the solution. These functions are typically chosen using prior knowledge about the data, so the thesis studies the problem of learning convex penalty functions directly from data in settings where we lack the domain expertise to choose one. The solution relies on suitably transforming the problem of learning a penalty function into a fitting task. (ii) The thesis also studies the problem of fitting tractably described convex sets given the optimal values of linear functionals evaluated in different directions.
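Instance (ii) starts from support-function values: for a direction u, the optimal value of the linear functional ⟨u, x⟩ over the set K is h_K(u) = max over x in K of ⟨u, x⟩. As a minimal sketch of that input, and not the thesis's estimator, the simplest fit is the intersection of the half-spaces ⟨u_i, x⟩ ≤ h_i, which always contains K. The 2-D square, the 16 evenly spaced directions, and the helper names below are illustrative assumptions.

```python
import math

def support(points, u):
    """Support function h_K(u) = max over x in K of <u, x>, for K the convex hull of points."""
    return max(u[0] * x + u[1] * y for x, y in points)

def unit_directions(m):
    """m equally spaced unit vectors in the plane."""
    return [(math.cos(2 * math.pi * k / m), math.sin(2 * math.pi * k / m)) for k in range(m)]

def polyhedral_estimate(directions, values):
    """Outer polyhedral fit {x : <u_i, x> <= h_i for all i}, returned as a membership test."""
    def contains(x, tol=1e-9):
        return all(u[0] * x[0] + u[1] * x[1] <= h + tol for u, h in zip(directions, values))
    return contains

# Hypothetical "unknown" set: the square [-1, 1]^2, observed only through support values.
square = [(-1, -1), (-1, 1), (1, -1), (1, 1)]
dirs = unit_directions(16)
vals = [support(square, u) for u in dirs]
inside = polyhedral_estimate(dirs, vals)

print(inside((0.5, 0.5)))  # True: a point of the square
print(inside((1.2, 0.0)))  # False: violates the constraint for direction (1, 0)
```

More directions tighten the polygon around K. The thesis instead targets tractably described families of convex sets fitted to noisy measurements, which this sketch does not attempt.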

2. Structured Tensors and the Geometry of Data

This machine learning dissertation analyzes data to build a quantitative understanding of the world. Linear algebra underpins algorithms for extracting structure from data, some of which date back a hundred years. Modern technologies provide an abundance of multi-dimensional data, in which multiple variables or factors can be compared simultaneously. To organize and analyze such data sets, we can use a tensor, the higher-order analogue of a matrix. However, many theoretical and practical challenges arise in extending linear algebra to the setting of tensors. The first part of the thesis studies and develops the algebraic theory of tensors. The second part presents three algorithms for tensor data that use algebraic and geometric structure to give guarantees of optimality.
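To make "structure" in a tensor concrete, here is a small pure-Python sketch, not drawn from the thesis. It builds a rank-1 third-order tensor from an outer product, flattens it (a mode-1 unfolding), and computes the matrix rank of the result. A standard fact is that the rank of any unfolding is a lower bound on the tensor's rank. The helper names and the example vectors are illustrative.

```python
def outer3(a, b, c):
    """Rank-1 third-order tensor with entries T[i][j][k] = a[i] * b[j] * c[k]."""
    return [[[ai * bj * ck for ck in c] for bj in b] for ai in a]

def add3(S, T):
    """Entrywise sum of two third-order tensors of the same shape."""
    return [[[s + t for s, t in zip(rs, rt)] for rs, rt in zip(ss, st)] for ss, st in zip(S, T)]

def unfold_mode1(T):
    """Mode-1 unfolding: row i lists every entry T[i][j][k], with k varying fastest."""
    return [[x for row in slab for x in row] for slab in T]

def matrix_rank(M, tol=1e-9):
    """Matrix rank by Gaussian elimination with partial pivoting (adequate for small examples)."""
    A = [[float(x) for x in row] for row in M]
    rank, n_rows = 0, len(A)
    for col in range(len(A[0])):
        pivot = max(range(rank, n_rows), key=lambda r: abs(A[r][col]), default=None)
        if pivot is None or abs(A[pivot][col]) < tol:
            continue
        A[rank], A[pivot] = A[pivot], A[rank]
        for r in range(rank + 1, n_rows):
            f = A[r][col] / A[rank][col]
            A[r] = [x - f * y for x, y in zip(A[r], A[rank])]
        rank += 1
    return rank

T1 = outer3([1, 2], [1, 0, -1], [3, 4])            # a 2 x 3 x 2 rank-1 tensor
T2 = add3(T1, outer3([1, -1], [0, 1, 0], [1, 1]))  # sum of two rank-1 terms
print(matrix_rank(unfold_mode1(T1)), matrix_rank(unfold_mode1(T2)))  # prints: 1 2
```

Unlike matrix rank, tensor rank is not computed by elimination in general, which is one of the challenges the thesis refers to.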

3. Statistical approaches for spatial prediction and anomaly detection

This machine learning dissertation primarily describes three projects. The first is a method for spatial prediction and parameter estimation for irregularly spaced, non-Gaussian data. It shows that by judiciously replacing the likelihood with an empirical likelihood in a Bayesian hierarchical model, approximate posterior distributions for the mean and covariance parameters can be obtained. Because of the complex nature of the hierarchical model, standard Markov chain Monte Carlo methods cannot be applied to sample from these posterior distributions, so a generalized sequential Monte Carlo algorithm is used instead. The method is applied to iron concentrations in California. The second project focuses on anomaly detection for functional data, specifically functional data whose observed functions may lie over different domains. Each function is approximated as a low-rank sum of spline basis functions, and the coefficients for each basis are compared across functions: if two functions are similar, their respective coefficients should not be significantly different. This project concludes with an application of the proposed method to detect anomalous behavior of users of a supercomputer at NREL. The final project extends the second to two-dimensional data, aiming to detect anomalies in location and time in ground motion data from a fiber-optic cable using distributed acoustic sensing (DAS).
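The coefficient-comparison idea in the second project can be sketched as follows. This is an illustration under explicit assumptions, not the thesis's method: it uses degree-1 B-spline ("hat") functions on a fixed knot grid over [0, 1], fits each curve by least squares on its own sampling points, and flags a curve when any of its coefficients is a robust-z outlier (median/MAD, threshold 3.5). The knot grid, threshold, and simulated curves are hypothetical.

```python
import math
import statistics

# Assumed common knot grid on [0, 1]; the thesis's spline basis and domains are not reproduced.
KNOTS = [0.0, 0.25, 0.5, 0.75, 1.0]
H = KNOTS[1] - KNOTS[0]

def hat(k, t):
    """Degree-1 B-spline ("hat") basis function centred at KNOTS[k]."""
    return max(0.0, 1.0 - abs(t - KNOTS[k]) / H)

def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        p = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[p] = M[p], M[col]
        for r in range(n):
            if r != col:
                f = M[r][col] / M[col][col]
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]

def fit_coefficients(ts, ys):
    """Least-squares basis coefficients for one curve observed at its own points ts."""
    K = len(KNOTS)
    B = [[hat(k, t) for k in range(K)] for t in ts]
    BtB = [[sum(row[i] * row[j] for row in B) for j in range(K)] for i in range(K)]
    Bty = [sum(row[i] * y for row, y in zip(B, ys)) for i in range(K)]
    return solve(BtB, Bty)

def flag_anomalies(curves, threshold=3.5):
    """Indices of curves with a robust-z outlier in the coefficient of any basis function."""
    coefs = [fit_coefficients(ts, ys) for ts, ys in curves]
    flagged = set()
    for k in range(len(KNOTS)):
        column = [c[k] for c in coefs]
        med = statistics.median(column)
        mad = statistics.median([abs(x - med) for x in column]) or 1e-12
        for idx, x in enumerate(column):
            if abs(x - med) / (1.4826 * mad) > threshold:
                flagged.add(idx)
    return sorted(flagged)

# Hypothetical data: sine-like curves sampled on different grids; curve 8 is shifted up by 2.
curves = []
for i in range(9):
    ts = [j / (10 + i) for j in range(11 + i)]
    ys = [math.sin(2 * math.pi * t) + 0.01 * ((7 * j + i) % 5 - 2) for j, t in enumerate(ts)]
    if i == 8:
        ys = [y + 2.0 for y in ys]  # the anomaly: a constant vertical shift
    curves.append((ts, ys))

print(flag_anomalies(curves))
```

Because the hat functions sum to one on [0, 1], a constant shift of 2 moves every coefficient of curve 8 by exactly 2, so that curve is flagged. Curves sampled on different grids still yield comparable coefficients, which is the point of comparing in coefficient space.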

4. Sampling for Streaming Data

Advances in data acquisition technology pose challenges for analyzing large volumes of streaming data. Sampling is a natural yet powerful tool for analyzing such data sets because it offers competent estimation accuracy at low computational cost. Unfortunately, sampling methods and their statistical properties for streaming data, especially streaming time series data, are not well studied in the literature. Meanwhile, estimating the dependence structure of multidimensional streaming time series data in real time is challenging. With large volumes of streaming data, the problem becomes even harder when the multidimensional data are collected asynchronously across distributed nodes, which motivates sampling representative data points from the streams. This machine learning dissertation proposes a series of leverage-score-based sampling methods for streaming time series data. Simulation studies and real data analyses are conducted to validate the proposed methods, and the asymptotic behavior of the least-squares estimator computed on the subsamples is analyzed theoretically.
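Here is a minimal batch sketch of leverage-score sampling, assuming an AR(1) regression with intercept. It is not the thesis's streaming or distributed algorithm. Each row's leverage h_t = x_tᵀ(XᵀX)⁻¹x_t measures how influential it is, and rows are drawn with probability proportional to h_t. The simulated series and helper names are hypothetical.

```python
import random

def ar1_design(series):
    """Design rows x_t = (1, y_{t-1}) and responses y_t for an AR(1) regression."""
    X = [(1.0, series[t - 1]) for t in range(1, len(series))]
    return X, series[1:]

def leverage_scores(X):
    """h_t = x_t^T (X^T X)^{-1} x_t for a two-column design, via the closed-form 2x2 inverse."""
    a = sum(r[0] * r[0] for r in X)
    b = sum(r[0] * r[1] for r in X)
    d = sum(r[1] * r[1] for r in X)
    det = a * d - b * b
    return [(d * r[0] ** 2 - 2 * b * r[0] * r[1] + a * r[1] ** 2) / det for r in X]

def leverage_subsample(X, y, k, rng):
    """Draw k rows with replacement, with probability proportional to leverage.

    Returns the sampled rows, responses, and sampling probabilities; a weighted
    least-squares fit on the subsample would use weights proportional to 1 / prob.
    """
    h = leverage_scores(X)
    total = sum(h)  # equals the number of columns (2) up to rounding
    idx = rng.choices(range(len(X)), weights=h, k=k)
    return [X[i] for i in idx], [y[i] for i in idx], [h[i] / total for i in idx]

# Hypothetical AR(1) series y_t = 0.6 * y_{t-1} + Gaussian noise.
rng = random.Random(0)
series = [0.0]
for _ in range(500):
    series.append(0.6 * series[-1] + rng.gauss(0.0, 1.0))

X, y = ar1_design(series)
Xs, ys, probs = leverage_subsample(X, y, k=50, rng=rng)
```

Leverage scores sum to the number of columns of X, so they normalize directly into sampling probabilities. Rows with extreme lagged values are the ones most likely to be kept.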

5. Statistical Machine Learning Methods for Complex, Heterogeneous Data

This machine learning dissertation develops statistical machine learning methodology for three distinct tasks. Each method blends classical statistical approaches with machine learning methods to provide principled solutions to problems with complex, heterogeneous data sets. The first framework proposes two methods for high-dimensional shape-constrained regression and classification. These methods reshape pre-trained prediction rules to satisfy shape constraints such as monotonicity and convexity. The second method provides a nonparametric approach to the econometric analysis of discrete choice. It offers a scalable algorithm for estimating utility functions with random forests and combines this with random effects to properly model preference heterogeneity. The final method draws inspiration from early work in statistical machine translation to construct embeddings for variable-length objects such as mathematical equations.
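For intuition about "reshaping" a prediction rule to satisfy monotonicity, consider the simplest case: one input and outputs ordered by that input. This is a hedged one-dimensional illustration, not the thesis's high-dimensional method. The pool-adjacent-violators algorithm computes the least-squares projection of raw predictions onto non-decreasing sequences.

```python
def isotonic_fit(values, weights=None):
    """Pool-adjacent-violators: weighted L2 projection onto non-decreasing sequences."""
    if weights is None:
        weights = [1.0] * len(values)
    blocks = []  # each block holds [mean, total_weight, count]
    for v, w in zip(values, weights):
        blocks.append([v, w, 1])
        # Merge backwards while the last two blocks violate monotonicity.
        while len(blocks) > 1 and blocks[-2][0] > blocks[-1][0]:
            m2, w2, c2 = blocks.pop()
            m1, w1, c1 = blocks.pop()
            blocks.append([(m1 * w1 + m2 * w2) / (w1 + w2), w1 + w2, c1 + c2])
    fitted = []
    for mean, _, count in blocks:
        fitted.extend([mean] * count)
    return fitted

# Hypothetical raw predictions, ordered by an input that should have a monotone effect.
print(isotonic_fit([1, 3, 2, 4]))  # prints: [1, 2.5, 2.5, 4]
```

Violating neighbors are pooled into their average, so the reshaped rule stays as close as possible (in squared error) to the original while respecting the constraint.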

6. Topics in Multivariate Statistics with Dependent Data

This machine learning dissertation comprises four chapters. The first is an introduction to the topics of the dissertation, and the remaining chapters contain the main results. Chapter 2 gives new results on the consistency of maximum likelihood estimators, with a focus on multivariate mixed models. The theory builds on the idea of using subsets of the full data to establish consistency of estimators based on the full data, and it is applied to two multivariate mixed models for which it was unknown whether maximum likelihood estimators are consistent. In Chapter 3, an algorithm is proposed for maximum likelihood estimation of a covariance matrix when the corresponding correlation matrix can be written as the Kronecker product of two lower-dimensional correlation matrices. The proposed method is fully likelihood-based. Some desirable properties of separable correlation compared with separable covariance are also discussed. Chapter 4 is concerned with Bayesian vector auto-regressions (VARs). A collapsed Gibbs sampler is proposed for Bayesian VARs with predictors, and the convergence properties of the algorithm are studied.
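The separable-correlation structure from Chapter 3 can be illustrated in a few lines. The sketch only shows the structure and is not the thesis's estimation algorithm. The Kronecker product of two correlation matrices is again a correlation matrix (unit diagonal), and pairing it with a free standard deviation for every (time, variable) entry gives the covariance. The 2 x 3 example matrices and standard deviations are hypothetical.

```python
def kron(A, B):
    """Kronecker product A (x) B: block (i, j) of the result is A[i][j] * B."""
    p, q = len(B), len(B[0])
    return [[A[i // p][j // q] * B[i % p][j % q] for j in range(len(A[0]) * q)]
            for i in range(len(A) * p)]

def separable_correlation_cov(R_time, R_var, sds):
    """Covariance D (R_time (x) R_var) D with a free standard deviation per (time, variable) pair."""
    R = kron(R_time, R_var)
    n = len(R)
    return [[sds[i] * R[i][j] * sds[j] for j in range(n)] for i in range(n)]

# Hypothetical example: 2 time points x 3 variables.
R_time = [[1.0, 0.6], [0.6, 1.0]]
R_var = [[1.0, 0.3, 0.1], [0.3, 1.0, 0.2], [0.1, 0.2, 1.0]]
sds = [1.0, 2.0, 0.5, 1.5, 3.0, 0.8]

R = kron(R_time, R_var)
Sigma = separable_correlation_cov(R_time, R_var, sds)
```

Under a separable covariance model Σ = A ⊗ B, each variance would be forced to equal a product a_ii · b_kk. With separable correlation, all six variances here remain unconstrained, which is one of the contrasts the chapter discusses.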

7. Model Selection and Estimation for High-dimensional Data Analysis

In the era of big data, uncovering useful information and hidden patterns in data matters in many fields. However, it is challenging to effectively select input variables and estimate their effects. The goal of this machine learning dissertation is to develop reproducible statistical approaches that provide mechanistic explanations of the phenomena observed in big data analysis. The research has two parts: variable selection and model estimation. The first part investigates how to measure and interpret the usefulness of an input variable using an approach called “variable importance learning,” and builds tools (methodology and software) that can be widely applied. Two variable importance measures are proposed: a parametric measure, SOIL, based on model combining, and a non-parametric measure, CVIL, based on cross-validation. SOIL is shown theoretically to have the inclusion/exclusion property: when the model weights are properly concentrated around the true model, SOIL importance separates the variables in the true model from the rest. CVIL possesses desirable theoretical properties and enhances the interpretability of many opaque but effective machine learning methods. The second part focuses on estimating the effect of a useful input variable when two input variables interact. It investigates the minimax rate of convergence for regression estimation in high-dimensional sparse linear models with two-way interactions, and constructs an adaptive estimator that achieves the minimax rate regardless of the true heredity condition and the sparsity indices.

8. High-Dimensional Structured Regression Using Convex Optimization

While the term “Big Data” can have multiple meanings, this dissertation considers data in which the number of features can be much greater than the number of observations (also known as high-dimensional data). High-dimensional data are abundant in contemporary scientific research due to rapid advances in data-measurement technologies and computing power, and high-dimensional data analysis has developed greatly in recent years. This machine learning dissertation proposes three methods that study three different components of a general framework for the high-dimensional structured regression problem. A common theme is that each method casts a certain structured regression as a convex optimization problem. In so doing, the theoretical properties of each method can be studied in depth, and efficient computation is facilitated. Each method is accompanied by a thorough theoretical analysis of its performance and by an R package containing its practical implementation. The proposed methods are shown to perform favorably (both theoretically and practically) compared with pre-existing methods.
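The lasso is the canonical example of structured (sparse) regression posed as a convex problem. It is not one of the thesis's three methods, and the thesis ships R packages rather than Python. As a hedged sketch, cyclic coordinate descent minimizes (1/(2n))‖y − Xb‖² + λ‖b‖₁, where each coordinate update is a soft-thresholding step. The sketch assumes no all-zero columns; the tiny orthogonal design is illustrative.

```python
def soft_threshold(z, gamma):
    """S(z, gamma) = sign(z) * max(|z| - gamma, 0)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0

def lasso_cd(X, y, lam, n_iter=200):
    """Minimise (1/(2n)) ||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent."""
    n, p = len(X), len(X[0])
    b = [0.0] * p
    col_sq = [sum(X[i][j] ** 2 for i in range(n)) / n for j in range(p)]  # assumed nonzero
    for _ in range(n_iter):
        for j in range(p):
            # Correlation of column j with the partial residual that leaves coordinate j out.
            rho = sum(X[i][j] * (y[i] - sum(X[i][k] * b[k] for k in range(p) if k != j))
                      for i in range(n)) / n
            b[j] = soft_threshold(rho, lam) / col_sq[j]
    return b

# Hypothetical orthogonal design: least squares would give (2, 3); the penalty shrinks both.
X = [[1, 0], [0, 1], [-1, 0], [0, -1]]
y = [2, 3, -2, -3]
print(lasso_cd(X, y, lam=0.5))  # prints: [1.0, 2.0]
```

Convexity is what makes this simple scheme converge to a global minimizer, echoing the thesis's point that a convex formulation supports both theory and efficient computation.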

9. Asymptotics and Interpretability of Decision Trees and Decision Tree Ensembles

Decision trees and decision tree ensembles are widely used nonparametric statistical models. A decision tree is a binary tree that recursively segments the covariate space along the coordinate directions, creating hyperrectangles as basic prediction units and fitting a constant value within each of them. A decision tree ensemble combines multiple decision trees, either in parallel or in sequence, to increase model flexibility and accuracy and to reduce prediction variance. Although tree models are used extensively in practice, results on their asymptotic behavior are scarce. This machine learning dissertation analyzes tree asymptotics from three perspectives: tree terminal nodes, tree ensembles, and models incorporating tree ensembles. It introduces several new tree-related learning frameworks that provide provable statistical guarantees and interpretations. A study of the Gini index used in the greedy tree-building algorithm reveals its limiting distribution, which leads to a test of better splitting that helps quantify the uncertainty in the optimality of a decision tree split. This test is combined with decision tree distillation, which uses a decision tree to mimic the behavior of a black-box model, to generate stable interpretations by guaranteeing a unique distillation tree structure as long as there are sufficiently many random sample points. The thesis also applies mild modification and regularization to standard tree boosting to create a new boosting framework named Boulevard, which integrates two new mechanisms: honest trees, which isolate the tree terminal values from the tree structure, and adaptive shrinkage, which scales the boosting history to create an equally weighted ensemble. This theoretical development provides the prerequisite for statistical inference with boosted trees. Lastly, the thesis investigates the feasibility of incorporating existing semi-parametric models with tree boosting.
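The Gini index studied in the thesis is the impurity criterion used by the greedy split search. Here is a minimal sketch of that standard search on a single feature. The thesis's contribution (the limiting distribution of this quantity and the resulting splitting test) is not reproduced, and the toy data are hypothetical.

```python
def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for lab in labels:
        counts[lab] = counts.get(lab, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split(x, y):
    """Greedy CART-style search for the threshold on x minimising weighted child impurity."""
    pairs = sorted(zip(x, y))
    n = len(pairs)
    best = (float("inf"), None)
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold separates tied x values
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        score = (len(left) * gini(left) + len(right) * gini(right)) / n
        if score < best[0]:
            best = (score, (pairs[i - 1][0] + pairs[i][0]) / 2)
    return best

print(gini([0, 0, 1, 1]))                      # prints: 0.5
print(best_split([1, 2, 3, 4], [0, 0, 1, 1]))  # prints: (0.0, 2.5)
```

On real data, several thresholds can score almost equally well. This is the uncertainty in split optimality that the thesis's test is designed to quantify.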

10. Bayesian Models for Imputing Missing Data and Editing Erroneous Responses in Surveys

This dissertation develops Bayesian methods for handling unit nonresponse, item nonresponse, and erroneous responses in large-scale surveys and censuses containing categorical data. The focus is on applications of nested household data where individuals are nested within households and certain combinations of the variables are not allowed, such as the U.S. Decennial Census, as well as surveys subject to both unit and item nonresponse, such as the Current Population Survey.

11. Localized Variable Selection with Random Forest

Due to recent advances in computer technology, the cost of collecting and storing data has dropped drastically, making it feasible to collect large amounts of information for each data point. This increasing trend in feature dimensionality justifies the need for research on variable selection. Random forest (RF) has demonstrated the ability to select important variables and model complex data. However, simulations show that in some cases it fails to detect less influential features in the presence of variables with large effects. This dissertation proposes two algorithms for localized variable selection: clustering-based feature selection (CBFS) and locally adjusted feature importance (LAFI). Both methods aim to find regions where the effects of weaker features can be isolated and measured. CBFS combines RF variable selection with a two-stage clustering method to detect variables whose effects appear only in certain regions. LAFI, on the other hand, uses a binary tree approach to split data into bins based on response variable rankings and runs RF to find the important variables in each bin; variables selected in more bins receive a larger LAFI score. Simulations and real data sets are used to evaluate these variable selection methods.

12. Functional Principal Component Analysis and Sparse Functional Regression

The focus of this dissertation is on functional data that are sparsely and irregularly observed. Such data require special consideration, because classical functional data methods and theory were developed for densely observed data. As in much of functional data analysis, the functional principal components (FPCs) play a key role in current sparse functional data methods via the Karhunen-Loève expansion. After a review of relevant background material, the dissertation is divided roughly into two parts: the first focuses on theoretical properties of FPCs, and the second on regression for sparsely observed functional data.

13. Essays In Causal Inference: Addressing Bias In Observational And Randomized Studies Through Analysis And Design

In observational studies, identifying assumptions may fail, often quietly and without notice, leading to biased causal estimates. Although this is less of a concern in randomized trials, where treatment is assigned at random, bias may still enter through other means. This dissertation has three parts, each developing new methods to address a particular pattern or source of bias in the setting being studied. The first part extends conventional sensitivity analysis methods for observational studies to better address patterns of heterogeneous confounding in matched-pair designs. The second part develops a modified difference-in-differences design for comparative interrupted time-series studies. The method permits partial identification of causal effects when the parallel trends assumption is violated by an interaction between group and history, and it is applied to a study of the repeal of Missouri’s permit-to-purchase handgun law and its effect on firearm homicide rates. The final part presents a study design to identify vaccine efficacy in randomized controlled trials when there is no gold-standard case definition. The approach augments a two-arm randomized trial with natural variation in a genetic trait to produce a factorial experiment.
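For readers new to difference-in-differences, here is a worked sketch of the classic 2x2 estimate, followed by a simple form of partial identification. This is not the thesis's modified design. Suppose the treated group's counterfactual trend may differ from the control group's trend by at most `delta` in absolute value (an assumption introduced here). Then the effect is only bounded, not point-identified. All numbers are hypothetical, not the Missouri data.

```python
def diff_in_diff(treated_pre, treated_post, control_pre, control_post):
    """Classic 2x2 difference-in-differences estimate from four group means."""
    return (treated_post - treated_pre) - (control_post - control_pre)

def did_bounds(treated_pre, treated_post, control_pre, control_post, delta):
    """Effect bounds when the treated counterfactual trend may deviate from the
    control trend by at most delta (a simple bounded violation of parallel trends)."""
    est = diff_in_diff(treated_pre, treated_post, control_pre, control_post)
    return est - delta, est + delta

# Hypothetical outcome rates before/after a policy change.
print(diff_in_diff(10.0, 15.0, 8.0, 10.0))             # prints: 3.0
print(did_bounds(10.0, 15.0, 8.0, 10.0, delta=1.0))    # prints: (2.0, 4.0)
```

When `delta` is 0, the bounds collapse to the usual point estimate. Larger values trade precision for robustness to violations of parallel trends.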

14. Bayesian Shrinkage: Computation, Methods, and Theory

Sparsity is a standard structural assumption made when modeling high-dimensional statistical parameters. It essentially entails a lower-dimensional embedding of the high-dimensional parameter, enabling sound statistical inference. Apart from this obvious statistical motivation, in many modern applications of statistics, such as genomics and neuroscience, the parameters of interest are indeed sparse. For almost two decades, spike-and-slab priors have been the Bayesian gold standard for modeling sparsity. However, because of their computational bottlenecks, shrinkage priors have emerged as a powerful alternative. Almost all priors in this family can be represented as scale mixtures of Gaussian distributions, which makes posterior Markov chain Monte Carlo (MCMC) updates of the related parameters relatively easy to design. Although shrinkage priors were touted as computationally scalable in high dimensions, they come with their own computational challenges when the number of parameters runs into the thousands or more. Standard MCMC algorithms implementing shrinkage priors generally scale cubically in the dimension of the parameter, severely limiting real-life applications of these priors.

The first chapter of this dissertation addresses this computational issue and proposes an alternative exact posterior sampling algorithm whose complexity scales linearly in the ambient dimension. This algorithm is specifically designed for regression problems. The second chapter develops a Bayesian method based on shrinkage priors for high-dimensional multiple response regression. Chapter three chooses a specific member of the shrinkage family, the horseshoe prior, and studies its convergence rates in several high-dimensional models.
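The scale-mixture representation mentioned above is what makes these priors convenient. Given the local scales, each coefficient is simply Gaussian. As a hedged sketch, here is how to draw from the horseshoe prior in that form, with a fixed global scale `tau` (an illustrative choice). This does not implement the thesis's linear-time posterior sampler.

```python
import math
import random

def sample_horseshoe(p, tau, rng):
    """Draw p coefficients from the horseshoe prior via its Gaussian scale-mixture form.

    beta_j | lambda_j ~ N(0, lambda_j^2 * tau^2), local scale lambda_j ~ Half-Cauchy(0, 1).
    """
    betas = []
    for _ in range(p):
        lam = abs(math.tan(math.pi * (rng.random() - 0.5)))  # |standard Cauchy| = Half-Cauchy(0, 1)
        betas.append(rng.gauss(0.0, lam * tau))
    return betas

rng = random.Random(42)
draws = sample_horseshoe(1000, tau=0.1, rng=rng)
near_zero = sum(abs(b) < 0.01 for b in draws)  # many draws are shrunk close to zero...
largest = max(abs(b) for b in draws)           # ...while the heavy tails allow a few large ones
print(near_zero, largest)
```

Together, the spike of mass near zero and the heavy tails let the horseshoe mimic spike-and-slab behavior while keeping every conditional update Gaussian.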

15. Topics in Measurement Error Analysis and High-Dimensional Binary Classification

This dissertation proposes novel methods to tackle two problems, the misspecified model with measurement error and high-dimensional binary classification, both of which have a crucial impact on applications in public health. The first problem arises in epidemiological practice. Epidemiologists often categorize a continuous risk predictor because categorization is thought to be more robust and interpretable, even when the true risk model is not categorical; their goal is then to fit the categorical model and interpret the categorical parameters. The second project considers high-dimensional classification between two groups with unequal covariance matrices. Rather than estimating the full quadratic discriminant rule, it proposes to perform simultaneous variable selection and linear dimension reduction on the original data, followed by quadratic discriminant analysis on the reduced space. To support the proposed methodology, two R packages, CCP and DAP, were developed, along with two vignettes as long-format illustrations of their usage.

16. Model-Based Penalized Regression

This dissertation contains three chapters that consider penalized regression from a model-based perspective, interpreting penalties as assumed prior distributions for unknown regression coefficients. The first chapter shows that treating a lasso penalty as a prior can facilitate the choice of tuning parameters when standard methods for choosing the tuning parameters are not available, and when it is necessary to choose multiple tuning parameters simultaneously. The second chapter considers a possible drawback of treating penalties as models, specifically possible misspecification. The third chapter introduces structured shrinkage priors for dependent regression coefficients which generalize popular independent shrinkage priors. These can be useful in various applied settings where many regression coefficients are not only expected to be nearly or exactly equal to zero, but also structured.
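The first chapter's premise, reading a lasso penalty as a prior, rests on a standard correspondence. Under a Gaussian likelihood and independent Laplace(0, b) priors on the coefficients, the posterior mode is a lasso solution. The symbols σ² and b are notation introduced here, not taken from the thesis.

```latex
y \mid \beta \sim \mathcal{N}(X\beta,\ \sigma^2 I), \qquad
p(\beta_j) = \frac{1}{2b}\exp\!\left(-\frac{|\beta_j|}{b}\right)

\hat{\beta}_{\mathrm{MAP}}
  = \arg\min_{\beta}\ \frac{1}{2\sigma^2}\lVert y - X\beta\rVert_2^2 + \frac{1}{b}\lVert\beta\rVert_1
  = \arg\min_{\beta}\ \frac{1}{2}\lVert y - X\beta\rVert_2^2 + \lambda\lVert\beta\rVert_1,
  \qquad \lambda = \frac{\sigma^2}{b}
```

From this perspective, the tuning parameter becomes a ratio of model parameters that can, in principle, be estimated from the data, even when several such parameters must be chosen at once.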

17. Topics on Least Squares Estimation

This dissertation revisits, and makes progress on, some old but challenging problems concerning least squares estimation, the workhorse of supervised machine learning. Two major problems are addressed: (i) least squares estimation with heavy-tailed errors, and (ii) least squares estimation in non-Donsker classes. Problem (i) is studied both from a worst-case perspective and from a more refined envelope perspective. For (ii), two case studies are performed in the context of (a) estimation involving sets and (b) estimation of multivariate isotonic functions. Understanding these aspects of least squares estimation requires several new tools in empirical process theory, including a sharp multiplier inequality controlling the size of the multiplier empirical process, and matching upper and lower bounds for empirical processes indexed by non-Donsker classes.

How to Learn More about Machine Learning

At our upcoming event this November 16th-18th in San Francisco, ODSC West 2021 will feature a plethora of talks, workshops, and training sessions on machine learning and machine learning research. You can register now for 50% off all ticket types before the discount drops to 40% in a few weeks. Some highlighted sessions on machine learning include:

- Towards More Energy-Efficient Neural Networks? Use Your Brain!: Olaf de Leeuw | Data Scientist | Dataworkz

- Practical MLOps: Automation Journey: Evgenii Vinogradov, PhD | Head of DHW Development | YooMoney

- Applications of Modern Survival Modeling with Python: Brian Kent, PhD | Data Scientist | Founder, The Crosstab Kite

- Using Change Detection Algorithms for Detecting Anomalous Behavior in Large Systems: Veena Mendiratta, PhD | Adjunct Faculty, Network Reliability and Analytics Researcher | Northwestern University

Sessions on MLOps:

- Tuning Hyperparameters with Reproducible Experiments: Milecia McGregor | Senior Software Engineer | Iterative

- MLOps… From Model to Production: Filipa Peleja, PhD | Lead Data Scientist | Levi Strauss & Co

- Operationalization of Models Developed and Deployed in Heterogeneous Platforms: Sourav Mazumder | Data Scientist, Thought Leader, AI & ML Operationalization Leader | IBM

- Develop and Deploy a Machine Learning Pipeline in 45 Minutes with Ploomber: Eduardo Blancas | Data Scientist | Fidelity Investments

Sessions on Deep Learning:

- GANs: Theory and Practice, Image Synthesis With GANs Using TensorFlow: Ajay Baranwal | Center Director | Center for Deep Learning in Electronic Manufacturing, Inc

- Machine Learning With Graphs: Going Beyond Tabular Data: Dr. Clair J. Sullivan | Data Science Advocate | Neo4j

- Deep Dive into Reinforcement Learning with PPO using TF-Agents & TensorFlow 2.0: Oliver Zeigermann | Software Developer | embarc Software Consulting GmbH

- Get Started with Time-Series Forecasting using the Google Cloud AI Platform: Karl Weinmeister | Developer Relations Engineering Manager | Google

Daniel Gutierrez, ODSC

Daniel D. Gutierrez is a practicing data scientist who has been working with data since long before the field came into vogue. As a technology journalist, he enjoys keeping a pulse on this fast-paced industry. Daniel is also an educator who has taught data science, machine learning, and R classes at the university level. He has authored four computer industry books on database and data science technology, including his most recent title, “Machine Learning and Data Science: An Introduction to Statistical Learning Methods with R.” Daniel holds a BS in Mathematics and Computer Science from UCLA.

Top 15+ Big Data Dissertation Topics

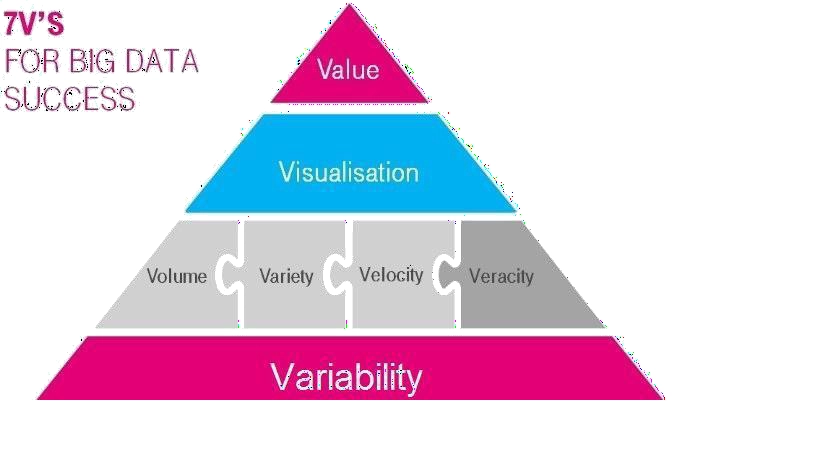

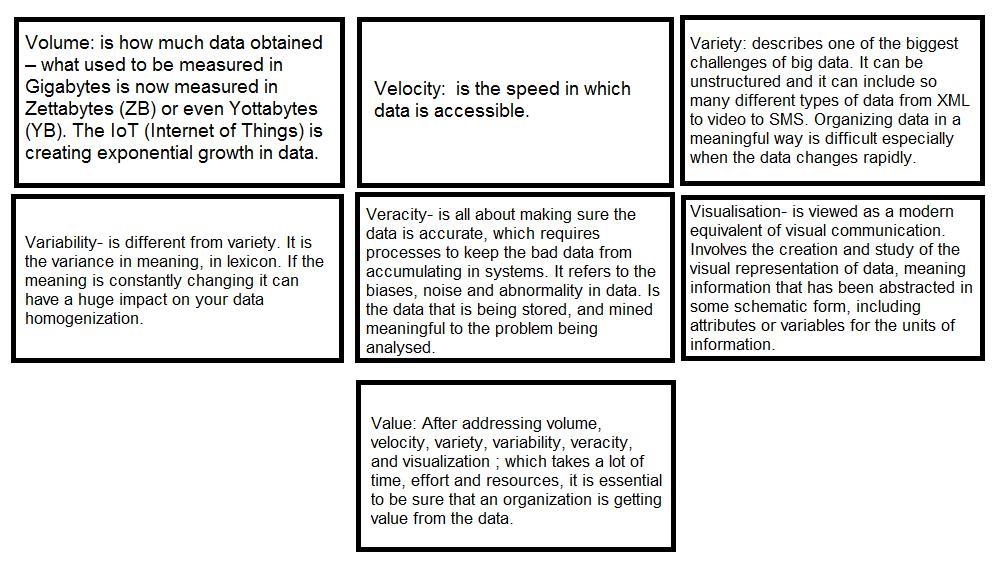

The term big data refers to technology that processes huge amounts of data in various formats at high speed. It supports research domains by managing their data loads. A big data dissertation conveys the researcher’s perspective on the proposed research problems. Big data is also described as a new generation of technology built for high-speed data acquisition, storage, and analytics. In this article, you will learn about big data dissertation topics along with their justifications.

In general, writing the dissertation is an irreplaceable part of research. A well-drafted dissertation lays out the issues and solutions in the researched area for readers and examiners. Our technical team has structured this article to introduce big data fundamentals first so that the later material is easier to follow. By the end of this article, you should have a clear picture of the areas that big data dissertation topics cover. Shall we move on? Let’s get into the article.

Fundamentals of Big Data

Analytics techniques:

- Pattern Analytics

- Sentiment Analysis

- Block Modeling

- Association Rule Mining

- Partitioning Nodes

Tools and frameworks:

- Cassandra & Oozie

- HBase & JAQL

- Mahout & Hadoop

- Hive & Middleware

- Pig & MapReduce

Data sources:

- Demographic Data

- Social Media Data

- Multimedia Data

- Crime Incidents

- Financial Reports

- Telephone Histories

- Network Location Data

- Observation Logs

The items above make up the fundamentals of big data: analytical techniques, tools, and typical data sources. Big data technology applies these concepts to process huge amounts of data, and big data applications can be deployed in almost any field to achieve strong results in research and projects. The next section outlines the pipeline architecture of big data.

Big data systems take unstructured data and normalize it into human-readable formats. Our technical team is well versed in every concept of big data technology. Let us move on to the next section and learn together.
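
The normalization step described above, turning unstructured data into uniform, human-readable records, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the log format, the `LOG_PATTERN` regular expression, and the `normalize_line`/`normalize` helpers are all hypothetical; real pipelines usually rely on a dedicated parser or schema.

```python
import re
from typing import Optional

# Hypothetical log format assumed for this sketch:
#   "2024-05-03 12:00:01 ERROR disk full on node-7"
LOG_PATTERN = re.compile(
    r"^(?P<date>\d{4}-\d{2}-\d{2}) (?P<time>\d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) (?P<message>.*)$"
)

def normalize_line(line: str) -> Optional[dict]:
    """Turn one raw log line into a structured record, or None if it does not match."""
    match = LOG_PATTERN.match(line.strip())
    if match is None:
        return None  # unparseable lines are dropped rather than guessed at
    record = match.groupdict()
    record["level"] = record["level"].lower()  # normalize casing for later grouping
    return record

def normalize(lines):
    """Keep only the lines that parse, preserving their original order."""
    return [rec for rec in map(normalize_line, lines) if rec is not None]

raw = [
    "2024-05-03 12:00:01 ERROR disk full on node-7",
    "garbage line without structure",
    "2024-05-03 12:00:05 INFO job 42 finished",
]
records = normalize(raw)
for rec in records:
    print(rec)
```

Dropping non-matching lines keeps the normalized store trustworthy; in practice you would also count or log the rejected lines.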

Pipeline Architecture for Big Data

- Data Warranty

- Data Cleaning

- Metadata Management

- Raw & Normalized Log Storage

- Prescriptive & Descriptive Analytics

- Pattern Recognition

- Machine Learning & AI

- Statistical Data Mining

- Decision Support Methods

- Visualization Dashboards

- Alerting & Reporting Systems

This is how a big data architecture is typically built for real-time operation. Working manually with massive amounts of data is extremely time-consuming; overcoming this limitation requires familiarity with big data technical concepts, and expert advice is usually needed to master the most important aspects of each layer.
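
The pipeline stages listed above can be illustrated in miniature. The sketch below assumes a simple list of numeric sensor readings and a hypothetical fixed alert threshold, and chains data cleaning, raw and normalized storage, descriptive analytics, and alerting. It runs in a single process and is not a distributed implementation; the function names are our own.

```python
from statistics import mean

def clean(readings):
    """Data cleaning: drop missing values and invalid (negative) readings."""
    return [r for r in readings if r is not None and r >= 0]

def describe(values):
    """Descriptive analytics: a few summary statistics of the cleaned data."""
    if not values:
        return {"count": 0, "mean": None, "max": None}
    return {"count": len(values), "mean": mean(values), "max": max(values)}

def alerts(values, threshold):
    """Alerting: flag every value above a fixed threshold (a hypothetical rule)."""
    return [v for v in values if v > threshold]

def run_pipeline(raw, threshold):
    store = {"raw": list(raw)}                 # raw log storage, kept unchanged
    store["normalized"] = clean(store["raw"])  # normalized storage after cleaning
    summary = describe(store["normalized"])
    return store, summary, alerts(store["normalized"], threshold)

store, summary, fired = run_pipeline([12.0, None, 15.0, -1.0, 30.0], threshold=20.0)
print(summary)
print(fired)
```

Keeping the raw data next to the normalized data mirrors the "Raw & Normalized Log Storage" stage: if the cleaning rules change later, the pipeline can be re-run from the untouched raw copy.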

We would also like to mention our abilities in handling big data technologies. We are a company of numerous skilled engineers who actively work on big data dissertation topics. The next section describes our team's skill sets.

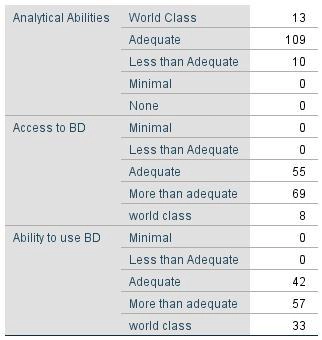

Our Experts' Skill Sets in Big Data

- Familiar with Hadoop, Cloudera, etc.

- Google Cloud & AWS deployment practices

- Strong writing skills

- Experts in resolving bottlenecks with various tools

- Mastery of big data concepts

- Experts in IoT, deep learning, machine learning & data mining

- Conversant with a wide range of software, hardware & MATLAB tools

- Experts in multivariable calculus, matrix & linear algebra

- Highly familiar with Hadoop, SQL, R, Hive & Scala

- Proficient in Python, Java, C++ & R

These are the skill sets of our technical team, and we apply these techniques and abilities when delivering big data and other research projects. So far, we have discussed the basic concepts of big data analytics. Next, we outline the major features of big data analytics.

Major Features of Big Data Analytics

- Optimization of data storage

- Processing large volumes of data

- Relevant search options

- Feedback-driven updates and precise operation

The list above covers the features that shape the big data workflow. Our technical team regularly updates them in line with trends in the technology industry and solves the problems that arise. Because this article focuses on big data dissertation topics, our experts also want to highlight the major problems in big data management, so that you can build your skills in those areas too.
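
One of the features listed above, relevant search, is commonly built on an inverted index. The following is a minimal Python sketch under simple assumptions: lower-cased alphanumeric tokens, and relevance scored as the total count of query terms in a document. The documents are hypothetical, and production search engines use richer scoring such as TF-IDF or BM25.

```python
import re
from collections import Counter, defaultdict

def tokenize(text):
    """Lower-case the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs):
    """Inverted index: map each term to {doc_id: number of occurrences}."""
    index = defaultdict(Counter)
    for doc_id, text in docs.items():
        for term in tokenize(text):
            index[term][doc_id] += 1
    return index

def search(index, query):
    """Rank documents by the total count of query terms they contain."""
    scores = Counter()
    for term in tokenize(query):
        for doc_id, count in index.get(term, {}).items():
            scores[doc_id] += count
    return [doc_id for doc_id, _ in scores.most_common()]

docs = {
    "d1": "Hadoop stores big data on HDFS",
    "d2": "Spark processes big data in memory; Spark is fast",
    "d3": "Tidal marsh monitoring with drones",
}
index = build_index(docs)
print(search(index, "spark data"))
```

The index is built once and then answers each query by touching only the postings for the query terms, rather than scanning every document.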

Major Problems in Big Data

- Working with many different data formats is difficult

- Massive unstructured data ranges from videos to text and images

- Region-specific privacy regulations add considerable complexity

- Models must be trained on decentralized data

- Systems must comply with regulations under which data cannot be shared

- Improved local models are required within each boundary

- Hardware- and software-level security is a big challenge

- Sensitive fields in healthcare systems may not be preserved

- For instance, personal health records may be exposed

- Abnormalities (anomalies) in big data often go unrecognized

- Anomaly detection is also a major issue in telecom domains

- Social media analysis needs effective graph processing

- Existing systems struggle with large-scale graph processing

- Spark & Hadoop must process both online & offline data

- Parallel big data processing requires improved scalability

- Videos are a public data transmission medium

- For instance, CCTV footage, YouTube, and other social media video clips

- Storing such data in cloud systems is a challenging issue

- Inaccurate or partial data with low reliability is the biggest issue

- Vagueness in unlabeled data adds further complexity

- This results in data omission & ineffective data propagation

- The same data can be interpreted in different ways

- Visualizing a massive number of data dimensions is impractical

- Unreliable data leads to rumors spreading unchecked

- Sources of fake data include WhatsApp, Twitter & forged URLs

These are the major problems facing big data technologies. They can be mitigated by deploying suitable tools and improving the underlying techniques, which usually requires expert guidance. Our world-class certified engineers work in these emerging technologies.
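
Several problems above (region-specific privacy rules, training on decentralized data, and regulations under which data cannot be shared) are what federated learning is designed to address. The article does not name a technique, so the following is our own sketch of the server-side aggregation step of Federated Averaging (FedAvg), assuming each site trains a model locally and shares only its parameter vector and sample count. The site data and the `federated_average` helper are hypothetical.

```python
def federated_average(local_weights, sample_counts):
    """FedAvg-style aggregation: average each parameter, weighted by local sample count.

    Only the model parameters leave each site; the raw records never do.
    """
    total = sum(sample_counts)
    n_params = len(local_weights[0])
    return [
        sum(weights[i] * n for weights, n in zip(local_weights, sample_counts)) / total
        for i in range(n_params)
    ]

# Hypothetical example: three hospitals with locally trained 2-parameter models.
sites = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
counts = [100, 100, 200]
global_model = federated_average(sites, counts)
print(global_model)
```

Weighting by sample count keeps large sites from being under-represented. A full system would repeat local training and aggregation over many rounds, and often adds secure aggregation or differential privacy on top.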

If you run into issues in these areas while experimenting, you can approach our researchers at any time. Students are always welcome to benefit from our support.

Our technical team is highly skilled in handling theses and dissertations and is familiar with big data projects and research. The next section highlights recent big data dissertation topics. Because we hold a unique place in the industry, clients trust us to deliver innovative dissertations and other work.

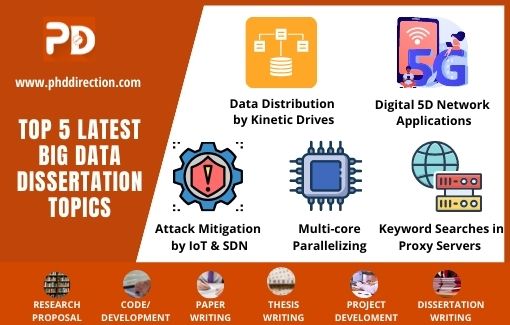

Recent Big Data Dissertation Topics

- Large-Scale Key-Value Storage & Data Distribution with Kinetic Drives

- HOL Blocking, Deadlock Freedom & Minimal-Path Routing with Smart Queuing

- Digital 5D Network Applications with Lesser-Dimensionality Elements

- Effective Biological Network Analytics with Graph-Theoretic Sampling Methods

- Advanced Big Data Segmentation (Unfair) with Boosted Sampling Methods

- Collaborative Filtering on Large-Scale Bipartite Rating Graphs with Spark

- DDoS Attack Mitigation with IoT & SDN

- Task Termination with a Drive Diagnostic Data Center Attribution System

- Container Resource Integration with Hadoop Transcoding Cluster Split Samples

- Retail Supply Chain Decision-Making & Alerting System with Cloud Computing

- Sensitive Processes with Collaborative Filtering Algorithms & Quality Variance Methods

- Keyword Search in Proxy Servers & Cloud Computing with Cryptography

- Non-Cooperative (Game-Theoretic) Cloud Computing with Task Scheduling Algorithms

- Multi-core Parallelization & Overlapping Communities with Speaker-Listener Label Propagation

- Bipartite Graphs for Vacation Spots with Inventive Recommendation Frameworks
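
The first topic in the list above concerns distributing key-value data across many drives. A standard general technique for this is consistent hashing; the sketch below is our illustration, not the method of that specific topic. The drive names, the `ConsistentHashRing` class, and its `replicas` parameter (virtual nodes per drive) are hypothetical.

```python
import bisect
import hashlib

def _hash(key: str) -> int:
    # Stable hash; Python's built-in hash() for str is salted per process.
    return int(hashlib.md5(key.encode("utf-8")).hexdigest(), 16)

class ConsistentHashRing:
    def __init__(self, nodes, replicas=100):
        # Each node appears `replicas` times on the ring as virtual nodes.
        self._ring = sorted(
            (_hash(f"{node}#{i}"), node) for node in nodes for i in range(replicas)
        )
        self._hashes = [h for h, _ in self._ring]

    def node_for(self, key: str) -> str:
        """Walk clockwise from the key's hash to the first virtual node (wrapping around)."""
        idx = bisect.bisect(self._hashes, _hash(key)) % len(self._ring)
        return self._ring[idx][1]

ring = ConsistentHashRing(["drive-a", "drive-b", "drive-c"])
print(ring.node_for("user:42"))
```

When a drive is added, only the keys that now fall just before its virtual nodes move, and all of them move to the new drive. With naive `hash(key) % n` placement, changing `n` would reassign most keys.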

The topics listed above are a sample of big data dissertation topics. Several of them use acronyms, which are expanded below:

- SDN: Software-Defined Networking

- DDoS: Distributed Denial of Service

- IoT: Internet of Things

- HOL: Head-of-Line (blocking)
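
The collaborative filtering topic in the list above treats users and items as a bipartite rating graph. The following single-machine sketch of user-based collaborative filtering (cosine similarity over co-rated items, then a similarity-weighted average of neighbors' ratings) shows the core computation that a Spark job would distribute. The ratings are hypothetical and no Spark API is used.

```python
from math import sqrt

# Hypothetical ratings: user -> {item: rating}. Each (user, item, rating)
# triple is one edge of the bipartite user-item rating graph.
ratings = {
    "alice": {"m1": 5, "m2": 3, "m3": 4},
    "bob":   {"m1": 4, "m2": 2, "m3": 4, "m4": 5},
    "carol": {"m1": 1, "m2": 5, "m4": 2},
}

def cosine(u, v):
    """Cosine similarity computed over the items both users rated."""
    common = set(u) & set(v)
    if not common:
        return 0.0
    dot = sum(u[i] * v[i] for i in common)
    norm_u = sqrt(sum(u[i] ** 2 for i in common))
    norm_v = sqrt(sum(v[i] ** 2 for i in common))
    return dot / (norm_u * norm_v)

def predict(user, item):
    """Similarity-weighted average of other users' ratings for the item, or None."""
    num = den = 0.0
    for other, their in ratings.items():
        if other == user or item not in their:
            continue
        sim = cosine(ratings[user], their)
        num += sim * their[item]
        den += abs(sim)
    return num / den if den else None

print(predict("alice", "m4"))
```

Bob's ratings resemble Alice's more than Carol's do, so the prediction for `m4` leans toward Bob's rating of 5.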

Use these topics as references when you begin your dissertation work. We offer dissertation services at the lowest cost compared to others, and we have delivered more than 10,000 big data dissertations to date.

Each big data dissertation we deliver is unique; we never copy content from other dissertations, and this sets us apart. If you are interested, join hands with us to explore new technical fields. Next, we describe the big data analytics tools.

Big Data Analytics Tools

- Imports data from an RDBMS and sends it to Hadoop systems for querying

- Runs aggregate queries & generates a column-oriented database

- Counts the occurrences of words in the given inputs

- Stores massive unstructured data & acts as a data streaming platform

- Open-source computation tool for real-time processing

- Analyzes & processes immense amounts of data robustly

- Handles data in chunks effectively & acts as a distributed database

- Manages and integrates big data acquisition

- Deals with dynamic datasets

- Analyzes & warehouses huge amounts of data
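
The tool that counts the occurrences of words in its inputs corresponds to the classic MapReduce word-count job. The sketch below simulates the map, shuffle, and reduce phases in a single Python process. It is illustrative only: it does not use the Hadoop API, and the sample documents are hypothetical.

```python
from collections import defaultdict
from itertools import chain

def map_phase(document):
    """Mapper: emit (word, 1) for every word in one input document."""
    return [(word.lower(), 1) for word in document.split()]

def shuffle(pairs):
    """Shuffle: group all emitted values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reducer: sum the counts for each word."""
    return {word: sum(counts) for word, counts in groups.items()}

docs = ["Big data big ideas", "data pipelines move data"]
counts = reduce_phase(shuffle(chain.from_iterable(map(map_phase, docs))))
print(counts)
```

In a real cluster, mappers run in parallel on separate data blocks and the shuffle moves intermediate pairs across the network, but the data flow is the same.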

These are the top big data analytics tools. Among them, Spark and Kafka support simple sliding-window queries for identifying the necessary data. Once you are familiar with the functionality and concepts of these tools, you can practice parsing open-source datasets and log data. This concludes our overview of big data dissertation topics; we hope you enjoyed this article and found its coverage of the essential aspects clear.
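
Spark (through Structured Streaming) and Kafka (through Kafka Streams) expose windowed aggregations via their own APIs. Without depending on either library, the sketch below shows the underlying idea of a sliding-window count over an ordered event stream. It assumes events arrive as (timestamp in seconds, key) pairs in timestamp order and that the window length is positive; the events and the `sliding_window_counts` helper are hypothetical.

```python
from collections import deque

def sliding_window_counts(events, window_seconds):
    """For each event, count events with the same key in the window (ts - window_seconds, ts]."""
    window = deque()   # events currently inside the window, oldest first
    per_key = {}       # running count per key for the events in the window
    results = []
    for ts, key in events:
        window.append((ts, key))
        per_key[key] = per_key.get(key, 0) + 1
        # Evict events that have fallen out of the trailing window.
        while window[0][0] <= ts - window_seconds:
            _, old_key = window.popleft()
            per_key[old_key] -= 1
        results.append((ts, key, per_key[key]))
    return results

events = [(0, "login"), (10, "login"), (30, "error"), (65, "login"), (70, "error")]
for row in sliding_window_counts(events, window_seconds=60):
    print(row)
```

Because each event enters and leaves the deque once, the whole stream is processed in linear time with memory bounded by the number of events inside one window.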

“Let’s start turning your ideas and thoughts into technology.”


Dissertations / Theses on the topic 'Big data frameworks'

Consult the top 50 dissertations / theses for your research on the topic 'Big data frameworks'.

Nyström, Simon, and Joakim Lönnegren. "Processing data sources with big data frameworks." Thesis, KTH, Data- och elektroteknik, 2016. http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-188204.

Bao, Shunxing. "Algorithmic Enhancements to Data Colocation Grid Frameworks for Big Data Medical Image Processing." Thesis, Vanderbilt University, 2019. http://pqdtopen.proquest.com/#viewpdf?dispub=13877282.

Large-scale medical imaging studies to date have predominantly leveraged in-house, laboratory-based, or traditional grid computing resources for their computing needs, where the applications often use hierarchical data structures (e.g., Network File System file stores) or databases (e.g., COINS, XNAT) for storage and retrieval. For laboratory-based approaches, performance is impeded by standard network switches, since typical processing can saturate network bandwidth during transfer from storage to processing nodes even for moderate-sized studies. The grid, on the other hand, may be costly to use because of the dedicated resources used to execute the tasks and its lack of elasticity. With the increasing availability of cloud-based big data frameworks such as Apache Hadoop, cloud-based services for executing medical imaging studies have shown promise.

Despite this promise, our studies have revealed that existing big data frameworks exhibit different performance limitations for medical imaging applications, which calls for new algorithms that optimize their performance and suitability for medical imaging. For instance, Apache HBase's data distribution strategy of region split and merge is detrimental to the hierarchical organization of imaging data (e.g., project, subject, session, scan, slice). Big data medical image processing applications involving multi-stage analysis often exhibit significant variability in processing times, ranging from a few seconds to several days. Because traditional software technologies and platforms execute the analysis stages sequentially, errors in the pipeline are only detected at later stages, even though the sources of errors predominantly lie in the highly compute-intensive first stage. This wastes precious computing resources and incurs prohibitively high costs for re-executing the application. To address these challenges, this research proposes a framework, Hadoop & HBase for Medical Image Processing (HadoopBase-MIP), which develops a range of performance optimization algorithms and employs system behavior modeling for data storage, data access, and data processing. We also describe how to build prototypes to support empirical verification of system behavior. Furthermore, we present a discovery, made during the development of HadoopBase-MIP, of a new type of contrast for enhancing deep brain structures in medical imaging. Finally, we show how to extend the Hadoop-based framework design to a commercialized big data / high-performance computing cluster with a cheap, scalable, and geographically distributed file system.

Carvalho, Rafael Aquino de. "Uma análise comparativa de ambientes para Big Data: Apche Spark e HPAT" [A comparative analysis of Big Data environments: Apache Spark and HPAT]. Universidade de São Paulo, 2018. http://www.teses.usp.br/teses/disponiveis/45/45134/tde-15062018-110116/.

Lemon, Alexander Michael. "A Shared-Memory Coupled Architecture to Leverage Big Data Frameworks in Prototyping and In-Situ Analytics for Data Intensive Scientific Workflows." BYU ScholarsArchive, 2019. https://scholarsarchive.byu.edu/etd/7545.

Kurt, Mehmet Can. "Fault-tolerant Programming Models and Computing Frameworks." The Ohio State University, 2015. http://rave.ohiolink.edu/etdc/view?acc_num=osu1437390499.

Lakoju, Mike. "A strategic approach of value identification for a big data project." Thesis, Brunel University, 2017. http://bura.brunel.ac.uk/handle/2438/15837.

Ainslie, Mandi. "Big data and privacy : a modernised framework." Diss., University of Pretoria, 2017. http://hdl.handle.net/2263/59805.

Su, Yu. "Big Data Management Framework based on Virtualization and Bitmap Data Summarization." The Ohio State University, 2015. http://rave.ohiolink.edu/etdc/view?acc_num=osu1420738636.

Bock, Matthew. "A Framework for Hadoop Based Digital Libraries of Tweets." Thesis, Virginia Tech, 2017. http://hdl.handle.net/10919/78351.

Teske, Alexander. "Automated Risk Management Framework with Application to Big Maritime Data." Thesis, Université d'Ottawa / University of Ottawa, 2018. http://hdl.handle.net/10393/38567.

Jayapandian, Catherine Praveena. "Cloudwave: A Cloud Computing Framework for Multimodal Electrophysiological Big Data." Case Western Reserve University School of Graduate Studies / OhioLINK, 2014. http://rave.ohiolink.edu/etdc/view?acc_num=case1405516626.

Sweeney, Michael John. "A framework for scoring and tagging NetFlow data." Thesis, Rhodes University, 2019. http://hdl.handle.net/10962/65022.

Orenga Roglá, Sergio. "Framework for the Implementation of a Big Data Ecosystem in Organizations." Doctoral thesis, Universitat Jaume I, 2017. http://hdl.handle.net/10803/481983.

Mgudlwa, Sibulela. "A big data analytics framework to improve healthcare service delivery in South Africa." Thesis, Cape Peninsula University of Technology, 2018. http://hdl.handle.net/20.500.11838/2877.

Forresi, Chiara. "Un framework per l'analisi di big data con elevata eterogeneità all'interno di multistore" [A framework for analyzing highly heterogeneous big data within multistores]. Master's thesis, Alma Mater Studiorum - Università di Bologna, 2020. http://amslaurea.unibo.it/21411/.

Li, Zhen. "CloudVista: a Framework for Interactive Visual Cluster Exploration of Big Data in the Cloud." Wright State University / OhioLINK, 2012. http://rave.ohiolink.edu/etdc/view?acc_num=wright1348204863.

Carneiro, Tiago Reis. "Impacto do big data e analytics na performance da industria hoteleira nacional" [Impact of big data and analytics on the performance of the national hotel industry]. Master's thesis, Instituto Superior de Economia e Gestão, 2017. http://hdl.handle.net/10400.5/15103.

Buono, Nicola. "Un framework per la predizione del contesto utente" [A framework for predicting user context]. Bachelor's thesis, Alma Mater Studiorum - Università di Bologna, 2019. http://amslaurea.unibo.it/17716/.

Huang, Xin. "Querying big RDF data : semantic heterogeneity and rule-based inconsistency." Thesis, Sorbonne Paris Cité, 2016. http://www.theses.fr/2016USPCB124/document.

Berglund, Jesper. "An automated approach to clustering with the framework suggested by Bradley, Fayyad and Reina." Thesis, KTH, Skolan för elektroteknik och datavetenskap (EECS), 2018. http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-238736.

Cofré Martel, Sergio Manuel Ignacio. "A deep learning based framework for physical assets' health prognostics under uncertainty for big Machinery Data." Thesis, Universidad de Chile, 2018. http://repositorio.uchile.cl/handle/2250/168080.

Chen, Jiahong. "Data protection in the age of Big Data : legal challenges and responses in the context of online behavioural advertising." Thesis, University of Edinburgh, 2018. http://hdl.handle.net/1842/33149.

Zhang, Jianzhe. "Development of an Apache Spark-Based Framework for Processing and Analyzing Neuroscience Big Data: Application in Epilepsy Using EEG Signal Data." Case Western Reserve University School of Graduate Studies / OhioLINK, 2020. http://rave.ohiolink.edu/etdc/view?acc_num=case1597089028333942.

Calabria, Francesco. "Il Framework RAM3S: Generalizzazione ed Estensione" [The RAM3S framework: generalization and extension]. Master's thesis, Alma Mater Studiorum - Università di Bologna, 2018.

Aved, Alexander. "Scene Understanding for Real Time Processing of Queries over Big Data Streaming Video." Doctoral diss., University of Central Florida, 2013. http://digital.library.ucf.edu/cdm/ref/collection/ETD/id/5597.

Bursztyn, Damián. "Répondre efficacement aux requêtes Big Data en présence de contraintes" [Efficiently answering Big Data queries in the presence of constraints]. Thesis, Université Paris-Saclay (ComUE), 2016. http://www.theses.fr/2016SACLS567/document.

Nguyen, Ngoc Buu Cat. "Data Mining in Knowledge Management Processes: Developing an Implementing Framework." Thesis, Umeå universitet, Institutionen för informatik, 2018. http://urn.kb.se/resolve?urn=urn:nbn:se:umu:diva-149668.

Knobel, Karin, and Lovisa Læstadius. "Big Data in Performance Measurement: Towards a Framework for Performance Measurement in a Digital and Dynamic Business Climate." Thesis, KTH, Skolan för industriell teknik och management (ITM), 2018. http://urn.kb.se/resolve?urn=urn:nbn:se:kth:diva-238689.

Yang, Bin, and 杨彬. "A novel framework for binning environmental genomic fragments." Thesis, The University of Hong Kong (Pokfulam, Hong Kong), 2010. http://hub.hku.hk/bib/B45789344.

Cheung, Mei-ling Lisa, and 張美玲. "An evaluation framework for internet lexicography." Thesis, The University of Hong Kong (Pokfulam, Hong Kong), 2000. http://hub.hku.hk/bib/B31944553.

Leung, Yuk-yee, and 梁玉儀. "An integrated framework for feature selection and classification in microarray data analysis." Thesis, The University of Hong Kong (Pokfulam, Hong Kong), 2009. http://hub.hku.hk/bib/B43278632.

Alshaer, Mohammad. "An Efficient Framework for Processing and Analyzing Unstructured Text to Discover Delivery Delay and Optimization of Route Planning in Realtime." Thesis, Lyon, 2019. http://www.theses.fr/2019LYSE1105/document.

Coimbra, Rafael Melo. "Framework based on lambda architecture applied to IoT: case scenario." Master's thesis, Universidade de Aveiro, 2016. http://hdl.handle.net/10773/21739.

Semenski, Vedran. "An ABAC framework for IoT applications based on the OASIS XACML standard." Master's thesis, Universidade de Aveiro, 2015. http://hdl.handle.net/10773/18493.

Chen, Yuanfang. "Mobile collaborative sensing : framework and algorithm design." Thesis, Evry, Institut national des télécommunications, 2017. http://www.theses.fr/2017TELE0016/document.

Riegel, Ryan Nelson. "Generalized N-body problems: a framework for scalable computation." Diss., Georgia Institute of Technology, 2013. http://hdl.handle.net/1853/50269.

Lee, Yong Cheol. "Rule logic and its validation framework of model view definitions for building information modeling." Diss., Georgia Institute of Technology, 2015. http://hdl.handle.net/1853/54430.

Karlstedt, Johan M. "An ISD study of Extreme Information Management challenges in IoT Systems - Case: The “OpenSenses” eHealth/Smarthome project." Thesis, Blekinge Tekniska Högskola, Sektionen för datavetenskap och kommunikation, 2012. http://urn.kb.se/resolve?urn=urn:nbn:se:bth-4821.

von Wenckstern, Michael. "Web applications using the Google Web Toolkit." Master's thesis, Technische Universitaet Bergakademie Freiberg Universitaetsbibliothek "Georgius Agricola", 2013. http://nbn-resolving.de/urn:nbn:de:bsz:105-qucosa-115009.

Chen, Chao Hsu, and 陳潮旭. "A Study on Open Source Frameworks for Big Data Analytics." Thesis, 2016. http://ndltd.ncl.edu.tw/handle/9tu5j7.

Miranda, Cristiano José Ribeiro. "Processamento em streaming: avaliação de frameworks em contexto Big Data" [Stream processing: evaluating frameworks in a Big Data context]. Master's thesis, 2018. http://hdl.handle.net/1822/59130.

Abusalah, Bara M. "Dependable Cloud Resources for Big-Data Batch Processing & Streaming Frameworks." Thesis, 2021.

Yao, Yi-Cheng, and 姚奕丞. "An Automatic Pre-Processing Framework for Big Data." Thesis, 2016. http://ndltd.ncl.edu.tw/handle/58812215485504227958.

Chrimes, Dillon. "Towards a big data analytics platform with Hadoop/MapReduce framework using simulated patient data of a hospital system." Thesis, 2016. http://hdl.handle.net/1828/7645.

Hsieh, Tsung Ju, and 謝宗儒. "Continuous Audit Mechanism Using a Big Data Analytics Framework." Thesis, 2014. http://ndltd.ncl.edu.tw/handle/2759s3.

Shih, Jhih-Cheng, and 施志承. "A Framework to Support Hyper Big Data Integration and Management." Thesis, 2015. http://ndltd.ncl.edu.tw/handle/67359013162352975835.

Chang, Yu-Jui, and 張有睿. "An adaptively multi-attribute index framework for big IoT data." Thesis, 2019. http://ndltd.ncl.edu.tw/handle/5xcdb6.

Amankwah-Amoah, J., and Samuel Adomako. "Big Data Analytics and Business Failures in Data-Rich Environments: An Organizing Framework." 2018. http://hdl.handle.net/10454/16746.

Shieh, Jeng-Peng, and 謝正鵬. "An Adaptive Code/Object Offloading Framework for Personalized Big-Data Computing." Thesis, 2015. http://ndltd.ncl.edu.tw/handle/60606246242372908678.

Byrne, Thomas J., I. Felician Campean, and Daniel Neagu. "Towards a framework for engineering big data: An automotive systems perspective." 2018. http://hdl.handle.net/10454/15655.


214 Best Big Data Research Topics for Your Thesis Paper

Finding an ideal big data research topic can take you a long time. Big data, IoT, and robotics have evolved, and future generations will be immersed in major technologies that make work easier. Work that was once done by 10 people will now be done by one person or a machine. Even though some jobs will be lost, more jobs will be created, so it is a win-win for everyone.

Big data is a major topic that is being embraced globally. Data science and analytics are helping institutions, governments, and the private sector. We will share with you the best big data research topics.

On top of that, we can offer you writing tips to help you succeed in your studies. As a university student, you need to do proper research to get top grades, so consult us if you need research paper writing services.

Big Data Analytics Research Topics for your Research Project

Are you looking for an ideal big data analytics research topic? Once you choose a topic, ask your professor to evaluate whether it is a good fit. This will help you get good grades.

- What are the best tools and software for big data processing?

- Evaluate the security issues facing big data.

- An analysis of large-scale data from social networks globally.

- The influence of big data storage systems.

- The best platforms for big data computing.

- The relationship between business intelligence and big data analytics.

- The importance of semantics and visualization of big data.

- Analysis of big data technologies for businesses.

- The common machine learning methods used in big data.

- The difference between self-tuning and symmetric spectral clustering.

- The importance of information-based clustering.

- Evaluate applications of hierarchical clustering and density-based clustering.

- How is data mining used to analyze transaction data?

- The importance of dependency modeling.

- The influence of probabilistic classification in data mining.
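
The question of how data mining analyzes transaction data is usually answered with association rule mining. As a sketch, the code below counts the support of item pairs by brute force and computes the confidence of a single rule; it is not the full Apriori algorithm, which prunes candidates level by level. The baskets and helper names are hypothetical.

```python
from collections import Counter
from itertools import combinations

def frequent_pairs(transactions, min_support):
    """Item pairs whose support (fraction of transactions containing both) >= min_support."""
    n = len(transactions)
    pair_counts = Counter()
    for basket in transactions:
        for pair in combinations(sorted(set(basket)), 2):
            pair_counts[pair] += 1
    return {pair: c / n for pair, c in pair_counts.items() if c / n >= min_support}

def confidence(transactions, antecedent, consequent):
    """Confidence of the rule antecedent -> consequent: P(consequent | antecedent)."""
    with_antecedent = [set(b) for b in transactions if antecedent in b]
    if not with_antecedent:
        return 0.0
    return sum(consequent in b for b in with_antecedent) / len(with_antecedent)

baskets = [
    ["bread", "milk"],
    ["bread", "butter", "milk"],
    ["beer", "bread"],
    ["butter", "milk"],
]
print(frequent_pairs(baskets, min_support=0.5))
print(confidence(baskets, "bread", "milk"))
```

Here bread and milk appear together in half of the baskets, and two of the three baskets containing bread also contain milk.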

Interesting Big Data Analytics Topics

Who said big data had to be boring? Here are some interesting big data analytics topics that you can try. They focus on how data can be used to make the world a better place.

- Discuss the privacy issues in big data.

- Evaluate scalable storage systems for big data.

- The best big data processing software and tools.

- Popular data mining tools and techniques.

- Evaluate scalable architectures for parallel data processing.

- The major natural language processing methods.

- What are the best big data tools and deployment platforms?

- The best algorithms for data visualization.

- Analyze anomaly detection in cloud servers.

- The screening normally done when recruiting for big data job profiles.

- Malicious user detection in big data collection.

- Learning long-term dependencies via Fourier recurrent units.

- Nomadic computing for big data analytics.

- Elementary estimators for graphical models.

- Memory-efficient kernel approximation.
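
For the anomaly detection topics above (cloud servers, malicious users), a common baseline is the z-score rule: flag values that lie more than a chosen number of standard deviations from the mean. This is a minimal sketch using the population standard deviation; the CPU-load series and the threshold of 2.5 are hypothetical.

```python
from statistics import mean, pstdev

def zscore_anomalies(values, threshold=3.0):
    """Indices of values more than `threshold` population standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]

# Hypothetical CPU load (%) sampled from one server; index 8 is a spike.
cpu_load = [20, 22, 21, 19, 23, 20, 21, 22, 95, 20]
print(zscore_anomalies(cpu_load, threshold=2.5))
```

A large outlier inflates the standard deviation it is measured against; with the population standard deviation, no z-score in a sample of n values can exceed the square root of n - 1, so a threshold of 3 would never fire on these 10 points. Robust alternatives such as the median absolute deviation avoid this effect.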

Big Data Latest Research Topics

Do you know the latest research topics at the moment? These 15 topics will help you to dive into interesting research. You may even build on research done by other scholars.

- Evaluate the data mining process.

- The influence of various dimension reduction methods and techniques.

- The best data classification methods.

- Simple linear regression modeling methods.

- Evaluate logistic regression modeling.

- What are the commonly used theorems?

- The influence of cluster analysis methods in big data.

- The importance of smoothing methods in big data analysis.

- How is fraud detection done through AI?

- Analyze the use of GIS and spatial data.

- How important is artificial intelligence in the modern world?

- What is agile data science?

- Analyze the behavioral analytics process.

- Semantic analytics distribution.

- How is domain knowledge important in data analysis?
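
For the simple linear regression topic, ordinary least squares has a closed form for the model y = a + b*x: the slope is b = S_xy / S_xx and the intercept is a = y_bar - b * x_bar, where S_xy is the sum of (x - x_bar)(y - y_bar) and S_xx is the sum of (x - x_bar)^2. The sketch below implements exactly that; the data points are hypothetical.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = a + b*x using the closed-form solution."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    b = s_xy / s_xx          # slope (undefined if all x values are equal)
    a = y_bar - b * x_bar    # intercept: the fitted line passes through (x_bar, y_bar)
    return a, b

a, b = fit_simple_linear_regression([1, 2, 3, 4], [3, 5, 7, 9])
print(a, b)
```

On these perfectly linear points the fit recovers y = 1 + 2x; on noisy data it returns the line that minimizes the sum of squared residuals.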

Big Data Debate Topics

If you want to succeed in the field of big data, you need to tackle even hard topics. These big data debate topics are interesting and will help you gain a better understanding.

- The difference between big data analytics and traditional data analytics methods.

- Why do you think organizations should think beyond the Hadoop hype?

- Does the size of the data matter more than how recent the data is?

- Is it true that bigger data is not always better?

- The debate over privacy versus personalization in maintaining ethics in big data.

- The relationship between data science and privacy.

- Do you think data science is a rebranding of statistics?

- Who delivers better results: data scientists or domain experts?

- In your view, is data science dead?

- Do you think analytics teams should be centralized or decentralized?

- The best methods to resource an analytics team.

- The best business case for investing in analytics.

- The societal implications of using predictive analytics in education.

- Is greater control needed to prevent experimentation on social media users without their consent?

- Is the government using big data to improve public statistics or to control the population?

University Dissertation Topics on Big Data

Are you doing your master's or Ph.D. and wondering which dissertation or thesis topic to choose? Why not try one of these? They are interesting and cover a range of phenomena. While doing the research, make sure you relate the phenomenon to modern society.

- The machine learning algorithms used for fall recognition.

- The divergence and convergence of the internet of things.

- Reliable data movement using bandwidth provisioning strategies.

- How are artificial neural networks used for big data analytics in cloud gaming?

- How is Twitter account classification done using network-based features?

- How is online anomaly detection done in a collaborative cloud environment?

- Evaluate the public transportation insights provided by big data.

- Evaluate the paradigm of using nursing EHR data to predict outcomes for cancer patients.

- Discuss current lossless data compression in the smart grid.

- How does online advertising traffic prediction help boost businesses?

- How is hyperspectral classification done using the multiple kernel learning paradigm?

- The analysis of large data sets downloaded from websites.

- How does social media data help advertising companies globally?

- Which systems recognize and enforce ownership of data records?

- The alternative possibilities emerging for edge computing.

The Best Big Data Analysis Research Topics and Essays

There are many issues associated with big data. Here are some research topics you can use in your essays. These topics are suitable for both high school and college.

- Errors and uncertainty in data-driven decision-making.

- The application of big data in tourism.

- Automation innovation with big data or related technologies.

- The business models of big data ecosystems.

- Privacy awareness in the era of big data and machine learning.

- Data privacy for big automotive data.

- How is traffic managed in software-defined data center networks?

- Big data analytics for fault detection.

- The need for machine learning with big data.

- Innovative big data processing in healthcare institutions.

- Money normalization and extraction from texts.

- How is text categorization done in AI?

- The opportunistic development of data-driven interactive applications.

- The use of data science and big data towards personalized medicine.

- The programming and optimization of big data applications.

The Latest Big Data Research Topics for your Research Proposal

Writing a research proposal can be hard at first unless you choose the right topic. If you are just getting started in the big data field, you can use any of these topics to gain a deeper understanding.

- The data-centric network of things.

- Big data management in artificial intelligence supply chains.

- Big data analytics for maintenance.

- High-confidence network predictions for big biological data.

- The performance optimization techniques and tools for data-intensive computation platforms.

- Predictive modeling in the legal context.

- Analysis of large data sets in life sciences.

- How can emerging data sources help us understand mobility and transport modal disparities?

- How do you think data analytics can support asset management decisions?

- An analysis of travel patterns using cellular network data.

- Data-driven strategic planning for citywide building retrofitting.

- How is money normalization done in data analytics?

- Major techniques used in data mining.

- Big data adaptation and analytics in cloud computing.

- Data-driven predictive maintenance for fault diagnosis.

Interesting Research Topics on A/B Testing In Big Data

A/B testing topics differ from typical big data topics. However, you use a very similar methodology to find the reasons behind the issues. These topics are interesting and will help you gain a deeper understanding.

- How is ultra-targeted marketing done?

- The transition of A/B testing from digital to offline.

- How can big data and A/B testing be used to win an election?

- Evaluate the use of A/B testing with big data.

- Evaluate A/B testing as a randomized controlled experiment.

- How does A/B testing work?

- The mistakes to avoid while conducting A/B tests.

- The best time to use A/B testing.

- The best way to interpret the results of an A/B test.

- The major principles of A/B tests.

- Evaluate cluster randomization in big data.

- The best way to analyze A/B test results and assess statistical significance.

- How is A/B testing used in boosting businesses?

- The importance of data analysis in conversion research.

- The importance of A/B testing in data science.

Amazing Research Topics on Big Data and Local Governments

Governments are now using big data to improve citizens' lives, both within government itself and across its various institutions. These topics are based on real-life experiences and on making the world better.

- Assess the benefits and barriers of big data in the public sector.

- The best approach to smart city data ecosystems.

- Big data analytics used for policymaking.

- Evaluate smart technology and the emergence of algorithmic bureaucracy.

- Evaluate the use of citizen scoring in public services.

- An analysis of the government administrative data globally.

- The public values found in the era of big data.

- Public engagement with local government data use.

- Data analytics use in policymaking.

- How are algorithms used in public sector decision-making?

- Democratic governance in the big data era.

- The best business model innovation to be used in sustainable organizations.

- How does the government use the collected data from various sources?

- The role of big data for smart cities.

- How does big data play a role in policymaking?

Easy Research Topics on Big Data

Who said big data topics had to be hard? Here are some of the easiest research topics. They are based on data management, research, and data retention. Pick one and try it!

- Who uses big data analytics?

- Evaluate structured machine learning.

- Explain the whole deep learning process.

- What are the best ways to manage platforms for enterprise analytics?

- What are the new technologies used in data management?

- What is the importance of data retention?

- The best way to work with images when doing research.

- The best way to promote research outreach through data management.

- The best way to source and manage external data.

- Does machine learning improve the quality of data?

- Describe the security technologies that can be used in data protection.

- Evaluate token-based authentication and its importance.

- How can poor data security lead to the loss of information?

- How to determine whether data is secure.

- What is the importance of centralized key management?

Unique IoT and Big Data Research Topics

The Internet of Things has evolved, and many devices now use it: smart devices, smart cities, smart locks, and much more. Things can now be controlled at the touch of a button.

- Evaluate 5G networks and IoT.

- Analyze the use of artificial intelligence in the modern world.

- How do ultra-low-power IoT technologies work?

- Evaluate adaptive systems and models at runtime.

- How have smart cities and smart environments improved the living space?

- The importance of IoT-based supply chains.

- How does smart agriculture influence water management?

- Naming and identifiers in internet applications.

- How does the smart grid influence energy management?

- What are the best design principles for IoT application development?

- The best human-device interactions for the Internet of Things.

- The relation between urban dynamics and crowdsourcing services.

- The best wireless sensor network for IoT security.

- The best intrusion detection methods in IoT.

- The importance of big data for the Internet of Things.

Big Data Database Research Topics You Should Try

Big data is broad and interesting. These big data database research topics will put you in a better position for your research. You also get to evaluate the roles of various phenomena.

- The best cloud computing platforms for big data analytics.

- The parallel programming techniques for big data processing.

- The importance of big data models and algorithms in research.

- Evaluate the role of big data analytics for smart healthcare.

- How is big data analytics used in business intelligence?

- The best machine learning methods for big data.

- Evaluate Hadoop programming in big data analytics.

- What is privacy-preserving big data analytics?

- The best tools for massive big data processing.

- IoT deployment in governments and Internet service providers.

- How will IoT be used for future internet architectures?

- How does big data close the gap between research and implementation?

- What are the cross-layer attacks in IoT?

- The influence of big data and smart city planning in society.

- Why do you think user access control is important?

Big Data Scala Research Topics

Scala is a programming language used in data management. It runs on the Java Virtual Machine and interoperates closely with Java. Here are some of the best Scala questions you can research.

- What are the most commonly used languages in big data?

- How is Scala used in big data research?

- Is Scala better than Java for big data?

- What makes Scala a concise programming language?

- How does Scala support real-time stream processing?

- What are the various Scala libraries for data science and data analysis?

- How does Scala allow imperative programming in data collection?

- Evaluate Scala's REPL for interactive use.

- Evaluate Scala’s IDE support.

- The data catalog reference model.

- Evaluate the basics of data management and its influence on research.

- Discuss the behavioral analytics process.

- What is meant by the experience economy?

- The difference between agile data science and the Scala language.

- Explain the graph analytics process.

Independent Research Topics for Big Data

These independent research topics for big data cover various technologies and how they relate to one another. Big data will become increasingly important for modern society.

- The biggest investments in big data analysis.

- How are multi-cloud and hybrid settings putting down deep roots?

- Why do you think machine learning will be in focus for a long while?

- Discuss in-memory computing.

- What is the difference between edge computing and in-memory computing?

- The relation between the Internet of things and big data.

- How will digital transformation make the world a better place?

- How does data analysis help in social network optimization?

- How will complex big data be essential for future enterprises?

- Compare the various big data frameworks.

- The best way to gather and monitor traffic information using CCTV images.

- Evaluate the hierarchical structure of groups and clusters in the decision tree.

- What are the 3D mapping techniques for live streaming data?

- How does machine learning help to improve data analysis?

- Evaluate DataStream management in task allocation.

- How is big data provisioned through edge computing?

- Model-based clustering of texts.

- The best ways to manage big data.

- The use of machine learning in big data.

Is Your Big Data Thesis Giving You Problems?

These are some of the best topics you can use to succeed in your studies. Not only are they easy to research, but they also reflect current issues. Whether you are at university or college, you need to put enough effort into your studies to succeed. However, if you have time constraints, we can provide professional writing help. Are you looking for expert online writers? Look no further: we will provide quality work at a low price.

Chapman University Digital Commons

Computational and Data Sciences (PhD) Dissertations

Below is a selection of dissertations from the Doctor of Philosophy in Computational and Data Sciences program in Schmid College that have been included in Chapman University Digital Commons. Additional dissertations from years prior to 2019 are available through the Leatherby Libraries' print collection or in ProQuest's Dissertations and Theses database.

Dissertations from 2024

Machine Learning and Geostatistical Approaches for Discovery of Weather and Climate Events Related to El Niño Phenomena, Sachi Perera