Center for Teaching and Learning

Step 4: Develop assessment criteria and rubrics

Just as we align assessments with the course learning objectives, we also align the grading criteria for each assessment with the goals of that unit of content or practice, especially for assignments that cannot be graded automatically the way multiple-choice tests can. Grading criteria articulate what is important in each assessment, what knowledge or skills students should be able to demonstrate, and how they can best communicate that to you. When you share grading criteria with students, you help them understand what to focus on and how to demonstrate their learning successfully. From good assessment criteria, you can develop a grading rubric.


Developing Your Assessment Criteria

Good assessment criteria are:

- Clear and easy to understand as a guide for students

- Attainable rather than beyond students’ grasp at this point in the course

- Significant in terms of the learning students should demonstrate

- Relevant in that they assess student learning toward the course objectives related to that assessment

To create your grading criteria, consider the following questions:

- What is the most significant content or knowledge students should be able to demonstrate understanding of at this point in the course?

- What specific skills, techniques, or applications should students be able to demonstrate at this point in the course?

- What secondary skills or practices are important for students to demonstrate in this assessment? (For example, critical thinking, public speaking, or writing, as well as more abstract qualities such as completeness, creativity, precision, or problem-solving ability.)

- Do the criteria align with the objectives for both the assessment and the course?

Once you have developed some ideas about the assessment’s grading criteria, double-check to make sure the criteria are observable, measurable, significant, and distinct from each other.

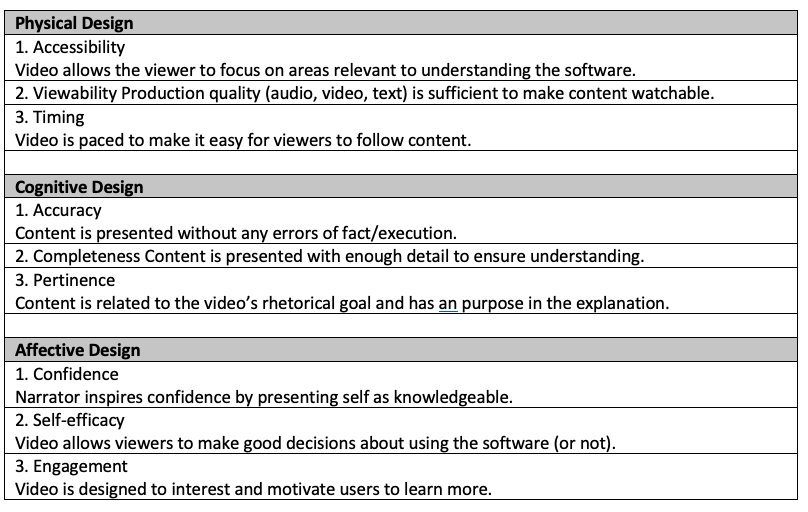

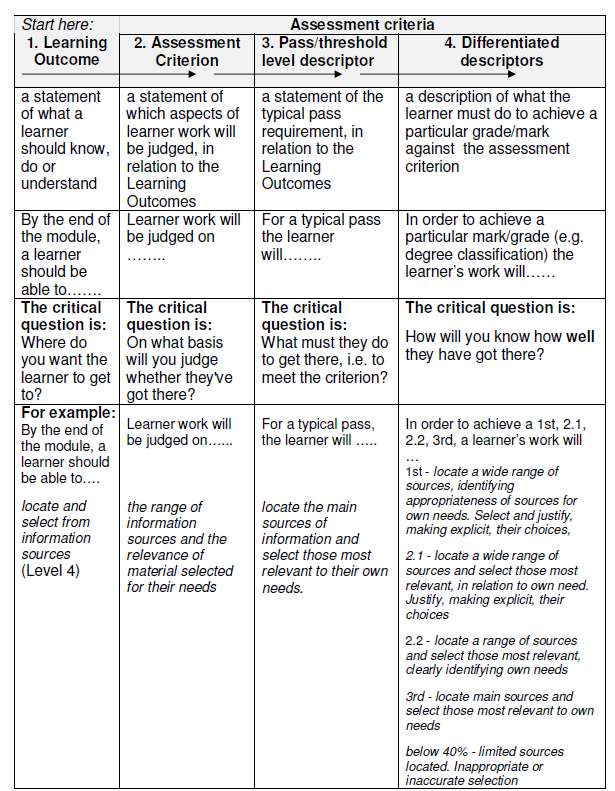

Assessment Criteria Example

Using the questions above, the performance criteria in this example were designed for an assignment in which students had to create an explainer video about a scientific concept for a specified audience. Each element can be observed and measured through both expert instructor feedback and peer feedback, and each is significant because it relates to the course and assignment learning goals.

Additional Assessment Criteria Resources

- Developing Grading Criteria (Vanderbilt University)

- Creating Grading Criteria (Brown University)

- Sample Criteria (Brown University)

- Developing Grading Criteria (Temple University)

Decide on a Rating Scale

The scale you use for an assessment depends on the type of learning you want students to demonstrate and the type of feedback you want to give them on that particular assignment or test. For example, for an introductory lab report early in the semester, you might be less concerned with advanced precision than with correct display of data and the tone of the report; grading heavily on copy editing or advanced analysis would therefore not be appropriate. The criteria would likely become more rigorous by the end of the semester, as you build toward the advanced level you want students to reach in the course.

Rating scales turn the grading criteria you have defined into levels of performance expectations that can then be expressed as a letter, number, or level. Common rating scales include:

- A, B, C, etc. (with or without + and -)

- A 100-point scale with defined cut-offs for letter grades if desired (e.g., B = 80-89; or B+ = 87-89, B = 83-86, B- = 80-82)

- Yes or no, present or not present (if the rubric is a checklist of items students must show)

- Below expectations, meets expectations, exceeds expectations

- Not demonstrated, poor, average, good, excellent
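The cut-off example above can be expressed as a lookup from the lowest score in each band. This is a minimal sketch: only the B band (B+ = 87-89, B = 83-86, B- = 80-82) comes from the example; the A, C, and D bands and the F floor of 60 are assumptions that repeat the same pattern, and `letter_grade` is a hypothetical helper name.

```python
# Each band is defined by its lowest score, checked from highest to lowest.
# Only the B-band cut-offs come from the example in the text; the other
# bands are assumed to follow the same pattern.
CUTOFFS = [
    (93, "A"), (90, "A-"),
    (87, "B+"), (83, "B"), (80, "B-"),
    (77, "C+"), (73, "C"), (70, "C-"),
    (67, "D+"), (63, "D"), (60, "D-"),
]


def letter_grade(score: float, plus_minus: bool = True) -> str:
    """Convert a 0-100 score to a letter grade.

    With plus_minus=False the modifier is dropped, so B+, B, and B- all
    become B (the plain "B = 80-89" scale).
    """
    if not 0 <= score <= 100:
        raise ValueError(f"score must be between 0 and 100, got {score}")
    for floor, letter in CUTOFFS:
        if score >= floor:
            return letter if plus_minus else letter[0]
    return "F"


print(letter_grade(88))                    # B+
print(letter_grade(85))                    # B
print(letter_grade(85, plus_minus=False))  # B
print(letter_grade(89.5))                  # B+
```

Defining each band by its floor rather than by a written range such as 87-89 leaves no gaps: a score of 89.5, which falls between the written ranges, still maps to exactly one grade.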

Once you have decided on a scale for the type of assignment and the learning you want students to demonstrate, use it to articulate what each level of performance looks like, such as what A-, B-, or C-level work would look like for each grading criterion. What would distinguish a student who earns a B from one who earns a C? What would distinguish a student who excelled in using a tool from a student who was clearly unfamiliar with it? Write these distinctions out in descriptive notes or brief paragraphs.

Ethical Implications of Rating Scales

Each of these types of rating scales has ethical implications. On a project worth 100 points, what is the objective difference between an 85 and an 87? On an exceeds/meets/does not meet scale, how can those levels be applied objectively? Different understandings of "fairness" can lead to ways of grading that might disadvantage some students. Learn more about equitable grading practices here.

Create the Rubric

Rubrics Can Make Grading More Effective

- Provide students with more complete and targeted feedback

- Make grading more timely by making it possible to give feedback soon after an assignment is submitted or presented

- Standardize assessment criteria among those assigning or assessing the same assignment

- Facilitate peer evaluation of early drafts of an assignment

Rubrics Can Help Student Learning

- Convey your expectations about the assignment through a classroom discussion of the rubric before students begin the assignment

- Level the playing field by clarifying academic expectations and assignments so that all students understand them regardless of their educational backgrounds (e.g., define what you expect analysis, critical thinking, or even introductions and conclusions to include)

- Promote student independence and motivation by enabling self-assessment

- Prepare students to use detailed feedback.

Rubrics Have Other Uses

- Track the development of student skills over several assignments

- Facilitate communication with others (e.g., TAs, the communication center, tutors, other faculty)

- Refine your own teaching (e.g., by responding to common areas of weakness and by gauging how well your teaching strategies prepare students for their assignments)

In this video, CTL's Dr. Carol Subino Sullivan discusses the value of the different types of rubrics.

Many non-test-based assessments might seem daunting to grade, but a well-designed rubric can alleviate some of that work. A rubric is a table that usually has these parts:

- a clear description of the learning activity being assessed

- criteria by which the activity will be evaluated

- a rating scale identifying different levels of performance

- descriptions of the performance a student must demonstrate to earn each level

When you define the criteria and what acceptable performance looks like for each of them ahead of time, you can compare student work against the rubric and assign grades or points for each criterion accordingly. Rubrics work very well for projects, papers/reports, and presentations, as well as in peer review, and good rubrics can save instructors and TAs time when grading.

Sample Rubrics

This final rubric for the scientific concept explainer video combines the assessment criteria and the holistic rating scale:

When using this rubric, which can be easily adapted to use a present/not present rating scale or a letter grade scale, you can use a combination of checking items off and adding written (or audio/video) comments in the different boxes to provide the student more detailed feedback.

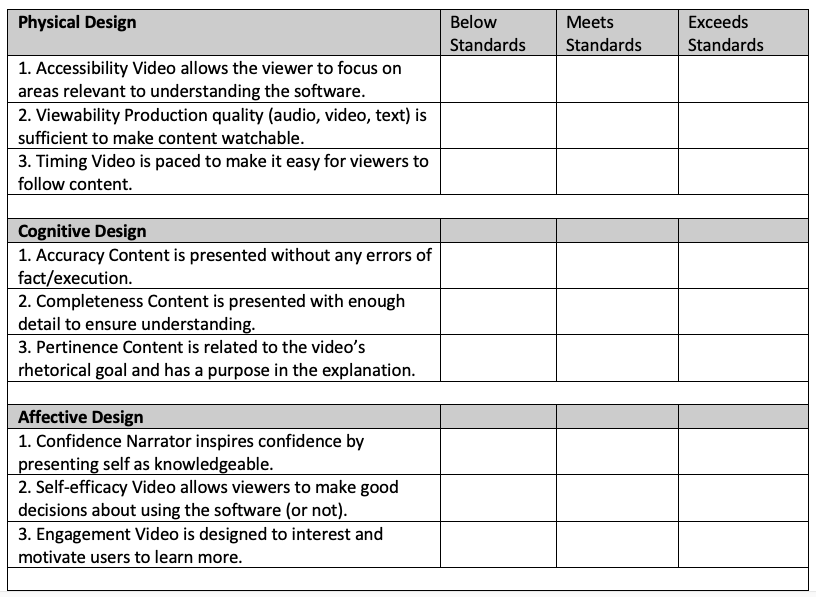

As a second example, this descriptive rubric was used to ask students to peer-assess and self-assess their contributions to a collaborative project. The rating scale is 1 through 4, and each description of performance builds on the previous one. (See the full rubric with scales for both product and process here. This rubric was designed for students working in teams to assess their own contributions to the project as well as their peers' contributions.)

Building a Rubric in Canvas Assignments

You can create rubrics for assignments and discussion boards in Canvas. Review these Canvas guides for tips and tricks:

- Rubrics Overview for Instructors

- What are rubrics?

- How do I align a rubric with a learning outcome?

- How do I add a rubric to an assignment?

- How do I add a rubric to a quiz?

- How do I add a rubric to a graded discussion?

- How do I use a rubric to grade submissions in SpeedGrader?

- How do I manage rubrics in a course?

Additional Resources for Developing Rubrics

- Designing Grading Rubrics (Brown University): Step-by-step process for creating an effective, fair, and efficient grading rubric.

- Creating and Using Rubrics (Carnegie Mellon University): Explores the basics of rubric design along with multiple examples for grading different types of assignments.

- Using Rubrics (Cornell University): Argument for the value of rubrics to support student learning.

- Rubrics (University of California Berkeley): Shares "fun facts" about rubrics and links to rubric guidelines from many higher ed organizations such as the AAC&U.

- Creating and Using Rubrics (Yale University): Introduces different styles of rubrics and ways to decide which style to use given your course's learning goals.

- Best Practices for Designing Effective Resources (Arizona State University): Comprehensive overview of rubric design principles.


Rubric Best Practices, Examples, and Templates

A rubric is a scoring tool that identifies the different criteria relevant to an assignment, assessment, or learning outcome and states the possible levels of achievement in a specific, clear, and objective way. Use rubrics to assess project-based student work including essays, group projects, creative endeavors, and oral presentations.

Rubrics can help instructors communicate expectations to students and assess student work fairly, consistently and efficiently. Rubrics can provide students with informative feedback on their strengths and weaknesses so that they can reflect on their performance and work on areas that need improvement.

How to Get Started


Step 1: Analyze the assignment

The first step in the rubric creation process is to analyze the assignment or assessment for which you are creating a rubric. To do this, consider the following questions:

- What is the purpose of the assignment and your feedback? What do you want students to demonstrate through the completion of this assignment (i.e., what are the learning objectives measured by it)? Is it a summative assessment, or will students use the feedback to create an improved product?

- Does the assignment break down into different or smaller tasks? Are these tasks as important as the main assignment?

- What would an “excellent” assignment look like? An “acceptable” assignment? One that still needs major work?

- How detailed do you want the feedback you give students to be? Do you want/need to give them a grade?

Step 2: Decide what kind of rubric you will use

Types of rubrics: holistic, analytic/descriptive, single-point

Holistic Rubric. A holistic rubric includes all the criteria (such as clarity, organization, mechanics, etc.) to be considered together and included in a single evaluation. With a holistic rubric, the rater or grader assigns a single score based on an overall judgment of the student’s work, using descriptions of each performance level to assign the score.

Advantages of holistic rubrics:

- Can place an emphasis on what learners can demonstrate rather than what they cannot

- Save grader time by minimizing the number of evaluations to be made for each student

- Can be used consistently across raters, provided they have all been trained

Disadvantages of holistic rubrics:

- Provide less specific feedback than analytic/descriptive rubrics

- Can be difficult to choose a score when a student’s work is at varying levels across the criteria

- Any weighting of criteria cannot be indicated in the rubric

Analytic/Descriptive Rubric. An analytic or descriptive rubric often takes the form of a table with the criteria listed in the left column and with levels of performance listed across the top row. Each cell contains a description of what the specified criterion looks like at a given level of performance. Each of the criteria is scored individually.

Advantages of analytic rubrics:

- Provide detailed feedback on areas of strength or weakness

- Each criterion can be weighted to reflect its relative importance

Disadvantages of analytic rubrics:

- More time-consuming to create and use than a holistic rubric

- May not be used consistently across raters unless the cells are well defined

- May result in giving less personalized feedback
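The analytic structure described above (criteria in rows, performance levels in columns, each criterion scored individually and optionally weighted) maps naturally onto a small data structure. The sketch below is illustrative only: the criteria names, weights, level labels, and the `analytic_score` function are hypothetical, and it assumes the weighted total is reported as a percentage of the maximum possible score.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    weight: float            # relative importance; weights need not sum to 1
    levels: dict[int, str]   # points for a level -> description of that level


def analytic_score(rubric: list[Criterion], ratings: dict[str, int]) -> float:
    """Score each criterion separately, then combine them as a weighted percentage."""
    total_weight = sum(c.weight for c in rubric)
    earned = 0.0
    for c in rubric:
        points = ratings[c.name]
        if points not in c.levels:
            raise ValueError(f"{points} is not a defined level for {c.name!r}")
        # Fraction of this criterion's top level, scaled by its weight.
        earned += c.weight * points / max(c.levels)
    return 100 * earned / total_weight


# Hypothetical rubric for an explainer video, rated on a 1-4 scale.
# In a real analytic rubric each criterion would have its own cell
# descriptions; one shared set keeps the sketch short.
levels = {
    4: "Exceeds expectations",
    3: "Meets expectations",
    2: "Approaching expectations",
    1: "Below expectations",
}
rubric = [
    Criterion("Scientific accuracy", weight=2, levels=levels),
    Criterion("Fit for audience", weight=1, levels=levels),
    Criterion("Production quality", weight=1, levels=levels),
]
ratings = {"Scientific accuracy": 4, "Fit for audience": 3, "Production quality": 2}
print(analytic_score(rubric, ratings))  # 81.25
```

Here accuracy counts double, so a 4 on accuracy contributes as much as top marks on both of the other criteria combined.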

Single-Point Rubric. A single-point rubric breaks down the components of an assignment into different criteria, but instead of describing different levels of performance, it describes only the “proficient” level. Feedback space is provided for instructors to give individualized comments that help students improve and/or show where they excelled beyond the proficiency descriptors.

Advantages of single-point rubrics:

- Easier to create than an analytic/descriptive rubric

- Students may be more likely to read the descriptors

- Areas of concern and excellence are open-ended

- May remove a focus on the grade/points

- May increase student creativity in project-based assignments

Disadvantages of single-point rubrics:

- Requires more work for instructors writing feedback

Step 3 (Optional): Look for templates and examples

You might search Google for “Rubric for persuasive essay at the college level” to see whether there are publicly available examples to start from. Ask your colleagues if they have used a rubric for a similar assignment. Some examples are also available at the end of this article. These rubrics can be a great starting point, but work through steps 4, 5, and 6 below to ensure that the rubric matches your assignment description, learning objectives, and expectations.

Step 4: Define the assignment criteria

Make a list of the knowledge and skills you are measuring with the assignment/assessment. Refer to your stated learning objectives, the assignment instructions, past examples of student work, etc. for help.

Helpful strategies for defining grading criteria:

- Collaborate with co-instructors, teaching assistants, and other colleagues

- Brainstorm and discuss with students

- Evaluate each criterion: Can it be observed and measured? Is it important and essential? Is it distinct from the other criteria? Is it phrased in precise, unambiguous language?

- Revise the criteria as needed

- Consider whether some criteria are more important than others, and how you will weight them.

Step 5: Design the rating scale

Most rating scales include between 3 and 5 levels. Consider the following questions when designing your rating scale:

- Given what students are able to demonstrate in this assignment/assessment, what are the possible levels of achievement?

- How many levels would you like to include? (More levels mean more detailed descriptions.)

- Will you use numbers and/or descriptive labels for each level of performance? (For example, 5, 4, 3, 2, 1 and/or Exceeds expectations, Accomplished, Proficient, Developing, Beginning, etc.)

- Don’t use too many columns, and recognize that some criteria can have more columns than others. The rubric needs to be comprehensible and organized. Pick the right number of columns so that the criteria flow logically and naturally across levels.

Step 6: Write descriptions for each level of the rating scale

Artificial intelligence tools like ChatGPT can be useful for drafting a rubric. Craft the prompt you give the AI assistant carefully to make sure you get what you want: for example, you might include the assignment description, the criteria you feel are important, and the number of performance levels you want. Use the results as a starting point, and adjust the descriptions as needed.

Building a rubric from scratch

For a single-point rubric, describe what would be considered “proficient,” i.e., B-level work. You might also include suggestions for students outside of the actual rubric about how they might surpass proficient-level work.

For analytic and holistic rubrics, create statements of expected performance at each level of the rubric.

- Consider what descriptor is appropriate for each criterion, e.g., presence vs. absence, complete vs. incomplete, many vs. none, major vs. minor, consistent vs. inconsistent, always vs. never. If you describe an indicator at one level, you will need to describe it at every level.

- You might start with the top/exemplary level. What does it look like when a student has achieved excellence for every criterion? Then look at the “bottom” level. What does it look like when a student has not achieved the learning goals in any way? Then complete the in-between levels.

- For an analytic rubric, do this for each criterion so that every cell in the table is filled. These descriptions help students understand your expectations and how their performance measures up to them.
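The rule that an indicator described at one level must be described at every level, so that every cell of an analytic rubric is filled, can be checked mechanically before a rubric is shared. A minimal sketch, assuming the draft rubric is stored as a mapping from each criterion to its level descriptions; `missing_cells` and the sample criteria are hypothetical.

```python
def missing_cells(rubric: dict[str, dict[str, str]],
                  levels: list[str]) -> list[tuple[str, str]]:
    """Return every (criterion, level) pair whose description is absent or blank."""
    gaps = []
    for criterion, cells in rubric.items():
        for level in levels:
            if not cells.get(level, "").strip():
                gaps.append((criterion, level))
    return gaps


levels = ["Exemplary", "Proficient", "Developing", "Beginning"]
draft = {
    "Thesis": {
        "Exemplary": "States a specific, arguable claim that frames the whole paper",
        "Proficient": "States an arguable claim that frames most of the paper",
        "Developing": "States a claim that is broad or only partly arguable",
        "Beginning": "No identifiable claim",
    },
    "Evidence": {
        "Exemplary": "Every claim is supported by relevant, credible sources",
        "Proficient": "Most claims are supported by relevant, credible sources",
        "Beginning": "   ",  # blank cell, still counts as missing
    },
}
print(missing_cells(draft, levels))
# [('Evidence', 'Developing'), ('Evidence', 'Beginning')]
```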

Well-written descriptions:

- Describe observable and measurable behavior

- Use parallel language across the scale

- Indicate the degree to which the standards are met

Step 7: Create your rubric

Create your rubric in a table or spreadsheet in Word, Google Docs, Sheets, etc., and then transfer it by typing it into Moodle. You can also use online tools to create the rubric, but you will still have to type the criteria, indicators, levels, etc., into Moodle. Rubric creators: Rubistar, iRubric

Step 8: Pilot-test your rubric

Before using your rubric in a live course, obtain feedback from:

- Teaching assistants

Try out your new rubric on a sample of student work. After you pilot-test your rubric, analyze the results to consider its effectiveness and revise accordingly.

- Limit the rubric to a single page for reading and grading ease

- Use parallel language. Use similar language and syntax from column to column. Make sure that the rubric can be easily read from left to right or vice versa.

- Use student-friendly language. Make sure the language is appropriate to students' level. If you use academic language or concepts, you will need to teach those concepts.

- Share and discuss the rubric with your students. Students should understand that the rubric is there to help them learn, reflect, and self-assess. If students use a rubric, they will understand the expectations and their relevance to learning.

- Consider scalability and reusability of rubrics. Create rubric templates that you can alter as needed for multiple assignments.

- Maximize the descriptiveness of your language. Avoid words like “good” and “excellent.” For example, instead of saying, “uses excellent sources,” you might describe what makes a resource excellent so that students will know. You might also consider reducing the reliance on quantity, such as a number of allowable misspelled words. Focus instead, for example, on how distracting any spelling errors are.

- Example of an analytic rubric for a final paper

- Example of a holistic rubric for a final paper

- Single-point rubric

More examples:

- Single Point Rubric Template (variation)

- Analytic Rubric Template (make a copy to edit)

- A Rubric for Rubrics

- Bank of Online Discussion Rubrics in different formats

- Mathematical Presentations Descriptive Rubric

- Math Proof Assessment Rubric

- Kansas State Sample Rubrics

- Design Single Point Rubric

Technology Tools: Rubrics in Moodle

- Moodle Docs: Rubrics

- Moodle Docs: Grading Guide (use for single-point rubrics)

Tools with rubrics (other than Moodle)

- Google Assignments

- Turnitin Assignments: Rubric or Grading Form

Other resources

- DePaul University (n.d.). Rubrics.

- Gonzalez, J. (2014). Know your terms: Holistic, Analytic, and Single-Point Rubrics. Cult of Pedagogy.

- Goodrich, H. (1996). Understanding rubrics. Teaching for Authentic Student Performance, 54(4), 14-17. Retrieved from

- Miller, A. (2012). Tame the beast: tips for designing and using rubrics.

- Ragupathi, K., Lee, A. (2020). Beyond Fairness and Consistency in Grading: The Role of Rubrics in Higher Education. In: Sanger, C., Gleason, N. (eds) Diversity and Inclusion in Global Higher Education. Palgrave Macmillan, Singapore.


Am J Pharm Educ, v.74(9); 2010 Nov 10

A Standardized Rubric to Evaluate Student Presentations

Michael J. Peeters

a University of Toledo College of Pharmacy

Eric G. Sahloff

Gregory E. Stone

b University of Toledo College of Education

To design, implement, and assess a rubric to evaluate student presentations in a capstone doctor of pharmacy (PharmD) course.

A 20-item rubric was designed and used to evaluate student presentations in a capstone fourth-year course in 2007-2008, and then revised and expanded to 25 items and used to evaluate student presentations for the same course in 2008-2009. Two faculty members evaluated each presentation.

The Many-Facets Rasch Model (MFRM) was used to determine the rubric's reliability, quantify the contribution of evaluator harshness/leniency in scoring, and assess grading validity by comparing the current grading method with a criterion-referenced grading scheme. In 2007-2008, rubric reliability was 0.98, with a separation of 7.1 and 4 rating scale categories. In 2008-2009, MFRM analysis suggested 2 of 98 grades be adjusted to eliminate evaluator leniency, while a further criterion-referenced MFRM analysis suggested 10 of 98 grades should be adjusted.

The evaluation rubric was reliable and evaluator leniency appeared minimal. However, a criterion-referenced re-analysis suggested a need for further revisions to the rubric and evaluation process.

INTRODUCTION

Evaluations are important in the process of teaching and learning. In health professions education, performance-based evaluations are identified as having “an emphasis on testing complex, ‘higher-order’ knowledge and skills in the real-world context in which they are actually used.” 1 Objective structured clinical examinations (OSCEs) are a common, notable example. 2 On Miller's pyramid, a framework used in medical education for measuring learner outcomes, “knows” is placed at the base of the pyramid, followed by “knows how,” then “shows how,” and finally, “does” is placed at the top. 3 Based on Miller's pyramid, evaluation formats that use multiple-choice testing focus on “knows” while an OSCE focuses on “shows how.” Just as performance evaluations remain highly valued in medical education, 4 authentic task evaluations in pharmacy education may be better indicators of future pharmacist performance. 5 Much attention in medical education has been focused on reducing the unreliability of high-stakes evaluations. 6 Regardless of educational discipline, high-stakes performance-based evaluations should meet educational standards for reliability and validity. 7

PharmD students at University of Toledo College of Pharmacy (UTCP) were required to complete a course on presentations during their final year of pharmacy school and then give a presentation that served as both a capstone experience and a performance-based evaluation for the course. Pharmacists attending the presentations were given Accreditation Council for Pharmacy Education (ACPE)-approved continuing education credits. An evaluation rubric for grading the presentations was designed to allow multiple faculty evaluators to objectively score student performances in the domains of presentation delivery and content. Given the pass/fail grading procedure used in advanced pharmacy practice experiences, passing this presentation-based course and subsequently graduating from pharmacy school were contingent upon this high-stakes evaluation. As a result, the reliability and validity of the rubric used and the evaluation process needed to be closely scrutinized.

Each year, about 100 students completed presentations and at least 40 faculty members served as evaluators. With the use of multiple evaluators, a question of evaluator leniency often arose (ie, whether evaluators used the same criteria for evaluating performances or whether some evaluators graded easier or more harshly than others). At UTCP, opinions among some faculty evaluators and many PharmD students implied that evaluator leniency in judging the students' presentations significantly affected specific students' grades and ultimately their graduation from pharmacy school. While it was plausible that evaluator leniency was occurring, the magnitude of the effect was unknown. Thus, this study was initiated partly to address this concern over grading consistency and scoring variability among evaluators.

Because both students' presentation style and content were deemed important, each item of the rubric was weighted the same across delivery and content. However, because there were more categories related to delivery than content, an additional faculty concern was that students feasibly could present poor content but have an effective presentation delivery and pass the course.

The objectives for this investigation were: (1) to describe and optimize the reliability of the evaluation rubric used in this high-stakes evaluation; (2) to identify the contribution and significance of evaluator leniency to evaluation reliability; and (3) to assess the validity of this evaluation rubric within a criterion-referenced grading paradigm focused on both presentation delivery and content.

DESIGN

The University of Toledo's Institutional Review Board approved this investigation. This study investigated performance evaluation data for an oral presentation course for final-year PharmD students from 2 consecutive academic years (2007-2008 and 2008-2009). The course was taken during the fourth year (P4) of the PharmD program and was a high-stakes, performance-based evaluation. The goal of the course was to serve as a capstone experience, enabling students to demonstrate advanced drug literature evaluation and verbal presentation skills through the development and delivery of a 1-hour presentation. These presentations were to be on a current pharmacy practice topic and of sufficient quality for ACPE-approved continuing education. This experience allowed students to demonstrate their competencies in literature searching, literature evaluation, and application of evidence-based medicine, as well as their oral presentation skills. Students worked closely with a faculty advisor to develop their presentation. Each class (2007-2008 and 2008-2009) was randomly divided, with half of the students taking the course and completing their presentation and evaluation in the fall semester and the other half in the spring semester. To accommodate such a large number of students presenting for 1 hour each, it was necessary to use multiple rooms with presentations taking place concurrently over 2.5 days for both the fall and spring sessions of the course. Two faculty members independently evaluated each student presentation using the provided evaluation rubric. The 2007-2008 presentations involved 104 PharmD students and 40 faculty evaluators, while the 2008-2009 presentations involved 98 students and 46 faculty evaluators.

After vetting by the pharmacy practice faculty, the initial rubric used in 2007-2008 focused on describing explicit, specific evaluation criteria such as amounts of eye contact, voice pitch/volume, and descriptions of study methods. The evaluation rubric used in 2008-2009 was similar to the initial rubric, but with 5 items added (Figure 1). The evaluators rated each item (eg, eye contact) based on their perception of the student's performance. The 25 rubric items had equal weight (ie, 4 points each), but each item received a rating from the evaluator of 1 to 4 points. Thus, only 4 rating categories were included, as has been recommended in the literature. 8 However, some evaluators created an additional 3 rating categories by marking lines between the 4 ratings to signify half points (ie, 1.5, 2.5, and 3.5). For example, for the “notecards/notes” item in Figure 1, a student looked at her notes sporadically during her presentation, but neither distractingly nor often enough to warrant a score of 3 in the faculty evaluator's opinion, so a 3.5 was given. Thus, a 7-category rating scale (1, 1.5, 2, 2.5, 3, 3.5, and 4) was analyzed. Each independent evaluator's ratings for the 25 items were summed to form a score (0-100%). The 2 evaluators' scores were then averaged, and a letter grade was assigned based on the following scale: >90% = A, 80%-89% = B, 70%-79% = C, <70% = F.

Rubric used to evaluate student presentations given in a 2008-2009 capstone PharmD course.
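The scoring procedure described above (25 items rated 1 to 4, each evaluator's ratings summed to a percentage, the 2 evaluators' scores averaged, then converted to a letter grade) can be written out as a short calculation. This is a sketch, not the authors' code: the function names are hypothetical, and because the published scale leaves exactly 90% and scores such as 89.5% unassigned, it assumes A begins at 90, B at 80, and C at 70.

```python
N_ITEMS = 25      # items on the 2008-2009 rubric
MAX_RATING = 4    # each item is worth 4 points, so 25 x 4 = 100


def evaluator_score(ratings: list[float]) -> float:
    """Sum one evaluator's item ratings into a 0-100 score.

    Half-point ratings (1.5, 2.5, 3.5) are accepted because some evaluators used them.
    """
    if len(ratings) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} ratings, got {len(ratings)}")
    if any(not 1 <= r <= MAX_RATING for r in ratings):
        raise ValueError("each rating must be between 1 and 4")
    return sum(ratings)  # already a percentage because the maximum sum is 100


def presentation_grade(ratings_1: list[float],
                       ratings_2: list[float]) -> tuple[float, str]:
    """Average the two evaluators' scores and assign a letter grade."""
    mean = (evaluator_score(ratings_1) + evaluator_score(ratings_2)) / 2
    # Assumed boundaries: the published ranges are >90 A, 80-89 B, 70-79 C, <70 F.
    if mean >= 90:
        return mean, "A"
    if mean >= 80:
        return mean, "B"
    if mean >= 70:
        return mean, "C"
    return mean, "F"


evaluator_a = [4] * 20 + [3] * 5    # sums to 95
evaluator_b = [4] * 15 + [3] * 10   # sums to 90
print(presentation_grade(evaluator_a, evaluator_b))  # (92.5, 'A')
```

Because the lowest rating on each item is 1, a summed score in this sketch cannot fall below 25, even though the scale is described as 0-100%.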

EVALUATION AND ASSESSMENT

Rubric Reliability

To measure rubric reliability, iterative analyses were performed on the evaluations using the Many-Facets Rasch Model (MFRM) following the 2007-2008 data collection period. While Cronbach's alpha is the most commonly reported coefficient of reliability, reporting it as a single number without supplementary information can give an incomplete picture of reliability. 9 - 11 Because of its formula, Cronbach's alpha can be increased simply by adding more repetitive rubric items or more rating scale categories, even when no further useful information has been added. The MFRM also reports separation, which is calculated differently from Cronbach's alpha and provides another source of reliability information. Unlike Cronbach's alpha, separation does not appear to be inflated by adding redundant items. From a measurement perspective, a higher separation value is better than a lower one because students are being divided into meaningful groups after measurement error has been accounted for. Separation can be thought of as the number of units on a ruler: the more units the ruler has, the larger the range of performance levels that can be measured among students. For example, a separation of 4.0 suggests 4 graduations, such that a grade of A is distinctly different from a grade of B, which in turn is different from a grade of C or of F. In measuring performances, a separation of 9.0 is better than 5.5, just as a separation of 7.0 is better than 6.5; a higher separation coefficient suggests that student performance potentially could be divided into a larger number of meaningfully separate groups.

The rating scale can have substantial effects on reliability, 8 and describing how a rating scale functions is a unique aspect of the MFRM. In successive analysis iterations of the 2007-2008 data, rating scale categories were collapsed consecutively until no further improvement in reliability and/or separation was found. The last iteration that improved reliability or separation was deemed the optimal rating scale for this evaluation rubric.

In the 2007-2008 analysis, iterations of the data were run through the MFRM. Although the rubric included only 4 rating scale categories, some faculty members inserted 3 in-between categories, so 7 categories had to be included in the analysis. This initial 7-category analysis yielded a reliability coefficient (similar to Cronbach's alpha) of 0.98 and a separation coefficient of 6.31, denoting 6 distinctly separate groups of students based on the items. Rating scale categories were then collapsed, with the “in-between” categories merged into adjacent full-point categories. Table 1 shows the reliability and separation for each iteration as the rating scale was collapsed. The optimal evaluation rubric maintained a reliability of 0.98, but separation improved to 7.10, or 7 distinctly separate groups of students based on the items. Reducing the rating scale thus added another distinctly separate group, while Cronbach's alpha did not change even though the number of rating scale categories was reduced. Table 1 also shows the stepwise, sequential pattern across the final 4 rating scale categories analyzed. Informed by the 2007-2008 results, the 2008-2009 evaluation rubric (Figure 1) used 4 rating scale categories, and reliability remained high.

Table 1. Evaluation Rubric Reliability and Separation with Iterations While Collapsing Rating Scale Categories.

a Reliability coefficient: the proportion of variance in rater responses that is reproducible (ie, Cronbach's alpha).

b Separation is a coefficient of item standard deviation divided by average measurement error and is an additional reliability coefficient.

c Optimal number of rating scale categories based on the highest reliability (0.98) and separation (7.1) values.
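Footnote b can be made concrete. A common Rasch convention, assumed here, computes separation (G) as the error-adjusted ("true") standard deviation of the measures divided by the root mean square measurement error (taking "average measurement error" to mean that RMSE), with reliability equal to true variance over observed variance, which works out to G²/(1 + G²). The function names are hypothetical and are not Facets output fields.

```python
import math


def separation_and_reliability(measures, standard_errors):
    """Rasch-style separation (G) and reliability from measures and their standard errors."""
    n = len(measures)
    mean = sum(measures) / n
    observed_var = sum((m - mean) ** 2 for m in measures) / n
    mse = sum(se ** 2 for se in standard_errors) / n   # mean squared measurement error
    true_var = max(observed_var - mse, 0.0)             # variance left after removing error
    separation = math.sqrt(true_var / mse)              # true SD / RMSE
    reliability = true_var / observed_var
    return separation, reliability


def reliability_from_separation(g):
    """Reliability implied by a separation value: G^2 / (1 + G^2)."""
    return g * g / (1 + g * g)


# Separations reported in this study and the reliabilities they imply.
for g in (6.31, 7.10, 1.85):
    print(g, round(reliability_from_separation(g), 2))
# 6.31 0.98
# 7.1 0.98
# 1.85 0.77
```

The implied values match the reported pairs (0.98 with separations of 6.31 and 7.10 for students, 0.77 with 1.85 for evaluators), which is consistent with this convention. It also shows why reliability barely moves at the top of the range while separation still grows.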

Evaluator Leniency

Harsh raters (ie, hawks) and lenient raters (ie, doves) were described by Fleming and colleagues over half a century ago, 6 and have been shown to be an issue in more recent studies as well. 12-14 Shortly after the 2008-2009 data were collected, the evaluations by multiple faculty evaluators were collated and analyzed in the MFRM to identify possible inconsistent scoring. Traditional interrater reliability does not address this issue, but the MFRM had previously been used to illustrate evaluator leniency on licensing examinations for medical students and medical residents in the United Kingdom. 13 Thus, accounting for evaluator leniency may prove important to grading consistency (and reliability) in a course that uses multiple evaluators. Besides identifying evaluator leniency, the MFRM also corrects for this variability. For comparison, course grades were calculated by summing the evaluators' actual ratings (as discussed in the Design section) and compared with the MFRM-adjusted grades to quantify the degree of evaluator leniency in this evaluation.

Measures created from the MFRM analysis were converted to percentages using a common linear test-equating procedure based on the mean and standard deviation of the dataset. 15 Student letter grades were then assigned to these percentages using the same traditional method used in 2007-2008 (ie, ≥90% = A, 80%-89% = B, 70%-79% = C, <70% = F). Letter grades calculated using the revised rubric and the MFRM were then compared with letter grades calculated using the previous rubric and course grading method.
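The conversion can be sketched as follows, assuming the "linear test-equating procedure" means rescaling the measures so that their mean and standard deviation match a target percentage scale. The text does not state the target values, so the mean and SD used here (85% and 5%) and the logit measures are invented for illustration. The grade cut points follow the text.

```python
from statistics import mean, pstdev


def linear_equate(measures, target_mean, target_sd):
    """Linearly rescale measures so they have the target mean and (population) SD."""
    m, s = mean(measures), pstdev(measures)
    return [target_mean + target_sd * (x - m) / s for x in measures]


def letter_grade(percent):
    """Cut points used in the course: >=90 A, 80-89 B, 70-79 C, <70 F."""
    if percent >= 90:
        return "A"
    if percent >= 80:
        return "B"
    if percent >= 70:
        return "C"
    return "F"


# Hypothetical MFRM student measures (logits) and a hypothetical target scale.
measures = [-1.0, 0.0, 1.0, 2.0]
percents = linear_equate(measures, target_mean=85.0, target_sd=5.0)
print([round(p, 1) for p in percents])      # [78.3, 82.8, 87.2, 91.7]
print([letter_grade(p) for p in percents])  # ['C', 'B', 'B', 'A']
```

Because the transformation is linear, it preserves the rank order and relative spacing of the MFRM measures; only the scale changes.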

In the analysis of the 2008-2009 data, the interrater reliability of the letter grades, comparing the 2 independent faculty evaluations of each presentation, was 0.98 by Cohen's kappa. However, the 3-facet MFRM revealed significant variation in grading. The interaction of evaluator leniency with student ability and item difficulty was significant (chi-square, p < 0.01). For evaluators, the MFRM showed a reliability of 0.77 and a separation of 1.85 (ie, almost 2 groups of evaluators). When the MFRM student ability measures were scaled to letter grades and compared with course letter grades, 2 B's became A's; evaluator leniency therefore accounted for a 2% change in letter grades (ie, 2 of 98 grades).
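For readers less familiar with Cohen's kappa, the sketch below computes it for two evaluators' letter grades: observed agreement, corrected for the agreement expected by chance from each evaluator's grade frequencies. The grades are invented and `cohens_kappa` is a hypothetical helper. The study's own value (0.98) came from its 2008-2009 data.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters assigning categorical labels to the same items."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same category independently.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / n ** 2
    return (observed - expected) / (1 - expected)


evaluator_1 = ["A", "A", "B", "B", "C", "C", "A", "B"]
evaluator_2 = ["A", "A", "B", "B", "C", "B", "A", "B"]
print(round(cohens_kappa(evaluator_1, evaluator_2), 3))  # 0.805
```

Kappa only measures how often paired evaluators land in the same final category. It does not estimate each evaluator's overall severity, which is what the evaluator facet of the MFRM adds.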

Validity and Grading

Explicit criterion-referenced grading standards are recommended for higher evaluation validity. 3, 16-18 The course coordinator completed 3 additional evaluations of a hypothetical student presentation, each rating the minimal performance expected for an A, B, or C letter grade. These evaluations were added to the other 196 evaluations (2 evaluators × 98 students) from 2008-2009 and analyzed in the MFRM, and the resulting report gave specific cutoff percentage scores for each letter grade. Unlike the traditional scoring method, which assigns all items equal weight, the MFRM ordered evaluation items from those more difficult for students (given more weight) to those less difficult for students (given less weight). These criterion-referenced letter grades were compared with the grades generated by the traditional grading process.

When the MFRM data were rerun with the criterion-referenced evaluations added to the dataset, 10% of letter grades changed (ie, 10 of 98 grades). All 10 of these grades were lowered, and 1 fell below a C, the minimum standard, suggesting a failing performance. Qualitative feedback from faculty evaluators agreed with this criterion-referenced indication of a failing performance.

Measurement Model

Within modern test theory, the Rasch Measurement Model maps examinee ability and evaluation item difficulty onto a common scale. Items are not arbitrarily given the same value (ie, 1 point) but vary based on how difficult or easy the items were for examinees. The Rasch measurement model has been used frequently in educational research, 19 by numerous high-stakes testing professional bodies such as the National Board of Medical Examiners, 20 and by various state-level departments of education for standardized secondary education examinations. 21 The Rasch measurement model itself has rigorous construct validity and reliability. 22 A 3-facet MFRM allows an evaluator variable to be added to the student ability and item difficulty variables that are routine in other Rasch measurement analyses. Just as multiple regression accounts for additional variables compared with simple bivariate regression, the MFRM is a multivariable variant of the Rasch measurement model; it was applied in this study using the Facets software (Linacre, Chicago, IL). The MFRM is ideal for performance-based evaluations that involve independent evaluators or judges. 8, 23 For both yearly cohorts in this investigation, evaluation rubric data were collated and entered into the MFRM for separate, successive analyses. The MFRM output report included a chi-square test for differences in evaluator leniency, with alpha set at 0.05.
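The 3-facet rating scale model is commonly written (following Linacre) as ln(P_k / P_(k−1)) = B_n − D_i − C_j − F_k, where B_n is student ability, D_i item difficulty, C_j evaluator severity, and F_k the threshold for moving from category k − 1 to k. The sketch below is an illustration under that assumed formulation, not how the Facets software estimates parameters. It turns hypothetical parameter values into category probabilities and shows that a harsher evaluator lowers a student's expected rating.

```python
import math


def mfrm_category_probs(ability, difficulty, severity, thresholds):
    """Category probabilities under a rating-scale many-facet Rasch model.

    Categories are 0..len(thresholds); thresholds[k-1] is F_k, the step from k-1 to k.
    """
    cumulative = [0.0]                      # log-numerator for category 0
    for f in thresholds:
        cumulative.append(cumulative[-1] + (ability - difficulty - severity - f))
    denom = sum(math.exp(v) for v in cumulative)
    return [math.exp(v) / denom for v in cumulative]


def expected_rating(probs):
    """Expected category (0-based) given the category probabilities."""
    return sum(k * p for k, p in enumerate(probs))


# Hypothetical values (logits) for a 4-category scale, i.e., 3 thresholds.
steps = [-1.5, 0.0, 1.5]
lenient = mfrm_category_probs(ability=1.0, difficulty=0.0, severity=-0.5, thresholds=steps)
harsh = mfrm_category_probs(ability=1.0, difficulty=0.0, severity=0.5, thresholds=steps)
print(round(expected_rating(lenient), 2), round(expected_rating(harsh), 2))
```

Because severity enters the model alongside ability and difficulty, estimating it lets the MFRM report student measures that are adjusted for which evaluator happened to rate them.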

The presentation rubric was reliable. Results from the 2007-2008 analysis showed that the number of rating scale categories affected the reliability of this rubric and that using only 4 rating scale categories appeared best for measurement. While a 10-point Likert-like scale may commonly be used in patient care settings, such as in quantifying pain, most people cannot reliably process more than 7 points or categories. 24 Presumably, when more than 7 categories are used, the categories beyond 7 either are not used or are collapsed by respondents into fewer than 7 categories. Five-point scales are commonly encountered, but an odd number of categories can be problematic to interpret and is not recommended. 25 Responses in the middle category could denote a truly perceived average or neutral response, responder indecisiveness, or even confusion over the question. Therefore, removing the middle category appears advantageous and is supported by our results.

With the 2008-2009 data, the MFRM identified evaluator leniency, with some evaluators grading more harshly and others more leniently. Although evaluator leniency was present in the dataset, the MFRM correction suggested only a couple of grade changes, so leniency did not appear to play a substantial role in the evaluation of this course at this time.

Performance evaluation instruments are either holistic or analytic rubrics. 26 The evaluation instrument used in this investigation exemplified an analytic rubric, which elicits specific observations and often demonstrates high reliability. However, Norman and colleagues point out a conundrum: drastically increasing the number of evaluation rubric items (creating something similar to a checklist) can augment a reliability coefficient while appearing to dissociate it from the rubric's validity. 27 Validity may be more than the sum of behaviors on evaluation rubric items. 28 Having numerous, highly specific evaluation items appears to undermine the rubric's function. With this investigation's evaluation rubric and its numerous items for both presentation style and presentation content, equal numeric weighting of items can in fact allow student presentations to receive a passing score while falling short of the course objectives, as was shown in the present investigation. Compared with analytic rubrics, holistic rubrics often demonstrate lower yet acceptable reliability while offering a more explicit connection to course objectives. A summative, holistic evaluation of presentations may improve validity by allowing expert evaluators to give their “gut feeling” on whether a performance is “outstanding,” “sufficient,” “borderline,” or “subpar” for each dimension of presentation delivery and content. A holistic rubric that integrates the criteria of the analytic rubric (Figure 1) for evaluators to reflect on, but keeps a summary, overall evaluation for each dimension (delivery/content) of the performance, may allow the benefits of each type of rubric to be used advantageously. This approach has been demonstrated with objective structured clinical examinations (OSCEs) in medical education, where checklists of completed items (ie, yes/no) at an OSCE station have been successfully replaced with a few reliable global impression rating scales. 29-31

Alternatively, because the MFRM was used in the current study, an item-weighting approach could be used with the analytic rubric. That is, weighting based on the difficulty of each rubric item could suggest how many points that item should be worth; eg, some items would be worth 0.25 points, while others would be worth 0.5 points or 1 point (Table 2). As could be expected, the more complex the rubric scoring becomes, the less feasible the rubric is to use. This was the main reason the course coordinator did not choose this revision approach following this study. In addition, it does not address the conundrum that the performance may be more than the summation of behavior items in the Figure 1 rubric. The current study cannot suggest which approach would be better, as each has its merits and pitfalls.
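The trade-off can be illustrated with a small calculation. The sketch assumes a hypothetical 6-item rubric rated 1-4, with two harder content items worth 1 point, two items worth 0.5, and two worth 0.25, loosely echoing the 0.25/0.5/1 tiers mentioned above; these are not the Table 2 weightings. `rubric_percent` is a hypothetical helper.

```python
def rubric_percent(ratings, weights, max_rating=4):
    """Weighted rubric score as a percentage; each rating is scaled by max_rating."""
    earned = sum(w * r / max_rating for r, w in zip(ratings, weights))
    return 100 * earned / sum(weights)


# Hypothetical 6-item rubric: items 1-2 are the hardest (content), items 3-6 easier (delivery).
weights = [1.0, 1.0, 0.5, 0.5, 0.25, 0.25]
ratings = [2, 2, 4, 4, 4, 4]        # weak content, strong delivery

equal = rubric_percent(ratings, [1] * len(ratings))
weighted = rubric_percent(ratings, weights)
print(round(equal, 1), round(weighted, 1))   # 83.3 71.4
```

Under the course cut points, the same presentation earns a B with equal weights but a C once the harder items carry more weight, which mirrors the concern that equal weighting can let a presentation pass while falling short of the objectives. The extra arithmetic per item is also the feasibility cost the text describes.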

Table 2. Rubric Item Weightings Suggested in the 2008-2009 Data Many-Facet Rasch Measurement Analysis

Regardless of which approach is used, alignment of the evaluation rubric with the course objectives is imperative. Objectivity has been described as a general striving for value-free measurement (ie, free of the evaluator's interests, opinions, preferences, and sentiments). 27 This is a laudable goal pursued through educational research. However, strategies to reduce measurement error, termed objectification, may not necessarily lead to increased objectivity. 27 The current investigation suggested that a rubric could become too explicit if all the possible areas of an oral presentation that could be assessed (ie, objectification) were included. This appeared to dilute the effect of important items and reduce validity. A holistic rubric that is more straightforward and quicker to score may be less likely to lose validity (ie, to “lose the forest for the trees”), though operationalizing a revised rubric would need further investigation. Similarly, weighting items in an analytic rubric based on their importance and difficulty for students may alleviate this issue; however, adding up individually weighted items might prove arduous. While the rubric in Figure 1, which has evolved over the years, is the subject of ongoing revisions, it appears to be a reliable rubric on which to build.

The major limitation of this study is its observational method. Although the 2 cohorts were from a single institution, the investigators did use a completely separate class of PharmD students to verify initial instrument revisions. Optimizing the rubric's rating scale involved collapsing data from a few evaluators, who had expanded the 4-category rating scale to 7 categories, back into 4 independent categories without middle ratings. No actual grading adjustments were made for students in the 2008-2009 presentation course as a result of the study findings; however, adjustment using the MFRM has been suggested by Roberts and colleagues. 13 Since 2008-2009, the course coordinator has made further small revisions to the rubric based on feedback from evaluators, but these have not yet been reanalyzed with the MFRM.

The evaluation rubric used in this study for student performance evaluations showed high reliability, and the data analysis supported using 4 rating scale categories to optimize the rubric's reliability. While lenient and harsh faculty evaluators were found, variability in evaluator scoring affected grading in this course only minimally. Aside from reliability, issues of validity were raised using criterion-referenced grading. Future revisions to this evaluation rubric should reflect these criterion-referenced concerns. The rubric analyzed herein appears to be a suitable starting point for reliable evaluation of PharmD oral presentations, though it has limitations that could be addressed with further attention and revisions.

ACKNOWLEDGEMENT

Author contributions: MJP and EGS conceptualized the study, while MJP and GES designed it. MJP, EGS, and GES gave educational content foci for the rubric. As the study statistician, MJP analyzed and interpreted the study data. MJP reviewed the literature and drafted the manuscript. EGS and GES critically reviewed this manuscript and approved the final version for submission. MJP accepts overall responsibility for the accuracy of the data, its analysis, and this report.

Assessment Rubrics

A rubric is commonly defined as a tool that articulates the expectations for an assignment by listing criteria and, for each criterion, describing levels of quality (Andrade, 2000; Arter & Chappuis, 2007; Stiggins, 2001). Criteria are used to determine the level at which student work meets expectations. Markers of quality give students a clear idea of what must be done to demonstrate a certain level of mastery, understanding, or proficiency (e.g., "Exceeds Expectations" does xyz, "Meets Expectations" does only xy or yz, "Developing" does only x or y or z). Rubrics can be used for any assignment in a course, or for any way in which students are asked to demonstrate what they've learned. They can also be used to facilitate self- and peer-review of student work.

Rubrics aren't just for summative evaluation; they can be used as a teaching tool as well. When used as part of a formative assessment, they can help students understand the holistic nature and/or the specific analytic components of the learning expected, as well as the level of learning expected, and then make decisions about their current level of learning to inform revision and improvement (Reddy & Andrade, 2010).

Why use rubrics?

Rubrics help instructors:

Provide students with feedback that is clear, directed and focused on ways to improve learning.

Demystify assignment expectations so students can focus on the work instead of guessing "what the instructor wants."

Reduce time spent on grading and develop consistency in how you evaluate student learning across students and throughout a class.

Rubrics help students:

Focus their efforts on completing assignments in line with clearly set expectations.

Self- and peer-reflect on their learning, making informed changes to achieve the desired learning level.

Developing a Rubric

During the process of developing a rubric, instructors might:

Select an assignment for your course, ideally one you find time-intensive to grade or one that students report as having unclear expectations.

Decide what you want students to demonstrate about their learning through that assignment. These are your criteria.

Identify the markers of quality on which you feel comfortable evaluating students’ level of learning, often along with a numerical scale (e.g., "Accomplished," "Emerging," "Beginning" for a developmental approach).

Give students the rubric ahead of time. Advise them to use it in guiding their completion of the assignment.
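For instructors who keep rubrics in a spreadsheet or a script, the steps above map onto a simple data structure: criteria, each with a description for every marker of quality, plus a numerical scale. The sketch below is illustrative only. The criterion names, descriptions, and point values are hypothetical, and the level labels borrow the developmental example above.

```python
from dataclasses import dataclass

# Hypothetical numerical scale for the developmental markers of quality.
LEVEL_POINTS = {"Beginning": 1, "Emerging": 2, "Accomplished": 3}


@dataclass
class Criterion:
    name: str
    levels: dict  # marker of quality -> what work at that level does


def score_with_feedback(rubric, chosen_levels):
    """Total the points for the chosen level on each criterion and echo its description."""
    total, feedback = 0, []
    for criterion in rubric:
        level = chosen_levels[criterion.name]
        total += LEVEL_POINTS[level]
        feedback.append(f"{criterion.name}: {level} ({criterion.levels[level]})")
    return total, feedback


rubric = [
    Criterion("Thesis", {
        "Accomplished": "states a clear, arguable claim",
        "Emerging": "states a claim that is vague or not arguable",
        "Beginning": "no identifiable claim",
    }),
    Criterion("Evidence", {
        "Accomplished": "supports every point with relevant sources",
        "Emerging": "supports some points",
        "Beginning": "offers little or no support",
    }),
]

total, feedback = score_with_feedback(rubric, {"Thesis": "Accomplished", "Evidence": "Emerging"})
print(total)              # 5
print(*feedback, sep="\n")
```

Returning the level description alongside the points keeps the feedback tied to the criteria students saw in advance, rather than to a bare number.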

It can be overwhelming to create a rubric for every assignment in a class at once, so start by creating one rubric for one assignment. See how it goes and develop more from there! Also, do not reinvent the wheel. Rubric templates and examples exist all over the Internet, or consider asking colleagues if they have developed rubrics for similar assignments.

Sample Rubrics

Examples of holistic and analytic rubrics: see Tables 2 & 3 in “Rubrics: Tools for Making Learning Goals and Evaluation Criteria Explicit for Both Teachers and Learners” (Allen & Tanner, 2006)

Examples across assessment types: see “Creating and Using Rubrics,” Carnegie Mellon Eberly Center for Teaching Excellence & Educational Innovation

“VALUE Rubrics”: see the Association of American Colleges and Universities set of free, downloadable rubrics, with foci including creative thinking, problem solving, and information literacy.

Andrade, H. (2000). Using rubrics to promote thinking and learning. Educational Leadership, 57(5), 13–18.

Arter, J., & Chappuis, J. (2007). Creating and recognizing quality rubrics. Upper Saddle River, NJ: Pearson/Merrill Prentice Hall.

Reddy, Y., & Andrade, H. (2010). A review of rubric use in higher education. Assessment & Evaluation in Higher Education, 35(4), 435–448.

Stiggins, R. J. (2001). Student-involved classroom assessment (3rd ed.). Upper Saddle River, NJ: Prentice-Hall.

Rubric formats for the formative assessment of oral presentation skills acquisition in secondary education

- Development Article

- Open access

- Published: 20 July 2021

- Volume 69, pages 2663–2682 (2021)

- Rob J. Nadolski (ORCID: orcid.org/0000-0002-6585-0888)

- Hans G. K. Hummel

- Ellen Rusman

- Kevin Ackermans

Acquiring complex oral presentation skills is cognitively demanding for students and requires intensive teacher guidance. The aim of this study was twofold: (a) to identify and apply design guidelines in developing an effective formative assessment method for oral presentation skills during classroom practice, and (b) to develop and compare two analytic rubric formats as part of that assessment method. Participants were first-year secondary school students in the Netherlands (n = 158) who acquired oral presentation skills with the support of either a formative assessment method with analytic rubrics offered through a dedicated online tool (experimental groups), or a method using more conventional (rating scale) rubrics (control group). One experimental group was provided with text-based rubrics and the other with video-enhanced rubrics. No prior research is known about analytic video-enhanced rubrics, but, based on research on complex skill development and multimedia learning, we expected this format to best capture the (non-verbal aspects of) oral presentation performance. Significant positive differences in oral presentation performance were found between the experimental groups and the control group. However, no significant differences were found between the two experimental groups. This study shows that a well-designed formative assessment method using analytic rubric formats outperforms formative assessment using more conventional rubric formats. It also shows that the higher costs of developing video-enhanced analytic rubrics cannot be justified by significantly greater performance gains. Future studies should address the generalizability of such formative assessment methods to other contexts and to complex skills other than oral presentation, and should lead to a more profound understanding of video-enhanced rubrics.

Introduction

Both practitioners and scholars agree that students should be able to present orally (e.g., Morreale & Pearson, 2008; Smith & Sodano, 2011). Oral presentation involves the development and delivery of messages to the public with attention to vocal variety, articulation, and non-verbal signals, and with the aim to inform, self-express, relate to, and persuade listeners (Baccarini & Bonfanti, 2015; De Grez et al., 2009a; Quianthy, 1990). The current study is restricted to informative presentations (as opposed to persuasive presentations), as these are most common in secondary education. Oral presentation skills are complex generic skills of increasing importance for both society and education (Voogt & Roblin, 2012). However, secondary education seems to lack instructional design guidelines for supporting oral presentation skills acquisition. Many secondary schools in the Netherlands are struggling with how to teach and assess students’ oral presentation skills, lack clear performance criteria for oral presentations, and fall short in offering adequate formative assessment methods that support the effective acquisition of oral presentation skills (Sluijsmans et al., 2013).

Many researchers agree that the acquisition and assessment of presentation skills should depart from a socio-cognitive perspective (Bandura, 1986) with emphasis on observation, practice, and feedback. Students practice new presentation skills by observing other presentations as modeling examples, then practice their own presentation, after which they address the feedback by adjusting their presentations towards the required levels. Evidently, delivering effective oral presentations requires much preparation, rehearsal, and practice, interspersed with good feedback, preferably from oral presentation experts. However, large class sizes in secondary schools in the Netherlands offer only limited opportunities for teacher-student interaction, and even fewer practice opportunities. Based on research on complex skill development and multimedia learning, it can be expected that video-enhanced analytic rubric formats best capture and guide oral presentation performance, since much non-verbal behavior cannot be captured in text (Van Gog et al., 2014; Van Merriënboer & Kirschner, 2013).

Formative assessment of complex skills

To support complex skills acquisition under limited teacher guidance, we need more effective formative assessment methods (Boud & Molloy, 2013) based on proven instructional design guidelines. During skills acquisition, students perceive specific feedback as more adequate than non-specific feedback (Shute, 2008). Adequate feedback should inform students about (i) their task performance, (ii) their progress towards intended learning goals, and (iii) what they should do to progress further towards those goals (Hattie & Timperley, 2007; Narciss, 2008). Students receiving specific feedback on criteria and performance levels become equipped to improve their oral presentation skills (De Grez et al., 2009a; Ritchie, 2016). Analytic rubrics are therefore promising formats for providing specific feedback on oral presentations, because they can demonstrate the relations between subskills and explain the open-endedness of ideal presentations (through textual descriptions and their graphical design).

Ritchie (2016) showed that adding structure and self-assessment to peer- and teacher-assessments resulted in better oral presentation performance. Students were required to use analytic rubrics for self-assessment during their (project-based) classroom education. In this way, they had ample opportunity to observe and reflect on (good) oral presentation attributes, which was shown to foster acquisition of their oral presentation skills.

Analytic rubrics incorporate performance criteria to inform teachers and students when preparing oral presentations. Such rubrics support mental model formation and enable adequate feedback provision by teachers, peers, and self (Brookhart & Chen, 2015; Jonsson & Svingby, 2007; Panadero & Jonsson, 2013). This research is inconclusive about which formats and delivery media are most effective, but most studies dealt with analytic text-based rubrics delivered on paper. However, digital video-enhanced analytic rubrics are expected to be more effective for acquiring oral presentation skills, since many behavioral aspects refer to non-verbal actions and processes that can only be captured on video (e.g., body posture or use of voice during a presentation).

This study is situated within the Viewbrics project, where video-modelling examples are integrated with analytic text-based rubrics (Ackermans et al., 2019a). Video-modelling examples contain question prompts that illustrate behavior associated with (sub)skill performance levels in context, and are presented by young actors the target group can identify with. The question prompts require students to link behavior to performance levels and build a coherent picture of the (sub)skills and levels. To the best of the authors’ knowledge, no previous studies exist on such video-enhanced analytic rubrics. The Viewbrics tool has been incrementally developed and validated with teachers and students to structure the formative assessment method in classroom settings (Rusman et al., 2019).

The purpose of our study is twofold. On the one hand, it investigates whether the application of evidence-based design guidelines results in a more effective formative assessment method in the classroom. On the other hand, it investigates (within that method) whether video-enhanced analytic rubrics are more effective than text-based analytic rubrics.

Research questions

The twofold purpose of this study is captured in two research questions: (1) To what extent do analytic rubrics within formative assessment lead to better oral presentation performance? (the design-based part of this study); and (2) To what extent do video-enhanced analytic rubrics lead to better oral presentation performance (growth) than text-based analytic rubrics? (the experimental part of this study). We hypothesize that all students will improve their oral presentation performance over time, but that students in the experimental groups (receiving analytic rubrics designed according to proven design guidelines) will outperform a control group (receiving conventional rubrics) (Hypothesis 1). Furthermore, we expect the experimental group using video-enhanced rubrics to achieve more performance growth than the experimental group using text-based rubrics (Hypothesis 2).

After this introduction, the second section describes previous research on design guidelines that were applied to develop the analytic rubrics in the present study. The actual design, development, and validation of these rubrics are described in the “Development of analytic rubrics tool” section. The “Method” section describes the experimental method of this study, and the “Results” section reports its results. Finally, the concluding “Conclusions and discussion” section discusses the main findings and limitations of the study and provides suggestions for future research.

Previous research and design guidelines for formative assessment with analytic rubrics

Analytic rubrics are inextricably linked with assessment, either summative (for final grading of learning products) or formative (for scaffolding learning processes). They provide textual descriptions of skills’ mastery levels, with performance indicators that describe concrete behavior for all constituent subskills at each mastery level (Allen & Tanner, 2006; Reddy, 2011; Sluijsmans et al., 2013) (see Figs. 1 and 2 in the “Development of analytic rubrics tool” section for an example). Such performance indicators specify aspects of variation in the complexity of a (sub)skill (e.g., presenting for a small, homogeneous group as compared to presenting for a large, heterogeneous group) and related mastery levels (Van Merriënboer & Kirschner, 2013). Analytic rubrics explicate criteria and expectations, and can be used by teachers, by students themselves, or by their peers to check students’ progress, monitor learning, and diagnose learning problems (Rusman & Dirkx, 2017).

Subskills for oral presentation assessment

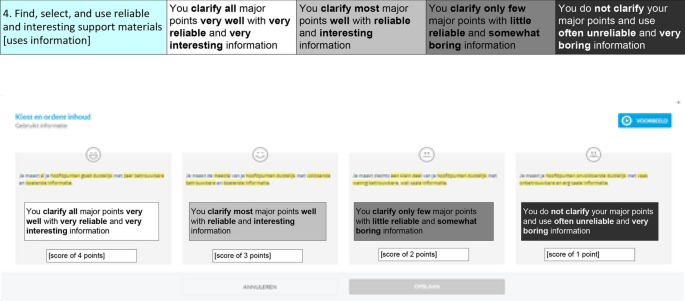

Specification of performance levels for criterium 4

Several motives for deploying analytic rubrics in education can be distinguished. A review study by Panadero and Jonsson (2013) identified the following motives: increasing transparency, reducing anxiety, aiding the feedback process, improving student self-efficacy, and supporting student self-regulation. Analytic rubrics also improve reliability among teachers when rating their students (Jonsson & Svingby, 2007). Evidence has shown that analytic rubrics can enhance student performance and learning when they are used for formative assessment purposes in combination with metacognitive activities, like reflection and goal-setting, but research shows mixed results about their learning effectiveness (Panadero & Jonsson, 2013).

It remains unclear exactly what is needed to make their feedback effective (Reddy & Andrade, 2010; Reitmeier & Vrchota, 2009). Apparently, transparency of assessment criteria and learning goals (i.e., making expectations and criteria explicit) is not enough to establish effectiveness (Wöllenschläger et al., 2016). Several researchers have stressed the importance of how and which feedback to provide with rubrics (Bower et al., 2011; De Grez et al., 2009b; Kerby & Romine, 2009). We continue this section by reviewing the design guidelines for analytic rubrics we encountered in the literature, and then specifically address what the literature says about the added value of video-enhanced rubrics.

Design guidelines for analytic rubrics

Effective formative assessment methods for oral presentation and analytic rubrics should be based on proven instructional design guidelines (Van Ginkel et al., 2015). Table 1 presents an overview of the seventeen guidelines on analytic rubrics we encountered in the literature. Guidelines 1–4 inform us how to use rubrics for formative assessment; Guidelines 5–17 inform us how to use rubrics for instruction, with Guidelines 5–9 at a rather generic, meso level and Guidelines 10–17 at a more specific, micro level. We now briefly describe them in relation to oral presentation skills.

Guideline 1: use analytic rubrics instead of rating scale rubrics if rubrics are meant for learning

Conventional rating-scale rubrics are easy to generate and use, as they contain a score for each performance criterion (e.g., on a 5-point Likert scale). However, since the individual performance levels are not clearly described or operationalized, rating can suffer from rater subjectivity, and rating scales do not provide students with unambiguous feedback (Suskie, 2009). Analytic rubrics can address these shortcomings because they contain brief textual performance descriptions for all subskills, criteria, and performance levels of complex skills such as presenting, but they are harder to develop and score (Bargainnier, 2004; Brookhart, 2004; Schreiber et al., 2012).

Guideline 2: use self-assessment via rubrics for formative purposes

Analytic rubrics can encourage self-assessment and self-reflection (Falchikov & Boud, 1989; Reitmeier & Vrchota, 2009), which appears essential when practicing presentations and reflecting on other presentations (Van Ginkel et al., 2017). Ritchie (2016) demonstrated the usefulness of self-assessment for oral presentation, but no such benefit was found in a study by De Grez et al. (2009b) that used the same rubric.

Guideline 3: use peer-assessment via rubrics for formative purposes

Peer feedback is more readily available than teacher feedback and can benefit students’ confidence and learning (Cho & Cho, 2011; Murillo-Zamorano & Montanero, 2018), including for oral presentation (Topping, 2009). Students value peer assessment positively if the circumstances guarantee serious feedback (De Grez et al., 2010; Lim et al., 2013). It can be assumed that using analytic rubrics positively influences the quality of peer assessment.

Guideline 4: provide rubrics for usage by self, peers, and teachers as students appreciate rubrics

Students appreciate analytic rubrics because they support them in their learning and planning, in producing higher-quality work, in focusing their efforts, and in reducing anxiety about assignments (Reddy & Andrade, 2010), all of which matter for oral presentation. While students perceive peer grading positively, the inclusion of teacher grades is still needed (Mulder et al., 2014) and is most valued by students (Ritchie, 2016).

Guidelines 5–9

Heitink et al. (2016) carried out a review study identifying five prerequisites for effective classroom instruction at the meso level when using analytic rubrics (for oral presentations): train teachers and students in using these rubrics, decide on a policy for their use in instruction, take school and classroom contexts into account, and follow a constructivist learning approach. The next section describes how these guidelines were applied to the design of this study’s classroom instruction.

Guidelines 10–17

The review study by Van Ginkel et al. (2015) presents a comprehensive overview of effective factors for oral presentation instruction in higher education at the micro level. Although our research context is secondary education, the findings from that study seem highly applicable, as they are rooted in well-researched and well-documented instructional design approaches. Their guidelines pertain to (a) instruction, (b) learning, and (c) assessment in the learning environment (Biggs, 2003). The next section describes how these guidelines were applied to the design of this study’s online Viewbrics tool.

Video-enhanced rubrics

Early analytic rubrics for oral presentations were all text-based. This study assumes that such analytic rubrics may fall short when used for learning to give oral presentations, since much of the required performance involves motor activities, time-consecutive operations, and processes that can hardly be captured in text (e.g., body posture or use of voice during a presentation). Text-based rubrics also have a limited capacity to convey contextualized and more ‘tacit’ behavioral aspects (O’Donnevan et al., 2004), since ‘tacit knowledge’ (or ‘knowing how’) is interwoven with practical activities, operations, and behavior in the physical world (Westera, 2011). Finally, text leaves more room than video for personal interpretation of performance indicators, which negatively influences mental model formation and feedback consistency (Lew et al., 2010).

We can therefore expect video-enhanced rubrics to overcome such restrictions, as they can integrate modelling examples with analytic text-based explanations. The video-modelling examples and their embedded question prompts can illustrate the behavior associated with each performance level in context, and convey information in different modalities (moving images, sound). Video-enhanced rubrics foster learning from active observation of video-modelling examples (De Grez et al., 2014; Rohbanfard & Proteau, 2013), especially when combined with textual performance indicators. Looking at the effects of video-modelling examples, Van Gog et al. (2014) found increased task performance when the video-modelling example of an expert was also shown. De Grez et al. (2014) found comparable results for learning to give oral presentations. Teachers in training who assessed their own performance with video-modelling examples appeared to overrate their performance less when such examples were available (Baecher et al., 2013). Research on mastering complex skills indicates that both modelling examples (in a variety of application contexts) and frequent feedback positively influence the learning process and skill acquisition (Van Merriënboer & Kirschner, 2013). Video-modelling examples not only capture the ‘know-how’ (procedural knowledge) but also elicit the ‘know-why’ (strategic/decisive knowledge).

Development of analytic rubrics tool

This section describes how design guidelines from previous research were applied in developing the rubrics in the Viewbrics tool for our study, and then presents the subskills and performance levels defined for oral presentation skills.

Application of design guidelines

The previous section already mentioned that analytic rubrics should be restricted to formative assessment (Guidelines 2 and 3), and that there are good reasons to assume that a combination of teacher-, peer-, and self-assessment will improve oral presentations (Guidelines 1 and 4). Teachers and students were trained in rubric use (Guidelines 5 and 7), and students were motivated to use rubrics (Guideline 7). Because the participating schools were already using analytic rubrics, a positive initial attitude could be assumed. Although the policy on using analytic rubrics might not have been generally known on the work floor, the teachers participating in our study were knowledgeable about it (Guideline 6). We carefully considered the school context, as a representative set of secondary schools in the Netherlands was part of the Viewbrics team (Guideline 8). The formative assessment method was embedded within project-based education (Guideline 9).

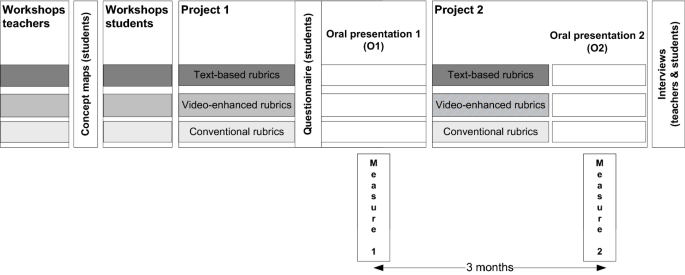

Within this study, at the micro level of design, the learning objectives for the first presentation were clearly specified by lower performance levels, whereas advice on students’ second presentation focused on improving specific subskills that had been performed with insufficient quality during the first presentation (Guideline 10). Students carried out two consecutive projects of increasing complexity (Project 1, Project 2) with authentic tasks, including the oral presentations (Guideline 11). Students were given opportunities to observe peer models to increase their self-efficacy beliefs and oral presentation competence; in our study, only students who received video-enhanced rubrics could observe videos with peer models before their first presentation (Guideline 12). Students were given ample opportunities to rehearse their oral presentations, to increase their presentation competence and decrease their communication apprehension. Within our study, feedback could be provided on only two oral presentations, but students could rehearse as often as they wanted outside the classroom (Guideline 13). We ensured that the feedback in the rubrics was of high quality, i.e., explicit, contextual, adequately timed, and of suitable intensity for improving students’ oral presentation competence. Both experimental groups in the study used digital analytic rubrics within the Viewbrics tool (for teacher, peer, and self-feedback). The control group received feedback via a more conventional rubric (rating scale) and therefore could not use the formative assessment and reflection functions (Guideline 14). The setup of the study implied that peers played a major role in formative assessment in both experimental groups, because they formatively assessed each oral presentation using the Viewbrics tool (Guideline 15). The control group received feedback from their teacher. Both experimental groups used the Viewbrics tool to facilitate self-assessment (Guideline 16); the control group did not receive analytic progress data to inform their self-assessment. Specific goal-setting within self-assessment has been shown to stimulate oral presentation performance, improve self-efficacy, and reduce presentation anxiety (De Grez et al., 2009a; Luchetti et al., 2003), so the Viewbrics tool was developed to support both specific goal-setting and self-reflection (Guideline 17).

Subskills and levels for oral presentation

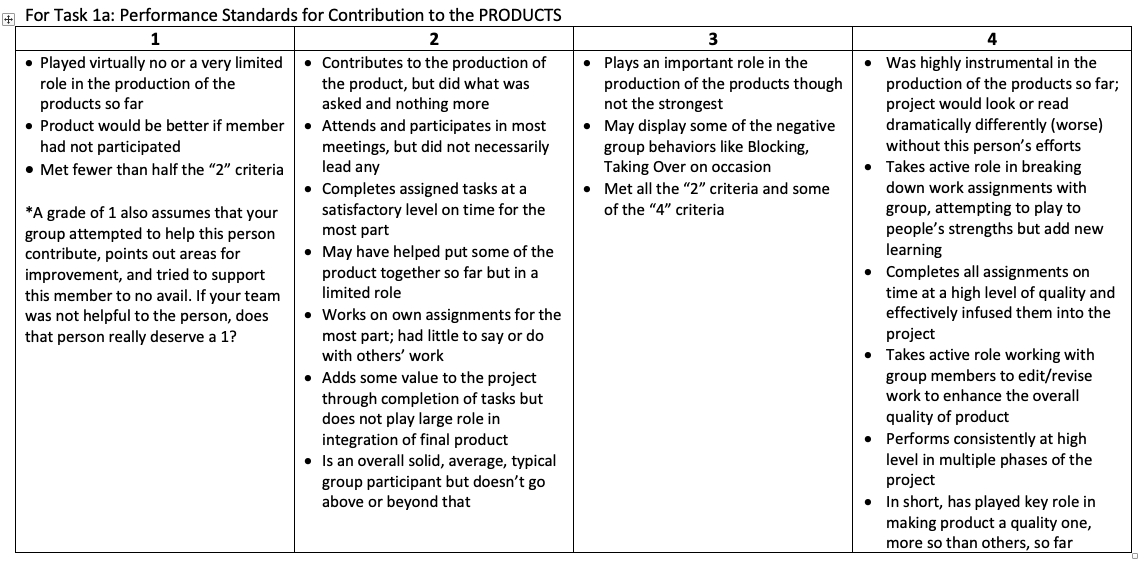

Reddy and Andrade (2010) stress that rubrics should be tailored to specific learning objectives and target groups. Oral presentations in secondary education (our study context) involve generating and delivering informative messages with attention to vocal variety, articulation, and non-verbal signals. In this context, message composition and message delivery are considered important (Quianthy, 1990). Strong arguments (‘logos’) have to be presented in a credible (‘ethos’) and exciting (‘pathos’) way (Baccarini & Bonfanti, 2015). Public speaking experts agree that there is no single right way to give an oral presentation (Schneider et al., 2017). There is also agreement that all presenters need a great deal of practice, commitment, and creativity. Effective presenters do not rigidly and obsessively apply communication rules and techniques, as their audience may then perceive the performance as too technical or artificial. Nevertheless, all presentations should demonstrate sufficient mastery of elementary (sub)skills in an integrated manner. Such skills should therefore also be practiced as a whole (including knowledge and attitudes), which makes attaining a skill performance level more than the sum of its constituent (sub)skills (Van Merriënboer & Kirschner, 2013). A validated instrument for assessing oral presentation performance is needed to help teachers assess and support students while they practice.

When we started developing rubrics with the Viewbrics tool (late 2016), there were no studies or validated measuring instruments for oral presentation performance in secondary education, although several schools used locally developed, non-validated assessment forms (i.e., conventional rubrics). In higher education, Schreiber et al. (2012) had developed an analytic rubric for assessing public speaking skills, aimed at faculty members and students across disciplines. They identified eleven (sub)skills of public speaking, which could be subsumed under three factors (‘topic adaptation’, ‘speech presentation’, and ‘nonverbal delivery’, similar to logos-ethos-pathos).

Such previous work is valuable, but it still had to be adapted and elaborated for the context of the current study. This study elaborated and evaluated eleven subskills that can be identified within the natural flow of an oral presentation and its distinctive features (see Fig. 1 for an overview of the subskills, and Fig. 2 for a specification of the performance levels for a specific subskill).

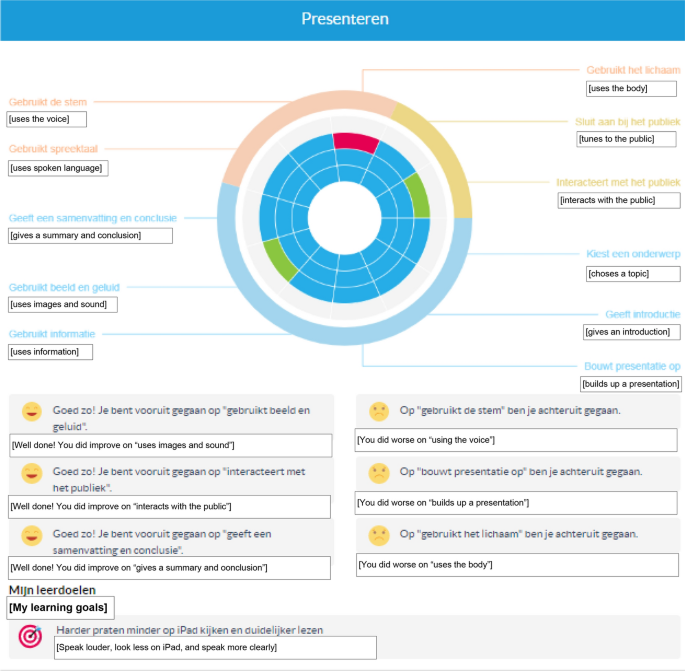

Names in brackets are the subskill names as they appear in the dashboard of the Viewbrics tool (Fig. 3).

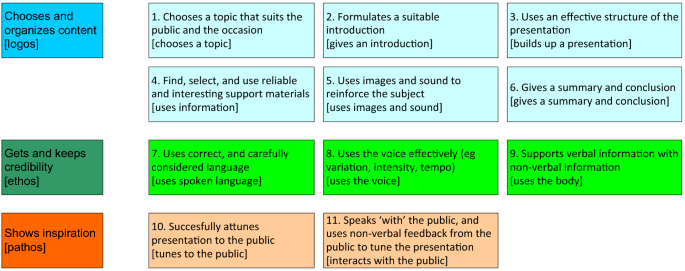

Visualization of oral presentation progress and feedback in the Viewbrics tool

The upper part of Fig. 2 shows the scoring levels for first-year secondary school students for criterion 4 of the oral presentation assessment (four values, from more expert (4 points) to more novice (1 point), reading from right to left), an example of a conventional rating-scale rubric. The lower part shows the corresponding screenshot from the Viewbrics tool, an example of a text-based analytic rubric. The video-enhanced analytic rubric for this subskill shows a peer modelling the required behavior at expert level, with question prompts on selecting reliable and interesting materials. The performance levels were inspired by previous research (Ritchie, 2016; Schneider et al., 2017; Schreiber et al., 2012), but were also based on current secondary school practices in the Netherlands, and were developed and tested with secondary school teachers and their students.

All eleven subskills are scored on the same four-point Likert scale and carry equal weight in the total average score. Two pilot studies tested the usability, validity, and reliability of the assessment tool (Rusman et al., 2019). Based on this input, the final rubrics were improved, embedded in a prototype of the online Viewbrics tool, and used for this study. The formative assessment method consisted of six steps: (1) study the rubric; (2) practice and conduct an oral presentation; (3) conduct a self-assessment; (4) consult feedback from the teacher and peers; (5) reflect on the feedback; and (6) select personal learning goal(s) for the next oral presentation.
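Taking the equal weighting of the subskills at face value, the total score is simply the unweighted mean of the eleven subskill scores. The following is a minimal formulation, not taken from the tool itself; the symbols $s_k$ (the score on subskill $k$, an integer from 1 = novice to 4 = expert) and $\bar{S}$ (the total average score) are introduced here for illustration:

$$
\bar{S} = \frac{1}{11} \sum_{k=1}^{11} s_k, \qquad s_k \in \{1, 2, 3, 4\}, \qquad 1 \le \bar{S} \le 4
$$

For example, a student scoring 3 on eight subskills and 2 on the remaining three would obtain $\bar{S} = (8 \cdot 3 + 3 \cdot 2)/11 = 30/11 \approx 2.73$.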

After the second project (Project 2), which used the same setup and assessment method as the first project, students in the experimental groups could also see their visualized progress in the ‘dashboard’ of the Viewbrics tool (see Fig. 3, with English translations provided in brackets) by comparing their performance on the two project presentations during the second reflection assignment. The dashboard shows progress in the inner circles: green indicates improvement on a subskill, blue indicates no change, and red indicates decline. Feedback is provided by emoticons with text. Students’ personal learning goals after reflection are shown under ‘Mijn leerdoelen’ [My learning goals].
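The dashboard color coding amounts to a per-subskill comparison of the two presentation scores. The sketch below illustrates that rule under stated assumptions: each subskill receives a single score from 1 to 4 per presentation, and any increase counts as improvement and any decrease as decline. The function name `progress_colors` and the subskill labels in the usage example are hypothetical and do not reflect the actual Viewbrics implementation.

```python
from typing import Dict


def progress_colors(first: Dict[str, int], second: Dict[str, int]) -> Dict[str, str]:
    """Assign a dashboard color to each subskill by comparing two presentations.

    Hypothetical sketch: assumes both presentations are scored on the same
    subskills, each on a 1 (novice) to 4 (expert) scale.
    """
    if first.keys() != second.keys():
        raise ValueError("both presentations must be scored on the same subskills")

    colors: Dict[str, str] = {}
    for subskill, before in first.items():
        after = second[subskill]
        # Reject scores outside the assumed four-point scale
        for score in (before, after):
            if not 1 <= score <= 4:
                raise ValueError(f"score {score} for {subskill!r} is outside 1-4")
        if after > before:
            colors[subskill] = "green"  # improvement
        elif after == before:
            colors[subskill] = "blue"   # constant
        else:
            colors[subskill] = "red"    # decline
    return colors


# Illustrative (hypothetical) scores for three subskills
first_presentation = {"use of voice": 2, "body posture": 3, "structure": 4}
second_presentation = {"use of voice": 3, "body posture": 3, "structure": 2}
print(progress_colors(first_presentation, second_presentation))
# prints {'use of voice': 'green', 'body posture': 'blue', 'structure': 'red'}
```

Comparing raw scores keeps the rule transparent and mirrors the three categories named in the text; the emoticon feedback shown alongside the colors is not modelled here.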