What is Problem Solving Algorithm?, Steps, Representation

- Post author: Disha Singh

- Post published: 6 June 2021

- Post category: Computer Science


Table of Contents

- 1 What is Problem Solving Algorithm?

- 2 Definition of Problem Solving Algorithm

- 3 Steps for Problem Solving

- 3.1 Analysing the Problem

- 3.2 Developing an Algorithm

- 3.3 Coding

- 3.4 Testing and Debugging

- 4 Representation of Algorithms

- 4.1 Flowchart

- 4.2 Pseudo code

What is Problem Solving Algorithm?

Computers are used for solving various day-to-day problems and thus problem solving is an essential skill that a computer science student should know. It is pertinent to mention that computers themselves cannot solve a problem. Precise step-by-step instructions should be given by us to solve the problem.

Thus, the success of a computer in solving a problem depends on how correctly and precisely we define the problem, design a solution (algorithm) and implement the solution (program) using a programming language.

Thus, problem solving is the process of identifying a problem, developing an algorithm for the identified problem and finally implementing the algorithm to develop a computer program.

Definition of Problem Solving Algorithm

Some simple definitions of a problem solving algorithm are given below:

Steps for Problem Solving

When problems are straightforward and easy, we can easily find the solution. But a complex problem requires a methodical approach to find the right solution. In other words, we have to apply problem solving techniques.

Problem solving begins with the precise identification of the problem and ends with a complete working solution in terms of a program or software. Solving a problem using a computer involves a few key steps.

For example, suppose that while driving, a vehicle starts making a strange noise. We might not know how to solve the problem right away. First, we need to identify where the noise is coming from. If we cannot solve the problem ourselves, we need to take the vehicle to a mechanic.

The mechanic will analyse the problem to identify the source of the noise, make a plan about the work to be done and finally repair the vehicle in order to remove the noise. From the example, it is clear that finding the solution to a problem might consist of multiple steps.

Following are the steps for problem solving:

- Analysing the problem

- Developing an algorithm

- Coding

- Testing and debugging

Analysing the Problem

It is important to clearly understand a problem before we begin to find the solution for it. If we are not clear as to what is to be solved, we may end up developing a program which may not solve our purpose.

Thus, we need to read and analyse the problem statement carefully in order to list the principal components of the problem and decide the core functionalities that our solution should have. By analysing a problem, we would be able to figure out what are the inputs that our program should accept and the outputs that it should produce.

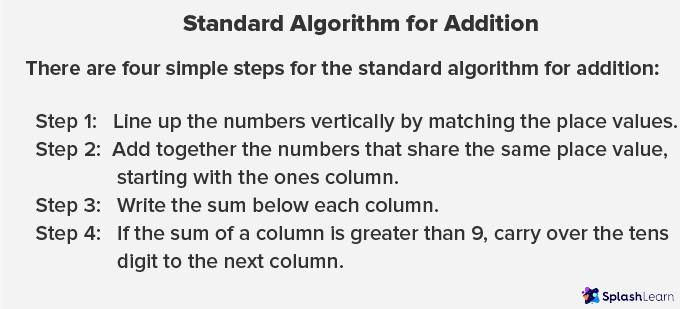

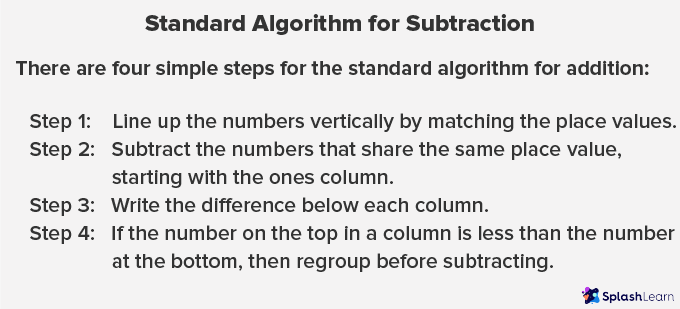

Developing an Algorithm

It is essential to devise a solution before writing program code for a given problem. The solution is represented in natural language and is called an algorithm. We can imagine an algorithm as a very well-written recipe for a dish, with clearly defined steps that, if followed, will end in the dish being prepared.

We start with a tentative solution plan and keep on refining the algorithm until the algorithm is able to capture all the aspects of the desired solution. For a given problem, more than one algorithm is possible and we have to select the most suitable solution.

Coding

After finalising the algorithm, we need to convert it into a format that the computer can understand in order to generate the desired solution. Different high-level programming languages can be used for writing a program. It is equally important to record the details of the coding procedures followed and to document the solution. This is helpful when revisiting the programs at a later stage.

Testing and Debugging

The program created should be tested on various parameters. The program should meet the requirements of the user. It must respond within the expected time. It should generate correct output for all possible inputs. In the presence of syntactical errors, no output will be obtained. If the output generated is incorrect, the program should be checked for logical errors.

The software industry follows standardised testing methods like unit or component testing, integration testing, system testing, and acceptance testing while developing complex applications. This is to ensure that the software meets all the business and technical requirements and works as expected.

The errors or defects found in the testing phases are debugged or rectified and the program is tested again. This continues until all the errors are removed from the program. Even after the software application has been developed, tested and delivered to the user, problems with its functioning can still come up and need to be resolved from time to time.
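The difference between a logical error and its fix can be seen in a small unit-level check. The following is only a sketch in Python; the function names `average_buggy`, `average` and `run_tests`, and the test cases themselves, are hypothetical and chosen purely for illustration.

```python
def average_buggy(numbers):
    # Logical error: divides by a fixed 2 instead of the number of values.
    # There is no syntax error, so the program runs, but the output is
    # wrong for any list whose length is not 2.
    return sum(numbers) / 2


def average(numbers):
    # Corrected version: divide by the actual count of values.
    if not numbers:
        raise ValueError("average of an empty list is undefined")
    return sum(numbers) / len(numbers)


def run_tests(func):
    """Return the test cases on which func produces a wrong answer."""
    cases = [([2, 4], 3.0), ([1, 2, 3], 2.0), ([10], 10.0)]
    return [(data, expected) for data, expected in cases
            if func(data) != expected]


print(run_tests(average_buggy))  # two failing cases expose the logical error
print(run_tests(average))        # prints [] once the defect is fixed
```

Running the same test cases again after the fix mirrors the test, debug and retest cycle described above.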

The maintenance of the solution, thus, involves fixing the problems faced by the user, answering the queries of the user and even serving the request for addition or modification of features.

Representation of Algorithms

Using their algorithmic thinking skills, the software designers or programmers analyse the problem and identify the logical steps that need to be followed to reach a solution. Once the steps are identified, the need is to write down these steps along with the required input and desired output.

There are two common methods of representing an algorithm: flowchart and pseudocode. Either method can be used to represent an algorithm, keeping the following in mind:

- It showcases the logic of the problem solution, excluding any implementational details.

- It clearly reveals the flow of control during execution of the program.

Flowchart

A flowchart is a visual representation of an algorithm. It is a diagram made up of boxes, diamonds and other shapes, connected by arrows. Each shape represents a step of the solution process, and the arrows represent the order of, or links among, the steps.

In other words, a flowchart is a step-by-step diagrammatic representation of the logic paths used to solve a given problem.

Flowcharts are pictorial representations of the methods to be used to solve a given problem, and they help a great deal in analysing the problem and planning its solution in a systematic and orderly manner. A flowchart, when translated into a proper computer language, results in a complete program.

Advantages of Flowcharts:

- The flowchart shows the logic of a problem in pictorial fashion, which facilitates easier checking of an algorithm.

- The flowchart is a good means of communication to other users. It is also a compact means of recording an algorithmic solution to a problem.

- The flowchart allows the problem solver to break the problem into parts. These parts can be connected to make a master chart.

- The flowchart is a permanent record of the solution which can be consulted at a later time.

Differences between Algorithm and Flowchart

Pseudo code

Pseudo code is neither an algorithm nor a program; it is an abstract form of a program. It consists of English-like statements that perform specific operations. It is defined for an algorithm and does not use any graphical representation.

In pseudo code, the program is represented in terms of words and phrases, but the syntax of a programming language is not strictly followed.
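As an illustration, the comments below show one possible pseudo code for finding the largest of three numbers, followed by a Python translation. This is a sketch only: the problem, the pseudo code keywords and the function name `largest_of_three` are assumptions made for the example, not part of any standard.

```python
# Pseudo code (English-like statements, no strict syntax):
#   INPUT a, b, c
#   SET largest TO a
#   IF b > largest THEN SET largest TO b
#   IF c > largest THEN SET largest TO c
#   PRINT largest

def largest_of_three(a, b, c):
    # Direct translation of the pseudo code above into Python.
    largest = a
    if b > largest:
        largest = b
    if c > largest:
        largest = c
    return largest


print(largest_of_three(7, 12, 9))  # prints 12
```

The pseudo code can be reviewed by someone who does not know Python, while the function below it has to obey the language's syntax exactly.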

Advantages of Pseudocode

- Before writing code in a high-level language, a pseudocode of a program helps in representing the basic functionality of the intended program.

- By writing the code first in a human-readable language, the programmer safeguards against leaving out any important step.

- For non-programmers, actual programs are difficult to read and understand, but pseudocode helps them review the steps to confirm that the proposed implementation is going to achieve the desired output.



Understanding Algorithms: The Key to Problem-Solving Mastery

The world of computer science is a fascinating realm, where intricate concepts and technologies continuously shape the way we interact with machines. Among the vast array of ideas and principles, few are as fundamental and essential as algorithms. These powerful tools serve as the building blocks of computation, enabling computers to solve problems, make decisions, and process vast amounts of data efficiently.

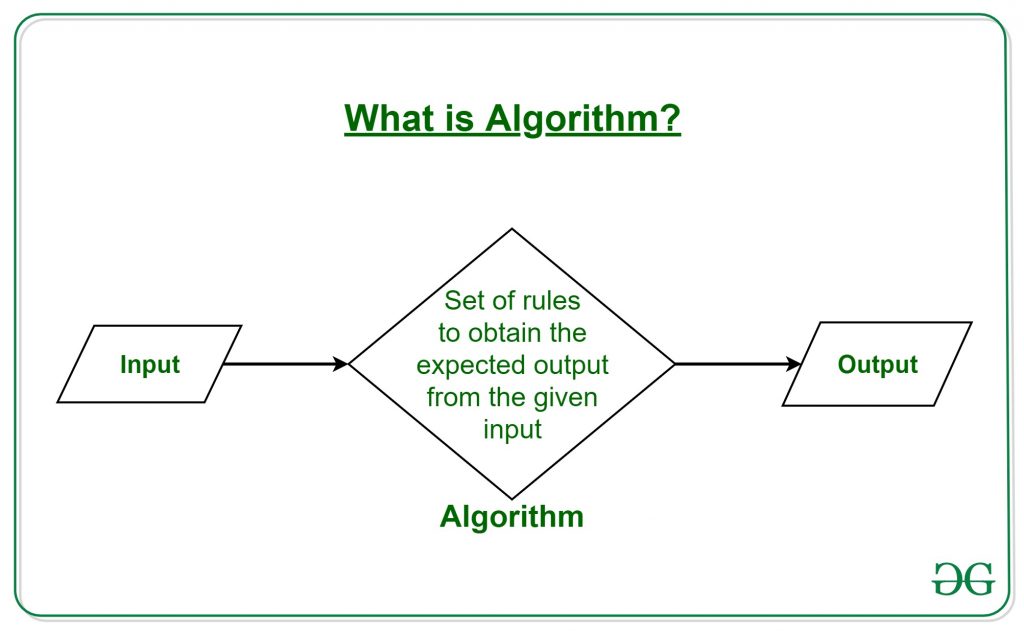

An algorithm can be thought of as a step-by-step procedure or a set of instructions designed to solve a specific problem or accomplish a particular task. It represents a systematic approach to finding solutions and provides a structured way to tackle complex computational challenges. Algorithms are at the heart of various applications, from simple calculations to sophisticated machine learning models and complex data analysis.

Understanding algorithms and their inner workings is crucial for anyone interested in computer science. They serve as the backbone of software development, powering the creation of innovative applications across numerous domains. By comprehending the concept of algorithms, aspiring computer science enthusiasts gain a powerful toolset to approach problem-solving and gain insight into the efficiency and performance of different computational methods.

In this article, we aim to provide a clear and accessible introduction to algorithms, focusing on their importance in problem-solving and exploring common types such as searching, sorting, and recursion. By delving into these topics, readers will gain a solid foundation in algorithmic thinking and discover the underlying principles that drive the functioning of modern computing systems. Whether you’re a beginner in the world of computer science or seeking to deepen your understanding, this article will equip you with the knowledge to navigate the fascinating world of algorithms.

What are Algorithms?

At its core, an algorithm is a systematic, step-by-step procedure or set of rules designed to solve a problem or perform a specific task. It provides clear instructions that, when followed meticulously, lead to the desired outcome.

Consider an algorithm to be akin to a recipe for your favorite dish. When you decide to cook, the recipe is your go-to guide. It lists out the ingredients you need, their exact quantities, and a detailed, step-by-step explanation of the process, from how to prepare the ingredients to how to mix them, and finally, the cooking process. It even provides an order for adding the ingredients and specific times for cooking to ensure the dish turns out perfect.

In the same vein, an algorithm, within the realm of computer science, provides an explicit series of instructions to accomplish a goal. This could be a simple goal like sorting a list of numbers in ascending order, a more complex task such as searching for a specific data point in a massive dataset, or even a highly complicated task like determining the shortest path between two points on a map (think Google Maps). No matter the complexity of the problem at hand, there’s always an algorithm working tirelessly behind the scenes to solve it.

Furthermore, algorithms aren’t limited to specific programming languages. They are universal and can be implemented in any language. This is why understanding the fundamental concept of algorithms can empower you to solve problems across various programming languages.

The Importance of Algorithms

Algorithms are indisputably the backbone of all computational operations. They’re a fundamental part of the digital world that we interact with daily. When you search for something on the web, an algorithm is working behind the scenes to sift through millions, possibly billions, of web pages to bring you the most relevant results. When you use a GPS to find the fastest route to a location, an algorithm is evaluating candidate paths, factoring in variables like traffic and road conditions, to provide you with the best route it can find.

Consider the world of social media, where algorithms curate personalized feeds based on our previous interactions, or in streaming platforms where they recommend shows and movies based on our viewing habits. Every click, every like, every search, and every interaction is processed by algorithms to serve you a seamless digital experience.

In the realm of computer science and beyond, everything revolves around problem-solving, and algorithms are our most reliable problem-solving tools. They provide a structured approach to problem-solving, breaking down complex problems into manageable steps and ensuring that every eventuality is accounted for.

Moreover, an algorithm’s efficiency is not just a matter of preference but a necessity. Given that computers have finite resources — time, memory, and computational power — the algorithms we use need to be optimized to make the best possible use of these resources. Efficient algorithms are the ones that can perform tasks more quickly, using less memory, and provide solutions to complex problems that might be infeasible with less efficient alternatives.

In the context of massive datasets (the likes of which are common in our data-driven world), the difference between a poorly designed algorithm and an efficient one could be the difference between a solution that takes years to compute and one that takes mere seconds. Therefore, understanding, designing, and implementing efficient algorithms is a critical skill for any computer scientist or software engineer.

Hence, as a computer science beginner, you are starting a journey where algorithms will be your best allies — universal keys capable of unlocking solutions to a myriad of problems, big or small.

Common Types of Algorithms: Searching and Sorting

Two of the most ubiquitous types of algorithms that beginners often encounter are searching and sorting algorithms.

Searching algorithms are designed to retrieve specific information from a data structure, like an array or a database. A simple example is the linear search, which works by checking each element in the array until it finds the one it’s looking for. Although easy to understand, this method isn’t efficient for large datasets, which is where more complex algorithms like binary search come in.

Binary search, on the other hand, is like looking up a word in the dictionary, and it relies on the data being sorted, just as dictionary entries are alphabetized. Instead of checking each word from beginning to end, you open the dictionary in the middle and see whether the word you’re looking for should be in the first half or the second half, thereby reducing the search space by half with each step.
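The two search strategies can be sketched in Python as follows. This is a minimal illustration, assuming a list of integers sorted in ascending order; the function names and the sample data are made up for the example.

```python
def linear_search(items, target):
    # Check each element in turn; works on sorted or unsorted data.
    for index, value in enumerate(items):
        if value == target:
            return index
    return -1  # target not found


def binary_search(sorted_items, target):
    # Assumes sorted_items is in ascending order.
    low, high = 0, len(sorted_items) - 1
    while low <= high:
        mid = (low + high) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            low = mid + 1   # target can only be in the upper half
        else:
            high = mid - 1  # target can only be in the lower half
    return -1  # target not found


data = [3, 8, 15, 23, 42, 57]
print(linear_search(data, 23))  # prints 3
print(binary_search(data, 23))  # prints 3
```

Both functions return the same index here, but binary search inspects far fewer elements as the list grows, because each comparison discards half of the remaining range.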

Sorting algorithms, meanwhile, are designed to arrange elements in a particular order. A simple sorting algorithm is bubble sort, which works by repeatedly swapping adjacent elements if they’re in the wrong order. Again, while straightforward, it’s not efficient for larger datasets. More advanced sorting algorithms, such as quicksort or mergesort, have been designed to sort large data collections more efficiently.
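A minimal Python sketch of bubble sort is shown below. The early exit when a pass makes no swaps is a common refinement rather than something the description above requires, and the function name is chosen for illustration.

```python
def bubble_sort(items):
    result = list(items)  # work on a copy so the input is left unchanged
    n = len(result)
    for end in range(n - 1, 0, -1):
        swapped = False
        for i in range(end):
            # Swap adjacent elements that are in the wrong order.
            if result[i] > result[i + 1]:
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
        if not swapped:
            break  # no swaps in this pass, so the list is already sorted
    return result


print(bubble_sort([5, 1, 4, 2, 8]))  # prints [1, 2, 4, 5, 8]
```

The nested loops are the reason bubble sort slows down quickly on larger inputs: the number of comparisons grows roughly with the square of the list length.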

Diving Deeper: Graph and Dynamic Programming Algorithms

Building upon our understanding of searching and sorting algorithms, let’s delve into two other families of algorithms often encountered in computer science: graph algorithms and dynamic programming algorithms.

A graph is a mathematical structure that models the relationship between pairs of objects. Graphs consist of vertices (or nodes) and edges (where each edge connects a pair of vertices). Graphs are commonly used to represent real-world systems such as social networks, web pages, biological networks, and more.
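One common way to store such a structure in code is an adjacency list, where each vertex maps to the vertices it shares an edge with. The sketch below uses a small, hypothetical friendship network and visits it with a breadth-first traversal, which is one possible graph algorithm chosen here purely for illustration.

```python
from collections import deque

# Hypothetical network: each key is a vertex, each list holds its neighbours.
graph = {
    "Ana": ["Ben", "Cara"],
    "Ben": ["Ana", "Dev"],
    "Cara": ["Ana", "Dev"],
    "Dev": ["Ben", "Cara", "Eli"],
    "Eli": ["Dev"],
}


def breadth_first_order(graph, start):
    # Visit vertices level by level, starting from `start`.
    visited = [start]
    queue = deque([start])
    while queue:
        vertex = queue.popleft()
        for neighbour in graph[vertex]:
            if neighbour not in visited:
                visited.append(neighbour)
                queue.append(neighbour)
    return visited


print(breadth_first_order(graph, "Ana"))
# prints ['Ana', 'Ben', 'Cara', 'Dev', 'Eli']
```

For large graphs, the `visited` list would usually be paired with a set so that membership checks stay fast; a plain list keeps this sketch short.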

Graph algorithms are designed to solve problems centered around these structures. Some common graph algorithms include:

Dynamic programming is a powerful method used in optimization problems, where the main problem is broken down into simpler, overlapping subproblems. The solutions to these subproblems are stored and reused to build up the solution to the main problem, saving computational effort.
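The idea of storing and reusing subproblem solutions can be sketched with Fibonacci numbers. Fibonacci is not itself an optimization problem, but it is a frequently used minimal illustration of overlapping subproblems; the function name and the dictionary-based cache are assumptions made for this example.

```python
def fibonacci(n, memo=None):
    # memo stores answers to subproblems that have already been solved.
    if memo is None:
        memo = {}
    if n < 2:
        return n  # base cases: fibonacci(0) = 0, fibonacci(1) = 1
    if n not in memo:
        # Each subproblem is computed once and then reused.
        memo[n] = fibonacci(n - 1, memo) + fibonacci(n - 2, memo)
    return memo[n]


print(fibonacci(30))  # prints 832040
```

Without the cache, the same subproblems would be recomputed again and again, and the running time would grow exponentially with `n`.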

Here are two common dynamic programming problems:

Understanding these algorithm families — searching, sorting, graph, and dynamic programming algorithms — not only equips you with powerful tools to solve a variety of complex problems but also serves as a springboard to dive deeper into the rich ocean of algorithms and computer science.

Recursion: A Powerful Technique

While searching and sorting represent specific problem domains, recursion is a broad technique used in a wide range of algorithms. Recursion involves breaking down a problem into smaller, more manageable parts, and a function calling itself to solve these smaller parts.

To visualize recursion, consider the task of calculating factorial of a number. The factorial of a number n (denoted as n! ) is the product of all positive integers less than or equal to n . For instance, the factorial of 5 ( 5! ) is 5 x 4 x 3 x 2 x 1 = 120 . A recursive algorithm for finding factorial of n would involve multiplying n by the factorial of n-1 . The function keeps calling itself with a smaller value of n each time until it reaches a point where n is equal to 1, at which point it starts returning values back up the chain.
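The factorial description above maps almost directly onto code. The following is a minimal Python sketch; treating 0 the same as 1 in the base case and rejecting negative input are choices made for this example.

```python
def factorial(n):
    if n < 0:
        raise ValueError("factorial is not defined for negative numbers")
    if n <= 1:
        return 1  # base case: stops the chain of recursive calls
    return n * factorial(n - 1)  # recursive case: a smaller subproblem


print(factorial(5))  # prints 120
```

The base case is what allows the calls to start returning values back up the chain; without it, the function would keep calling itself until Python raises a `RecursionError`.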

Algorithms are truly the heart of computer science, transforming raw data into valuable information and insight. Understanding their functionality and purpose is key to progressing in your computer science journey. As you continue your exploration, remember that each algorithm you encounter, no matter how complex it may seem, is simply a step-by-step procedure to solve a problem.

We’ve just scratched the surface of the fascinating world of algorithms. With time, patience, and practice, you will learn to create your own algorithms and start solving problems with confidence and efficiency.


What is an Algorithm? Algorithm Definition for Computer Science Beginners

If you’re a student and want to study computer science, or you’re learning to code, then there’s a chance you’ve heard of algorithms. Simply put, an algorithm is a set of instructions that performs a particular action.

Contrary to popular belief, an algorithm is not some piece of code that requires extremely advanced knowledge in order to implement. At the same time, I won't say that an algorithm is easy to implement, either. Some can be, but it depends on what you're trying to do.

In the end, the best way to get better at algorithms is by practicing them regularly.

In this article, you'll learn all about algorithms so you'll be prepared next time you encounter one, or have to write one yourself. I will also share some freeCodeCamp resources that will help you learn how to write algorithms in different languages.

What We'll Cover

- What Exactly Is an Algorithm?

- Why Do You Need an Algorithm?

- Types of Algorithms

- Which Programming Language Is Best for Writing Algorithms?

- Resources for Learning Algorithms

What Exactly Is an Algorithm?

An algorithm is a set of steps for solving a known problem. Most algorithms are implemented to run following the four steps below:

- take an input

- access that input and make sure it's correct

- show the result

- terminate (the stage where the algorithm stops running)

Some steps of the algorithm may run repeatedly, but in the end, termination is what ends an algorithm.

For example, the algorithm below sorts numbers in descending order. It loops through the numbers specified until it arranges them in descending order, then terminates when there are no more numbers to sort:
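The original listing is not reproduced in this text. As a stand-in, here is a minimal Python sketch of one way to sort numbers in descending order, using selection sort. The choice of selection sort and the function name `sort_descending` are assumptions made for this example, not the author's code.

```python
def sort_descending(numbers):
    result = list(numbers)  # take the input and copy it
    for i in range(len(result)):
        # Find the largest remaining value and move it to position i.
        largest = i
        for j in range(i + 1, len(result)):
            if result[j] > result[largest]:
                largest = j
        result[i], result[largest] = result[largest], result[i]
    return result  # the loops end once every position has been filled


print(sort_descending([4, 9, 1, 7]))  # prints [9, 7, 4, 1]
```

The sketch follows the four stages listed earlier: it takes an input, processes it, produces a result, and terminates when no numbers are left to place.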

As a more theoretical example, an algorithm for dividing two numbers and showing the remainder could run through the steps below:

- Step 1 : the user enters the first and second numbers – the dividend and the divisor

- Step 2 : the algorithm written to perform the division takes in the numbers, divides the dividend by the divisor, and checks for a remainder.

- Step 3 : the result of the division and remainder is shown to the user

- Step 4 : the algorithm terminates

Here's how that kind of algorithm is implemented in JavaScript:

If there's an error, the algorithm may not run, or might return the wrong output. If the programmer who wrote the algorithm took user experience into consideration, then an error handler could show an error to the user and let them know what to do.
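The division steps and the error handling described above can be sketched in Python (the original JavaScript listing is not included in this text). This is an illustration only, assuming integer input; the function name `divide_with_remainder` and the error message are hypothetical.

```python
def divide_with_remainder(dividend, divisor):
    # Check the input first: division by zero has no valid result.
    if divisor == 0:
        raise ValueError("the divisor must not be zero")
    # divmod returns the quotient and the remainder in one step.
    quotient, remainder = divmod(dividend, divisor)
    return quotient, remainder


try:
    q, r = divide_with_remainder(17, 5)
    print(f"17 / 5 = {q} remainder {r}")  # prints 17 / 5 = 3 remainder 2
    divide_with_remainder(17, 0)          # triggers the error handler below
except ValueError as error:
    print(f"Error: {error}")  # tells the user what went wrong
```

Raising an error for a zero divisor, instead of returning a wrong value, gives the calling code a chance to show the user a helpful message.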

Why do you Need an Algorithm?

If you’re one of those computer science students asking “why algorithms”, here are some reasons why you should learn about them:

Problem Solving : being able to write an algorithm improves your problem-solving capacity. It is a common belief that once you can solve a problem with one thing, you can solve problems with another closely related one. So, if you can solve problems with Python, you can solve problems with JavaScript.

Scalability : an algorithm helps your software/application/website respond appropriately to demands.

Proper Utilization of Resources: choosing the right algorithm ensures proper utilization of resources such as memory, storage, network, and others.

Types of Algorithms

Algorithms in computer science can be broadly categorized into searching and sorting algorithms:

- Sorting – selection sort, bubble sort, insertion sort, merge sort, quick sort, and so on.

- Searching – binary search, exponential search, jump search, and so on.

But there are many types of algorithms that programmers use regularly. Here are some other common algorithm types organized by category:

- Hashing – SHA-256, SHA-1

- Brute force – trial and error

- Divide and conquer – merge sort algorithm

- Greedy – Prim's algorithm, Kruskal's algorithm

- Recursive – computing factorials
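As a concrete instance of the hashing category in the list above, Python's standard `hashlib` module provides SHA-256. The sketch below is only an illustration; the helper name `sha256_hex` and the sample strings are made up for the example.

```python
import hashlib


def sha256_hex(text):
    # Hash the UTF-8 bytes of the text and return the digest as hex.
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


digest = sha256_hex("hello")
print(len(digest))                                 # prints 64
print(sha256_hex("hello") == sha256_hex("hello"))  # prints True
print(sha256_hex("hello") == sha256_hex("Hello"))  # prints False
```

The same input always produces the same 64-character digest, while even a one-letter change in the input produces a completely different one.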

Which Programming Language Is Best for Writing Algorithms?

You can write algorithms in any programming language. There's no benefit to using one language over another.

Every language has its strengths and weaknesses, and each has unique syntax and features. So writing an algorithm might look different in one language compared to another.

But algorithms are universal concepts. So if you can write bubble sort in Python, you should also be able to write it in JavaScript or C#.

Resources for Learning Algorithms

Here are some videos from the freeCodeCamp YouTube channel that can help you learn algorithms effectively:

- Algorithms and Data Structures Tutorial - Full Course for Beginners

- Algorithms in Python – Full Course for Beginners

- Data Structures Easy to Advanced Course - Full Tutorial from a Google Engineer

- Dynamic Programming - Learn to Solve Algorithmic Problems & Coding Challenges

- Understanding Sorting Algorithms

Also, the interactive JavaScript Algorithms and Data Structures Certification on freeCodeCamp can give you a crash course in algorithmic thinking in JavaScript.

In this article, we went over what an algorithm is, the main types of algorithms, and resources for learning them.

If you read this far, the next thing you should do is start learning algorithms with one or more of the resources listed in this article.

Thank you for reading.


Credit: Alejandro Giraldo

Ideas Made to Matter

How to use algorithms to solve everyday problems

Kara Baskin

May 8, 2017

How can I navigate the grocery store quickly? Why doesn’t anyone like my Facebook status? How can I alphabetize my bookshelves in a hurry? Apple data visualizer and MIT System Design and Management graduate Ali Almossawi solves these common dilemmas and more in his new book, “Bad Choices: How Algorithms Can Help You Think Smarter and Live Happier,” a quirky, illustrated guide to algorithmic thinking.

For the uninitiated: What is an algorithm? And how can algorithms help us to think smarter?

An algorithm is a process with unambiguous steps that has a beginning and an end, and does something useful.

Algorithmic thinking is taking a step back and asking, “If it’s the case that algorithms are so useful in computing to achieve predictability, might they also be useful in everyday life, when it comes to, say, deciding between alternative ways of solving a problem or completing a task?” In all cases, we optimize for efficiency: We care about time or space.

Note the mention of “deciding between.” Computer scientists do that all the time, and I was convinced that the tools they use to evaluate competing algorithms would be of interest to a broad audience.

Why did you write this book, and who can benefit from it?

All the books I came across that tried to introduce computer science involved coding. My approach to making algorithms compelling was focusing on comparisons. I take algorithms and put them in a scene from everyday life, such as matching socks from a pile, putting books on a shelf, remembering things, driving from one point to another, or cutting an onion. These activities can be mapped to one or more fundamental algorithms, which form the basis for the field of computing and have far-reaching applications and uses.

I wrote the book with two audiences in mind. One, anyone, be it a learner or an educator, who is interested in computer science and wants an engaging and lighthearted, but not a dumbed-down, introduction to the field. Two, anyone who is already familiar with the field and wants to experience a way of explaining some of the fundamental concepts in computer science differently than how they’re taught.

I’m going to the grocery store and only have 15 minutes. What do I do?

Do you know what the grocery store looks like ahead of time? If you know what it looks like, it determines your list. How do you prioritize things on your list? Order the items in a way that allows you to avoid walking down the same aisles twice.

For me, the intriguing thing is that the grocery store is a scene from everyday life that I can use as a launch pad to talk about various related topics, like priority queues and graphs and hashing. For instance, what is the most efficient way for a machine to store a prioritized list, and what happens when the equivalent of you scratching an item from a list happens in the machine’s list? How is a store analogous to a graph (an abstraction in computer science and mathematics that defines how things are connected), and how is navigating the aisles in a store analogous to traversing a graph?

Nobody follows me on Instagram. How do I get more followers?

The concept of links and networks, which I cover in Chapter 6, is relevant here. It’s much easier to get to people whom you might be interested in and who might be interested in you if you can start within the ball of links that connects those people, rather than starting at a random spot.

You mention Instagram: There, the hashtag is one way to enter that ball of links. Tag your photos, engage with users who tag their photos with the same hashtags, and you should be on your way to stardom.

What are the secret ingredients of a successful Facebook post?

I’ve posted things on social media that have died a sad death and then posted the same thing at a later date that somehow did great. Again, if we think of it in terms that are relevant to algorithms, we’d say that the challenge with making something go viral is really getting that first spark. And to get that first spark, a person who is connected to the largest number of people who are likely to engage with that post, needs to share it.

With [my first book], “Bad Arguments,” I spent a month pouring close to $5,000 into advertising for that project with moderate results. And then one science journalist with a large audience wrote about it, and the project took off and hasn’t stopped since.

What problems do you wish you could solve via algorithm but can’t?

When we care about efficiency, thinking in terms of algorithms is useful. There are cases when that’s not the quality we want to optimize for — for instance, learning or love. I walk for several miles every day, all throughout the city, as I find it relaxing. I’ve never asked myself, “What’s the most efficient way I can traverse the streets of San Francisco?” It’s not relevant to my objective.

Algorithms are a great way of thinking about efficiency, but the question has to be, “What approach can you optimize for that objective?” That’s what worries me about self-help: Books give you a silver bullet for doing everything “right” but leave out all the nuances that make us different. What works for you might not work for me.

Which companies use algorithms well?

When you read that the overwhelming majority of the shows that users of, say, Netflix, watch are due to Netflix’s recommendation engine, you know they’re doing something right.

Problem Solving and Algorithms

Learn a basic process for developing a solution to a problem. Nothing in this chapter is unique to using a computer to solve a problem. This process can be used to solve a wide variety of problems, including ones that have nothing to do with computers.

Problems, Solutions, and Tools

I have a problem! I need to thank Aunt Kay for the birthday present she sent me. I could send a thank you note through the mail. I could call her on the telephone. I could send her an email message. I could drive to her house and thank her in person. In fact, there are many ways I could thank her, but that's not the point. The point is that I must decide how I want to solve the problem, and use the appropriate tool to implement (carry out) my plan. The postal service, the telephone, the internet, and my automobile are tools that I can use, but none of these actually solves my problem. In a similar way, a computer does not solve problems, it's just a tool that I can use to implement my plan for solving the problem.

Knowing that Aunt Kay appreciates creative and unusual things, I have decided to hire a singing messenger to deliver my thanks. In this context, the messenger is a tool, but one that needs instructions from me. I have to tell the messenger where Aunt Kay lives, what time I would like the message to be delivered, and what lyrics I want sung. A computer program is similar to my instructions to the messenger.

The story of Aunt Kay uses a familiar context to set the stage for a useful point of view concerning computers and computer programs. The following list summarizes the key aspects of this point of view.

A computer is a tool that can be used to implement a plan for solving a problem.

A computer program is a set of instructions for a computer. These instructions describe the steps that the computer must follow to implement a plan.

An algorithm is a plan for solving a problem.

A person must design an algorithm.

A person must translate an algorithm into a computer program.

This point of view sets the stage for a process that we will use to develop solutions to Jeroo problems. The basic process is important because it can be used to solve a wide variety of problems, including ones where the solution will be written in some other programming language.

An Algorithm Development Process

Every problem solution starts with a plan. That plan is called an algorithm.

There are many ways to write an algorithm. Some are very informal, some are quite formal and mathematical in nature, and some are quite graphical. The instructions for connecting a DVD player to a television are an algorithm. A mathematical formula such as πR² is a special case of an algorithm. The form is not particularly important as long as it provides a good way to describe and check the logic of the plan.

The development of an algorithm (a plan) is a key step in solving a problem. Once we have an algorithm, we can translate it into a computer program in some programming language. Our algorithm development process consists of five major steps.

Step 1: Obtain a description of the problem.

Step 2: Analyze the problem.

Step 3: Develop a high-level algorithm.

Step 4: Refine the algorithm by adding more detail.

Step 5: Review the algorithm.

Step 1: Obtain a description of the problem.

This step is much more difficult than it appears. In the following discussion, the word client refers to someone who wants to find a solution to a problem, and the word developer refers to someone who finds a way to solve the problem. The developer must create an algorithm that will solve the client's problem.

The client is responsible for creating a description of the problem, but this is often the weakest part of the process. It's quite common for a problem description to suffer from one or more of the following types of defects: (1) the description relies on unstated assumptions, (2) the description is ambiguous, (3) the description is incomplete, or (4) the description has internal contradictions. These defects are seldom due to carelessness by the client. Instead, they are due to the fact that natural languages (English, French, Korean, etc.) are rather imprecise. Part of the developer's responsibility is to identify defects in the description of a problem, and to work with the client to remedy those defects.

Step 2: Analyze the problem.

The purpose of this step is to determine both the starting and ending points for solving the problem. This process is analogous to a mathematician determining what is given and what must be proven. A good problem description makes it easier to perform this step.

When determining the starting point, we should start by seeking answers to the following questions:

What data are available?

Where is that data?

What formulas pertain to the problem?

What rules exist for working with the data?

What relationships exist among the data values?

When determining the ending point, we need to describe the characteristics of a solution. In other words, how will we know when we're done? Asking the following questions often helps to determine the ending point.

What new facts will we have?

What items will have changed?

What changes will have been made to those items?

What things will no longer exist?

Step 3: Develop a high-level algorithm.

An algorithm is a plan for solving a problem, but plans come in several levels of detail. It's usually better to start with a high-level algorithm that includes the major part of a solution, but leaves the details until later. We can use an everyday example to demonstrate a high-level algorithm.

Problem: I need to send a birthday card to my brother, Mark.

Analysis: I don't have a card. I prefer to buy a card rather than make one myself.

High-level algorithm:

Go to a store that sells greeting cards

Select a card

Purchase a card

Mail the card

This algorithm is satisfactory for daily use, but it lacks details that would have to be added were a computer to carry out the solution. These details include answers to questions such as the following.

"Which store will I visit?"

"How will I get there: walk, drive, ride my bicycle, take the bus?"

"What kind of card does Mark like: humorous, sentimental, risqué?"

These kinds of details are considered in the next step of our process.

Step 4: Refine the algorithm by adding more detail.

A high-level algorithm shows the major steps that need to be followed to solve a problem. Now we need to add details to these steps, but how much detail should we add? Unfortunately, the answer to this question depends on the situation. We have to consider who (or what) is going to implement the algorithm and how much that person (or thing) already knows how to do. If someone is going to purchase Mark's birthday card on my behalf, my instructions have to be adapted to whether or not that person is familiar with the stores in the community and how well the purchaser knows my brother's taste in greeting cards.

When our goal is to develop algorithms that will lead to computer programs, we need to consider the capabilities of the computer and provide enough detail so that someone else could use our algorithm to write a computer program that follows the steps in our algorithm. As with the birthday card problem, we need to adjust the level of detail to match the ability of the programmer. When in doubt, or when you are learning, it is better to have too much detail than to have too little.

Most of our examples will move from a high-level to a detailed algorithm in a single step, but this is not always reasonable. For larger, more complex problems, it is common to go through this process several times, developing intermediate level algorithms as we go. Each time, we add more detail to the previous algorithm, stopping when we see no benefit to further refinement. This technique of gradually working from a high-level to a detailed algorithm is often called stepwise refinement.

Step 5: Review the algorithm.

The final step is to review the algorithm. What are we looking for? First, we need to work through the algorithm step by step to determine whether or not it will solve the original problem. Once we are satisfied that the algorithm does provide a solution to the problem, we start to look for other things. The following questions are typical of ones that should be asked whenever we review an algorithm. Asking these questions and seeking their answers is a good way to develop skills that can be applied to the next problem.

Does this algorithm solve a very specific problem or does it solve a more general problem? If it solves a very specific problem, should it be generalized?

For example, an algorithm that computes the area of a circle having radius 5.2 meters (formula π*5.2²) solves a very specific problem, but an algorithm that computes the area of any circle (formula π*R²) solves a more general problem.
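The difference between the specific and the general version can be sketched in Python. The function names here are illustrative assumptions, not something taken from the chapter.

```python
import math


def area_of_circle_5_2():
    """Specific: only answers the question for a radius of 5.2 meters."""
    return math.pi * 5.2 ** 2


def area_of_circle(radius):
    """General: answers the same question for any radius."""
    return math.pi * radius ** 2


# The general version covers the specific case as one of many inputs.
print(area_of_circle(5.2) == area_of_circle_5_2())
```

The general function costs nothing extra to write, and the radius becomes an input instead of a constant buried in the formula.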

Can this algorithm be simplified?

One formula for computing the perimeter of a rectangle is:

length + width + length + width

A simpler formula would be:

2.0 * ( length + width )
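As a quick check that the simplification does not change the result, both formulas can be written as small Python functions and compared. The function names are assumptions made for this sketch.

```python
import math


def perimeter_long(length, width):
    """Original formula: add the four sides one by one."""
    return length + width + length + width


def perimeter_short(length, width):
    """Simplified formula: twice the sum of length and width."""
    return 2.0 * (length + width)


# Compare the two formulas on a few sample rectangles.
for length, width in [(3.0, 4.5), (10.0, 2.0), (0.5, 0.25)]:
    same = math.isclose(perimeter_long(length, width), perimeter_short(length, width))
    print(length, width, same)
```

`math.isclose` is used instead of `==` because floating-point sums can differ in the last digit when the order of operations changes.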

Is this solution similar to the solution to another problem? How are they alike? How are they different?

For example, consider the following two formulae:

Rectangle area = length * width

Triangle area = 0.5 * base * height

Similarities: Each computes an area. Each multiplies two measurements.

Differences: Different measurements are used. The triangle formula contains 0.5.

Hypothesis: Perhaps every area formula involves multiplying two measurements.

Example 4.1: Pick and Plant

This section contains an extended example that demonstrates the algorithm development process. To complete the algorithm, we need to know that every Jeroo can hop forward, turn left and right, pick a flower from its current location, and plant a flower at its current location.

Problem Statement (Step 1)

A Jeroo starts at (0, 0) facing East with no flowers in its pouch. There is a flower at location (3, 0). Write a program that directs the Jeroo to pick the flower and plant it at location (3, 2). After planting the flower, the Jeroo should hop one space East and stop. There are no other nets, flowers, or Jeroos on the island.

Analysis of the Problem (Step 2)

The flower is exactly three spaces ahead of the Jeroo.

The flower is to be planted exactly two spaces South of its current location.

The Jeroo is to finish facing East one space East of the planted flower.

There are no nets to worry about.

High-level Algorithm (Step 3)

Let's name the Jeroo Bobby. Bobby should do the following:

Get the flower

Put the flower

Hop East

Detailed Algorithm (Step 4)

Get the flower
    Hop 3 times
    Pick the flower

Put the flower
    Turn right
    Hop 2 times
    Plant a flower

Hop East
    Turn left
    Hop once

Review the Algorithm (Step 5)

The high-level algorithm partitioned the problem into three rather easy subproblems. This seems like a good technique.

This algorithm solves a very specific problem because the Jeroo and the flower are in very specific locations.

This algorithm is actually a solution to a slightly more general problem in which the Jeroo starts anywhere, and the flower is 3 spaces directly ahead of the Jeroo.
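One way to work through the detailed algorithm step by step is to trace it with a tiny simulation. The sketch below is in Python rather than Java, and the `Jeroo` class in it is a hypothetical stand-in written for this example; it is not the real Jeroo API. It assumes (x, y) coordinates in which x grows to the East and y grows to the South, which matches the problem statement.

```python
# Hypothetical stand-in for a Jeroo; this is NOT the real Jeroo API.
# Coordinates are (x, y): x grows to the East, y grows to the South.
HEADINGS = ["N", "E", "S", "W"]  # clockwise order
STEP = {"N": (0, -1), "E": (1, 0), "S": (0, 1), "W": (-1, 0)}


class Jeroo:
    def __init__(self, x, y, heading, flowers, island_flowers):
        self.x, self.y = x, y
        self.heading = heading
        self.flowers = flowers                # flowers in the pouch
        self.island_flowers = island_flowers  # set of (x, y) flower locations

    def hop(self, times=1):
        dx, dy = STEP[self.heading]
        self.x += dx * times
        self.y += dy * times

    def turn_right(self):
        self.heading = HEADINGS[(HEADINGS.index(self.heading) + 1) % 4]

    def turn_left(self):
        self.heading = HEADINGS[(HEADINGS.index(self.heading) - 1) % 4]

    def pick(self):
        if (self.x, self.y) in self.island_flowers:
            self.island_flowers.remove((self.x, self.y))
            self.flowers += 1

    def plant(self):
        if self.flowers > 0:
            self.flowers -= 1
            self.island_flowers.add((self.x, self.y))


def pick_and_plant():
    island_flowers = {(3, 0)}
    bobby = Jeroo(0, 0, "E", 0, island_flowers)
    # Get the flower
    bobby.hop(3)
    bobby.pick()
    # Put the flower
    bobby.turn_right()
    bobby.hop(2)
    bobby.plant()
    # Hop East
    bobby.turn_left()
    bobby.hop()
    return bobby, island_flowers


bobby, flowers = pick_and_plant()
print((bobby.x, bobby.y), bobby.heading, bobby.flowers, flowers)
# prints: (4, 2) E 0 {(3, 2)}
```

The trace ends with the flower planted at (3, 2) and the Jeroo one space East of it, facing East, which is what the problem statement asks for.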

Java Code for "Pick and Plant"

A good programmer doesn't write a program all at once. Instead, the programmer will write and test the program in a series of builds. Each build adds to the previous one. The high-level algorithm will guide us in this process.

FIRST BUILD

To see this solution in action, create a new Greenfoot4Sofia scenario and use the Edit Palettes Jeroo menu command to make the Jeroo classes visible. Right-click on the Island class and create a new subclass with the name of your choice. This subclass will hold your new code.

The recommended first build contains three things:

The main method (here myProgram() in your island subclass).

Declaration and instantiation of every Jeroo that will be used.

The high-level algorithm in the form of comments.

The instantiation at the beginning of myProgram() places bobby at (0, 0), facing East, with no flowers.

Once the first build is working correctly, we can proceed to the others. In this case, each build will correspond to one step in the high-level algorithm. It may seem like a lot of work to use four builds for such a simple program, but doing so helps establish habits that will become invaluable as the programs become more complex.

SECOND BUILD

This build adds the logic to "get the flower", which in the detailed algorithm (step 4 above) consists of hopping 3 times and then picking the flower. The new code is indicated by comments that wouldn't appear in the original (they are just here to call attention to the additions). The blank lines help show the organization of the logic.

By taking a moment to run the work so far, you can confirm whether or not this step in the planned algorithm works as expected.

THIRD BUILD

This build adds the logic to "put the flower". New code is indicated by the comments that are provided here to mark the additions.

FOURTH BUILD (final)

Example 4.2: Replace Net with Flower

This section contains a second example that demonstrates the algorithm development process.

Problem Statement (Step 1)

There are two Jeroos. One Jeroo starts at (0, 0) facing North with one flower in its pouch. The second starts at (0, 2) facing East with one flower in its pouch. There is a net at location (3, 2). Write a program that directs the first Jeroo to give its flower to the second one. After receiving the flower, the second Jeroo must disable the net, and plant a flower in its place. After planting the flower, the Jeroo must turn and face South. There are no other nets, flowers, or Jeroos on the island.

Analysis of the Problem (Step 2)

Jeroo_2 is exactly two spaces behind Jeroo_1.

The only net is exactly three spaces ahead of Jeroo_2.

Each Jeroo has exactly one flower.

Jeroo_2 will have two flowers after receiving one from Jeroo_1. One flower must be used to disable the net. The other flower must be planted at the location of the net, i.e. (3, 2).

Jeroo_1 will finish at (0, 1) facing South.

Jeroo_2 is to finish at (3, 2) facing South.

Each Jeroo will finish with 0 flowers in its pouch. One flower was used to disable the net, and the other was planted.

High-level Algorithm (Step 3)

Let's name the first Jeroo Ann and the second one Andy.

Ann should do the following:
    Find Andy (but don't collide with him)
    Give a flower to Andy (he will be straight ahead)

After receiving the flower, Andy should do the following:
    Find the net (but don't hop onto it)
    Disable the net
    Plant a flower at the location of the net
    Face South

Detailed Algorithm (Step 4)

Ann should do the following:
    Find Andy
        Turn around (either left or right twice)
        Hop (to location (0, 1))
    Give a flower to Andy
        Give ahead

Now Andy should do the following:
    Find the net
        Hop twice (to location (2, 2))
    Disable the net
        Toss
    Plant a flower at the location of the net
        Hop (to location (3, 2))
        Plant a flower
    Face South
        Turn right

Review the Algorithm (Step 5)

The high-level algorithm helps manage the details.

This algorithm solves a very specific problem, but the specific locations are not important. The only thing that is important is the starting location of the Jeroos relative to one another and the location of the net relative to the second Jeroo's location and direction.
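The detailed algorithm for Ann and Andy can be traced the same way. The following Python sketch uses hypothetical `Island` and `Jeroo` classes invented for this illustration; they only mimic the behaviour described in the text and are not the real Jeroo API. As an added assumption, `hop` raises an error if a Jeroo would land on a net or on another Jeroo, so a mistake in the plan shows up immediately.

```python
# Hypothetical model of the island; this is NOT the real Jeroo API.
# Coordinates are (x, y): x grows to the East, y grows to the South.
HEADINGS = ["N", "E", "S", "W"]  # clockwise order
STEP = {"N": (0, -1), "E": (1, 0), "S": (0, 1), "W": (-1, 0)}


class Island:
    def __init__(self, nets):
        self.nets = set(nets)
        self.flowers = set()
        self.jeroos = []


class Jeroo:
    def __init__(self, island, x, y, heading, flowers):
        self.island, self.x, self.y = island, x, y
        self.heading, self.flowers = heading, flowers
        island.jeroos.append(self)

    def ahead(self):
        dx, dy = STEP[self.heading]
        return (self.x + dx, self.y + dy)

    def hop(self, times=1):
        for _ in range(times):
            target = self.ahead()
            occupied = any((j.x, j.y) == target for j in self.island.jeroos)
            if target in self.island.nets or occupied:
                raise RuntimeError(f"cannot hop onto {target}")
            self.x, self.y = target

    def turn_left(self):
        self.heading = HEADINGS[(HEADINGS.index(self.heading) - 1) % 4]

    def turn_right(self):
        self.heading = HEADINGS[(HEADINGS.index(self.heading) + 1) % 4]

    def give_ahead(self):
        # Hand one flower to the Jeroo standing directly ahead, if any.
        for other in self.island.jeroos:
            if (other.x, other.y) == self.ahead() and self.flowers > 0:
                self.flowers -= 1
                other.flowers += 1

    def toss(self):
        # Throw one flower ahead; it disables a net if one is there.
        if self.flowers > 0:
            self.flowers -= 1
            self.island.nets.discard(self.ahead())

    def plant(self):
        if self.flowers > 0:
            self.flowers -= 1
            self.island.flowers.add((self.x, self.y))


def replace_net_with_flower():
    island = Island(nets=[(3, 2)])
    ann = Jeroo(island, 0, 0, "N", 1)
    andy = Jeroo(island, 0, 2, "E", 1)
    # Ann: find Andy, then give him a flower
    ann.turn_left()
    ann.turn_left()
    ann.hop()
    ann.give_ahead()
    # Andy: find the net, disable it, plant a flower, face South
    andy.hop(2)
    andy.toss()
    andy.hop()
    andy.plant()
    andy.turn_right()
    return island, ann, andy


island, ann, andy = replace_net_with_flower()
print((ann.x, ann.y), ann.heading, ann.flowers)
print((andy.x, andy.y), andy.heading, andy.flowers)
print(island.nets, island.flowers)
```

Running the trace leaves Ann at (0, 1) and Andy at (3, 2), both facing South with empty pouches, and a flower where the net used to be, which agrees with the analysis in Step 2.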

Java Code for "Replace Net with Flower"

As before, the code should be written incrementally as a series of builds. Four builds will be suitable for this problem. As usual, the first build will contain the main method, the declaration and instantiation of the Jeroo objects, and the high-level algorithm in the form of comments. The second build will have Ann give her flower to Andy. The third build will have Andy locate and disable the net. In the final build, Andy will place the flower and turn South.

FIRST BUILD

This build creates the main method, instantiates the Jeroos, and outlines the high-level algorithm. In this example, the main method would be myProgram() contained within a subclass of Island.

SECOND BUILD

This build adds the logic for Ann to locate Andy and give him a flower.

THIRD BUILD

This build adds the logic for Andy to locate and disable the net.

FOURTH BUILD (final)

This build adds the logic for Andy to place a flower at (3, 2) and turn South.

Unit 1: Algorithms

About this unit.

We've partnered with Dartmouth College professors Tom Cormen and Devin Balkcom to teach introductory computer science algorithms, including searching, sorting, recursion, and graph theory. Learn with a combination of articles, visualizations, quizzes, and coding challenges.

Intro to algorithms

- What is an algorithm and why should you care?

- A guessing game

- Route-finding

- Discuss: Algorithms in your life

Binary search

- Binary search

- Implementing binary search of an array

- Challenge: Binary search

- Running time of binary search

- Running time of binary search (practice, 5 questions)

Asymptotic notation

- Asymptotic notation

- Big-Θ (Big-Theta) notation

- Functions in asymptotic notation

- Big-O notation

- Big-Ω (Big-Omega) notation

- Comparing function growth (practice, 4 questions)

- Asymptotic notation (practice, 5 questions)

Selection sort

- Sorting

- Challenge: implement swap

- Selection sort pseudocode

- Challenge: Find minimum in subarray

- Challenge: implement selection sort

- Analysis of selection sort

- Project: Selection sort visualizer

Insertion sort

- Insertion sort

- Challenge: implement insert

- Insertion sort pseudocode

- Challenge: Implement insertion sort

- Analysis of insertion sort

Recursive algorithms

- Recursion

- The factorial function

- Challenge: Iterative factorial

- Recursive factorial

- Challenge: Recursive factorial

- Properties of recursive algorithms

- Using recursion to determine whether a word is a palindrome

- Challenge: is a string a palindrome?

- Computing powers of a number

- Challenge: Recursive powers

- Multiple recursion with the Sierpinski gasket

- Improving efficiency of recursive functions

- Project: Recursive art

Towers of Hanoi

- Towers of Hanoi

- Towers of Hanoi, continued

- Challenge: Solve Hanoi recursively

- Move three disks in Towers of Hanoi (practice, 3 questions)

Merge sort

- Divide and conquer algorithms

- Overview of merge sort

- Challenge: Implement merge sort

- Linear-time merging

- Challenge: Implement merge

- Analysis of merge sort

Quick sort

- Overview of quicksort

- Challenge: Implement quicksort

- Linear-time partitioning

- Challenge: Implement partition

- Analysis of quicksort

Graph representation

- Describing graphs

- Representing graphs

- Challenge: Store a graph

- Describing graphs (practice, 6 questions)

- Representing graphs (practice, 5 questions)

Breadth-first search

- Breadth-first search and its uses

- The breadth-first search algorithm

- Challenge: Implement breadth-first search

- Analysis of breadth-first search

Further learning

- Where to go from here

What is Algorithm | Introduction to Algorithms

Definition of Algorithm

The word “algorithm” means “a set of finite rules or instructions to be followed in calculations or other problem-solving operations” or “a procedure for solving a mathematical problem in a finite number of steps that frequently involves recursive operations”.

Therefore, an algorithm is a finite sequence of steps for solving a particular problem.

Use of the Algorithms:

Algorithms play a crucial role in various fields and have many applications. Some of the key areas where algorithms are used include:

- Computer Science: Algorithms form the basis of computer programming and are used to solve problems ranging from simple sorting and searching to complex tasks such as artificial intelligence and machine learning.

- Mathematics: Algorithms are used to solve mathematical problems, such as solving a system of linear equations or finding the shortest path in a graph.

- Operations Research: Algorithms are used to optimize and make decisions in fields such as transportation, logistics, and resource allocation.

- Artificial Intelligence: Algorithms are the foundation of artificial intelligence and machine learning, and are used to develop intelligent systems that can perform tasks such as image recognition, natural language processing, and decision-making.

- Data Science: Algorithms are used to analyze, process, and extract insights from large amounts of data in fields such as marketing, finance, and healthcare.

These are just a few examples of the many applications of algorithms. The use of algorithms is continually expanding as new technologies and fields emerge, making it a vital component of modern society.

Algorithms can be simple or complex, depending on what you want to achieve.

This can be understood through the example of cooking a new recipe. To cook a new recipe, one reads the instructions and executes the steps one by one, in the given sequence. The result is that the new dish is cooked as intended. Every time you use your phone, computer, laptop, or calculator, you are using algorithms. Similarly, in programming, algorithms help to carry out a task and produce the expected output.

Algorithms are designed to be language-independent, i.e. they are just plain instructions that can be implemented in any language, and the output will be the same, as expected.

What is the need for algorithms?

- Algorithms are necessary for solving complex problems efficiently and effectively.

- They help to automate processes and make them more reliable, faster, and easier to perform.

- Algorithms also enable computers to perform tasks that would be difficult or impossible for humans to do manually.

- They are used in various fields such as mathematics, computer science, engineering, finance, and many others to optimize processes, analyze data, make predictions, and provide solutions to problems.

What are the Characteristics of an Algorithm?

Just as one would follow only a standard recipe rather than any set of written instructions, not all written instructions for programming are an algorithm. For a set of instructions to be an algorithm, it must have the following characteristics:

- Clear and Unambiguous: The algorithm should be unambiguous. Each of its steps should be clear in all aspects and must lead to only one meaning.

- Well-Defined Inputs: An algorithm may or may not take input. If it does take inputs, they should be well-defined.

- Well-Defined Outputs: The algorithm must clearly define what output will be yielded, and the output should be well-defined as well. It should produce at least one output.

- Finiteness: The algorithm must be finite, i.e. it should terminate after a finite time.

- Feasible: The algorithm must be simple, generic, and practical, such that it can be executed with the available resources. It must not depend on technology that does not yet exist.

- Language Independent: The algorithm must be language-independent, i.e. it must be just plain instructions that can be implemented in any language, and the output will be the same, as expected.

- Input: An algorithm has zero or more inputs. Each instruction that contains a fundamental operator must accept zero or more inputs.

- Output: An algorithm produces at least one output.

- Definiteness: All instructions in an algorithm must be unambiguous, precise, and easy to interpret. By referring to any of the instructions in an algorithm, one can clearly understand what is to be done. Every fundamental operator in an instruction must be defined without any ambiguity.

- Finiteness: An algorithm must terminate after a finite number of steps in all test cases. Every instruction that contains a fundamental operator must terminate within a finite amount of time. Infinite loops or recursive functions without base conditions do not possess finiteness.

- Effectiveness: An algorithm must be developed using very basic, simple, and feasible operations so that one can trace it out using just paper and pencil.

Properties of Algorithm:

- It should terminate after a finite time.

- It should produce at least one output.

- It should take zero or more input.

- It should be deterministic, meaning that it gives the same output for the same input.

- Every step in the algorithm must be effective, i.e. every step should do some work.

Types of Algorithms:

There are several types of algorithms available. Some important algorithms are:

1. Brute Force Algorithm:

It is the simplest approach to a problem. A brute force algorithm is the first approach that comes to mind when we see a problem.

2. Recursive Algorithm:

A recursive algorithm is based on recursion. In this case, a problem is broken into several sub-parts, and the same function is called again and again on those sub-parts.

3. Backtracking Algorithm:

The backtracking algorithm builds the solution by searching among all possible solutions. Using this algorithm, we keep building the solution according to the given criteria. Whenever a partial solution fails, we trace back to the failure point, build the next candidate solution, and continue this process until we find a solution or all possible solutions have been examined.
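As a small illustration of backtracking, the Python sketch below looks for a subset of numbers that adds up to a target. The problem choice and the function name `subset_sum` are assumptions made for this example, and the pruning step assumes the numbers are non-negative.

```python
def subset_sum(numbers, target):
    """Return one subset of non-negative numbers adding up to target, or None."""
    chosen = []

    def extend(start, remaining):
        if remaining == 0:
            return True  # the current choices form a solution
        for i in range(start, len(numbers)):
            if numbers[i] > remaining:
                continue  # this choice cannot work, skip it
            chosen.append(numbers[i])        # make a choice
            if extend(i + 1, remaining - numbers[i]):
                return True
            chosen.pop()                     # undo the choice (backtrack)
        return False

    return list(chosen) if extend(0, target) else None


print(subset_sum([3, 34, 4, 12, 5, 2], 9))   # prints: [3, 4, 2]
print(subset_sum([3, 34, 4], 100))           # prints: None
```

Each call extends the partial solution by one number; when an extension fails, the last number is removed and the next candidate is tried.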

4. Searching Algorithm:

Searching algorithms are used for finding elements or groups of elements in a particular data structure. They can be of different types based on their approach or on the data structure in which the element should be found.

5. Sorting Algorithm:

Sorting is arranging a group of data in a particular manner according to the requirement. The algorithms that perform this function are called sorting algorithms. Generally, sorting algorithms are used to sort groups of data in increasing or decreasing order.

6. Hashing Algorithm:

Hashing algorithms work similarly to searching algorithms, but they rely on an index with a key ID. In hashing, a key is assigned to specific data.

7. Divide and Conquer Algorithm:

This algorithm breaks a problem into sub-problems, solves each sub-problem, and merges the solutions to get the final solution. It consists of three steps: divide, solve, and combine.
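Merge sort is a common example of the divide and conquer pattern. The following Python sketch is one possible way to write it, with comments marking the divide, solve, and combine steps.

```python
def merge_sort(items):
    """Return a new sorted list using divide and conquer."""
    if len(items) <= 1:
        return list(items)  # a list of 0 or 1 items is already sorted

    # Divide: split the list into two halves.
    mid = len(items) // 2
    # Solve: sort each half recursively.
    left = merge_sort(items[:mid])
    right = merge_sort(items[mid:])

    # Combine: merge the two sorted halves.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


print(merge_sort([38, 27, 43, 3, 9, 82, 10]))  # prints: [3, 9, 10, 27, 38, 43, 82]
```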

8. Greedy Algorithm:

In this type of algorithm, the solution is built part by part. The solution for the next part is built based on the immediate benefit of the next part. The one solution that gives the most benefit will be chosen as the solution for the next part.
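A classic illustration of the greedy idea is making change with as few coins as possible by always taking the largest coin that still fits. The Python sketch below assumes the coin set 25, 10, 5, 1, for which this strategy happens to give the fewest coins; for arbitrary coin sets a greedy choice can give a worse answer.

```python
def greedy_change(amount, coins=(25, 10, 5, 1)):
    """Make change by repeatedly taking the largest coin that fits."""
    result = []
    for coin in sorted(coins, reverse=True):
        while amount >= coin:
            result.append(coin)  # the choice with the most immediate benefit
            amount -= coin
    return result


print(greedy_change(63))  # prints: [25, 25, 10, 1, 1, 1]
```

Each step commits to the locally best coin and never revisits that decision, which is what distinguishes a greedy algorithm from backtracking or dynamic programming.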

9. Dynamic Programming Algorithm:

This algorithm reuses solutions that have already been found to avoid repeatedly calculating the same part of the problem. It divides the problem into smaller overlapping subproblems and solves them.
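The Fibonacci numbers are a standard small example of overlapping subproblems. The Python sketch below stores each result in a dictionary (the `memo` parameter is an assumption of this example) so that every subproblem is solved only once.

```python
def fib(n, memo=None):
    """Return the n-th Fibonacci number, reusing stored results."""
    if memo is None:
        memo = {}
    if n < 2:
        return n
    if n not in memo:
        # Each subproblem is computed once and then looked up.
        memo[n] = fib(n - 1, memo) + fib(n - 2, memo)
    return memo[n]


print(fib(10))  # prints: 55
print(fib(50))  # prints: 12586269025
```

Without the stored results, the same values would be recomputed many times and the running time would grow exponentially with n.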

10. Randomized Algorithm:

A randomized algorithm uses random numbers to make some of its decisions. The random numbers help in deciding the expected outcome.

To learn more about the types of algorithms, refer to the article “Types of Algorithms”.

Advantages of Algorithms:

- It is easy to understand.

- An algorithm is a step-wise representation of a solution to a given problem.

- In an algorithm, the problem is broken down into smaller pieces or steps; hence, it is easier for the programmer to convert it into an actual program.

Disadvantages of Algorithms:

- Writing an algorithm can be time-consuming.

- Understanding complex logic through algorithms can be very difficult.

- Branching and looping statements are difficult to show in algorithms.

How to Design an Algorithm?

To write an algorithm, the following things are needed as a pre-requisite:

- The problem that is to be solved by this algorithm i.e. clear problem definition.

- The constraints of the problem must be considered while solving the problem.

- The input to be taken to solve the problem.

- The output to be expected when the problem is solved.

- The solution to this problem, within the given constraints.

Then the algorithm is written with the help of the above parameters such that it solves the problem.

Example: Consider the example to add three numbers and print the sum.

Step 1: Fulfilling the pre-requisites

- The problem that is to be solved by this algorithm: Add 3 numbers and print their sum.

- The constraints of the problem that must be considered while solving the problem: The numbers must contain only digits and no other characters.

- The input to be taken to solve the problem: The three numbers to be added.

- The output to be expected when the problem is solved: The sum of the three numbers taken as the input i.e. a single integer value.

- The solution to this problem, in the given constraints: The solution consists of adding the 3 numbers. It can be done with the help of the ‘+’ operator, or bit-wise, or any other method.

Step 2: Designing the algorithm

Now let’s design the algorithm with the help of the above pre-requisites:

- Declare 3 integer variables num1, num2, and num3.

- Take the three numbers, to be added, as inputs in variables num1, num2, and num3 respectively.

- Declare an integer variable sum to store the resultant sum of the 3 numbers.

- Add the 3 numbers and store the result in the variable sum.

- Print the value of the variable sum.

Step 3: Testing the algorithm by implementing it.

To test the algorithm, let’s implement it in C++.

Here is a step-by-step description of the implementation:

- Declare three variables num1, num2, and num3 to store the three numbers to be added.

- Declare a variable sum to store the sum of the three numbers.

- Use the cout statement to prompt the user to enter the first number.

- Use the cin statement to read the first number and store it in num1.

- Use the cout statement to prompt the user to enter the second number.

- Use the cin statement to read the second number and store it in num2.

- Use the cout statement to prompt the user to enter the third number.

- Use the cin statement to read and store the third number in num3.

- Calculate the sum of the three numbers using the + operator and store it in the sum variable.

- Use the cout statement to print the sum of the three numbers.

- The main function returns 0, which indicates the successful execution of the program.

Time complexity: O(1)

Auxiliary Space: O(1)

One problem, many solutions: A problem can have more than one solution. That is, there can be more than one way to implement an algorithm for it. For example, in the above problem of adding 3 numbers, the sum can be calculated in several ways:

- ‘+’ operator

- Bit-wise operators
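As an illustration of the bit-wise route, the sketch below adds numbers using only AND, XOR and shift. The function names are hypothetical, and unsigned 32-bit values are assumed so that the shifts are well defined.

```cpp
#include <cstdint>

// Adds two unsigned 32-bit values without using the + operator.
std::uint32_t addBitwise(std::uint32_t a, std::uint32_t b) {
    while (b != 0) {
        std::uint32_t carry = (a & b) << 1;  // positions that generate a carry
        a = a ^ b;                           // sum of the bits, ignoring carries
        b = carry;                           // carries still to be added
    }
    return a;
}

// Adds three values by applying the two-operand version twice.
std::uint32_t addThreeBitwise(std::uint32_t x, std::uint32_t y, std::uint32_t z) {
    return addBitwise(addBitwise(x, y), z);
}
```

Both approaches produce the same sum; the `+` operator is the natural choice in practice, and the bit-wise version mainly shows that one problem can be solved in different ways.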

How to analyze an Algorithm?

For an algorithm to be good, it must be efficient. Hence the efficiency of an algorithm must be checked and maintained. This can be done in two stages:

1. A Priori Analysis:

“A priori” means “before”. Hence a priori analysis means checking the algorithm before its implementation. Here the algorithm is checked while it is still written as theoretical steps. Its efficiency is measured by assuming that all other factors, for example processor speed, are constant and have no effect on the implementation. This is usually done by the algorithm designer. The analysis is independent of the hardware, the programming language and the compiler. It gives approximate answers for the complexity of the program.

2. A Posteriori Analysis:

“A posteriori” means “after”. Hence a posteriori analysis means checking the algorithm after its implementation. Here the algorithm is checked by implementing it in a programming language and executing it. This analysis gives an actual report on correctness (whether it returns the correct output for every possible input), space required, time consumed, etc. That is, it depends on the programming language, the compiler and the type of hardware used.
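Measuring an implementation after the fact can be sketched as below. This is only an illustration under assumptions: the function name `timeSumMicroseconds` and the workload (summing `n` integers) are made up for the example, and the number it returns will differ between machines, compilers and runs, which is exactly why this kind of analysis depends on hardware and language.

```cpp
#include <chrono>
#include <cstddef>
#include <numeric>
#include <vector>

// Hypothetical measurement helper: returns the wall-clock time, in
// microseconds, taken to sum n integers on the current machine.
double timeSumMicroseconds(std::size_t n) {
    std::vector<int> data(n, 1);

    auto start = std::chrono::steady_clock::now();
    long long total = std::accumulate(data.begin(), data.end(), 0LL);
    auto stop = std::chrono::steady_clock::now();

    volatile long long sink = total;  // keep the compiler from discarding the work
    (void)sink;

    return std::chrono::duration<double, std::micro>(stop - start).count();
}
```

Calling it with increasing values of `n` and comparing the results gives an empirical picture of how the running time grows.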

What is Algorithm complexity and how to find it?

The complexity of an algorithm is judged by the amount of space and time it consumes. Hence the complexity of an algorithm refers to the measure of the time it needs to execute and produce the expected output, and the space it needs to store all the data (input, temporary data, and output). These two factors define the efficiency of an algorithm. The two factors of algorithm complexity are:

- Time Factor: Time is measured by counting the number of key operations, such as comparisons in a sorting algorithm.

- Space Factor: Space is measured by counting the maximum memory space required by the algorithm to run.

Therefore the complexity of an algorithm can be divided into two types:

1. Space Complexity: The space complexity of an algorithm refers to the amount of memory required by the algorithm to store the variables and get the result. This can be for inputs, temporary operations, or outputs.

How to calculate Space Complexity? The space complexity of an algorithm is calculated by determining the following 2 components:

- Fixed Part: This refers to the space that is always required by the algorithm, regardless of the input. For example, input variables, output variables, program size, etc.

- Variable Part: This refers to the space that can differ based on the implementation of the algorithm. For example, temporary variables, dynamic memory allocation, recursion stack space, etc.

Therefore, the space complexity S(P) of any algorithm P is S(P) = C + S_P(I), where C is the fixed part and S_P(I) is the variable part of the algorithm, which depends on the instance characteristic I.

Example: Consider the below algorithm for Linear Search

Step 1: START

Step 2: Get n elements of the array in arr and the number to be searched in x

Step 3: Start from the leftmost element of arr[] and compare x with each element of arr[], one by one

Step 4: If x matches an element, print True.

Step 5: If x doesn’t match any of the elements, print False.

Step 6: END

Here, there are 2 variables, arr[] and x, where arr[] is the variable part with n elements and x is the fixed part. Hence S(P) = 1 + n, so the space complexity depends on n (the number of elements). The actual space also depends on the data types of the variables and constants, and is multiplied accordingly.
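The linear search steps above can be sketched in C++ as follows. The function name `linearSearch` and the use of `std::vector<int>` for arr are assumptions made for the illustration; the function returns the True/False result instead of printing it.

```cpp
#include <vector>

// Returns true if x occurs in arr, false otherwise.
bool linearSearch(const std::vector<int>& arr, int x) {
    // Step 3: scan from the leftmost element, one element at a time.
    for (int value : arr) {
        if (value == x) {
            return true;   // Step 4: a match was found
        }
    }
    return false;          // Step 5: no element matched
}
```

Apart from the n elements of `arr` and the single value `x`, the function uses only a loop variable, which matches the S(P) = 1 + n estimate.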

2. Time Complexity : The time complexity of an algorithm refers to the amount of time required by the algorithm to execute and get the result. This can be for normal operations, conditional if-else statements, loop statements, etc.

How to calculate Time Complexity? The time complexity of an algorithm is also calculated by determining the following 2 components:

- Constant time part: Any instruction that is executed just once comes in this part. For example, input, output, if-else, switch, arithmetic operations, etc.

- Variable time part: Any instruction that is executed more than once, say n times, comes in this part. For example, loops.

Example: In the algorithm of Linear Search above, the time complexity is calculated as follows:

Step 1: Constant Time

Step 2: Variable Time (taking n inputs)

Step 3: Variable Time (up to the length of the array (n) or the index of the found element)

Step 4: Constant Time

Step 5: Constant Time

Step 6: Constant Time

Hence, T(P) = 1 + n + n(1 + 1) + 1 = 2 + 3n, which grows linearly with n, i.e. O(n).
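The linear growth can be checked by counting the key operation directly. The sketch below is an instrumented variant of linear search with a hypothetical name, `countComparisons`; it reports how many element comparisons were made rather than whether the value was found.

```cpp
#include <cstddef>
#include <vector>

// Returns the number of comparisons linear search makes while looking for x.
std::size_t countComparisons(const std::vector<int>& arr, int x) {
    std::size_t comparisons = 0;
    for (int value : arr) {
        ++comparisons;          // one comparison per element visited
        if (value == x) {
            break;              // stop at the first match
        }
    }
    return comparisons;
}
```

When x is absent, the count equals the number of elements n, which is the worst case; when x is present, the count is the position of its first occurrence.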

How to express an Algorithm?

- Natural Language: Here we express the algorithm in plain English. Such descriptions are often ambiguous, which makes the algorithm hard to understand.

- Flow Chart: Here we express the algorithm by making a graphical/pictorial representation of it. It is easier to understand than natural language.

- Pseudo Code: Here we express the algorithm in the form of annotations and informative text written in plain English. It is very similar to real code, but because it does not follow the syntax of any programming language, it can’t be compiled or interpreted by the computer. It is often the best way to express an algorithm because it can be understood even by a layman with some school-level knowledge.


1: Algorithmic Problem Solving


- Harrison Njoroge

- African Virtual University

Unit Objectives

Upon completion of this unit the learner should be able to:

- describe an algorithm

- explain the relationship between data and algorithm

- outline the characteristics of algorithms

- apply pseudo codes and flowcharts to represent algorithms

Unit Introduction

This unit introduces learners to the data structures and algorithms course. It covers the different data structures and the algorithms that can help implement them on a computer. The application of the different data structures is presented using examples of algorithms that are not confined to a particular programming language.

- Data structure: the structural representation of logical relationships between elements of data

- Algorithm: a finite sequence of steps for accomplishing some computational task

- Pseudo code: an informal high-level description of the operating principle of a computer program or other algorithm

- Flow chart: a diagrammatic representation that illustrates a solution model for a given problem

Learning Activities

- 1.1: Activity 1 - Introduction to Algorithms and Problem Solving. In this learning activity, the learner is introduced to algorithms and to writing algorithms that solve tasks or everyday problems. Examples of algorithms are provided, applied to everyday problems that the learner is familiar with. In particular, the learner will learn what an algorithm is and the process of developing a solution for a given task, and will see examples of how algorithms are applied.

- 1.2: Activity 2 - The characteristics of an algorithm. This section introduces the learner to the characteristics of algorithms. These characteristics show the learner what must be present for any procedure to qualify as an algorithm, and what an algorithm is expected to achieve. Key expectations are that an algorithm must be exact, must terminate, and must be effective and general, among others.

- 1.3: Activity 3 - Using pseudo-codes and flowcharts to represent algorithms. The student will learn how to design an algorithm using either pseudo code or a flowchart. Pseudo code is a mixture of English-like statements, some mathematical notation and selected keywords from a programming language. It is one of the tools used to design and develop the solution to a task or problem. Pseudo code allows different ways of representing the same thing, and the emphasis is on clarity, not style.

- 1.4: Unit Summary. In this unit, you have seen what an algorithm is. Based on this knowledge, you should now be able to characterize an algorithm by stating its properties. We have explored the different ways of representing an algorithm, such as human language, pseudo code and flowcharts. You should now be able to present solutions to problems in the form of an algorithm.


- Pete Tomiello

- Debarghya Adhikari

- ANKIT JAVERI

An algorithm is a procedure that takes in input, follows a certain set of steps, and then produces an output. Oftentimes, the algorithm defines a desired relationship between the input and output. For example, if the problem that we are trying to solve is sorting a hand of cards, the problem might be defined as follows:

Problem: Sort the input.

Input: A set of 5 cards.

Output: The set of 5 input cards, sorted.

Procedure: Up to the designer of the algorithm!

This last part is very important: it's the meat and substance of the algorithm. And, as an algorithm designer, you can do whatever you want to produce the desired output! Think about some ways you could sort 5 cards in your hand, and then compare them with the ideas below.

Algorithm Ideas

- We could simply toss them up in the air and pick them up again. Maybe they'll be sorted. If not, we can try it again and again until it works (spoiler: this is a bad algorithm).

- We can sort them one at a time, left to right. Let's say our hand looks like {2, 4, 1, 9, 8}. Well, 2 and 4 are already sorted. But then we have a 1. That should go before 4, and it should go before 2. Now we have {1, 2, 4, 9, 8}. 9 is in the right spot because it's higher than the card to its left, 4. But 8 is wrong because it's smaller than 9, so we'll just put it before 9. Now we have {1, 2, 4, 8, 9}, and we're done. This is called insertion sort.

- We can sort them two at a time, left to right. So, our hand is again {2, 4, 1, 9, 8}. 2 and 4 are good. 4 and 1 need to be swapped, so now we have {2, 1, 4, 9, 8}. 4 and 9 are good. 9 and 8 should be swapped, so now we have {2, 1, 4, 8, 9}. We have to start at the beginning again, but that's no problem. We look at the first pair and see that 2 and 1 need to switch, so now we have {1, 2, 4, 8, 9}, and we're done. This is called bubble sort.

There are a million ways to sort this hand of cards! Some are great, most are terrible. It's up to you as the algorithm designer to make a great one.
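The insertion sort and bubble sort ideas described above can be sketched in C++ as follows. The function names and the use of `std::vector<int>` to stand for the hand of cards are assumptions made for the illustration.

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Insertion sort: take cards one at a time, left to right, and slide each
// one leftwards until the card to its left is not larger.
void insertionSort(std::vector<int>& cards) {
    for (std::size_t i = 1; i < cards.size(); ++i) {
        int card = cards[i];
        std::size_t j = i;
        while (j > 0 && cards[j - 1] > card) {
            cards[j] = cards[j - 1];  // shift the larger card one place right
            --j;
        }
        cards[j] = card;
    }
}

// Bubble sort: compare neighbouring pairs left to right, swap any pair that
// is out of order, and repeat the pass until no swap is needed.
void bubbleSort(std::vector<int>& cards) {
    bool swapped = true;
    while (swapped) {
        swapped = false;
        for (std::size_t i = 0; i + 1 < cards.size(); ++i) {
            if (cards[i] > cards[i + 1]) {
                std::swap(cards[i], cards[i + 1]);
                swapped = true;
            }
        }
    }
}
```

Applied to the hand {2, 4, 1, 9, 8}, both functions leave the vector as {1, 2, 4, 8, 9}; they differ only in the order in which cards are moved.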