How critical thinking can help you learn to code

Experienced programmers frequently say that being able to problem-solve effectively is one of the most important skills they use in their work. In programming as in life, problems don’t usually have magical solutions. Solving a coding problem often means looking at the problem from multiple perspectives, breaking it down into its constituent parts, and then considering (and maybe trying) several approaches to addressing it.

In short, being a good problem-solver requires critical thinking.

Today, we’ll discuss what critical thinking is, why it’s important, and how it can make you a better programmer.

We’ll cover:

- What is critical thinking?

- Why is critical thinking important in programming?

- How you can start thinking more critically

- Apply critical thinking today

How to think like a programmer — lessons in problem solving

by Richard Reis

If you’re interested in programming, you may well have seen this quote before:

“Everyone in this country should learn to program a computer, because it teaches you to think.” — Steve Jobs

You probably also wondered what it means, exactly, to think like a programmer. And how do you do it?

Essentially, it’s all about a more effective way to solve problems.

In this post, my goal is to teach you that way.

By the end of it, you’ll know exactly what steps to take to be a better problem-solver.

Why is this important?

Problem solving is the meta-skill.

We all have problems. Big and small. How we deal with them is sometimes, well…pretty random.

Unless you have a system, this is probably how you “solve” problems (which is what I did when I started coding):

1. Try a solution.

2. If that doesn’t work, try another one.

3. If that doesn’t work, repeat step 2 until you luck out.

Look, sometimes you luck out. But that is the worst way to solve problems! And it’s a huge, huge waste of time.

The best way involves a) having a framework and b) practicing it.

“Almost all employers prioritize problem-solving skills first.

Problem-solving skills are almost unanimously the most important qualification that employers look for….more than programming languages proficiency, debugging, and system design.

Demonstrating computational thinking or the ability to break down large, complex problems is just as valuable (if not more so) than the baseline technical skills required for a job.” — HackerRank (2018 Developer Skills Report)

Have a framework

To find the right framework, I followed the advice in Tim Ferriss’ book on learning, “The 4-Hour Chef”.

It led me to interview two really impressive people: C. Jordan Ball (ranked 1st or 2nd out of 65,000+ users on Coderbyte), and V. Anton Spraul (author of the book “Think Like a Programmer: An Introduction to Creative Problem Solving”).

I asked them the same questions, and guess what? Their answers were pretty similar!

Soon, you too will know them.

Sidenote: this doesn’t mean they did everything the same way. Everyone is different. You’ll be different. But if you start with principles we all agree are good, you’ll get a lot further a lot quicker.

“The biggest mistake I see new programmers make is focusing on learning syntax instead of learning how to solve problems.” — V. Anton Spraul

So, what should you do when you encounter a new problem?

Here are the steps:

1. Understand

Know exactly what is being asked. Most hard problems are hard because you don’t understand them (which is why this is the first step).

How do you know when you understand a problem? When you can explain it in plain English.

Do you remember being stuck on a problem, starting to explain it, and instantly seeing holes in the logic you hadn’t seen before?

Most programmers know this feeling.

This is why you should write down your problem, doodle a diagram, or tell someone else about it (or something… some people use a rubber duck).

“If you can’t explain something in simple terms, you don’t understand it.” — Richard Feynman

2. Plan

Don’t dive right into solving without a plan (and somehow hope you can muddle your way through). Plan your solution!

Nothing can help you if you can’t write down the exact steps.

In programming, this means don’t start hacking straight away. Give your brain time to analyze the problem and process the information.

To get a good plan, answer this question:

“Given input X, what are the steps necessary to return output Y?”

Sidenote: Programmers have a great tool to help them with this… Comments!
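Here’s a minimal sketch of what planning with comments can look like in Python. The task (counting vowels) and the name `count_vowels` are made up for illustration; the point is that the steps from input X to output Y are written down as comments before any real code.

```python
def count_vowels(text):
    # Plan, written as comments before writing any code:
    # 1. Start a counter at zero.
    # 2. Look at each character of the input, ignoring case.
    # 3. If the character is a vowel, add one to the counter.
    # 4. Return the counter.
    count = 0
    for char in text.lower():
        if char in "aeiou":
            count += 1
    return count


print(count_vowels("Think like a programmer"))  # prints 7
```

Once the comments read like a complete answer to the question above, filling in the code under each one is usually the easy part.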

3. Divide

Pay attention. This is the most important step of all.

Do not try to solve one big problem. You will cry.

Instead, break it into sub-problems. These sub-problems are much easier to solve.

Then, solve each sub-problem one by one. Begin with the simplest. Simplest means you know the answer (or are closer to that answer).

After that, simplest means that solving this sub-problem doesn’t depend on solving the others.

Once you’ve solved every sub-problem, connect the dots.

Connecting all your “sub-solutions” will give you the solution to the original problem. Congratulations!

This technique is a cornerstone of problem-solving. Remember it (read this step again, if you must).

“If I could teach every beginning programmer one problem-solving skill, it would be the ‘reduce the problem technique.’

For example, suppose you’re a new programmer and you’re asked to write a program that reads ten numbers and figures out which number is the third highest. For a brand-new programmer, that can be a tough assignment, even though it only requires basic programming syntax.

If you’re stuck, you should reduce the problem to something simpler. Instead of the third-highest number, what about finding the highest overall? Still too tough? What about finding the largest of just three numbers? Or the larger of two?

Reduce the problem to the point where you know how to solve it and write the solution. Then expand the problem slightly and rewrite the solution to match, and keep going until you are back where you started.” — V. Anton Spraul
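To make the quote concrete, here’s one possible Python sketch of that reduction. The function names and the sample numbers are my own assumptions, not Spraul’s code. Each function solves a slightly bigger problem by reusing the one before it.

```python
def larger_of_two(a, b):
    # Smallest version of the problem: the larger of two numbers.
    return a if a >= b else b


def largest_of_three(a, b, c):
    # Expand slightly: reuse the two-number solution.
    return larger_of_two(larger_of_two(a, b), c)


def highest(numbers):
    # Expand again: the highest number in a list of any length.
    best = numbers[0]
    for n in numbers[1:]:
        best = larger_of_two(best, n)
    return best


def third_highest(numbers):
    # Back to the original problem: remove the highest number twice,
    # then the highest of what remains is the third highest.
    # (Duplicates count as separate entries in this sketch.)
    remaining = list(numbers)
    for _ in range(2):
        remaining.remove(highest(remaining))
    return highest(remaining)


sample = [12, 7, 3, 25, 8, 19, 4, 31, 16, 10]  # ten made-up numbers
print(third_highest(sample))  # prints 19
```

There are shorter ways to write this (sorting, for example), but the goal here is to show the path from a problem you can solve to the one you were asked to solve.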

4. Stuck?

By now, you’re probably sitting there thinking “Hey Richard... That’s cool and all, but what if I’m stuck and can’t even solve a sub-problem??”

First off, take a deep breath. Second, that’s fair.

Don’t worry though, friend. This happens to everyone!

The difference is the best programmers/problem-solvers are more curious about bugs/errors than irritated.

In fact, here are three things to try when facing a whammy:

- Debug: Go step by step through your solution trying to find where you went wrong. Programmers call this debugging (in fact, this is all a debugger does).

“The art of debugging is figuring out what you really told your program to do rather than what you thought you told it to do.” — Andrew Singer

- Reassess: Take a step back. Look at the problem from another perspective. Is there anything that can be abstracted to a more general approach?

“Sometimes we get so lost in the details of a problem that we overlook general principles that would solve the problem at a more general level. […]

The classic example of this, of course, is the summation of a long list of consecutive integers, 1 + 2 + 3 + … + n, which a very young Gauss quickly recognized was simply n(n+1)/2, thus avoiding the effort of having to do the addition.” — C. Jordan Ball

Sidenote: Another way of reassessing is starting anew. Delete everything and begin again with fresh eyes. I’m serious. You’ll be dumbfounded at how effective this is.

- Research: Ahh, good ol’ Google. You read that right. No matter what problem you have, someone has probably solved it. Find that person/solution. In fact, do this even if you solved the problem! (You can learn a lot from other people’s solutions).

Caveat: Don’t look for a solution to the big problem. Only look for solutions to sub-problems. Why? Because unless you struggle (even a little bit), you won’t learn anything. If you don’t learn anything, you wasted your time.
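The Gauss example from the “Reassess” step is easy to try for yourself. Here’s a small Python sketch (the function names are mine) that compares the detail-level approach, adding every number, with the general formula n(n+1)/2.

```python
def sum_by_loop(n):
    # Detail-level approach: add 1 + 2 + ... + n one term at a time.
    total = 0
    for k in range(1, n + 1):
        total += k
    return total


def sum_by_formula(n):
    # General principle: the closed form n(n+1)/2.
    # Integer division is exact because n(n+1) is always even.
    return n * (n + 1) // 2


print(sum_by_loop(100), sum_by_formula(100))  # prints 5050 5050
```

Both give the same answer, but the second one does a fixed amount of work no matter how large n gets. That’s the kind of payoff you can get from stepping back.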

Don’t expect to be great after just one week. If you want to be a good problem-solver, solve a lot of problems!

Practice. Practice. Practice. It’ll only be a matter of time before you recognize that “this problem could easily be solved with <insert concept here>.”

How to practice? There are options out the wazoo!

Chess puzzles, math problems, Sudoku, Go, Monopoly, video-games, cryptokitties, bla… bla… bla….

In fact, a common pattern amongst successful people is their habit of practicing “micro problem-solving.” For example, Peter Thiel plays chess, and Elon Musk plays video-games.

“Byron Reeves said ‘If you want to see what business leadership may look like in three to five years, look at what’s happening in online games.’

Fast-forward to today. Elon [Musk], Reid [Hoffman], Mark Zuckerberg and many others say that games have been foundational to their success in building their companies.” — Mary Meeker ( 2017 internet trends report )

Does this mean you should just play video-games? Not at all.

But what are video-games all about? That’s right, problem-solving!

So, what you should do is find an outlet to practice. Something that allows you to solve many micro-problems (ideally, something you enjoy).

For example, I enjoy coding challenges. Every day, I try to solve at least one challenge (usually on Coderbyte).

Like I said, all problems share similar patterns.

That’s all folks!

Now, you know better what it means to “think like a programmer.”

You also know that problem-solving is an incredible skill to cultivate (the meta-skill).

As if that wasn’t enough, notice how you also know what to do to practice your problem-solving skills!

Phew… Pretty cool right?

Finally, I hope you encounter many problems.

You read that right. At least now you know how to solve them! (also, you’ll learn that with every solution, you improve).

“Just when you think you’ve successfully navigated one obstacle, another emerges. But that’s what keeps life interesting.[…]

Life is a process of breaking through these impediments — a series of fortified lines that we must break through.

Each time, you’ll learn something.

Each time, you’ll develop strength, wisdom, and perspective.

Each time, a little more of the competition falls away. Until all that is left is you: the best version of you.” — Ryan Holiday ( The Obstacle is the Way )

Now, go solve some problems!

And best of luck!

Special thanks to C. Jordan Ball and V. Anton Spraul. All the good advice here came from them.

OPINION article

Some evidence on the cognitive benefits of learning to code.

- 1 Centre for Educational Measurement, Faculty of Educational Sciences, University of Oslo, Oslo, Norway

- 2 Department of Education and Quality in Learning, Unit for Digitalisation and Education, Kongsberg, Norway

- 3 Department of Biology, Humboldt University of Berlin, Berlin, Germany

Introduction

Computer coding—an activity that involves the creation, modification, and implementation of computer code and exposes students to computational thinking—is an integral part of today's education in science, technology, engineering, and mathematics (STEM) ( Grover and Pea, 2013 ). As technology is advancing, coding is becoming a necessary process and much-needed skill to solve complex scientific problems efficiently and reproducibly, ultimately elevating the careers of those who master the skill. With many countries around the world launching coding initiatives and integrating computational thinking into the curricula of higher education, secondary education, primary education, and kindergarten, the question arises, what lies behind this enthusiasm for learning to code? Part of the reasoning is that learning to code may ultimately aid students' learning and acquiring of skills in domains other than coding. Researchers, policy-makers, and leaders in the field of computer science and education have made ample use of this argument to attract students into computer science, bring to attention the need for skilled programmers, and make coding compulsory for students. Bill Gates once stated that “[l]earning to write programs stretches your mind, and helps you think better, creates a way of thinking about things that I think is helpful in all domains” (2013). Similar to the claims surrounding chess instruction, learning Latin, video gaming, and brain training ( Sala and Gobet, 2017 ), this so-called “transfer effect” assumes that students learn a set of skills during coding instruction that are also relevant for solving problems in mathematics, science, and other contexts. Despite this assumption and the claims surrounding transfer effects, the evidence backing them seems to stand on shaky legs—a recently published paper even claimed that such evidence does not exist at all ( Denning, 2017 ), yet without reviewing the extant body of empirical studies on the matter. 
Moreover, simply teaching coding does not ensure that students are able to transfer the knowledge and skills they have gained to other situations and contexts—in fact, instruction needs to be designed for fostering this transfer ( Grover and Pea, 2018 ).

In this opinion paper, we (a) argue that learning to code involves thinking processes similar to those in other domains, such as mathematical modeling and creative problem solving, (b) highlight the empirical evidence on the cognitive benefits of learning computer coding that has bearing on this long-standing debate, and (c) describe several criteria for documenting these benefits (i.e., transfer effects). Despite the positive evidence suggesting that these benefits may exist, we argue that the transfer debate has yet to be settled.

Computer Coding as Problem Solving

Computer coding comprises activities to create, modify, and evaluate computer code along with the knowledge about coding concepts and procedures (Scherer et al., 2019). Ultimately, computer science educators consider it a vehicle for teaching computational thinking through, for instance, (a) abstraction and pattern generalization, (b) systematic information processing, (c) symbol systems and representations, (d) algorithmic thinking, (e) problem decomposition, and (f) debugging and systematic error detection (Grover and Pea, 2013). These skills share considerable similarities with general problem solving and problem solving in specific domains (Shute et al., 2017). Drawing from the “theory of common elements,” one may therefore expect possible transfer effects between coding and problem solving skills (Thorndike and Woodworth, 1901). For instance, creative problem solving requires students to encode, recognize, and formulate the problem (preparation phase), represent the problem (incubation phase), search for and find solutions (illumination phase), and evaluate the creative product and monitor the process of creative activities (verification phase), all of which are activities that also play a critical role in coding (Clements, 1995; Grover and Pea, 2013). Similarly, solving problems through mathematical modeling requires students to decompose a problem into its parts (e.g., variables), understand their relations (e.g., functions), use mathematical symbols to represent these relations (e.g., equations), and apply algorithms to obtain a solution, activities that mimic the coding process. These two examples illustrate that the processes involved in coding are close to those involved in performing skills outside the coding domain (Popat and Starkey, 2019).
This observation has motivated researchers and educators to hypothesize transfer effects of learning to code, and, in fact, some studies found positive correlations between coding skills and other skills, such as information processing, reasoning, and mathematical skills ( Shute et al., 2017 ). Nevertheless, despite the conceptual backing of such transfer effects, which evidence exists to back them empirically?

Cognitive Benefits of Learning Computer Coding

Despite the conceptual argument that computer coding engages students in general problem-solving activities and may ultimately be beneficial for acquiring cognitive skills beyond coding, the empirical evidence backing these transfer effects is diverse (Denning, 2017). While some experimental and quasi-experimental studies documented moderate to large effects of coding interventions on skills such as reasoning, creative thinking, and mathematical modeling, other studies did not find support for any transfer effect. Several research syntheses were therefore aimed at clarifying and explaining this diversity.

In 1991, Liao and Bright reviewed 65 empirical studies on the effects of learning-to-code interventions on measures of cognitive skills ( Liao and Bright, 1991 ). Drawing from the published literature between 1960 and 1989, the authors included experimental, quasi-experimental, and pre-experimental studies in classrooms with a control group (non-programming) and a treatment group (programming). The primary studies had to provide quantitative information about the effectiveness of the interventions on a broad range of cognitive skills, such as planning, reasoning, and metacognition. Studies that presented only correlations between programming and other cognitive skills were excluded. The interventions focused on learning the programming languages Logo, BASIC, Pascal, and mixtures thereof. This meta-analysis resulted in a positive effect size quantified as the well-known Cohen's d coefficient, indicating that control group and experimental group average gains in cognitive skills differed by 0.41 standard deviations. Supporting the existence of transfer effects, this evidence indicated that learning coding aided the acquisition of other skills to a considerable extent. Although this meta-analysis was ground-breaking at the time, transferring it into today's perspective on coding and transfer is problematic for several reasons: First, during the last three decades, the tools used to engage students in computer coding have changed substantially, and visual programming languages such as Scratch simplify the creation and understanding of computer code. Second, Liao and Bright included any cognitive outcome variable without considering possible differences in the transfer effects between skills (e.g., reasoning may be enhanced more than reading skills). 
Acknowledging this limitation, Liao (2000) performed a second, updated meta-analysis summarizing the results of 22 studies and found strong effects on coding skills (d̄ = 2.48), yet insignificant effects on creative thinking (d̄ = −0.13). Moderate effects occurred for critical thinking, reasoning, and spatial skills (d̄ = 0.37–0.58).
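For readers less familiar with the effect size reported above, the following Python sketch shows one common way to compute Cohen's d for two independent groups, using the pooled standard deviation. The function name and the gain scores are hypothetical and invented purely for illustration; they are not data from Liao and Bright (1991) or Liao (2000), and the text does not state which exact variant of d those syntheses used.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled standard deviation."""
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)  # sample SDs (n - 1 denominator)
    pooled_sd = sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(treatment) - mean(control)) / pooled_sd


# Hypothetical gain scores, invented for illustration only.
coding_group = [5, 7, 6, 9, 8]
control_group = [4, 6, 5, 7, 3]

print(round(cohens_d(coding_group, control_group), 2))
```

A value of 0.41, as reported by Liao and Bright, would mean that the two group means lie 0.41 pooled standard deviations apart.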

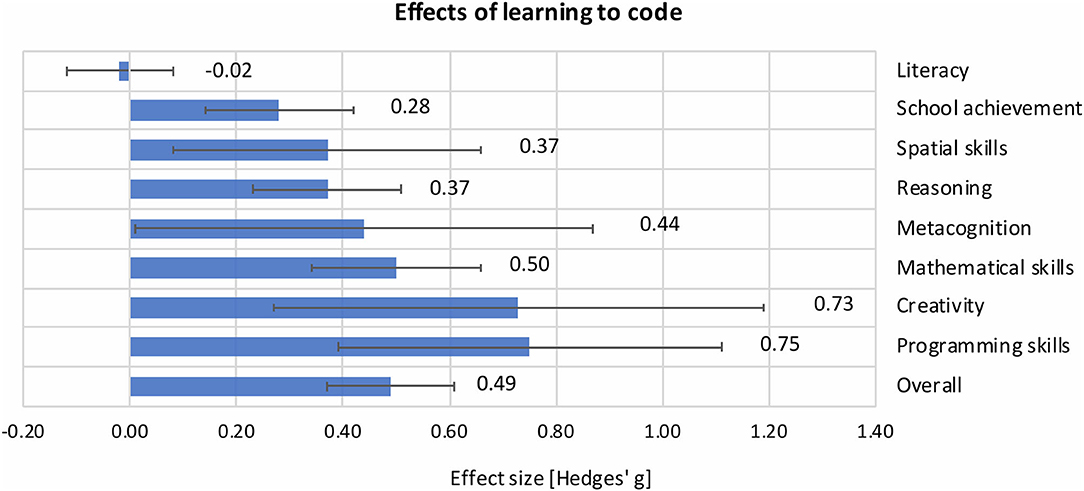

Drawing from a pool of 105 intervention studies and 539 reported effects, Scherer et al. (2019) put the question of transfer effects to a new test. Their meta-analysis included experimental and quasi-experimental intervention studies with pretest-posttest and posttest-only designs. Each educational intervention had to include at least one control group and at least one treatment group with a design that allowed for studying the effects of coding (e.g., treatment group: intervention program of coding with Scratch®, control group: no coding intervention at all; please see the meta-analysis for more examples of study designs). Finally, the outcome measures were performance-based measures, such as the Torrance Test of Creative Thinking or intelligence tests. This meta-analysis showed that learning to code had a positive and strong effect on coding skills (ḡ = 0.75) and a positive and medium effect on cognitive skills other than coding (ḡ = 0.47). The authors distinguished further between the different types of cognitive skills and found a range of effect sizes, ḡ = −0.02 to 0.73 (Figure 1). Ultimately, they documented the largest effects for creative thinking, mathematical skills, metacognition, reasoning, and spatial skills (ḡ = 0.37–0.73). At the same time, these effects were context-specific and depended on the study design features, such as randomization and the treatment of control groups.

Figure 1. Effect sizes of learning-to-code interventions on several cognitive skills and their 95% confidence intervals (Scherer et al., 2019). The effect sizes represent mean differences in the cognitive skill gains between the control and experimental groups in units of standard deviations (Hedges' g).
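Hedges' g is closely related to Cohen's d: it applies a correction that shrinks d slightly when samples are small. The Python sketch below uses the widely used approximation of that correction factor, 1 − 3/(4·df − 1) with df = n₁ + n₂ − 2. The function name and the input values are hypothetical, and the exact computation used in the meta-analysis is not described in this text.

```python
def hedges_g(d, n_treatment, n_control):
    """Apply the common small-sample correction to a Cohen's d value."""
    df = n_treatment + n_control - 2
    correction = 1 - 3 / (4 * df - 1)  # approximate correction factor
    return d * correction


# Hypothetical inputs: the same d with a small and a large sample.
print(round(hedges_g(0.5, 10, 10), 3))    # prints 0.479
print(round(hedges_g(0.5, 500, 500), 3))  # prints 0.5
```

With large samples the correction is negligible, so g and d can be read in the same way.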

These research syntheses provide some evidence for the transfer effects of learning to code on other cognitive skills: learning to code may indeed have cognitive benefits. At the same time, as the evidence base included some study designs that deviated from randomized controlled trials, strictly causal conclusions (e.g., “Students' gains in creativity were caused by the coding intervention.”) cannot be drawn. Instead, one may conclude that learning to code was associated with improvements in other skills measures. Moreover, the evidence does not indicate that transfer just “happens”; rather, it must be facilitated and trained explicitly (Grover and Pea, 2018). This represents a “cost” of transfer in the context of coding: among others, teaching for transfer requires sufficient teaching time; student-centered, cognitively activating, supportive, and motivating learning environments; and teacher training. In fact, possible transfer effects can be moderated by these instructional conditions (e.g., Gegenfurtner, 2011; Yadav et al., 2017; Waite et al., 2020; Beege et al., 2021). The extant body of research on fostering computational thinking through teaching programming suggests that problem-based learning approaches that involve information processing, scaffolding, and reflection activities are effective ways to promote the near transfer of coding (Lye and Koh, 2014; Hsu et al., 2018). Besides the cost of effective instructional designs, another cost refers to the cognitive demands of the transfer: existing models of transfer suggest that the more similar the tasks during the instruction in one domain (e.g., coding) are to those in another domain (e.g., mathematical problem solving), the more likely students can transfer their knowledge and skills between domains (Taatgen, 2013).
Mastering this transfer involves additional cognitive skills, such as executive functioning (e.g., switching between tasks) and metacognition (e.g., recognizing similar tasks and solution patterns; Salomon and Perkins, 1987; Popat and Starkey, 2019). It is therefore key to further investigate the conditions and mechanisms underlying the possible transfer of the skills students acquire and the knowledge they gain during coding instruction. To this end, carefully designed learning interventions and experimental studies are needed that include the teaching, mediating, and assessment of transfer.

Challenges With Measuring Cognitive Benefits

Despite the promising evidence on the cognitive benefits of learning to code, the existing body of research still needs to address several challenges to detect and document transfer effects. These challenges include but are not limited to the following (Scherer et al., 2019):

• Measuring coding skills. To identify the effects of learning-to-code interventions on coding skills, reliable and valid measures of these skills (e.g., performance scores) must be included. These measures allow researchers to establish baseline effects, that is, the effects on the skills trained during the intervention ( Melby-Lervåg et al., 2016 ). However, the domain of computer coding largely lacks measures showing sufficient quality ( Tang et al., 2020 ).

• Measuring other cognitive skills. Next to the measures of coding skills, measures of other cognitive skills must be administered to trace whether coding interventions are beneficial for learning skills outside the coding domain and ultimately document transfer effects. This design allows researchers to examine both near and far transfer effects and to test whether gains in cognitive skills may be caused by gains in coding skills ( Melby-Lervåg et al., 2016 ).

• Implementing experimental research designs. To detect and interpret intervention effects over time, pre- and post-test measures of coding and other cognitive skills are taken, the assignment to the experimental group(s) is random, and students in the control group(s) do not receive the coding intervention. Existing meta-analyses examining the near and far transfer effects of coding have shown that these design features play a pivotal, moderating role, and the effects tend to be lower for randomized experimental studies with active control groups (e.g., Liao, 2000; Scherer et al., 2019, 2020). Scholars in the field of transfer in education have emphasized the need for taking into account more aspects related to transfer than only changes in scores between the pre- and post-tests. These aspects include, for instance, continuous observations and tests of possible transfer over larger periods of time and qualitative measures of knowledge application that could make visible students' ability to learn new things and to solve (new) problems in different types of situations (Bransford and Schwartz, 1999; Lobato, 2006).
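The design features listed above (random assignment, a control group without the intervention, and pre- and post-test measures) can be illustrated with a short Python sketch. Everything in it is hypothetical: the function names, the student identifiers, and the fixed random seed are assumptions made for illustration, not a procedure taken from the cited studies.

```python
import random


def assign_groups(student_ids, seed=0):
    """Randomly split students into a treatment and a control group."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(student_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def mean_gain(pretest, posttest, group):
    """Average post-test minus pre-test score for the students in a group."""
    gains = [posttest[s] - pretest[s] for s in group]
    return sum(gains) / len(gains)


# Hypothetical student identifiers, invented for illustration.
students = [f"s{i}" for i in range(1, 9)]
treatment, control = assign_groups(students)
print(treatment, control)
```

Comparing `mean_gain` for the two groups, on a coding measure and on another cognitive measure, corresponds to the baseline and transfer effects described in the first two challenges.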

Ideally, research studies address all of these challenges; however, in reality, researchers must examine the consequences of the departures from a well-structured experimental design and evaluate the validity of the resultant transfer effects.

Overall, the evidence supporting the cognitive benefits of learning to code is promising. In the first part of this opinion paper, we argued that coding skills and other skills, such as creative thinking and mathematical problem solving, share skillsets and that these common elements form the ground for expecting some degree of transfer from learning to code into other cognitive domains (e.g., Shute et al., 2017 ; Popat and Starkey, 2019 ). In fact, the existing meta-analyses supported the possible existence of this transfer for the two domains. This reasoning assumes that students engage in activities during coding through which they acquire a set of skills that could be transferred to other contexts and domains (e.g., Lye and Koh, 2014 ; Scherer et al., 2019 ). The specific mechanisms and beneficial factors of this transfer, however, still need to be clarified.

The evidence we have presented in this paper suggests that students' performance on tasks in several domains other than coding is not enhanced to the same extent; that is, acquiring some cognitive skills other than coding is more likely than acquiring others. We argue that the overlap of skillsets between coding and skills in other domains may differ across domains and the extent to which transfer seems likely may depend on the degree of this overlap (i.e., the common elements), next to other key aspects, such as task designs, instruction, and practice. Despite the evidence that cognitive skills may be prompted, the direct transfer of what is learned through coding is complex and does not happen automatically. To shed further light on the possible causes of why transferring coding skills to situations in which students are required to, for instance, think creatively may be more likely than transferring coding skills to situations in which students are required to comprehend written text as part of literacy, researchers are encouraged to continue testing these effects with carefully designed intervention studies and valid measures of coding and other cognitive skills. The transfer effects, although large enough to be significant, establish some evidence on the relation between learning to code and gains in other cognitive skills; however, for some skills, they are too modest to settle the ongoing debate about whether transfer effects were only due to the learning of coding or exist at all. More insights into the successful transfer are needed to inform educational practice and policy-making about the opportunities to leverage the potential that lies within the teaching of coding.

Author Contributions

RS conceived the idea of the paper and drafted the manuscript. FS and BS-S drafted additional parts of the manuscript and performed revisions. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Beege, M., Schneider, S., Nebel, S., Zimm, J., Windisch, S., and Rey, G. D. (2021). Learning programming from erroneous worked-examples. Which type of error is beneficial for learning? Learn. Instruct. 75:101497. doi: 10.1016/j.learninstruc.2021.101497

Bransford, J. D., and Schwartz, D. L. (1999). Rethinking transfer: a simple proposal with multiple implications. Rev. Res. Educ. 24, 61–100. doi: 10.3102/0091732X024001061

Clements, D. H. (1995). Teaching creativity with computers. Educ. Psychol. Rev. 7, 141–161. doi: 10.1007/BF02212491

Denning, P. J. (2017). Remaining trouble spots with computational thinking. Commun. ACM 60, 33–39. doi: 10.1145/2998438

Gegenfurtner, A. (2011). Motivation and transfer in professional training: A meta-analysis of the moderating effects of knowledge type, instruction, and assessment conditions. Educ. Res. Rev. 6, 153–168. doi: 10.1016/j.edurev.2011.04.001

Grover, S., and Pea, R. (2013). Computational thinking in K-12: a review of the state of the field. Educ. Res. 42, 38–43. doi: 10.3102/0013189X12463051

Grover, S., and Pea, R. (2018). “Computational thinking: a competency whose time has come,” in Computer Science Education: Perspectives on Teaching and Learning in School , eds S. Sentance, S. Carsten, and E. Barendsen (London: Bloomsbury Academic), 19–38.

Hsu, T.-C., Chang, S.-C., and Hung, Y.-T. (2018). How to learn and how to teach computational thinking: suggestions based on a review of the literature. Comput. Educ. 126, 296–310. doi: 10.1016/j.compedu.2018.07.004

Liao, Y.-K. C. (2000). A Meta-analysis of Computer Programming on Cognitive Outcomes: An Updated Synthesis . Montréal, QC: EdMedia + Innovate Learning.

Liao, Y.-K. C., and Bright, G. W. (1991). Effects of computer programming on cognitive outcomes: a meta-analysis. J. Educ. Comput. Res. 7, 251–268. doi: 10.2190/E53G-HH8K-AJRR-K69M

Lobato, J. (2006). Alternative perspectives on the transfer of learning: history, issues, and challenges for future research. J. Learn. Sci. 15, 431–449. doi: 10.1207/s15327809jls1504_1

Lye, S. Y., and Koh, J. H. L. (2014). Review on teaching and learning of computational thinking through programming: what is next for K-12? Comput. Human Behav. 41, 51–61. doi: 10.1016/j.chb.2014.09.012

Melby-Lervåg, M., Redick, T. S., and Hulme, C. (2016). Working memory training does not improve performance on measures of intelligence or other measures of “far transfer”: evidence from a meta-analytic review. Perspect. Psychol. Sci. 11, 512–534. doi: 10.1177/1745691616635612

PubMed Abstract | CrossRef Full Text | Google Scholar

Popat, S., and Starkey, L. (2019). Learning to code or coding to learn? a systematic review. Comput. Educ. 128, 365–376. doi: 10.1016/j.compedu.2018.10.005

Sala, G., and Gobet, F. (2017). Does far transfer exist? negative evidence from chess, music, and working memory training. Curr. Dir. Psychol. Sci. 26, 515–520. doi: 10.1177/0963721417712760

Salomon, G., and Perkins, D. N. (1987). Transfer of cognitive skills from programming: when and how? J. Educ. Comput. Res. 3, 149–169. doi: 10.2190/6F4Q-7861-QWA5-8PL1

Scherer, R., Siddiq, F., and Sánchez Viveros, B. (2019). The cognitive benefits of learning computer programming: a meta-analysis of transfer effects. J. Educ. Psychol. 111, 764–792. doi: 10.1037/edu0000314

Scherer, R., Siddiq, F., and Viveros, B. S. (2020). A meta-analysis of teaching and learning computer programming: Effective instructional approaches and conditions. Comput. Human Behav. 109:106349. doi: 10.1016/j.chb.2020.106349

Shute, V. J., Sun, C., and Asbell-Clarke, J. (2017). Demystifying computational thinking. Educ. Res. Rev. 22, 142–158. doi: 10.1016/j.edurev.2017.09.003

Taatgen, N. A. (2013). The nature and transfer of cognitive skills. Psychol. Rev. 120, 439–471. doi: 10.1037/a0033138

Tang, X., Yin, Y., Lin, Q., Hadad, R., and Zhai, X. (2020). Assessing computational thinking: a systematic review of empirical studies. Comput. Educ. 148:103798. doi: 10.1016/j.compedu.2019.103798

Thorndike, E. L., and Woodworth, R. S. (1901). The influence of improvement in one mental function upon the efficiency of other functions. (I). Psychol. Rev. 8, 247–261. doi: 10.1037/h0074898

Tondeur, J., Scherer, R., Baran, E., Siddiq, F., Valtonen, T., and Sointu, E. (2019). Teacher educators as gatekeepers: preparing the next generation of teachers for technology integration in education. Br. J. Educ. Technol. 50, 1189–1209. doi: 10.1111/bjet.12748

Waite, J., Curzon, P., Marsh, W., and Sentance, S. (2020). Difficulties with design: the challenges of teaching design in K-5 programming. Comput. Educ. 150:103838. doi: 10.1016/j.compedu.2020.103838

Yadav, A., Gretter, S., Good, J., and McLean, T. (2017). “Computational thinking in teacher education,” in Emerging Research, Practice, and Policy on Computational Thinking , eds P. J. Rich and C. B. Hodges (New York, NY: Springer International Publishing), 205–220.

Keywords: computational thinking skills, transfer of learning, cognitive skills, meta-analysis, experimental studies

Citation: Scherer R, Siddiq F and Sánchez-Scherer B (2021) Some Evidence on the Cognitive Benefits of Learning to Code. Front. Psychol. 12:559424. doi: 10.3389/fpsyg.2021.559424

Received: 06 May 2020; Accepted: 17 August 2021; Published: 09 September 2021.

Copyright © 2021 Scherer, Siddiq and Sánchez-Scherer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Ronny Scherer, ronny.scherer@cemo.uio.no

How Computer Engineering Helps You Think Creatively

In reality, learning the basics of computer science can help you think more critically and more creatively, which ultimately helps you in other areas of your life.

Apply Creative Problem Solving to Other Areas

Let’s start by explaining why the creative problem-solving skills you’ll learn in computer science can help you in everyday life:

- Novel solutions and new products. Being familiar with creating and polishing hardware and/or software can help you come up with ingenious solutions for your everyday life. You’re used to thinking about problems as solvable challenges, so you naturally come up with ways to address them. This applies to areas beyond computer science as well; for example, one former computer engineer used his creativity to engineer a pillow that reduces pressure on your face while sleeping.

- Lateral thinking and breaking patterns. Writing code and creating applications from scratch also incentivizes you to think laterally and break the patterns you’d otherwise fall into. Traditional lines of thinking just won’t work for some problems, so you’ll be forced to think in new, creative ways. That allows you to experiment with new approaches and keep trying until you find something that works.

- Seeing problems from other perspectives. As a computer engineer, you’ll be forced to see problems from other perspectives, whether you want to or not. That might mean reviewing code that someone else wrote, getting feedback from a client who has no familiarity with engineering, or imagining how an application might look to a user who’s never seen it before. In any case, you’ll quickly learn how to broaden your perspective, which means you’ll see problems in an entirely new light.

How Computer Engineering Improves Your Abilities

So how exactly does computer engineering improve your creative abilities in this way?

- Generating new ideas. You have to be creative if you’re going to generate new ideas. In some roles, you’ll be responsible for coming up with the ideas yourself, either designing your own apps for circulation or making direct recommendations to your clients. In other scenarios, you’ll be responsible for coming up with novel ways to include a feature that might otherwise be impossible. In any case, you’ll be forced to come up with ideas constantly, which gets easier the more you practice it.

- Reviewing code. You’ll also be responsible for reviewing code—including code that you wrote and code that other people wrote. Reviewing your own code forces you to see it from an outsider’s perspective, and reviewing the code of others gives you insight into how they think. That diverse experience lends itself to imagining scenarios from different perspectives.

- Fixing bugs. Finding and fixing bugs is an important part of the job, and it’s one of the most creatively enlightening. To resolve the problem, you first have to understand why it’s happening. If you’ve written the code yourself, it’s easy to think the program will run flawlessly, so you’ll have to challenge yourself to start looking for the root cause of the problem. Sometimes, tinkering with the code will only result in more problems, which forces you to go back to the drawing board with a new angle of approach. It’s an ideal problem-solving exercise, and one you’ll have to undergo many times.

- Aesthetics and approachability. Finally, you’ll need to think about the aesthetics and approachability of what you’re creating. Your code might be perfectly polished on the backend, but if users have a hard time understanding the sequence of actions to follow to get a product to do what they want, you may need to rebuild it.

Is Computer Science Worth Learning?

If you’re not already experienced in a field related to computer science, you might feel intimidated at the idea of getting involved in the subject. After all, people spend years, if not decades studying computer science to become professionals.

The good news is, you don’t need decades of experience to see the creative problem-solving benefits of the craft. Learning the basics of a programming language, or even familiarizing yourself with the type of logic necessary to code, can be beneficial to you in your daily life. Take a few hours and flesh out your skills; you’ll be glad you did.

Improving Critical Thinking through Coding

How learning to code can help you with critical thinking: building and honing critical thinking skills is one of the key takeaways of learning to code.

Aug 07, 2018 By Team YoungWonks *

That learning to code has many benefits is a well-known fact. At a time when we have all come to rely heavily on computers and smartphones on a daily basis, it is not surprising that coding, aka software programming, is in great demand today. What is often underplayed, however, is the role of coding in teaching critical thinking.

How does coding help develop critical thinking? Before we answer that question, it is important to examine the concept of critical thinking.

What is Critical Thinking?

Critical thinking, to put it simply, is the objective analysis of facts to form a judgment. It is self-monitored, self-corrective thinking that mandates rational, skeptical, unbiased evaluation of factual evidence, which, in turn, guides one to take a particular course of action. This is also why it is described as the ability to choose a certain belief or action after careful consideration of the data available. By its very nature, critical thinking encourages a creative, problem-solving approach.

While critical thinking is crucial in professional life, it is also extremely important in day-to-day life. On one hand, one may argue that Charles Darwin used a critical thinking mindset to come up with his theory of evolution, as it involved him questioning and connecting the aspects of his field of study to others. At the same time, critical thinking is also the skill set one employs when doing something as simple as assessing the authenticity of a particular email. Asking oneself questions such as “Who emailed this to me?”; “Why have I received this email?”; “What sources are being cited for the information shared in the email?”; “What is the purpose behind this email?” and “Are they who they claim to be in the email?” comes under the purview of critical thinking, as it helps one arrive at a logical solution to the given problem (in this case, determining if the email is spam or not).

Similarly, making a decision about something as mundane as what bag to buy can also involve critical thinking. Given the popularity of e-commerce, one may have a plethora of options available but this also means one needs to factor in things before making a choice for the purchase. So a person looking up blogs, websites and forums to read reviews about bags is, in fact, employing critical thinking.

Coding and Critical Thinking

How then does coding help with critical thinking? Now critical thinking may come across as fairly common, but often its importance is understated. Coding, however, is widely considered to be one of the best tools to teach critical thinking thanks to its authentic, real-world approach. We list below the reasons why coding helps with critical thinking:

1. A similar approach to problem solving: Coding and critical thinking have these process steps in common: a) Identifying a problem or task b) Analyzing the given problem/task c) Coming up with initial solutions d) Testing e) Repeating the process for improved results. A good example of this process in coding is troubleshooting, as this is where programmers need to identify issues and try different tactics until they find a strong solution.

2. Practice reigns supreme: Coding promotes thinking differently by approaching a problem from different angles and thus coming up with as many solutions as possible. This iteration during the coding creation process lets students practice their critical thinking skills in every class session.

3. Having an open mind: What if there’s no one right answer to a problem? In coding, this is a rather common scenario as there are multiple correct answers in the coding creation process. For instance, each website, animation, or game will be different from the other depending on the design aesthetics of the user, the functionality, and the technology available. This variability exposes students to the reality that one must be open to new ideas and stay flexible. This, in turn, paves the way for constant improvements.
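The shared process steps in point 1 (identify, analyze, propose, test, repeat) can be sketched in a few lines of Python. This is only an illustrative sketch: the task (averaging a list), the function names, and the convention that an empty list averages to 0.0 are all assumptions made for the example, not something taken from the article.

```python
def run_checks(candidate):
    """Step d) Testing: return the inputs on which the candidate fails."""
    # Hypothetical test cases; treating the average of [] as 0.0 is an assumed convention.
    cases = [([2, 4, 6], 4.0), ([5], 5.0), ([], 0.0)]
    failures = []
    for values, expected in cases:
        try:
            ok = candidate(values) == expected
        except Exception:
            ok = False  # a crash counts as a failed check
        if not ok:
            failures.append(values)
    return failures


def average_v1(values):
    # Step c) Initial solution: divides by zero when the list is empty.
    return sum(values) / len(values)


def average_v2(values):
    # Step e) Repeat with an improved solution: handle the empty list explicitly.
    if not values:
        return 0.0
    return sum(values) / len(values)


def first_passing(candidates):
    """Try candidates in order and return the name of the first one that passes every check."""
    for candidate in candidates:
        if not run_checks(candidate):
            return candidate.__name__
    return None


print(run_checks(average_v1))                   # [[]]
print(first_passing([average_v1, average_v2]))  # average_v2
```

The first attempt fails only on the empty list, which points directly at the case that was overlooked; the second attempt is written in response to that evidence rather than by guesswork.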

What the studies and experts reveal

Several studies have found a positive correlation between computer programming and improved cognitive skills. Students with computer programming experience are said to typically score higher on various cognitive ability tests than students without it.

Vishal Raina, founder and senior instructor at YoungWonks, sums it up well by emphasising how the tech field thrives on critical thinking. “Since the tech industry is driven by innovation more than anything else, critical thinking plays an integral role in a coder’s career. After all, coding literally has one use syntax and semantics to deal with problems,” he says, adding that a coder’s job also requires perseverance, which means that they have to keep going even when they come across an obstacle. “When you come across a dead end in coding, there’s always a way you can go back and start again. This attitude encourages thinking outside the box and is thus tremendously helpful not just in coding but even in other fields.”

Enhancing Critical Thinking Skills with Coding

One of the most valuable benefits of learning to code is the enhancement of critical thinking skills. Through coding, students learn to analyze complex problems, break them down into manageable parts, and devise logical solutions. At YoungWonks, our Coding Classes for Kids are designed to challenge young minds in a supportive environment, encouraging them to think critically and creatively. The Python Coding Classes for Kids focus on teaching students how to tackle problems systematically using Python, one of the most versatile programming languages. Meanwhile, our Raspberry Pi, Arduino and Game Development Coding Classes provide a hands-on approach to learning, where students can apply their coding skills to build and program their own devices. These experiences not only improve critical thinking but also instill a sense of accomplishment and a passion for learning.

*Contributors: Written by: Vidya Prabhu

- Research article

- Open access

- Published: 12 December 2017

Computational thinking development through creative programming in higher education

- Margarida Romero (ORCID: orcid.org/0000-0003-3356-8121),

- Alexandre Lepage &

- Benjamin Lille

International Journal of Educational Technology in Higher Education, volume 14, Article number: 42 (2017)

Creative and problem-solving competencies are part of the so-called twenty-first century skills. The creative use of digital technologies to solve problems is also related to computational thinking as a set of cognitive and metacognitive strategies in which the learner is engaged in an active design and creation process and mobilizes computational concepts and methods. At different educational levels, computational thinking can be developed and assessed through solving ill-defined problems. This paper introduces computational thinking in the context of Higher Education creative programming activities. In this study, we engage undergraduate students in a creative programming activity using Scratch. Then, we analyze the computational thinking scores of an automatic analysis tool and the human assessment of the creative programming projects. Results suggest the need for a human assessment of creative programming while pointing out the limits of an automated analytical tool, which does not reflect the creative diversity of the Scratch projects and overrates algorithmic complexity.

Creativity as a context-related process

Creativity is a key competency within different frameworks for twenty-first century education (Dede, 2010; Voogt & Roblin, 2012) and is considered a competency that enables success in an increasingly complex world (Rogers, 1954; Wang, Schneider, & Valacich, 2015). Creativity is a context-related process in which a solution is individually or collaboratively developed and considered as original, valuable, and useful by a reference group (McGuinness & O’Hare, 2012). Creativity is also considered under the principle of parsimony, which occurs when one prefers the development of a solution using the fewest resources possible. In computer science, creative parsimony has been described as a representation or design that requires fewer resources (Hoffman & Moncet, 2008). The importance or the usefulness of the ideas or acts that are considered as creative is highlighted by Franken (2007), who considers creativity as “the tendency to generate or recognize ideas, alternatives, or possibilities that may be useful in solving problems, communicating with others, and entertaining ourselves and others” (p. 348). In this sense, creativity is no longer considered a mysterious breakthrough, but a process happening in a certain context which can be fostered both by the activity orchestration and by enhanced creative education activities (Birkinshaw & Mol, 2006). Teachers should develop their capacities to integrate technologies in a reflective and innovative way (Hepp, Fernández, & García, 2015; Maor, 2017), in order to develop the creative use of technologies (Brennan, Balch, & Chung, 2014; McCormack & d’Inverno, 2014), including the creative use of programming.

From code writing to creative programming

Programming is not only about writing code but also about the capacity to analyze a situation, identify its key components, model the data and processes, and create or refine a program through an agile design-thinking approach. Because of its complexity, programming is often performed as a team-based task in professional settings. Moreover, professionals engaged in programming tasks are often specialized in specific aspects of the process, such as the analysis, the data modelling or even the quality test. In educational settings, programming could be used as a knowledge building and modeling tool for engaging participants in creative problem-solving activities. When learners engage in a creative programming activity, they are able to develop a modelling activity in the sense of Jonassen and Strobel (2006), who define modelling as “using technology-based environments to build representational models of the phenomena that are being studied” (p. 3). The interactive nature of the computer programs created by the learners allows them to test their models, while supporting a prototype-oriented approach (Ke, 2014). Despite its pedagogical potential, programming activities must be pedagogically integrated in the classroom. Programming should be considered as a pedagogical strategy, and not only as a technical tool or as a set of coding techniques to be learnt. While some uses of technologies engage the learner in a passive or interactive situation where there is little room for knowledge creation, other uses engage the learner in a creative knowledge-building process in which the technology aims at enhancing the co-creative learning process (Romero, Laferrière & Power, 2016).
As shown in the figure below (Fig. 1), we distinguish five levels of creative engagement in computer programming education based on the creative learner engagement in the learning-to-program activity: (1) passive exposure to teacher-centered explanations, videos or tutorials on programming; (2) procedural step-by-step programming activities in which there is no creativity potential for the learner; creating original content through individual programming (3) or team-based programming (4); and finally, (5) participatory co-creation of knowledge through programming.

Fig. 1 Five levels of creative engagement in educational programming activities

Creative programming engages the learner in the process of designing and developing an original work through coding. In this approach, learners are encouraged to use the programming tool as a knowledge co-constructing tool. For example, they can (co-)create the history of their city at a given historical period or transpose a traditional story in a visual programming tool like Scratch (http://scratch.mit.edu/). In such activities, learners must use skills and knowledge in mathematics (measurement, geometry and Cartesian plane to locate and move their characters, objects and scenery), Science and Technology (universe of hardware, transformations, etc.), Language Arts (narrative patterns, etc.) and Social Sciences (organization in time and space, companies and territories).

Computational thinking in the context of creative programming

We now expand on cognitive and metacognitive strategies potentially used by learners when engaged in programming activities, both procedural and creative. In puzzle-based coding activities, both the learning path and outcomes have been predefined to ensure that each of the learners is able to successfully develop the same activity. These step-by-step learning-to-code activities do not solicit the level of thinking and the cognitive and metacognitive strategies required by ill-defined co-creative programming activities. The ill-defined situations embed a certain level of complexity and uncertainty. In ill-defined co-creative programming activities, the learner should understand the ill-defined situation, empathize (Bjögvinsson, Ehn, & Hillgren, 2012), model, structure, develop, and refine a creative program that responds in an original, useful, and valuable way to the ill-defined task. These sets of cognitive and metacognitive strategies could be considered under the umbrella of the computational thinking (CT) concept initially proposed by Wing (2006) as a fundamental skill that draws on computer science. She defines it as “an approach to solving problems, designing systems and understanding human behavior that draws on concepts fundamental to computing” (Wing, 2008, p. 3717). Later, she refined the CT concept as “the thought processes involved in formulating problems and their solutions so that the solutions are represented in a form that can be effectively carried out by an information-processing agent” (Cuny, Snyder, & Wing, 2010). Open or semi-open tasks in which the process and the outcome are not decided can address more dimensions of CT than closed tasks like step-by-step tutorials (Zhong, Wang, Chen, & Li, 2016).

Ongoing discussion about computational thinking

The boundaries of computational thinking vary among authors. This poses an important barrier when it comes to operationalizing CT in concrete activities (Chen et al., 2017). Although some associate it strictly with the understanding of algorithms, others insist on integrating problem solving, cooperative work, and attitudes in the concept of CT. The identification of the core components of computational thinking is also discussed by Chen et al. (2017). Selby and Woollard (2013) addressed that problem and reviewed the literature to propose a definition based on elements that are widely accepted: abstraction, decomposition, evaluation, generalization, and algorithmic thinking. On the one hand, these authors’ definition deliberately rejected problem solving, logical thinking, systems design, automation, computer science content, and modelling. These elements were rejected because they were not widely accepted by the community. On the other hand, other authors such as de Araujo, Andrade, and Guerrero (2016, p. 8) stress, through their literature review on the CT concept and components, that 96% of selected papers considered problem solving as a CT component. Therefore, we claim that the previously named components are relevant to the core of computational thinking and should be recognized as part of it.
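To make the widely accepted elements listed above more tangible, here is a minimal Python sketch of how decomposition, abstraction, generalization, algorithmic thinking, and evaluation might show up in one tiny program. The word-counting task and the function names are hypothetical choices for illustration and do not come from the reviewed literature.

```python
from collections import Counter


def tokenize(text):
    """Decomposition: isolate the sub-problem of turning text into clean lowercase words."""
    words = (w.strip(".,;:!?").lower() for w in text.split())
    return [w for w in words if w]


def most_common_words(text, n=1):
    """Abstraction and generalization: hide the counting details and work for any text and any n."""
    # Algorithmic thinking: a fixed sequence of steps (tokenize, count, rank).
    counts = Counter(tokenize(text))
    return counts.most_common(n)


# Evaluation: check the result against a case that can be verified by hand.
sample = "The cat saw the other cat. The end."
print(most_common_words(sample, n=2))  # [('the', 3), ('cat', 2)]
```

Note that even this small sketch involves framing and solving a problem, which is consistent with the argument above that problem solving is hard to separate from the other components.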

Roots of the computational thinking concept

Following Wing (2006, 2008), Duschl, Schweingruber, Shouse, and others (2007) have described CT as a general analytic approach to problem solving, designing systems, and understanding human behaviors. Based on a socio-constructivist (Nizet & Laferrière, 2005), constructionist (Kafai & Resnick, 1996) and design thinking approach (Bjögvinsson et al., 2012), we consider learning as a collaborative design and knowledge creation process that occurs in a non-linear way. In that, we partially agree with Wing (2008), who considers the process of abstraction as the core of computational thinking. Abstraction is part of computational thinking, but Papert (1980, 1992) pointed out that programming solicits both concrete and abstract thinking skills and the line between these skills is not easy to trace. Papert (1980) suggests that an exposure to computer science concepts may give concrete meaning to what may be considered at first glance as abstract. He gives the example of using loops in programming, which may lose their abstract meaning after repeated use. If we expand that example by applying it to a widely accepted definition of abstraction from the APA dictionary (i.e. “such a concept, especially a wholly intangible one, such as ‘goodness’ or ‘beauty’”, VandenBoss, 2006), we could envision a loop as something tangible in that it may be seen as such in the environment. The core of CT might be the capacity to transpose abstract meaning into concrete meaning. This makes CT a way to reify an abstract concept into something concrete like a computer program or algorithm. In this sense programming is a process by which, after a phase of analysis and entities identification and structuration, there is a reification of the abstract model derived from the analysis into a set of concrete instructions. CT is a set of cognitive and metacognitive strategies paired with processes and methods of computer science (analysis, abstraction, modelling). It may be related to computer science the same way as algorithmic thinking is related to mathematics.
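As a small illustration of this reification, the abstract idea "a square has four equal sides joined at right angles" can be turned into a concrete loop that produces visible corner points. The sketch below is an assumption-laden example written for this purpose (the function name and the integer grid are hypothetical), not an example taken from Papert.

```python
def square_path(side):
    """Return the points visited when walking once around a square with the given side length."""
    # Unit steps for headings of 0, 90, 180 and 270 degrees.
    directions = [(1, 0), (0, 1), (-1, 0), (0, -1)]
    x, y = 0, 0
    points = [(x, y)]
    for dx, dy in directions:  # the loop: "move forward one side, then turn 90 degrees", four times
        x, y = x + side * dx, y + side * dy
        points.append((x, y))
    return points


print(square_path(10))  # [(0, 0), (10, 0), (10, 10), (0, 10), (0, 0)]
```

After running and re-running such a loop, "repeat four times" stops being an abstract notion and becomes something the learner can see: the path closes exactly where it started.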

“Algorithmic thinking is a method of thinking and guiding thought processes that uses step-by-step procedures, requires inputs and produces outputs, requires decisions about the quality and appropriateness of information coming and information going out, and monitors the thought processes as a means of controlling and directing the thinking process. In essence, algorithmic thinking is simultaneously a method of thinking and a means for thinking about one's thinking.” (Mingus & Grassl, 1998, p. 34).
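The elements of this definition (step-by-step procedure, inputs and outputs, decisions about the quality of incoming information, and monitoring of the process) can be illustrated with a short Python sketch based on Euclid's algorithm for the greatest common divisor. The function name and the choice to record a trace are assumptions made for illustration only.

```python
def gcd_with_trace(a, b):
    """Compute the greatest common divisor of a and b, recording each step taken."""
    # Decision about the quality of incoming information: reject unsuitable inputs.
    if not (isinstance(a, int) and isinstance(b, int)) or a < 0 or b < 0:
        raise ValueError("expected two non-negative integers")
    trace = []  # monitoring: keep a record of the intermediate states
    while b != 0:  # step-by-step procedure
        trace.append((a, b))
        a, b = b, a % b
    return a, trace  # outputs: the result and the record of how it was reached


result, steps = gcd_with_trace(48, 18)
print(result)  # 6
print(steps)   # [(48, 18), (18, 12), (12, 6)]
```

Returning the trace alongside the result is one simple way to make "thinking about one's thinking" inspectable: the learner can review each intermediate state instead of only the final answer.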

Algorithmic thinking has to deal with the same problem as computational thinking: its limits are under discussion. To some authors it is limited to mathematics. But definitions such as that from Mingus and Grassl (1998) make the concept go beyond mathematics (Modeste, 2012). Viewing algorithmic thinking as the form of thinking associated with computer science at large instead of part of mathematics allows a more adequate understanding of its nature (Modeste, 2012). We can consider that algorithmic thinking is an important aspect of computational thinking. However, when considering computational thinking as a creative prototype-based approach we should not only consider the design thinking components (exploration, empathy, definition, ideation, prototyping, and creation) (Brown, 2009) but also the hardware dimension of computational thinking solutions (i.e. use of robotic components to execute a program). Within a design thinking perspective, different solutions are created and tested in the attempt to advance towards a solution. From this perspective we conceptualize CT as a set of cognitive and metacognitive strategies related to problem finding, problem framing, code literacy, and creative programming (Brennan & Resnick, 2013). It is a way to develop new thinking strategies to analyze, identify, and organize relatively complex and ill-defined tasks (Rourke & Sweller, 2009) and can be seen as a creative problem-solving activity (Brennan et al., 2014). We now elaborate on how computational thinking can be assessed in an ill-defined creative programming activity.

Assessment of computational thinking

There is a diversity of approaches for assessing CT. We analyze three approaches in this section: the Computer Science Teachers Association’s (CSTA) curriculum in the USA (Reed & Nelson, 2016; Seehorn et al., 2011), Barefoot’s computational thinking model in the UK (Curzon, Dorling, Ng, Selby, & Woollard, 2014), and the analytical tool Dr. Scratch (Moreno-León & Robles, 2015).

CSTA’s curriculum sets expectations in terms of levels to reach at every school grade. It comprises five strands: (1) Collaboration, (2) Computational Thinking, (3) Computing Practice and Programming, (4) Computers and Communication Devices, and (5) Community, Global, and Ethical Impacts. Thus, CSTA’s model considers computational thinking as part of a wider computer science field. The progression between levels appears to be based on the transition between low-level programming and object-oriented programming (i.e. computer programs as step-by-step sequences at level 1, and parallelism at level 3). The CSTA standards suggest that programming activities in K12 should “be designed with a focus on active learning, creativity, and exploration and will often be embedded within other curricular areas such as social science, language arts, mathematics, and science”. However, the CSTA standards do not give creativity a particular status in their models; moreover, the evaluation of creativity in programming activities seems to be ultimately up to the evaluator. From our perspective, and because of the creative nature of CT, we need to consider creativity explicitly and provide educators with guidelines for assessing it.

The Barefoot CT framework is defined through six components: logic, algorithms, decomposition, patterns, abstraction, and evaluation. We agree on their relevance in computational thinking. The Barefoot CT framework provides concrete examples of how each of the components may be observed in children of different ages. However, relying only on that model may result in assessing abilities instead of competency. These concepts represent a set of abilities more than an entire competency (Hoffmann, 1999).

Dr. Scratch is a code analyzer that outputs a score for elements such as abstraction, logic, and flow control (Moreno-León & Robles, 2015). Scores are computed automatically from any Scratch program. It also provides instant feedback and acts as a tutorial on how users can improve their programs, which makes it especially suitable for self-assessment. Hoover et al. (2016) believe that automated assessment of CT can potentially encourage CT development. However, Dr. Scratch only considers the complexity of programs, not their meaning. This tool is suitable for evaluating a user's level of technical mastery of Scratch, but it cannot be used to evaluate every component of a CT competency as we defined it (i.e. the program does not give evidence of thought processes, and does not consider the task demanded). Finally, it would be hard for an automated process to measure or teach creativity, since that behaviour is an act of intelligence (Chomsky, 2008) which should be analyzed considering the originality, value, and usefulness for a given problem-situation. In other terms, there is a need to evaluate the appropriateness of the creative solution according to the context and to avoid over-complex solutions, which use unnecessary or inappropriate code for a given situation. Automatic code-analysis tools cannot rate the creativity, parsimony, and appropriateness of a program, considering that ill-defined problem-situations could lead to different solutions. We therefore now elaborate on how computational thinking can be assessed while considering that CT is intertwined with other twenty-first century competencies such as creativity and problem-solving.
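The limitation described above can be illustrated with a deliberately simplified toy scorer. This is not Dr. Scratch's algorithm: the block names, the categories, and the scoring rule below are all assumptions made for illustration. The sketch only shows that a purely structural score rewards the use of more kinds of blocks, regardless of whether the task called for them.

```python
# Hypothetical mapping from block names to categories (illustrative only;
# real analyzers define their own rubrics).
CATEGORIES = {
    "move": "motion",
    "say": "looks",
    "repeat": "flow_control",
    "if": "logic",
    "broadcast": "synchronization",
}


def complexity_score(blocks):
    """Count the distinct block categories used: a purely structural measure."""
    return len({CATEGORIES[block] for block in blocks})


# Two hypothetical programs written for the same simple task.
parsimonious = ["move", "say"]
over_complex = ["move", "repeat", "if", "broadcast", "say"]

print(complexity_score(parsimonious))  # 2
print(complexity_score(over_complex))  # 5
```

Under this toy rule the over-complex program scores higher, although nothing in the score says whether the extra blocks were needed. Judging that requires knowledge of the task and context, which the score does not see.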

Computational thinking components within the #5c21

We consider CT as a coherent set of cognitive and metacognitive strategies engaged in (complex) systems identification, representation, programming, and evaluation. After identifying and analyzing a problem or a user need, programming is a creative problem-solving activity. The programming activity aims to design, write, test, debug, and maintain a set of information and instructions expressed through code, using a particular programming language, to produce a concrete computer program which aims to meet the problem or users’ needs. Programming is not a linear predefined activity, but rather a prototype-oriented approach in which intermediate solutions are considered before releasing a solution which is considered good enough to solve the problem-situation. Within this approach to programming, which is not only focused on the techniques to code a program, we should consider different components which are related to the creative problem-solving process. In this sense, we identify six components of the CT competency in the #5c21 model: two related to code and technology literacies and four related to the four phases of Collaborative Problem Solving (CPS) of PISA 2015. Component 1 (COMP1) is the ability to identify the components of a situation and their structure (analysis/representation), which certain authors refer to as problem identification. Component 2 (COMP2) is the ability to organize and model the situation efficiently (organize/model). Component 3 (COMP3) is code literacy. Component 4 (COMP4) is (technological) systems literacy (software/hardware). Component 5 (COMP5) focuses on the capacity to create a computer program (programming). Finally, component 6 (COMP6) is the ability to engage in the evaluation and iterative process of improving a computer program (Fig. 2).

Six components of the CT competency within the #5c21 framework

When we relate computational thinking components to the four phases of Collaborative Problem Solving (CPS) of PISA 2015, we can link the analysis/abstraction component (COMP1) to the (CPS-A) exploring and understanding phase. The organize/model component (COMP2) is related to (CPS-B) representing and formulating. The capacity to plan and create a computer program (COMP5) is linked to (CPS-C) planning and executing, but also to (CPS-D) monitoring and reflecting (COMP6) (Fig. 3).

Four components of the CT competency related to CPS of PISA 2015

Code literacy (COMP3) and (technological) systems literacy (COMP4) are programming and system concepts and processes that help to better operationalize the other components. They are also important in CT because knowing about computer programming concepts and processes can help develop CT strategies (Brennan & Resnick, 2013) and, at the same time, CT strategies can be enriched by the code-independent cognitive and metacognitive thinking strategies represented by the CPS-related components (COMP1, 2, 5 and 6). As in the chicken-and-egg paradox, knowing about the concepts and processes (COMP3 and 4) could enrich the problem-solving process (COMP1, 2, 5 and 6) and vice versa. The ability to be creative when analyzing, organizing/modeling, programming, and evaluating a computer program is a meta-capacity that shows that the participant had to think of different alternatives and imagine a novel, original and valuable process, concept, or solution to the situation.
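As a compact summary, the six components and their relation to the CPS phases can be written down as a small lookup table. This is one possible reading of the text rather than part of the model itself: the dictionary layout and function names are hypothetical, and the sketch records only one phase per component even though the text also connects COMP5 to monitoring and reflecting.

```python
# The four CPS phases of PISA 2015 as named in the text.
CPS_PHASES = {
    "CPS-A": "exploring and understanding",
    "CPS-B": "representing and formulating",
    "CPS-C": "planning and executing",
    "CPS-D": "monitoring and reflecting",
}

# Assumption: each CPS-related component is paired with a single phase;
# the two literacy components have no associated phase (None).
COMPONENTS = {
    "COMP1": {"label": "analysis/representation", "cps": "CPS-A"},
    "COMP2": {"label": "organize/model", "cps": "CPS-B"},
    "COMP3": {"label": "code literacy", "cps": None},
    "COMP4": {"label": "systems literacy", "cps": None},
    "COMP5": {"label": "programming", "cps": "CPS-C"},
    "COMP6": {"label": "evaluation and iteration", "cps": "CPS-D"},
}


def cps_related(components):
    """Names of the components tied to a CPS phase, in sorted order."""
    return sorted(n for n, info in components.items() if info["cps"] is not None)


def literacies(components):
    """Names of the components that are literacies rather than CPS phases."""
    return sorted(n for n, info in components.items() if info["cps"] is None)


print(cps_related(COMPONENTS))  # ['COMP1', 'COMP2', 'COMP5', 'COMP6']
print(literacies(COMPONENTS))   # ['COMP3', 'COMP4']
```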

Advancing creative programming assessment through the #5c21 model

After reviewing three models of CT assessment (CSTA, Barefoot, Dr. Scratch), we describe in this section our proposal to evaluate CT in the context of creative programming activities. We named our creative programming assessment the #5c21 model because of the importance of five key competencies in twenty-first century education: CT, creativity, collaboration, problem solving, and critical thinking. First, we discuss the opportunity of learning the object-oriented programming (OOP) paradigm from the early steps of CT learning activities. Second, we examine the opportunity to develop CT in an interdisciplinary way without creating a new CT curriculum in one specific discipline such as mathematics. Third, we discuss the opportunity of developing CT at different levels of education, from primary education to lifelong learning activities.

In certain computer science curricula, low-level programming is introduced before OOP, which is considered a higher level of programming. Nevertheless, following Kölling (1999), if the OOP paradigm is to be learnt, it should be introduced in the early stages of the learning activities to avoid difficulties due to paradigmatic changes. For that reason, our model does not restrict programming to step-by-step sequences at early stages of development and embraces the OOP paradigm from the start. Moreover, we should consider the potential of non-programmers to understand OOP concepts without knowing how to operationalize them through a programming language. For instance, we may partially understand the concept of inheritance through the concept of family without knowing the inheritance concepts of computer science. Our model of a CT competency recognizes the possibility for certain components to develop at different rhythms, or for an individual with no prior programming experience to master some components (e.g. abstraction). For that reason, we did not integrate age-associated expectations. These should be built upon the context and should be task-specific. While CSTA considers concept mapping as a level 1 skill (K-3), our model would consider that this skill may be evaluated with different degrees of complexity according to the context and the pupil’s prior experience. Our view is that computational thinking encompasses many particular skills related to abstraction.
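The family analogy above maps onto what object-oriented languages call inheritance. The following minimal sketch is our own illustration, not an example from the cited works: the class names and attributes are hypothetical, and Python is used only because it is compact (Scratch itself has no class syntax).

```python
class Parent:
    """Hypothetical base class: traits defined here are passed on."""

    eye_color = "brown"

    def greet(self):
        return "Hello from the " + type(self).__name__


class Child(Parent):
    """Inherits eye_color and greet() from Parent without redefining them."""

    favorite_game = "chess"  # a trait the child adds on its own


child = Child()
print(child.eye_color)      # brown (inherited attribute)
print(child.greet())        # Hello from the Child (inherited method)
print(child.favorite_game)  # chess (defined only on Child)
```

A learner who understands that a child shares traits with a parent while adding traits of their own already holds the core idea. What the programming language adds is the notation.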

Our model pays attention to the integration of CT into existing curricula. We recognize the identification of CT-related skills in the CSTA’s model, and we agree on its relevance in computer science courses. However, our CT model is intended for use in any subject. Thus, it deliberately avoids giving disproportionate importance to subjects such as mathematics and science. In that sense, we are working to define computational thinking as a transferable skill that does not belong only to the field of computer science. We also designed it to be reusable in different tasks and to measure abilities as well as interactions between them (e.g. “algorithm creation based on the data modelling”).

Our model of computational thinking is intended for both elementary and high school pupils. In that, it differs from CSTA, which expects nothing in terms of computational thinking from children under grade 4. Though CSTA expects K-3 pupils to “use technology resources […] to solve age-appropriate problems”, some statements suggest that they should be passive in problem-solving (e.g. “Describe how a simulation can be used to solve a problem” instead of creating a simulation, “gather information” instead of producing information, and “recognize that software is created to control computer operations” instead of actually controlling something like a robot).

Methodology for assessing CT in creative programming activities

In order to assess CT on the basis of the theoretical framework and its operationalization as components described in the prior section, we have developed an assessment protocol and a tool (#5c21) to evaluate CT in creative programming activities. Before the assessment, the teacher defines the specific observables to be evaluated through the use of the tool. Once the observables are identified, four levels of achievement for each observable are described in the tool. The #5c21 tool allows a pre-test, post-test, or just-in-time teacher-based assessment or learner self-assessment, which aims at collecting the level of achievement in each observable for the activity. At the end of a certain period of time (e.g. a session or an academic year), the teacher can generate reports showing the evolution in learners’ CT assessment.
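The protocol above can be sketched as a small data model. This is a hypothetical illustration and not the #5c21 tool's actual implementation: the class and function names, the coding of the four achievement levels as the integers 1 to 4, and the "pre"/"post" labels are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass

LEVELS = (1, 2, 3, 4)  # assumption: the four achievement levels coded 1..4


@dataclass(frozen=True)
class Rating:
    """One recorded level for one learner on one teacher-defined observable."""

    learner: str
    observable: str
    moment: str  # assumption: "pre" or "post"
    level: int

    def __post_init__(self):
        if self.level not in LEVELS:
            raise ValueError(f"level must be one of {LEVELS}")


def evolution(ratings):
    """Return {(learner, observable): post - pre} for pairs rated at both moments."""
    by_key = defaultdict(dict)
    for r in ratings:
        by_key[(r.learner, r.observable)][r.moment] = r.level
    return {
        key: moments["post"] - moments["pre"]
        for key, moments in by_key.items()
        if "pre" in moments and "post" in moments
    }


# Hypothetical data: learner_b has no post-test, so no evolution is reported.
ratings = [
    Rating("learner_a", "identifies sprites", "pre", 1),
    Rating("learner_a", "identifies sprites", "post", 3),
    Rating("learner_b", "identifies sprites", "pre", 2),
]
print(evolution(ratings))  # {('learner_a', 'identifies sprites'): 2}
```

Keeping the observables as free-text strings reflects the fact that the teacher defines them per activity. A real tool would presumably also store the textual description of each level.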

A distinctive characteristic of the #5c21 approach to assess CT is the consideration of ill-defined problem-situations. The creative potential of these activities engages the participants in the analysis, modelling and creation of artifacts, which may provide the teacher with evidence of an original, valuable, relevant, and parsimonious solution to a given problem-situation.

Participants

A total of 120 undergraduate students at Université Laval in Canada (N = 120) were engaged in a story2code creative challenge. All of them were enrolled in a bachelor’s degree in elementary school education. They were in the third year of a four-year program and had taken no previous educational technology courses. In the second week of the semester, they were asked to perform a programming task using Scratch. Scratch is a block-based programming language intended for children from 7 years of age. Participants were presented with only two features of the language: the creation of a new sprite (object) and the possibility to drag and drop blocks in each sprite’s program. They were also told how to use the green flag to start the program.