How to Write a Hypothesis for Correlation

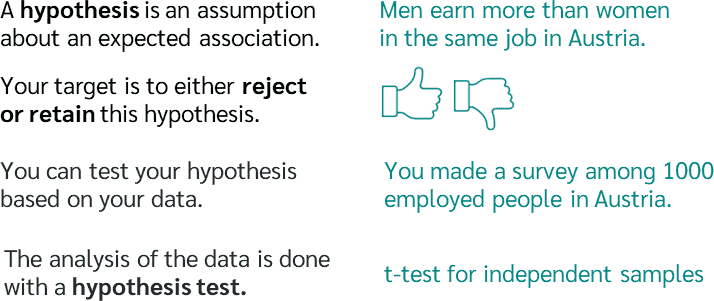

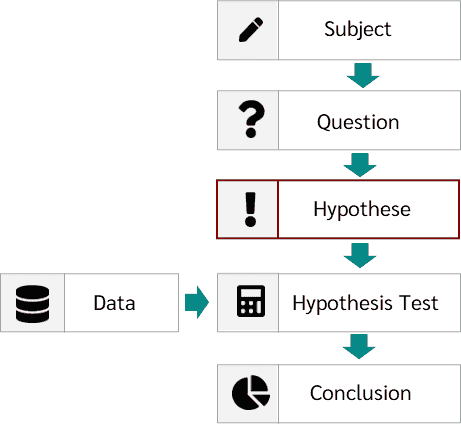

A hypothesis is a testable statement about how something works in the natural world. While some hypotheses predict a causal relationship between two variables, other hypotheses predict a correlation between them. According to the Research Methods Knowledge Base, a correlation is a single number that describes the relationship between two variables. If you do not predict a causal relationship or cannot measure one objectively, state clearly in your hypothesis that you are merely predicting a correlation.

Research the topic in depth before forming a hypothesis. Without adequate knowledge about the subject matter, you will not be able to decide whether to write a hypothesis for correlation or causation. Read the findings of similar experiments before writing your own hypothesis.

Identify the independent variable and dependent variable. Your hypothesis will be concerned with what happens to the dependent variable when a change is made in the independent variable. In a correlation, the two variables undergo changes at the same time in a significant number of cases. However, this does not mean that the change in the independent variable causes the change in the dependent variable.

Construct an experiment to test your hypothesis. In a correlative experiment, you must be able to measure both variables for every case so that you can quantify the relationship between them. The usual measure is the correlation coefficient, r, a single number between -1 and 1.

Establish the requirements of the experiment with regard to statistical significance. Tell readers which significance level you will use, that is, how much risk you accept of reporting a correlation when none exists in the population. This number varies considerably depending on the field. In a highly technical scientific study, for instance, a significance level of 0.02 (98 percent confidence) may be required, but in a sociological study 0.10 (90 percent confidence) may suffice. Look at other studies in your particular field to determine the requirements for statistical significance.

State the null hypothesis. For a correlation, the null hypothesis states that there is no correlation between the two variables in the population, so the population correlation coefficient equals zero. If the p-value from your test is not below your significance level, then the variables are not shown to correlate.

Record and summarize the results of your experiment. Report the correlation coefficient and its p-value, and state whether or not the result met the significance level you set in advance.

- University of New England; Steps in Hypothesis Testing for Correlation; 2000

- Research Methods Knowledge Base; Correlation; William M.K. Trochim; 2006

- Science Buddies; Hypothesis

About the Author

Brian Gabriel has been a writer and blogger since 2009, contributing to various online publications. He earned his Bachelor of Arts in history from Whitworth University.

Photo Credits

Thinkstock/Comstock/Getty Images

Correlation coefficient review

What is a correlation coefficient.

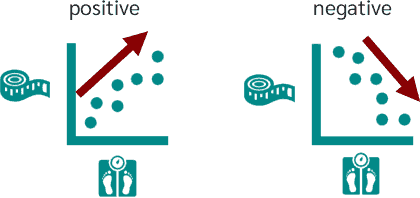

- It always has a value between − 1 and 1 .

- Strong positive linear relationships have values of r closer to 1 .

- Strong negative linear relationships have values of r closer to − 1 .

- Weaker relationships have values of r closer to 0 .
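To make these properties concrete, here is a minimal sketch that computes the sample correlation coefficient directly from its usual definition (the sum of cross-deviations divided by the square root of the product of the sums of squared deviations). The function name `pearson_r` and the small data sets are made up for illustration and do not come from the text.

```python
import math

def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sums of cross-deviations and squared deviations from the means.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("r is undefined when either variable is constant")
    return sxy / math.sqrt(sxx * syy)

# Hypothetical illustration data (not from the text).
x = [1, 2, 3, 4, 5]
print(pearson_r(x, [2, 4, 6, 8, 10]))  # points on an increasing line
print(pearson_r(x, [10, 8, 6, 4, 2]))  # points on a decreasing line
print(pearson_r(x, [3, 1, 4, 1, 5]))   # a much weaker relationship
```

The first two calls sit at the extremes of the range because the points fall exactly on a line; the third lands well inside the interval, closer to 0.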

Conducting a Hypothesis Test for the Population Correlation Coefficient ρ

There is one more point we haven't stressed yet in our discussion about the correlation coefficient r and the coefficient of determination \(r^2\). Namely, the two measures summarize the strength of a linear relationship in samples only. If we obtained a different sample, we would obtain different correlations, different \(r^2\) values, and therefore potentially different conclusions. As always, we want to draw conclusions about populations, not just samples. To do so, we either have to conduct a hypothesis test or calculate a confidence interval. In this section, we learn how to conduct a hypothesis test for the population correlation coefficient ρ (the Greek letter "rho").

Incidentally, where does this topic fit in among the four regression analysis steps?

- Model formulation

- Model estimation

- Model evaluation

- Model use

This topic falls under model use: it is a situation in which we use the model to answer a specific research question, namely whether or not a linear relationship exists between two quantitative variables.

In general, a researcher should use the hypothesis test for the population correlation ρ to learn of a linear association between two variables, when it isn't obvious which variable should be regarded as the response. Let's clarify this point with examples of two different research questions.

We previously learned that to evaluate whether or not a linear relationship exists between skin cancer mortality and latitude, we can perform either of the following tests:

- t-test for testing \(H_0: \beta_1 = 0\)

- ANOVA F-test for testing \(H_0: \beta_1 = 0\)

That's because it is fairly obvious that latitude should be treated as the predictor variable and skin cancer mortality as the response. Suppose instead that we want to evaluate whether or not a linear relationship exists between a husband's age and his wife's age. In this case, one could treat the husband's age as the response:

or one could treat wife's age as the response:

Pearson correlation of HAge and WAge = 0.939

In cases such as these, we answer our research question concerning the existence of a linear relationship by using the t-test for testing the population correlation coefficient \(H_0: \rho = 0\).

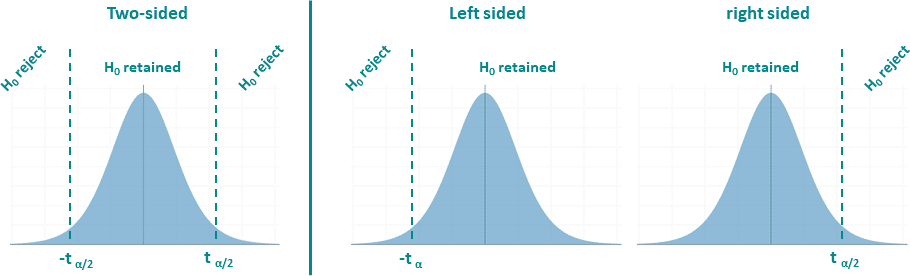

Let's jump right to it! We follow standard hypothesis test procedures in conducting a hypothesis test for the population correlation coefficient ρ . First, we specify the null and alternative hypotheses:

- Null hypothesis: \(H_0: \rho = 0\)

- Alternative hypothesis: \(H_A: \rho \neq 0\) or \(H_A: \rho < 0\) or \(H_A: \rho > 0\)

Second, we calculate the value of the test statistic using the following formula:

Test statistic : \(t^*=\frac{r\sqrt{n-2}}{\sqrt{1-r^2}}\)

Third, we use the resulting test statistic to calculate the P-value. As always, the P-value is the answer to the question "how likely is it that we'd get a test statistic t* as extreme as we did if the null hypothesis were true?" The P-value is determined by referring to a t-distribution with n − 2 degrees of freedom.

Finally, we make a decision:

- If the P-value is smaller than the significance level α, we reject the null hypothesis in favor of the alternative. We conclude "there is sufficient evidence at the α level to conclude that there is a linear relationship in the population between the predictor x and response y."

- If the P-value is larger than the significance level α, we fail to reject the null hypothesis. We conclude "there is not enough evidence at the α level to conclude that there is a linear relationship in the population between the predictor x and response y."
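The decision rule above can be sketched as a small function. The name `decide` and the default α = 0.05 are illustrative assumptions, and treating a P-value exactly equal to α as "fail to reject" is a convention chosen here, since the text only covers the smaller and larger cases.

```python
def decide(p_value, alpha=0.05):
    """Return the decision for H0: rho = 0 given a P-value and significance level."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must be strictly between 0 and 1")
    if p_value < alpha:
        return "reject H0: sufficient evidence of a linear relationship"
    # Assumption: a P-value equal to alpha is treated as not significant.
    return "fail to reject H0: not enough evidence of a linear relationship"

print(decide(0.0004))           # well below the default alpha of 0.05
print(decide(0.20))             # well above it
print(decide(0.03, alpha=0.01)) # significant at 0.05 but not at 0.01
```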

Let's perform the hypothesis test on the husband's age and wife's age data in which the sample correlation based on n = 170 couples is r = 0.939. To test \(H_0: \rho = 0\) against the alternative \(H_A: \rho \neq 0\), we obtain the following test statistic:

\[t^*=\frac{r\sqrt{n-2}}{\sqrt{1-r^2}}=\frac{0.939\sqrt{170-2}}{\sqrt{1-0.939^2}}=35.39\]
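As a quick check of the arithmetic, the same formula can be evaluated in a few lines. This is only a sketch; the function name `correlation_t_statistic` is an assumption made for illustration.

```python
import math

def correlation_t_statistic(r, n):
    """Test statistic t* = r * sqrt(n - 2) / sqrt(1 - r^2) for H0: rho = 0."""
    if n <= 2:
        raise ValueError("need more than two observations")
    if not -1.0 < r < 1.0:
        raise ValueError("r must be strictly between -1 and 1")
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)

# Husband's age and wife's age example from the text: r = 0.939, n = 170.
t_star = correlation_t_statistic(0.939, 170)
print(round(t_star, 2))  # 35.39
```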

To obtain the P-value, we need to compare the test statistic to a t-distribution with 168 degrees of freedom (since 170 − 2 = 168). In particular, we need to find the probability that we'd observe a test statistic more extreme than 35.39, and then, since we're conducting a two-sided test, multiply the probability by 2.

Incidentally, we can let statistical software like Minitab do all of the dirty work for us. In doing so, Minitab reports a P-value of 0.000 to three decimal places, that is, P < 0.001.

It should be noted that the three hypothesis tests we learned for testing the existence of a linear relationship (the t-test for \(H_0: \beta_1 = 0\), the ANOVA F-test for \(H_0: \beta_1 = 0\), and the t-test for \(H_0: \rho = 0\)) will always yield the same results. For example, if we treat the husband's age ("HAge") as the response and the wife's age ("WAge") as the predictor, each test yields a P-value of 0.000... < 0.001:

And similarly, if we treat the wife's age ("WAge") as the response and the husband's age ("HAge") as the predictor, each test yields a P-value of 0.000... < 0.001:

Technically, then, it doesn't matter what test you use to obtain the P-value. You will always get the same P-value. But you should report the results of the test that makes sense for your particular situation:

- If one of the variables can be clearly identified as the response, report that you conducted a t-test or F-test for testing \(H_0: \beta_1 = 0\). (Does it make sense to use x to predict y?)

- If it is not obvious which variable is the response, report that you conducted a t-test for testing \(H_0: \rho = 0\). (Does it only make sense to look for an association between x and y?)
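One way to see why the tests agree is the following sketch of the algebra. It assumes the standard simple linear regression notation, none of which is defined in the text above: \(b_1\) is the estimated slope, \(s_x\) and \(s_y\) are the sample standard deviations, SSE is the error sum of squares, and SST is the total sum of squares.

\[b_1 = r\,\frac{s_y}{s_x}, \qquad \text{SSE} = (1-r^2)\,\text{SST}, \qquad \text{se}(b_1)=\sqrt{\frac{\text{SSE}/(n-2)}{\sum (x_i-\bar{x})^2}}=\frac{s_y}{s_x}\sqrt{\frac{1-r^2}{n-2}}\]

\[t^*=\frac{b_1}{\text{se}(b_1)}=\frac{r\,\dfrac{s_y}{s_x}}{\dfrac{s_y}{s_x}\sqrt{\dfrac{1-r^2}{n-2}}}=\frac{r\sqrt{n-2}}{\sqrt{1-r^2}}\]

The ratio \(s_y/s_x\) cancels, so the slope t-statistic depends only on r and n, and swapping the roles of x and y leaves it unchanged. In simple linear regression the ANOVA F-statistic equals \((t^*)^2\), which is why it leads to the same P-value for the two-sided test.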

One final note: as always, we should clarify when it is okay to use the t-test for testing \(H_0: \rho = 0\). The guidelines are a straightforward extension of the "LINE" assumptions made for the simple linear regression model. It's okay:

- When it is not obvious which variable is the response.

- For each x, the y's are normal with equal variances.

- For each y, the x's are normal with equal variances.

- Either, y can be considered a linear function of x.

- Or, x can be considered a linear function of y.

- The (x, y) pairs are independent.

12.4 Testing the Significance of the Correlation Coefficient

The correlation coefficient, r , tells us about the strength and direction of the linear relationship between x and y . However, the reliability of the linear model also depends on how many observed data points are in the sample. We need to look at both the value of the correlation coefficient r and the sample size n , together.

We perform a hypothesis test of the "significance of the correlation coefficient" to decide whether the linear relationship in the sample data is strong enough to use to model the relationship in the population.

The sample data are used to compute r , the correlation coefficient for the sample. If we had data for the entire population, we could find the population correlation coefficient. But because we have only sample data, we cannot calculate the population correlation coefficient. The sample correlation coefficient, r , is our estimate of the unknown population correlation coefficient.

- The symbol for the population correlation coefficient is ρ , the Greek letter "rho."

- ρ = population correlation coefficient (unknown)

- r = sample correlation coefficient (known; calculated from sample data)

The hypothesis test lets us decide whether the value of the population correlation coefficient ρ is "close to zero" or "significantly different from zero". We decide this based on the sample correlation coefficient r and the sample size n .

If the test concludes that the correlation coefficient is significantly different from zero, we say that the correlation coefficient is "significant."

- Conclusion: There is sufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is significantly different from zero.

- What the conclusion means: There is a significant linear relationship between x and y . We can use the regression line to model the linear relationship between x and y in the population.

If the test concludes that the correlation coefficient is not significantly different from zero (it is close to zero), we say that the correlation coefficient is "not significant".

- Conclusion: "There is insufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is not significantly different from zero."

- What the conclusion means: There is not a significant linear relationship between x and y . Therefore, we CANNOT use the regression line to model a linear relationship between x and y in the population.

- If r is significant and the scatter plot shows a linear trend, the line can be used to predict the value of y for values of x that are within the domain of observed x values.

- If r is not significant OR if the scatter plot does not show a linear trend, the line should not be used for prediction.

- If r is significant and if the scatter plot shows a linear trend, the line may NOT be appropriate or reliable for prediction OUTSIDE the domain of observed x values in the data.

PERFORMING THE HYPOTHESIS TEST

- Null Hypothesis: H 0 : ρ = 0

- Alternate Hypothesis: H a : ρ ≠ 0

WHAT THE HYPOTHESES MEAN IN WORDS:

- Null Hypothesis H 0 : The population correlation coefficient IS NOT significantly different from zero. There IS NOT a significant linear relationship (correlation) between x and y in the population.

- Alternate Hypothesis H a : The population correlation coefficient IS significantly DIFFERENT FROM zero. There IS A SIGNIFICANT LINEAR RELATIONSHIP (correlation) between x and y in the population.

DRAWING A CONCLUSION: There are two methods of making the decision. The two methods are equivalent and give the same result.

- Method 1: Using the p -value

- Method 2: Using a table of critical values

In this chapter of this textbook, we will always use a significance level of 5%, α = 0.05

Using the p -value method, you could choose any appropriate significance level you want; you are not limited to using α = 0.05. But the table of critical values provided in this textbook assumes that we are using a significance level of 5%, α = 0.05. (If we wanted to use a different significance level than 5% with the critical value method, we would need different tables of critical values that are not provided in this textbook.)

METHOD 1: Using a p -value to make a decision

Using the TI-83, 83+, 84, 84+ Calculator

To calculate the p-value using LinRegTTest: On the LinRegTTest input screen, on the line prompt for β or ρ, highlight "≠ 0". The output screen shows the p-value on the line that reads "p =". (Most computer statistical software can calculate the p-value.)

If the p-value is less than the significance level (α = 0.05):

- Decision: Reject the null hypothesis.

- Conclusion: "There is sufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is significantly different from zero."

If the p-value is NOT less than the significance level (α = 0.05):

- Decision: DO NOT REJECT the null hypothesis.

- Conclusion: "There is insufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is NOT significantly different from zero."

- You will use technology to calculate the p -value. The following describes the calculations to compute the test statistics and the p -value:

- The p -value is calculated using a t -distribution with n - 2 degrees of freedom.

- The formula for the test statistic is \(t=\frac{r\sqrt{n-2}}{\sqrt{1-r^2}}\). The value of the test statistic, t, is shown in the computer or calculator output along with the p-value. The test statistic t has the same sign as the correlation coefficient r.

- The p -value is the combined area in both tails.

An alternative way to calculate the p -value (p) given by LinRegTTest is the command 2*tcdf(abs(t),10^99, n-2) in 2nd DISTR.
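For readers without a TI calculator, the same two-tailed area can be approximated in plain Python. The sketch below is not the calculator's algorithm: it integrates the Student's t density numerically with Simpson's rule, which is an approach chosen here only because it needs nothing beyond the standard library. The function names `t_pdf` and `two_tailed_p` are assumptions for illustration.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_coeff = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                 - 0.5 * math.log(df * math.pi))
    return math.exp(log_coeff - (df + 1) / 2 * math.log1p(x * x / df))

def two_tailed_p(t, df, steps=10_000):
    """Combined area in both tails beyond |t|, like 2*tcdf(abs(t), 10^99, df)."""
    if steps % 2:
        raise ValueError("steps must be even for Simpson's rule")
    upper = abs(t)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area_zero_to_t = total * h / 3
    # Both tails = 1 minus the central area; clamp tiny negative rounding error.
    return max(0.0, 1.0 - 2.0 * area_zero_to_t)

# Check with r = 0.6631 and n = 11, so df = 9.
r, n = 0.6631, 11
t = r * math.sqrt(n - 2) / math.sqrt(1 - r * r)
print(round(t, 3), round(two_tailed_p(t, n - 2), 3))
```

With r = 0.6631 and n = 11 the result should land close to the p-value of 0.026 that LinRegTTest reports for the third exam/final exam data. For extremely large test statistics the subtraction loses precision, so the function can only report that the p-value is essentially zero.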

- Consider the third exam/final exam example .

- The line of best fit is: ŷ = -173.51 + 4.83 x with r = 0.6631 and there are n = 11 data points.

- Can the regression line be used for prediction? Given a third exam score ( x value), can we use the line to predict the final exam score (predicted y value)?

- H 0 : ρ = 0

- H a : ρ ≠ 0

- The p -value is 0.026 (from LinRegTTest on your calculator or from computer software).

- The p -value, 0.026, is less than the significance level of α = 0.05.

- Decision: Reject the Null Hypothesis H 0

- Conclusion: There is sufficient evidence to conclude that there is a significant linear relationship between the third exam score ( x ) and the final exam score ( y ) because the correlation coefficient is significantly different from zero.

Because r is significant and the scatter plot shows a linear trend, the regression line can be used to predict final exam scores.
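A prediction helper can enforce the rule that the line is only trusted inside the range of observed x values. In this sketch the slope and intercept come from the line of best fit quoted above, but the observed range of third exam scores (65 to 75) is a hypothetical placeholder, because the data set itself is not reproduced in this section.

```python
def predict_final_exam(third_exam, observed_min, observed_max):
    """Predict the final exam score from y-hat = -173.51 + 4.83 x.

    Refuses to extrapolate outside the observed range of x values.
    """
    if not observed_min <= third_exam <= observed_max:
        raise ValueError("x is outside the observed domain; prediction is unreliable")
    return -173.51 + 4.83 * third_exam

# Hypothetical observed range of third exam scores (an assumption, not from the text).
OBSERVED_MIN, OBSERVED_MAX = 65, 75

print(round(predict_final_exam(70, OBSERVED_MIN, OBSERVED_MAX), 2))
try:
    predict_final_exam(90, OBSERVED_MIN, OBSERVED_MAX)
except ValueError as err:
    print("refused:", err)
```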

METHOD 2: Using a table of Critical Values to make a decision

The 95% Critical Values of the Sample Correlation Coefficient Table can be used to give you a good idea of whether the computed value of r is significant or not. Compare r to the appropriate critical value in the table. If r is not between the positive and negative critical values, then the correlation coefficient is significant. If r is significant, then you may want to use the line for prediction.
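The critical values of r are tied to the t-distribution: solving the test statistic formula \(t=\frac{r\sqrt{n-2}}{\sqrt{1-r^2}}\) for r gives \(r = t/\sqrt{t^2 + df}\). The sketch below applies that relationship. The two-tailed 5% critical t values in the dictionary are standard t-table entries supplied here as an assumption (they do not appear in this section), and the function name `critical_r` is illustrative.

```python
import math

# Two-tailed critical t values for alpha = 0.05, keyed by degrees of freedom.
# These are standard t-table entries, included here as an assumption.
T_CRITICAL_05 = {4: 2.776, 8: 2.306, 9: 2.262, 12: 2.179}

def critical_r(df, t_critical):
    """Critical value of r implied by a critical t value with df = n - 2."""
    return t_critical / math.sqrt(t_critical ** 2 + df)

for df, t_crit in T_CRITICAL_05.items():
    print(df, round(critical_r(df, t_crit), 3))
# 4 0.811
# 8 0.632
# 9 0.602
# 12 0.532
```

These match the critical values quoted for df = 4, 8, 9, and 12 in the examples of this section, which is why the p-value method and the table method reach the same decision at α = 0.05.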

Example 12.7

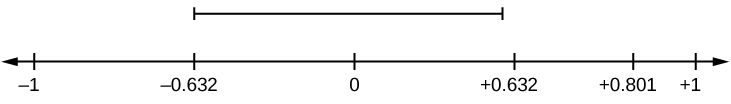

Suppose you computed r = 0.801 using n = 10 data points. df = n - 2 = 10 - 2 = 8. The critical values associated with df = 8 are -0.632 and + 0.632. If r < negative critical value or r > positive critical value, then r is significant. Since r = 0.801 and 0.801 > 0.632, r is significant and the line may be used for prediction. If you view this example on a number line, it will help you.

Try It 12.7

For a given line of best fit, you computed that r = 0.6501 using n = 12 data points and the critical value is 0.576. Can the line be used for prediction? Why or why not?

Example 12.8

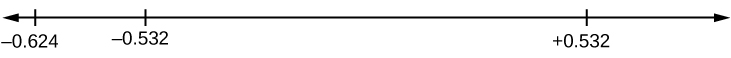

Suppose you computed r = –0.624 with 14 data points. df = 14 – 2 = 12. The critical values are –0.532 and 0.532. Since –0.624 < –0.532, r is significant and the line can be used for prediction.

Try It 12.8

For a given line of best fit, you compute that r = 0.5204 using n = 9 data points, and the critical value is 0.666. Can the line be used for prediction? Why or why not?

Example 12.9

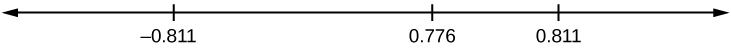

Suppose you computed r = 0.776 and n = 6. df = 6 – 2 = 4. The critical values are –0.811 and 0.811. Since –0.811 < 0.776 < 0.811, r is not significant, and the line should not be used for prediction.

Try It 12.9

For a given line of best fit, you compute that r = –0.7204 using n = 8 data points, and the critical value is 0.707. Can the line be used for prediction? Why or why not?

THIRD-EXAM vs FINAL-EXAM EXAMPLE: critical value method

Consider the third exam/final exam example . The line of best fit is: ŷ = –173.51+4.83 x with r = 0.6631 and there are n = 11 data points. Can the regression line be used for prediction? Given a third-exam score ( x value), can we use the line to predict the final exam score (predicted y value)?

- Use the "95% Critical Value" table for r with df = n – 2 = 11 – 2 = 9.

- The critical values are –0.602 and +0.602

- Since 0.6631 > 0.602, r is significant.

- Conclusion: There is sufficient evidence to conclude that there is a significant linear relationship between the third exam score (x) and the final exam score (y) because the correlation coefficient is significantly different from zero.

Example 12.10

Suppose you computed the following correlation coefficients. Using the table at the end of the chapter, determine if r is significant and the line of best fit associated with each r can be used to predict a y value. If it helps, draw a number line.

- r = –0.567 and the sample size, n , is 19. The df = n – 2 = 17. The critical value is –0.456. –0.567 < –0.456 so r is significant.

- r = 0.708 and the sample size, n , is nine. The df = n – 2 = 7. The critical value is 0.666. 0.708 > 0.666 so r is significant.

- r = 0.134 and the sample size, n , is 14. The df = 14 – 2 = 12. The critical value is 0.532. 0.134 is between –0.532 and 0.532 so r is not significant.

- r = 0 and the sample size, n , is five. No matter what the dfs are, r = 0 is between the two critical values so r is not significant.
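The comparisons in this example all follow one rule: r is significant when it falls outside the interval between the negative and positive critical values. A minimal sketch, with the hypothetical helper name `is_significant`, applied to the four cases above. The critical value 0.878 used for the last case (df = 3) is a standard table entry assumed here, since the example does not state it.

```python
def is_significant(r, critical_value):
    """True when r lies outside the interval (-critical, +critical)."""
    return abs(r) > abs(critical_value)

cases = [
    (-0.567, 0.456),  # n = 19, df = 17
    (0.708, 0.666),   # n = 9,  df = 7
    (0.134, 0.532),   # n = 14, df = 12
    (0.0, 0.878),     # n = 5,  df = 3 (critical value assumed from a standard table)
]
for r, critical in cases:
    verdict = "significant" if is_significant(r, critical) else "not significant"
    print(f"r = {r}, critical value = {critical}: {verdict}")
```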

Try It 12.10

For a given line of best fit, you compute that r = 0 using n = 100 data points. Can the line be used for prediction? Why or why not?

Assumptions in Testing the Significance of the Correlation Coefficient

Testing the significance of the correlation coefficient requires that certain assumptions about the data are satisfied. The premise of this test is that the data are a sample of observed points taken from a larger population. We have not examined the entire population because it is not possible or feasible to do so. We are examining the sample to draw a conclusion about whether the linear relationship that we see between x and y in the sample data provides strong enough evidence so that we can conclude that there is a linear relationship between x and y in the population.

The regression line equation that we calculate from the sample data gives the best-fit line for our particular sample. We want to use this best-fit line for the sample as an estimate of the best-fit line for the population. Examining the scatterplot and testing the significance of the correlation coefficient helps us determine if it is appropriate to do this.

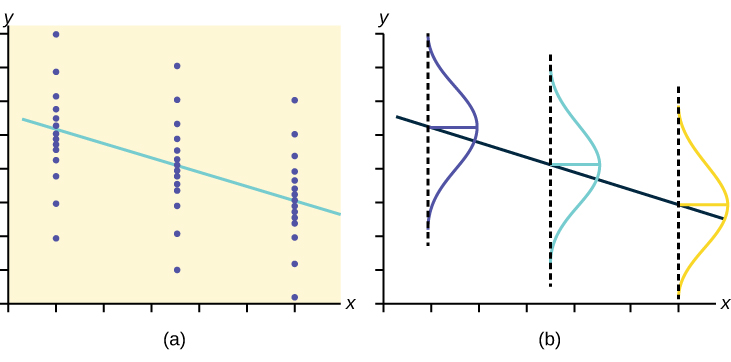

1. There is a linear relationship in the population that models the average value of y for varying values of x. In other words, the expected value of y for each particular x value lies on a straight line in the population. (We do not know the equation for the line for the population. Our regression line from the sample is our best estimate of this line in the population.)

2. The y values for any particular x value are normally distributed about the line. This implies that there are more y values scattered closer to the line than are scattered farther away. Assumption (1) implies that these normal distributions are centered on the line: the means of these normal distributions of y values lie on the line.

3. The standard deviations of the population y values about the line are equal for each value of x. In other words, each of these normal distributions of y values has the same shape and spread about the line.

4. The residual errors are mutually independent (no pattern).

5. The data are produced from a well-designed, random sample or randomized experiment.
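Several of these assumptions are usually checked informally by looking at the residuals, the vertical distances between each observed y and the fitted line. The sketch below fits a least-squares line and lists the residuals so they can be inspected for patterns or changing spread. The function names and the data are hypothetical, and this is an informal aid rather than a formal test of the assumptions.

```python
def fit_line(xs, ys):
    """Least-squares slope and intercept for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def residuals(xs, ys):
    """Observed y minus fitted y for every data point."""
    slope, intercept = fit_line(xs, ys)
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]

# Hypothetical illustration data (not from the text).
xs = [1, 2, 3, 4, 5, 6]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 11.9]
for x, e in zip(xs, residuals(xs, ys)):
    print(x, round(e, 3))
```

Residuals that fan out as x grows would cast doubt on the equal-spread assumption, and a curved pattern would cast doubt on linearity.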

This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/introductory-statistics/pages/1-introduction

- Authors: Barbara Illowsky, Susan Dean

- Publisher/website: OpenStax

- Book title: Introductory Statistics

- Publication date: Sep 19, 2013

- Location: Houston, Texas

- Book URL: https://openstax.org/books/introductory-statistics/pages/1-introduction

- Section URL: https://openstax.org/books/introductory-statistics/pages/12-4-testing-the-significance-of-the-correlation-coefficient

© Jun 23, 2022 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.

Module 12: Linear Regression and Correlation

Hypothesis Test for Correlation

Learning Outcomes

- Conduct a linear regression t-test using p-values and critical values and interpret the conclusion in context

Performing the Hypothesis Test

- Null Hypothesis: H 0 : ρ = 0

- Alternate Hypothesis: H a : ρ ≠ 0

What the Hypotheses Mean in Words

- Null Hypothesis H 0 : The population correlation coefficient IS NOT significantly different from zero. There IS NOT a significant linear relationship (correlation) between x and y in the population.

- Alternate Hypothesis H a : The population correlation coefficient IS significantly DIFFERENT FROM zero. There IS A SIGNIFICANT LINEAR RELATIONSHIP (correlation) between x and y in the population.

Drawing a Conclusion

There are two methods of making the decision. The two methods are equivalent and give the same result.

- Method 1: Using the p -value

- Method 2: Using a table of critical values

In this chapter of this textbook, we will always use a significance level of 5%, α = 0.05.

Using the p -value method, you could choose any appropriate significance level you want; you are not limited to using α = 0.05. But the table of critical values provided in this textbook assumes that we are using a significance level of 5%, α = 0.05. (If we wanted to use a different significance level than 5% with the critical value method, we would need different tables of critical values that are not provided in this textbook).

Method 1: Using a p -value to make a decision

Using the TI-83, 83+, 84, 84+ calculator.

To calculate the p-value using LinRegTTest:

- On the LinRegTTest input screen, on the line prompt for β or ρ, highlight “≠ 0”

- The output screen shows the p-value on the line that reads “p =”.

- (Most computer statistical software can calculate the p -value).

If the p -value is less than the significance level ( α = 0.05)

- Decision: Reject the null hypothesis.

- Conclusion: “There is sufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is significantly different from zero.”

If the p -value is NOT less than the significance level ( α = 0.05)

- Decision: DO NOT REJECT the null hypothesis.

- Conclusion: “There is insufficient evidence to conclude that there is a significant linear relationship between x and y because the correlation coefficient is NOT significantly different from zero.”
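The decision rule for the p-value method can be sketched in a few lines of Python. This is only an illustration: the function name `decide` and the wording of the returned strings are assumptions made here, not part of any calculator or software output.

```python
def decide(p_value, alpha=0.05):
    """Apply the p-value decision rule at significance level alpha."""
    # Reject only when the p-value is strictly less than alpha.
    if p_value < alpha:
        return "Reject the null hypothesis"
    return "Do not reject the null hypothesis"

print(decide(0.026))  # a p-value below 0.05
print(decide(0.30))   # a p-value that is not below 0.05
```

Because the rule is "less than," a p-value exactly equal to α leads to not rejecting the null hypothesis.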

Calculation Notes:

- You will use technology to calculate the p-value. The following describes the calculations used to compute the test statistic and the p-value:

- The p-value is calculated using a t-distribution with n – 2 degrees of freedom.

- The formula for the test statistic is [latex]\displaystyle{t}=\dfrac{{{r}\sqrt{{{n}-{2}}}}}{\sqrt{{{1}-{r}^{{2}}}}}[/latex]. The value of the test statistic, t, is shown in the computer or calculator output along with the p-value. The test statistic t has the same sign as the correlation coefficient r.

- The p-value is the combined area in both tails.

Recall: ORDER OF OPERATIONS

1st find the numerator:

Step 1: Find [latex]n-2[/latex], and then take the square root.

Step 2: Multiply the value in Step 1 by [latex]r[/latex].

2nd find the denominator:

Step 3: Find the square of [latex]r[/latex], which is [latex]r[/latex] multiplied by [latex]r[/latex].

Step 4: Subtract this value from 1, [latex]1 -r^2[/latex].

Step 5: Find the square root of Step 4.

3rd take the numerator and divide by the denominator.

An alternative way to calculate the p -value (p) given by LinRegTTest is the command 2*tcdf(abs(t),10^99, n-2) in 2nd DISTR.
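For readers without a calculator, the same two-tailed area can be approximated in plain Python. The sketch below is an assumption-laden illustration, not the calculator's algorithm: it integrates the t-distribution density numerically with Simpson's rule, and the function names are hypothetical. The example values r = 0.6631 and n = 11 come from the third-exam/final-exam example.

```python
import math

def t_density(x, df):
    """Probability density of the t-distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    c = math.exp(log_c) / math.sqrt(df * math.pi)
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def two_tailed_p_value(t, df, steps=2000):
    """Approximate the combined area in both tails beyond |t|."""
    upper = abs(t)
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_density(0, df) + t_density(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_density(i * h, df)
    central_half = total * h / 3  # area between 0 and |t|
    # The density is symmetric, so the two tails hold 1 - 2 * central_half.
    return 1 - 2 * central_half

r, n = 0.6631, 11
t = r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)
print(round(two_tailed_p_value(t, n - 2), 3))  # close to 0.026
```

This mirrors the calculator command: twice the upper-tail area is the same quantity as one minus the area between –|t| and |t|.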

THIRD-EXAM vs FINAL-EXAM EXAMPLE: p-value method

- Consider the third exam/final exam example (example 2).

- The line of best fit is: [latex]\hat{y}[/latex] = -173.51 + 4.83 x with r = 0.6631 and there are n = 11 data points.

- Can the regression line be used for prediction? Given a third exam score ( x value), can we use the line to predict the final exam score (predicted y value)?

- H 0 : ρ = 0

- H a : ρ ≠ 0

- The p -value is 0.026 (from LinRegTTest on your calculator or from computer software).

- The p -value, 0.026, is less than the significance level of α = 0.05.

- Decision: Reject the Null Hypothesis H 0

- Conclusion: There is sufficient evidence to conclude that there is a significant linear relationship between the third exam score ( x ) and the final exam score ( y ) because the correlation coefficient is significantly different from zero.

Because r is significant and the scatter plot shows a linear trend, the regression line can be used to predict final exam scores.

Method 2: Using a table of Critical Values to make a decision

The 95% Critical Values of the Sample Correlation Coefficient Table can be used to give you a good idea of whether the computed value of r is significant or not. Compare r to the appropriate critical value in the table. If r is not between the positive and negative critical values, then the correlation coefficient is significant. If r is significant, then you may want to use the line for prediction.

Suppose you computed r = 0.801 using n = 10 data points. df = n – 2 = 10 – 2 = 8. The critical values associated with df = 8 are -0.632 and + 0.632. If r < negative critical value or r > positive critical value, then r is significant. Since r = 0.801 and 0.801 > 0.632, r is significant and the line may be used for prediction. If you view this example on a number line, it will help you.

r is not significant between -0.632 and +0.632. r = 0.801 > +0.632. Therefore, r is significant.

For a given line of best fit, you computed that r = 0.6501 using n = 12 data points and the critical value is 0.576. Can the line be used for prediction? Why or why not?

If the scatter plot looks linear then, yes, the line can be used for prediction, because r > the positive critical value.

Suppose you computed r = –0.624 with 14 data points. df = 14 – 2 = 12. The critical values are –0.532 and 0.532. Since –0.624 < –0.532, r is significant and the line can be used for prediction.

r = –0.624 < –0.532. Therefore, r is significant.

For a given line of best fit, you compute that r = 0.5204 using n = 9 data points, and the critical value is 0.666. Can the line be used for prediction? Why or why not?

No, the line cannot be used for prediction, because r < the positive critical value.

Suppose you computed r = 0.776 and n = 6. df = 6 – 2 = 4. The critical values are –0.811 and 0.811. Since –0.811 < 0.776 < 0.811, r is not significant, and the line should not be used for prediction.

–0.811 < r = 0.776 < 0.811. Therefore, r is not significant.

For a given line of best fit, you compute that r = –0.7204 using n = 8 data points, and the critical value is 0.707. Can the line be used for prediction? Why or why not?

Yes, the line can be used for prediction, because r < the negative critical value.
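The comparison used in these examples can be written as a short Python check. This is a sketch for illustration only; the function name `is_significant` is an assumption, and the critical values must still be looked up in the table for the appropriate df.

```python
def is_significant(r, critical_value):
    """Return True when r falls outside the interval between the
    negative and positive critical values."""
    return r < -critical_value or r > critical_value

# (r, positive critical value) pairs taken from the examples above.
examples = [(0.801, 0.632), (-0.624, 0.532), (0.776, 0.811), (-0.7204, 0.707)]
for r, critical in examples:
    verdict = "significant" if is_significant(r, critical) else "not significant"
    print(f"r = {r}, critical value = {critical}: {verdict}")
```

Only the third pair (r = 0.776 with critical value 0.811) comes out as not significant, which matches the worked examples.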

THIRD-EXAM vs FINAL-EXAM EXAMPLE: critical value method

Consider the third exam/final exam example again. The line of best fit is: [latex]\hat{y}[/latex] = –173.51+4.83 x with r = 0.6631 and there are n = 11 data points. Can the regression line be used for prediction? Given a third-exam score ( x value), can we use the line to predict the final exam score (predicted y value)?

- Use the “95% Critical Value” table for r with df = n – 2 = 11 – 2 = 9.

- The critical values are –0.602 and +0.602

- Since 0.6631 > 0.602, r is significant.
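The two methods agree because the critical values of r can be obtained by solving the test statistic formula for r: if t = r√(n – 2)/√(1 – r²), then r = t/√(t² + df) with df = n – 2. The Python sketch below applies this to two-tailed 5% critical values of t. The t values 2.262 (df = 9) and 2.306 (df = 8) are taken from a standard t table and are not given in this section; the function name is a hypothetical one chosen here.

```python
import math

def r_critical(t_critical, df):
    """Convert a critical value of t into the matching critical value of r."""
    return t_critical / math.sqrt(t_critical ** 2 + df)

# Two-tailed 5% critical t values from a standard t table (assumed inputs).
print(round(r_critical(2.262, 9), 3))  # df = 9, as in the exam example
print(round(r_critical(2.306, 8), 3))  # df = 8, as in the n = 10 example
```

The results round to 0.602 and 0.632, the same critical values of r quoted in the text for df = 9 and df = 8.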

Suppose you computed the following correlation coefficients. Using the table at the end of the chapter, determine if r is significant and the line of best fit associated with each r can be used to predict a y value. If it helps, draw a number line.

- r = –0.567 and the sample size, n , is 19. The df = n – 2 = 17. The critical value is –0.456. –0.567 < –0.456 so r is significant.

- r = 0.708 and the sample size, n , is nine. The df = n – 2 = 7. The critical value is 0.666. 0.708 > 0.666 so r is significant.

- r = 0.134 and the sample size, n , is 14. The df = 14 – 2 = 12. The critical value is 0.532. 0.134 is between –0.532 and 0.532 so r is not significant.

- r = 0 and the sample size, n , is five. No matter what the dfs are, r = 0 is between the two critical values so r is not significant.

For a given line of best fit, you compute that r = 0 using n = 100 data points. Can the line be used for prediction? Why or why not?

No, the line cannot be used for prediction no matter what the sample size is.

Assumptions in Testing the Significance of the Correlation Coefficient

Testing the significance of the correlation coefficient requires that certain assumptions about the data are satisfied. The premise of this test is that the data are a sample of observed points taken from a larger population. We have not examined the entire population because it is not possible or feasible to do so. We are examining the sample to draw a conclusion about whether the linear relationship that we see between x and y in the sample data provides strong enough evidence so that we can conclude that there is a linear relationship between x and y in the population.

The regression line equation that we calculate from the sample data gives the best-fit line for our particular sample. We want to use this best-fit line for the sample as an estimate of the best-fit line for the population. Examining the scatterplot and testing the significance of the correlation coefficient helps us determine if it is appropriate to do this.

The assumptions underlying the test of significance are:

- There is a linear relationship in the population that models the average value of y for varying values of x . In other words, the expected value of y for each particular value lies on a straight line in the population. (We do not know the equation for the line for the population. Our regression line from the sample is our best estimate of this line in the population).

- The y values for any particular x value are normally distributed about the line. This implies that there are more y values scattered closer to the line than are scattered farther away. The first assumption implies that these normal distributions are centered on the line: the means of these normal distributions of y values lie on the line.

- The standard deviations of the population y values about the line are equal for each value of x . In other words, each of these normal distributions of y values has the same shape and spread about the line.

- The residual errors are mutually independent (no pattern).

- The data are produced from a well-designed, random sample or randomized experiment.

The y values for each x value are normally distributed about the line with the same standard deviation. For each x value, the mean of the y values lies on the regression line. More y values lie near the line than are scattered further away from the line.
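To see what these assumptions describe, it can help to simulate data that satisfies them. The Python sketch below is purely illustrative: the "population" line reuses the sample line from the exam example, and the standard deviation of 10 and the chosen x values are made-up assumptions, not values from the text.

```python
import random

# Hypothetical population parameters (assumptions for illustration only).
INTERCEPT = -173.51
SLOPE = 4.83
SIGMA = 10.0  # the same standard deviation at every x (equal spread)

def simulate_y_values(x_values, per_x, seed=0):
    """Draw independent, normally distributed y values around the line."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return {
        x: [INTERCEPT + SLOPE * x + rng.gauss(0, SIGMA) for _ in range(per_x)]
        for x in x_values
    }

samples = simulate_y_values([65, 70, 75], per_x=2000)
for x, ys in samples.items():
    mean_y = sum(ys) / len(ys)
    on_line = INTERCEPT + SLOPE * x
    print(f"x = {x}: mean of y = {mean_y:.1f}, value on line = {on_line:.1f}")
```

For each x, the average of the simulated y values should land close to the line, and the spread around the line is the same at every x, which is what the assumptions require.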

- Provided by : Lumen Learning. License : CC BY: Attribution

- Testing the Significance of the Correlation Coefficient. Provided by : OpenStax. Located at : https://openstax.org/books/introductory-statistics/pages/12-4-testing-the-significance-of-the-correlation-coefficient . License : CC BY: Attribution . License Terms : Access for free at https://openstax.org/books/introductory-statistics/pages/1-introduction

- Introductory Statistics. Authored by : Barbara Illowsky, Susan Dean. Provided by : OpenStax. Located at : https://openstax.org/books/introductory-statistics/pages/1-introduction . License : CC BY: Attribution . License Terms : Access for free at https://openstax.org/books/introductory-statistics/pages/1-introduction


Correlation Hypothesis

Understanding the relationships between variables is pivotal in research. Correlation hypotheses address the degree of association between two or more variables. This guide presents an array of correlation hypothesis examples, followed by a step-by-step tutorial on writing these hypothesis statements effectively, along with tips for working with correlations.

What is Correlation Hypothesis?

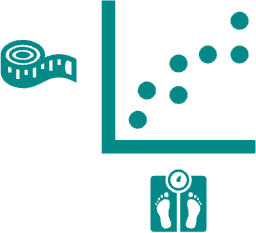

A correlation hypothesis is a statement that predicts a specific relationship between two or more variables based on the assumption that changes in one variable are associated with changes in another variable. It suggests that there is a correlation or statistical relationship between the variables, meaning that when one variable changes, the other variable is likely to change in a consistent manner.

What is an example of a Correlation Hypothesis Statement?

Example: “If the amount of exercise increases, then the level of physical fitness will also increase.”

In this example, the correlation hypothesis suggests that there is a positive correlation between the amount of exercise a person engages in and their level of physical fitness. As exercise increases, the hypothesis predicts that physical fitness will increase as well. This hypothesis can be tested by collecting data on exercise levels and physical fitness levels and analyzing the relationship between the two variables using statistical methods.
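As a rough sketch of what "analyzing the relationship" could look like, the Python code below computes the sample correlation coefficient r for a small data set. The exercise hours and fitness scores are invented purely for illustration, and the function name `pearson_r` is an assumption chosen here.

```python
import math

def pearson_r(xs, ys):
    """Sample correlation coefficient r for paired data."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations, and sums of squared deviations.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Made-up data: weekly exercise hours and a fitness score for six people.
exercise_hours = [1, 2, 3, 4, 5, 6]
fitness_score = [52, 55, 61, 60, 68, 71]
r = pearson_r(exercise_hours, fitness_score)
print(f"r = {r:.3f}")
```

A value of r near +1 would be consistent with the hypothesized positive correlation; whether it is statistically significant still depends on the sample size.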

100 Correlation Hypothesis Statement Examples


Discover the intriguing world of correlation through a collection of examples that illustrate how variables can be linked in research. Explore diverse scenarios where changes in one variable may correspond to changes in another, forming the basis of correlation hypotheses. These real-world instances shed light on the essence of correlation analysis and its role in uncovering connections between different aspects of data.

- Study Hours and Exam Scores : If students study more hours per week, then their exam scores will show a positive correlation, indicating that increased study time might lead to better performance.

- Income and Education : If the level of education increases, then income levels will also rise, demonstrating a positive correlation between education attainment and earning potential.

- Social Media Usage and Well-being : If individuals spend more time on social media platforms, then their self-reported well-being might exhibit a negative correlation, suggesting that excessive use could impact mental health.

- Temperature and Ice Cream Sales : If temperatures rise, then the sales of ice cream might increase, displaying a positive correlation due to the weather’s influence on consumer behavior.

- Physical Activity and Heart Rate : If the intensity of physical activity rises, then heart rate might increase, signifying a positive correlation between exercise intensity and heart rate.

- Age and Reaction Time : If age increases, then reaction time might show a positive correlation, indicating that as people age, their reaction times might slow down.

- Smoking and Lung Capacity : If the number of cigarettes smoked daily increases, then lung capacity might decrease, suggesting a negative correlation between smoking and respiratory health.

- Stress and Sleep Quality : If stress levels elevate, then sleep quality might decline, reflecting a negative correlation between psychological stress and restorative sleep.

- Rainfall and Crop Yield : If the amount of rainfall decreases, then crop yield might also decrease, illustrating a positive correlation between precipitation and agricultural productivity, because the two variables move in the same direction.

- Screen Time and Academic Performance : If screen time usage increases among students, then academic performance might show a negative correlation, suggesting that excessive screen time could be detrimental to studies.

- Exercise and Body Weight : If individuals engage in regular exercise, then their body weight might exhibit a negative correlation, implying that physical activity can contribute to weight management.

- Income and Crime Rates : If income levels decrease in a neighborhood, then crime rates might rise, showing a negative correlation between income and crime and indicating a potential link between socio-economic factors and crime.

- Social Support and Mental Health : If the level of social support increases, then individuals’ mental health scores may exhibit a positive correlation, highlighting the potential positive impact of strong social networks on psychological well-being.

- Study Time and GPA : If students spend more time studying, then their Grade Point Average (GPA) might display a positive correlation, suggesting that increased study efforts may lead to higher academic achievement.

- Parental Involvement and Academic Success : If parents are more involved in their child’s education, then the child’s academic success may show a positive correlation, emphasizing the role of parental support in shaping student outcomes.

- Alcohol Consumption and Reaction Time : If alcohol consumption increases, then reaction time might slow down, indicating a negative correlation between alcohol intake and cognitive performance.

- Social Media Engagement and Loneliness : If time spent on social media platforms increases, then feelings of loneliness might show a positive correlation, suggesting a potential connection between excessive online interaction and emotional well-being.

- Temperature and Insect Activity : If temperatures rise, then the activity of certain insects might increase, demonstrating a potential positive correlation between temperature and insect behavior.

- Education Level and Voting Participation : If education levels rise, then voter participation rates may also increase, showcasing a positive correlation between education and civic engagement.

- Work Commute Time and Job Satisfaction : If work commute time decreases, then job satisfaction might increase, showing a negative correlation between commute time and job satisfaction and indicating that shorter commutes could contribute to higher job satisfaction.

- Sleep Duration and Cognitive Performance : If sleep duration increases, then cognitive performance scores might also rise, suggesting a potential positive correlation between adequate sleep and cognitive functioning.

- Healthcare Access and Mortality Rate : If access to healthcare services improves, then the mortality rate might decrease, highlighting a potential negative correlation between healthcare accessibility and mortality.

- Exercise and Blood Pressure : If individuals engage in regular exercise, then their blood pressure levels might exhibit a negative correlation, indicating that physical activity can contribute to maintaining healthy blood pressure.

- Social Media Use and Academic Distraction : If students spend more time on social media during study sessions, then their academic focus might show a negative correlation, suggesting that excessive online engagement can hinder concentration.

- Age and Technological Adaptation : If age increases, then the speed of adapting to new technologies might exhibit a negative correlation, suggesting that younger individuals tend to adapt more quickly.

- Temperature and Plant Growth : If temperatures rise, then the rate of plant growth might increase, indicating a potential positive correlation between temperature and biological processes.

- Music Exposure and Mood : If individuals listen to upbeat music, then their reported mood might show a positive correlation, suggesting that music can influence emotional states.

- Income and Healthcare Utilization : If income levels increase, then the frequency of healthcare utilization might decrease, suggesting a potential negative correlation between income and healthcare needs.

- Distance and Communication Frequency : If physical distance between individuals increases, then their communication frequency might show a negative correlation, indicating that proximity tends to facilitate communication.

- Study Group Attendance and Exam Scores : If students regularly attend study groups, then their exam scores might exhibit a positive correlation, suggesting that collaborative study efforts could enhance performance.

- Temperature and Disease Transmission : If temperatures rise, then the transmission of certain diseases might increase, pointing to a potential positive correlation between temperature and disease spread.

- Interest Rates and Consumer Spending : If interest rates decrease, then consumer spending might increase, showing a negative correlation between interest rates and spending and suggesting that lower interest rates encourage increased economic activity.

- Digital Device Use and Eye Strain : If individuals spend more time on digital devices, then the occurrence of eye strain might show a positive correlation, suggesting that prolonged screen time can impact eye health.

- Parental Education and Children’s Educational Attainment : If parents have higher levels of education, then their children’s educational attainment might display a positive correlation, highlighting the intergenerational impact of education.

- Social Interaction and Happiness : If individuals engage in frequent social interactions, then their reported happiness levels might show a positive correlation, indicating that social connections contribute to well-being.

- Temperature and Energy Consumption : If temperatures decrease, then energy consumption for heating might increase, suggesting a potential negative correlation between temperature and heating energy usage.

- Physical Activity and Stress Reduction : If individuals engage in regular physical activity, then their reported stress levels might display a negative correlation, indicating that exercise can help alleviate stress.

- Diet Quality and Chronic Diseases : If diet quality improves, then the prevalence of chronic diseases might decrease, suggesting a potential negative correlation between healthy eating habits and disease risk.

- Social Media Use and Body Image Dissatisfaction : If time spent on social media increases, then feelings of body image dissatisfaction might show a positive correlation, suggesting that online platforms can influence self-perception.

- Income and Access to Quality Education : If household income increases, then access to quality education for children might improve, suggesting a potential positive correlation between financial resources and educational opportunities.

- Workplace Diversity and Innovation : If workplace diversity increases, then the rate of innovation might show a positive correlation, indicating that diverse teams often generate more creative solutions.

- Physical Activity and Bone Density : If individuals engage in weight-bearing exercises, then their bone density might exhibit a positive correlation, suggesting that exercise contributes to bone health.

- Screen Time and Attention Span : If screen time increases, then attention span might show a negative correlation, indicating that excessive screen exposure can impact sustained focus.

- Social Support and Resilience : If individuals have strong social support networks, then their resilience levels might display a positive correlation, suggesting that social connections contribute to coping abilities.

- Weather Conditions and Mood : If sunny weather persists, then individuals’ reported mood might exhibit a positive correlation, reflecting the potential impact of weather on emotional states.

- Nutrition Education and Healthy Eating : If individuals receive nutrition education, then their consumption of fruits and vegetables might show a positive correlation, suggesting that knowledge influences dietary choices.

- Physical Activity and Cognitive Aging : If adults engage in regular physical activity, then their cognitive decline with aging might show a slower rate, indicating a potential negative correlation between exercise and cognitive aging.

- Air Quality and Respiratory Illnesses : If air quality deteriorates, then the incidence of respiratory illnesses might increase, suggesting a potential positive correlation between air pollutants and health impacts.

- Reading Habits and Vocabulary Growth : If individuals read regularly, then their vocabulary size might exhibit a positive correlation, suggesting that reading contributes to language development.

- Sleep Quality and Stress Levels : If sleep quality improves, then reported stress levels might display a negative correlation, indicating that sleep can impact psychological well-being.

- Social Media Engagement and Academic Performance : If students spend more time on social media, then their academic performance might exhibit a negative correlation, suggesting that excessive online engagement can impact studies.

- Exercise and Blood Sugar Levels : If individuals engage in regular exercise, then their blood sugar levels might display a negative correlation, indicating that physical activity can influence glucose regulation.

- Screen Time and Sleep Duration : If screen time before bedtime increases, then sleep duration might show a negative correlation, suggesting that screen exposure can affect sleep patterns.

- Environmental Pollution and Health Outcomes : If exposure to environmental pollutants increases, then the occurrence of health issues might show a positive correlation, suggesting that pollution can impact well-being.

- Time Management and Academic Achievement : If students improve time management skills, then their academic achievement might exhibit a positive correlation, indicating that effective planning contributes to success.

- Physical Fitness and Heart Health : If individuals improve their physical fitness, then their heart health indicators might display a positive correlation, indicating that exercise benefits cardiovascular well-being.

- Weather Conditions and Outdoor Activities : If weather is sunny, then outdoor activities might show a positive correlation, suggesting that favorable weather encourages outdoor engagement.

- Media Exposure and Body Image Perception : If exposure to media images increases, then body image dissatisfaction might show a positive correlation, indicating media’s potential influence on self-perception.

- Community Engagement and Civic Participation : If individuals engage in community activities, then their civic participation might exhibit a positive correlation, indicating an active citizenry.

- Social Media Use and Productivity : If individuals spend more time on social media, then their productivity levels might exhibit a negative correlation, suggesting that online distractions can affect work efficiency.

- Income and Stress Levels : If income levels increase, then reported stress levels might exhibit a negative correlation, suggesting that financial stability can impact psychological well-being.

- Social Media Use and Interpersonal Skills : If individuals spend more time on social media, then their interpersonal skills might show a negative correlation, indicating potential effects on face-to-face interactions.

- Parental Involvement and Academic Motivation : If parents are more involved in their child’s education, then the child’s academic motivation may exhibit a positive correlation, highlighting the role of parental support.

- Technology Use and Sleep Quality : If screen time increases before bedtime, then sleep quality might show a negative correlation, suggesting that technology use can impact sleep.

- Outdoor Activity and Mood Enhancement : If individuals engage in outdoor activities, then their reported mood might display a positive correlation, suggesting the potential emotional benefits of nature exposure.

- Income Inequality and Social Mobility : If income inequality increases, then social mobility might exhibit a negative correlation, suggesting that higher inequality can hinder upward mobility.

- Vegetable Consumption and Heart Health : If individuals increase their vegetable consumption, then heart health indicators might show a positive correlation, indicating the potential benefits of a nutritious diet.

- Online Learning and Academic Achievement : If students engage in online learning, then their academic achievement might display a positive correlation, highlighting the effectiveness of digital education.

- Emotional Intelligence and Workplace Performance : If emotional intelligence improves, then workplace performance might exhibit a positive correlation, indicating the relevance of emotional skills.

- Community Engagement and Mental Well-being : If individuals engage in community activities, then their reported mental well-being might show a positive correlation, emphasizing social connections’ impact.

- Rainfall and Agriculture Productivity : If rainfall levels increase, then agricultural productivity might exhibit a positive correlation, indicating the importance of water for crops.

- Social Media Use and Body Posture : If screen time increases, then poor body posture might show a positive correlation, suggesting that screen use can influence physical habits.

- Marital Satisfaction and Relationship Length : If relationship length increases, then marital satisfaction might decrease, showing a negative correlation and indicating potential challenges over time.

- Exercise and Anxiety Levels : If individuals engage in regular exercise, then reported anxiety levels might exhibit a negative correlation, indicating the potential benefits of physical activity on mental health.

- Music Listening and Concentration : If individuals listen to instrumental music, then their concentration levels might display a positive correlation, suggesting music’s impact on focus.

- Internet Usage and Attention Deficits : If screen time increases, then attention deficits might show a positive correlation, implying that excessive internet use can affect concentration.

- Financial Literacy and Debt Levels : If financial literacy improves, then personal debt levels might exhibit a negative correlation, suggesting better financial decision-making.

- Time Spent Outdoors and Vitamin D Levels : If time spent outdoors increases, then vitamin D levels might show a positive correlation, indicating sun exposure’s role in vitamin synthesis.

- Family Meal Frequency and Nutrition : If families eat meals together frequently, then nutrition quality might display a positive correlation, emphasizing family dining’s impact on health.

- Temperature and Allergy Symptoms : If temperatures rise, then allergy symptoms might increase, suggesting a potential positive correlation between temperature and allergen exposure.

- Social Media Use and Academic Distraction : If students spend more time on social media, then their academic focus might exhibit a negative correlation, indicating that online engagement can hinder studies.

- Financial Stress and Health Outcomes : If financial stress increases, then the occurrence of health issues might show a positive correlation, suggesting potential health impacts of economic strain.

- Study Hours and Test Anxiety : If students study more hours, then test anxiety might show a negative correlation, suggesting that increased preparation can reduce anxiety.

- Music Tempo and Exercise Intensity : If music tempo increases, then exercise intensity might display a positive correlation, indicating music’s potential to influence workout vigor.

- Green Space Accessibility and Stress Reduction : If access to green spaces improves, then reported stress levels might exhibit a negative correlation, highlighting nature’s stress-reducing effects.

- Parenting Style and Child Behavior : If authoritative parenting increases, then positive child behaviors might display a positive correlation, suggesting parenting’s influence on behavior.

- Sleep Quality and Productivity : If sleep quality improves, then work productivity might show a positive correlation, emphasizing the connection between rest and efficiency.

- Media Consumption and Political Beliefs : If media consumption increases, then alignment with specific political beliefs might exhibit a positive correlation, suggesting media’s influence on ideology.

- Workplace Satisfaction and Employee Retention : If workplace satisfaction increases, then employee retention rates might show a positive correlation, indicating the link between job satisfaction and tenure.

- Digital Device Use and Eye Discomfort : If screen time increases, then reported eye discomfort might show a positive correlation, indicating potential impacts of screen exposure.

- Age and Adaptability to Technology : If age increases, then adaptability to new technologies might exhibit a negative correlation, indicating generational differences in tech adoption.

- Physical Activity and Mental Health : If individuals engage in regular physical activity, then reported mental health scores might exhibit a positive correlation, showcasing exercise’s impact.

- Video Gaming and Attention Span : If time spent on video games increases, then attention span might display a negative correlation, indicating potential effects on focus.

- Social Media Use and Empathy Levels : If social media use increases, then reported empathy levels might show a negative correlation, suggesting possible effects on emotional understanding.

- Reading Habits and Creativity : If individuals read diverse genres, then their creative thinking might exhibit a positive correlation, emphasizing reading’s cognitive benefits.

- Weather Conditions and Outdoor Exercise : If weather is pleasant, then outdoor exercise might show a positive correlation, suggesting weather’s influence on physical activity.

- Parental Involvement and Bullying Prevention : If parents are actively involved, then instances of bullying might exhibit a negative correlation, emphasizing parental impact on behavior.

- Digital Device Use and Sleep Disruption : If screen time before bedtime increases, then sleep disruption might show a positive correlation, indicating technology’s influence on sleep.

- Friendship Quality and Psychological Well-being : If friendship quality increases, then reported psychological well-being might show a positive correlation, highlighting social support’s impact.

- Income and Environmental Consciousness : If income levels increase, then environmental consciousness might also rise, indicating potential links between affluence and sustainability awareness.

Correlational Hypothesis Interpretation Statement Examples

Explore the art of interpreting correlation hypotheses with these illustrative examples. Understand the implications of positive, negative, and zero correlations, and learn how to deduce meaningful insights from data relationships.

- Relationship Between Exercise and Mood : A positive correlation between exercise frequency and mood scores suggests that increased physical activity might contribute to enhanced emotional well-being.

- Association Between Screen Time and Sleep Quality : A negative correlation between screen time before bedtime and sleep quality indicates that higher screen exposure could lead to poorer sleep outcomes.

- Connection Between Study Hours and Exam Performance : A positive correlation between study hours and exam scores implies that increased study time might correspond to better academic results.

- Link Between Stress Levels and Meditation Practice : A negative correlation between stress levels and meditation frequency suggests that engaging in meditation could be associated with lower perceived stress.

- Relationship Between Social Media Use and Loneliness : A positive correlation between social media engagement and feelings of loneliness implies that excessive online interaction might contribute to increased loneliness.

- Association Between Income and Happiness : A positive correlation between income and self-reported happiness indicates that higher income levels might be linked to greater subjective well-being.

- Connection Between Parental Involvement and Academic Performance : A positive correlation between parental involvement and students’ grades suggests that active parental engagement might contribute to better academic outcomes.

- Link Between Time Management and Stress Levels : A negative correlation between effective time management and reported stress levels implies that better time management skills could lead to lower stress.

- Relationship Between Outdoor Activities and Vitamin D Levels : A positive correlation between time spent outdoors and vitamin D levels suggests that increased outdoor engagement might be associated with higher vitamin D concentrations.

- Association Between Water Consumption and Skin Hydration : A positive correlation between water intake and skin hydration indicates that higher fluid consumption might lead to improved skin moisture levels.

Alternative Correlational Hypothesis Statement Examples

Explore alternative scenarios and potential correlations in these examples. Learn to articulate different hypotheses that could explain data relationships beyond the conventional assumptions.

- Alternative to Exercise and Mood : An alternative hypothesis could suggest a non-linear relationship between exercise and mood, indicating that moderate exercise might have the most positive impact on emotional well-being.

- Alternative to Screen Time and Sleep Quality : An alternative hypothesis might propose that screen time has a curvilinear relationship with sleep quality, suggesting that moderate screen exposure leads to optimal sleep outcomes.

- Alternative to Study Hours and Exam Performance : An alternative hypothesis could propose that there’s an interaction effect between study hours and study method, influencing the relationship between study time and exam scores.

- Alternative to Stress Levels and Meditation Practice : An alternative hypothesis might consider that the relationship between stress levels and meditation practice is moderated by personality traits, resulting in varying effects.

- Alternative to Social Media Use and Loneliness : An alternative hypothesis could posit that the relationship between social media use and loneliness depends on the quality of online interactions and content consumption.

- Alternative to Income and Happiness : An alternative hypothesis might propose that the relationship between income and happiness differs based on cultural factors, leading to varying happiness levels at different income ranges.

- Alternative to Parental Involvement and Academic Performance : An alternative hypothesis could suggest that the relationship between parental involvement and academic performance varies based on students’ learning styles and preferences.

- Alternative to Time Management and Stress Levels : An alternative hypothesis might explore the possibility of a curvilinear relationship between time management and stress levels, indicating that extreme time management efforts might elevate stress.

- Alternative to Outdoor Activities and Vitamin D Levels : An alternative hypothesis could consider that the relationship between outdoor activities and vitamin D levels is moderated by sunscreen usage, influencing vitamin synthesis.

- Alternative to Water Consumption and Skin Hydration : An alternative hypothesis might propose that the relationship between water consumption and skin hydration is mediated by dietary factors, influencing fluid retention and skin health.
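
The curvilinear alternatives above matter because the Pearson coefficient measures only linear association. The sketch below uses made-up data (the `exercise_hours` and `mood_scores` values are hypothetical, not taken from any study) in which mood peaks at moderate exercise. The correlation over the whole range comes out at zero even though mood clearly depends on exercise, while each half of the range shows a strong correlation of opposite sign.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length numeric sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical data: weekly exercise hours and an invented mood score
# that peaks at 3 hours (an inverted U).
exercise_hours = [0, 1, 2, 3, 4, 5, 6]
mood_scores = [9 - (h - 3) ** 2 for h in exercise_hours]

r_all = pearson_r(exercise_hours, mood_scores)
r_low = pearson_r(exercise_hours[:4], mood_scores[:4])    # 0 to 3 hours
r_high = pearson_r(exercise_hours[3:], mood_scores[3:])   # 3 to 6 hours

print(f"full range: r = {r_all:.2f}")
print(f"0 to 3 hours: r = {r_low:.2f}")
print(f"3 to 6 hours: r = {r_high:.2f}")
```

A near-zero coefficient is therefore a reason to plot the data before concluding that two variables are unrelated.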

Correlational Hypothesis Pearson Interpretation Statement Examples

Discover how the Pearson correlation coefficient enhances your understanding of data relationships with these examples. Learn to interpret correlation strength and direction using this valuable statistical measure.

- Strong Positive Correlation : A Pearson correlation coefficient of +0.85 between study time and exam scores indicates a strong positive relationship, suggesting that increased study time is strongly associated with higher grades.

- Moderate Negative Correlation : A Pearson correlation coefficient of -0.45 between screen time and sleep quality reflects a moderate negative correlation, implying that higher screen exposure is moderately linked to poorer sleep outcomes.

- Weak Positive Correlation : A Pearson correlation coefficient of +0.25 between social media use and loneliness suggests a weak positive correlation, indicating that increased online engagement is weakly related to higher loneliness.

- Strong Negative Correlation : A Pearson correlation coefficient of -0.75 between stress levels and meditation practice indicates a strong negative relationship, implying that engaging in meditation is strongly associated with lower stress.

- Moderate Positive Correlation : A Pearson correlation coefficient of +0.60 between income and happiness signifies a moderate positive correlation, suggesting that higher income is moderately linked to greater happiness.

- Weak Negative Correlation : A Pearson correlation coefficient of -0.30 between parental involvement and academic performance represents a weak negative correlation, indicating that higher parental involvement is weakly associated with lower academic performance.

- Strong Negative Correlation : A Pearson correlation coefficient of -0.80 between time management and stress levels reveals a strong negative relationship, suggesting that effective time management is strongly linked to lower stress.

- Weak Negative Correlation : A Pearson correlation coefficient of -0.20 between outdoor activities and vitamin D levels signifies a weak negative correlation, implying that higher outdoor engagement is weakly related to lower vitamin D levels.

- Moderate Positive Correlation : A Pearson correlation coefficient of +0.50 between water consumption and skin hydration denotes a moderate positive correlation, suggesting that increased fluid intake is moderately linked to better skin hydration.

- Strong Negative Correlation : A Pearson correlation coefficient of -0.70 between screen time and attention span indicates a strong negative relationship, implying that higher screen exposure is strongly associated with shorter attention spans.
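
The interpretations above can be sketched in code. The example below computes a Pearson coefficient from paired data and attaches a strength and direction label. The cutoffs (0.4 for "moderate", 0.7 for "strong") are assumptions chosen to agree with the labels used in the examples above; conventions vary between fields. The `study_hours` and `exam_scores` values are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(var_x * var_y)


def describe(r, moderate=0.4, strong=0.7):
    """Label a coefficient by strength and direction (cutoffs are assumptions)."""
    if r == 0:
        return "no linear correlation"
    if abs(r) >= strong:
        strength = "strong"
    elif abs(r) >= moderate:
        strength = "moderate"
    else:
        strength = "weak"
    direction = "positive" if r > 0 else "negative"
    return f"{strength} {direction}"


# Hypothetical paired observations.
study_hours = [1, 2, 3, 4, 5]
exam_scores = [52, 60, 61, 70, 75]

r = pearson_r(study_hours, exam_scores)
print(f"r = {r:+.2f} ({describe(r)})")
```

Calling `describe(-0.45)` returns "moderate negative" and `describe(0.25)` returns "weak positive", matching the labels in the list.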

Correlational Hypothesis Statement Examples in Psychology

Explore how correlation hypotheses apply to psychological research with these examples. Understand how psychologists investigate relationships between variables to gain insights into human behavior.

- Sleep Patterns and Cognitive Performance : There is a positive correlation between consistent sleep patterns and cognitive performance, suggesting that individuals with regular sleep schedules exhibit better cognitive functioning.

- Anxiety Levels and Social Media Use : There is a positive correlation between anxiety levels and excessive social media use, indicating that individuals who spend more time on social media might experience higher anxiety.

- Self-Esteem and Body Image Satisfaction : There is a positive correlation between self-esteem and body image satisfaction, implying that individuals with higher self-esteem tend to be more satisfied with their physical appearance.

- Parenting Styles and Child Aggression : There is a negative correlation between authoritative parenting styles and child aggression, suggesting that children raised by authoritative parents might exhibit lower levels of aggression.

- Emotional Intelligence and Conflict Resolution : There is a positive correlation between emotional intelligence and effective conflict resolution, indicating that individuals with higher emotional intelligence tend to resolve conflicts more successfully.

- Personality Traits and Career Satisfaction : There is a positive correlation between certain personality traits (e.g., extraversion, openness) and career satisfaction, suggesting that individuals with specific traits experience higher job contentment.

- Stress Levels and Coping Mechanisms : There is a negative correlation between stress levels and adaptive coping mechanisms, indicating that individuals with lower stress levels are more likely to employ effective coping strategies.

- Attachment Styles and Romantic Relationship Quality : There is a positive correlation between secure attachment styles and higher romantic relationship quality, suggesting that individuals with secure attachments tend to have healthier relationships.

- Social Support and Mental Health : There is a negative correlation between perceived social support and mental health issues, indicating that individuals with strong social support networks tend to experience fewer mental health challenges.

- Motivation and Academic Achievement : There is a positive correlation between intrinsic motivation and academic achievement, implying that students who are internally motivated tend to perform better academically.

Does Correlational Research Have a Hypothesis?

Correlational research examines the relationship between two or more variables to determine whether they are related and how they change together. Although correlational studies do not establish causation, they still use hypotheses to state expectations about those relationships. These hypotheses predict the presence, direction, and strength of a correlation. The focus is on measuring the degree of association rather than establishing cause and effect.

How Do You Write a Null-Hypothesis for a Correlational Study?

The null hypothesis in a correlational study states that there is no significant correlation between the variables being studied. It assumes that any correlation observed in the sample is due to chance rather than a meaningful association. When writing a null hypothesis for a correlational study, follow these steps:

- Identify the Variables: Clearly define the variables you are studying and their relationship (e.g., “There is no significant correlation between X and Y”).

- Specify the Population: Indicate the population from which the data is drawn (e.g., “In the population of [target population]…”).

- Include the Direction of Correlation: If relevant, specify the direction of correlation (positive or negative) that you are testing (e.g., “…there is no significant positive/negative correlation…”).

- State the Hypothesis: Write the null hypothesis as a clear statement that there is no significant correlation between the variables (e.g., “…there is no significant correlation between X and Y”).
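
Once the null hypothesis is written, it can be checked against data. The sketch below uses a permutation test, which is one possible approach and not a method prescribed by the steps above: it shuffles one variable many times to see how often chance alone produces a correlation at least as strong as the observed one. The `screen_hours` and `sleep_quality` values are hypothetical, and the 0.05 significance level is a conventional assumption.

```python
import random
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient for two equal-length numeric sequences."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def permutation_p_value(xs, ys, n_shuffles=2000, seed=0):
    """Two-sided p-value for H0: there is no correlation between xs and ys."""
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    observed = abs(pearson_r(xs, ys))
    shuffled = list(ys)
    at_least_as_extreme = 0
    for _ in range(n_shuffles):
        rng.shuffle(shuffled)  # break any real pairing between x and y
        if abs(pearson_r(xs, shuffled)) >= observed:
            at_least_as_extreme += 1
    # Add-one correction so the estimate is never exactly zero.
    return (at_least_as_extreme + 1) / (n_shuffles + 1)


# Hypothetical sample: nightly screen hours and a 1-10 sleep quality rating.
screen_hours = [1, 2, 3, 4, 5, 6, 7, 8]
sleep_quality = [9, 8, 8, 6, 5, 5, 3, 2]

alpha = 0.05  # assumed significance level
r_observed = pearson_r(screen_hours, sleep_quality)
p_value = permutation_p_value(screen_hours, sleep_quality)

decision = "reject" if p_value < alpha else "fail to reject"
print(f"r = {r_observed:+.2f}, p = {p_value:.4f}: {decision} the null hypothesis")
```

Rejecting the null hypothesis here supports only the claim that the two variables are associated in the sample; it says nothing about which one, if either, causes the other.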

What Is the Correlation Hypothesis Formula?