Conducting a Literature Review

Appraise Your Research Articles

The structure of a literature review should include the following:

- An overview of the subject, issue, or theory under consideration, along with the objectives of the literature review,

- Division of works under review into themes or categories [e.g. works that support a particular position, those against, and those offering alternative approaches entirely],

- An explanation of how each work is similar to and how it varies from the others,

- Conclusions as to which pieces are best considered in their argument, are most convincing of their opinions, and make the greatest contribution to the understanding and development of their area of research.

The critical evaluation of each work should consider:

- Provenance -- what are the author's credentials? Are the author's arguments supported by evidence [e.g. primary historical material, case studies, narratives, statistics, recent scientific findings]?

- Methodology -- were the techniques used to identify, gather, and analyze the data appropriate to addressing the research problem? Was the sample size appropriate? Were the results effectively interpreted and reported?

- Objectivity -- is the author's perspective even-handed or prejudicial? Is contrary data considered or is certain pertinent information ignored to prove the author's point?

- Persuasiveness -- which of the author's theses are most convincing or least convincing?

- Value -- are the author's arguments and conclusions convincing? Does the work ultimately contribute in any significant way to an understanding of the subject?

Reviewing the Literature

While conducting a review of the literature, maximize the time you devote to writing this part of your paper by thinking broadly about what you should be looking for and evaluating. Review not just what the articles are saying, but how they are saying it.

Some questions to ask:

- How are they organizing their ideas?

- What methods have they used to study the problem?

- What theories have been used to explain, predict, or understand their research problem?

- What sources have they cited to support their conclusions?

- How have they used non-textual elements [e.g., charts, graphs, figures, etc.] to illustrate key points?

When you begin to write your literature review section, you'll be glad you dug deeper into how the research was designed and constructed because it establishes a means for developing more substantial analysis and interpretation of the research problem.

Tools for Critical Appraisal

Now that you have found articles based on your research question, you can appraise the quality of those articles. These are resources you can use to appraise different study designs.

Centre for Evidence Based Medicine (Oxford)

University of Glasgow

"AFP uses the Strength-of-Recommendation Taxonomy (SORT), to label key recommendations in clinical review articles."

- SORT: Rating the Strength of Evidence American Family Physician and other family medicine journals use the Strength of Recommendation Taxonomy (SORT) system for rating bodies of evidence for key clinical recommendations.

Choosing the Best Systematic Review Critical Appraisal Tool

What is a Critical Appraisal?

Critical appraisal involves the evaluation of the quality, reliability, and relevance of studies, which is assessed based on quality measures specific to the research question, its related topics, design, methodology, data analysis, and the reporting of different types of systematic reviews.

Planning a critical appraisal starts with identifying or developing checklists. There are several critical appraisal tools that can be used to guide the process, adapting evaluation measures to be relevant to the specific research. It is important to pilot test these checklists and ensure that they are comprehensive enough to tackle all aspects of your systematic review.

What is the Purpose of a Critical Appraisal?

A critical appraisal is an integral part of a systematic review because it helps determine which studies can support the research. Here are some additional reasons why critical appraisals are important.

Assessing Quality

Critical appraisals employ measures specific to the systematic review. Through these, researchers can assess the quality of the studies—their trustworthiness, value, and reliability. This helps weed out substandard reviews, saving researchers’ time that would have been wasted reading full texts.

Determining Relevance

By appraising studies, researchers can determine whether or not they are relevant to the systematic review, such as if they’re connected to the topic or if their results support the research. By doing this, the question “Can you include a systematic review in a scoping review?” can also be answered depending on its relevance to the study.

Identifying Flaws

Critical appraisals aim to identify methodological flaws in the literature, helping researchers and readers make informed decisions about the research evidence. They also help reduce the risk of bias when selecting studies.

What to Consider in a Critical Appraisal

Critical appraisals vary as they are specific to the topic, nature, and methodology of each systematic review. However, they generally have the same goal, trying to answer the following questions about the studies being considered:

- Is the study relevant to the research question?

- Is the study valid?

- Did the study use appropriate methods to address the research question?

- Does the study support the findings and evidence claims of the review?

- Are the valid results of the study important?

- Are the valid results of the study applicable to the research?

Critical Appraisal Tools

There are hundreds of tools and worksheets that can serve as a guide through the critical appraisal process. Here are just some of the most common ones to consider:

- AMSTAR – to assess the methodological quality of systematic reviews of interventions.

- CASP – to appraise randomized controlled trials, systematic reviews, cohort studies, case-control studies, qualitative research, economic evaluations, diagnostic tests, and clinical prediction rules.

- Cochrane Risk of Bias Tool – to assess the risk of bias of randomized controlled trials (RCTs).

- GRADE – to grade the quality of evidence in healthcare research and policy.

- JBI Critical Appraisal Tools – to assess trustworthiness, relevance, and results of published papers.

- NOS – to assess the quality of non-randomized studies in meta-analyses.

- ROBIS – to assess the risk of bias in systematic reviews of interventions, diagnosis, prognosis, and etiology.

- STROBE – to guide the reporting of cohort, case-control, and cross-sectional studies.

What is the Best Critical Appraisal Tool?

There is no single best critical appraisal tool for any study design, nor is there a generic one that can be expected to consistently do well when used across different study types.

Critical appraisal tools vary considerably in intent, components, and construction, and the right one for your systematic review is the one that addresses the components that you need to tackle and ensures that your research results in comprehensive, unbiased, and valid findings.

Systematic Reviews & Evidence Synthesis Methods

Critical Appraisal

Some reviews require a critical appraisal for each study that makes it through the screening process. This involves a risk of bias assessment and/or a quality assessment. The goal of these reviews is not just to find all of the studies, but to determine their methodological rigor, and therefore, their credibility.

"Critical appraisal is the balanced assessment of a piece of research, looking for its strengths and weaknesses and then coming to a balanced judgement about its trustworthiness and its suitability for use in a particular context." 1

It's important to consider the impact that poorly designed studies could have on your findings and to rule out inaccurate or biased work.

Selection of a valid critical appraisal tool, testing the tool with several of the selected studies, and involving two or more reviewers in the appraisal are good practices to follow.
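As a rough illustration of piloting a tool with two reviewers, the sketch below compares two sets of judgments and lists the studies where the reviewers disagree so they can be discussed. The function name `compare_appraisals`, the study IDs, and the judgment labels are all hypothetical; simple percent agreement is used here only as an assumption for illustration, not as a recommended statistic.

```python
from typing import Dict, List, Tuple


def compare_appraisals(
    reviewer_a: Dict[str, str], reviewer_b: Dict[str, str]
) -> Tuple[float, List[str]]:
    """Return (fraction of studies rated the same, IDs of studies rated differently).

    Only studies appraised by both reviewers are compared.
    """
    shared = sorted(set(reviewer_a) & set(reviewer_b))
    if not shared:
        raise ValueError("The reviewers have no appraised studies in common.")
    disagreements = [s for s in shared if reviewer_a[s] != reviewer_b[s]]
    agreement = 1 - len(disagreements) / len(shared)
    return agreement, disagreements


# Hypothetical pilot on four studies, each given an overall judgment.
a = {"study1": "low", "study2": "high", "study3": "low", "study4": "low"}
b = {"study1": "low", "study2": "low", "study3": "low", "study4": "low"}
agreement, to_discuss = compare_appraisals(a, b)
print(agreement, to_discuss)
```

Studies returned in the second value are candidates for discussion or for a third reviewer before the tool is applied to the full set.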

1. Purssell E, McCrae N. How to Perform a Systematic Literature Review: A Guide for Healthcare Researchers, Practitioners and Students. 1st ed. Springer; 2020.

Evaluation Tools

- The Appraisal of Guidelines for Research & Evaluation Instrument (AGREE II) Developed to address the issue of variability in the quality of practice guidelines.

- Centre for Evidence-Based Medicine (CEBM). Critical Appraisal Tools "contains useful tools and downloads for the critical appraisal of different types of medical evidence. Example appraisal sheets are provided together with several helpful examples."

- Critical Appraisal Skills Programme (CASP) Checklists Critical Appraisal checklists for many different study types

- Critical Review Form for Qualitative Studies Version 2, developed out of McMaster University

- Development of a critical appraisal tool to assess the quality of cross-sectional studies (AXIS) Downes MJ, Brennan ML, Williams HC, et al. Development of a critical appraisal tool to assess the quality of cross-sectional studies (AXIS). BMJ Open 2016;6:e011458. doi:10.1136/bmjopen-2016-011458

- Downs & Black Checklist for Assessing Studies Downs, S. H., & Black, N. (1998). The Feasibility of Creating a Checklist for the Assessment of the Methodological Quality Both of Randomised and Non-Randomised Studies of Health Care Interventions. Journal of Epidemiology and Community Health (1979-), 52(6), 377–384.

- GRADE The Grading of Recommendations Assessment, Development and Evaluation (GRADE) working group "has developed a common, sensible and transparent approach to grading quality (or certainty) of evidence and strength of recommendations."

- GRADE Handbook Full handbook on the GRADE method for grading quality of evidence.

- MAGIC (Making GRADE the Irresistible choice) Clear succinct guidance in how to use GRADE

- Joanna Briggs Institute. Critical Appraisal Tools "JBI’s critical appraisal tools assist in assessing the trustworthiness, relevance and results of published papers." Includes checklists for 13 types of articles.

- Latitudes Network This is a searchable library of validity assessment tools for use in evidence syntheses. This website also provides access to training on the process of validity assessment.

- Mixed Methods Appraisal Tool A tool that can be used to appraise a mix of studies that are included in a systematic review - qualitative research, RCTs, non-randomized studies, quantitative studies, mixed methods studies.

- RoB 2 Tool Higgins JPT, Sterne JAC, Savović J, Page MJ, Hróbjartsson A, Boutron I, Reeves B, Eldridge S. A revised tool for assessing risk of bias in randomized trials In: Chandler J, McKenzie J, Boutron I, Welch V (editors). Cochrane Methods. Cochrane Database of Systematic Reviews 2016, Issue 10 (Suppl 1). dx.doi.org/10.1002/14651858.CD201601.

- ROBINS-I Risk of Bias for non-randomized (observational) studies or cohorts of interventions Sterne JA, Hernán MA, Reeves BC, Savović J, Berkman ND, Viswanathan M, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ 2016;355:i4919. doi:10.1136/bmj.i4919

- Scottish Intercollegiate Guidelines Network. Critical Appraisal Notes and Checklists "Methodological assessment of studies selected as potential sources of evidence is based on a number of criteria that focus on those aspects of the study design that research has shown to have a significant effect on the risk of bias in the results reported and conclusions drawn. These criteria differ between study types, and a range of checklists is used to bring a degree of consistency to the assessment process."

- The TREND Statement (CDC) Des Jarlais DC, Lyles C, Crepaz N, and the TREND Group. Improving the reporting quality of nonrandomized evaluations of behavioral and public health interventions: The TREND statement. Am J Public Health. 2004;94:361-366.

- Assembling the Pieces of a Systematic Review, Chapter 8: Evaluating: Study Selection and Critical Appraisal.

- How to Perform a Systematic Literature Review, Chapter: Critical Appraisal: Assessing the Quality of Studies.

Other library guides

- Duke University Medical Center Library. Systematic Reviews: Assess for Quality and Bias

- UNC Health Sciences Library. Systematic Reviews: Assess Quality of Included Studies

- Last Updated: Apr 9, 2024 8:57 PM

- URL: https://guides.lib.utexas.edu/systematicreviews

Systematic Reviews: Critical Appraisal by Study Design

Tools for Critical Appraisal of Studies

“The purpose of critical appraisal is to determine the scientific merit of a research report and its applicability to clinical decision making.” 1 Conducting a critical appraisal of a study is imperative to any well-executed evidence review, but the process can be time-consuming and difficult. 2 For critical appraisal, “a methodological approach coupled with the right tools and skills to match these methods is essential for finding meaningful results.” 3 In short, it is a method of differentiating good research from bad research.

Critical Appraisal by Study Design (featured tools)

- Non-RCTs or Observational Studies

- Diagnostic Accuracy

- Animal Studies

- Qualitative Research

- Tool Repository

- AMSTAR 2 (A MeaSurement Tool to Assess systematic Reviews) The original AMSTAR was developed to assess the risk of bias in systematic reviews that included only randomized controlled trials. AMSTAR 2 was published in 2017 and allows researchers to “identify high quality systematic reviews, including those based on non-randomised studies of healthcare interventions.” 4

- ROBIS (Risk of Bias in Systematic Reviews) ROBIS is a tool designed specifically to assess the risk of bias in systematic reviews. “The tool is completed in three phases: (1) assess relevance (optional), (2) identify concerns with the review process, and (3) judge risk of bias in the review. Signaling questions are included to help assess specific concerns about potential biases with the review.” 5

- BMJ Framework for Assessing Systematic Reviews This framework provides a checklist that is used to evaluate the quality of a systematic review.

- CASP Checklist for Systematic Reviews This CASP (Critical Appraisal Skills Programme) checklist is not a scoring system, but rather a method of appraising systematic reviews by considering: 1. Are the results of the study valid? 2. What are the results? 3. Will the results help locally?

- CEBM Systematic Reviews Critical Appraisal Sheet The CEBM (Centre for Evidence-Based Medicine) critical appraisal sheets are designed to help you appraise the reliability, importance, and applicability of clinical evidence.

- JBI Critical Appraisal Tools, Checklist for Systematic Reviews JBI Critical Appraisal Tools help you assess the methodological quality of a study and determine the extent to which a study has addressed the possibility of bias in its design, conduct and analysis.

- NHLBI Study Quality Assessment of Systematic Reviews and Meta-Analyses The NHLBI (National Heart, Lung, and Blood Institute) quality assessment tools were designed to assist reviewers in focusing on concepts that are key for critical appraisal of the internal validity of a study.

- RoB 2 (revised tool to assess Risk of Bias in randomized trials) RoB 2 “provides a framework for assessing the risk of bias in a single estimate of an intervention effect reported from a randomized trial,” rather than the entire trial. 6

- CASP Randomised Controlled Trials Checklist This CASP checklist considers various aspects of an RCT that require critical appraisal: 1. Is the basic study design valid for a randomized controlled trial? 2. Was the study methodologically sound? 3. What are the results? 4. Will the results help locally?

- CONSORT Statement The CONSORT (Consolidated Standards of Reporting Trials) checklist includes 25 items to determine the quality of randomized controlled trials. “Critical appraisal of the quality of clinical trials is possible only if the design, conduct, and analysis of RCTs are thoroughly and accurately described in the report.” 7

- NHLBI Study Quality Assessment of Controlled Intervention Studies The NHLBI’s quality assessment tools were designed to assist reviewers in focusing on concepts that are key for critical appraisal of the internal validity of a study.

- JBI Critical Appraisal Tools Checklist for Randomized Controlled Trials JBI Critical Appraisal Tools help you assess the methodological quality of a study and determine the extent to which a study has addressed the possibility of bias in its design, conduct and analysis.

- ROBINS-I (Risk Of Bias in Non-randomized Studies – of Interventions) ROBINS-I is a “tool for evaluating risk of bias in estimates of the comparative effectiveness… of interventions from studies that did not use randomization to allocate units… to comparison groups.” 8

- NOS (Newcastle-Ottawa Scale) This tool is used primarily to evaluate and appraise case-control or cohort studies.

- AXIS (Appraisal tool for Cross-Sectional Studies) Cross-sectional studies are frequently used as an evidence base for diagnostic testing, risk factors for disease, and prevalence studies. “The AXIS tool focuses mainly on the presented [study] methods and results.” 9

- NHLBI Study Quality Assessment Tools for Non-Randomized Studies The NHLBI’s quality assessment tools were designed to assist reviewers in focusing on concepts that are key for critical appraisal of the internal validity of a study. • Quality Assessment Tool for Observational Cohort and Cross-Sectional Studies • Quality Assessment of Case-Control Studies • Quality Assessment Tool for Before-After (Pre-Post) Studies With No Control Group • Quality Assessment Tool for Case Series Studies

- Case Series Studies Quality Appraisal Checklist Developed by the Institute of Health Economics (Canada), the checklist is comprised of 20 questions to assess “the robustness of the evidence of uncontrolled, [case series] studies.” 10

- Methodological Quality and Synthesis of Case Series and Case Reports In this paper, Dr. Murad and colleagues “present a framework for appraisal, synthesis and application of evidence derived from case reports and case series.” 11

- MINORS (Methodological Index for Non-Randomized Studies) The MINORS instrument contains 12 items and was developed for evaluating the quality of observational or non-randomized studies. 12 This tool may be of particular interest to researchers who would like to critically appraise surgical studies.

- JBI Critical Appraisal Tools for Non-Randomized Trials JBI Critical Appraisal Tools help you assess the methodological quality of a study and determine the extent to which a study has addressed the possibility of bias in its design, conduct and analysis. • Checklist for Analytical Cross Sectional Studies • Checklist for Case Control Studies • Checklist for Case Reports • Checklist for Case Series • Checklist for Cohort Studies

- QUADAS-2 (a revised tool for the Quality Assessment of Diagnostic Accuracy Studies) The QUADAS-2 tool “is designed to assess the quality of primary diagnostic accuracy studies… [it] consists of 4 key domains that discuss patient selection, index test, reference standard, and flow of patients through the study and timing of the index tests and reference standard.” 13

- JBI Critical Appraisal Tools Checklist for Diagnostic Test Accuracy Studies JBI Critical Appraisal Tools help you assess the methodological quality of a study and determine the extent to which a study has addressed the possibility of bias in its design, conduct and analysis.

- STARD 2015 (Standards for the Reporting of Diagnostic Accuracy Studies) The authors of the standards note that “[e]ssential elements of [diagnostic accuracy] study methods are often poorly described and sometimes completely omitted, making both critical appraisal and replication difficult, if not impossible.” The Standards for the Reporting of Diagnostic Accuracy Studies was developed “to help… improve completeness and transparency in reporting of diagnostic accuracy studies.” 14

- CASP Diagnostic Study Checklist This CASP checklist considers various aspects of diagnostic test studies including: 1. Are the results of the study valid? 2. What were the results? 3. Will the results help locally?

- CEBM Diagnostic Critical Appraisal Sheet The CEBM’s critical appraisal sheets are designed to help you appraise the reliability, importance, and applicability of clinical evidence.

- SYRCLE’s RoB (SYstematic Review Center for Laboratory animal Experimentation’s Risk of Bias) “[I]mplementation of [SYRCLE’s RoB tool] will facilitate and improve critical appraisal of evidence from animal studies. This may… enhance the efficiency of translating animal research into clinical practice and increase awareness of the necessity of improving the methodological quality of animal studies.” 15

- ARRIVE 2.0 (Animal Research: Reporting of In Vivo Experiments) “The [ARRIVE 2.0] guidelines are a checklist of information to include in a manuscript to ensure that publications [on in vivo animal studies] contain enough information to add to the knowledge base.” 16

- Critical Appraisal of Studies Using Laboratory Animal Models This article provides “an approach to critically appraising papers based on the results of laboratory animal experiments,” and discusses various “bias domains” in the literature that critical appraisal can identify. 17

- CEBM Critical Appraisal of Qualitative Studies Sheet The CEBM’s critical appraisal sheets are designed to help you appraise the reliability, importance and applicability of clinical evidence.

- CASP Qualitative Studies Checklist This CASP checklist considers various aspects of qualitative research studies including: 1. Are the results of the study valid? 2. What were the results? 3. Will the results help locally?

- Quality Assessment and Risk of Bias Tool Repository Created by librarians at Duke University, this extensive listing contains over 100 commonly used risk of bias tools that may be sorted by study type.

- Latitudes Network A library of risk of bias tools for use in evidence syntheses that provides selection help and training videos.

References & Recommended Reading

1. Portney LG. Foundations of clinical research: applications to evidence-based practice. 4th ed. Philadelphia: F A Davis; 2020.

2. Fowkes FG, Fulton PM. Critical appraisal of published research: introductory guidelines. BMJ (Clinical research ed). 1991;302(6785):1136-1140.

3. Singh S. Critical appraisal skills programme. Journal of Pharmacology and Pharmacotherapeutics. 2013;4(1):76-77.

4. Shea BJ, Reeves BC, Wells G, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ (Clinical research ed). 2017;358:j4008.

5. Whiting P, Savovic J, Higgins JPT, et al. ROBIS: A new tool to assess risk of bias in systematic reviews was developed. Journal of clinical epidemiology. 2016;69:225-234.

6. Sterne JAC, Savovic J, Page MJ, et al. RoB 2: a revised tool for assessing risk of bias in randomised trials. BMJ (Clinical research ed). 2019;366:l4898.

7. Moher D, Hopewell S, Schulz KF, et al. CONSORT 2010 Explanation and Elaboration: Updated guidelines for reporting parallel group randomised trials. Journal of clinical epidemiology. 2010;63(8):e1-37.

8. Sterne JA, Hernan MA, Reeves BC, et al. ROBINS-I: a tool for assessing risk of bias in non-randomised studies of interventions. BMJ (Clinical research ed). 2016;355:i4919.

9. Downes MJ, Brennan ML, Williams HC, Dean RS. Development of a critical appraisal tool to assess the quality of cross-sectional studies (AXIS). BMJ open. 2016;6(12):e011458.

10. Guo B, Moga C, Harstall C, Schopflocher D. A principal component analysis is conducted for a case series quality appraisal checklist. Journal of clinical epidemiology. 2016;69:199-207.e192.

11. Murad MH, Sultan S, Haffar S, Bazerbachi F. Methodological quality and synthesis of case series and case reports. BMJ evidence-based medicine. 2018;23(2):60-63.

12. Slim K, Nini E, Forestier D, Kwiatkowski F, Panis Y, Chipponi J. Methodological index for non-randomized studies (MINORS): development and validation of a new instrument. ANZ journal of surgery. 2003;73(9):712-716.

13. Whiting PF, Rutjes AWS, Westwood ME, et al. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Annals of internal medicine. 2011;155(8):529-536.

14. Bossuyt PM, Reitsma JB, Bruns DE, et al. STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. BMJ (Clinical research ed). 2015;351:h5527.

15. Hooijmans CR, Rovers MM, de Vries RBM, Leenaars M, Ritskes-Hoitinga M, Langendam MW. SYRCLE's risk of bias tool for animal studies. BMC medical research methodology. 2014;14:43.

16. Percie du Sert N, Ahluwalia A, Alam S, et al. Reporting animal research: Explanation and elaboration for the ARRIVE guidelines 2.0. PLoS biology. 2020;18(7):e3000411.

17. O'Connor AM, Sargeant JM. Critical appraisal of studies using laboratory animal models. ILAR journal. 2014;55(3):405-417.

Recommended reading: Kolaski K, Logan LR, Ioannidis JP. Guidance to best tools and practices for systematic reviews. British Journal of Pharmacology. 2024;181(1):180-210.

- Last Updated: Apr 25, 2024 8:44 AM

- URL: https://libraryguides.mayo.edu/systematicreviewprocess

Critical Appraisal tools

Critical appraisal worksheets to help you appraise the reliability, importance and applicability of clinical evidence.

Critical appraisal is the systematic evaluation of clinical research papers in order to establish:

- Does this study address a clearly focused question?

- Did the study use valid methods to address this question?

- Are the valid results of this study important?

- Are these valid, important results applicable to my patient or population?

If the answer to any of these questions is “no”, you can save yourself the trouble of reading the rest of it.
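The stop-at-the-first-"no" rule above can be sketched in a few lines of Python. This is only an illustration: the `screen` helper and the `Screening` record are hypothetical names, and the yes/no answers are assumed to come from a reviewer working through one of the appraisal worksheets.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

# The four screening questions, in the order listed above.
QUESTIONS = (
    "Does this study address a clearly focused question?",
    "Did the study use valid methods to address this question?",
    "Are the valid results of this study important?",
    "Are these valid, important results applicable to my patient or population?",
)


@dataclass
class Screening:
    keep_reading: bool
    failed_question: Optional[str]  # first question answered "no", if any


def screen(answers: Sequence[bool]) -> Screening:
    """Hypothetical helper: walk the questions in order and stop at the first 'no'."""
    if len(answers) != len(QUESTIONS):
        raise ValueError("Provide exactly one yes/no answer per question.")
    for question, answer in zip(QUESTIONS, answers):
        if not answer:
            return Screening(keep_reading=False, failed_question=question)
    return Screening(keep_reading=True, failed_question=None)


# A paper with a focused question but methods the reviewer judged not valid.
result = screen([True, False, True, True])
print(result.keep_reading, result.failed_question)
```

Recording which question failed, rather than only a pass or fail flag, keeps a short note of why a paper was set aside.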

This section contains useful tools and downloads for the critical appraisal of different types of medical evidence. Example appraisal sheets are provided together with several helpful examples.

Critical Appraisal Worksheets

- Systematic Reviews Critical Appraisal Sheet

- Diagnostics Critical Appraisal Sheet

- Prognosis Critical Appraisal Sheet

- Randomised Controlled Trials (RCT) Critical Appraisal Sheet

- Critical Appraisal of Qualitative Studies Sheet

- IPD Review Sheet

Chinese - translated by Chung-Han Yang and Shih-Chieh Shao

- Systematic Reviews Critical Appraisal Sheet

- Diagnostic Study Critical Appraisal Sheet

- Prognostic Critical Appraisal Sheet

- RCT Critical Appraisal Sheet

- IPD reviews Critical Appraisal Sheet

- Qualitative Studies Critical Appraisal Sheet

German - translated by Johannes Pohl and Martin Sadilek

- Systematic Review Critical Appraisal Sheet

- Diagnosis Critical Appraisal Sheet

- Prognosis Critical Appraisal Sheet

- Therapy / RCT Critical Appraisal Sheet

Lithuanian - translated by Tumas Beinortas

- Systematic review appraisal Lithuanian (PDF)

- Diagnostic accuracy appraisal Lithuanian (PDF)

- Prognostic study appraisal Lithuanian (PDF)

- RCT appraisal sheets Lithuanian (PDF)

Portuguese - translated by Enderson Miranda, Rachel Riera and Luis Eduardo Fontes

- Portuguese – Systematic Review Study Appraisal Worksheet

- Portuguese – Diagnostic Study Appraisal Worksheet

- Portuguese – Prognostic Study Appraisal Worksheet

- Portuguese – RCT Study Appraisal Worksheet

- Portuguese – Systematic Review Evaluation of Individual Participant Data Worksheet

- Portuguese – Qualitative Studies Evaluation Worksheet

Spanish - translated by Ana Cristina Castro

- Systematic Review (PDF)

- Diagnosis (PDF)

- Prognosis Spanish Translation (PDF)

- Therapy / RCT Spanish Translation (PDF)

Persian - translated by Ahmad Sofi Mahmudi

- Prognosis (PDF)

- PICO Critical Appraisal Sheet (PDF)

- PICO Critical Appraisal Sheet (MS-Word)

- Educational Prescription Critical Appraisal Sheet (PDF)

Explanations & Examples

- Pre-test probability

- SpPin and SnNout

- Likelihood Ratios

CASP Checklists

Critical Appraisal Checklists

We offer a number of free downloadable checklists to help you more easily and accurately perform critical appraisal across a number of different study types.

The CASP checklists are easy to understand but in case you need any further guidance on how they are structured, take a look at our guide on how to use our CASP checklists .

CASP Checklist: Systematic Reviews with Meta-Analysis of Observational Studies

CASP Checklist: Systematic Reviews with Meta-Analysis of Randomised Controlled Trials (RCTs)

CASP Randomised Controlled Trial Checklist


CASP Systematic Review Checklist

CASP Qualitative Studies Checklist

CASP Cohort Study Checklist

CASP Diagnostic Study Checklist

CASP Case Control Study Checklist

CASP Economic Evaluation Checklist

CASP Clinical Prediction Rule Checklist

Checklist Archive

- CASP Randomised Controlled Trial Checklist 2018 fillable form

- CASP Randomised Controlled Trial Checklist 2018

CASP Checklist


Literature review methods

Critical appraisal, assess for quality.

Critical appraisal refers to the process of judging the validity and quality of a research paper. Because your review will be a synthesis of the research conducted by others, it is important to consider major points about the studies you include such as:

- Is the study valid?

- What are the results, and are they significant or trustworthy?

- Are the results applicable to your research question?

Critical appraisal tools

Several checklists or tools are available to help you assess the quality of studies to be included in your review.

- AACODS checklist for appraising grey literature

- CASP includes checklists for systematic reviews, RCTs, qualitative studies etc.

- Centre for Evidence Based Medicine appraisal worksheets to appraise the reliability, importance and applicability of clinical evidence

- Joanna Briggs Institute critical appraisal tools to assess the trustworthiness, relevance and results of published papers

- RoBiS tool for assessing the risk of bias in systematic reviews (rather than in primary studies)

- RoB 2 Cochrane risk-of-bias tool for randomized trials

Additional resources

- Greenhalgh, T. (2019). How to read a paper: the basics of evidence-based medicine and healthcare (6th ed). Wiley.


Critical Appraisal

Use this guide to find information resources about critical appraisal including checklists, books and journal articles.

Key Resources

- This online resource explains the sections commonly used in research articles. Understanding how research articles are organised can make reading and evaluating them easier View page

- Critical appraisal checklists

- Worksheets for appraising systematic reviews, diagnostics, prognostics and RCTs. View page

- A free online resource for both healthcare staff and patients; four modules of 30–45 minutes provide an introduction to evidence based medicine, clinical trials and Cochrane Evidence. View page

- This tool will guide you through a series of questions to help you to review and interpret a published health research paper. View page

- The PRISMA flow diagram depicts the flow of information through the different phases of a literature review. It maps out the number of records identified, included and excluded, and the reasons for exclusions. View page

- A useful resource for methods and evidence in applied social science. View page

- A comprehensive database of reporting guidelines. Covers all the main study types. View page

- A tool to assess the methodological quality of systematic reviews. View page

- Borrow from RCN Library services

- Chapter 5 covers critical appraisal of the literature. View this eBook

- Chapter 6 covers assessing the evidence base. Borrow from RCN Library services

- Section 1 covers an introduction to critical appraisal. Section 3 covers appraising different types of papers including qualitative papers and observational studies. View this eBook

- Chapter 6 covers critically appraising the literature. Borrow from RCN Library services

- View this eBook

- Chapter 8 covers critical appraisal of the evidence. View this eBook

- Chapter 18 covers critical appraisal of nursing studies. View this eBook

- Borrow from RCN Library Services

Book subject search

- Critical appraisal

Journal articles

- View article

Shea BJ and others (2017) AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions or both, British Medical Journal, 358.

- An outline of AMSTAR 2 and its use as a critical appraisal tool for systematic reviews. View article (open access)

- View articles

Editor of this guide

RCN Library and Archive Service


Page last updated - 08/02/2024


- Methodology

- Open access

- Published: 26 March 2019

SANRA—a scale for the quality assessment of narrative review articles

- Christopher Baethge ORCID: orcid.org/0000-0001-6246-3674 1 , 2 ,

- Sandra Goldbeck-Wood 1 , 3 &

- Stephan Mertens 1

Research Integrity and Peer Review volume 4, Article number: 5 (2019)


Narrative reviews are the commonest type of article in the medical literature. However, unlike systematic reviews and randomized controlled trial (RCT) articles, for which formal instruments exist to evaluate quality, there is currently no instrument available to assess the quality of narrative reviews. In response to this gap, we developed SANRA, the Scale for the Assessment of Narrative Review Articles.

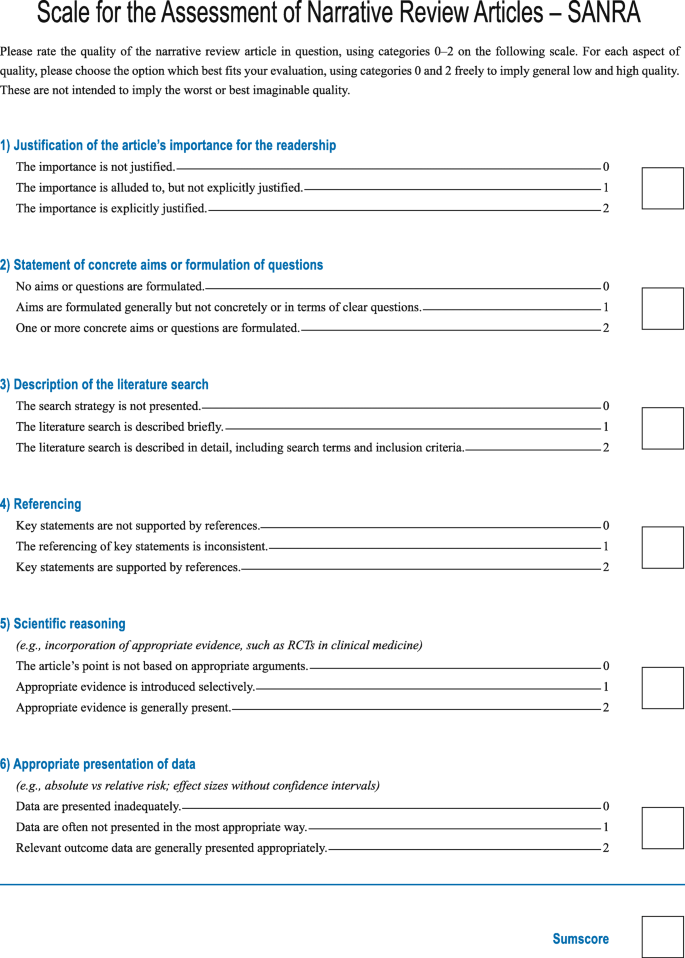

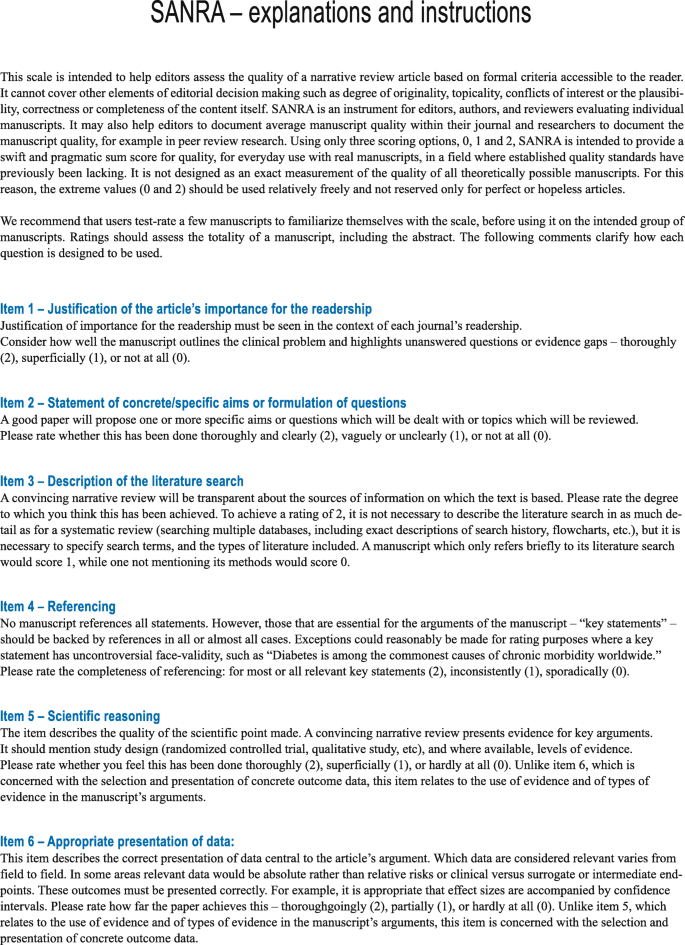

A team of three experienced journal editors modified or deleted items in an earlier SANRA version based on face validity, item-total correlations, and reliability scores from previous tests. We deleted an item which addressed a manuscript’s writing and accessibility due to poor inter-rater reliability. The six items which form the revised scale are rated from 0 (low standard) to 2 (high standard) and cover the following topics: explanation of (1) the importance and (2) the aims of the review, (3) literature search and (4) referencing and presentation of (5) evidence level and (6) relevant endpoint data. For all items, we developed anchor definitions and examples to guide users in filling out the form. The revised scale was tested by the same editors (blinded to each other’s ratings) in a group of 30 consecutive non-systematic review manuscripts submitted to a general medical journal.

Raters confirmed that completing the scale is feasible in everyday editorial work. The mean sum score across all 30 manuscripts was 6.0 out of 12 possible points (SD 2.6, range 1–12). Corrected item-total correlations ranged from 0.33 (item 3) to 0.58 (item 6), and Cronbach’s alpha was 0.68 (internal consistency). The intra-class correlation coefficient (average measure) was 0.77 [95% CI 0.57, 0.88] (inter-rater reliability). Raters often disagreed on items 1 and 4.

Conclusions

SANRA’s feasibility, inter-rater reliability, homogeneity of items, and internal consistency are sufficient for a scale of six items. Further field testing, particularly of validity, is desirable. We recommend rater training based on the “explanations and instructions” document provided with SANRA. In editorial decision-making, SANRA may complement journal-specific evaluation of manuscripts—pertaining to, e.g., audience, originality or difficulty—and may contribute to improving the standard of non-systematic reviews.


Narrative review articles are common in the medical literature. Bastian et al. found that they constitute the largest share of all text types in medicine and they concluded that they “remain the staple of medical literature” [ 1 ]. Narrative reviews also appear popular among both authors and readers, and it is plausible to assume that they exercise an enormous influence among doctors in clinical practice and research. However, because their quality varies widely, they have frequently been compared in blanket, negative terms with systematic reviews.

We use the term narrative review to refer to an attempt to summarize the literature in a way which is not explicitly systematic, where the minimum requirement for the term systematic relates to the method of the literature search, but in a wider sense includes a specific research question and a comprehensive summary of all studies [ 2 ].

While systematic reviews are not per se superior articles and while certain systematic reviews have been criticized lately [ 3 ], non-systematic reviews or narrative reviews have been widely criticized as unreliable [ 1 , 4 ]. Hence, the hierarchy of evidence-based medicine places systematic reviews much higher than non-systematic ones. However, it is likely—and even desirable—that good quality narrative reviews will continue to play an important role in medicine: while systematic reviews are superior to narrative reviews in answering specific questions (for example, whether it is advisable to switch an antidepressant among antidepressant non-responders in patients with major depressive disorder [ 5 ]), narrative reviews are better suited to addressing a topic in wider ways (for example, outlining the general principles of diagnosing and treating depression [ 6 ]).

Critical appraisal tools have been developed for systematic reviews (e.g., AMSTAR 2 [A MeaSurement Tool to Assess Systematic Reviews] [ 7 ]), for papers on RCTs (e.g., the CASP [Critical Appraisal Skills Program] checklist for randomized trials [ 8 ]), and for other types of medical studies. For narrative reviews, in contrast, no critical appraisal or quality assessment tool is available. Such a tool, however, if simple and brief enough for day-to-day use, may support editors in choosing or improving manuscripts, help reviewers and readers in assessing the quality of a paper, and aid authors in preparing narrative reviews. It may improve the general quality of narrative reviews.

As a consequence, we have developed SANRA, the Scale for the Assessment of Narrative Review Articles, a brief critical appraisal tool for the assessment of non-systematic articles. Here, we present the revised scale and the results of a field test regarding its feasibility, item-total correlation, internal consistency, reliability, and criterion validity.

SANRA was developed between 2010 and 2017 by three experienced editors (CB, SGW, and SM) working at a general medical journal, Deutsches Ärzteblatt , the journal of the German Medical Association and the National Association of Statutory Health Insurance Physicians . It is intended to be a simple and brief quality assessment instrument not only to assist editors in their decisions about manuscripts, but also to help reviewers and readers in their assessment of papers and authors in writing narrative reviews.

Two earlier, seven-item versions of SANRA have been developed and tested by the authors, the first in 10 narrative reviews from the field of neurology as retrieved through a PubMed search, the second among 12 consecutive narrative reviews submitted to Deutsches Ärzteblatt —both showing satisfactory internal consistency and inter-rater reliability [ 9 ].

The current version of SANRA [ 10 ] was revised by the authors in 2014 in order to simplify the scale and make it more robust. We simplified the wording of the items, and we deleted an item addressing a manuscript’s writing and accessibility because ratings of that item differed considerably. The six items that form the revised scale are rated in integers from 0 (low standard) to 2 (high standard), with 1 as an intermediate score. The maximal sum score is 12.

The sum score of the scale is intended to measure the construct “quality of a narrative review article” and covers the following topics: explanation of the review’s importance (item 1) and statement of the aims (item 2) of the review, description of the literature search (item 3), referencing (item 4), scientific reasoning (item 5), and presentation of relevant and appropriate endpoint data (item 6) (Fig. 1 ). For all items, we developed anchor definitions and examples to guide users in filling out the instrument, provided in the document “explanations and instructions,” accompanying the scale. This document was also edited to improve clarity (Fig. 2 ).

Fig. 1 SANRA scale

Fig. 2 SANRA explanations and instructions document
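The scoring rule described above (six items, each rated 0, 1, or 2, summed to a maximum of 12) can be sketched in a few lines of Python. This is only an illustration: the item labels paraphrase the topics listed in the text, and the function name `sanra_sum_score` and the dictionary layout are assumptions, not part of the published scale.

```python
# Illustrative sketch only. Item labels paraphrase the six SANRA topics;
# the function name and input layout are hypothetical, not part of SANRA.
SANRA_ITEMS = (
    "importance",            # item 1: explanation of the review's importance
    "aims",                  # item 2: statement of the aims
    "literature_search",     # item 3: description of the literature search
    "referencing",           # item 4: referencing
    "scientific_reasoning",  # item 5: scientific reasoning
    "endpoint_data",         # item 6: relevant and appropriate endpoint data
)


def sanra_sum_score(ratings: dict[str, int]) -> int:
    """Return one rater's sum score (0 to 12) for a single manuscript."""
    if set(ratings) != set(SANRA_ITEMS):
        raise ValueError("ratings must cover exactly the six SANRA items")
    for item, value in ratings.items():
        if value not in (0, 1, 2):
            raise ValueError(f"{item}: rating must be 0, 1, or 2")
    return sum(ratings.values())


# Made-up ratings for demonstration, not data from the study.
example = dict(zip(SANRA_ITEMS, (1, 2, 0, 2, 1, 1)))
print(sanra_sum_score(example))
```

Rejecting out-of-range values up front keeps a mistyped rating from silently inflating or deflating the sum score.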

In 2015, one rater (CB) screened all submissions to Deutsches Ärzteblatt, and the first 30 consecutive review manuscripts without systematic literature searches were selected for inclusion in the present study. All three raters (CB, SGW, and SM) are editors who, in 2015, each had at least 10 years of experience. They scored the manuscripts independently and were blinded to each other’s ratings.

Statistical analysis

Descriptive data are shown as means or medians, as appropriate, and as ranges, standard deviations, or confidence intervals. This study aimed at testing SANRA’s internal consistency (Cronbach’s alpha) and the item-total correlation—indicating whether the items measure the same phenomenon, here different aspects of review paper quality—as well as SANRA’s inter-rater reliability with regard to its sum score. Inter-rater reliability, as a measure of the consistency among different raters, was expressed as the average measure intra-class correlation, ICC, using a two-way random effects model (consistency definition). As an approximation of SANRA’s criterion validity (Is the score predictive of other indicators of paper quality, e.g., acceptance and rejection or citations?), we analyzed post hoc whether average sum scores of SANRA were associated with the decision to accept or reject the 30 manuscripts under study (point biserial correlation for the association between a dichotomous and a continuous variable). All calculations were carried out using SPSS. Where possible, the presentation follows the recommendations of the Guidelines for Reporting Reliability and Agreement Studies (GRRAS) [ 11 ].

All 90 ratings (3 raters × 30 manuscripts) were used for statistical analysis. The mean sum score across all 30 manuscripts ( N = 90) was 6.0 out of 12 possible points (SD 2.6, range 1–12, median 6). Highest scores were rated for item 4 (mean 1.25; SD 0.70), item 2 (mean 1.14; SD 0.84), and item 1 (mean 1.1; SD 0.69) whereas items 6, 5, and 3 had the lowest scores (means of 0.81 (SD 0.65), 0.83 (SD 0.67), and 0.84 (SD 0.60), respectively) (all single-item medians: 1).

The scale’s internal consistency, measured as Cronbach’s alpha, was 0.68. Corrected item-total correlations ranged from 0.33 to 0.58 (Table 1 ). Tentative deletions of each item to assess the effect of these on consistency showed reduced internal consistency with every deleted item (0.58–0.67) (as shown by the alpha values in Table 1 ).
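For readers unfamiliar with these statistics, the sketch below shows how Cronbach's alpha and a corrected item-total correlation are commonly computed from a table of item scores. It is not the authors' analysis (which was run in SPSS): the function names and the toy ratings are assumptions made for illustration.

```python
from statistics import mean, variance


def cronbach_alpha(rows):
    """Cronbach's alpha; rows holds one list of item scores per manuscript."""
    k = len(rows[0])
    item_vars = [variance([r[i] for r in rows]) for i in range(k)]
    total_var = variance([sum(r) for r in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson(x, y):
    """Plain Pearson correlation between two equally long sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def corrected_item_total(rows, i):
    """Correlate item i with the sum of the remaining items."""
    item = [r[i] for r in rows]
    rest = [sum(r) - r[i] for r in rows]
    return pearson(item, rest)


# Made-up ratings (5 manuscripts x 3 items), not data from the study.
toy = [[2, 1, 2], [1, 1, 0], [0, 0, 1], [2, 2, 2], [1, 0, 1]]
print(cronbach_alpha(toy))
print([corrected_item_total(toy, i) for i in range(3)])
```

The correlation is "corrected" because each item is compared with the total of the other items only, so an item does not correlate with itself.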

Across 180 single-item ratings (6 items × 30 manuscripts), the maximum difference among the 3 raters was 2 points in 12.8% ( n = 23; most often in items 1, 2, and 4); in 56.7% ( n = 102), the raters differed by no more than 1 point; and in 30.6% ( n = 55), they agreed entirely (most often in items 2 and 3). The intra-class correlation coefficient (average measure) amounted to 0.77 [95% CI 0.57, 0.88; F 4.3; df 29, 58]. Disagreements most often occurred with regard to items 1 and 4.
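As a rough illustration of the reliability measure, the sketch below computes an average-measure, consistency-type intra-class correlation from a manuscripts-by-raters table using the usual two-way ANOVA mean squares. It assumes the standard formula (MS_rows − MS_error) / MS_rows; the function name and the toy scores are hypothetical, and this is not the authors' SPSS output.

```python
def icc_consistency_average(scores):
    """Average-measure consistency ICC.

    scores: one row per manuscript, one column per rater (e.g. sum scores).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n * k)
    row_means = [sum(r) / k for r in scores]
    col_means = [sum(r[j] for r in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between manuscripts
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between raters
    ss_total = sum((x - grand) ** 2 for r in scores for x in r)
    ss_error = ss_total - ss_rows - ss_cols                  # residual

    ms_rows = ss_rows / (n - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / ms_rows


# Made-up sum scores (4 manuscripts x 3 raters), not data from the study.
toy = [[6, 7, 5], [3, 4, 4], [9, 8, 10], [5, 5, 6]]
print(icc_consistency_average(toy))
```

Under this formula the coefficient equals 1 − 1/F, where F is the ratio of the two mean squares. With the reported F of 4.3 (df 29, 58 for 30 manuscripts and 3 raters), 1 − 1/4.3 ≈ 0.77, which appears consistent with the reported ICC.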

Average SANRA sum scores of the 30 manuscripts were modestly associated with the editorial decision of acceptance (mean score 6.6, SD 1.9; n = 17) or rejection (mean score 5.1, SD 2.1; n = 13): point biserial correlation of 0.37 ( t = 2.09, df 28; two-sided p = 0.046).
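The link between a point biserial correlation and its t statistic can be sketched as follows. The point biserial coefficient is a Pearson correlation in which one variable is coded 0/1, and the test statistic is t = r·√(df / (1 − r²)) with df = n − 2. The function names and the toy data are assumptions for illustration only.

```python
from math import sqrt


def point_biserial(binary, scores):
    """Pearson correlation between a 0/1 variable and a continuous one."""
    n = len(scores)
    mb, ms = sum(binary) / n, sum(scores) / n
    sxy = sum((b - mb) * (s - ms) for b, s in zip(binary, scores))
    sxx = sum((b - mb) ** 2 for b in binary)
    syy = sum((s - ms) ** 2 for s in scores)
    return sxy / sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic for a correlation r observed in n pairs (df = n - 2)."""
    df = n - 2
    return r * sqrt(df / (1 - r * r))


# Made-up example: 1 = accepted, 0 = rejected, with invented sum scores.
decisions = [1, 1, 0, 1, 0, 0]
sum_scores = [7.0, 6.3, 5.0, 8.0, 4.7, 6.0]
print(point_biserial(decisions, sum_scores))

# Plugging in the rounded values reported in the text (r = 0.37, n = 30).
print(t_from_r(0.37, 30))
```

With the rounded r of 0.37 the formula gives a t of about 2.1, close to the reported 2.09; the small gap is what one would expect from rounding r to two decimals.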

All raters confirmed that completing the scale is feasible in everyday editorial work.

This study yielded three important findings: (1) SANRA can be applied to manuscripts in everyday editorial work. (2) SANRA’s internal consistency and item-total correlation are sufficient. (3) SANRA’s inter-rater reliability is satisfactory.

Feasibility

It is our experience with the current and earlier SANRA versions that editors, once accustomed to the scale, can integrate the scale into their everyday routine. It is important, however, to learn how to fill out SANRA. To this end, together with SANRA, we provide definitions and examples in the explanations and instructions document, and we recommend that new users train filling out SANRA using this resource. Editorial teams or teams of scientists and/or clinicians may prefer to learn using SANRA in group sessions.

Consistency and homogeneity

With Cronbach’s alpha of 0.68 and corrected item-total correlations between 0.33 and 0.58, we consider the scale’s consistency and item homogeneity sufficient for widespread application. It should be noted that because coefficient alpha increases with the number of items [ 12 ], simplifying a scale by reducing the number of items—as we did—may decrease internal consistency. However, this needs to be balanced against the practical need for brevity. In fact, the earlier seven-item versions of SANRA had higher values of alpha: 0.80 and 0.84, respectively [ 9 ]. Still, the number of items is not necessarily the only explanation for differences in alpha values. For example, the manuscripts included in the two earlier studies may have been easier to rate.
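How strongly alpha depends on the number of items can be illustrated with the standardized form of alpha (the Spearman-Brown relation), under the simplifying assumption that all item pairs share one average inter-item correlation. This is a back-of-the-envelope sketch, not an analysis from the study; the function names are hypothetical.

```python
def mean_interitem_r(alpha, k):
    """Average inter-item correlation implied by a standardized alpha."""
    return alpha / (k - alpha * (k - 1))


def standardized_alpha(r_bar, k):
    """Standardized alpha for k items with average correlation r_bar."""
    return k * r_bar / (1 + (k - 1) * r_bar)


# Assumption: treat the reported alpha of 0.68 (six items) as standardized.
r_bar = mean_interitem_r(0.68, 6)

# Projected alpha if a seventh, equally correlated item were added.
print(standardized_alpha(r_bar, 7))
```

Under these assumptions, adding a seventh comparable item would raise alpha only slightly (to roughly 0.71), which fits the remark above that the number of items is not necessarily the only explanation for the higher alpha values of the earlier versions.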

Inter-rater reliability

The scale’s intra-class correlation (0.77 after 0.76 in [ 9 ]) indicates that SANRA can be used reliably by different raters, an important property of a scale that may be applied for manuscript preparation and review, in editorial decision-making, or even in research on narrative reviews. Like internal consistency, reliability increases with the number of items [ 12 ], and there is a trade-off between simplicity (e.g., a small number of items) and reliability. Although the ICC suggests sufficient reliability, the lower confidence limit (0.57) does not preclude a level of reliability normally deemed unacceptable in most applications of critical appraisal tools. This finding underscores the importance of rater training. Raters more often disagreed on items 1 and 4. After the study, we therefore slightly edited these items, along with items 5 and 6, which we edited for clarity. In the same vein, we revised our explanations and instructions document.

It is important to bear in mind that testing of a scale always relates only to the setting of a given study. Thus, in the strict sense, the results presented here are not a general feature of SANRA but of SANRA filled out by certain raters with regard to a particular sample of manuscripts. However, from our experience, we trust that our setting is similar to that of many journals, and our sample of manuscripts represents an average group of papers. As a consequence, we are confident SANRA can be applied by other editors, reviewers, readers, and authors.

In a post hoc analysis, we found a modest, but statistically significant correlation of SANRA sum scores with manuscript acceptance. We interpret this as a sign of criterion validity, but emphasize that this is both a post hoc result and only a weak correlation. The latter, however, points to the fact that, at the level of submitted papers, aspects other than quality alone influence editorial decision-making: for example, whether the topic has been covered in the journal recently or whether editors believe that authors or topics of manuscripts have potential, even with low initial SANRA scores. SANRA will therefore often be used as one, and not the only, decision aid. Also, the decision to accept a paper was made after the papers had been revised.

Moreover, additional results on criterion validity are needed, as are results on SANRA’s construct validity. On the other hand, SANRA’s content validity, defined as a scale’s ability to completely cover all aspects of a construct, will be restricted because we decided to limit the scale to six items, too few to encompass all facets of review article quality—SANRA is a critical appraisal tool and not a reporting guideline. For example, we deleted an item on the accessibility of the manuscript. Other possible domains that are not part of SANRA are, for example, originality of the manuscript or quality of tables and figures. These features are important, but we believe the six items forming SANRA are a core set that sufficiently indicates the quality of a review manuscript and, at the same time, is short enough to be applied without too much time and effort. SANRA’s brevity is also in contrast to other tools to assess articles, such as AMSTAR 2, for systematic reviews, or, to a lesser extent, CASP for RCTs, with its 16 and 11 items, respectively.

Throughout this paper we have referred to the current version of SANRA as the revision of earlier forms. This is technically true. However, because it is normal that scales go through different versions before publication and because this paper is the first widespread publication of SANRA, we propose to call the present version simply SANRA.

While medicine has achieved a great deal in the formalization and improvement of the presentation of randomized trials and systematic review articles, and also a number of other text types in medicine, much less work has been done with regard to the most frequent form of medical publication, the narrative review. There are exceptions: Gasparyan et al. [ 13 ], for example, have provided guidance for writing narrative reviews, and Byrne [ 14 ] as well as Pautasso [ 15 ] have written, from different angles, thoughtful editorials on improving narrative reviews and presented lists of key features of writing a good review; these lists naturally overlap with SANRA items (e.g., on referencing). They are, however, not tested scales and not intended for comparing different manuscripts. SANRA can be used in comparisons of manuscripts the way we used it in our editorial office, that is, in one setting. At the present time, however, it seems unwise to compare manuscripts across different settings because, so far, there are no established cut-offs for different grades of quality (e.g., poor-fair-moderate-good-very good). Still, in our experience, a score of 4 or below indicates very poor quality.

Limitations

The main limitation of this study is its sample size. While, in our experience, a group of 30 is not unusual in testing scales, it represents a compromise between the aims of representativeness for our journal and adequate power and feasibility; it took us about 6 months to sample 30 consecutive narrative reviews. Also, in this study, the authors of the scale were also the test-raters, and it is possible that inter-rater reliability is lower in groups less familiar with the scale. As for most scales, this underscores the importance of using the instructions that belong to the scale, in the present case the explanations and instructions document. It is also advisable to train using the scale before applying SANRA for manuscript rating. In addition, by design, this is not a study of test-retest reliability, another important feature of a scale. Finally, as previously acknowledged, although we believe in the representativeness of our setting for medical journals, the present results refer to the setting of this study, and consistency and reliability measures are study-specific.

We present SANRA, a brief scale for the quality assessment of narrative review articles, the most widespread form of article in the medical literature. We suggest SANRA can be integrated into the work of editors, reviewers, and authors. We encourage readers to consider using SANRA as an aid to critically appraising articles, and authors to consider its use on preparing narrative reviews, with a view to improving the quality of submitted and published manuscripts.

SANRA and its explanations and instructions document are available (open access) at: https://www.aerzteblatt.de/down.asp?id=22862 , https://www.aerzteblatt.de/down.asp?id=22861 .

Abbreviations

AMSTAR: A MeaSurement Tool to Assess Systematic Reviews

CASP: Critical Appraisal Skills Program

GRRAS: Guidelines for Reporting Reliability and Agreement Studies

ICC: Intra-class correlation

RCT: Randomized controlled trial

SANRA: Scale for the Assessment of Narrative Review Articles

1. Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 2010;7(9):e1000326. https://doi.org/10.1371/journal.pmed.1000326

2. Higgins JPT, Green S (eds.). Cochrane Handbook for Systematic Reviews of Interventions. Version 5.1.0. The Cochrane Collaboration 2011, section 1.2.2., www.handbook.cochrane.org, retrieved on Oct 31, 2018.

3. Ioannidis JP. The mass production of redundant, misleading, and conflicted systematic reviews and meta-analyses. Milbank Q. 2016;94(3):485–514. https://doi.org/10.1111/1468-0009.12210

4. Mulrow CD. The medical review article: state of the science. Ann Intern Med. 1987;106:485–8.

5. Bschor T, Kern H, Henssler J, Baethge C. Switching the antidepressant after nonresponse in adults with major depression: a systematic literature search and meta-analysis. J Clin Psychiatry. 2018;79(1):16r10749. https://doi.org/10.4088/JCP.16r10749

6. Bschor T, Adli M. Treatment of depressive disorders. Dtsch Arztebl Int. 2008;105(45):782–92.

7. Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, Moher D, Tugwell P, Welch V, Kristjansson E, Henry DA. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;358:j4008.

8. Critical Appraisal Skills Programme 2018. CASP Randomised controlled trial checklist available at: https://casp-uk.net/casp-tools-checklists/ . Accessed: 31 Oct 2018.

9. Baethge C, Mertens S, Goldbeck-Wood S. Development of a quality score for the assessment of non-systematic review articles (SANRA). In: Poster, Seventh International Congress on Peer Review and Biomedical Publication. USA: Chicago, Illinois; 2013.

10. Baethge C, Goldbeck-Wood S, Mertens S. A scale for the assessment of non-systematic review articles (SANRA). In: Poster, Eighth International Congress on Peer Review and Biomedical Publication. USA: Chicago, Illinois; 2017.

11. Kottner J, Audige L, Brorson S, Donner A, Gajewski BJ, Hrobjartsson A, Roberts C, Shoukri M, Streiner DL. Guidelines for reporting reliability and agreement studies (GRRAS) were proposed. J Clin Epidemiol. 2011;64:96–106.

12. Streiner DL, Norman GR. Health measurement scales: a practical guide to their development and use. Fourth edition. New York: Oxford University Press; 2008.

13. Gasparyan AY, Ayvazyan L, Blackmore H, Kitas GD. Writing a narrative biomedical review: considerations for authors, peer reviewers, and editors. Rheumatol Int. 2011;31:1409–17.

14. Byrne JA. Improving the peer review of narrative literature reviews. Research Integrity and Peer Review. 2016;1(12). https://doi.org/10.1186/s41073-016-0019-2

15. Pautasso M. Ten simple rules for writing a literature review. PLoS Comput Biol. 2013;9(7):e1003149. https://doi.org/10.1371/journal.pcbi.1003149

Acknowledgements

This work has been presented at the Eighth International Congress on Peer Review and Scientific Publication in Chicago, Illinois, USA. (September 10-12, 2017) and at the 14th EASE Conference in Bucharest, Romania (June 8-10, 2018).

This work has not been externally funded.

Availability of data and materials

The dataset generated during the course of this study is available from the authors upon request.

Author information

Authors and affiliations.

Deutsches Ärzteblatt and Deutsches Ärzteblatt International, Dieselstraße 2, D-50859, Cologne, Germany

Christopher Baethge, Sandra Goldbeck-Wood & Stephan Mertens

Department of Psychiatry and Psychotherapy, University of Cologne Medical School, Cologne, Germany

Christopher Baethge

BMJ Sexual and Reproductive Health, London, UK

Sandra Goldbeck-Wood


Contributions

All authors (CB, SM, and SGW) made substantial contributions to the conception of the study and to the acquisition and interpretation of data. CB analyzed the data and drafted the manuscript. SM and SGW revised the draft critically for important intellectual content. All authors sufficiently participated in this work to take public responsibility for its content, all finally approved the manuscript, and all are accountable for every aspect of this project.

Corresponding author

Correspondence to Christopher Baethge .

Ethics declarations

Ethics approval and consent to participate.

Not applicable

Consent for publication

Competing interests.

Non-financial competing interest: all authors (CB, SM, and SGW) had their part in the development of the scale under study. The authors declare that they have no financial competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article.

Baethge, C., Goldbeck-Wood, S. & Mertens, S. SANRA—a scale for the quality assessment of narrative review articles. Res Integr Peer Rev 4 , 5 (2019). https://doi.org/10.1186/s41073-019-0064-8

Received : 02 December 2018

Accepted : 26 February 2019

Published : 26 March 2019

DOI : https://doi.org/10.1186/s41073-019-0064-8

- Periodicals as topic

- Narrative review articles

- Non-systematic review articles

- Reliability

- Item-total correlation

- Internal consistency

- Cronbach’s alpha

- Intra-class correlation coefficient

Research Integrity and Peer Review

ISSN: 2058-8615

- PMC10348931

A scoping review on quality assessment tools used in systematic reviews and meta-analysis of real-world studies

Tadesse Gebrye

1 Department of Health Professions, Faculty of Health, Psychology, and Social Care, Manchester Metropolitan University, Brooks Building, Birley Fields Campus, Bonsall Street, 53 Bonsall Street, Manchester, M15 6GX UK

Francis Fatoye

2 Lifestyle Diseases, Faculty of Health Sciences, North-West University, Mahikeng, South Africa

Chidozie Mbada

Zalmai Hakimi

3 Apellis Pharmaceuticals, Zug, Switzerland

Associated Data

All results from our analyses are published in the Supplementary Material, available at Rheumatology International online. The items/domains applied to the included studies and extracted by our investigators are available upon reasonable request.

Risk-of-bias tools are important for identifying inherent methodological flaws and for generating evidence in studies involving systematic reviews (SRs) and meta-analyses (MAs), hence the need for sensitive and study-specific tools. This study aimed to review quality assessment (QA) tools used in SRs and MAs involving real-world data. Electronic databases, including PubMed, Allied and Complementary Medicine Database, Cumulated Index to Nursing and Allied Health Literature, and MEDLINE, were searched for SRs and MAs involving real-world data. The search was limited to articles published in English from inception to 20 November 2022, following the SRs and MAs extension for scoping reviews checklist. Sixteen articles on real-world data published between 2016 and 2021 that reported their methodological quality met the inclusion criteria. Seven of these articles were observational studies, while the others were of interventional type. Overall, 16 QA tools were identified. Except for one, all the QA tools employed in SRs and MAs involving real-world data are generic, and only three of these were validated. Generic QA tools are mostly used for real-world data SRs and MAs, while no validated and reliable specific tool currently exists. Thus, there is a need for a standardized and specific QA tool for SRs and MAs of real-world data.

Supplementary Information

The online version contains supplementary material available at 10.1007/s00296-023-05354-x.

Introduction

Systematic reviews (SRs), evidence-based medicine, and clinical guidelines bring together trustworthy information by systematically acquiring, analysing, and transferring research findings into clinical, management, and policy arenas [ 1 ]. As such, findings from different works in the medical literature on related topics are evaluated using SRs and meta-analyses (MAs), through the application of scientific strategies that limit bias and errors that occur by chance [ 2 ]. Availability of the best evidence obtained through SRs and MAs is necessary to help clinicians, policy makers, and patients reach the best health care decisions [ 3 ]. However, SRs and MAs require resources, take time, and are labour-intensive, and they may not always be warranted or possible. For example, one study estimated the cost of SRs for academic institutions and pharmaceutical companies at approximately $141,194.80 and found that, on average, the total cost of all SRs per year to academic institutions and pharmaceutical companies amounts to $18,660,304.77 and $16,761,234.71, respectively [ 4 ]. Therefore, unnecessary duplication of SRs should be avoided, both for reasons of cost and because of the large unmet need for SRs on a wide range of questions and the need to keep reviews up to date [ 5 ].

To use the results of SRs and MAs, it is important to assess the methodological quality of the primary studies [ 6 ]. Methodological quality assessment (QA) is the process of assessing the design and conduct of the included studies, and it is useful for establishing the transparency of evidence synthesis and for supporting the certainty of the body of evidence for the review objective [ 7 , 8 ]. The main reason for assessing the methodological quality of primary studies is to identify risks of bias [ 9 ], which may be due to poor reporting and to several design features that depend on the research question. Poor reporting may prevent assessment of key features of design, making it difficult to evaluate whether the study methodology has been adequate [ 10 ]. According to the National Health and Medical Research Council, “risks of bias refer to the likelihood that features of the study design or conduct of the study will give misleading results”, and thus lead to wasted resources, lost opportunities for effective interventions, or harm to consumers [ 11 ].

A systematic review of methodological assessment tools for preclinical and clinical studies and clinical practice guidelines shows that there is a variety of methodological assessment tools for different types of study design [ 12 ]. Thus, it is critical to identify the study type before choosing the corresponding QA tool. Accordingly, Zeng and colleagues [ 12 ] submit that further efforts in the development of critical appraisal tools are warranted for areas that currently lack such tools. However, there is an apparent dearth of specific QA tools for real-world evidence (RWE) studies. According to the Food and Drug Administration [ 13 ], “RWE is the clinical evidence about the usage and potential benefits, or risks of a medical product derived from analysis of real-world data (RWD)”. RWD, in turn, are routinely collected data pertaining to the health status and/or health care delivery of the patient, which are collected from a range of sources [ 14 ] including claims, clinical studies, clinical settings, pharmaceuticals, and patient-powered platforms [ 15 , 16 ].

The increasing use of electronic health records and health information systems has led to repositories of large volumes of complex longitudinal RWD [ 17 ]. RWD are diverse, but generally comprise medical records, prescription data, and lifestyle-related information from health care providers, hospitals, and pharmacies [ 18 ]. For primary studies based on RWD, the quality of their data should be defined in context, clearly represented, and accessible [ 15 , 19 ]. For example, Hyrich [ 20 ] concludes that RWD play a significant role in rheumatology because they help to better understand disease progression and treatment outcomes beyond the conclusions of a clinical trial, as they provide a platform to "test" outcomes in an uncontrolled, real-life environment. Furthermore, the author posits that there is a need to generate trustworthy conclusions from RWD by ensuring appropriate methodological and ethical considerations for handling RWD. Given the importance of RWD in research, population health, quality improvement, clinical decision support, and personalised medicine [ 21 ], it is necessary to explore the existing QA tools that have been used for SRs and MAs that involved RWD. Hence, this scoping review examines the QA tools used for SRs and MAs that involved RWD.

Scoping review

We conducted a scoping review, a type of literature review that is used when it is difficult to identify a narrow review question; no prior synthesis has been undertaken on the topic; studies in the review sources are likely to have employed a range of data collection and analysis techniques; and a quality assessment of reviewed sources is not going to be conducted [ 22 ].

Search strategy

An electronic database search was carried out by the reviewers through November 2022 using the following databases: PubMed, Allied and Complementary Medicine Database (AMED), Cumulated Index to Nursing and Allied Health Literature (CINAHL), and MEDLINE. The keywords used in the search included a combination of RWE, RWD, routinely collected data, electronic health records, claims and billing activities, registries, meta-analysis, and systematic review (Appendix 2). Further, the reference sections of the included studies were searched manually for additional studies. The search was limited to articles published in the English language.
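The exact search strings are given in the article's Appendix 2, which is not reproduced here. As a rough illustration only, the keywords listed above could be combined with Boolean operators along the following lines. The grouping into a "data source" block and a "study design" block, the spelled-out forms of RWE and RWD, and the helper name `or_block` are assumptions made for this sketch, not the authors' actual query.

```python
# Illustrative sketch: combine the listed keywords into one Boolean query.
# The grouping and the helper name are assumptions, not the published strategy.

def or_block(terms):
    """Join quoted terms with OR and wrap the result in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"

# Keywords describing the data source (RWE and RWD spelled out here).
data_terms = [
    "real-world evidence",
    "real-world data",
    "routinely collected data",
    "electronic health records",
    "claims and billing activities",
    "registries",
]

# Keywords describing the review design.
design_terms = ["meta-analysis", "systematic review"]

# A record must match at least one term from each block.
query = or_block(data_terms) + " AND " + or_block(design_terms)
print(query)
```

Each database has its own field tags and syntax, so a string like this would normally need adapting before use in PubMed, AMED, CINAHL, or MEDLINE.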

Study selection and data extraction

One reviewer screened the abstracts of all publications obtained by the search strategies. Studies meeting the following inclusion criteria were selected for further review: interventional or observational studies that used real-world data and employed methodological QA tools. SRs or MAs that were not based on RWD and did not assess methodological quality were excluded. The potentially eligible papers were retrieved, and the full articles were obtained and assessed for their relevance by two reviewers (TG & CEM) based on the preplanned criteria for inclusion. Any disagreement in study selection was resolved through discussion and consultation with a third reviewer (FF) where necessary.

A summary table was used to display the extracted data. The following data were extracted: authors and date, type of study, type of QA tool, number of items, domains, whether the tool is generic or specific, time to complete the tool, psychometric properties (validity and reliability), population/studies used to validate the tool, and name of the unit that developed the tool. The reviewers resolved differences through discussion to achieve consensus.
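The extraction fields listed above map naturally onto a small record type. The following is a minimal sketch of such a structure; the class name, field names, and the example values are hypothetical and are not taken from the article's summary table.

```python
# Illustrative sketch of one row of a data-extraction table.
# Field names mirror the items listed in the text; all values are hypothetical.
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class ExtractionRecord:
    authors_and_date: str
    study_type: str                    # e.g. "observational"
    qa_tool: str                       # name of the QA tool used
    number_of_items: Optional[int]     # None when not reported
    domains: List[str] = field(default_factory=list)
    is_specific: bool = False          # False means a generic tool
    time_to_complete: Optional[str] = None
    validated: Optional[bool] = None   # psychometric properties reported?
    validation_sample: Optional[str] = None
    developer: Optional[str] = None

# Hypothetical example row, for illustration only.
row = ExtractionRecord(
    authors_and_date="Example et al. (2020)",
    study_type="observational",
    qa_tool="Example checklist",
    number_of_items=10,
    domains=["selection", "outcome"],
)
print(row.qa_tool, row.is_specific, row.validated)
```

Using `None` for unreported fields keeps "not available" distinct from "no", which matters when later counting how many tools were validated.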

Data synthesis

Study data were extracted by three reviewers into a template. Findings for each study focusing on the QA tools used in SRs and MAs of RWD were then summarized by one reviewer, and the summaries discussed and modified by the research team as necessary, to generate an overall conclusion about the quality assessment (QA) tools used in SRs and MAs involving real-world data.

The search strategy retrieved 4,954 articles (PubMed = 4,369; AMED = 5; CINAHL = 182; MEDLINE = 398) from four databases (Fig. 1). After duplicate removal, the titles and abstracts of 4,153 publications were screened. From these, only 75 studies were included for full-text screening, and 16 articles met the inclusion criteria.

Flow diagram of publications included and excluded in the review
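The counts reported for the search and screening stages can be checked with simple arithmetic. In the sketch below, the per-database and per-stage figures come from the text; the numbers of records removed at each stage are derived by subtraction and assume that no other records entered or left the flow between stages.

```python
# Consistency check of the reported screening counts.
# Stage totals are from the text; the removal counts are derived, not reported.
retrieved = {"PubMed": 4369, "AMED": 5, "CINAHL": 182, "MEDLINE": 398}

total_retrieved = sum(retrieved.values())
screened = 4153      # titles and abstracts screened after duplicate removal
full_text = 75       # studies taken to full-text screening
included = 16        # articles meeting the inclusion criteria

duplicates_removed = total_retrieved - screened
excluded_at_screening = screened - full_text
excluded_at_full_text = full_text - included

print(total_retrieved, duplicates_removed,
      excluded_at_screening, excluded_at_full_text)
```

The per-database counts sum to the reported total of 4,954, so the figures in the text are internally consistent.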

Characteristics of included studies

The characteristics of the included studies are presented in Table 1. The included studies were published between 2016 and December 2021. Seven of the included studies were observational, and the remainder were of both interventional and observational types. The included studies applied various QA tools. The number of items used for QA within the included studies ranged from 4 to 22. In seven of the included studies, the QA tools comprised core domains containing different questions used for quality assessment. Only one [ 23 ] of the included studies utilised a very specific tool for methodological quality assessment. Three [ 24 – 26 ] of the included studies employed validated QA tools. These tools were validated using 39 non-randomised studies [ 24 ], 131 cohort studies [ 25 ], and 30 cost-effectiveness studies [ 26 ]. The QA tools used in the remaining thirteen included studies were not validated.

Characteristics of the tools used in the included studies

GRADE, The Grades of Recommendation, Assessment, Development, and Evaluation; ROBINS-I, The Risk of Bias in Non-randomized Studies of Interventions; N/A, Not Available; STROBE, The Strengthening the Reporting of Observational Studies in Epidemiology Statement; CASP, Critical Appraisal Skills Program

Ω These tools have not been independently published

Non-summative four-point system