PhD, Professor, and Postdoc Salaries in the United States

The United States is home to several of the world’s best universities, making it a top destination for international researchers. Here’s a breakdown of the most common American academic job titles and their average annual salaries. All salary figures in this article are in US dollars (USD) and are pre-tax.

PhD Student

A Master’s degree is not always required to do a PhD in the US; several top universities offer direct-entry PhD programs. An American PhD begins with two to three years of coursework that prepares students for qualifying exams. During this time, doctoral students develop their research interests and narrow down their thesis topic. They then write a thesis proposal, which must be approved before they can start their dissertation. Most programs also require PhD students to gain two to three years of teaching experience, either by leading their own class or by working as a teaching assistant for a professor. It takes an average of six years to earn a PhD in the US.

Unlike some European countries, the US has no mandated minimum salary or national salary scale for PhD students. PhD students earn between $15,000 and $30,000 a year, depending on their institution, field of study, and location. Stipends paid as fellowship awards are often not subject to tax withholding, while those paid as a salary (e.g., from a teaching position) are; in either case, money used for living expenses is generally taxable income. American PhD students are usually paid for only nine months of the year, but many programs offer summer funding opportunities. A PhD funding package also includes a full or partial tuition waiver.

Postdoc

After earning a PhD, many researchers go on to a postdoc, a continuation of their training that allows them to specialize further in a particular field and learn new techniques. Postdoc positions usually last two to three years, and it is not unusual to do more than one. There is no national limit on the number of years you can be a postdoc in the US, although individual institutions may cap the length of postdoc appointments. In 2023, the average postdoc salary in the US was $61,143 per year.

Lecturer

A lecturer holds a non-tenure-track teaching position. Lecturers often have a higher teaching load than tenure-track faculty and no research obligations. These positions are more common in the humanities and among foreign language instructors. Lecturers hold advanced degrees, though not always PhDs. According to the American Association of University Professors, the average salary for a full-time lecturer in 2021-2022 was $69,499.

Assistant Professor

This is the start of the tenure track. An assistant professor is responsible for teaching, research, and service to the institution (e.g., committee membership). Assistant professors typically teach two to four courses per semester while also supervising graduate students. They are also expected to be active researchers and to publish books, monographs, papers, and journal articles to meet their tenure requirements. The average salary for assistant professors in 2021-2022 was $85,063, according to the American Association of University Professors.

Associate Professor

An assistant professor who is granted tenure is typically promoted to associate professor. An associate professor often has a national reputation and is involved in service activities beyond their university. The average salary for associate professors in 2021-2022 was $97,734, according to the American Association of University Professors.

Professor

This is the final rank on the tenure track. Five to seven years after receiving tenure, associate professors go through another review. If successful, they are promoted to the rank of professor (sometimes called full professor). Professors usually have a record of accomplishment that establishes them as a national or international leader in their field. According to the American Association of University Professors, the average salary for professors in 2021-2022 was $143,823.


The Graduate School


PhD Salaries and Lifetime Earnings

PhDs employed across job sectors show impressive earning potential:

“…[T]here is strong evidence that advanced education levels continue to be associated with higher salaries. A study by the Georgetown Center on Education and the Workforce showed that across the fields examined, individuals with a graduate degree earned an average of 38.3% more than those with a bachelor’s degree in the same field. The expected lifetime earnings for someone without a high school degree is $973,000; with a high school diploma, $1.3 million; with a bachelor’s degree, $2.3 million; with a master’s degree, $2.7 million; and with a doctoral degree (excluding professional degrees), $3.3 million. Other data indicate that the overall unemployment rate for individuals who hold graduate degrees is far lower than for those who hold just an undergraduate degree.” - Pathways Through Graduate School and Into Careers, Council of Graduate Schools (CGS) and Educational Testing Service (ETS), pg. 3.
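The lifetime-earnings figures in the quotation can be compared directly. This short Python sketch is illustrative only and uses just the five dollar amounts quoted above; it computes how much higher expected lifetime earnings are at the master’s and doctoral levels than with a bachelor’s degree. This is a different measure from the 38.3% same-field comparison in the quotation, so the two numbers need not agree.

```python
# Expected lifetime earnings (USD) by education level, as quoted from the
# CGS/ETS report above. These are the only inputs used.
LIFETIME_EARNINGS = {
    "no high school diploma": 973_000,
    "high school diploma": 1_300_000,
    "bachelor's degree": 2_300_000,
    "master's degree": 2_700_000,
    "doctoral degree": 3_300_000,
}


def premium_over(baseline: str, level: str) -> float:
    """Percent by which expected lifetime earnings at `level` exceed `baseline`."""
    base = LIFETIME_EARNINGS[baseline]
    return 100.0 * (LIFETIME_EARNINGS[level] - base) / base


baseline = "bachelor's degree"
for level in ("master's degree", "doctoral degree"):
    # Prints the premium rounded to one decimal place.
    print(f"{level}: {premium_over(baseline, level):.1f}% above a {baseline}")
```

Running it shows a premium of about 17.4% for a master’s degree and about 43.5% for a doctoral degree, relative to a bachelor’s degree.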

Average salaries by educational level and degree (data from the US Census Bureau, American Community Survey 2009-2011, courtesy of the Georgetown University Center on Education and the Workforce):

The Bureau of Labor Statistics reports higher earnings and lower unemployment rates for doctoral degree holders than for those with master’s and bachelor’s degrees:

According to national studies, more education translates not only to higher earnings, but also higher levels of job success and job satisfaction:

“Educational attainment – the number of years a person spends in school – strongly predicts adult earnings, and also predicts health and civic engagement. Moreover, individuals with higher levels of education appear to gain more knowledge and skills on the job than do those with lower levels of education and they are able, to some extent, to transfer what they learn across occupations.” - Education for Life and Work (2012), National Research Council of the National Academies, pg. 66.


- © Michigan State University


How PhD Students Get Paid

The most common questions (and biggest misconceptions) about getting a PhD revolve around money. Maybe you’ve heard that PhD students get paid just to study, or maybe you’ve even heard that PhD students don’t get paid at all.

It makes sense — how you make money as a PhD student is different from most other career routes, and the process can be highly variable depending on your school, discipline and research interests.

So, let’s address the big question: do PhD students get paid? Most of the time, the answer is yes. PhD programs that don’t offer some form of compensation, like stipends, tuition remission or assistantships, are rare, but they do exist. On the other hand, some programs, like a PhD in Economics, are so competitive that unpaid programs are virtually unheard of.

To help you gain a better understanding of PhD funding and decide if getting a PhD is worth it for you, here are some of the most common examples of how PhD students are paid.

PhD Stipends

Most PhD programs expect students to study full-time. In exchange, they’re usually offered a stipend, a fixed sum of money paid as a salary, to cover housing and other living expenses. How much you get as a stipend depends on your university, but PhD stipends usually range from $20,000 to $30,000 per year.

In some cases, your stipend will be contingent upon an assistantship.

Assistantships

A PhD assistantship usually falls into one of two categories: research or teaching.

For research assistantships, faculty members generally decide how many assistants they need for their research and whom to select, and they fund those assistants through their own research grants from outside organizations.

A teaching assistantship is usually arranged through your university and involves teaching an undergraduate or other class. Assistantships let graduate students gain valuable experience leading a classroom and help offset the university’s stipend costs.

Fellowships

Fellowships provide financial support for PhD students, usually without the teaching or research requirement of an assistantship. The requirements and conditions vary depending on the discipline, but fellowships are generally merit based and can be highly competitive. Fellowships usually cover at least the cost of tuition, but some may even pay for scholarly extracurricular activities, like trips, projects or presentations.

Fellowships can be offered through your university or department as well as outside sources.

Part-time Employment

PhD students don’t usually hold additional jobs during their studies, but it is possible depending on your discipline and the rigor of your program. Flexible, low-demand work like freelance writing or tutoring can be a natural fit for many PhD students and may be easy to balance with your coursework.

All in all, it’s fair to say that though the form of payment may be unfamiliar, PhD students do in fact get paid. But keep in mind that while most PhD programs offer some kind of funding for students, it’s not guaranteed.

Want to know more about how to pay for a PhD? Explore our Guide to Choosing and Applying for PhD Programs.

Learn more about doctoral degrees at SMU, and how you can choose the right program and thrive in it, in our Guide to Getting a PhD.

Request more

Information.

Complete the form to reach out to us for more information

Published On

More articles, recommended articles for you, funding options for phd students.

Pursuing a PhD is a significant commitment of your finances and time. From tuition, living...

Do PhD Students Pay Tuition? Unpacking the Cost of a PhD

Choosing to pursue a PhD is a major milestone, but it comes with a host of concerns and questions....

Figuring Out How the Physical World Works: An Interview with Ph.D. Fellow, Rujeko Chinomona

From an early age Rujeko was fascinated by how the physical world worked. She began to find answers...

Browse articles by topic

Subscribe to.

Explore Jobs

- Jobs Near Me

- Remote Jobs

- Full Time Jobs

- Part Time Jobs

- Entry Level Jobs

- Work From Home Jobs

Find Specific Jobs

- $15 Per Hour Jobs

- $20 Per Hour Jobs

- Hiring Immediately Jobs

- High School Jobs

- H1b Visa Jobs

Explore Careers

- Business And Financial

- Architecture And Engineering

- Computer And Mathematical

Explore Professions

- What They Do

- Certifications

- Demographics

Best Companies

- Health Care

- Fortune 500

Explore Companies

- CEO And Executies

- Resume Builder

- Career Advice

- Explore Majors

- Questions And Answers

- Interview Questions

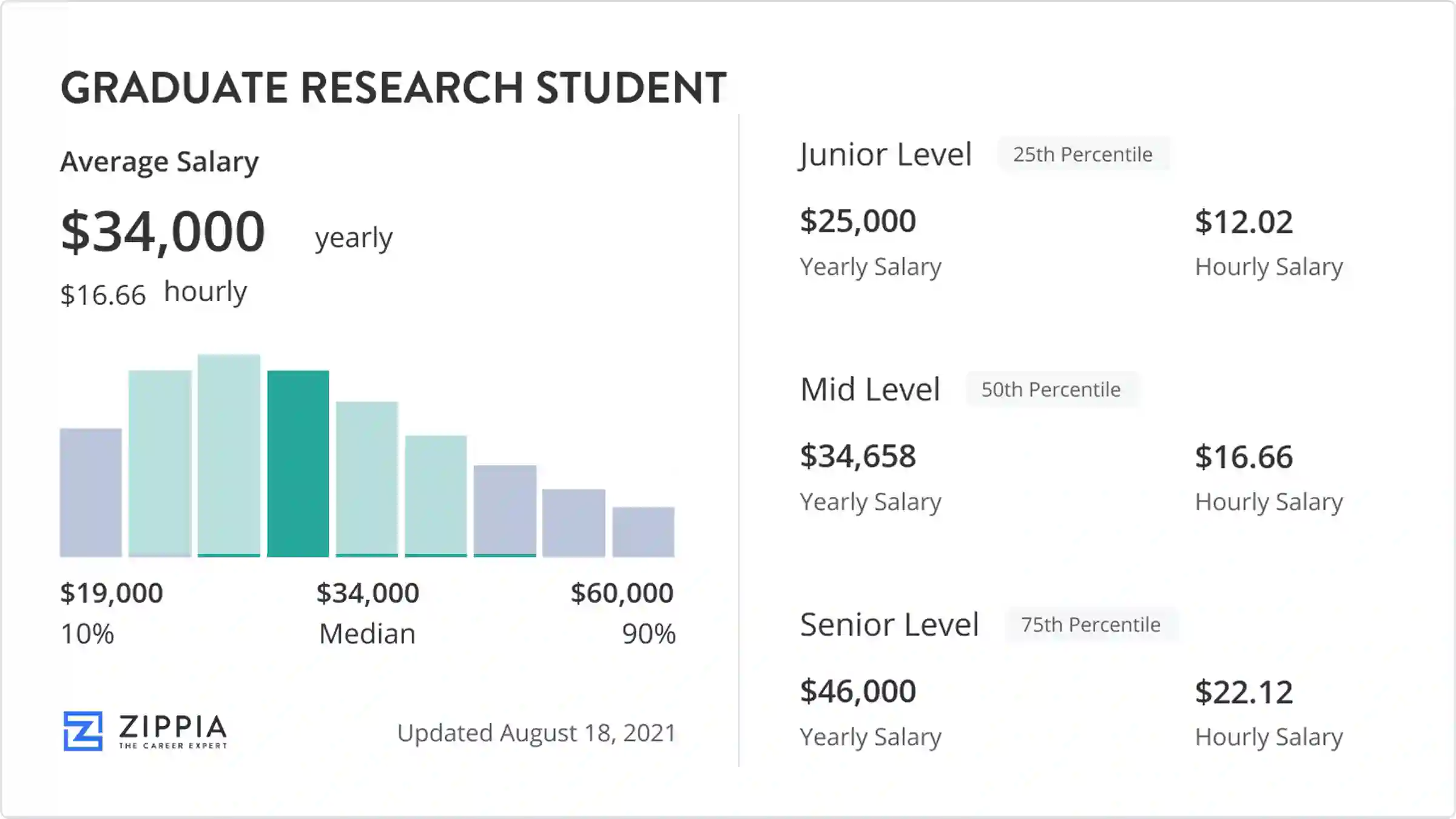

Graduate Research Student salary

How much does a graduate research student make?

The average graduate research student salary in the United States is $34,658. Graduate research student salaries typically range between $19,000 and $60,000 a year, and the average hourly rate is $16.66. Pay is affected by location, education, and experience, and graduate research students earn the highest average salary in Pennsylvania. According to Kenneth Klemow, Ph.D., Professor and Chair of Biology at Wilkes University, "I don't have sufficient familiarity with salary trends to give a good answer, though I know that individuals with data analysis skills command relatively high salaries."

Where can a Graduate Research Student earn more?


The highest-paying states for graduate research students are Pennsylvania, Massachusetts, and Maryland. The lowest-paying states are Georgia, Iowa, and Florida.


The highest-paying cities for graduate research students are Boston, MA; Edison, NJ; and Appleton, WI.

Graduate Research Student salary details

A graduate research student's salary ranges from $19,000 a year at the 10th percentile to $60,000 at the 90th percentile.


Highest-paying graduate research student jobs

The highest-paying job titles for graduate research students are research scientist, researcher, and assistant research scientist.


Which companies pay graduate research students the most?

Graduate research student salaries at Capgemini and ConocoPhillips are the highest according to our most recent salary estimates. In addition, average graduate research student salaries at companies like Google and Los Alamos National Laboratory are highly competitive.


Graduate research student salary trends

The average graduate research student salary has risen by $5,652 over the last ten years. In 2014, the average graduate research student earned $29,006 annually; today, they earn $34,658 a year. That works out to an increase of about 19.5% over the last decade.
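The salary figures above can be checked with a few lines of arithmetic. This Python sketch recomputes the ten-year change from the 2014 and current averages and converts the annual average into an hourly rate. The 2,080-hour work year (40 hours a week for 52 weeks) is an assumption, not a figure stated in the source, although it does reproduce the $16.66 hourly rate quoted earlier.

```python
# Average annual salaries (USD) for graduate research students, from the text above.
SALARY_2014 = 29_006
SALARY_NOW = 34_658

# Assumption: a full-time year of 40 hours per week for 52 weeks.
HOURS_PER_YEAR = 40 * 52


def percent_change(old: float, new: float) -> float:
    """Relative change from `old` to `new`, in percent."""
    return 100.0 * (new - old) / old


def hourly_rate(annual_salary: float, hours_per_year: int = HOURS_PER_YEAR) -> float:
    """Annual salary spread evenly over the assumed working year."""
    return annual_salary / hours_per_year


print(SALARY_NOW - SALARY_2014)                           # 5652
print(f"{percent_change(SALARY_2014, SALARY_NOW):.1f}%")  # 19.5%
print(f"${hourly_rate(SALARY_NOW):.2f} per hour")         # $16.66 per hour
```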


How Much Do Graduate Students Get Paid?


Graduate students who work as teaching assistants earn an average of $38,040 annually, according to 2021 data from the Bureau of Labor Statistics. But how much you get paid as a grad student can vary greatly.

Grad school compensation depends on your school’s policies and your role at the institution. For example, teaching assistants and research assistants may have different pay scales, as could first-year and fourth-year graduate students.


How graduate students get paid

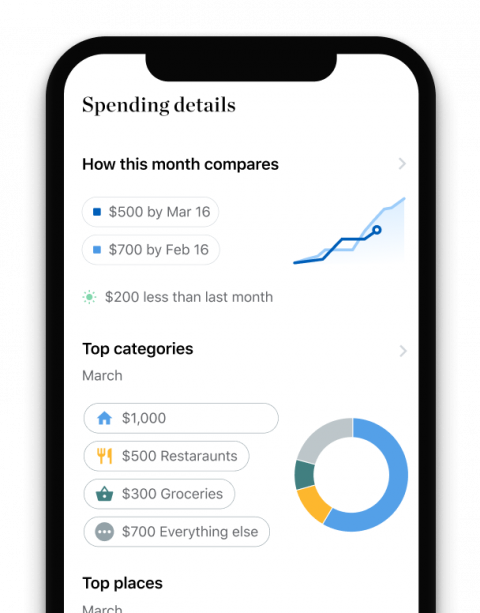

Colleges may pay graduate students who work at the school via a stipend or a salary. Generally, the key differences between these options are as follows:

Stipends are for students. You receive this funding as part of an assistantship or fellowship from the school. The money is meant to support your living expenses while you conduct research or pursue your other educational goals. Stipend amounts may be based on the length of the academic year, not the calendar year.

Salaries are for employees. The school has formally hired you as an employee to perform specific responsibilities, like leading a class, for instance. As a salaried worker, your wages may be a set amount or based on the hours you work. You may also receive employee benefits such as subsidized health care or workers’ compensation.

How much is a graduate student’s stipend?

Cornell University recently announced it would increase graduate student stipends by 8%, bringing the average annual assistantship stipends for Ithaca- and Cornell AgriTech-based students to $43,326.

But this is not the norm. Many graduate students are paid much less.

The Temple University Graduate Students' Association, for example, began negotiating with the university in January 2021 to raise the average graduate student stipend, which then stood at $19,500 a year.

Because funding can vary by school, it's best to research stipend information on your school’s website. This will likely include how much you’ll receive, as well as any factors that affect your pay rate. For example, the Stanford School of Education pays research assistants more once they’re officially doctoral candidates.

Living on graduate student payments

Working while in school can help cover some graduate program costs. But even with multiple jobs, you’ll likely need additional money to afford all your expenses.

Apply for scholarships and grants you may qualify for. Also, explore any other assistance your school offers. For example, Duke University offers up to $7,000 a semester to Ph.D. students who need child care.

After exhausting free aid and your stipend or salary, you may have to turn to graduate student loans to close any additional gaps in funding. For the 2020-2021 academic year, the average grad student graduated with $17,680 in federal graduate student loans, according to the College Board, a not-for-profit association of educational institutions.

Subsidized federal loans, for which the government covers the interest while you’re in school, aren’t available for graduate school. Unsubsidized loans are, however, and you don’t have to make payments on them while you’re enrolled at least half-time.

You can also take out up to your program’s cost of attendance — minus other aid you’ve already received — in graduate PLUS loans from the federal government or private graduate school loans .
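The borrowing ceiling described above is a simple subtraction. This Python sketch is illustrative only: the cost of attendance and aid amounts are hypothetical, and actual eligibility is certified by your school and lender, not computed this way.

```python
from typing import Sequence


def max_additional_borrowing(cost_of_attendance: float, other_aid: Sequence[float]) -> float:
    """Upper limit on graduate PLUS or private loans under the rule described above:
    cost of attendance minus aid already received, never below zero."""
    remaining = cost_of_attendance - sum(other_aid)
    return max(0.0, float(remaining))


# Hypothetical figures for illustration only.
cost_of_attendance = 60_000
other_aid = [
    30_000,  # e.g. a tuition waiver or fellowship
    5_000,   # e.g. a departmental scholarship
    20_500,  # e.g. federal unsubsidized loans
]
print(max_additional_borrowing(cost_of_attendance, other_aid))  # 4500.0
```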


Cornell University Graduate School

Stipend Rates

2024-25 Graduate Student Assistantship and Fellowship Stipend Rates

Effective August 21, 2024

[1] Weekly hours spent on summer appointments must comply with University Policy 1.3, and stipend rates must meet the Board of Trustees mandated minimum (nine-month) stipend rate, prorated for the number of weeks of the summer appointment. The length of the summer appointment (number of weeks) is determined by the Principal Investigator, department, unit, college, or other source of funding.

[2] The maximum academic-year stipend amount that a graduate student may receive when any portion of the stipend comes from any funds held at Cornell (university accounts, college accounts, department accounts, unit accounts, or Principal Investigator sponsored funds) is $52,026. Any amount above the basic stipend may come from the same funding source as the basic stipend (an “adjustment”) or from a different source (a “supplement”). The limit applies to support from any combination of fellowships or assistantships when part of the stipend is paid from funds held at Cornell. There is no restriction on summer stipends and fellowships.
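Footnotes [1] and [2] describe two checks that can be expressed in code. This Python sketch is a hypothetical illustration, not Cornell’s own tooling: it prorates a nine-month minimum stipend for a summer appointment and tests an academic-year package against the $52,026 cap. The $39,000 nine-month minimum is a placeholder (the actual rate table is not reproduced here), and linear proration by weeks over an assumed 39-week academic year is an assumption about how the proration works.

```python
from dataclasses import dataclass
from typing import Sequence

# Maximum academic-year stipend when any portion comes from funds held at Cornell (footnote [2]).
CORNELL_HELD_FUNDS_CAP = 52_026


def summer_minimum(nine_month_minimum: float, summer_weeks: int, academic_year_weeks: int) -> float:
    """Prorate the nine-month minimum stipend to a summer appointment.

    Assumption: proration is linear in weeks; the caller supplies the academic-year length.
    """
    if summer_weeks <= 0 or academic_year_weeks <= 0:
        raise ValueError("week counts must be positive")
    return nine_month_minimum * summer_weeks / academic_year_weeks


@dataclass(frozen=True)
class StipendSource:
    amount: float
    held_at_cornell: bool  # university, college, department, unit, or PI-sponsored funds


def within_academic_year_cap(sources: Sequence[StipendSource]) -> bool:
    """Check an academic-year package (basic stipend plus adjustments and supplements).

    Summer stipends should be left out, since footnote [2] does not restrict them.
    The cap applies only when at least one source is held at Cornell.
    """
    total = sum(s.amount for s in sources)
    if any(s.held_at_cornell for s in sources):
        return total <= CORNELL_HELD_FUNDS_CAP
    return True


# Hypothetical nine-month minimum and an assumed 39-week academic year.
print(summer_minimum(39_000, summer_weeks=10, academic_year_weeks=39))  # 10000.0

package = [
    StipendSource(40_000, held_at_cornell=False),  # e.g. an external fellowship
    StipendSource(15_000, held_at_cornell=True),   # e.g. a department supplement
]
print(within_academic_year_cap(package))  # False: $55,000 exceeds $52,026
```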

Prorated Stipends for Non-Standard Appointments

Minimum stipend rates for non-standard appointments classified as graduate assistantships (TA, GA, RA, or GRA) must be proportional to the board-approved stipend. Examples are provided in the table below.

Partial assistantships must include tuition proportional to the stipend. That is, if a student receives a partial TAship with 50% stipend for the semester, the hours must be limited to 7.5 or less per week and he or she must receive 50% tuition for that semester in addition to the stipend. Awards that do not provide tuition and stipend in amounts proportional to the hours expected of a regular assistant are not assistantships and should not be portrayed as such.
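The proportionality rule for partial assistantships can be sketched in Python. The 15-hour full-time week is inferred from the 50%/7.5-hour example rather than stated outright, and the full stipend and tuition amounts in the usage line are hypothetical.

```python
from dataclasses import dataclass

# Inferred from the policy example: a 50% appointment is capped at 7.5 hours/week,
# so a full assistantship is taken to correspond to 15 hours/week (assumption).
FULL_ASSISTANTSHIP_HOURS_PER_WEEK = 15.0


@dataclass(frozen=True)
class PartialAssistantship:
    fraction: float            # share of a full assistantship, e.g. 0.5
    stipend: float             # prorated stipend for the semester
    tuition: float             # prorated tuition for the semester
    max_hours_per_week: float  # prorated weekly hour limit


def prorate_assistantship(fraction: float, full_stipend: float, full_tuition: float) -> PartialAssistantship:
    """Scale stipend, tuition, and hours by the same fraction, as the policy requires."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return PartialAssistantship(
        fraction=fraction,
        stipend=full_stipend * fraction,
        tuition=full_tuition * fraction,
        max_hours_per_week=FULL_ASSISTANTSHIP_HOURS_PER_WEEK * fraction,
    )


# Hypothetical full-semester amounts for illustration only.
half_ta = prorate_assistantship(0.5, full_stipend=20_000, full_tuition=30_000)
print(half_ta)
# PartialAssistantship(fraction=0.5, stipend=10000.0, tuition=15000.0, max_hours_per_week=7.5)
```

Scaling all three quantities by one shared fraction is what keeps an award within the policy’s definition of an assistantship.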

Examples – Adjusted Stipend Rates for Non-Standard Appointments

Assistantships for Professional Degree Students

Students who are enrolled in professional degree programs are generally ineligible for assistantships outside of their graduate field of study, unless the director of graduate studies for the student’s program requests an exception based on the student gaining experience directly supporting the student’s ability to teach the subject matter of the profession. Requests for exceptions must be approved in advance by both the dean of the Graduate School and the dean of the college in which the professional degree program is housed. The college that administers the professional degree in which the student is enrolled is responsible for payment of the full tuition. Professional degree students may be appointed as graduate teaching/research specialists (GTRS) (see below). They may not accept an assistantship without:

- A signed letter from the director of graduate studies for the student’s program requesting an exception based on the student gaining experience directly supporting the student’s ability to teach the subject matter of the profession.

- A signed letter from the student’s college dean or dean’s designate indicating that the college will apply a tuition credit of at least $14,750 per semester.

- A signed letter from the Graduate School Dean or Associate Dean of Administration, approving the assistantship appointment.

Graduate Teaching/Research Specialists

Students in the professional degree programs may be appointed as graduate teaching/research specialists (GTRS). The GTRS is not an assistantship; GTRSs receive a stipend in proportion to the percent time of their appointment as compared to a full-time graduate assistantship but not tuition and health insurance. Hours are limited to no more than 10 per week. Before a program may begin using the GTRS title, approval must be given by the Graduate School.
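A GTRS appointment differs from a partial assistantship in that only the stipend scales with percent time. This Python sketch illustrates that rule under stated assumptions: the full-time assistantship stipend is a hypothetical input, and percent time is taken as given because the policy does not say how it is derived from weekly hours.

```python
from dataclasses import dataclass

GTRS_MAX_HOURS_PER_WEEK = 10  # hour limit stated in the GTRS policy


@dataclass(frozen=True)
class GTRSAppointment:
    stipend: float
    tuition: float = 0.0            # a GTRS appointment carries no tuition
    health_insurance: bool = False  # and no health insurance


def gtrs_appointment(percent_time: float, full_assistantship_stipend: float, hours_per_week: float) -> GTRSAppointment:
    """Stipend in proportion to percent time of a full-time graduate assistantship."""
    if hours_per_week > GTRS_MAX_HOURS_PER_WEEK:
        raise ValueError(f"GTRS hours are limited to {GTRS_MAX_HOURS_PER_WEEK} per week")
    if not 0 < percent_time <= 100:
        raise ValueError("percent_time must be in (0, 100]")
    return GTRSAppointment(stipend=full_assistantship_stipend * percent_time / 100)


# Hypothetical: a 50% GTRS appointment against a $40,000 full assistantship stipend.
print(gtrs_appointment(50, full_assistantship_stipend=40_000, hours_per_week=7.5))
# GTRSAppointment(stipend=20000.0, tuition=0.0, health_insurance=False)
```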

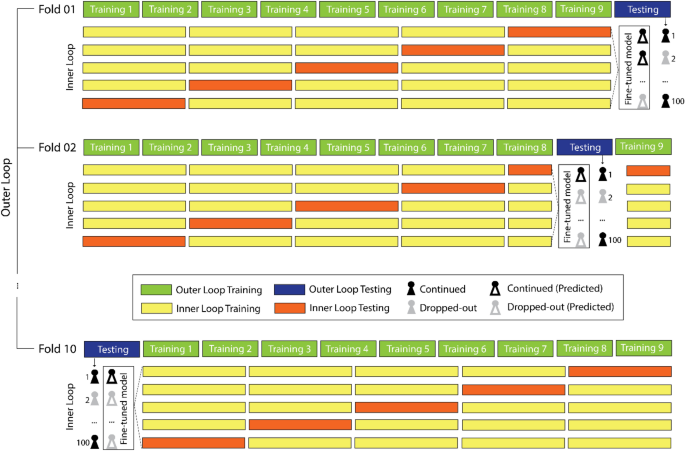

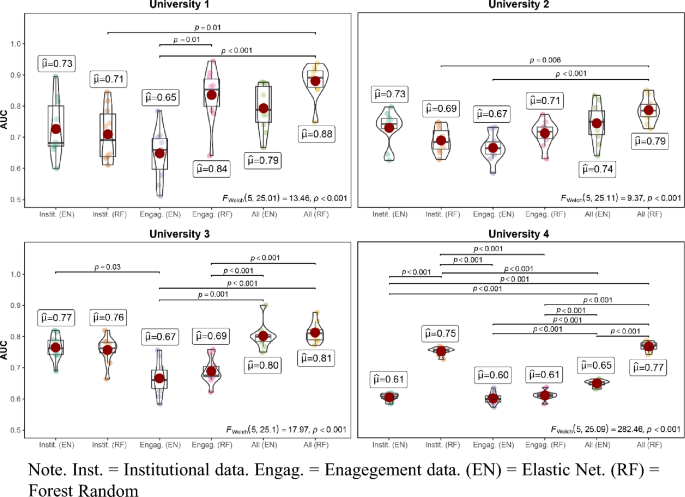

English PhD Stipends in the United States: Statistical Report

By Eric Weiskott

This report presents the results of research into stipends for PhD candidates in English conducted between summer 2021 and spring 2022. The report surveys the top 135 universities in the U.S. News and World Report 2022 “Best National University Ranking,” plus the Graduate Center, City University of New York. Of these universities, 80 offer a PhD in English and guarantee full funding for five or more years. Graduate administrators at three universities declined to grant permission to have current or historical stipend amounts published, citing legal concerns (appendix A). The remaining 77 institutions form the data set. Stipend amounts are expressed in absolute dollars (table 1), in cost-of-living-adjusted dollars (table 2), relative to endowment size for universities with institutional endowments of $3.5 billion or less (figure 1), and broken down by type of university (public or private) (tables 3a–3b) and by region (tables 4a–4d).

The stipend data were gathered by consulting program websites and, if no URL is cited, by canvassing departmental faculty and staff members responsible for administering English PhD programs, often holding the title “Director of Graduate Studies” (DGS).¹ In some cases, the standard stipend must be expressed as a dollar range rather than a fixed amount, for reasons specified in the notes.

All figures given in this report are gross pay, reflecting neither tax withholding schemes nor any mandatory student fees. All figures are rounded to the nearest dollar. All figures reflect the base or standard stipend offer, not including supplemental funding offered on a competitive basis at the department, college, or university level. All figures represent twelve-month pay, regardless of whether the program distinguishes between academic-year stipend and any summer stipend, provided both are guaranteed. While every effort was made to procure academic year 2021–22 or 2022–23 figures, in a few cases this was not possible. A limitation of the data therefore is that they mix current and recent stipend amounts. For some programs, the standard stipend increases or decreases during the course of the degree. Where the changes in pay occur in specific years, they are accordingly factored into the numbers given in the report, which represent a five-year average in these instances. However, where the changes depend on the unpredictable completion of program requirements, or reflect differential pay based on past degrees earned or not earned at the time of matriculation, I express the standard stipend as a range. Because programs with a stipend range are ranked and averaged according to the average of the low and high ends of the range, the report may slightly overstate or understate the total value of the stipend over the length of the degree, depending on how candidates tend to move through those programs or on the academic background of the candidates who matriculate into them.
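The over- or understatement described above can be sketched with hypothetical numbers. The snippet assumes a program that pays a lower rate until candidates reach candidacy and a higher rate afterwards; the report ranks such a program at the midpoint of its range, while the pay a candidate actually averages depends on when they advance. The dollar amounts and function names are illustrative only.

```python
def midpoint(low: float, high: float) -> float:
    """Value used to rank and average a program whose stipend is given as a range."""
    return (low + high) / 2


def realized_five_year_average(low: float, high: float, years_at_low: int) -> float:
    """Average pay over five years if a candidate earns `low` until a requirement is met."""
    if not 0 <= years_at_low <= 5:
        raise ValueError("years_at_low must be between 0 and 5")
    return (low * years_at_low + high * (5 - years_at_low)) / 5


# Hypothetical range: $22,000 before candidacy, $26,000 after.
low, high = 22_000, 26_000
print("ranked at", midpoint(low, high))
for years in (2, 3):
    print(f"advance after year {years}:", realized_five_year_average(low, high, years))
```

Under these assumptions the midpoint ($24,000) understates the five-year value for a candidate who advances after two years ($24,400) and overstates it for one who advances after three ($23,600).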

Cost-of-living comparisons were made using Nerdwallet’s cost-of-living calculator (“Cost”), checked against the standardized cost-of-living rating on BestPlaces (“2022 Cost”). Nerdwallet’s calculator has the advantage of splitting up geography into medium-sized benchmark areas, often roughly corresponding to a commutable radius around a town or city, as opposed to the jurisdiction-by-jurisdiction comparisons of BestPlaces and other cost-of-living calculators, which would be more pertinent to real estate purchases. However, use of the Nerdwallet tool entails limitations, occasionally acute. Some university campuses are located closer to available Nerdwallet benchmarks than others. Certain rural and suburban campuses are located in jurisdictions with somewhat higher or lower cost of living than the closest available Nerdwallet benchmark, often a city. These limitations were corrected for in the more severe cases and to the extent possible by averaging multiple benchmarks selected for geographic proximity and comparable cost of living (as given on BestPlaces) to the location of the campus, as noted in each case in table 2. The possibility of PhD candidates’ commuting to campus from a distance greater than the radius of a Nerdwallet benchmark, not to mention the possibility of their living farther afield when teaching remotely in the COVID-19 pandemic or dissertating, further complicates a direct benchmark-to-benchmark cost-of-living conversion.

It was particularly difficult to determine the cost of living for one campus, Rutgers University, New Brunswick. This is because Rutgers is within commuting distance of New York, the highest cost-of-living metropolitan area in the United States, and because the Nerdwallet benchmark to which the city of New Brunswick belongs, “Middlesex-Monmouth,” covers two New Jersey counties that include many towns as distant from New Brunswick to the south and west as Brooklyn and Manhattan are to the north and east. That is, New Brunswick is disadvantageously situated in its Nerdwallet benchmark for the purposes of stating an average cost of living that captures patterns of commuting to and from campus: commutes from south and west of campus are included, while commutes from north and east are excluded. In the Midwest and West, where Nerdwallet tends to have fewer benchmark areas, suburban and smaller urban campuses within commuting distance of a large city often are benchmarked to that city (for example, the University of Colorado, Boulder, to the Denver benchmark and the University of Michigan, Ann Arbor, to the Detroit benchmark). It would therefore seem inconsistent to fail to factor New York into the cost-of-living-adjusted value of a stipend paid by Rutgers University, New Brunswick, particularly as the difference between the cost of living in New York and New Brunswick is so much greater than the difference between the cost of living in Detroit and Ann Arbor, or between Denver and Boulder. My solution, which averages the mean of the Nerdwallet results for Brooklyn, Manhattan, and Queens with the results for Middlesex-Monmouth, is an admittedly provisional one that risks overstating the cost of living of pursuing a PhD in English at Rutgers, which, after all, is not located in Brooklyn, Manhattan, or Queens.
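The "average of the average" can be made explicit in a short sketch. The index values below are hypothetical placeholders, not Nerdwallet output; the point is the weighting the two-step averaging implies, with Middlesex-Monmouth counting for half of the composite and each borough for one sixth.

```python
from statistics import mean

# Hypothetical cost-of-living index values (higher = more expensive); not Nerdwallet figures.
brooklyn, manhattan, queens = 180.0, 240.0, 150.0
middlesex_monmouth = 120.0

nyc_triborough = mean([brooklyn, manhattan, queens])            # step 1: average the three boroughs
rutgers_composite = mean([nyc_triborough, middlesex_monmouth])  # step 2: average with the NJ benchmark

# Equivalent weighted form: the NJ benchmark counts 1/2, each borough 1/6.
weighted = middlesex_monmouth / 2 + (brooklyn + manhattan + queens) / 6
print(nyc_triborough, rutgers_composite, weighted)
```

The weighted form shows the design choice: New Brunswick's own benchmark contributes half of the composite, while no single borough contributes more than a sixth.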
In a private communication, the DGS reports that a little over one quarter of current Rutgers English graduate candidates reside in Brooklyn, Manhattan, Queens, or adjacent Jersey City, NJ. I consider this proportion large enough to confirm my initial expectation that the very high cost of living in New York should factor into an estimate of the cost of living associated with a Rutgers English PhD in some way. I have not systematically polled DGSs about where candidates live. If nothing else, I hope the difficult case of Rutgers illuminates the limitations of representing cost of living with a single standardized number in an age of urban agglomeration, rapid transport, and a prevailing tolerance for work commutes of up to one hour or so.

Endowment figures (figure 1) were drawn from the fiscal year 2020 statistical report on North American university endowments published by the National Association of College and University Business Officers (U.S. and Canadian Institutions).

This stipend report is not a substitute for a holistic assessment of the strengths and weaknesses of an individual PhD program and is not intended to guide prospective PhD applicants toward or away from any given program. The report does not take account of such significant variables as relative strength of the program in the applicant’s area of specialty; any competitive fellowships and stipends available; exam requirements burden; teaching and service expectations; cultural life and nearby off-campus intellectual institutions; the number of years of full funding guaranteed past five, if any; or record of placing graduates into full-time academic employment. The report isolates the stipend as one important factor among several shaping the experience, opportunity cost, and financial, intellectual, and professional benefit of pursuing graduate study in English. Graduate candidates are workers as well as students, and the stipend is their salary. It is hoped that by understanding these data, program administrators, graduate administrators, department chairs, current PhDs, and prospective PhD applicants can form an evidence-based impression of what the English PhD pays around the country and in divergent institutional and regional settings.

For completeness, appendixes list the universities among the 135 that either offer the PhD in English but do not guarantee full funding for five or more years (appendix B) or do not offer the PhD in English (appendix C).

Note

1 I thank Anna Chang for assistance gathering updated stipend amounts at a late stage of the project.

Works Cited

“Best National University Rankings.” U.S. News and World Report, 2022, www.usnews.com/best-colleges/rankings/national-universities.

“Cost of Living Calculator.” Nerdwallet, 2022, www.nerdwallet.com/cost-of-living-calculator.

“2022 Cost of Living Calculator.” BestPlaces, 2022, www.bestplaces.net/cost-of-living/.

U.S. and Canadian Institutions Listed by Fiscal Year (FY) 2020 Endowment Market Value and Change in Endowment Market Value from FY19 to FY20. National Association of College and University Business Officers, 2021, www.nacubo.org/-/media/Documents/Research/2020-NTSE-Public-Tables–Endowment-Market-Values–FINAL-FEBRUARY-19-2021.ashx.

Table 1. English PhD Standard Stipend Nationwide Comparison

Table 1 Average: $25,006

Table 1 Median: $25,000

Table 1 Notes

1 The figure reflects a stipend of $30,800 for the first year and $36,570 thereafter, averaged over five years.

2 gfs.stanford.edu/salary/salary22/tal_all.pdf. I obtained this figure by tripling the standard arts and sciences per-quarter rate to reflect Stanford University’s three-quarter, nine-month academic year.

3 The figure reflects an academic-year stipend of $27,605 ($3,067 per month), plus a summer stipend that is the average of the 2020–21 summer stipend of $5,300 ($1,767 per month) and three months of the 2021–22 academic-year rate—namely, $7,251 ($2,417 per month). Brown University is phasing in a summer stipend to match the academic-year stipend over the next year.

4 www.tgs.northwestern.edu/funding/index.html.

5 gsas.yale.edu/resources-students/finances-fellowships/stipend-payments#:~:text=students%20receive%20a%20semi%2Dmonthly,2022%20academic%20year%20is%20%2433%2C600.

6 The figure reflects an academic-year stipend of $28,654, plus a summer stipend of $6,037 for the first four years, averaged over five years.

7 today.duke.edu/2019/04/duke-makes-12-month-funding-commitment-phd-students#:~:text=students%20in%20their%20guaranteed%20funding,54%20programs%20across%20the%20university.

8 english.rutgers.edu/images/5_10_2021_-_Fall_2022_grad_website_updated_des_of_funding_for_prospectives.pdf. The figure reflects an academic-year stipend of $25,000 for the first year and $29,426 thereafter, plus a summer stipend of $5,000 the first summer and $2,500 each of the next two summers, averaged over five years.

9 The figure is anticipated for 2022–23 following an admissions pause in 2021–22.

10 The low figure is a teaching assistant offer; the high figure is a university fellowship. While funding in excess of the rate for teaching assistants is competitive, it is also de facto guaranteed: for 2021–22, all eight offers of admission exceeded the rate for teaching assistants.

11 policy.wisc.edu/library/UW-1238. The figure reflects a stipend of $25,000 with $1,000 in summer funding in year 3 and $4,500 in summer funding in years 4-5, averaged over five years.

12 The figures reflect a stipend range of $18,240–$25,000 for the first year and $23,835 thereafter, averaged over five years.

13 The figure reflects a stipend of $25,166 for the first year, $24,166 for the second through fourth years, and $19,000 for the fifth year, averaged over five years.

14 grad.ucdavis.edu/sites/default/files/upload/files/facstaff/salary_21-22_october_2021.pdf . I obtained this figure by halving the standard teaching assistant annual rate to reflect the rule that PhD candidates at the University of California, Davis, may work no more than half time.

15 Lehigh University guarantees full funding for five years for candidates classified as full-time. This includes all candidates except a few who are nontraditional students and bring an outside salary or other outside funding to the degree.

16 miamioh.edu/cas/academics/departments/english/admission/graduate-admission/graduate-funding/teaching-positions/index.html.

17 The figures reflect an academic-year stipend of $17,100, plus a summer stipend range of $2,500–$5,000.

18 The figures reflect a stipend of $23,688 for the first year and a range of $19,480–$20,250 thereafter, averaged over five years.

19 hr.uic.edu/hr-staff-managers/compensation/minima-for-graduate-appointments/.

20 The University of Utah guarantees full funding for five years for those entering with a BA but four years for those entering with an MA.

21 Among the doctoral degrees offered by the English department at Purdue University, West Lafayette, the one in question is the PhD in literature, theory, and cultural studies.

22 The University of Florida guarantees full funding for six years for those entering with a BA but four years for those entering with an MA.

23 These figures reflect the range between FTE .40 at level I (BA holder, precandidacy) and FTE .49 at level II (MA holder, advanced to candidacy). See https://graduatestudies.uoregon.edu/funding/ge/salary-benefits for a schedule of salaries.
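Several of the notes above describe pay schedules that change from year to year and are then "averaged over five years." The sketch below recomputes those averages from the figures stated in notes 1, 6, 8, 11, 12, 13, 17, and 18, reading each note as a simple arithmetic mean of annual pay. The rounded outputs are recomputations under that reading, not values copied from table 1, which is not reproduced here.

```python
def five_year_average(yearly: list) -> float:
    """Simple mean of five annual pay figures (USD)."""
    if len(yearly) != 5:
        raise ValueError("expected one figure per year for five years")
    return sum(yearly) / 5


# Note 1: $30,800 in year 1, then $36,570.
note1 = five_year_average([30_800] + [36_570] * 4)
# Note 6: $28,654 academic-year stipend every year, plus $6,037 summer in years 1-4.
note6 = five_year_average([28_654 + 6_037] * 4 + [28_654])
# Note 8: $25,000 then $29,426 academic year; summers of $5,000, $2,500, $2,500.
note8 = five_year_average([25_000 + 5_000, 29_426 + 2_500, 29_426 + 2_500, 29_426, 29_426])
# Note 11: $25,000 every year; $1,000 summer in year 3, $4,500 in years 4-5.
note11 = five_year_average([25_000, 25_000, 25_000 + 1_000, 25_000 + 4_500, 25_000 + 4_500])
# Note 12: first-year range $18,240-$25,000, then $23,835.
note12 = tuple(five_year_average([first] + [23_835] * 4) for first in (18_240, 25_000))
# Note 13: $25,166, then $24,166 in years 2-4, then $19,000.
note13 = five_year_average([25_166] + [24_166] * 3 + [19_000])
# Note 17: $17,100 academic year plus a $2,500-$5,000 summer range (no multi-year averaging).
note17 = tuple(17_100 + summer for summer in (2_500, 5_000))
# Note 18: $23,688 in year 1, then a $19,480-$20,250 range.
note18 = tuple(five_year_average([23_688] + [later] * 4) for later in (19_480, 20_250))

print(round(note1), round(note6), round(note8), round(note11), round(note13))
print(note12, note17, tuple(round(x) for x in note18))
```

None of these averages lands on a half dollar, so Python's `round` gives the same result as ordinary rounding to the nearest dollar here.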

Table 2. English PhD Standard Stipend Nationwide Comparison, Adjusted for Cost of Living (Expressed in Boston-Area Dollars)

Table 2 Average: $33,060

Table 2 Median: $31,718

Table 2 Notes

1 I used the benchmark for Philadelphia, which, although geographically distant from State College / University Park, has a more comparable cost of living than other benchmarks for Pennsylvania.

2 For programs located in New York City—in this listing, Columbia University; New York University; Graduate Center, City University of New York; and Fordham University—I averaged the results for Brooklyn, Manhattan, and Queens.

3 I averaged the results for Austin and Houston.

4 I averaged the New York City triborough average with the results for Middlesex-Monmouth, NJ. This reflects Rutgers’s liminal geographic location: it is much closer to New York City, without being in the city, than any other campus on this list, and a substantial minority of Rutgers PhD candidates commute to campus from the city.

5 I averaged the results for San Francisco and Oakland.

6 I averaged the results for Bakersfield and San Diego. While Los Angeles is closer geographically, it has a much higher cost of living than Riverside and is just outside of convenient commuting range.

7 I averaged the results for Boston and Pittsfield.

8 I averaged the results for Queens and Albany, a better approximation of the cost of living on eastern Long Island than averaging the cost of living in Brooklyn, Manhattan, and Queens.

9 I averaged the results for Los Angeles and San Francisco.

10 I averaged the results for Washington, DC, and Bethesda-Gaithersburg-Frederick, MD.
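Table 2 restates each stipend in Boston-area dollars, and several notes average more than one benchmark. A minimal sketch of that kind of conversion follows, assuming the calculator's output reduces to a ratio of cost-of-living index values and reading "averaged the results" as averaging the converted amounts. The index numbers are hypothetical, not Nerdwallet figures; only the Austin/Houston pairing mirrors note 3.

```python
from statistics import mean

# Hypothetical cost-of-living index values (Boston = 100); not real Nerdwallet figures.
INDEX = {
    "Boston": 100.0,
    "Austin": 80.0,
    "Houston": 70.0,
}


def in_boston_dollars(stipend: float, *areas: str) -> float:
    """Convert a stipend via each benchmark area, then average the converted results."""
    return mean(stipend * INDEX["Boston"] / INDEX[area] for area in areas)


print(round(in_boston_dollars(25_000, "Austin", "Houston")))
```

Averaging converted amounts gives a slightly different result than converting once against an averaged index, so the reading of "averaged the results" matters at the margin.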

Table 3a. English PhD Standard Stipend Nationwide Comparison: Private Universities

Table 3a Average: $28,653

Table 3a Median: $28,967

Table 3b. English PhD Standard Stipend Nationwide Comparison: Public Universities

Table 3b Average: $22,230

Table 3b Median: $21,500

Table 4a. English PhD Standard Stipend Comparison: West and Southwest

Table 4a Average: $25,661

Table 4a Median: $25,500

Table 4b. English PhD Standard Stipend Comparison: Midwest

Table 4b Average: $23,234

Table 4b Median: $21,966

Table 4c. English PhD Standard Stipend Comparison: Northeast

Table 4c Average: $26,741

Table 4c Median: $26,235

Table 4d. English PhD Standard Stipend Comparison: South

Table 4d Average: $22,438

Table 4d Median: $20,881

Appendix A. English PhD Programs Declining to Have Stipend Data Published

Appendix B. English PhD Programs Not Guaranteeing Full Funding for Five or More Years

Appendix B Notes

1 The department will “attempt to fully fund all students admitted to the PhD program for five years” (english.columbian.gwu.edu/graduate-admissions-aid#phd).

2 Guarantees full funding for four years.

3 “All admitted students receive a multi-year funding package” (www.humanities.uci.edu/english/graduate/index.php).

4 Guarantees full funding for four years.

Appendix C. Universities Not Offering the PhD in English

Appendix C Notes

* Offers a terminal MA in English.

1 Offers a terminal MA in literature, culture, and technology.

2 Offers a terminal MA in English literature and publishing.

3 Offers a PhD in rhetoric and professional communication.

4 Offers a PhD in communication, rhetoric, and digital media.

5 Offers a PhD in communication and rhetoric.

6 Offers a PhD in literature. The University of California, Davis, and the University of Kansas also offer a PhD in literature, yet, unlike the University of California, San Diego, or the University of California, Santa Cruz, the Davis and Kansas degrees are housed in English departments and retain an explicitly anglophone focus.

7 Offers a PhD in rhetoric and writing.

*Campus-specific endowment information is not available in the National Association of College and University Business Officers report.

Eric Weiskott is professor of English at Boston College, where he directs the English PhD program. His most recent book is Meter and Modernity in English Verse, 1350–1650 (U of Pennsylvania P, 2021).

PhD student salary – How much cash will you get?

When considering starting a PhD you need to think about how much you will get as a PhD student at a minimum. Ideally, you would be fully funded so that you could focus 100% on your studies.

A PhD student salary ranges from US$17,000 a year (New Zealand) all the way up to US$104,000 a year (Austria). The amount you need depends significantly on the living costs of a particular country. Places like the Netherlands, Finland, Denmark and Sweden have the highest living cost ratio.

Generally speaking, you can expect to receive a modest stipend for living expenses as well as tuition assistance.

In 2007 my PhD stipend was AU$20,000 (approximately US$13,000). At the time, this was enough for me to live comfortably and save a little bit of money as well.

As the cost of living increases, PhD student salaries are being stretched to their limits.

Here are data for a range of countries, ordered by living ratio: the higher the living-to-cost ratio, the further the stipend goes. Data were collected from Glassdoor.com and Numbeo.

In the US, most PhD students make between $20,000 and $45,000 per year. Some more prestigious programs may offer higher salaries.

Salaries vary by institution and field of study, so you should check with your school’s department to find out what kind of compensation they offer.

Many universities also provide funding opportunities, such as research grants or teaching assistantships, that can help supplement your income. While you may not get rich on a PhD student salary, it is possible to earn enough to cover basic needs while continuing your studies.

What Are PhD Student Salaries?

PhD students don’t necessarily get “salaries”.

Full-time doctoral students are typically paid a stipend which is usually a fixed amount that covers living expenses as well as tuition.

Other forms of financial support may include fellowships, grants and teaching or research assistantships.

In addition to monetary compensation, PhD students may also receive health insurance and other benefits such as free housing or childcare services. Many universities also offer career counselling services for their PhD students in order to help them find jobs after graduation.

Ultimately, PhD student salaries can vary greatly and it’s important to consider all factors when evaluating PhD offers.

Countries offering the highest PhD stipends in the world

Some countries are better at funding PhD students than others. Check out my YouTube video, which goes through the countries with the highest PhD stipends and how you can boost yours.

Here is a quick rundown of other benefits if you are considering doing a PhD abroad.

Netherlands

As an international student, you may be considering studying for a PhD in the Netherlands. The Netherlands is home to some of the top universities in Europe and offers a wide range of PhD programs. In addition, the Dutch government offers a number of scholarships and grants for international students.

I’ve done some research and found that the average salary for a PhD student in the Netherlands is around US$74,163 per year. This figure is before any additional income from grants or scholarships. So, if you’re planning on studying for a PhD in the Netherlands, it’s important to bear in mind that you’ll need to budget for living costs on top of your tuition.

Switzerland

Every year, the Swiss Confederation and Swiss National Science Foundation award scholarships to international postgraduate researchers who desire to pursue their PhD in Switzerland. It’s home to some of our planet’s most stunning landscapes and among its brightest minds.

Switzerland is known for its degrees in business, is home to some of the best institutes of technology, and is a world leader in finance and banking.

Sweden

Sweden is a well-developed and prosperous country with a strong tradition of academic excellence.

Swedish universities are consistently ranked highly in international rankings, making it an attractive destination for students from all over the world.

PhD students in Sweden can expect to receive a competitive stipend to help cover living costs during their studies: about US$42,618 per year, according to my research.

In addition, there are a number of scholarships and grants available to help cover the costs of tuition and other expenses.

Denmark

Denmark is one of the top countries in the world for research and development, making it an attractive prospect for PhD students. The country offers generous stipends to PhD students, with no additional fees for being a student. The average PhD stipend in Denmark is around US$53,436 per year.

Norway

Norway is one of the countries offering a high PhD stipend. The average PhD stipend in Norway is around US$50,268 per year. PhD students in Norway also benefit from a high quality of life, as the country is regularly ranked as one of the best places to live in the world.

If you are considering pursuing a PhD, Norway should definitely be on your list of potential countries to study in.

Things to consider for PhD stipends

Before you settle on your PhD there are a few things to consider about your stipend.

Industry top-ups can significantly increase your earning potential as a PhD student. Looking at the living costs in a particular country, as well as the specific terms and conditions of your PhD stipend, will help ensure you do not end up being shortchanged.

Industry Top-ups

One of the best ways that I have seen PhD students earn more money and raise their minimum salary is by looking for industry supported PhD positions and top ups.

For example, while I was on AU$20,000 a year, one of my colleagues in the department was on AU$60,000 a year and was guaranteed a job after their PhD. They had a top up scholarship from an industry partner sponsoring their battery research.

Looking for these opportunities may help you earn significantly more money during your PhD.

Living costs

Quite frankly, PhD living costs vary dramatically from country to country and city to city. European countries may have a relatively high PhD stipend but the living costs are also higher.

The best way to determine the buying power of your PhD scholarship is to consider it in terms of living costs, using a living cost index.
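The article does not spell out how its "living ratio" is computed, so the sketch below simply assumes it is the stipend divided by a cost-of-living index. The countries, stipends, and index values are hypothetical and exist only to show how the ranking can differ from a ranking by raw stipend.

```python
# Hypothetical inputs: annual stipend in USD and a cost-of-living index
# (100 = reference city). Neither the figures nor the formula come from the article.
stipends = {"Country A": 50_000, "Country B": 30_000, "Country C": 40_000}
col_index = {"Country A": 80.0, "Country B": 75.0, "Country C": 50.0}


def living_ratio(stipend: float, index: float) -> float:
    """Assumed ratio: stipend per index point; higher means the stipend goes further."""
    return stipend / index


ranked = sorted(stipends, key=lambda c: living_ratio(stipends[c], col_index[c]), reverse=True)
for country in ranked:
    print(country, round(living_ratio(stipends[country], col_index[country])))
```

Under these assumptions Country C pays less than Country A in absolute terms but ranks first once living costs are factored in.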

Here are the best countries to get a PhD stipend relative to the cost of living. The higher the living ratio the better.

You can see that Austria tops this list and many of the Scandinavian countries are also high on it. Places like Ireland and New Zealand are among the worst places to do your PhD if you want your stipend to go a long way.

Length of the PhD

In countries like the United States of America, a PhD typically takes 5 to 7 years. This significantly increases the time you spend in university and, therefore, the time during which your earning potential is limited.

I chose to do my PhD in Australia because it would only take me three years as an international student. Choosing a PhD with a shorter timescale from the UK, Australia, New Zealand or European countries may be best for you.

Terms and conditions

Lastly, it is important to scrutinise the terms and conditions of your PhD stipend.

Some stipends do not allow students to take a second job, which significantly limits their earning potential. Other places do not put any restrictions on their PhD students, even those with a full scholarship.

If you want to know more about earning more money during your PhD check out the two articles below.

- The best PhD student part-time jobs [Full guide]

- Is it possible to earn a PhD while working? The brutal truth

How to Get a PhD Stipend

To get a stipend, you will need to apply for funding through the university or other organizations offering scholarships and grants.

Be sure to carefully read through all requirements of the application process and submit all necessary documents, such as transcripts, essays, recommendation letters and financial aid forms.

You may also need to show proof of academic excellence, such as high grades or awards. For example, I was required to achieve a first-class master’s before being able to access any funding from a foreign university.

Once accepted, you will usually receive a monthly payment from the organization as well as tuition assistance. Additionally, many universities offer research assistantships which provide students with an opportunity to gain hands-on experience in their field while earning money at the same time.

With dedication and hard work, obtaining a PhD stipend can help reduce some of the financial burden associated with higher education.

Wrapping up

This article has covered what you need to know about PhD student salaries and given you some real-world numbers on what you can expect in different countries.

The most important figure is the living cost ratio: choosing with it in mind helps ensure that your PhD stipend goes as far as possible and is not eaten up quickly by rent, food, and other basic necessities.

It is possible for PhD students to not only live comfortably but also put some money aside if you are very careful about choosing a PhD with a full stipend and looking for other opportunities to top up the money with industry partnerships and other grants.

Dr Andrew Stapleton has a Masters and PhD in Chemistry from the UK and Australia. He has many years of research experience and has worked as a Postdoctoral Fellow and Associate at a number of universities. Although he had secured funding for his own research, he left academia to help others through his YouTube channel, which is all about the inner workings of academia and how to make it work for you.

2024 © Academia Insider

PhD Stipend in USA: How to Find a Job After PhD in USA?

A PhD is considered one of the most challenging degrees around the world, but it is equally rewarding. It takes about 4 to 6 years to complete a PhD in the USA. One of the best parts about doing a PhD is that you get paid for it. If you are interested in the academic field, a PhD can give your career a boost and raise your salary by almost 25% compared with a master’s degree. Not only will you be entitled to a PhD stipend in the USA of around $15,000-30,000 per year, but you can also expect a lucrative salary after a PhD in the USA of between $60,000 and $100,000 and above.

You have come to the right place to know about PhD stipend in USA. Here is a complete guide to provide you with details of PhD stipend in USA for international students, average salary after PhD in USA and answers to all your commonly asked questions about job after PhD in USA!

PhD Stipend in USA for International Students

Let us kick start the discussion on job and salary after PhD in USA by answering one of the most common questions among international students: what is the average stipend for PhD students in USA?

Take note of the following details regarding PhD stipend in USA:

- Much as employees receive salaries, PhD students are paid stipends: funding from the institution to help them meet their living expenses while performing research.

- Note that the PhD student stipend in the USA is usually paid for the months of the academic year, typically 9 months, rather than the entire calendar year.

- You can expect an average PhD stipend in USA between $15,000-30,000 per year.

- PhD stipends in the USA for international students vary depending on the institution, your field of specialization, and location.

- Unlike in some European countries, there is no fixed minimum PhD stipend in the USA.

- A PhD stipend in USA which is a fellowship award is tax-free while the one that is a salary for a teaching position is taxable.
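Because US stipends are often paid over nine months, the monthly paycheck and the monthly budget differ. A minimal sketch, assuming a hypothetical $27,000 stipend and no summer funding:

```python
def monthly_figures(annual_stipend: float, paid_months: int = 9) -> tuple:
    """Return (monthly paycheck during the paid months, amount available per month over a full year)."""
    return annual_stipend / paid_months, annual_stipend / 12


paycheck, budget = monthly_figures(27_000)
print(f"paid ${paycheck:,.0f}/month for 9 months; budget ${budget:,.0f}/month across 12")
```

Budgeting at the 12-month figure rather than the paycheck leaves enough set aside to cover the unfunded summer months.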

Job and Salary after PhD in USA

Your salary after a PhD in the USA will depend on the type of job, industry, level of experience, and skills, among other factors. After earning a PhD, candidates most commonly look for an academic position such as professor, lecturer, or postdoc. Nowadays, it is not uncommon to find jobs outside academia, as multinational companies are also looking for expert researchers who can take their organizations to the next level.

Having said that let us take a look at the table below and explore the various job and salary after PhD in USA:

Top Recruiter and Salary after PhD in USA

It is commonly assumed that jobs after a PhD in the USA are limited to academia. In fact, with your level of knowledge and field of expertise, you can approach recruiters in various sectors, including government institutions, hospitals, insurance companies, laboratories, large corporations, and private companies.

Here is a list of top recruiters you can target after a PhD in the USA, depending on your area of specialization:

How to Find a Job after PhD in USA?

As the discussion above suggests, many PhD job roles are academic positions. Note that as a PhD candidate you will be treated more as a member of university staff than as a student: a PhD "job vacancy" is an opening to apply for a PhD position at a university, where you will be paid a stipend.

Here are the ways you can find a job after PhD in USA:

- Research: Doing proper research on openings is essential. After completing your PhD, which is already a long journey, you cannot sit back and wait for a job to find you. Being proactive and looking for job opportunities is necessary to make your PhD worth all the effort and time invested.

- Networking: Networking is indispensable in today’s job market as almost 70% of the vacancies in USA are filled through networking.

- Job search websites:

- Career services at the university: The university where you have completed your PhD can help you by providing resources and information about job openings.

- Newspapers: You can keep an eye on the newspapers to find details of jobs that require a PhD qualified candidate.

That covers PhD stipends in the USA for Indian students. We hope this article has helped you see the salary and job opportunities for PhDs beyond the all-time favorite academic field. With several leading universities, the US is one of the hotspots for international researchers and could offer you great career prospects. Speak to our Yocket counselors today to learn about the best opportunities for a PhD in the USA and get insights on salary after a PhD in the USA.

Frequently Asked Questions about the PhD Stipend in the USA

How much is the PhD stipend in the USA for Indian students?

The PhD stipend in the USA for Indian students is around 12,00,000–24,00,000 INR (₹12–24 lakh) per year.

What are the top non-academic jobs after a PhD in the USA?

The top non-academic jobs after a PhD in the USA include market research analyst, actuary, life science researcher, data scientist, research scientist, business analyst, operations analyst, and biomedical scientist.

Does a PhD increase salary?

Yes, a PhD can help you increase your salary by almost 25-30%.

How can I work in the USA after a PhD?

You can apply for an H-1B visa to live and work in the US after completing your PhD. You will need an employer, in academia or industry, to sponsor you by filing an H-1B petition with USCIS.

Is a master’s degree required to pursue a PhD in the USA?

A master’s degree is not mandatory for a PhD in the USA. Several top universities offer direct admission to PhD programs without one.

Kashyap Matani

Johns Hopkins University, PhD union reach tentative agreement

The proposed three-year collective bargaining agreement, which includes enhanced pay and benefits, will now go to a ratification vote.

By Hub staff report

Johns Hopkins University and the union representing PhD student employees at the university have reached agreement on a proposed three-year collective bargaining agreement covering a broad range of important topics, including minimum stipend levels, years of guaranteed funding, union and management rights, discipline, grievance and arbitration procedures, and health and safety provisions.

Since last May, bargaining committees from JHU and the union that represents the PhD students—Teachers and Researchers United – United Electrical, Radio and Machine Workers of America Local 197 (TRU-UE Local 197)—have been negotiating details of the CBA. After more than 40 bargaining sessions, a tentative agreement was reached on Friday night. The proposed CBA, which includes 29 articles, will now go to all PhD students represented by TRU-UE for a ratification vote.

The proposed CBA offers enhanced pay and benefits that raise the minimum stipend to $47,000 per year beginning this July. Stipend increases are more than 40% on average across the bargaining unit and more than 50% in some departments. The three-year agreement also includes guaranteed increases of more than 6% in the second year of the contract to $50,000, and then a 4% increase in the third year of the contract. Among other benefit enhancements, the proposed CBA also includes paid health benefits for children and some spouses, up to 12 weeks of paid parental leave, increased vacation and sick time, and a one-time $1,000 signing bonus for all bargaining unit members if the agreement is ratified.
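The minimum-stipend schedule in the proposed CBA is simple compounding arithmetic. The short Python sketch below works it through using only the figures reported above. The function names are hypothetical. Two further points are assumptions, because the article does not specify them: that the third-year 4% raise applies to the $50,000 second-year minimum, and that the result is rounded to whole dollars.

```python
# Worked arithmetic for the proposed JHU minimum-stipend schedule.
# Figures from the article: $47,000 in year 1, $50,000 in year 2,
# then a 4% increase in year 3.
# ASSUMPTIONS: the 4% raise is applied to the $50,000 year-2 minimum,
# and the year-3 amount is rounded to whole dollars.

YEAR1_MIN = 47_000
YEAR2_MIN = 50_000
YEAR3_RAISE = 0.04


def percent_increase(old: float, new: float) -> float:
    """Return the percentage change from old to new."""
    return (new - old) / old * 100


def stipend_schedule() -> list[int]:
    """Return the minimum stipend for each of the three contract years."""
    year3 = round(YEAR2_MIN * (1 + YEAR3_RAISE))
    return [YEAR1_MIN, YEAR2_MIN, year3]


if __name__ == "__main__":
    for year, amount in enumerate(stipend_schedule(), start=1):
        print(f"Year {year}: ${amount:,}")
    # The year-1 to year-2 step works out to about 6.4%.
    print(f"Year 1 -> 2 raise: {percent_increase(YEAR1_MIN, YEAR2_MIN):.1f}%")
```

Running it prints minimums of $47,000, $50,000, and $52,000. It also shows a year-two raise of about 6.4%, which is consistent with the "more than 6%" figure in the agreement summary.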

"We are pleased to have reached an agreement on the first contract between the university and TRU-UE Local 197 and appreciate the constructive and collaborative spirit union leaders brought to the negotiations as we sought common ground on a wide range of important issues," said Sabine Stanley, vice provost of graduate education and a member of the university's bargaining team. "If ratified, this agreement will strengthen PhD education at JHU."

© 2024 Johns Hopkins University. All rights reserved.

University of South Florida

Nearly two dozen graduate programs at USF considered among the best in America

April 9, 2024

By Althea Johnson, University Communications and Marketing

The University of South Florida is home to nearly two dozen graduate programs considered among the best in America, according to new rankings released today by U.S. News & World Report. USF features 23 graduate programs ranked inside the top 100 among all public and private institutions, including 11 ranked in the top 50.

USF’s highest-ranked programs are industrial and organizational psychology at No. 3, criminology at No. 18 and audiology, which comes in at No. 22.

Among USF Health’s ranked programs, nursing anesthesia jumped 58 spots into the top 50, the physical therapy program rose by double digits to No. 33 and the nursing master’s program now sits in the top 25 at No. 24.

In addition, USF’s social work and part-time MBA programs both saw double-digit gains and the education program broke into the top 50.