Research Article

Social impact in social media: A new method to evaluate the social impact of research

Roles Investigation, Writing – original draft

Affiliation Department of Journalism and Communication Studies, Universitat Autonoma de Barcelona, Barcelona, Spain

Affiliation Department of Psychology and Sociology, Universidad de Zaragoza, Zaragoza, Spain

Roles Conceptualization, Investigation, Methodology, Supervision, Writing – review & editing

Affiliation Department of Sociology, Universitat Autonoma de Barcelona, Barcelona, Spain

Affiliation Department of Sociology, Universitat de Barcelona (UB), Barcelona, Spain

- Cristina M. Pulido,

- Gisela Redondo-Sama,

- Teresa Sordé-Martí,

- Ramon Flecha

- Published: August 29, 2018

- https://doi.org/10.1371/journal.pone.0203117

The social impact of research has usually been analysed through the scientific outcomes produced under the auspices of the research. The growth of scholarly content in social media and the use of altmetrics by researchers to track their work facilitate advances in evaluating the impact of research. However, there is a gap in the identification of evidence of social impact in terms of what citizens are sharing on their social media platforms. This article applies a social impact in social media (SISM) methodology to identify quantitative and qualitative evidence of the potential or real social impact of research shared on social media, specifically on Twitter and Facebook. We define the social impact coverage ratio (SICOR) to identify the percentage of tweets and Facebook posts providing information about potential or actual social impact in relation to the total amount of social media data found related to specific research projects. We selected 10 projects in different fields of knowledge to calculate the SICOR, and the results indicate that 0.43% of the tweets and Facebook posts collected provide linkages with information about social impact. However, our analysis indicates that some projects have a high percentage (4.98%) and others have no evidence of social impact shared in social media. Examples of quantitative and qualitative evidence of social impact are provided to illustrate these results. A general finding is that novel evidence of the social impact of research can be found in social media, which makes these platforms relevant for scientists who want to share quantitative and qualitative evidence of social impact and capture the interest of citizens. Thus, social media users are shown to be intermediaries who make visible and assess evidence of social impact.

Citation: Pulido CM, Redondo-Sama G, Sordé-Martí T, Flecha R (2018) Social impact in social media: A new method to evaluate the social impact of research. PLoS ONE 13(8): e0203117. https://doi.org/10.1371/journal.pone.0203117

Editor: Sergi Lozano, Institut Català de Paleoecologia Humana i Evolució Social (IPHES), SPAIN

Received: November 8, 2017; Accepted: August 15, 2018; Published: August 29, 2018

Copyright: © 2018 Pulido et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All relevant data are within the paper and its Supporting Information files.

Funding: The research leading to these results has received funding from the 7th Framework Programme of the European Commission under the Grant Agreement n° 613202 P.I. Ramon Flecha, https://ec.europa.eu/research/fp7/index_en.cfm . The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

The social impact of research is at the core of some of the debates influencing how scientists develop their studies and how useful results for citizens and societies may be obtained. Concrete strategies to achieve social impact in particular research projects are related to a broader understanding of the role of science in contemporary society. There is a need to explore dialogues between science and society not only to communicate and disseminate science but also to achieve social improvements generated by science. Thus, the social impact of research emerges as an increasing concern within the scientific community [ 1 ]. As Bornmann [ 2 ] said, the assessment of this type of impact is badly needed and is more difficult than the measurement of scientific impact; for this reason, it is urgent to advance in the methodologies and approaches to measuring the social impact of research.

Several authors have approached the conceptualization of social impact, observing a lack of generally accepted conceptual and instrumental frameworks [ 3 ]. It is common to find a wide range of topics included in the contributions about social impact. In their analysis of the policies affecting land use, Hemling et al. [ 4 ] considered various domains in social impact, for instance, agricultural employment or health risk. Moving to the field of flora and fauna, Wilder and Walpole [ 5 ] studied the social impact of conservation projects, focusing on qualitative stories that provided information about changes in attitudes, behaviour, wellbeing and livelihoods. In an extensive study by Godin and Dore [ 6 ], the authors provided an overview and framework for the assessment of the contribution of science to society. They identified indicators of the impact of science, mentioning some of the most relevant weaknesses and developing a typology of impact that includes eleven dimensions, with one of them being the impact on society. The subdimensions of the impact of science on society focus on individuals (wellbeing and quality of life, social implication and practices) and organizations (speeches, interventions and actions). For the authors, social impact “refers to the impact knowledge has on welfare, and on the behaviours, practices and activities of people and groups” (p. 7).

In addition, the terms “social impact” and “societal impact” are sometimes used interchangeably. For instance, Bornmann [ 2 ] said that due to the difficulty of distinguishing social benefits from the superior term of societal benefits, “in much literature the term ‘social impact’ is used instead of ‘societal impact’” (p. 218). However, in other cases, the distinction is made [ 3 ], as in the present research. Similar to the definition used by the European Commission [ 7 ], social impact is used to refer to economic impact, societal impact, environmental impact and, additionally, human rights impact. Therefore, we use the term social impact as the broader concept that includes social improvements in all the above-mentioned areas obtained from the transference of research results and representing positive steps towards the fulfilment of officially defined social goals, including the UN Sustainable Development Goals, the EU 2020 Agenda, or similar official targets. For instance, the Europe 2020 strategy defines five priority targets with concrete indicators (employment, research and development, climate change and energy, education, and poverty and social exclusion) [ 8 ], and we consider the targets addressed by the objectives defined in the specific call that funds the research project.

This understanding of the social impact of research is connected to the creation of the Social Impact Open Repository (SIOR), which constitutes the first open repository worldwide that displays, cites and stores the social impact of research results [ 9 ]. The SIOR has linked to ORCID and Wikipedia to allow the synergies of spreading information about the social impact of research through diverse channels and audiences. It is relevant to mention that currently, SIOR includes evidence of real social impact, which implies that the research results have led to actual improvements in society. However, it is common to find evidence of potential social impact in research projects. The potential social impact implies that in the development of the research, there has been some evidence of the effectiveness of the research results in terms of social impact, but the results have not yet been transferred.

Additionally, dissemination, transference (policy impact) and social impact are commonly confused. Dissemination means communicating the knowledge created by research to citizens, companies and institutions; transference refers to the use of this knowledge by these different actors (or others); and, as already mentioned, social impact refers to the actual improvements resulting from the use of this knowledge in relation to the goals motivating the research project (such as the United Nations Sustainable Development Goals). In the present research [ 3 ], it is argued that “social impact can be understood as the culmination of the prior three stages of the research” (p. 3). Therefore, this study builds on previous contributions measuring the dissemination and transference of research and goes beyond them to propose a novel methodological approach to track evidence of social impact.

In fact, the contribution that we develop in this article is based on the creation of a new method to evaluate the evidence of social impact shared in social media. The evaluation proposed is to measure the social impact coverage ratio (SICOR), focusing on the presence of evidence of social impact shared in social media. The article first presents some of the contributions from the literature review focused on research on social media as a source of key data for monitoring or evaluating different research purposes. Second, the SISM (social impact in social media) methodology [ 10 ] is introduced in detail. This methodology identifies quantitative and qualitative evidence of the social impact of the research shared on social media, specifically on Twitter and Facebook, and defines the SICOR. Next, the results are discussed, and lastly, the main conclusions and further steps are presented.

Literature review

Social media research includes the analysis of citizens’ voices on a wide range of topics [ 11 ]. According to quantitative data from April 2017 published by Statista [ 12 ], Twitter and Facebook are included in the top ten leading social networks worldwide, as ranked by the number of active users. Facebook is at the top of the list, with 1,968 million active users, and Twitter ranks 10th, with 319 million active users. Between them are the following social networks: WhatsApp, YouTube, Facebook Messenger, WeChat, QQ, Instagram, Qzone and Tumblr. If we look at altmetrics, the tracking of social networks for mentions of research outputs includes Facebook, Twitter, Google+, LinkedIn, Sina Weibo and Pinterest. The social networks common to both sources are Facebook and Twitter. These are also popular platforms that have relevant coverage of scientific content and easy access to data, and therefore, the research projects selected here for application of the SISM methodology were chosen for their presence on these platforms.

Chew and Eysenbach [ 13 ] studied the presence of selected keywords on Twitter related to public health issues, particularly during the 2009 H1N1 pandemic, identifying the potential for health authorities to use social media to respond to the concerns and needs of society. Crooks et al. [ 14 ] investigated Twitter activity in the context of a 5.8 magnitude earthquake in 2011 on the East Coast of the United States, concluding that social media content can be useful for event monitoring and can complement other sources of data to improve the understanding of people’s responses to such events. Conversations among young Canadians posted on Facebook and analysed by Martinello and Donelle [ 15 ] revealed housing and transportation as main environmental concerns, and the project FoodRisc examined the role of social media to illustrate consumers’ quick responses during food crisis situations [ 16 ]. These types of contributions illustrate that social media research implies the understanding of citizens’ concerns in different fields, including in relation to science.

Research on the synergies between science and citizens has increased over the years, according to Fresco [ 17 ], and there is a growing interest among researchers and funding agencies in how to facilitate communication channels to spread scientific results. For instance, in 1998, Lubchenco [ 18 ] advocated for a social contract that “represents a commitment on the part of all scientists to devote their energies and talents to the most pressing problems of the day, in proportion to their importance, in exchange for public funding” (p. 491).

In this framework, the recent debates on how to increase the impact of research have acquired relevance in all fields of knowledge, and major developments address the methods for measuring it. As highlighted by Feng Xia et al. [ 19 ], social media constitute an emerging approach to evaluating the impact of scholarly publications, and it is relevant to consider the influence of the journal, discipline, publication year and user type. The authors revealed that people’s concerns differ by discipline and observed more interest in papers related to everyday life, biology, and earth and environmental sciences. In the field of biomedical sciences, Haustein et al. [ 20 ] analysed the dissemination of journal articles on Twitter to explore the correlations between tweets and citations and proposed a framework to evaluate social media-based metrics. In fact, different studies address the relationship between the presence of articles on social networks and citations [ 21 ]. Bornmann [ 22 ] conducted a case study using a sample of 1,082 PLOS journal articles recommended in F1000 to explore the usefulness of altmetrics for measuring the broader impact of research. The author presents evidence about Facebook and Twitter as social networks that may indicate which papers in the biomedical sciences can be of interest to broader audiences, not just to specialists in the area. One aspect of particular interest resulting from this contribution is the potential to use altmetrics to measure the broader impacts of research, including the societal impact. However, most of the studies investigating social or societal impact lack a conceptualization underlying its measurement.

To the best of our knowledge, the assessment of social impact in social media (SISM) has been developed to address this gap. At the core of this study, we present and discuss the results obtained through the application of the SICOR (social impact coverage ratio) with examples of evidence of social impact shared in social media, particularly on Twitter and Facebook, and the implications for further research.

Following these previous contributions, our research questions were as follows: Is there evidence of social impact of research shared by citizens in social media? If so, is there quantitative or qualitative evidence? How can social media contribute to identifying the social impact of research?

Methods and data presentation

A group of new methodologies related to the analysis of online data has recently emerged. One of these emerging methodologies is social media analytics [ 23 ], which was initially used mostly in the marketing research field but came to be used in other domains due to the multiple possibilities opened up by the availability and richness of the data for different research purposes. Likewise, the concern of how to evaluate the social impact of research, as well as the development of methodologies for addressing this concern, has received central attention. The development of SISM (Social Impact in Social Media) and the application of the SICOR (Social Impact Coverage Ratio) contribute to advancing the evaluation of the social impact of research through the analysis of the social media selected (in this case, Twitter and Facebook). Thus, SISM is novel both in social media analytics and among the methodologies used to evaluate the social impact of research. This development has been made under IMPACT-EV, a research project funded under the Framework Program FP7 of the Directorate-General for Research and Innovation of the European Commission. The main difference from other methodologies for measuring the social impact of research is the disentanglement of dissemination from social impact. While altmetrics is aimed at measuring research results disseminated beyond academic and specialized spheres, SISM contributes to advancing this measurement by shedding light on the extent to which evidence of the social impact of research is found in social media data. This involves the need to differentiate tweets or Facebook posts (Fb posts) used to disseminate research findings from those used to share the social impact of research. We focus on the latter, investigating whether there is evidence of social impact, including both potential and real social impact. In fact, the question is whether research contributes and/or has the potential to contribute to improving society or living conditions with respect to one of the defined goals. What is the evidence? Next, we detail the application of the methodology.

Data collection

To develop this study, the first step was to select research projects with social media data to be analysed. The selection of research projects for application of the SISM methodology was performed according to three criteria.

Criterion 1. Selection of successful projects in FP7. The projects were success stories of the 7th Framework Programme (FP7) highlighted by the European Commission [ 24 ] in the fields of knowledge of medicine, public health, biology and genomics. The FP7 published calls for project proposals from 2007 to 2013. This implies that most of the projects funded in the last period of the FP7 (2012 and 2013) are finalized or in the last phase of implementation.

Criterion 2. Period of implementation. We selected projects in the 2012–2013 period because they combine recent research results with higher possibilities of having Twitter and Facebook accounts compared with projects of previous years, as the presence of social accounts in research increased over this period.

Criterion 3. Twitter and Facebook accounts. It was crucial that the selected projects had active Twitter and Facebook accounts.

Table 1 summarizes the criteria and the final number of projects identified. As shown, 10 projects met the defined criteria. Projects in medical research and public health had a higher presence.

https://doi.org/10.1371/journal.pone.0203117.t001

After the selection of projects, we defined the timeframe of social media data extraction on Twitter and Facebook from the starting date of the project until the day of the search, as presented in Table 2 .

https://doi.org/10.1371/journal.pone.0203117.t002

The second step was to define the search strategies for extracting social media data related to the research projects selected. In this line, we defined three search strategies.

Strategy 1. To extract messages published on the Twitter account and the Facebook page of the selected projects. We listed the Twitter accounts and Facebook pages related to each project in order to look at the available information. It is important to clarify that the tweets published under a project’s Twitter account are original tweets or retweets made from that account. In one case, the Twitter account and Facebook page were linked to the website of the research group leading the project, so we selected only the tweets and Facebook posts related to the project. For instance, on Twitter, the research group had created a specific hashtag to publish messages related to the project; therefore, we selected only the tweets published under this hashtag. We prioritized the tweets and Facebook posts that received some type of interaction (likes, retweets or shares) because such interaction is a proxy for citizens’ interest. We used the R program and NVivo to extract the data and proceed with the analysis. Once we obtained the data from Twitter and Facebook, we had an overview of the information to be further analysed, as shown in Table 3 .

https://doi.org/10.1371/journal.pone.0203117.t003
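The prioritization by interaction described in Strategy 1 can be sketched in a few lines. This is only an illustration, not the authors' R or NVivo workflow: the `Message` class, its field names and the `prioritize` helper are hypothetical, and the sketch assumes that "prioritized" means analysing the most-interacted messages first while keeping all of them.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    """One collected tweet or Facebook post (field names are illustrative)."""
    platform: str      # "twitter" or "facebook"
    text: str
    likes: int = 0
    retweets: int = 0  # stays 0 for Facebook posts
    shares: int = 0    # stays 0 for tweets

    def interactions(self) -> int:
        # Total interaction, used as a rough proxy for citizens' interest.
        return self.likes + self.retweets + self.shares


def prioritize(messages: List[Message]) -> List[Message]:
    """Return all messages, ordered from most to least interaction."""
    return sorted(messages, key=lambda m: m.interactions(), reverse=True)
```

Because Python's sort is stable, messages with the same interaction count keep their original collection order, so nothing is dropped or reshuffled beyond the ranking itself.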

We focused the second and third strategies on Twitter data. In both strategies, we extracted Twitter data directly from the Twitter Advanced Search tool, as the API connected to NVivo and the R program covers only a specific period of time limited to 7/9 days. Therefore, the use of the Twitter Advanced Search tool made it possible to obtain historic data without a period limitation. We downloaded the results in PDF and then uploaded them to NVivo.

Strategy 2. To use the project acronym combined with other keywords, such as FP7 or EU. This strategy made it possible to obtain tweets mentioning the project. Table 4 presents the number of tweets obtained with this strategy.

https://doi.org/10.1371/journal.pone.0203117.t004

Strategy 3. To use searchable research results of projects to obtain Twitter data. We defined a list of research results, one for each project, and converted them into keywords. We selected one searchable keyword for each project from its website or other relevant sources, for instance, the brief presentations prepared by the European Commission and published in CORDIS. Once we had the searchable research results, we used the Twitter Advanced Search tool to obtain tweets, as presented in Table 5 .

https://doi.org/10.1371/journal.pone.0203117.t005

The sum of the data obtained from these three strategies allowed us to obtain a total of 3,425 tweets and 1,925 posts on public Facebook pages. Table 6 presents a summary of the results.

https://doi.org/10.1371/journal.pone.0203117.t006

We imported the data obtained from the three search strategies into NVivo for analysis. Next, we selected tweets and Facebook posts providing linkages with quantitative or qualitative evidence of social impact, and we complied with the terms of service for the social media from which the data were collected. By quantitative and qualitative evidence, we mean data or information that shows how the implementation of research results has led to improvements towards the fulfilment of the objectives defined in the EU2020 strategy of the European Commission or other official targets. For instance, in the case of quantitative evidence, we searched for tweets and Facebook posts providing linkages with quantitative information about improvements obtained through the implementation of the research results of the project. In relation to qualitative evidence, for example, we searched for testimonies that show a positive evaluation of the improvement due to the implementation of research results. In relation to this step, it is important to highlight that social media users are intermediaries who make evidence of social impact visible. Users often share evidence, sometimes through a link to an external resource (e.g., a video, an official report, a scientific article, a news story published in the media). We identified evidence of social impact in these sources.

Data analysis

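The SICOR expresses the messages with evidence of social impact as a percentage of all the messages collected for the selected projects:

$$\mathrm{SICOR} = \frac{\sum_{i=1}^{n} \gamma_i}{\sum_{i=1}^{n} T_i} \times 100$$

where

$\gamma_i$ is the total number of messages obtained about project $i$ with evidence of social impact on social media platforms (Twitter, Facebook, Instagram, etc.);

$T_i$ is the total number of messages from project $i$ on social media platforms (Twitter, Facebook, Instagram, etc.); and

$n$ is the number of projects selected.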
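As a rough check on how these quantities combine, the sketch below computes the SICOR as a pooled percentage: all messages with evidence divided by all messages collected. The pooled form is an assumption, as are the function and argument names, but it reproduces the 0.43% reported in the abstract from the totals given in the article (14 + 9 messages with evidence out of 3,425 tweets and 1,925 Facebook posts).

```python
from typing import Sequence


def sicor(evidence_counts: Sequence[int], total_counts: Sequence[int]) -> float:
    """Pooled social impact coverage ratio, as a percentage.

    evidence_counts[i] plays the role of gamma_i and total_counts[i] the
    role of T_i for project i. Both argument names are illustrative.
    """
    if len(evidence_counts) != len(total_counts):
        raise ValueError("one evidence count and one total are needed per project")
    total = sum(total_counts)
    if total == 0:
        raise ValueError("no messages collected, so the ratio is undefined")
    return 100.0 * sum(evidence_counts) / total


# Aggregate figures from the article, passed as a single pooled entry:
# 14 + 9 messages with evidence, out of 3,425 tweets + 1,925 Facebook posts.
overall = sicor([14 + 9], [3425 + 1925])
print(f"{overall:.2f}%")  # prints 0.43%
```

Passing one count pair per project gives the same pooled result; calling the function on a single project gives that project's own ratio.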

Analytical categories and codebook

The researchers who carried out the analysis of the social media data collected are specialists in the social impact of research and research on social media. Before conducting the full analysis, two aspects were guaranteed. First, how to identify evidence of social impact relating to the targets defined by the EU2020 strategy or to specific goals defined by the call addressed was clarified. Second, we held a pilot to test the methodology with one research project that we know has led to considerable social impact, which allowed us to clarify whether or not it was possible to detect evidence of social impact shared in social media. Once the pilot showed positive results, the next step was to extend the analysis to another set of projects and finally to the whole sample. The construction of the analytical categories was defined a priori, revised accordingly and lastly applied to the full sample.

Different observations should be made. First, in this previous analysis, we found that Twitter and Facebook users play a key role as “intermediaries,” serving as bridges between the larger public and the evidence of social impact. Social media users usually share a quote or paragraph introducing evidence of social impact and/or a link to an external resource (for instance, a video, an official report, a scientific article or a news story published in the media) where evidence of the social impact is available. This fact has implications for our study, as our unit of analysis is all the information included in the tweets or Facebook posts. This means that our analysis extends to the linked external resources to find evidence of social impact, and for this reason, we refer to tweets or Facebook posts providing linkages with information about social impact.

Second, the other important aspect is the analysis of the users’ profile descriptions, which requires much more development in future research given the existing limitations. For instance, some profiles are restricted by their users for privacy reasons, so the information is not available; other accounts have only the name of the user, with no profile description available. Therefore, we gave priority to the identification of evidence of social impact, including whether a post obtained interaction (retweets, likes or shares) or was published on accounts other than that of the research project itself. In the case of the profile analysis, we added only an exploratory preliminary result because this requires further development. Considering all these previous details, the codebook that we present next (see Table 7 ) is a result of this previous research.

https://doi.org/10.1371/journal.pone.0203117.t007

How to analyse Twitter and Facebook data

To illustrate how we analysed data from Twitter and Facebook, we provide one example of each type of evidence of social impact defined, considering both real and potential social impact, with the type of interaction obtained and the profiles of those who have interacted.

QUANESISM. Tweet by ZeroHunger Challenge @ZeroHunger published on 3 May 2016. Text: How re-using food waste for animal feed cuts carbon emissions.-NOSHAN project hubs.ly/H02SmrP0. 7 retweets and 5 likes.

The unit of analysis is all the content of the tweet, including the external link. If we limited our analysis to the tweet itself, it would not be evidence. Examining the external link is necessary to find whether there is evidence of social impact. The aim of this project was to investigate the process and technologies needed to use food waste for feed production at low cost, with low energy consumption and with a maximal evaluation of the starting wastes. This tweet provides a link to news published in the PHYS.org portal [ 25 ], which specializes in science news. The news story includes an interview with the main researcher that provides the following quotation with quantitative evidence:

'Our results demonstrated that with a NOSHAN 10 percent mix diet, for every kilogram of broiler chicken feed, carbon dioxide emissions were reduced by 0.3 kg compared to a non-food waste diet,' explains Montse Jorba, NOSHAN project coordinator. 'If 1 percent of total chicken broiler feed in Europe was switched to the 10 percent NOSHAN mix diet, the total amount of CO2 emissions avoided would be 0.62 million tons each year.'[ 25 ]

This quantitative evidence (“with a NOSHAN 10 percent mix diet, for every kilogram of broiler chicken feed, carbon dioxide emissions were reduced by 0.3 kg compared to a non-food waste diet”) is linked directly with the Europe 2020 target of Climate Change & Energy, specifically with the target of reducing greenhouse gas emissions by 20% compared to the levels in 1990 [ 8 ]. The extrapolation that the coordinator mentioned in the news story is also an example of quantitative evidence, although it is an extrapolation based on the specific research result.

This tweet was captured by the acronym search strategy (Strategy 2). It is a message tweeted by an account that is not related to the research project. The Twitter account is that of the Zero Hunger Challenge movement, which supports the goals of the UN. The interaction obtained is 7 retweets and 5 likes. Regarding the profiles of those who retweeted and clicked “like”, there were activists, a journalist, an eco-friendly citizen, a global news service, restricted profiles (no information is available on those who have retweeted) and one account with no information in its profile.
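The coding of this example can be written down as a single structured record, which makes explicit what the unit of analysis carries. The sketch below is illustrative only: `CodedMessage` and its field names are hypothetical and do not reproduce the codebook in Table 7. The values are taken from the description of the NOSHAN tweet above, and fields that the text does not state for this example are left unset.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional


@dataclass
class CodedMessage:
    """One tweet or Facebook post after coding (illustrative field names)."""
    code: str                    # "QUANESISM" or "QUALESISM", as used in the article
    platform: str
    account: str
    published: date
    search_strategy: str         # which of the three strategies captured it
    from_project_account: bool   # False when an unrelated account posted it
    retweets: int
    likes: int
    external_source: Optional[str] = None  # where the evidence was actually found
    impact_stage: Optional[str] = None     # "real" or "potential"; None if not stated
    interacting_profiles: List[str] = field(default_factory=list)


noshan_tweet = CodedMessage(
    code="QUANESISM",
    platform="twitter",
    account="@ZeroHunger",
    published=date(2016, 5, 3),
    search_strategy="acronym",
    from_project_account=False,
    retweets=7,
    likes=5,
    external_source="news story on the PHYS.org portal",
    interacting_profiles=[
        "activists", "journalist", "eco-friendly citizen",
        "global news service", "restricted profiles", "no profile information",
    ],
)
```

Keeping `external_source` alongside the tweet reflects the point made above: the tweet text alone would not count as evidence, and the quantitative claim lives in the linked news story.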

The following example illustrates the analysis of QUALESISM: Tweet by @eurofitFP7 published on 4 October 2016. Text: See our great new EuroFIT video on youtube! https://t.co/TocQwMiW3c 9 retweets and 5 likes.

The aim of this project is to improve health through the implementation of two novel technologies to achieve a healthier lifestyle. The tweet provides a link to a video on YouTube on the project’s results. In this video, we found qualitative evidence from people who tested the EuroFit programme; there are quotes from men who said that they have experienced improved health results using this method and that they are more aware of how to manage their health:

One end-user said: 'I have really amazing results from the start, because I managed to change a lot of things in my life.' And another: 'I was more conscious of what I ate, I was more conscious of taking more steps throughout the day and also standing up a little more.' [ 26 ]

The research applies the well researched scientific evidence to the management of health issues in daily life. The video presents the research but also includes a section where end-users talk about the health improvements they experienced. The quotes extracted are some examples of the testimonies collected. All agree that they have improved their health and learned healthy habits for their daily lives. These are examples of qualitative evidence linked with the target of the call HEALTH.2013.3.3–1—Social innovation for health promotion [ 27 ] that has the objectives of reducing sedentary habits in the population and promoting healthy habits. This research contributes to this target, as we see in the video testimonies. Regarding the interaction obtained, this tweet achieved 9 retweets and 5 likes. In this case, the profiles of the interacting citizens show involvement in sport issues, including sport trainers, sport enthusiasts and some researchers.

Table 8 below summarizes the analysis, with examples illustrating the evidence found.

https://doi.org/10.1371/journal.pone.0203117.t008

Quantitative evidence of social impact in social media

There is a greater presence of tweets/Fb posts with quantitative evidence (14) than with qualitative evidence (9) in the total number of tweets/Fb posts identified with evidence of social impact. Most of the tweets/Fb posts with quantitative evidence of social impact are from scientific articles published in peer-reviewed international journals and show potential social impact. In Table 8 , we introduce 3 examples of this type of tweets/Fb posts with quantitative evidence:

The first tweet with quantitative social impact selected is from project 7. The aim of this project was to provide high-quality scientific evidence for preventing vitamin D deficiency in European citizens. The tweet highlighted the main contribution of the published study, that is, “Weekly consumption of 7 vitamin D-enhanced eggs has an important impact on winter vitamin D status in adults” [ 28 ]. The quantitative evidence shared in social media was extracted from a news publication in a blog on health news. This blog collects scientific articles of research results. In this case, the blog disseminated the research result focused on how vitamin D-enhanced eggs improve vitamin D deficiency in wintertime, with the published results obtained by the research team of the project selected. The quantitative evidence illustrates that the group of adults who consumed vitamin D-enhanced eggs did not suffer from vitamin D deficiency, as opposed to the control group, which showed a significant decrease in vitamin D over the winter. The specific evidence is the following extracted from the article [ 28 ]:

With the use of a within-group analysis, it was shown that, although serum 25(OH) D in the control group significantly decreased over winter (mean ± SD: -6.4 ± 6.7 nmol/L; P = 0.001), there was no change in the 2 groups who consumed vitamin D-enhanced eggs (P>0.1 for both). (p. 629)

This evidence contributes to achieving the target defined in the call addressed, that is, KBBE.2013.2.2–03—Food-based solutions for the eradication of vitamin D deficiency and health promotion throughout the life cycle [ 29 ]. The quantitative evidence shows how the consumption of vitamin D-enhanced eggs reduces vitamin D deficiency.

The second example of this table corresponds to the example of quantitative evidence of social impact provided in the previous section.

The third example is a Facebook post from project 3 that was also tweeted. Therefore, this evidence was published in both social media sources analysed. The aim of this project was to measure a range of chemical and physical environmental hazards in food, consumer products, water, air, noise, and the built environment in the pre- and postnatal early-life periods. This Facebook post and tweet link directly to a scientific article [ 30 ] that shows the precision of the spectroscopic platform:

Using 1H NMR spectroscopy we characterized short-term variability in urinary metabolites measured from 20 children aged 8–9 years old. Daily spot morning, night-time and pooled (50:50 morning and night-time) urine samples across six days (18 samples per child) were analysed, and 44 metabolites quantified. Intraclass correlation coefficients (ICC) and mixed effect models were applied to assess the reproducibility and biological variance of metabolic phenotypes. Excellent analytical reproducibility and precision was demonstrated for the 1H NMR spectroscopic platform (median CV 7.2%). (p. 1)

This evidence is linked to the target defined in the call “ENV.2012.6.4–3—Integrating environmental and health data to advance knowledge of the role of environment in human health and well-being in support of a European exposome initiative” [ 31 ]. The evidence provided shows how the project’s results have contributed to building technology for improving the data collection to advance in the knowledge of the role of the environment in human health, especially in early life. The interaction obtained is one retweet from a citizen from Nigeria interested in health issues, according to the information available in his profile.

Qualitative evidence of social impact in social media

We found qualitative evidence of the social impact of different projects, as shown in Table 9 . Similarly to the quantitative evidence, the qualitative cases also demonstrate potential social impact. The three examples provided have in common that they are tweets or Facebook posts that link to videos where the end users of the research project explain their improvements once they have implemented the research results.

https://doi.org/10.1371/journal.pone.0203117.t009

The first tweet with qualitative evidence selected is from project 4. The aim of this project is to produce a system that helps in the prevention of obesity and eating disorders, targeting young people and adults [ 32 ]. The Twitter account that published this tweet is that of the Future and Emerging Technologies Programme of the European Commission, and a link to a Euronews video is provided. This video shows how the patients using the technology developed in the research achieved control of their eating disorders, through the testimonies of patients commenting on the positive results they have obtained. These testimonies are included in the news article that complements the video. An example of these testimonies is as follows:

Pierre Vial has lost 43 kilos over the past nine and a half months. He and other patients at the eating disorder clinic explain the effects obesity and anorexia have had on their lives. Another patient, Karin Borell, still has some months to go at the clinic but, after decades of battling anorexia, is beginning to be able to visualise life without the illness: “On a good day I see myself living a normal life without an eating disorder, without problems with food. That’s really all I wish right now”.[ 32 ]

This qualitative evidence shows how the research results contribute to the achievement of the target goals of the call addressed: “ICT-2013.5.1—Personalised health, active ageing, and independent living” [ 33 ]. In this case, the results are robust, particularly for people suffering chronic diseases and desiring to improve their health; people who have applied the research findings are improving their eating disorders and better managing their health. The value of this evidence is the inclusion of the patients’ voices stating the impact of the research results on their health.

The second example is a Facebook post from project 9, which provides a link to a Euronews video. The aim of this project is to bring some tools from the lab to the farm in order to guarantee a better management of the farm and animal welfare. In this video [ 34 ], there are quotes from farmers using the new system developed through the research results of the project. These quotes show how use of the new system is improving the management of the farm and the health of the animals; some examples are provided:

“Cameras and microphones help me detect in real time when the animals are stressed for whatever reason,” explained farmer Twan Colberts. “So I can find solutions faster and in more efficient ways, without me being constantly here, checking each animal.”

This evidence shows how the research results contribute to addressing the objectives specified in the call “KBBE.2012.1.1–02—Animal and farm-centric approach to precision livestock farming in Europe” [ 29 ], particularly, to improve the precision of livestock farming in Europe. The interaction obtained is composed of 6 likes and 1 share. The profiles are diverse, but some of them do not disclose personal information; others have not added a profile description, and only their name and photo are available.

Interrater reliability (kappa)

The analysis of tweets and Facebook posts providing linkages with information about social impact followed a content analysis method in which reliability was based on a peer review process. The sample is composed of 3,425 tweets and 1,925 Facebook posts. Each tweet and Facebook post was analysed to identify whether or not it contains evidence of social impact. Each researcher had the codebook a priori. We used Cohen’s kappa to examine interrater reliability, that is, the agreement between the two raters on the assignment of the defined categories. We calculated this coefficient in SPSS, after exporting to SPSS an Excel sheet with the sample coded by the two researchers as 1 (is evidence of social impact, either potential or real) or 0 (is not evidence of social impact). The cases where agreement was not achieved were not considered as containing evidence of social impact. The result obtained is 0.979; according to the interpretation of Landis & Koch [ 35 ], this level of agreement is almost perfect, and thus, our analysis is reliable. To sum up the data analysis, the steps followed were:

Step 1. Data analysis I. We included all data collected in an Excel sheet to proceed with the analysis. Prior to the analysis, researchers read the codebook to keep in mind the information that should be identified.

Step 2. Each researcher involved reviewed case by case the tweets and Facebook posts to identify whether they provide links with evidence of social impact or not. If the researcher considered there to be evidence of social impact, he or she entered the value of 1 into the column, and if not, the value of 0.

Step 3. Once all the researchers had finished this step, the Excel sheet was exported to SPSS to extract the kappa coefficient.

Step 4. Data Analysis II. The following step was to analyse case by case the tweets and Facebook posts identified as providing linkages with information of social impact and classify them as quantitative or qualitative evidence of social impact.

Step 5. The interaction received was analysed because this determines to which extent this evidence of social impact has captured the attention of citizens (in the form of how many likes, shares, or retweets the post has).

Step 6. Finally, if available, the profile descriptions of the citizens interacting through retweeting or sharing the Facebook post were considered.

Step 7. SICOR was calculated. It could be applied to the complete sample (all data projects) or to each project, as we will see in the next section.
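The coding and agreement check in Steps 2 and 3 can be illustrated with a minimal sketch. The study computed Cohen’s kappa in SPSS; the Python below is only an illustrative equivalent, and the function names (`cohen_kappa`, `landis_koch_label`, `consensus_codes`) and the ten toy codes are assumptions, not the study's code or data. The sketch also shows the rule stated above that cases without agreement are not counted as evidence.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters coding the same items (hypothetical helper)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over codes.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in set(freq_a) | set(freq_b)) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


def landis_koch_label(kappa):
    """Verbal label for kappa following the Landis & Koch bands."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


def consensus_codes(rater_a, rater_b):
    """Keep a 1 only where both raters coded 1; disagreements count as 0."""
    return [1 if a == 1 and b == 1 else 0 for a, b in zip(rater_a, rater_b)]


# Toy codes for ten messages (illustrative only, NOT the study's data).
rater_a = [1, 0, 0, 1, 0, 0, 0, 1, 0, 0]
rater_b = [1, 0, 0, 1, 0, 0, 0, 0, 0, 0]

kappa = cohen_kappa(rater_a, rater_b)
print(round(kappa, 3), landis_koch_label(kappa))
print(landis_koch_label(0.979))  # the coefficient reported in the text
print(consensus_codes(rater_a, rater_b))
```

With real data, the two lists would hold the 0/1 codes that each researcher assigned to every message in the sample.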

The total number of tweets and Fb/posts collected from the 10 projects is 5,350. After the content analysis, we identified 23 tweets and Facebook posts providing linkages to information about social impact. To respond to the research question, which considered whether there is evidence of social impact shared by citizens in social media, the answer was affirmative, although the coverage ratio is low. Both Twitter and Facebook users retweeted or shared evidence of social impact, and therefore, these two social media networks are valid sources for expanding knowledge on the assessment of social impact. Table 10 shows the social impact coverage ratio in relation to the total number of messages analysed.

https://doi.org/10.1371/journal.pone.0203117.t010

The analysis of each of the projects selected revealed some results to consider. Of the 10 projects, 7 had evidence, but those projects did not necessarily have more tweets and Facebook posts. In fact, some projects with fewer than 70 tweets and 50 Facebook posts have more evidence of social impact than other projects with more than 400 tweets and 400 Facebook posts. This result indicates that the number of tweets and Facebook posts does not determine the existence of evidence of social impact in social media. For example, project 2 has 403 tweets and 423 Facebook posts, but it has no evidence of social impact on social media. In contrast, project 9 has 62 tweets, 43 Facebook posts, and 2 pieces of evidence of social impact in social media, as shown in Table 11 .

https://doi.org/10.1371/journal.pone.0203117.t011
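As a worked check of the figures reported above, the social impact coverage ratio can be sketched as the percentage of collected messages that carry evidence of social impact. The function name `sicor` is an assumption introduced for illustration; the counts are those given in the text (3,425 tweets and 1,925 Facebook posts, 23 messages with evidence, and the totals for projects 2 and 9).

```python
def sicor(evidence_count, total_messages):
    """Social impact coverage ratio as a percentage (hypothetical helper)."""
    if total_messages <= 0:
        raise ValueError("total_messages must be positive")
    return 100.0 * evidence_count / total_messages


# Whole sample: tweets + Facebook posts, and messages with evidence.
total_messages = 3425 + 1925
evidence_total = 14 + 9  # quantitative + qualitative evidence
overall = sicor(evidence_total, total_messages)

# Per-project figures quoted in the text.
project_9 = sicor(2, 62 + 43)    # few messages, two pieces of evidence
project_2 = sicor(0, 403 + 423)  # many messages, no evidence

print(f"overall {overall:.2f}%  P9 {project_9:.2f}%  P2 {project_2:.2f}%")
# prints: overall 0.43%  P9 1.90%  P2 0.00%
```

The comparison makes the point of the paragraph concrete: project 2 has roughly eight times as many messages as project 9, yet its ratio is zero.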

The ratio of evidence to tweets/Fb posts is 0.43%, and it differs depending on the project, as shown below in Table 12 . There is one project (P7) with a ratio of 4.98%, which is a social impact coverage ratio higher than that of the other projects. Next, a group of projects (P3, P9, P10) has a social impact coverage ratio between 1.41% and 2.99%. The next group has three projects (P1, P4, P5), with a ratio between 0.13% and 0.46%. Finally, there are three projects (P2, P6, P8) without any tweets/Fb posts with evidence of social impact.

https://doi.org/10.1371/journal.pone.0203117.t012

Considering the three strategies for obtaining data, each is related differently to the evidence of social impact. In terms of the social impact coverage ratio, as shown in Table 13 , the most successful strategy is number 3 (searchable research results), as it has a ratio of 17.86%, which is much higher than the ratios for the other 2 strategies. The second strategy (acronym search) is more effective than the first (profile accounts), with 1.77% for the former as opposed to 0.27% for the latter.

https://doi.org/10.1371/journal.pone.0203117.t013

Once tweets and Facebook posts providing linkages with information about social impact (ESISM) were identified, we classified them in terms of quantitative (QUANESISM) or qualitative evidence (QUALESISM) to determine which type of evidence was shared in social media. Table 14 indicates the amount of quantitative and qualitative evidence identified for each search strategy.

https://doi.org/10.1371/journal.pone.0203117.t014

First, the results obtained indicated that the SISM methodology aids in calculating the social impact coverage ratio of the research projects selected and evaluating whether the social impact of the corresponding research is shared by citizens in social media. The social impact coverage ratio applied to the sample selected is low, but when we analyse the SICOR of each project separately, we can observe that some projects have a higher social impact coverage ratio than others. Complementary to altmetrics measuring the extent to which research results reach society, the SICOR addresses the question of whether this process includes evidence of potential or real social impact. In this sense, the overall methodology of SISM contributes to advancement in the evaluation of the social impact of research by providing a more precise approach to what we are evaluating.

This contribution complements current evaluation methodologies of social impact that consider which improvements are shared by citizens in social media. Exploring the results in more depth, it is relevant to highlight that of the ten projects selected, there is one research project with a social impact coverage ratio higher than those of the others, which include projects without any tweets or Facebook posts with evidence of social impact. This project has a higher ratio of evidence than the others because evidence of its social impact is shared more than is that of other projects. This also means that the researchers produced evidence of social impact and shared it during the project. Another relevant result is that the quantity of tweets and Facebook posts collected did not determine the number of tweets and Facebook posts found with evidence of social impact. Moreover, the analysis of the research projects selected showed that there are projects with less social media interaction but with more tweets and Facebook posts containing evidence of social impact. Thus, the number of tweets and Facebook posts with evidence of social impact is not determined by the number of messages collected; it is determined by the type of messages published and shared, that is, whether they contain evidence of social impact or not.

The second main finding is related to the effectiveness of the search strategies defined. Related to the strategies carried out under this methodology, one of the results found is that the most effective search strategy is the searchable research results, which reveals a higher percentage of evidence of social impact than the own account and acronym search strategies. However, the use of these three search strategies is highly recommended because the combination of all of them makes it possible to identify more tweets and Facebook posts with evidence of social impact.

Another result is related to the type of evidence of social impact found. There is both quantitative and qualitative evidence. Both types are useful for understanding the type of social impact achieved by the corresponding research project. In this sense, quantitative evidence allows us to understand the improvements obtained by the implementation of the research results and capture their impact. In contrast, qualitative evidence allows us to deeply understand how the resultant improvements obtained from the implementation of the research results are evaluated by the end users by capturing their corresponding direct quotes. The social impact includes the identification of both real and potential social impact.

Conclusions

After discussing the main results obtained, we conclude with the following points. Our study indicates that there is incipient evidence of social impact, both potential and real, in social media. This demonstrates that researchers from different fields, in the present case involved in medical research, public health, animal welfare and genomics, are sharing the improvements generated by their research and opening up new venues for citizens to interact with their work. This would imply that scientists are promoting not only the dissemination of their research results but also the evidence on how their results may lead to the improvement of societies. Considering the increasing relevance and presence of the dissemination of research, the results indicate that scientists still need to include in their dissemination and communication strategies the aim of sharing the social impact of their results. This implies the publication of concrete qualitative or quantitative evidence of the social impact obtained. With this strategy, citizens will pay more attention to the content published in social media because they are interested in knowing how science can contribute to improving their living conditions and in accessing crucial information. Sharing social impact in social media facilitates access to citizens of different ages, genders, cultural backgrounds and education levels. However, what is most relevant for our argument here is how citizens should also be able to participate in the evaluation of the social impact of research, with social media being a great source to reinforce this democratization process. This not only greatly improves the social impact assessment but also makes it much more accurate, because citizens contribute to it through social media, in addition to experts, policy makers and scientific publications.
Thus, citizens’ contribution to the dissemination of evidence of the social impact of research yields access to more diverse sectors of society and information that might be unknown by the research or political community. Two future steps are opened here. On the one hand, it is necessary to further examine the profiles of users who interact with this evidence of social impact considering the limitations of the privacy and availability of profile information. A second future task is to advance in the articulation of the role played by citizens’ participation in social impact assessment, as citizens can contribute to current worldwide efforts by shedding new light on this process of social impact assessment and contributing to making science more relevant and useful for the most urgent and poignant social needs.

Supporting information

S1 File. Interrater reliability (kappa) result.

This file contains the SPSS file with the result of the calculation of Cohen’s kappa regarding the interrater reliability. The Word document exported with the obtained result is also included.

https://doi.org/10.1371/journal.pone.0203117.s001

S2 File. Data collected and SICOR calculation.

This Excel file contains four sheets. The first, titled “data collected”, contains the number of tweets and Facebook posts collected through the three defined search strategies. The second, titled “sample”, contains the sample classified by project, indicating the ID of the message or code assigned, the type of message (tweet or Facebook post) and the coding done by the researchers, with 1 (is evidence of social impact, either potential or real) and 0 (is not evidence of social impact). The third, titled “evidence found”, contains the number and type of evidence of social impact found by project (ESISM-QUANESIM or ESISM-QUALESIM), search strategy and type of message (tweet or Facebook post). The last, titled “SICOR”, contains the Social Impact Coverage Ratio calculation by project in one table and by type of search strategy in another.

https://doi.org/10.1371/journal.pone.0203117.s002

Acknowledgments

The research leading to these results received funding from the 7th Framework Programme of the European Commission under Grant Agreement n° 613202. The extraction of available data using the list of searchable keywords on Twitter and Facebook followed the ethical guidelines for social media research supported by the Economic and Social Research Council (UK) [ 36 ] and the University of Aberdeen [ 37 ]. Furthermore, the research results have already been published and made public, and hence, there are no ethical issues.

- 6. Godin B, Dore C. Measuring the impacts of science; beyond the economic dimension. INRS Urbanisation, Culture et Société, HSIT Lecture. 2005. Helsinki, Finland: Helsinki Institute for Science and Technology Studies.

- 7. European Commission. Better regulation Toolbox. 2017: 129–130. Available from https://ec.europa.eu/info/sites/info/files/better-regulation-toolbox_0.pdf

- 8. European 2020 Strategy. 2010. Available from https://ec.europa.eu/info/business-economy-euro/economic-and-fiscal-policy-coordination/eu-economic-governance-monitoring-prevention-correction/european-semester/framework/europe-2020-strategy_en

- 10. Flecha R, Sordé-Martí T. SISM Methodology (Social Impact through Social Media). 2016. Available from https://archive.org/details/SISMMethodology

- 12. Statista. Statistics and facts about social media usage. 2017. Available from https://www.statista.com/topics/1164/social-networks/

- 16. FoodRisc Consortium, European Commission, Main researcher: Patrick Wall. FoodRisc—Result in Brief (Seventh Framework Programme). 2017. Available from http://cordis.europa.eu/result/rcn/90678_en.html

- 23. Murphy, L. Grit Report. Greenbook research industry trends report. Grit Q3-Q4 2016; (Vol. 3–4). New York. Retrieved from https://www.greenbook.org/grit

- 24. EC Research & Innovation. Success Stories [Internet]. 2017 [cited 2017 Apr 25]. Available from: http://ec.europa.eu/research/infocentre/success_stories_en.cfm

- 25. PhysOrg. How re-using food waste for animal feed cuts carbon emissions. 2016. Available from: https://phys.org/news/2016-04-re-using-food-animal-carbon-emissions.html

- 26. EuroFIT. EuroFIT International PP Youtube. 2016; minute 2.05–2.20. Available from: https://www.youtube.com/watch?time_continue=155&v=CHkbnD8IgZw

- 27. European Commission. Work Programme 2013. Cooperation Theme 1 Health. (2013). Available from: https://ec.europa.eu/research/participants/portal/doc/call/fp7/common/1567645-1._health_upd_2013_wp_27_june_2013_en.pdf

- 29. European Commission. Work Programme 2013. Cooperation Theme 2. Food, agriculture, and fisheries, and biotechnology. (2013). Available from: http://ec.europa.eu/research/participants/data/ref/fp7/192042/b-wp-201302_en.pdf

- 31. European Commission. Work Programme 2012. Cooperation Theme 6 Environment (including climate change). (2011). Available from: http://ec.europa.eu/research/participants/data/ref/fp7/89467/f-wp-201201_en.pdf

- 32. Research Information Center. Technology trialled in fight against ticking timebomb of obesity. 2016. Available from: http://www.euronews.com/2016/08/10/technology-trialled-in-fight-against-ticking-timebomb-of-obesity

- 33. European Commission. Work Programme 2013. Cooperation Theme 3. ICT (Information and Communication Technologies) (2012). Available from: http://ec.europa.eu/research/participants/data/ref/fp7/132099/c-wp-201301_en.pdf

- 34. Euronews. Big farmer is watching! Surveillance technology monitors animal wellbeing. 2016. Available from http://www.euronews.com/2016/05/09/big-farmer-is-watching-surveillance-technology-monitors-animal-wellbeing

- 36. Economic and Social Research Council. Social Media Best Practice and Guidance. Using Social Media. 2017. Available from: http://www.esrc.ac.uk/research/impact-toolkit/social-media/using-social-media/

- 37. Townsend, L. & Wallace, C. Social Media Research: A Guide to Ethics. 2016. Available from http://www.gla.ac.uk/media/media_487729_en.pdf

An Analysis of Demographic and Behavior Trends Using Social Media: Facebook, Twitter, and Instagram

Personality and character have major effects on certain behavioral outcomes. As technology advances, more people are using social media such as Facebook, Twitter, and Instagram. Because of social media's growing popularity, types of behavior are now easier to group and study. Knowing how users behave on social networking sites makes it possible to analyze similarities among behavior types and to predict what users post, as well as what they comment on, share, and like. However, very few review studies have grouped prior work according to similarities and differences in how it predicts the personality and behavior of individuals with the help of social networking sites such as Facebook, Twitter, and Instagram. Therefore, the purpose of this research is to collect data from previous studies and to analyze the methods they used. This chapter reviews 30 research studies, published from 2015 to 2017, on behavioral analysis using social media. We examined the methods of these publications and analyzed their results, limitations, and numbers of users to draw conclusions. Our results indicate that 50% of the studies were done on Twitter, 27% on Facebook, and 23% on Instagram. Twitter seems to be more popular than the other two platforms as a research subject, as there are more studies on it. Further, grouping the studies by year showed that in 2015 more research was done on Facebook to analyze the behavior of users, and that this trend decreased in the following year. However, more studies were done on Twitter in 2016 than on any other social media platform. The results also show the classifications based on the different methods used to analyze individual behavior.
However, most of the studies were done on Twitter, which appears to have attracted more research attention than Facebook and Instagram, particularly from 2015 to 2017, and more research needs to be done on other social media platforms in order to analyze the trending behaviors of users. This study should be useful for obtaining knowledge about the methods used to analyze user behavior, with their descriptions, limitations, and results. Although some researchers collect demographic information on users’ gender on Facebook, others on Twitter do not. This lack of demographic data, which is typically available in more traditional sources such as surveys, has created a new focus on developing methods to work out these traits as a means of expanding Big Data research.

1. Introduction

Technology has become a very important part of everyone's life. People of almost all ages use social media every day, with billions of users sending messages and sharing information, comments, and the like [1]. With the advancement of information technology, social networking sites such as Facebook, Twitter, Instagram, and LinkedIn allow users to interact with family, colleagues, and friends. As a result, social activities are shifting from the real world to the virtual one [2]. Analyzing the behavior of individuals on social networking sites is a complex task because several methods are in use. By gathering information from different resources and then analyzing that information, the behavior of the users can be examined. In this research, we have collected different studies about assessing human behavior with the help of social media and compared them according to the different methods used by different authors [3].

Knowing the personal preferences of users on social media is very important for businesses [4]. Companies can then target interested customers who are active on social media in related areas. By gathering information about user behavior patterns, the preferences of individuals can be identified [5]. Researchers have developed various methods to collect information about human intentions. In this research, our main aim is to analyze how information is analyzed in social media and how this information is useful. This research is useful because it surveys the methods that have been used to detect human behavior on different social media [6].

In this research, 30 research papers covering different social media platforms such as Facebook, Instagram, and Twitter were collected. After analyzing the data given in these papers, we examined the different methods used. In particular, the behavior of users was analyzed through aspects such as likes, comments, and shares on Facebook, Instagram, and Twitter [7].

The first section presents the material and methods used by the authors of the 30 studies to predict the behavior of social media users. This section includes data collection, data inclusion criteria, and data analysis [8]. The next section is the results section, which provides the statistical analysis and the percentage of research completed on different social media. It includes a table that organizes the research papers by year, along with pie chart figures, data collection and behavior analysis methods, and classifications based on different methods with line graphs [9]. The next section is a discussion on the given topic and the last section is the conclusion of this research work.

2. Material and Methods

Data were collected from different conference papers published by the IEEE. From these papers, different methods of analyzing user behavior [10] were assessed. This report is based on a review of the published articles and analyzes the methods they have used. The data are given in a tabular form.

2.1. Data Collection

Data were collected from 30 journal papers in the IEEE library regarding the analysis of user behavior using social media from 2015 to 2017. The collected data were related to Facebook, Twitter, and Instagram in different countries [11]. The attributes that were used for data collection were: applications, methods used, description of the method, number of users, limitations, and results. These raw data are presented in Table 1 [32].

Behavior Analysis Using Social Media Data Extracted From 30 Scientific Research Papers During 2015–17

2.2. Data Inclusion Criteria

The different data attributes used to analyze the papers are given in Table 1 . These included the following: author name, applications, methods used, detail of methods, number of users, limitations, and results. Data were gathered relating to different social networking sites [17]. In our analysis, the different methods that have been used by researchers to analyze user behavior are explored. In this research, three different social media datasets have been collected, which represent the methods and technologies used to understand the behavior of the users.

2.3. Analysis of Raw Data

The raw data presented in Table 1 specify the attributes that were used to conduct this research. We pooled and analyzed 30 studies based on the impact of the variables used in those studies. The descriptive details of the studies were then analyzed by publication year to observe the behavior of social media users from 2015 to 2017. Finally, we compared the methods the studies used to investigate user behavior.

This research included papers from the 3 years from 2015 to 2017. All papers used data from Facebook, Instagram, or Twitter.

3. Results

The aim of this research is to identify the methods used by researchers to predict the behavior of social media users. Data were collected based on the use of three social networking sites: Facebook, Instagram, and Twitter. A random user list was used to analyze the behavior. In our final analysis, we pooled the data, which showed a statistically significant difference in various parameters (published year, methods, results, and limitations) for the different social media sites. The results section includes the percentage of research on the three social networking sites, the research papers by year with bar graph representations, the data collection and behavior analysis methods, and a classification based on the different methods with line graph representations.

3.1. Statistical Analysis

We performed statistical analysis to organize the data and predict the trends based on the analysis. This showed the different social media sites used based on the data given in Table 1 .

As shown in Fig. 1 and Table 2 , 27% of the data were based on Facebook users, 23% on Instagram users, and 50% on Twitter users. Twitter was therefore used more than the other two social media sites for the analysis of user behavior.
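The percentage breakdown can be sketched in a few lines of Python. This is only an illustration: the per-platform counts of 15, 8, and 7 are an assumption chosen so that 30 studies reproduce the reported shares, since the text gives the percentages but not the underlying counts, and the function name `platform_shares` is hypothetical.

```python
from collections import Counter

# ASSUMPTION: one platform label per reviewed study. The counts (15, 8, 7)
# are illustrative values picked to match the reported percentages; they
# are not taken from Table 2.
platforms = ["Twitter"] * 15 + ["Facebook"] * 8 + ["Instagram"] * 7


def platform_shares(labels):
    """Return the share of studies per platform as a whole-number percentage."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {name: round(100 * n / total) for name, n in counts.items()}


shares = platform_shares(platforms)
print(shares)
```

Rounding to whole percentages mirrors how the shares are reported in the text; with other counts the rounded values may not sum to exactly 100.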

Analysis of different social media to predict the behavior of users.

Percentage of Number of Researches Completed in Three Different Social Media

3.2. Research Papers According to Year

Table 3 presents the data by year of publication. It indicates that most of the research in 2016 was completed on Twitter and that no research on the behavior of users was done on Facebook in 2017.
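A year-by-platform tabulation of this kind could be built as follows. This is a minimal sketch: the `studies` records are made-up placeholders, not the values of Table 3, and the helper names `tabulate_by_year` and `top_platform` are hypothetical.

```python
from collections import defaultdict

# ASSUMPTION: placeholder (year, platform) records for illustration only;
# they do not reproduce the counts in Table 3.
studies = [
    (2015, "Facebook"), (2015, "Facebook"), (2015, "Twitter"),
    (2016, "Twitter"), (2016, "Twitter"), (2016, "Instagram"),
    (2017, "Twitter"), (2017, "Instagram"),
]


def tabulate_by_year(records):
    """Count studies per platform within each publication year."""
    table = defaultdict(lambda: defaultdict(int))
    for year, platform in records:
        table[year][platform] += 1
    # Convert to plain dicts, ordered by year, for easy inspection.
    return {year: dict(row) for year, row in sorted(table.items())}


def top_platform(row):
    """Return the platform with the most studies in one year's row."""
    return max(row, key=row.get)


table = tabulate_by_year(studies)
for year, row in table.items():
    print(year, row, "most studied:", top_platform(row))
```

A platform with no studies in a given year is simply absent from that year's row, so `row.get("Facebook", 0)` is a safe way to read a possibly missing count.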

Number of Researches According to Year

Fig. 2 shows that most of the research studies were completed on Twitter in 2016. No study on behavior analysis was done on Facebook in 2017.

Research papers according to 2015–17.

3.3. Data Collection Method and Behavior Analysis Methods Used

Data collection techniques and behavior analysis methods used by different studies are shown in Table 4 .

Data Collection and Behavior Analysis Methods Used by Different Authors

3.4. Classification Based on Different Methods

The behavior of users can be analyzed using different methods as shown in Table 5 .

Classification Based on Different Methods to Analyze the Behavior of Users

Fig. 3 classifies the papers by the methods they used. It shows that researchers used analysis techniques more than any other category and rarely used coding rules.
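Because a single study can use more than one method, counting categories is a multi-label tally rather than a simple one-label-per-paper count. The sketch below shows one way to do this; the study identifiers and their method assignments are hypothetical, and only the category names "analysis techniques" and "coding rules" come from the text.

```python
from collections import Counter

# ASSUMPTION: hypothetical study-to-category assignments for illustration;
# they are not the classification reported in Table 5.
study_methods = {
    "study_a": ["analysis techniques", "machine learning"],
    "study_b": ["analysis techniques"],
    "study_c": ["coding rules", "analysis techniques"],
    "study_d": ["machine learning"],
}


def count_categories(mapping):
    """Count how many studies used each method category, most common first."""
    counts = Counter()
    for methods in mapping.values():
        # A set ensures each category is counted once per study.
        counts.update(set(methods))
    return counts.most_common()


ranking = count_categories(study_methods)
for category, n_studies in ranking:
    print(f"{category}: {n_studies} studies")
```

Counting each category once per study keeps a paper that lists the same category twice from inflating the totals.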

Classification based on different methods used by 30 different studies.

4. Discussion

In this analysis, we observed that the number of studies on Facebook and Instagram in the period from 2015 to 2017 was low, so there is a need for more research in these important areas.

This review study will help the readers to understand the different methods that the authors have used in their research studies on behavior analysis in social media.

An examination of the different methods of behavior analysis carried out with the help of social media is the main aim of this research. Thirty research studies were collected and analyzed to understand the personality of individuals who use social media such as Facebook, Twitter, and Instagram. Only three types of social network sites were included in this research. This analysis from the reported studies gives an overview of methods used to predict the personality of social media users.

As seen from Fig. 1 , 50% of the research from 2015 to 2017 was done on Twitter, whereas the other two social networking sites, Facebook and Instagram, accounted for only 27% and 23%, respectively. Moreover, some studies [14] , [21] proposed more than one method to analyze individuals’ behavior.

A major issue in this area is the security and privacy of the information that users put on social media. Some of the studies included in this review provided suggestions and methods to help secure the personal information of users. Many authors also discussed machine learning techniques for observing the personality of social networking site users.

The results showed that most of the research completed in 2016 was on Twitter rather than Facebook and Instagram. In 2015, most research was done on Facebook and the least on Instagram. In 2016, by contrast, Twitter had the highest number of research papers and Facebook had the lowest. In 2017, Twitter and Instagram had the highest number of research papers while Facebook had none at all.

Data collection and behavior analysis methods provided by authors were collected as raw data and analyzed. A classification based on the methods used by the authors for analysis was created.

Previous review studies did not include the limitations and number-of-users attributes in their analysis. We have included these two attributes in Table 1 to make the research more specific and easier for readers to understand [13].

The analysis of the papers indicated that Twitter has been the platform most used to predict the personality of social media users. Considering Table 1 , there is a need for more variety in research methods on Instagram to understand the behavior of users.

Bhagat et al. [4] used a cut-based classification method to analyze the behavior of Twitter users. From their analysis, these authors concluded that the cut-based classification method can be extended in the future to provide a GUI for users for polarity classification and subjectivity classification. Real-time user messaging can also be analyzed in the future [18].

This review study is based on the analysis of the behavior of individuals who use social networks in their daily life. It benefits readers by identifying the methods used by different researchers and the number of researchers who applied these methods. It also provides a clear description of the methods, limitations, and results reported by previous researchers in studies during 2015–17.

More than 37% of the world's population uses social media; however, the way social media users interact with each other varies greatly. Demographic and behavioral trends from Facebook, Twitter, and Instagram are discussed in Table 6 .

Demographic and Behaviour Trends From the Different Social Media

5. Conclusion

In this review paper, we have reviewed and analyzed data collected from 30 different published articles from 2015 to 2017 on the topic of behavior analysis using social media. We found that the researchers used 69 different methods to analyze their data. Among these methods, the most common category for analyzing the behavior of individuals was analysis techniques. From this study, it is clear that there is a need for more research to predict the personality and behavior of individuals on Instagram. This study found that 50% of the research was done on Twitter and that 11 different analysis techniques were used. While reviewing the research articles, it was clear that the researchers used more than one method for data collection and behavior analysis. Table 1 contains all the data analysis of the papers reviewed in the study. Furthermore, unlike past research papers, this review included the number-of-users and limitations attributes of the work done. The reviewed studies mostly focused on Twitter, with some research on Facebook and Instagram. In this research paper, we have attempted to fill the gap by including the number of users and limitation attributes. Finding solutions to the issues that have been discussed poses some challenges, but these issues require urgent attention. This study should be useful as a reference for researchers interested in the analysis of the behavior of social media users.

Author Contribution

A.S. and M.N.H. conceived the study idea and developed the analysis plan. A.S. analyzed the data and wrote the initial paper. M.N.H. helped in preparing the figures and tables, and in finalizing the manuscript. All authors read the manuscript.

Further Reading

OPINION article

Challenges and opportunities for human behavior research in the coronavirus disease (COVID-19) pandemic

- 1 Department of General Psychology, University of Padova, Padua, Italy

- 2 Department of Brain and Behavioral Sciences, University of Pavia, Pavia, Italy

The COVID-19 pandemic is a serious public health crisis that is causing major worldwide disruption. So far, the most widely deployed measures have been non-pharmacological interventions (NPIs), such as various forms of social distancing, pervasive use of personal protective equipment (PPE), such as facemasks, shields, or gloves, and hand washing and disinfection of fomites. These measures will very likely continue to be mandated in the medium or even long term until an effective treatment or vaccine is found ( Leung et al., 2020 ). Even beyond that time frame, many of these public health recommendations will have become part of individual lifestyles and hence continue to be observed. Moreover, it is implausible that the disruption caused by COVID-19 will dissipate soon. Analysis of transmission dynamics suggests that the disease could persist into 2025, with prolonged or intermittent social distancing in place until 2022 ( Kissler et al., 2020 ).

Human behavior research will be profoundly impacted beyond the stagnation resulting from the closure of laboratories during government-mandated lockdowns. In this viewpoint article, we argue that disruption provides an important opportunity for accelerating structural reforms already underway to reduce waste in planning, conducting, and reporting research ( Cristea and Naudet, 2019 ). We discuss three aspects relevant to human behavior research: (1) unavoidable, extensive changes in data collection and ensuing untoward consequences; (2) the possibility of shifting research priorities to aspects relevant to the pandemic; (3) recommendations to enhance adaptation to the disruption caused by the pandemic.

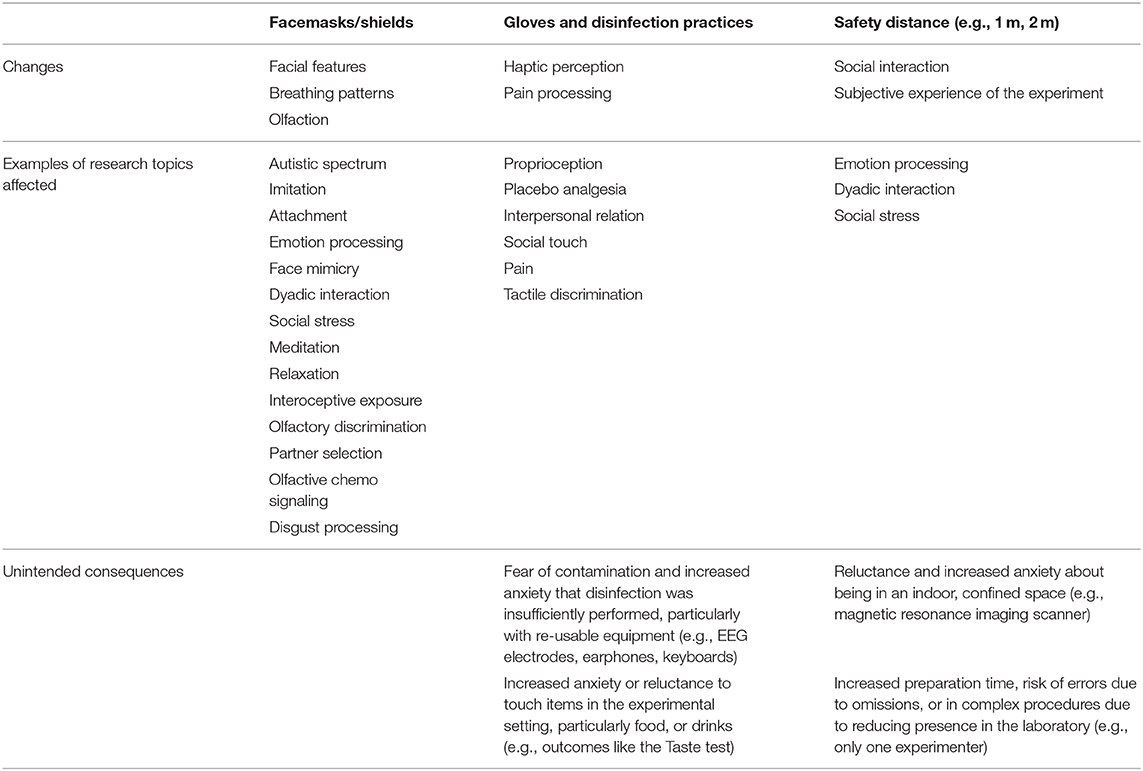

Data collection is very unlikely to return to the “old” normal for the foreseeable future. For example, neuroimaging studies usually involve placing participants in the confined space of a magnetic resonance imaging scanner. Studies measuring stress hormones, electroencephalography, or psychophysiology also involve close contact to collect saliva and blood samples or to place electrodes. Behavioral studies often involve interaction with persons who administer tasks or require that various surfaces and materials be touched. One immediate solution would be conducting “socially distant” experiments, for instance, by keeping a safe distance and making participants and research personnel wear PPE. Though data collection in this way would resemble pre-COVID times, it would come with a range of unintended consequences ( Table 1 ). First, it would significantly augment costs in terms of resources, training of personnel, and time spent preparing experiments. For laboratories or researchers with scarce resources, these costs could amount to a drastic reduction in the experiments performed, with an ensuing decrease in publication output, which might further affect the capacity to attract new funding and retain researchers. Secondly, even with the use of PPE, some participants might be reluctant or anxious to expose themselves to close and unnecessary physical interaction. Participants with particular vulnerabilities, like neuroticism, social anxiety, or obsessive-compulsive traits, might find the trade-off between risks and gains unacceptable. Thirdly, some research topics (e.g., face processing, imitation, emotional expression, dyadic interaction) or study populations (e.g., autistic spectrum, social anxiety, obsessive-compulsive) would become difficult to study with the current experimental paradigms ( Table 1 ). New paradigms can be developed, but they will need to first be assessed for reliability and validated, which will undoubtedly take time. Finally, generalized use of PPE by participants and personnel could alter the “usual” experimental setting, introducing additional biases, similarly to the experimenter effect ( Rosenthal, 1976 ).

Table 1 . Possible consequences of non-pharmacological interventions for COVID-19 on human behavior research.

Data collection could also adapt by leveraging technology, such as running experiments remotely via available platforms, for instance Amazon's Mechanical Turk (MTurk), where any task that is programmable with standard browser technology can be used ( Crump et al., 2013 ). Templates of already-programmed and easily customizable experimental tasks, such as the Stroop or Balloon Analog Risk Task, are also available on platforms like Pavlovia. Ecological momentary assessment is another feasible option, since it was conceived from the beginning for remote use, with participants logging in to fill in scales or activity journals in a naturalistic environment ( Shiffman et al., 2008 ). Increasingly affordable wearables can be used for collecting physiological data ( Javelot et al., 2014 ). Web-based research was already expanding before the pandemic, and the quality of the data collected in this way is comparable with that of laboratory studies ( Germine et al., 2012 ). Still, there are lingering issues. For instance, for some MTurk experiments, disparities have been evidenced between laboratory and online data collection ( Crump et al., 2013 ). Further clarifications about quality, such as consistency or interpretability ( Abdolkhani et al., 2020 ), are also needed for data collected using wearables.