- Deep Learning Research Proposal

Deep learning is the study and analysis of deep features hidden in data using deep neural network models. It has recently become one of the most important research paradigms for advanced automated decision-making systems. Deep learning is a branch of machine learning that learns through a hierarchy of concepts, which makes it well suited to complex, lengthy mathematical computations.

This page describes innovations in deep learning research proposals, along with the major challenges, techniques, limitations, and tools.

One of the most important aspects of deep learning is its multi-layered approach, which lets the machine build and run algorithms across different layers for deep analysis. It works on the principle of artificial neural networks, which are inspired by the way the human brain functions, so that machines can automatically understand a situation and make smart decisions accordingly. Here are some of the important real-time applications of deep learning.

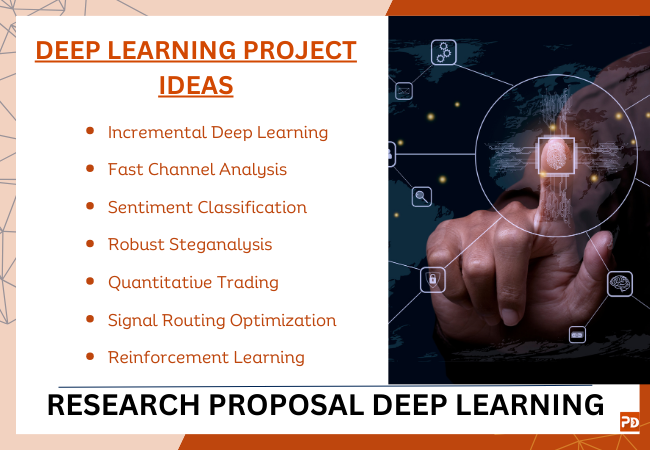

Deep Learning Project Ideas

- Natural Language Processing

- Pattern detection in Human Face

- Image Recognition and Object Detection

- Driverless UAV Control Systems

- Prediction of Weather Condition Variation

- Machine Translation for Autonomous Cars

- Medical Disorder Diagnosis and Treatment

- Traffic and Speed Control in Motorized Systems

- Voice Assistance for Dense Areas Navigation

- Altitude Control System for UAV and Satellites

Next, we look at the workflow of deep learning models. The steps below give the general procedure for executing a deep learning model, and we guide you precisely through each step of your proposed model. The steps may vary with the requirements of the chosen project idea. In every case, the model is intended to capture deep features of the data by processing it through neural networks, so that the machine learns to handle unexpected scenarios when controlling systems.

Process Flow of Deep Learning

- Step 1 – Load the dataset as input

- Step 2 – Extraction of features

- Step 3 – Process add-on layers for more abstract features

- Step 4 – Perform feature mapping

- Step 5 – Display the output
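The five steps above can be sketched as a single forward pass through a small network. This is only an illustration under stated assumptions: the toy input, the layer sizes, and the random (untrained) weights are all made up for the example, and NumPy stands in for a real deep learning framework.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    # Subtract the row maximum for numerical stability.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def forward(x, params):
    """Run inputs through two hidden layers and an output layer."""
    h1 = relu(x @ params["W1"] + params["b1"])   # Step 2: first-level features
    h2 = relu(h1 @ params["W2"] + params["b2"])  # Step 3: more abstract features
    logits = h2 @ params["W3"] + params["b3"]    # Step 4: map features to classes
    return softmax(logits)

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))  # Step 1: toy "dataset" of 4 samples with 8 inputs each

# Hypothetical, untrained weights; a real model would learn these from data.
params = {
    "W1": rng.normal(scale=0.1, size=(8, 16)), "b1": np.zeros(16),
    "W2": rng.normal(scale=0.1, size=(16, 8)), "b2": np.zeros(8),
    "W3": rng.normal(scale=0.1, size=(8, 3)), "b3": np.zeros(3),
}

probs = forward(x, params)
print(probs.argmax(axis=1))  # Step 5: display the predicted class per sample
```

Because the weights are random, the printed classes are meaningless; the point is only the order of the steps.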

Although deep learning learns features automatically and more efficiently than conventional methods, it has some technical constraints. We list only a few here to make you aware of current research. Beyond these primary constraints, we have also collected many others. To learn about other research limitations in deep learning, approach us. We will help you understand more across the top research areas.

Deep Learning Limitations

- Test Data Variation – When the test data differs from the training data, the deep learning technique may fail. It also may not work efficiently outside a controlled environment.

- Huge Dataset – Deep learning models work more efficiently on large-scale datasets than on limited data
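The test data variation problem can be illustrated with a toy experiment. The sketch below is an assumption-laden stand-in, not a deep learning model: it uses a one-dimensional threshold classifier on synthetic Gaussian data, and the class centres and the shift of 3.0 are arbitrary values chosen for the illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_data(n, shift=0.0):
    """Two 1-D Gaussian classes centred at -2 and +2, optionally shifted."""
    x0 = rng.normal(-2.0 + shift, 1.0, size=n)
    x1 = rng.normal(+2.0 + shift, 1.0, size=n)
    x = np.concatenate([x0, x1])
    y = np.concatenate([np.zeros(n, dtype=int), np.ones(n, dtype=int)])
    return x, y

# "Train": place the decision threshold midway between the two class means.
x_train, y_train = make_data(1000)
threshold = 0.5 * (x_train[y_train == 0].mean() + x_train[y_train == 1].mean())

def accuracy(x, y):
    """Fraction of samples whose thresholded prediction matches the label."""
    return float(((x > threshold).astype(int) == y).mean())

acc_same = accuracy(*make_data(1000))                 # test data like the training data
acc_shifted = accuracy(*make_data(1000, shift=3.0))   # test data drawn after a shift
print(f"same distribution: {acc_same:.2f}, shifted: {acc_shifted:.2f}")
```

The same model scores noticeably lower once the test data moves away from what it was trained on, which is the failure mode described in the first limitation.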

Our research team is highly proficient in handling different deep learning technologies. To give you up-to-date information, we constantly refresh our knowledge of advanced developments. As a result, we are good not only at selecting research challenges but also at developing novel solutions. Below are some of the most common data handling issues with appropriate solutions.

What are the data handling techniques?

- Each variable is expressed as a linear combination of factors plus an error term

- Depends on the presence of different unobserved variables (i.e., assumption)

- Identify the correlations between existing observed variables

- If the data in a column has fixed values, then it has “0” variance.

- Further, these kinds of variables are not considered in target variables

- If there are issues with outliers, variables, and missing values, then effective feature selection will help you get rid of them.

- So, we can employ the random forest method

- Remove the unwanted features from the model

- Repeat the same process until the maximum tolerable error rate is reached

- At last, define the minimum features

- Remove one at a time and check the error rate

- If there are dependent values among data columns, then the columns may carry redundant information because of their similarity.

- So, we can filter the largely correlated columns based on coefficients of correlation

- Add one at a time for high performance

- Enhance the entire model efficiency

- Addresses the possibility where data points are associated with high-dimensional space

- Select low-dimensional embedding to generate related distribution

- Identify the missing value columns and remove them by threshold

- Present variable set is converted to a new variable set

- Each new variable is a linear combination of the original variables

- Determine the location of each point from the pairwise distances among all points, which are represented in a matrix

- Further, use standard multi-dimensional scaling (MDS) to determine the locations of the low-dimensional points
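Three of the filters listed above (removing columns with too many missing values, dropping zero-variance columns, and filtering highly correlated columns) can be sketched in a few lines of NumPy. The function names, the thresholds (0.4, 1e-8, 0.9), and the synthetic data are assumptions made for this illustration, not values prescribed by the list.

```python
import numpy as np

def missing_value_filter(X, max_missing=0.4):
    """Indices of columns whose fraction of NaN entries is at most max_missing."""
    frac = np.isnan(X).mean(axis=0)
    return np.where(frac <= max_missing)[0]

def low_variance_filter(X, min_var=1e-8):
    """Indices of columns whose variance exceeds min_var (constant columns are dropped)."""
    return np.where(np.nanvar(X, axis=0) > min_var)[0]

def high_correlation_filter(X, max_corr=0.9):
    """Greedily keep a column only if |corr| with every kept column is <= max_corr."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    kept = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= max_corr for k in kept):
            kept.append(j)
    return np.array(kept)

# Synthetic data: column 1 nearly duplicates column 0, column 3 is constant,
# and column 4 is mostly missing.
rng = np.random.default_rng(0)
n = 200
a = rng.normal(size=n)
b = rng.normal(size=n)
mostly_missing = rng.normal(size=n)
mostly_missing[:150] = np.nan
X = np.column_stack([a, 2 * a + 0.01 * rng.normal(size=n), b,
                     np.full(n, 5.0), mostly_missing])

# Apply the filters in sequence, tracking the original column indices.
cols = np.arange(X.shape[1])
cols = cols[missing_value_filter(X[:, cols])]
cols = cols[low_variance_filter(X[:, cols])]
cols = cols[high_correlation_filter(X[:, cols])]
print(cols)
```

The correlation filter runs last on purpose: correlation is undefined for constant columns and for columns containing NaN, so those are removed first.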

In addition, we list the deep learning models widely used in current research. The models fall into two major classes: discriminative models and generative models. We also match the deep learning process with suitable techniques. For complex situations, we design new algorithms based on the project’s needs. On the whole, we find apt solutions for any sort of problem.

Deep Learning Models

- CNN and NLP (Hybrid)

- Domain-specific

- Image conversion

- Meta-Learning

Furthermore, our developers would like to share the globally recommended deep learning software and tools. We have thorough practical experience with all of these technologies, so we can offer fine-tuned guidance on deep learning libraries, modules, packages, toolboxes, and so on to ease your development process. We will also suggest the best-fitting software or tool for your project, one that makes the implementation of your deep learning techniques simpler and more reliable.

Deep Learning Software and Tools

- Caffe & Caffe2

- Deeplearning4j

- Microsoft Cognitive Toolkit

So far, we have discussed important research updates in deep learning. Now, we turn to the importance of choosing a good research topic for an impressive deep learning research proposal. The research topic should outline your research by stating the research problem and an efficient solution. It is also necessary to check the future scope of research on that particular topic.

A topic without a future research direction is not worth researching.

For more clarity, here we have given you a few significant tips to select a good deep learning research topic.

How to write a research paper on deep learning?

- Check whether your selected research problem is inspiring to overcome but not too complex to solve

- Check whether your selected problem not only inspires you but also creates interest among readers and followers

- Check whether your proposed research contributes to social development

- Check whether your selected research problem is unique

From the above list, you can get an idea about what exactly a good research topic is. Now, we can see how a good research topic is identified.

- To recognize the best research topic, first study recent deep learning work in depth by referring to the latest papers in reputed journals.

- Then, review the collected papers to find out what the current research limitations are, which aspects have not been addressed yet, which problems have not been solved effectively, which solutions need improvement, which techniques recent research follows, and so on.

- This literature review takes considerable time and effort, but it builds an understanding of what research the scholarly community is asking for.

- If you are new to the field, it is advisable to seek advice from field experts, who can recommend good and resourceful research papers.

- In most cases, the drawbacks of existing research are framed as the problem for which new research solutions are proposed.

- It is usually better to work on research areas with plentiful resources than on areas with limited references.

- When you find the desired research idea, immediately check its originality. Make sure that no one has already proved your research idea.

- It is better to discover this at the initial stage, so that you can choose another idea if necessary.

- For this check, search keywords matter a great deal, because someone may already have conducted the same research under a different name. So, choose the keywords for your literature study carefully.

How to describe your research topic?

One common mistake beginners make in topic selection is a misunderstanding of what a topic is. Some researchers think that selecting a topic just means choosing the title of the project. It is more than that: the topic has to convey detailed information about your research work in a short, crisp form. In other words, the research topic should act as an outline of your research work.

For instance, “deep learning for disease detection” is not a topic with clear information. You can add details such as the type of deep learning technique, the type of image and how it is processed, the part of the body, the symptoms, and so on.

A modified research topic for “deep learning for disease detection” is “COVID-19 detection using automated deep learning algorithm”.

Here are some key points to focus on while framing a research topic. To define your research topic clearly, we recommend writing some text explaining:

- Research title

- Previous research constraints

- Importance of the problem that the proposed research overcomes

- Reasons for the challenges in the research problem

- Outline of problem-solving possibility

Finally, we look at the different research perspectives on deep learning within the research community. Below are some of the most in-demand research topics in deep learning, such as image denoising, moving object detection, and event recognition. In addition to this list, we also maintain a repository of recent deep learning research proposal topics and machine learning thesis topics. Contact us to learn about advanced research ideas in deep learning.

Research Topics in Deep Learning

- Continuous Network Monitoring and Pipeline Representation in Temporal Segment Networks

- Dynamic Image Networks and Semantic Image Networks

- Advance Non-uniform denoising verification based on FFDNet and DnCNN

- Efficient image denoising based on ResNets and CNNs

- Accurate object recognition in deep architecture using ResNeXts, Inception Nets and Squeeze and Excitation Networks

- Improved object detection using Faster R-CNN, YOLO, Fast R-CNN, and Mask-RCNN

Overall, we are ready to support you in all significant and new research areas of deep learning. We guarantee a novel deep learning research proposal in your area of interest, with writing support. We also offer code development, paper writing, paper publication, and thesis writing services. Work with us to build a strong foundation for your research career in deep learning.


reason.town

How to Write a Machine Learning Research Proposal


If you want to get into machine learning, you first need to get past the research proposal stage. We’ll show you how.


A machine learning research proposal is a document that summarizes your research project, methods, and expected outcomes. It is typically used to secure funding for your project from a sponsor or institution, and can also be used by peers to assess your project. Your proposal should be clear, concise, and well-organized. It should also provide enough detail to allow reviewers to assess your project’s feasibility and potential impact.

In this guide, we will cover the basics of what you need to include in a machine learning research proposal. We will also provide some tips on how to create a strong proposal that is more likely to be funded.

A machine learning research proposal is a document that describes a proposed research project that uses machine learning algorithms and techniques. The proposal should include a brief overview of the problem to be tackled, the proposed solution, and the expected results. It should also briefly describe the dataset to be used, the evaluation metric, and any other relevant details.

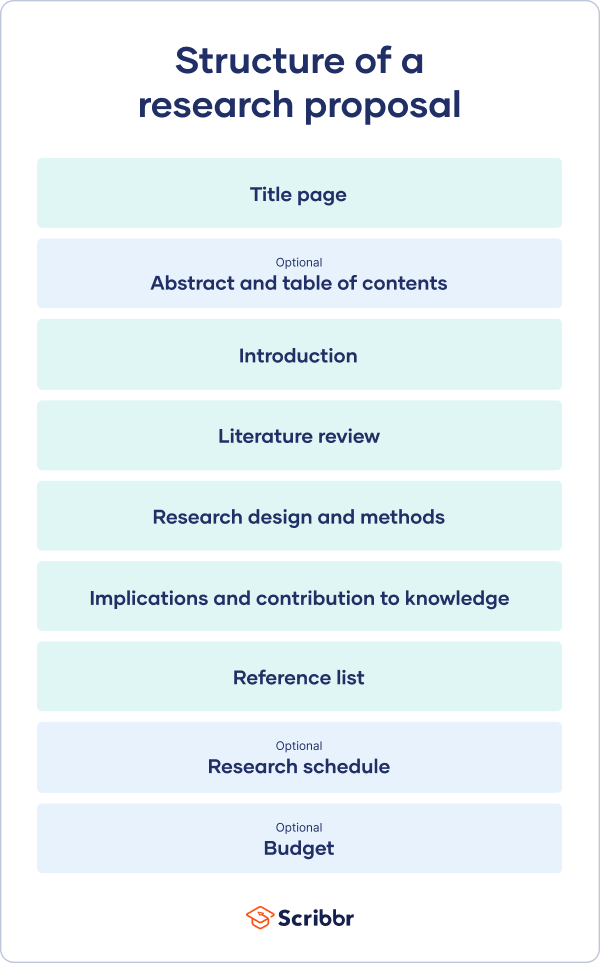

There is no one-size-fits-all structure for a machine learning research proposal: it will vary depending on the specific research question you are proposing to answer, the methods you plan to use, and the overall focus of your proposal. However, there are some general principles that all good proposals should follow.

In general, a machine learning research proposal should include:

-A summary of the problem you are trying to solve and the motivation for solving it

-A brief overview of previous work in this area, including any relevant background information

-A description of your proposed solution and a discussion of how it compares to existing approaches

-An evaluation plan outlining how you will evaluate the effectiveness of your proposed solution

-A discussion of any potential risks or limitations associated with your proposed research

Useful tips for writing a machine learning research proposal:

-Your proposal should address a specific problem or question in machine learning.

-Before writing your proposal, familiarize yourself with the existing literature in the field. Your proposal should build on the existing body of knowledge and contribute to the understanding of the chosen problem or question.

-Your proposal should be clear and concise. It should be easy for non-experts to understand what you are proposing and why it is important.

-Your proposal should be well organized. Include a brief introduction, literature review, methodology, expected results, and significance of your work.

-Make sure to proofread your proposal carefully before submitting it.

A machine learning research proposal is a document that outlines the problem you want to solve with machine learning, the methods you will use to solve it, the data you will use, and the anticipated results. This guide provides an overview of what should be included in a machine learning research proposal so that you can get started on writing your own.

1. Introduction

2. Problem statement

3. Methodology

4. Data

5. Evaluation

6. References

A machine learning research proposal is a document that outlines the rationale for a proposed machine learning research project. The proposal should convince potential supervisors or funding bodies that the project is worthwhile and that the researcher is competent to undertake it.

The proposal should include:

– A clear statement of the problem to be addressed or the question to be answered

– A review of relevant literature

– An outline of the proposed research methodology

– A discussion of the expected outcome of the research

– A realistic timeline for completing the project

A machine learning research proposal is not just a formal exercise; it is an opportunity to sell your idea to potential supervisors or funding bodies. Take advantage of this opportunity by doing your best to make your proposal as clear, concise, and convincing as possible.

Your machine learning research proposal is your chance to sell your project to potential supervisors and funders. It should be clear, concise and make a strong case for why your project is worth undertaking.

A well-written proposal will convince others that you have a worthwhile project and that you have the necessary skills and experience to complete it successfully. It will also help you to clarify your own ideas and focus your research.

Writing a machine learning research proposal can seem like a daunting task, but it doesn’t have to be. If you take it one step at a time, you’ll be well on your way to writing a strong proposal that will get the support you need.

In order to make your machine learning research proposal stand out, you will need to do several things. First, make sure that your proposal is well written and free of grammatical errors. Second, make sure that your proposal is clear and concise. Third, make sure that your proposal is organized and includes all of the necessary information. Finally, be sure to proofread your proposal carefully before submitting it.

The benefits of writing a machine learning research proposal go beyond helping you get funding for your project. A good proposal will also force you to think carefully about your problem and how you plan to solve it. This process can help you identify potential flaws in your approach and make sure that your project is as strong as possible before you start.

It can also be helpful to have a machine learning research proposal on hand when you’re talking to potential collaborators or presenting your work to a wider audience. A well-written proposal can give people a clear sense of what your project is about and why it’s important, which can make it easier to get buy-in and find people who are excited to work with you.

In short, writing a machine learning research proposal is a valuable exercise that can help you hone your ideas and make sure that your project is as strong as possible before you start.

Here are some further resources on writing machine learning research proposals:

– How to Write a Machine Learning Research Paper: https://MachineLearningMastery.com/how-to-write-a-machine-learning-research-paper/

– 10 Tips for Writing a Machine Learning Research Paper: https://blog.MachineLearning.net/10-tips-for-writing-a-machine-learning-research-paper/

Please also see our other blog post on writing research proposals: https://www.MachineLearningMastery.com/how-to-write-a-research-proposal/


EXPANSE, A Continual Deep Learning System; Research Proposal


Deep-learning Enhanced Healthcare Modeling and Optimization - Research - College of Engineering - Purdue University

Deep-learning Enhanced Healthcare Modeling and Optimization

Project Description

In the age of big data analytics, one must consider the continuum from predictive to prescriptive analytics to help managers to improve their day-to-day operations in large-scale healthcare systems. These systems often run under uncertainties and in rapidly changing environments. Good prescriptive management solutions require building high-fidelity models that are adaptive to the changing environment. Consequently, a framework for learning stochastic models from data in this setting is imperative. These learnt models need to be seamlessly integrated with data-driven prescriptive methods to optimize system operations.

In this research project, we work closely with the largest hospital systems in the state of Indiana and propose a methodological framework that collaboratively leverages deep learning and stochastic process theory to revolutionize workload prediction and resource planning, such as capacity and staffing. These developments are expected to enable fundamental improvements in short-term and long-term operations for healthcare delivery. Our research agenda, in support of this broader goal, includes (i) a novel framework for inferring stochastic models of time-varying, large-scale healthcare systems; (ii) a robust resource allocation framework that accounts for model uncertainty and natural stochastic variation; (iii) integration and deployment of our algorithms into all 16 hospitals belonging to the collaborating healthcare system.

Postdoc Qualifications

Applicants hold (or are about to complete) a PhD in Operations Research, Industrial Engineering, Applied Mathematics, Electrical Engineering or a related discipline. A strong background in stochastic modeling and optimization methods is required. Research experience in statistics/machine learning/deep learning would be a great advantage. A willingness to learn the fundamental theory and methods of statistics/machine learning/deep learning is necessary. Programming skills, fluency in English and excellent communication and presentation skills are essential.

Co-Advisors

Harsha Honnappa, School of Industrial Engineering, engineering.purdue.edu/SSL

Pengyi Shi, Krannert School of Business, https://web.ics.purdue.edu/~shi178

“Estimating Stochastic Poisson Intensities Using Deep Latent Models”, R. Wang, P. Jaiswal and H. Honnappa, Proceedings of the Winter Simulation Conference (2020).

“Timing it Right: Balancing Inpatient Congestion versus Readmission Risk at Discharge”, P. Shi, J. E. Helm, J. Deglise-Hawkinson, and J. Pan, Operations Research, forthcoming.

“The ∆(i)/GI/1 Queueing Model, and its Fluid and Diffusion Approximations”, H. Honnappa, R. Jain and A. R. Ward, Queueing Systems: Theory and Applications, 80.1-2 (2015): 71-103.

“Inpatient Bed Overflow: An Approximate Dynamic Programming Approach”, J. G. Dai and P. Shi, Manufacturing and Service Operations Management, 21(4) (2019): 894-911.

RESEARCH PROPOSAL DEEP LEARNING

Get your deep learning proposal work from highly trained professionals. Your passion for your areas of interest will be clearly reflected in your proposal. Choose an expert to provide you with custom research proposal work. To understand the current state of the art, its historical context, and future scope, we carry out a literature survey in Deep Learning (DL) through the following steps.

- Define Objectives:

- Clearly outline what we need to accomplish and how comprehensive the survey should be.

- For example, take transformers in Natural Language Processing (NLP) and note their specific tasks and issues.

- Primary Sources:

- Research Databases: We can use databases such as Google Scholar, arXiv, PubMed (for biomedical papers), IEEE Xplore, and others.

- Conferences: NeurIPS, ICML, ICLR, CVPR, ICCV, ACL, and EMNLP are the main conferences in DL.

- Journals: The Journal of Machine Learning Research (JMLR) and Neural Computation frequently publish DL-related studies.

- Start with Reviews and Surveys:

- Find the latest survey and review papers in our area of interest; they give an outline of the literature and often point to the seminal and latest works.

- For example, if we are researching Convolutional Neural Networks (CNNs), begin with a survey paper on CNN architectures.

- Reading Papers:

- Skim: Begin by reading abstracts, introductions, conclusions, and figures.

- Deep Dive: When a study is highly relevant to our work, look in depth at its methodology, experiments, and results.

- Take Notes: Note down the main ideas, methods, datasets, evaluation metrics, and open issues described in the paper.

- Forward and Backward Search:

- Forward: We can see how the area is evolving by using tools such as Google Scholar’s “Cited by” feature to find the latest papers that cite a work.

- Backward: We can trace how ideas developed by following a paper’s references, which gives more background for our study.

- Organize and Combine:

- Classify the papers by theme, methodology, and version.

- Analyze the trends, patterns, and gaps in the literature.

- Keep Updated:

- Because DL is a fast-moving area, we need to stay up to date by setting alerts on services such as Google Scholar and arXiv for keywords related to our topic.

- Tools and Platforms:

- Use tools such as Mendeley, Zotero, and EndNote for managing and citing papers.

- Find similar papers through the AI-driven suggestions of the Semantic Scholar platform.

- Engage with the Community:

- Join mailing lists, social media groups, and online forums related to DL. Websites such as Reddit’s r/MachineLearning or the AI Alignment Forum frequently discuss the latest papers.

- Attending webinars, workshops, and conferences regularly helps us learn recent techniques and find out what the community considers important.

- Report and Share:

- If we want to publish the work, prepare annotated bibliographies, presentations, or review papers according to our goal, and document the research.

- Sharing our survey helps others and establishes us as knowledgeable in the topic.

The objective of this review is to critically identify and integrate the current content in the area. Though it is time-consuming work, it will be useful for anyone who aims to do research and follow the latest work in DL.

Deep Learning Project: Face Recognition with Python and OpenCV

Designing a face recognition system using Python and OpenCV is a rewarding project that introduces us to the world of computer vision and DL. The following is a step-by-step guide to building a simple face recognition system:

- Install Necessary Libraries

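Make sure that the required libraries are installed. The LBPH recognizer used below lives in the cv2.face module, which ships with the opencv-contrib-python package rather than opencv-python, and the training step also needs NumPy and Pillow:

pip install opencv-contrib-python numpy pillow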

- Capture Faces

We require a dataset for training. We can use a pre-defined dataset or capture our own using OpenCV.

    import os
    import cv2

    os.makedirs('faces', exist_ok=True)  # output folder for the captured samples
    cam = cv2.VideoCapture(0)
    detector = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')
    user_id = input('Enter user ID: ')
    sampleNum = 0
    while True:
        ret, img = cam.read()
        if not ret:  # stop if the camera returns no frame
            break
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        faces = detector.detectMultiScale(gray, 1.3, 5)
        for (x, y, w, h) in faces:
            sampleNum += 1
            # save the cropped grayscale face as faces/User.<id>.<sample>.jpg
            cv2.imwrite(f"faces/User.{user_id}.{sampleNum}.jpg", gray[y:y+h, x:x+w])
            cv2.rectangle(img, (x, y), (x+w, y+h), (255, 0, 0), 2)
        cv2.imshow('Capture', img)
        cv2.waitKey(100)  # short pause between frames
        if sampleNum >= 20:  # capture 20 images
            break
    cam.release()
    cv2.destroyAllWindows()

- Training the Recognizer

OpenCV has a built-in face recognizer. For this example, we’ll use the LBPH (Local Binary Pattern Histogram) face recognizer.

    import os
    import cv2
    import numpy as np
    from PIL import Image

    path = 'faces'
    os.makedirs('trainer', exist_ok=True)  # folder for the trained model
    recognizer = cv2.face.LBPHFaceRecognizer_create()  # needs opencv-contrib-python
    detector = cv2.CascadeClassifier(cv2.data.haarcascades + 'haarcascade_frontalface_default.xml')

    def getImagesAndLabels(path):
        imagePaths = [os.path.join(path, f) for f in os.listdir(path)]
        faceSamples = []
        ids = []
        for imagePath in imagePaths:
            PIL_img = Image.open(imagePath).convert('L')  # load as grayscale
            img_numpy = np.array(PIL_img, 'uint8')
            # file names look like User.<id>.<sample>.jpg
            label = int(os.path.split(imagePath)[-1].split('.')[1])
            faces = detector.detectMultiScale(img_numpy)
            for (x, y, w, h) in faces:
                faceSamples.append(img_numpy[y:y+h, x:x+w])
                ids.append(label)
        return faceSamples, np.array(ids, dtype=np.int32)

    faces, ids = getImagesAndLabels(path)
    recognizer.train(faces, ids)
    recognizer.save('trainer/trainer.yml')
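To give a feel for what a Local Binary Pattern histogram is, here is a simplified NumPy sketch of the basic 3x3 LBP operator. It is an illustration only, not OpenCV's implementation: the function names are hypothetical, and the real recognizer additionally uses a configurable radius and neighbour count and splits the face into a grid of cells whose histograms are concatenated.

```python
import numpy as np

def lbp_image(gray):
    """Basic 3x3 LBP code for every interior pixel of a 2-D uint8 array."""
    g = gray.astype(np.int32)
    H, W = g.shape
    center = g[1:-1, 1:-1]
    # Eight neighbours, clockwise starting from the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros_like(center)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = g[1 + dy:H - 1 + dy, 1 + dx:W - 1 + dx]
        # Set this bit where the neighbour is at least as bright as the centre.
        codes |= (neighbour >= center).astype(np.int32) << bit
    return codes.astype(np.uint8)

def lbp_histogram(gray):
    """Normalized 256-bin histogram of LBP codes (a simple texture descriptor)."""
    codes = lbp_image(gray)
    hist = np.bincount(codes.ravel(), minlength=256).astype(np.float64)
    return hist / hist.sum()

# Toy usage on a random "image"; a real input would be a cropped grayscale face.
rng = np.random.default_rng(0)
toy = rng.integers(0, 256, size=(32, 32), dtype=np.uint8)
descriptor = lbp_histogram(toy)
print(descriptor.shape)
```

Two faces can then be compared by measuring the distance between their histograms, which is why the recognizer reports a distance-like confidence value.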

- Recognizing Faces

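    import cv2

    recognizer = cv2.face.LBPHFaceRecognizer_create()
    recognizer.read('trainer/trainer.yml')
    cascadePath = cv2.data.haarcascades + 'haarcascade_frontalface_default.xml'
    faceCascade = cv2.CascadeClassifier(cascadePath)
    font = cv2.FONT_HERSHEY_SIMPLEX
    cam = cv2.VideoCapture(0)
    minW = 0.1 * cam.get(3)  # minimum face width: 10% of the frame width
    minH = 0.1 * cam.get(4)  # minimum face height: 10% of the frame height
    while True:
        ret, img = cam.read()
        if not ret:  # stop if the camera returns no frame
            break
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        faces = faceCascade.detectMultiScale(
            gray,
            scaleFactor=1.2,
            minNeighbors=5,
            minSize=(int(minW), int(minH)),
        )
        for (x, y, w, h) in faces:
            id, confidence = recognizer.predict(gray[y:y+h, x:x+w])
            # LBPH returns a distance, so a lower value means a closer match
            if confidence < 100:
                confidence = f" {round(100 - confidence)}%"
            else:
                id = "unknown"
            cv2.putText(img, str(id), (x+5, y-5), font, 1, (255, 255, 255), 2)
            cv2.putText(img, str(confidence), (x+5, y+h-5), font, 1, (255, 255, 0), 1)
        cv2.imshow('Face Recognition', img)
        if cv2.waitKey(1) & 0xFF == ord('q'):  # press q to quit
            break
    cam.release()
    cv2.destroyAllWindows()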

Make sure the faces and trainer directories exist before running the scripts. This gives a basic face recognition system, which can be strengthened with DL models for better accuracy and robustness under varying real-time conditions, for example with DL-based techniques such as FaceNet or pre-trained models from DL frameworks.
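Embedding-based approaches such as FaceNet map each face to a vector and recognize a person by comparing vectors. The sketch below shows only that comparison step under loud assumptions: the three-dimensional embeddings, the names, and the 0.8 threshold are invented for the illustration, and in practice the vectors would come from a pre-trained model.

```python
import numpy as np

def identify(query, gallery, threshold=0.8):
    """Return (name, distance) of the closest gallery embedding, or 'unknown'."""
    q = query / np.linalg.norm(query)  # compare unit-length vectors
    best_name, best_dist = "unknown", float("inf")
    for name, emb in gallery.items():
        dist = float(np.linalg.norm(q - emb / np.linalg.norm(emb)))
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_dist > threshold:  # too far from everyone in the gallery
        best_name = "unknown"
    return best_name, best_dist

# Hypothetical embeddings; real ones typically have 128 or more dimensions.
gallery = {
    "alice": np.array([1.0, 0.0, 0.0]),
    "bob": np.array([0.0, 1.0, 0.0]),
}
name_a, dist_a = identify(np.array([0.9, 0.1, 0.0]), gallery)
name_b, dist_b = identify(np.array([0.0, 0.0, 1.0]), gallery)
print(name_a, name_b)
```

The threshold controls the trade-off between rejecting known people and accepting strangers, so it would need tuning on real data.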

Deep learning MS Thesis topics

Have a conversation with our faculty members to find the topics that best match your interests. Some unique topic ideas are shared below; contact us for more support.

- Modulation Recognition based on Incremental Deep Learning

- Fast Channel Analysis and Design Approach using Deep Learning Algorithm for 112Gbs HSI Signal Routing Optimization

- Deep Learning of Process Data with Supervised Variational Auto-encoder for Soft Sensor

- Methodological Principles for Deep Learning in Software Engineering

- Recent Trends in Deep Learning for Natural Language Processing and Scope for Asian Languages

- Adding Context to Source Code Representations for Deep Learning

- Weekly Power Generation Forecasting using Deep Learning Techniques: Case Study of a 1.5 MWp Floating PV Power Plant

- A Study of Deep Learning Approaches and Loss Functions for Abundance Fractions Estimation

- A Trustless Federated Framework for Decentralized and Confidential Deep Learning

- Research on Financial Data Analysis Based on Applied Deep Learning in Quantitative Trading

- A Deep Learning model for day-ahead load forecasting taking advantage of expert knowledge

- Locational marginal price forecasting using Transformer-based deep learning network

- H-Stegonet: A Hybrid Deep Learning Framework for Robust Steganalysis

- Comparison of Deep Learning Approaches for Sentiment Classification

- An Unmanned Network Intrusion Detection Model Based on Deep Reinforcement Learning

- Indoor Object Localization and Tracking Using Deep Learning over Received Signal Strength

- Analysis of Deep Learning 3-D Imaging Methods Based on UAV SAR

- Research and improvement of deep learning tool chain for electric power applications

- Hybrid Intrusion Detector using Deep Learning Technique

- Non-Trusted user Classification-Comparative Analysis of Machine and Deep Learning Approaches




Best Deep Learning Research of 2021 So Far

Deep Learning, Modeling, Research. Posted by Daniel Gutierrez, ODSC, August 2, 2021

The discipline of AI most often mentioned these days is deep learning (DL) along with its many incarnations implemented with deep neural networks. DL also is a rapidly accelerating area of research with papers being published at a fast clip by research teams from around the globe.

I enjoy keeping a pulse on deep learning research and so far in 2021 research innovations have propagated at a quick pace. Some of the top topical areas for deep learning research are: causality, explainability/interpretability, transformers, NLP, GPT, language models, GANs, deep learning for tabular data, and many others.

In this article, we'll take a brief tour of my top picks (in no particular order) of deep learning research papers that I found particularly compelling. I'm pretty attached to this leading-edge research. I'm known to carry a thick folder of recent research papers around in my backpack and consume all the great developments when I have a spare moment. Enjoy!

Check out my previous lists: Best Machine Learning Research of 2021 So Far, Best of Deep Reinforcement Learning Research of 2019, Most Influential NLP Research of 2019, and Most Influential Deep Learning Research of 2019.

Cause and Effect: Concept-based Explanation of Neural Networks

In many scenarios, human decisions are explained based on some high-level concepts. This paper takes a step in the interpretability of neural networks by examining their internal representation or neuron’s activations against concepts. A concept is characterized by a set of samples that have specific features in common. A framework is proposed to check the existence of a causal relationship between a concept (or its negation) and task classes. While the previous methods focus on the importance of a concept to a task class, the paper goes further and introduces four measures to quantitatively determine the order of causality. Through experiments, the effectiveness of the proposed method is demonstrated in explaining the relationship between a concept and the predictive behavior of a neural network.

Pretrained Language Models for Text Generation: A Survey

Text generation has become one of the most important yet challenging tasks in natural language processing (NLP). The resurgence of deep learning has greatly advanced this field by neural generation models, especially the paradigm of pretrained language models (PLMs). This paper presents an overview of the major advances achieved in the topic of PLMs for text generation. As the preliminaries, the paper presents the general task definition and briefly describes the mainstream architectures of PLMs for text generation. As the core content, the deep learning research paper discusses how to adapt existing PLMs to model different input data and satisfy special properties in the generated text.

A Short Survey of Pre-trained Language Models for Conversational AI-A NewAge in NLP

Building a dialogue system that can communicate naturally with humans is a challenging yet interesting problem of agent-based computing. The rapid growth in this area is usually hindered by the long-standing problem of data scarcity as these systems are expected to learn syntax, grammar, decision making, and reasoning from insufficient amounts of task-specific data sets. The recently introduced pre-trained language models have the potential to address the issue of data scarcity and bring considerable advantages by generating contextualized word embeddings. These models are considered counterparts of ImageNet in NLP and have demonstrated the ability to capture different facets of language such as hierarchical relations, long-term dependency, and sentiment. This short survey paper discusses the recent progress made in the field of pre-trained language models.

TrustyAI Explainability Toolkit

AI is becoming increasingly popular and can be found in workplaces and homes around the world. However, how do we ensure trust in these systems? Regulation changes such as the GDPR mean that users have a right to understand how their data has been processed as well as saved. If, for example, you are denied a loan, you have the right to ask why. This can be hard to answer if the decision was made using "black box" machine learning techniques such as neural networks. TrustyAI is a new initiative which looks into explainable artificial intelligence (XAI) solutions to address trustworthiness in ML as well as decision services landscapes. This deep learning research paper looks at how TrustyAI can support trust in decision services and predictive models. The paper investigates techniques such as LIME, SHAP and counterfactuals, benchmarking both LIME and counterfactual techniques against existing implementations.

Generative Adversarial Network: Some Analytical Perspectives

Ever since their debut, generative adversarial networks (GANs) have attracted a tremendous amount of attention. Over the past years, different variations of GAN models have been developed and tailored to different applications in practice. Meanwhile, some issues regarding the performance and training of GANs have been noticed and investigated from various theoretical perspectives. This paper starts with an introduction to GANs from an analytical perspective, then moves on to the training of GANs via stochastic differential equation (SDE) approximations, and finally discusses some applications of GANs in computing high-dimensional mean-field games (MFGs) as well as tackling mathematical finance problems.

PyTorch Tabular: A Framework for Deep Learning with Tabular Data

In spite of showing unreasonable effectiveness in modalities like text and images, deep learning has always lagged behind gradient boosting on tabular data, both in popularity and performance. Recently, however, newer models have been created specifically for tabular data, and they are pushing the performance bar. Popularity is still a challenge because there is no easy, ready-to-use library like scikit-learn for deep learning. PyTorch Tabular is a new deep learning library which makes working with deep learning and tabular data easy and fast. It is built on top of PyTorch and PyTorch Lightning and works on Pandas dataframes directly. Many SOTA models like NODE and TabNet are already integrated and implemented in the library with a unified API. PyTorch Tabular is designed to be easily extensible for researchers, simple for practitioners, and robust in industrial deployments.

A Survey of Quantization Methods for Efficient Neural Network Inference

As soon as abstract mathematical computations were adapted to computation on digital computers, the problem of efficient representation, manipulation, and communication of the numerical values in those computations arose. Strongly related to the problem of numerical representation is the problem of quantization: in what manner should a set of continuous real-valued numbers be distributed over a fixed discrete set of numbers to minimize the number of bits required and also to maximize the accuracy of the attendant computations? This perennial problem of quantization is particularly relevant whenever memory and/or computational resources are severely restricted, and it has come to the forefront in recent years due to the remarkable performance of neural network models in computer vision, natural language processing, and related areas. Moving from floating-point representations to low-precision fixed integer values represented in four bits or less holds the potential to reduce the memory footprint and latency by a factor of 16x; and, in fact, reductions of 4x to 8x are often realized in practice in these applications. Thus, it is not surprising that quantization has emerged recently as an important and very active sub-area of research in the efficient implementation of computations associated with neural networks. This paper surveys approaches to the problem of quantizing the numerical values in deep neural network computations, covering the advantages and disadvantages of current methods.
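As a rough illustration of the question posed above, the following sketch maps a list of floats onto 4-bit integer codes with uniform (evenly spaced) quantization and then reconstructs approximate values. It is one of the simplest possible schemes, chosen here for clarity and not taken from the survey; the function names `quantize` and `dequantize` are hypothetical.

```python
def quantize(values, num_bits=4):
    """Map floats to unsigned integer codes in [0, 2**num_bits - 1]."""
    qmax = 2 ** num_bits - 1
    lo, hi = min(values), max(values)
    # The scale is the real-valued width of one integer step.
    scale = (hi - lo) / qmax if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, scale, lo


def dequantize(codes, scale, lo):
    """Recover approximate floats from integer codes."""
    return [lo + q * scale for q in codes]


weights = [-1.0, -0.37, 0.0, 0.42, 1.0]
codes, scale, lo = quantize(weights, num_bits=4)
restored = dequantize(codes, scale, lo)
```

With this scheme each restored value differs from the original by at most half a step (`scale / 2`), which is the accuracy cost paid for the smaller representation.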

How to decay your learning rate

Complex learning rate schedules have become an integral part of deep learning. This research finds empirically that common fine-tuned schedules decay the learning rate after the weight norm bounces. This leads to the proposal of ABEL: an automatic scheduler which decays the learning rate by keeping track of the weight norm. ABEL's performance matches that of tuned schedules and is more robust with respect to its parameters. Through extensive experiments in vision, NLP, and RL, it is shown that if the weight norm does not bounce, it is possible to simplify schedules even further with no loss in performance. In such cases, a complex schedule has similar performance to a constant learning rate with a decay at the end of training.
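The idea of tying the decay to the weight norm can be sketched in a few lines. The class below is a simplified illustration loosely based on the description above, not the ABEL algorithm as published: it assumes a "bounce" means the norm was decreasing and then starts increasing, and it decays immediately when that happens. The class name `BounceDecay` and its parameters are hypothetical.

```python
class BounceDecay:
    """Decay the learning rate whenever the weight norm bounces."""

    def __init__(self, lr, factor=0.1):
        self.lr = lr
        self.factor = factor
        self.norms = []

    def step(self, weight_norm):
        """Record the latest weight norm and return the current learning rate."""
        self.norms.append(weight_norm)
        if len(self.norms) >= 3:
            a, b, c = self.norms[-3:]
            # b is a local minimum: the norm went down, then back up.
            if b < a and c > b:
                self.lr *= self.factor
        return self.lr


scheduler = BounceDecay(lr=0.1)
# Weight norms measured at the end of five consecutive epochs (made-up values).
rates = [scheduler.step(n) for n in [5.0, 4.0, 3.0, 3.5, 4.0]]
```

In this made-up run the norm bottoms out at the third epoch, so the rate is cut by the decay factor at the fourth epoch and stays there afterwards.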

GPT Understands, Too

While GPTs with traditional fine-tuning fail to achieve strong results on natural language understanding (NLU), this paper shows that GPTs can be better than or comparable to similar-sized BERTs on NLU tasks with a novel method, P-tuning, which employs trainable continuous prompt embeddings. On the knowledge probing (LAMA) benchmark, the best GPT recovers 64% (P@1) of world knowledge without any additional text provided during test time, which substantially improves the previous best by 20+ percentage points. On the SuperGlue benchmark, GPTs achieve performance comparable to, and sometimes better than, similar-sized BERTs in supervised learning. Importantly, it is found that P-tuning also improves BERTs' performance in both few-shot and supervised settings while largely reducing the need for prompt engineering. Consequently, P-tuning outperforms the state-of-the-art approaches on the few-shot SuperGlue benchmark.

Understanding Robustness of Transformers for Image Classification

Deep Convolutional Neural Networks (CNNs) have long been the architecture of choice for computer vision tasks. Recently, Transformer-based architectures like Vision Transformer (ViT) have matched or even surpassed ResNets for image classification. However, details of the Transformer architecture, such as the use of non-overlapping patches, lead one to wonder whether these networks are as robust. This paper performs an extensive study of a variety of different measures of robustness of ViT models and compares the findings to ResNet baselines. It investigates robustness to input perturbations as well as robustness to model perturbations. The paper finds that when pre-trained with a sufficient amount of data, ViT models are at least as robust as their ResNet counterparts on a broad range of perturbations. It also finds that Transformers are robust to the removal of almost any single layer, and that while activations from later layers are highly correlated with each other, they nevertheless play an important role in classification.

Improving DeepFake Detection Using Dynamic Face Augmentation

The creation of altered and manipulated faces has become more common due to the improvement of DeepFake generation methods. Simultaneously, detection models have been developed for differentiating between a manipulated and an original face in image or video content. The authors observe that most publicly available DeepFake detection datasets have limited variations, where a single face is used in many videos, resulting in an oversampled training dataset. Due to this, deep neural networks tend to overfit to the facial features instead of learning to detect manipulation features of DeepFake content. As a result, most detection architectures perform poorly when tested on unseen data. This paper provides a quantitative analysis to investigate this problem and presents a solution to prevent model overfitting due to the high volume of samples generated from a small number of actors.

An Evaluation of Edge TPU Accelerators for Convolutional Neural Networks

Edge TPUs are a domain of accelerators for low-power edge devices and are widely used in various Google products such as Coral and Pixel devices. This paper first discusses the major microarchitectural details of Edge TPUs. This is followed by an extensive evaluation of three classes of Edge TPUs, covering different computing ecosystems that are either currently deployed in Google products or are in the product pipeline. Building upon this extensive study, the paper discusses critical and interpretable microarchitectural insights about the studied classes of Edge TPUs. The main focus is how Edge TPU accelerators perform across CNNs with different structures. Finally, the paper presents ongoing efforts in developing high-accuracy learned machine learning models to estimate the major performance metrics of accelerators such as latency and energy consumption. These learned models enable significantly faster (on the order of milliseconds) evaluations of accelerators as an alternative to time-consuming cycle-accurate simulators and establish an exciting opportunity for rapid hardware/software co-design.

Attention Models for Point Clouds in Deep Learning: A Survey

Recently, the advancement of 3D point clouds in deep learning has attracted intensive research in different application domains such as computer vision and robotic tasks. However, creating robust, discriminative feature representations from unordered and irregular point clouds is challenging. The goal of this paper is to provide a comprehensive overview of point cloud feature representations that use attention models. More than 75 key contributions from the past three years are summarized in this survey, including 3D object detection, 3D semantic segmentation, 3D pose estimation, and point cloud completion. Also provided is a detailed characterization of (i) the role of attention mechanisms, (ii) the usability of attention models in different tasks, and (iii) the development trend of key technology.

Constrained Optimization for Training Deep Neural Networks Under Class Imbalance

Deep neural networks (DNNs) are notorious for making more mistakes for the classes that have substantially fewer samples than the others during training. Such class imbalance is ubiquitous in clinical applications and crucial to handle because the classes with fewer samples most often correspond to critical cases (e.g., cancer) where misclassifications can have severe consequences. Not to miss such cases, binary classifiers need to be operated at high True Positive Rates (TPR) by setting a higher threshold, but this comes at the cost of very high False Positive Rates (FPR) for problems with class imbalance. Existing methods for learning under class imbalance most often do not take this into account. This paper argues that prediction accuracy should be improved by emphasizing the reduction of FPRs at high TPRs for problems where misclassification of the positive samples is associated with higher cost. To this end, the paper poses the training of a DNN for binary classification as a constrained optimization problem and introduces a novel constraint that can be used with existing loss functions to enforce maximal area under the ROC curve (AUC). The resulting constrained optimization problem is solved using an Augmented Lagrangian method (ALM), where the constraint emphasizes reduction of FPR at high TPR. Results demonstrate that the proposed method almost always improves the loss functions it is used with by attaining lower FPR at high TPR and higher or equal AUC.
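The quantity emphasized above, the false positive rate at a required true positive rate, can be measured with a short helper. The sketch below only evaluates a finished classifier's scores; it is not the paper's training method, and the function name `fpr_at_tpr` is hypothetical. It assumes higher scores mean "more likely positive" and that both classes are present.

```python
def fpr_at_tpr(scores, labels, target_tpr):
    """Lowest false positive rate among thresholds whose TPR >= target_tpr.

    scores: predicted scores (higher means more likely positive)
    labels: 1 for positive samples, 0 for negative samples
    """
    positives = sum(labels)
    negatives = len(labels) - positives
    best = 1.0
    for threshold in sorted(set(scores)):
        predicted = [s >= threshold for s in scores]
        tp = sum(1 for p, y in zip(predicted, labels) if p and y == 1)
        fp = sum(1 for p, y in zip(predicted, labels) if p and y == 0)
        if tp / positives >= target_tpr:
            best = min(best, fp / negatives)
    return best


# Made-up scores for four positive and four negative samples.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
labels = [1, 1, 0, 1, 0, 1, 0, 0]
print(fpr_at_tpr(scores, labels, 0.75))
print(fpr_at_tpr(scores, labels, 1.0))
```

In this toy data, catching every positive requires accepting more false alarms than catching three out of four, which is the tradeoff the paper's constraint targets.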

Deep Convolutional Neural Networks with Unitary Weights

While normalizations aim to fix the exploding and vanishing gradient problem in deep neural networks, they have drawbacks in speed or accuracy because of their dependency on the data set statistics. This paper is a comprehensive study of a novel method based on unitary synaptic weights derived from Lie groups to construct intrinsically stable neural systems. It shows that unitary convolutional neural networks deliver up to 32% faster inference speeds while maintaining competitive prediction accuracy. Unlike prior art restricted to square synaptic weights, the paper expands the unitary networks to weights of any size and dimension.

TransGAN: Two Pure Transformers Can Make One Strong GAN, and That Can Scale Up

The recent explosive interest in transformers has suggested their potential to become powerful "universal" models for computer vision tasks, such as classification, detection, and segmentation. An important question is how much further transformers can go: are they ready to take on some more notoriously difficult vision tasks, e.g., generative adversarial networks (GANs)? Driven by that curiosity, this paper conducts the first pilot study in building a GAN completely free of convolutions, using only pure transformer-based architectures. The proposed vanilla GAN architecture, dubbed TransGAN, consists of a memory-friendly transformer-based generator that progressively increases feature resolution while decreasing embedding dimension, and a patch-level discriminator that is also transformer-based. TransGAN is seen to notably benefit from data augmentations (more than standard GANs), a multi-task co-training strategy for the generator, and a locally initialized self-attention that emphasizes the neighborhood smoothness of natural images. Equipped with those findings, TransGAN can effectively scale up with bigger models and high-resolution image datasets. Specifically, the architecture achieves highly competitive performance compared to current state-of-the-art GANs based on convolutional backbones. A GitHub repo is associated with this paper.

Deep Learning for Scene Classification: A Survey

Scene classification, aiming at classifying a scene image to one of the predefined scene categories by comprehending the entire image, is a longstanding, fundamental and challenging problem in computer vision. The rise of large-scale datasets, which constitute a dense sampling of diverse real-world scenes, and the renaissance of deep learning techniques, which learn powerful feature representations directly from big raw data, have been bringing remarkable progress in the field of scene representation and classification. To help researchers master needed advances in this field, the goal of this paper is to provide a comprehensive survey of recent achievements in scene classification using deep learning. More than 260 major publications are included in this survey covering different aspects of scene classification, including challenges, benchmark datasets, taxonomy, and quantitative performance comparisons of the reviewed methods. In retrospect of what has been achieved so far, this paper is concluded with a list of promising research opportunities.

Introducing and assessing the explainable AI (XAI) method: SIDU

Explainable Artificial Intelligence (XAI) has in recent years become a well-suited framework to generate human-understandable explanations of black box models. This paper presents a novel XAI visual explanation algorithm denoted SIDU that can effectively localize entire object regions responsible for prediction. The paper analyzes its robustness and effectiveness through various computational and human subject experiments. In particular, the SIDU algorithm is assessed using three different types of evaluations (Application, Human and Functionally-Grounded) to demonstrate its superior performance. The robustness of SIDU is further studied in the presence of adversarial attacks on black box models to better understand its performance.

Evolving Reinforcement Learning Algorithms

This paper proposes a method for meta-learning reinforcement learning algorithms by searching over the space of computational graphs which compute the loss function for a value-based model-free RL agent to optimize. The learned algorithms are domain-agnostic and can generalize to new environments not seen during training. The method can both learn from scratch and bootstrap off known existing algorithms, like DQN, enabling interpretable modifications which improve performance. Learning from scratch on simple classical control and gridworld tasks, the method rediscovers the temporal-difference (TD) algorithm. Bootstrapped from DQN, two learned algorithms are highlighted which obtain good generalization performance over other classical control tasks, gridworld type tasks, and Atari games. The analysis of the learned algorithm behavior shows resemblance to recently proposed RL algorithms that address overestimation in value-based methods.

RepVGG: Making VGG-style ConvNets Great Again

VGG-style ConvNets, although now considered a classic architecture, were attractive due to their simplicity. In contrast, ResNets have become popular due to their high accuracy but are more difficult to customize and display undesired inference drawbacks. To address these issues, Ding et al. propose RepVGG – the return of the VGG!

RepVGG is an efficient and simple architecture using plain VGG-style ConvNets. It decouples the inference-time and training-time architecture through a structural re-parameterization technique. The researchers report a favorable speed-accuracy tradeoff compared to state-of-the-art models, such as EfficientNet and RegNet. RepVGG achieves 80% top-1 accuracy on ImageNet and is benchmarked as being 83% faster than ResNet-50. This research is part of a broader effort to build more efficient models using simpler architectures and operations. A GitHub repo is associated with this paper.
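The core of structural re-parameterization can be shown with a toy example: a multi-branch block (3x3 convolution plus 1x1 convolution plus identity) is folded into a single 3x3 kernel that produces the same output. The sketch below is a deliberately reduced illustration, assuming a single channel, no bias, and no batch normalization, so it is not the RepVGG implementation. All function names are hypothetical.

```python
def conv3x3(image, kernel):
    """Single-channel 3x3 convolution (cross-correlation) with zero padding."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            total = 0.0
            for dr in range(3):
                for dc in range(3):
                    rr, cc = r + dr - 1, c + dc - 1
                    if 0 <= rr < h and 0 <= cc < w:
                        total += kernel[dr][dc] * image[rr][cc]
            out[r][c] = total
    return out


def multi_branch(image, k3, k1):
    """Training-time form: 3x3 conv + 1x1 conv (scalar k1) + identity, summed."""
    y3 = conv3x3(image, k3)
    return [[y3[r][c] + k1 * image[r][c] + image[r][c]
             for c in range(len(image[0]))] for r in range(len(image))]


def merge_branches(k3, k1):
    """Inference-time form: fold the 1x1 and identity branches into the 3x3 kernel."""
    merged = [row[:] for row in k3]
    # Both extra branches only touch the center pixel, so they add to the center tap.
    merged[1][1] += k1 + 1.0
    return merged


image = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
k3 = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9]]
training_out = multi_branch(image, k3, 0.25)
inference_out = conv3x3(image, merge_branches(k3, 0.25))
```

The two outputs agree up to floating-point rounding, which is why the simpler single-branch form can replace the multi-branch one at inference time.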

Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity

In deep learning, models typically reuse the same parameters for all inputs. Mixture of Experts (MoE) defies this and instead selects different parameters for each incoming example. The result is a sparsely-activated model, with outrageous numbers of parameters, but a constant computational cost. However, despite several notable successes of MoE, widespread adoption has been hindered by complexity, communication costs and training instability. This paper addresses these with the Switch Transformer. The Google Brain researchers simplify the MoE routing algorithm and design intuitive improved models with reduced communication and computational costs. The proposed training techniques help wrangle the instabilities, and it is shown that large sparse models may be trained, for the first time, with lower precision (bfloat16) formats. They design models based on T5-Base and T5-Large to obtain up to 7x increases in pre-training speed with the same computational resources. These improvements extend into multilingual settings, where gains are measured over the mT5-Base version across all 101 languages. Finally, the paper advances the current scale of language models by pre-training up to trillion parameter models on the "Colossal Clean Crawled Corpus" and achieves a 4x speedup over the T5-XXL model. A GitHub repo is associated with this paper.
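To illustrate how a sparsely-activated layer keeps compute constant, here is a minimal sketch of routing one token to a single expert. It is a plain-Python toy under stated assumptions (a linear router, one token at a time, no load balancing or capacity limits), not the Switch Transformer code. The names `switch_layer`, `router_weights`, and `experts` are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def switch_layer(x, router_weights, experts):
    """Route token x to the single most probable expert.

    x: list of floats (one token representation)
    router_weights: one weight vector per expert
    experts: list of callables, each mapping a token to a new token
    """
    logits = [sum(w_i * x_i for w_i, x_i in zip(w, x)) for w in router_weights]
    probs = softmax(logits)
    chosen = max(range(len(probs)), key=probs.__getitem__)
    gate = probs[chosen]
    # Only the chosen expert runs, so the cost per token does not grow
    # with the number of experts (and therefore parameters) in the layer.
    return chosen, [gate * v for v in experts[chosen](x)]


experts = [lambda t: [10.0 * v for v in t], lambda t: [v + 1.0 for v in t]]
chosen, output = switch_layer([1.0, 0.0], [[0.0, 0.0], [2.0, 0.0]], experts)
```

Scaling the expert output by the router probability keeps the routing decision differentiable, so the router can be trained together with the experts.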

How to Learn More about Deep Learning Research

ODSC West 2021, our upcoming event this November 16th-18th in San Francisco, will feature a plethora of talks, workshops, and training sessions on deep learning and deep learning research. You can register now for 60% off all ticket types before the discount drops to 40% in a few weeks. Some highlighted sessions on deep learning include:

Sessions on Deep Learning and Deep Learning Research:

- GANs: Theory and Practice, Image Synthesis With GANs Using TensorFlow: Ajay Baranwal | Center Director | Center for Deep Learning in Electronic Manufacturing, Inc

- Machine Learning With Graphs: Going Beyond Tabular Data: Dr. Clair J. Sullivan | Data Science Advocate | Neo4j

- Deep Dive into Reinforcement Learning with PPO using TF-Agents & TensorFlow 2.0: Oliver Zeigermann | Software Developer | embarc Software Consulting GmbH

- Get Started with Time-Series Forecasting using the Google Cloud AI Platform: Karl Weinmeister | Developer Relations Engineering Manager | Google

Sessions on Machine Learning:

- Towards More Energy-Efficient Neural Networks? Use Your Brain!: Olaf de Leeuw | Data Scientist | Dataworkz

- Practical MLOps: Automation Journey: Evgenii Vinogradov, PhD | Head of DHW Development | YooMoney

- Applications of Modern Survival Modeling with Python: Brian Kent, PhD | Data Scientist | Founder The Crosstab Kite

- Using Change Detection Algorithms for Detecting Anomalous Behavior in Large Systems: Veena Mendiratta, PhD | Adjunct Faculty, Network Reliability and Analytics Researcher | Northwestern University

Sessions on MLOps:

- Tuning Hyperparameters with Reproducible Experiments: Milecia McGregor | Senior Software Engineer | Iterative

- MLOps… From Model to Production: Filipa Peleja, PhD | Lead Data Scientist | Levi Strauss & Co

- Operationalization of Models Developed and Deployed in Heterogeneous Platforms: Sourav Mazumder | Data Scientist, Thought Leader, AI & ML Operationalization Leader | IBM

- Develop and Deploy a Machine Learning Pipeline in 45 Minutes with Ploomber: Eduardo Blancas | Data Scientist | Fidelity Investment

Daniel Gutierrez, ODSC

Daniel D. Gutierrez is a practicing data scientist who’s been working with data long before the field came in vogue. As a technology journalist, he enjoys keeping a pulse on this fast-paced industry. Daniel is also an educator having taught data science, machine learning and R classes at the university level. He has authored four computer industry books on database and data science technology, including his most recent title, “Machine Learning and Data Science: An Introduction to Statistical Learning Methods with R.” Daniel holds a BS in Mathematics and Computer Science from UCLA.


deep learning Recently Published Documents


Synergic Deep Learning for Smart Health Diagnosis of COVID-19 for Connected Living and Smart Cities

The COVID-19 pandemic has led to a significant loss of life and economic damage worldwide, among other harms. To prevent and control COVID-19, a range of smart, complex, spatially heterogeneous control solutions and strategies have been deployed. Early classification of the 2019 novel coronavirus disease (COVID-19) is needed to treat and control the disease. This creates a need for secondary diagnosis models, since no precise automated toolkits exist. Recent findings from radiological imaging techniques show that the images hold noticeable details regarding the COVID-19 virus. Applying recent artificial intelligence (AI) and deep learning (DL) approaches to radiological images is useful for accurately detecting the disease. This article introduces a new synergic deep learning (SDL)-based smart health diagnosis of COVID-19 using chest X-ray images. The SDL makes use of dual deep convolutional neural networks (DCNNs) that learn mutually from one another. In particular, the image representations learned by both DCNNs are provided as input to a synergic network, which has a fully connected structure and predicts whether a pair of input images belongs to the same class. In addition, the proposed SDL model uses a fuzzy bilateral filtering (FBF) model to pre-process the input image. The integration of FBF and SDL resulted in the effective classification of COVID-19. To investigate the classification outcome of the SDL model, a detailed set of simulations was carried out, confirming the effective performance of the FBF-SDL model over the compared methods.

A deep learning approach for remote heart rate estimation

Weakly supervised spatial deep learning for earth image segmentation based on imperfect polyline labels.

In recent years, deep learning has achieved tremendous success in image segmentation for computer vision applications. The performance of these models heavily relies on the availability of large-scale high-quality training labels (e.g., PASCAL VOC 2012). Unfortunately, such large-scale high-quality training data are often unavailable in many real-world spatial or spatiotemporal problems in earth science and remote sensing (e.g., mapping the nationwide river streams for water resource management). Although extensive efforts have been made to reduce the reliance on labeled data (e.g., semi-supervised or unsupervised learning, few-shot learning), the complex nature of geographic data such as spatial heterogeneity still requires sufficient training labels when transferring a pre-trained model from one region to another. On the other hand, it is often much easier to collect lower-quality training labels with imperfect alignment with earth imagery pixels (e.g., through interpreting coarse imagery by non-expert volunteers). However, directly training a deep neural network on imperfect labels with geometric annotation errors could significantly impact model performance. Existing research that overcomes imperfect training labels either focuses on errors in label class semantics or characterizes label location errors at the pixel level. These methods do not fully incorporate the geometric properties of label location errors in the vector representation. To fill the gap, this article proposes a weakly supervised learning framework to simultaneously update deep learning model parameters and infer hidden true vector label locations. Specifically, we model label location errors in the vector representation to partially preserve geometric properties (e.g., spatial contiguity within line segments). Evaluations on real-world datasets in the National Hydrography Dataset (NHD) refinement application illustrate that the proposed framework outperforms baseline methods in classification accuracy.

Prediction of Failure Categories in Plastic Extrusion Process with Deep Learning

Hyperparameters Tuning of Faster R-CNN Deep Learning Transfer for Persistent Object Detection in Radar Images

A Comparative Study of Automated Legal Text Classification Using Random Forests and Deep Learning

A Semi-Supervised Deep Learning Approach for Vessel Trajectory Classification Based on AIS Data

An Improved Approach Towards More Robust Deep Learning Models for Chemical Kinetics

Power System Transient Security Assessment Based on Deep Learning Considering Partial Observability

A Multi-Attention Collaborative Deep Learning Approach for Blood Pressure Prediction

We develop a deep learning model based on Long Short-term Memory (LSTM) to predict blood pressure based on a unique data set collected from physical examination centers capturing comprehensive multi-year physical examination and lab results. In the Multi-attention Collaborative Deep Learning model (MAC-LSTM) we developed for this type of data, we incorporate three types of attention to generate more explainable and accurate results. In addition, we leverage information from similar users to enhance the predictive power of the model due to the challenges with short examination history. Our model significantly reduces predictive errors compared to several state-of-the-art baseline models. Experimental results not only demonstrate our model’s superiority but also provide us with new insights about factors influencing blood pressure. Our data is collected in a natural setting instead of a setting designed specifically to study blood pressure, and the physical examination items used to predict blood pressure are common items included in regular physical examinations for all the users. Therefore, our blood pressure prediction results can be easily used in an alert system for patients and doctors to plan prevention or intervention. The same approach can be used to predict other health-related indexes such as BMI.



Brief Bioinform, v.19(6); 2018 Nov

Deep learning for healthcare: review, opportunities and challenges

Riccardo Miotto

1 Institute for Next Generation Healthcare, Department of Genetics and Genomic Sciences at the Icahn School of Medicine at Mount Sinai, New York, NY

2 Division of Health Informatics, Department of Healthcare Policy and Research at Weill Cornell Medicine at Cornell University, New York, NY

Shuang Wang

3 Department of Biomedical Informatics at the University of California San Diego, La Jolla, CA

Xiaoqian Jiang

Joel T Dudley

4 the Institute for Next Generation Healthcare and associate professor in the Department of Genetics and Genomic Sciences at the Icahn School of Medicine at Mount Sinai, New York, NY

Gaining knowledge and actionable insights from complex, high-dimensional and heterogeneous biomedical data remains a key challenge in transforming health care. Various types of data have been emerging in modern biomedical research, including electronic health records, imaging, -omics, sensor data and text, which are complex, heterogeneous, poorly annotated and generally unstructured. Traditional data mining and statistical learning approaches typically need to first perform feature engineering to obtain effective and more robust features from those data, and then build prediction or clustering models on top of them. Both steps pose many challenges when the data are complicated and sufficient domain knowledge is lacking. The latest advances in deep learning technologies provide new effective paradigms to obtain end-to-end learning models from complex data. In this article, we review the recent literature on applying deep learning technologies to advance the health care domain. Based on the analyzed work, we suggest that deep learning approaches could be the vehicle for translating big biomedical data into improved human health. However, we also note limitations and needs for improved methods development and applications, especially in terms of ease-of-understanding for domain experts and citizen scientists. We discuss such challenges and suggest developing holistic and meaningful interpretable architectures to bridge deep learning models and human interpretability.

Introduction

Health care is entering a new era in which abundant biomedical data play an increasingly important role. In this context, for example, precision medicine attempts to ‘ensure that the right treatment is delivered to the right patient at the right time’ by taking into account several aspects of a patient's data, including variability in molecular traits, environment, electronic health records (EHRs) and lifestyle [ 1–3 ].

The large availability of biomedical data brings tremendous opportunities and challenges to health care research. In particular, exploring the associations among all the different pieces of information in these data sets is a fundamental problem in developing reliable medical tools based on data-driven approaches and machine learning. To this aim, previous works tried to link multiple data sources to build joint knowledge bases that could be used for predictive analysis and discovery [ 4–6 ]. Although existing models demonstrate great promise (e.g. [ 7–11 ]), predictive tools based on machine learning techniques have not been widely applied in medicine [ 12 ]. In fact, there remain many challenges in making full use of the biomedical data, owing to their high-dimensionality, heterogeneity, temporal dependency, sparsity and irregularity [ 13–15 ]. These challenges are further complicated by various medical ontologies used to generalize the data (e.g. Systematized Nomenclature of Medicine-Clinical Terms (SNOMED-CT) [ 16 ], Unified Medical Language System (UMLS) [ 17 ], International Classification of Disease-9th version (ICD-9) [ 18 ]), which often contain conflicts and inconsistencies [ 19 ]. Sometimes, the same clinical phenotype is also expressed in different ways across the data. As an example, in the EHRs, a patient diagnosed with ‘type 2 diabetes mellitus’ can be identified by laboratory values of hemoglobin A1C >7.0, presence of 250.00 ICD-9 code, ‘type 2 diabetes mellitus’ mentioned in the free-text clinical notes and so on. Consequently, it is nontrivial to harmonize all these medical concepts to build a higher-level semantic structure and understand their correlations [ 6 , 20 ].
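The type 2 diabetes example can be made concrete with a small sketch. It assumes a hypothetical, heavily simplified `PatientRecord` structure (real EHR schemas are far richer and vary by system) and checks the three sources named above: a hemoglobin A1C lab value above 7.0, the ICD-9 code 250.00, and a free-text mention. The rule that any single source counts as a positive is an illustrative assumption, not a clinical definition.

```python
from dataclasses import dataclass, field
from typing import Dict, List


# Hypothetical, simplified patient record; not taken from any real EHR schema.
@dataclass
class PatientRecord:
    hba1c_values: List[float] = field(default_factory=list)  # lab results
    icd9_codes: List[str] = field(default_factory=list)      # diagnosis codes
    notes: List[str] = field(default_factory=list)           # free-text notes


def t2dm_evidence(record: PatientRecord) -> Dict[str, bool]:
    """Report which of the three sources flags type 2 diabetes mellitus."""
    phrase = "type 2 diabetes mellitus"
    return {
        "lab": any(value > 7.0 for value in record.hba1c_values),
        "icd9": "250.00" in record.icd9_codes,
        "notes": any(phrase in note.lower() for note in record.notes),
    }


def has_t2dm(record: PatientRecord) -> bool:
    # Assumption for illustration: one positive source is enough.
    return any(t2dm_evidence(record).values())


# Three patients with the same phenotype expressed in three different ways.
by_lab = PatientRecord(hba1c_values=[6.1, 7.4])
by_code = PatientRecord(icd9_codes=["250.00"])
by_note = PatientRecord(notes=["Pt with Type 2 Diabetes Mellitus, on metformin."])
```

Even in this toy form, each source needs its own matching logic, which hints at why harmonizing such concepts across real, inconsistent ontologies is nontrivial.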

A common approach in biomedical research is to have a domain expert specify the phenotypes to use in an ad hoc manner. However, supervised definition of the feature space scales poorly and misses opportunities to discover novel patterns. Alternatively, representation learning methods automatically discover the representations needed for prediction from the raw data [ 21 , 22 ]. Deep learning methods are representation-learning algorithms with multiple levels of representation, obtained by composing simple but nonlinear modules that each transform the representation at one level (starting with the raw input) into a representation at a higher, slightly more abstract level [ 23 ]. Deep learning models have demonstrated great performance and potential in computer vision, speech recognition and natural language processing tasks [ 24–27 ].
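The idea of composing simple nonlinear modules can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the layer sizes, the `tanh` nonlinearity and the helper names `make_layer` and `compose` are choices made here, and the weights are random and untrained, so the output carries no meaning beyond showing how each module transforms the representation produced by the previous one.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Build one module: an affine map followed by a tanh nonlinearity."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out

    def layer(x):
        # Each output unit is tanh(w . x + b) over the incoming representation.
        return [
            math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)
        ]

    return layer


def compose(layers):
    """Chain modules so each one consumes the previous representation."""
    def model(x):
        for layer in layers:
            x = layer(x)
        return x

    return model


rng = random.Random(0)  # fixed seed so the sketch is repeatable
model = compose([
    make_layer(4, 8, rng),  # raw input -> first-level representation
    make_layer(8, 3, rng),  # -> higher-level representation
    make_layer(3, 1, rng),  # -> single output value
])
output = model([0.5, -1.2, 3.0, 0.0])
```

In practice the weights would be learned from data by gradient-based training rather than drawn at random.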

Given its demonstrated performance in different domains and the rapid pace of methodological improvement, deep learning introduces exciting new opportunities for biomedical informatics. Efforts to apply deep learning methods to health care are already planned or underway. For example, Google DeepMind has announced plans to apply its expertise to health care [ 28 ] and Enlitic is using deep learning intelligence to spot health problems on X-rays and Computed Tomography (CT) scans [ 29 ].

However, deep learning approaches have not been extensively evaluated for a broad range of medical problems that could benefit from their capabilities. There are many aspects of deep learning that could be helpful in health care, such as its superior performance, end-to-end learning scheme with integrated feature learning, capability of handling complex and multi-modality data and so on. To accelerate these efforts, the deep learning research field as a whole must address several challenges relating to the characteristics of health care data (i.e. sparse, noisy, heterogeneous, time-dependent), as well as the need for improved methods and tools that enable deep learning to interface with health care information workflows and clinical decision support.