Evidence-Based Research Series-Paper 1: What Evidence-Based Research is and why is it important?

Affiliations.

- 1 Johns Hopkins Evidence-based Practice Center, Division of General Internal Medicine, Department of Medicine, Johns Hopkins University, Baltimore, MD, USA.

- 2 Digital Content Services, Operations, Elsevier Ltd., 125 London Wall, London, EC2Y 5AS, UK.

- 3 School of Nursing, McMaster University, Health Sciences Centre, Room 2J20, 1280 Main Street West, Hamilton, Ontario, Canada, L8S 4K1; Section for Evidence-Based Practice, Western Norway University of Applied Sciences, Inndalsveien 28, Bergen, P.O.Box 7030 N-5020 Bergen, Norway.

- 4 Department of Sport Science and Clinical Biomechanics, University of Southern Denmark, Campusvej 55, 5230, Odense M, Denmark; Department of Physiotherapy and Occupational Therapy, University Hospital of Copenhagen, Herlev & Gentofte, Kildegaardsvej 28, 2900, Hellerup, Denmark.

- 5 Musculoskeletal Statistics Unit, the Parker Institute, Bispebjerg and Frederiksberg Hospital, Copenhagen, Nordre Fasanvej 57, 2000, Copenhagen F, Denmark; Department of Clinical Research, Research Unit of Rheumatology, University of Southern Denmark, Odense University Hospital, Denmark.

- 6 Section for Evidence-Based Practice, Western Norway University of Applied Sciences, Inndalsveien 28, Bergen, P.O.Box 7030 N-5020 Bergen, Norway.

- PMID: 32979491

- DOI: 10.1016/j.jclinepi.2020.07.020

Objectives: There is considerable actual and potential waste in research. Evidence-based research ensures worthwhile and valuable research. The aim of this series, which this article introduces, is to describe the evidence-based research approach.

Study design and setting: In this first article of a three-article series, we introduce the evidence-based research approach. Evidence-based research is the use of prior research in a systematic and transparent way to inform a new study so that it is answering questions that matter in a valid, efficient, and accessible manner.

Results: We describe evidence-based research and provide an overview of the approach of systematically and transparently using previous research before starting a new study to justify and design the new study (article #2 in series) and, on study completion, to place its results in the context of what is already known (article #3 in series).

Conclusion: This series introduces evidence-based research as an approach to minimize unnecessary and irrelevant clinical health research that is unscientific, wasteful, and unethical.

Keywords: Clinical health research; Clinical trials; Evidence synthesis; Evidence-based research; Medical ethics; Research ethics; Systematic review.

Copyright © 2020 Elsevier Inc. All rights reserved.

Publication types

- Research Support, Non-U.S. Gov't

MeSH terms

- Biomedical Research* / methods

- Biomedical Research* / organization & administration

- Clinical Trials as Topic / ethics

- Clinical Trials as Topic / methods

- Clinical Trials as Topic / organization & administration

- Ethics, Research

- Evidence-Based Medicine / methods*

- Needs Assessment

- Reproducibility of Results

- Research Design* / standards

- Research Design* / trends

- Systematic Reviews as Topic

- Treatment Outcome


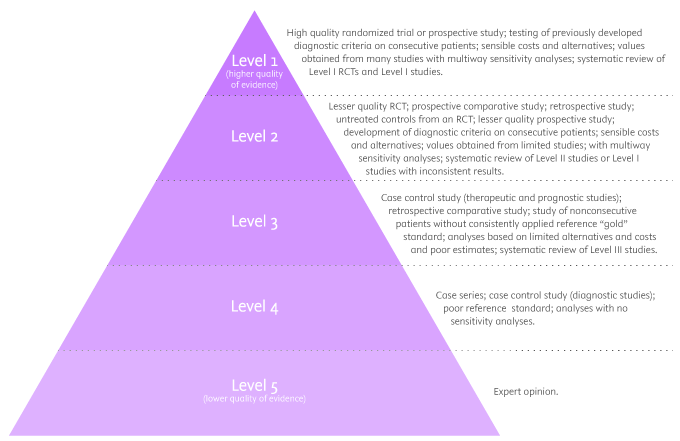

Evidence-Based Research: Levels of Evidence Pyramid

Introduction

One way to organize the different types of evidence involved in evidence-based practice research is the levels of evidence pyramid. The pyramid includes a variety of evidence types and levels.

- systematic reviews

- critically-appraised topics

- critically-appraised individual articles

- randomized controlled trials

- cohort studies

- case-control studies, case series, and case reports

- Background information, expert opinion

Levels of evidence pyramid

The levels of evidence pyramid provides a way to visualize both the quality of evidence and the amount of evidence available. For example, systematic reviews are at the top of the pyramid, meaning they are both the highest level of evidence and the least common. As you go down the pyramid, the amount of evidence will increase as the quality of the evidence decreases.


EBM Pyramid and EBM Page Generator, copyright 2006 Trustees of Dartmouth College and Yale University. All Rights Reserved. Produced by Jan Glover, David Izzo, Karen Odato and Lei Wang.

Filtered Resources

Filtered resources appraise the quality of studies and often make recommendations for practice. The main types of filtered resources in evidence-based practice are:

- systematic reviews

- critically-appraised topics

- critically-appraised individual articles

The Systematic reviews, Critically-appraised topics, and Critically-appraised individual articles sections link to resources where you can find each of these types of filtered information.

Systematic reviews

Authors of a systematic review ask a specific clinical question, perform a comprehensive literature review, eliminate poorly done studies, and attempt to make practice recommendations based on the well-done studies. Systematic reviews of treatment questions typically include only experimental (quantitative) studies, and often only randomized controlled trials.

You can find systematic reviews in these filtered databases:

- Cochrane Database of Systematic Reviews Cochrane systematic reviews are considered the gold standard for systematic reviews. This database contains both systematic reviews and review protocols. To find only systematic reviews, select Cochrane Reviews in the Document Type box.

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database) This database includes systematic reviews, evidence summaries, and best practice information sheets. To find only systematic reviews, click on Limits and then select Systematic Reviews in the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

You can also find systematic reviews in this unfiltered database:

To learn more about finding systematic reviews, please see our guide:

- Filtered Resources: Systematic Reviews

Critically-appraised topics

Authors of critically-appraised topics evaluate and synthesize multiple research studies. Critically-appraised topics are like short systematic reviews focused on a particular topic.

You can find critically-appraised topics in these resources:

- Annual Reviews This collection offers comprehensive, timely critical reviews written by leading scientists. To find reviews on your topic, use the search box in the upper-right corner.

- Guideline Central This free database offers quick-reference guideline summaries organized by a non-profit initiative that aims to fill the gap left by the sudden closure of AHRQ’s National Guideline Clearinghouse (NGC).

- JBI EBP Database (formerly Joanna Briggs Institute EBP Database) To find critically-appraised topics in JBI, click on Limits and then select Evidence Summaries from the Publication Types box. To see how to use the limit and find full text, please see our Joanna Briggs Institute Search Help page.

- National Institute for Health and Care Excellence (NICE) Evidence-based recommendations for health and care in England.

To learn more about finding critically-appraised topics, please see our guide:

- Filtered Resources: Critically-Appraised Topics

Critically-appraised individual articles

Authors of critically-appraised individual articles evaluate and synopsize individual research studies.

You can find critically-appraised individual articles in these resources:

- EvidenceAlerts Quality articles from over 120 clinical journals are selected by research staff and then rated for clinical relevance and interest by an international group of physicians. Note: You must create a free account to search EvidenceAlerts.

- ACP Journal Club This journal publishes reviews of research on the care of adults and adolescents. You can either browse this journal or use the Search within this publication feature.

- Evidence-Based Nursing This journal reviews research studies that are relevant to best nursing practice. You can either browse individual issues or use the search box in the upper-right corner.

To learn more about finding critically-appraised individual articles, please see our guide:

- Filtered Resources: Critically-Appraised Individual Articles

Unfiltered resources

You may not always be able to find information on your topic in the filtered literature. When this happens, you'll need to search the primary or unfiltered literature. Keep in mind that with unfiltered resources, you take on the role of reviewing what you find to make sure it is valid and reliable.

The Levels of Evidence Pyramid includes unfiltered study types in this order of evidence, from higher to lower:

- randomized controlled trials

- cohort studies

- case-control studies, case series, and case reports

You can search for each of these types of evidence in the following database:

- TRIP database

Note: You can also find systematic reviews and other filtered resources in this unfiltered database.

Background information & expert opinion

Background information and expert opinions are not necessarily backed by research studies. They include point-of-care resources, textbooks, conference proceedings, etc.

- Family Physicians Inquiries Network: Clinical Inquiries These provide ideal answers to clinical questions using a structured search, critical appraisal, authoritative recommendations, clinical perspective, and rigorous peer review. Clinical Inquiries deliver the best evidence for point-of-care use.

- Harrison, T. R., & Fauci, A. S. (2009). Harrison's Manual of Medicine. New York: McGraw-Hill Professional. Contains the clinical portions of Harrison's Principles of Internal Medicine.

- Lippincott manual of nursing practice (8th ed.). (2006). Philadelphia, PA: Lippincott Williams & Wilkins. Provides background information on clinical nursing practice.

- Medscape: Drugs & Diseases An open-access, point-of-care medical reference that includes clinical information from top physicians and pharmacists in the United States and worldwide.

- Virginia Henderson Global Nursing e-Repository An open-access repository that contains works by nurses and is sponsored by Sigma Theta Tau International, the Honor Society of Nursing. Note: This resource contains both expert opinion and evidence-based practice articles.


Walden University is a member of Adtalem Global Education, Inc. www.adtalem.com Walden University is certified to operate by SCHEV © 2024 Walden University LLC. All rights reserved.

What is the best evidence and how to find it

Why is research evidence better than expert opinion alone?

In a broad sense, research evidence can be any systematic observation made in order to establish facts and reach conclusions. Anything not fulfilling this definition is typically classified as “expert opinion”, the basis of which includes experience with patients, an understanding of biology, and knowledge of pre-clinical research and of the results of studies. Using expert opinion as the only basis for decisions has proved problematic because, in practice, doctors often introduce new treatments before they have been shown to work, or are too slow to introduce proven treatments.

However, clinical experience is key to interpreting research evidence, applying it in practice, and formulating recommendations, for instance in clinical guidelines. In other words, research evidence is necessary but not sufficient to make good health decisions.

Which studies are more reliable?

Not all evidence is equally reliable.

Any study design, qualitative or quantitative, in which data are collected from individuals or groups of people is usually called a primary study. There are many types of primary study design, but for each type of health question, one design provides the most reliable information.

For treatment decisions, there is consensus that the most reliable primary study is the randomised controlled trial (RCT). In this type of study, patients are randomly assigned to have either the treatment being tested or a comparison treatment (sometimes called the control treatment). Random really means random. The decision to put someone into one group or another is made like tossing a coin: heads they go into one group, tails they go into the other.

The control treatment might be a different type of treatment or a dummy treatment that shouldn't have any effect (a placebo). Researchers then compare the effects of the different treatments.
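The coin-toss allocation described above is what methodologists call simple randomisation. The Python sketch below illustrates it; the function name `allocate`, the participant IDs, and the fixed seed are hypothetical and chosen only to make the example reproducible. Real trials often use more elaborate schemes, such as blocked or stratified randomisation, and conceal the allocation sequence from the people recruiting participants.

```python
import random


def allocate(participants, seed=None):
    """Simple randomisation: an independent virtual coin toss per participant.

    Hypothetical helper for illustration only; not a trial-grade allocation system.
    """
    rng = random.Random(seed)  # seeded generator so the sketch is reproducible
    groups = {"treatment": [], "control": []}
    for person in participants:
        # "heads" (random value below 0.5) -> treatment, "tails" -> control
        arm = "treatment" if rng.random() < 0.5 else "control"
        groups[arm].append(person)
    return groups


# Hypothetical participant IDs P01..P20
groups = allocate([f"P{i:02d}" for i in range(1, 21)], seed=42)
print(len(groups["treatment"]), len(groups["control"]))
```

Because each participant gets an independent toss, the two groups will rarely be exactly the same size, and with small samples the imbalance can be noticeable; this is one reason blocked schemes are used in practice.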

Large randomised trials are expensive and take time. In addition, it may sometimes be unethical to undertake a study in which some people are randomly assigned not to receive a treatment. For example, it wouldn't be right to give oxygen to some children having an asthma attack and not to others. In cases like this, other primary study designs may be the best choice.

Laboratory studies are another type of study. Newspapers often carry stories about studies showing how a drug cured cancer in mice. But a treatment that works in animals in laboratory experiments will not necessarily work in humans. In fact, most drugs that have been shown to cure cancer in mice do not work for people.

Very rarely we cannot base our health decisions on the results of studies. Sometimes the research hasn't been done because doctors are used to treating a condition in a way that seems to work. This is often true of treatments for broken bones and operations. But just because there's no research for a treatment doesn't mean it doesn't work. It just means that no one can say for sure.

Why we shouldn’t read studies

An enormous amount of effort is required to identify and summarise everything we know about any given health intervention, and the amount of data has soared. A conservative estimate is that there are more than 35,000 medical journals and almost 20 million research articles published every year. At the same time, up to half of existing data might be unpublished.

How can anyone keep up with all this? And how can you tell whether the research is good or not? Each primary study is only one piece of a jigsaw that may take years to finish. Rarely does any one piece of research answer either a doctor's or a patient's questions.

Even though reading large numbers of studies is impractical, high-quality primary studies, especially RCTs, constitute the foundations of what we know, and they are the best way of advancing knowledge. Any effort to support or promote the conduct of sound, transparent, and independent trials that are fully and clearly published is worth endorsing. A prominent project in this regard is the AllTrials initiative.

Why we should read systematic reviews

Most of the time a single study doesn't tell us enough. The best answers are found by combining the results of many studies.

A systematic review is a type of research that looks at the results from all of the good-quality studies on a question and puts them together into one summary, giving an estimate of a treatment's risks and benefits. Sometimes these reviews include a statistical analysis, called a meta-analysis, which combines the results of several studies into a single pooled estimate of the treatment effect.
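To make "combining the results of several studies" concrete, here is a minimal sketch of one common meta-analytic method, inverse-variance fixed-effect pooling, in which each study's estimate is weighted by 1/SE², so more precise studies count for more. The function name and the three studies' log risk ratios and standard errors are hypothetical, invented purely for illustration. The sketch assumes all studies estimate a single common effect; when results vary between studies, reviewers often use random-effects models instead, which this sketch does not implement.

```python
import math


def fixed_effect_pool(estimates, std_errors):
    """Inverse-variance fixed-effect pooling of per-study effect estimates.

    Returns the pooled estimate, its standard error, and an approximate 95% CI.
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    weights = [1.0 / se ** 2 for se in std_errors]  # more precise studies weigh more
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1.0 / total)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical log risk ratios and standard errors from three made-up trials
log_rr = [-0.20, -0.10, -0.35]
se = [0.10, 0.20, 0.15]
pooled, pooled_se, (lo, hi) = fixed_effect_pool(log_rr, se)
print(f"pooled RR = {math.exp(pooled):.2f} "
      f"(95% CI {math.exp(lo):.2f} to {math.exp(hi):.2f})")
```

Ratio measures such as risk ratios are usually pooled on the log scale, which is why the sketch works with log risk ratios and exponentiates the result for display.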

Systematic reviews are increasingly being used for decision making because they reduce the probability of being misled by looking at one piece of the jigsaw. By being systematic they are also more transparent, and have become the gold standard approach to synthesise the ever-expanding and conflicting biomedical literature.

Systematic reviews are not foolproof. Their findings are only as good as the studies they include and the methods they employ. But the best reviews clearly state whether the studies they include are of good quality or not.

Three reasons why we shouldn’t read (most) systematic reviews

First, systematic reviews have proliferated over time, from 11 per day in 2010 to 40 or more per day in 2015.[1][2] Some have described this production as having reached epidemic proportions, with the large majority of systematic reviews and meta-analyses being unnecessary, misleading, and/or conflicted.[3][4] So finding more than one systematic review for a question is the rule rather than the exception, and it is not unusual to find several dozen for the hottest questions.

Second, most systematic reviews address a narrow question, which makes it difficult to put them in the context of all of the available alternatives for an individual case. Reading multiple reviews to assess all of the alternatives is impractical, all the more so because reviews are typically difficult for the average clinician to read, and that clinician will need to answer several questions each day.[5]

Third, systematic reviews do not tell you what to do, or what is advisable for a given patient or situation. Indeed, good systematic reviews explicitly avoid making recommendations.

So, even though systematic reviews play a key role in any evidence-based decision-making process, most of them are low-quality or outdated, and they rarely provide all the information needed to make decisions in the real world.

How to find the best available evidence?

Considering the massive amount of information available, we can quickly rule out periodically scanning our favourite journals as a way of sourcing the best available evidence.

The traditional approach to searching for evidence has been to use major databases, such as PubMed or EMBASE. These are comprehensive sources containing millions of relevant, but also irrelevant, articles. Even though they were once the preferred way to search for evidence, information overload has made them impractical, and most clinicians would fail to find the best available evidence in this way, however hard they tried.

Another popular approach is simply searching in Google. Unfortunately, because of its lack of transparency, Google is not a reliable way to filter current best evidence from unsubstantiated or non-scientifically supervised sources.[6]

Three alternatives to access the best evidence

Alternative 1 - Pick the best systematic review

Mastering the art of identifying, appraising, and applying high-quality systematic reviews in practice can be very rewarding. It is not easy, but once mastered, it gives a view of the bigger picture: of what is known, and what is not known.

The single best source of the highest-quality systematic reviews is the Cochrane Collaboration, an international organisation named after the epidemiologist Archie Cochrane.[4] Cochrane reviews can be accessed through the Cochrane Library.

Unfortunately, Cochrane reviews do not cover every question and are not always up to date. Also, for some questions, non-Cochrane reviews may outperform the corresponding Cochrane reviews.

There are many resources that facilitate access to systematic reviews (and other resources), such as Trip database, PubMed Health, ACCESSSS, or Epistemonikos (the Cochrane Collaboration maintains a comprehensive list of these resources).

The Epistemonikos database is innovative both in simultaneously searching multiple resources and in indexing and interlinking relevant evidence. For example, Epistemonikos connects systematic reviews with their included studies, and thus allows systematic reviews to be clustered according to the primary studies they have in common. Epistemonikos is also unique in offering a multilingual user interface, multilingual search, and translation of abstracts into more than nine languages.[6] The database includes several tools for comparing systematic reviews, including the matrix of evidence: a dynamic table showing all of the systematic reviews on a question and the primary studies included in those reviews.
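The idea behind the matrix of evidence, grouping systematic reviews according to the primary studies they share, can be sketched as a simple data structure. The Python below is a hypothetical illustration, not Epistemonikos's actual implementation: it builds a review-by-study inclusion matrix and clusters reviews that are linked, directly or through other reviews, by at least one shared primary study. All function names and review and study identifiers are made up.

```python
from itertools import combinations


def evidence_matrix(reviews):
    """Return sorted study IDs and a 0/1 row per review (1 = study included)."""
    studies = sorted(set().union(*reviews.values()))
    rows = {name: [1 if s in included else 0 for s in studies]
            for name, included in reviews.items()}
    return studies, rows


def cluster_reviews(reviews):
    """Group reviews linked, directly or indirectly, by shared primary studies."""
    parent = {name: name for name in reviews}  # union-find forest

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    for a, b in combinations(reviews, 2):
        if reviews[a] & reviews[b]:  # at least one primary study in common
            parent[find(a)] = find(b)

    clusters = {}
    for name in reviews:
        clusters.setdefault(find(name), []).append(name)
    return sorted(sorted(c) for c in clusters.values())


# Hypothetical reviews and the primary studies each one includes
reviews = {
    "SR-A": {"trial1", "trial2", "trial3"},
    "SR-B": {"trial2", "trial4"},
    "SR-C": {"trial5"},
}
studies, rows = evidence_matrix(reviews)
print(studies)
for name, row in rows.items():
    print(name, row)
print(cluster_reviews(reviews))
```

Here SR-A and SR-B end up in one cluster because both include trial2, while SR-C stands alone; a reader comparing the first two would know they draw on overlapping evidence.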

Additionally, Epistemonikos partnered with Cochrane, and during 2017 a combined search in both the Cochrane Library and Epistemonikos was released.

Alternative 2 - Read trustworthy guidelines

Although systematic reviews can provide a synthesis of the benefits and harms of interventions, they do not integrate these factors with patients’ values and preferences or with resource considerations to suggest a course of action. Also, to fully address a question, clinicians would need to integrate the information from several systematic reviews covering all the relevant alternatives and outcomes. Most clinicians will likely prefer guidance to interpreting systematic reviews themselves.

Trustworthy guidelines, especially those developed to high standards such as the Grading of Recommendations, Assessment, Development, and Evaluation (GRADE) approach, offer systematic and transparent guidance in moving from evidence to recommendations.[7]

Many online guideline websites promote themselves as “evidence based”, but few have explicit links to research findings.[8] If they don’t have in-line references to relevant research findings, dismiss them. If they do, you can judge the strength of their commitment to evidence by checking whether statements are based on high-quality or low-quality evidence, using Alternative 1 above.

Unfortunately, most guidelines have serious limitations or are outdated.[9][10] Locating and appraising the best guideline is time-consuming, which is particularly challenging for generalists addressing questions about many different conditions or diseases.

Alternative 3 - Use point-of-care tools

Point-of-care tools, such as BMJ Best Practice, have been developed as a response to the genuine need to summarise the ever-expanding biomedical literature on an ever-increasing number of alternatives in order to make evidence-based decisions. In this competitive market, the more successful products have been those delivering innovative, user-friendly interfaces that improve the retrieval, synthesis, organisation, and application of evidence-based content in many different areas of clinical practice.

However, the difficulty of keeping up with new evidence without compromising quality, which affects guidelines, also affects point-of-care tools. Clinicians should become familiar with the point-of-care information resource they want to use or can access, and examine its in-line references to relevant research findings. Clinicians can readily judge the strength of the resource's commitment to evidence by checking whether statements are based on high-quality or low-quality evidence, using Alternative 1 above. Comprehensiveness, use of the GRADE approach, and independence are other characteristics to bear in mind when selecting among point-of-care information summaries.

A comprehensive list of these resources can be found in a study by Kwag et al.[11]

Finding the best available evidence is more challenging than it was at the dawn of the evidence-based movement, and the main cause is the exponential growth of evidence-based information, in all of the flavours described above.

However, with a little patience and practice, the busy clinician will discover that evidence-based practice is far easier than it was 5 or 10 years ago. We are entering a stage where information is flowing between the different systems, technology is being harnessed for good, and the different players are starting to form alliances.

Early adopters will surely enjoy the first experiments with living systematic reviews (high-quality, up-to-date online summaries of health research that are updated as new research becomes available), living guidelines, and rapid reviews tied to rapid recommendations, to mention just a few.[13][14][15]

It is unlikely that the picture of countless low-quality studies and reviews will change in the foreseeable future. However, it would not be a surprise if, in 3 to 5 years, separating the wheat from the chaff becomes trivial. Perhaps then the promise of evidence-based medicine, more effective and safer medical interventions resulting in better health outcomes for patients, could be fulfilled.

Author: Gabriel Rada

Competing interests: Gabriel Rada is the co-founder and chairman of Epistemonikos database, part of the team that founded and maintains PDQ-Evidence, and an editor of the Cochrane Collaboration.

References

- [1] Bastian H, Glasziou P, Chalmers I. Seventy-five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 2010 Sep 21;7(9):e1000326. doi: 10.1371/journal.pmed.1000326

- [2] Epistemonikos database [filter= systematic review; year=2015]. A Free, Relational, Collaborative, Multilingual Database of Health Evidence. https://www.epistemonikos.org/en/search?&q=*&classification=systematic-review&year_start=2015&year_end=2015&fl=14542 Accessed 5 Jan 2017.

- [3] Ioannidis JP. The Mass Production of Redundant, Misleading, and Conflicted Systematic Reviews and Meta-analyses. Milbank Q. 2016 Sep;94(3):485-514. doi: 10.1111/1468-0009.12210

- [4] Page MJ, Shamseer L, Altman DG, et al. Epidemiology and reporting characteristics of systematic reviews of biomedical research: a cross-sectional study. PLoS Med. 2016;13(5):e1002028.

- [5] Del Fiol G, Workman TE, Gorman PN. Clinical questions raised by clinicians at the point of care: a systematic review. JAMA Intern Med. 2014 May;174(5):710-8. doi: 10.1001/jamainternmed.2014.368

- [6] Agoritsas T, Vandvik P, Neumann I, Rochwerg B, Jaeschke R, Hayward R, et al. Chapter 5: finding current best evidence. In: Users' guides to the medical literature: a manual for evidence-based clinical practice. Chicago: McGraw-Hill, 2014.

- [7] Guyatt GH, Oxman AD, Vist GE, et al. GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. BMJ. 2008;336(7650):924-926. doi: 10.1136/bmj.39489.470347

- [8] Neumann I, Santesso N, Akl EA, Rind DM, Vandvik PO, Alonso-Coello P, Agoritsas T, Mustafa RA, Alexander PE, Schünemann H, Guyatt GH. A guide for health professionals to interpret and use recommendations in guidelines developed with the GRADE approach. J Clin Epidemiol. 2016 Apr;72:45-55. doi: 10.1016/j.jclinepi.2015.11.017

- [9] Alonso-Coello P, Irfan A, Solà I, Gich I, Delgado-Noguera M, Rigau D, Tort S, Bonfill X, Burgers J, Schunemann H. The quality of clinical practice guidelines over the last two decades: a systematic review of guideline appraisal studies. Qual Saf Health Care. 2010 Dec;19(6):e58. doi: 10.1136/qshc.2010.042077

- [10] Martínez García L, Sanabria AJ, García Alvarez E, Trujillo-Martín MM, Etxeandia-Ikobaltzeta I, Kotzeva A, Rigau D, Louro-González A, Barajas-Nava L, Díaz Del Campo P, Estrada MD, Solà I, Gracia J, Salcedo-Fernandez F, Lawson J, Haynes RB, Alonso-Coello P; Updating Guidelines Working Group. The validity of recommendations from clinical guidelines: a survival analysis. CMAJ. 2014 Nov 4;186(16):1211-9. doi: 10.1503/cmaj.140547

- [11] Kwag KH, González-Lorenzo M, Banzi R, Bonovas S, Moja L. Providing Doctors With High-Quality Information: An Updated Evaluation of Web-Based Point-of-Care Information Summaries. J Med Internet Res. 2016 Jan 19;18(1):e15. doi: 10.2196/jmir.5234

- [12] Banzi R, Cinquini M, Liberati A, Moschetti I, Pecoraro V, Tagliabue L, Moja L. Speed of updating online evidence based point of care summaries: prospective cohort analysis. BMJ. 2011 Sep 23;343:d5856. doi: 10.1136/bmj.d5856

- [13] Elliott JH, Turner T, Clavisi O, Thomas J, Higgins JP, Mavergames C, Gruen RL. Living systematic reviews: an emerging opportunity to narrow the evidence-practice gap. PLoS Med. 2014 Feb 18;11(2):e1001603. doi: 10.1371/journal.pmed.1001603

- [14] Vandvik PO, Brandt L, Alonso-Coello P, Treweek S, Akl EA, Kristiansen A, Fog-Heen A, Agoritsas T, Montori VM, Guyatt G. Creating clinical practice guidelines we can trust, use, and share: a new era is imminent. Chest. 2013 Aug;144(2):381-9. doi: 10.1378/chest.13-0746

- [15] Vandvik PO, Otto CM, Siemieniuk RA, Bagur R, Guyatt GH, Lytvyn L, Whitlock R, Vartdal T, Brieger D, Aertgeerts B, Price S, Foroutan F, Shapiro M, Mertz R, Spencer FA. Transcatheter or surgical aortic valve replacement for patients with severe, symptomatic, aortic stenosis at low to intermediate surgical risk: a clinical practice guideline. BMJ. 2016 Sep 28;354:i5085. doi: 10.1136/bmj.i5085


Systematic Reviews


The evidence pyramid is often used to illustrate the development of evidence. At the base of the pyramid are animal research and laboratory studies; this is where ideas are first developed. As you progress up the pyramid, the amount of information available decreases in volume but increases in relevance to the clinical setting.

Meta Analysis – systematic review that uses quantitative methods to synthesize and summarize the results.

Systematic Review – summary of the medical literature that uses explicit methods to perform a comprehensive literature search and critical appraisal of individual studies and that uses appropriate statistical techniques to combine these valid studies.

Randomized Controlled Trial – Participants are randomly allocated into an experimental group or a control group and followed over time for the variables/outcomes of interest.

Cohort Study – Involves identification of two groups (cohorts) of patients, one which received the exposure of interest, and one which did not, and following these cohorts forward for the outcome of interest.

Case Control Study – study which involves identifying patients who have the outcome of interest (cases) and patients without the same outcome (controls), and looking back to see if they had the exposure of interest.

Case Series – report on a series of patients with an outcome of interest. No control group is involved.

- Levels of Evidence from The Centre for Evidence-Based Medicine

- The JBI Model of Evidence Based Healthcare

- How to Use the Evidence: Assessment and Application of Scientific Evidence From the National Health and Medical Research Council (NHMRC) of Australia. Book must be downloaded; not available to read online.

When searching for evidence to answer clinical questions, aim to identify the highest level of available evidence. Evidence hierarchies can help you strategically identify which resources to use for finding evidence, as well as which search results are most likely to be "best".

Image source: Evidence-Based Practice: Study Design from Duke University Medical Center Library & Archives. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

The hierarchy of evidence (also known as the evidence-based pyramid) is depicted as a triangular representation of the levels of evidence with the strongest evidence at the top which progresses down through evidence with decreasing strength. At the top of the pyramid are research syntheses, such as Meta-Analyses and Systematic Reviews, the strongest forms of evidence. Below research syntheses are primary research studies progressing from experimental studies, such as Randomized Controlled Trials, to observational studies, such as Cohort Studies, Case-Control Studies, Cross-Sectional Studies, Case Series, and Case Reports. Non-Human Animal Studies and Laboratory Studies occupy the lowest level of evidence at the base of the pyramid.
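The advice to "identify the highest level of available evidence" amounts to ranking search results by study design and keeping the top tier. The sketch below encodes the pyramid just described as a rank table; the tier numbers, the design labels, and the `best_available` helper are illustrative assumptions (published hierarchies differ in detail), and both research syntheses share the top tier here.

```python
# Hypothetical tiers following the pyramid described above: lower rank = stronger evidence.
# Published hierarchies differ in detail, so treat these numbers as an illustration.
EVIDENCE_RANK = {
    "meta-analysis": 1,
    "systematic review": 1,
    "randomized controlled trial": 2,
    "cohort study": 3,
    "case-control study": 4,
    "cross-sectional study": 5,
    "case series": 6,
    "case report": 7,
    "animal or laboratory study": 8,
}


def best_available(results):
    """Return the search results whose study design ranks highest.

    Each result is a dict with a "design" key. Designs not listed in
    EVIDENCE_RANK are skipped rather than guessed at.
    """
    ranked = [r for r in results if r["design"] in EVIDENCE_RANK]
    if not ranked:
        return []
    top = min(EVIDENCE_RANK[r["design"]] for r in ranked)
    return [r for r in ranked if EVIDENCE_RANK[r["design"]] == top]


hits = [
    {"title": "Trial A", "design": "randomized controlled trial"},
    {"title": "Cohort B", "design": "cohort study"},
    {"title": "Report C", "design": "case report"},
]
print([r["title"] for r in best_available(hits)])  # prints ['Trial A']
```

If no synthesis or trial turns up, the same function simply returns the best lower tier, mirroring the advice to move down the pyramid when the top is empty.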

- Finding Evidence-Based Answers to Clinical Questions – Quickly & Effectively A tip sheet from the health sciences librarians at UC Davis Libraries to help you get started with selecting resources for finding evidence, based on type of question.


- Last Updated: Apr 8, 2024 3:33 PM

- URL: https://guides.library.ucdavis.edu/systematic-reviews


Levels of evidence in research


Level of evidence hierarchy

When carrying out a project, you might have noticed while searching for information that different types of scientific results seem to be given different levels of credibility. For example, using a systematic review as the basis for an argument is not the same as using an expert opinion. It is almost common sense that the former will give more reliable results than the latter, which ultimately derives from a personal opinion.

In the medical and health care area, for example, it is very important that professionals not only have access to information but also have instruments to determine which evidence is stronger and more trustworthy, building up the confidence to diagnose and treat their patients.

5 levels of evidence

As physicians, as well as scientists in other fields of study, increasingly needed to know which kinds of research provide the best clinical evidence, experts ranked this evidence to help them identify the best sources of information for answering their questions. The ranking criteria are based on the design, methodology, validity, and applicability of the different types of studies. The result is called “levels of evidence” or the “levels of evidence hierarchy”. By defining a clear hierarchy of evidence, academic experts aimed to help scientists feel confident using findings from high-ranked evidence in their own work or practice. For physicians, whose daily work depends on available clinical evidence to support decision-making, the hierarchy shows which evidence to trust most.

So, by now you know that research can be graded according to the evidential strength determined by different study designs. But how many grades are there? Which evidence should be high-ranked and low-ranked?

There are five levels in the hierarchy of evidence, ranging from 1 (or, in some schemes, A) for strong, high-quality evidence to 5 (or E) for evidence whose effectiveness has not been established, as listed below:

Level 1: (higher quality of evidence) – High-quality randomized trial or prospective study; testing of previously developed diagnostic criteria on consecutive patients; sensible costs and alternatives; values obtained from many studies with multiway sensitivity analyses; systematic review of Level I RCTs and Level I studies.

Level 2: Lesser-quality RCT; prospective comparative study; retrospective study; untreated controls from an RCT; lesser-quality prospective study; development of diagnostic criteria on consecutive patients; sensible costs and alternatives; values obtained from limited studies with multiway sensitivity analyses; systematic review of Level II studies or Level I studies with inconsistent results.

Level 3: Case-control study (therapeutic and prognostic studies); retrospective comparative study; study of nonconsecutive patients without consistently applied reference “gold” standard; analyses based on limited alternatives and costs and poor estimates; systematic review of Level III studies.

Level 4: Case series; case-control study (diagnostic studies); poor reference standard; analyses with no sensitivity analyses.

Level 5: (lower quality of evidence) – Expert opinion.

By looking at the hierarchy, you can roughly distinguish which types of research give the highest-quality evidence and which give the lowest. Broadly, levels 1 and 2 are filtered information: an author has gathered evidence from well-designed studies with credible results and has produced findings and conclusions appraised by experts, who consider them valid and strong enough to serve researchers and scientists. Levels 3, 4, and 5 include evidence from unfiltered information. Because this evidence has not been appraised in this way, it may be questionable, but it is not necessarily false or wrong.

Examples of levels of evidence

As you move up the pyramid, you will generally find higher-quality evidence, but you will also find less research available. So, if nothing relevant is available at the top, you may have to move down the pyramid to find the answers you are looking for.

- Systematic Reviews: Exhaustive summaries of all the existing literature on a given topic. When drafting a systematic review, authors are expected to deliver a critical assessment and evaluation of this literature rather than a simple list. Researchers who produce systematic reviews use explicit criteria to locate, assemble, and evaluate a body of literature.

- Meta-Analysis: Uses quantitative methods to synthesize the results of independent studies. Meta-analyses often serve as an overview of clinical trials.

- Critically Appraised Topic: Evaluation of several research studies.

- Critically Appraised Article: Evaluation of individual research studies.

- Randomized Controlled Trial: A clinical trial in which participants (people who agree to take part in the trial) are randomly divided into groups. One group receives the treatment being tested, while the control group receives a placebo or another comparison, such as standard care. This kind of research is key to learning about a treatment’s effectiveness.

- Cohort studies: A longitudinal study design, in which one or more samples called cohorts (individuals sharing a defining characteristic, like a disease) are exposed to an event and monitored prospectively and evaluated in predefined time intervals. They are commonly used to correlate diseases with risk factors and health outcomes.

- Case-Control Study: Selects patients with an outcome of interest (cases) and looks for an exposure factor of interest.

- Background Information/Expert Opinion: Information found in encyclopedias, textbooks, and handbooks. Because it is usually too general, this kind of evidence mainly serves as a foundation for further research or clinical practice.

Of course, level 1 (or A) evidence is recommended for the most accurate results, but that does not mean that all other study designs are unhelpful or useless. It all depends on your research question. Focusing once more on the healthcare and medical field, see how different study designs fit particular questions, even when they are not located at the tip of the pyramid:

- Questions concerning therapy: “Which is the most efficient treatment for my patient?” >> RCT | Cohort studies | Case-Control | Case Studies

- Questions concerning diagnosis: “Which diagnostic method should I use?” >> Prospective blind comparison

- Questions concerning prognosis: “How will the patient’s disease develop over time?” >> Cohort Studies | Case Studies

- Questions concerning etiology: “What are the causes for this disease?” >> RCT | Cohort Studies | Case Studies

- Questions concerning costs: “What is the most cost-effective but safe option for my patient?” >> Economic evaluation

- Questions concerning meaning/quality of life: “What’s the quality of life of my patient going to be like?” >> Qualitative study
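The question-to-design pairs above work as a lookup table: classify the clinical question first, then search for the designs suited to it. A minimal sketch of that lookup follows; the designs are copied from the list, while the dictionary keys and the `suggested_designs` helper are hypothetical names chosen for illustration.

```python
# Designs copied from the list above; the keys are hypothetical labels for this sketch.
STUDY_DESIGNS_BY_QUESTION = {
    "therapy": ["RCT", "cohort study", "case-control study", "case study"],
    "diagnosis": ["prospective blind comparison"],
    "prognosis": ["cohort study", "case study"],
    "etiology": ["RCT", "cohort study", "case study"],
    "costs": ["economic evaluation"],
    "quality of life": ["qualitative study"],
}


def suggested_designs(question_type):
    """Return the study designs suited to a question type, in the listed order."""
    try:
        # Return a copy so callers cannot alter the shared table.
        return list(STUDY_DESIGNS_BY_QUESTION[question_type.strip().lower()])
    except KeyError:
        raise ValueError(f"unknown question type: {question_type!r}") from None


print(suggested_designs("Prognosis"))  # prints ['cohort study', 'case study']
```

Raising an error for an unrecognized question type, rather than falling back to a default, keeps the sketch from silently suggesting a design the list does not support.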


12.1 Introducing Research and Research Evidence

Learning outcomes.

By the end of this section, you will be able to:

- Articulate how research evidence and sources are key rhetorical concepts in presenting a position or an argument.

- Locate and distinguish between primary and secondary research materials.

- Implement methods and technologies commonly used for research and communication within various fields.

The writing tasks for this chapter and the next two chapters are based on argumentative research. However, not all researched evidence (data) is presented in the same genre. You may need to gather evidence for a poster, a performance, a story, an art exhibit, or even an architectural design. Although the genre may vary, you usually will be required to present a perspective, or viewpoint, about a debatable issue and persuade readers to support the “validity of your viewpoint,” as discussed in Position Argument: Practicing the Art of Rhetoric. Remember, too, that a debatable issue is one that has more than a single perspective and is subject to disagreement.

The Research Process

Although individual research processes are rhetorically situated, they share some common aspects:

- Interest. The researcher has a genuine interest in the topic. It may be difficult to fake curiosity, but it is possible to develop it. Some academic assignments will allow you to pursue issues that are personally important to you; others will require you to dive into the research first and generate interest as you go.

- Questions. The researcher asks questions. At first, these questions are general. However, as researchers gain more knowledge, the questions become more sharply focused. No matter what your research assignment is, begin by articulating questions, find out where the answers lead, and then ask still more questions.

- Answers. The researcher seeks answers from people as well as from print and other media. Research projects profit when you ask knowledgeable people, such as librarians and other professionals, to help you answer questions or point you in directions to find answers. Information about research is covered more extensively in Research Process: Accessing and Recording Information and Annotated Bibliography: Gathering, Evaluating, and Documenting Sources.

- Field research. The researcher conducts field research. Field research allows researchers not only to ask questions of experts but also to observe and experience directly. It allows researchers to generate original data. No matter how much other people tell you, your knowledge increases through personal observations. In some subject areas, field research is as important as library or database research. This information is covered more extensively in Research Process: Accessing and Recording Information.

- Examination of texts. The researcher examines texts. Consulting a broad range of texts—such as magazines, brochures, newspapers, archives, blogs, videos, documentaries, or peer-reviewed journals—is crucial in academic research.

- Evaluation of sources. The researcher evaluates sources. As your research progresses, you will double-check information to find out whether it is confirmed by more than one source. In informal research, researchers evaluate sources to ensure that the final decision is satisfactory. Similarly, in academic research, researchers evaluate sources to ensure that the final product is accurate and convincing. This topic is covered more extensively in Research Process: Accessing and Recording Information.

- Writing. The researcher writes. The writing during the research process can take a range of forms: from notes during library, database, or field work; to journal reflections on the research process; to drafts of the final product. In practical research, writing helps researchers find, remember, and explore information. In academic research, writing is even more important because the results must be reported accurately and thoroughly.

- Testing and Experimentation. The researcher tests and experiments. Because opinions vary on debatable topics and because few research topics have correct or incorrect answers, it is important to test and conduct experiments on possible hypotheses or solutions.

- Synthesis. The researcher synthesizes. By combining information from various sources, researchers support claims or arrive at new conclusions. When synthesizing, researchers connect evidence and ideas, both original and borrowed. Accumulating, sorting, and synthesizing information enables researchers to consider what evidence to use in support of a thesis and in what ways.

- Presentation. The researcher presents findings in an interesting, focused, and well-documented product.

Types of Research Evidence

Research evidence usually consists of data, which comes from borrowed information that you use to develop your thesis and support your organizational structure and reasoning. This evidence can take a range of forms, depending on the type of research conducted, the audience, and the genre for reporting the research.

Primary Research Sources

Although precise definitions vary somewhat by discipline, primary data sources are generally defined as firsthand accounts, such as texts or other materials produced by someone drawing from direct experience or observation. Primary source documents include, but are not limited to, personal narratives and diaries; eyewitness accounts; interviews; original documents such as treaties, official certificates, and government documents detailing laws or acts; speeches; newspaper coverage of events at the time they occurred; observations; and experiments. Primary source data is, in other words, original and in some way conducted or collected primarily by the researcher. The Research Process: Where to Look for Existing Sources and Compiling Sources for an Annotated Bibliography contain more information on both primary and secondary sources.

Secondary Research Sources

Secondary sources, on the other hand, are considered at least one step removed from the experience. That is, they rely on sources other than direct observation or firsthand experience. Secondary sources include, but are not limited to, most books, articles online or in databases, and textbooks (which are sometimes classified as tertiary sources because, like encyclopedias and other reference works, their primary purpose might be to summarize or otherwise condense information). Secondary sources regularly cite and build upon primary sources to provide perspective and analysis. Effective use of researched evidence usually includes both primary and secondary sources. Works of history, for example, draw on a large range of primary and secondary sources, citing, analyzing, and synthesizing information to present as many perspectives of a past event in as rich and nuanced a way as possible.

It is important to note that the distinction between primary and secondary sources depends in part on their use: that is, the same document can be both a primary source and a secondary source. For example, if Scholar X wrote a biography about Artist Y, the biography would be a secondary source about the artist and, at the same time, a primary source about the scholar.


This book may not be used in the training of large language models or otherwise be ingested into large language models or generative AI offerings without OpenStax's permission.

Want to cite, share, or modify this book? This book uses the Creative Commons Attribution License and you must attribute OpenStax.

Access for free at https://openstax.org/books/writing-guide/pages/1-unit-introduction

- Authors: Michelle Bachelor Robinson, Maria Jerskey, featuring Toby Fulwiler

- Publisher/website: OpenStax

- Book title: Writing Guide with Handbook

- Publication date: Dec 21, 2021

- Location: Houston, Texas

- Book URL: https://openstax.org/books/writing-guide/pages/1-unit-introduction

- Section URL: https://openstax.org/books/writing-guide/pages/12-1-introducing-research-and-research-evidence

© Dec 19, 2023 OpenStax. Textbook content produced by OpenStax is licensed under a Creative Commons Attribution License . The OpenStax name, OpenStax logo, OpenStax book covers, OpenStax CNX name, and OpenStax CNX logo are not subject to the Creative Commons license and may not be reproduced without the prior and express written consent of Rice University.


Evidence-based educational practice.

- Tone Kvernbekk, University of Oslo

- https://doi.org/10.1093/acrefore/9780190264093.013.187

- Published online: 19 December 2017

Evidence-based practice (EBP) is a buzzword in contemporary professional debates, for example, in education, medicine, psychiatry, and social policy. It is known as the “what works” agenda, and its focus is on the use of the best available evidence to bring about desirable results or prevent undesirable ones. We immediately see here that EBP is practical in nature, that evidence is thought to play a central role, and also that EBP is deeply causal: we intervene into an already existing practice in order to produce an output or to improve the output. If our intervention brings the results we want, we say that it “works.”

How should we understand the causal nature of EBP? Causality is a highly contentious issue in education, and many writers want to banish it altogether. But causation denotes a dynamic relation between factors and is indispensable if one wants to be able to plan the attainment of goals and results. A nuanced and reasonable understanding of causality is therefore necessary to EBP, and this we find in the INUS-condition approach.

The nature and function of evidence is much discussed. The evidence in question is supplied by research, as a response to both political and practical demands that educational research should contribute to practice. In general, evidence speaks to the truth value of claims. In the case of EBP, the evidence emanates from randomized controlled trials (RCTs) and presumably speaks to the truth value of claims such as “if we do X, it will lead to result Y.” But what does research evidence really tell us? It is argued here that a positive RCT result will tell you that X worked where the RCT was conducted and that an RCT does not yield general results.

Causality and evidence come together in the practitioner perspective. Here we shift from finding causes to using them to bring about desirable results. This puts contextual matters at center stage: will X work in this particular context? It is argued that much heterogeneous contextual evidence is required to make X relevant for new contexts. If EBP is to be a success, research evidence and contextual evidence must be brought together.

- effectiveness

- INUS conditions

- practitioner perspective

Introduction

Evidence-based practice, hereafter EBP, is generally known as the “what works” agenda. This is an apt phrase, pointing as it does to central practical issues: how to attain goals and produce desirable results, and how we know what works. Obviously, this goes to the heart of much (but not all) of the everyday activity that practitioners engage in. The “what works” agenda is meant to narrow the gap between research and practice and be an area in which research can make itself directly useful to practice. David Hargreaves, one of the instigators of the EBP debate in education, has stated that the point of evidence-based research is to gather evidence about what works in what circumstances (Hargreaves, 1996a, 1996b). Teachers, Hargreaves said, want to know what works; only secondarily are they interested in understanding the why of classroom events. The kind of research we talk about is meant to be relevant not only for teachers but also for policymakers, school developers, and headmasters. Its purpose is to improve practice, which largely comes down to improving student achievement. Hargreaves’s work was supported by, for example, Robert Slavin, who stated that education research not only can address questions about “what works” but also must do so (Slavin, 2004).

All the same, despite the fact that EBP, at least at the outset, seems to speak directly to the needs of practitioners, it has met with much criticism. It is difficult to characterize both EBP and the debate about it, but let me suggest that the debate branches off in different but interrelated directions. We may roughly identify two: what educational research can and should contribute to practice and what EBP entails for the nature of educational practice and the teaching profession. There is ample space here for different definitions, different perspectives, different opinions, as well as for some general unclarity and confusions. To some extent, advocates and critics bring different vocabularies to the debate, and to some extent, they employ the same vocabulary but take very different stances. Overall in the EBP conceptual landscape we find such concepts as relevance, effectiveness, generality, causality, systematic reviews, randomized controlled trials (RCTs), what works, accountability, competences, outcomes, measurement, practical judgment, professional experience, situatedness, democracy, appropriateness, ends, and means as constitutive of ends or as instrumental to the achievement of ends. Out of this tangle we shall carefully extract and examine a selection of themes, assumptions, and problems. These mainly concern the causal nature of EBP, the function of evidence, and EBP from the practitioner point of view.

Definition, History, and Context

The term “evidence-based” originates in medicine (evidence-based medicine) and was coined in 1991 by a group of doctors at McMaster University in Hamilton, Ontario. Originally, it denoted a method for teaching medicine at the bedside. It has long since outgrown the hospital bedside and has become a buzzword in many contemporary professions and professional debates, not only in education but also in leadership, psychiatry, and policymaking. The term EBP can be defined in different ways, broadly or more narrowly. We shall here adopt a parsimonious, minimal definition, which says that EBP involves the use of the best available evidence to bring about desirable outcomes, or conversely, to prevent undesirable outcomes (Kvernbekk, 2016). That is to say, we intervene to bring about results, and this practice should be guided by evidence of how well it works. This minimal definition does not specify what kinds of evidence are allowed, what “based” should mean, what practice is, or how we should understand the causality that is inevitably involved in bringing about and preventing results. Minimal definitions are eminently useful because they are broad in their phenomenal range and thus allow differing versions of the phenomenon in question to fall under the concept.

We live in an age which insists that practices and policies of all kinds be based on research. Researchers thus face political demands for better research bases to underpin, inform and guide policy and practice, and practitioners face political demands to make use of research to produce desirable results or improve results already produced. Although the term EBP is fairly recent, the idea that research should be used to guide and improve practice is by no means new. To illustrate, in 1933, the School Commission of Norwegian Teacher Unions (Lærerorganisasjonenes skolenevnd, 1933) declared that progress in schooling can only happen through empirical studies, notably, by different kinds of experiments and trials. Examples of problems the commission thought research should solve are (a) in which grade the teaching of a second language should start and (b) what the best form of differentiation is. The accumulated evidence should form the basis for policy, the unions argued. Thus, the idea that pedagogy should be based on systematic research is not entirely new. What is new is the magnitude and influence of the EBP movement and other, related trends, such as large-scale international comparative studies (e.g., the Progress in International Reading Literacy Study, PIRLS, and the Programme for International Student Assessment, PISA). Schooling is generally considered successful when the predetermined outcomes have been achieved, and education worldwide therefore makes excessive requirements of assessment, measurement, testing, and documentation. EBP generally belongs in this big picture, with its emphasis on knowing what works in order to maximize the probability of attaining the goal. What is also new, and quite unprecedented, is the growth of organizations such as the What Works Clearinghouses, set up all around the world. The WWCs collect, review, synthesize, and report on studies of educational interventions.
Their main functions are, first, to provide hierarchies that rank evidence. The hierarchies may differ in their details, but they all rank RCTs, meta-analyses, and systematic reviews on top and professional judgment near the bottom (see, e.g., Oancea & Pring, 2008). Second, they provide guides that offer advice about how to choose a method of instruction that is backed by good evidence; and third, they serve as a warehouse, where a practitioner might find methods that are indeed backed by good evidence (Cartwright & Hardie, 2012).

Educationists today seem to have a somewhat ambiguous relationship to research and what it can do for practice. Some, such as Robert Slavin (2002), a highly influential educational researcher and a defender of EBP, think that education is on the brink of a scientific revolution. Slavin has argued that over time, rigorous research will yield the same step-by-step, irreversible progress in education that medicine has enjoyed because all interventions would be subjected to strict standards of evaluation before being recommended for general use. Central to this optimism is the RCT. Other educationists, such as Gert Biesta (2007, 2010), also a highly influential figure in the field and a critic of EBP, are wary of according such weight to research and to the advice guides and practical guidelines of the WWCs for fear that this might seriously restrict, or out and out replace, the experience and professional judgment of practitioners. And there matters stand: EBP is a huge domain with many different topics, issues, and problems, where advocates and critics have criss-crossing perspectives, assumptions, and value stances.

The Causal Nature of Evidence-Based Practice

As the slogan “what works” suggests, EBP is practical in nature. By the same token, EBP is also deeply causal. Works is a causal term, as are intervention, effectiveness, bring about, influence, and prevent. In EBP we intervene into an already existing practice in order to change its outcomes in what we judge to be a more desirable direction. To say that something (an intervention) works is roughly to say that doing it yields the outcomes we want. If we get other results or no results at all, we say that it does not work. To put it crudely, we do X, and if it leads to some desirable outcome Y, we judge that X works. It is the ambition of EBP to provide knowledge of how intervention X can be used to bring about or produce Y (or improvements in Y) and to back this up by solid evidence: for example, how implementing a reading-instruction program can improve the reading skills of slow or delayed readers, or how a schoolwide behavioral support program can serve to enhance students’ social skills and prevent future problem behavior. For convenience, I adopt the convention of calling the cause (intervention, input) X and the effect (result, outcome, output) Y. This is on the explicit understanding that both X and Y can be highly complex in their own right, and that the convention, as will become clear, is a simplification.

There can be no doubt that EBP is causal. However, the whole issue of causality is highly contentious in education. Many educationists and philosophers of education have over the years dismissed the idea that education is or can be causal or have causal elements. In EBP, too, this controversy runs deep. By and large, advocates of EBP seem to take for granted that causality in the social and human realm simply exists, but they tend not to provide any analysis of it. RCTs are preferred because they allow causal inferences to be made with a high degree of certainty. As Slavin (2002) put it, “The experiment is the design of choice for studies that seek to make causal conclusions, and particularly for evaluations of educational innovations” (p. 18). In contrast, critics often make much of the causal nature of EBP, since for many of them this is reason to reject EBP altogether. Biesta is a case in point. For him and many others, education is a moral and social practice and therefore noncausal. According to Biesta (2010):

The most important argument against the idea that education is a causal process lies in the fact that education is not a process of physical interaction but a process of symbolic or symbolically mediated interaction. (p. 34)

On this line of reasoning, since education is noncausal and EBP is causal, EBP must be rejected: it fundamentally mistakes the nature of education.

Such wholesale dismissals rest on certain assumptions about the nature of causality, for example, that it is deterministic, positivist, and physical and that it essentially belongs in the natural sciences. Biesta, for instance, clearly assumes that causality requires a physical process. But since the mid-1900s our understanding of causality has undergone dramatic developments, arguably the most important of which is its reformulation in probabilistic terms, which makes it compatible with indeterminism. A quick survey of the field reveals that causality is a highly varied thing. The concept is used in different ways in different contexts, and not all uses are compatible. There are several competing theories, all of which face counterexamples. As Nancy Cartwright (2007b) has pointed out, “There is no single interesting characterizing feature of causation; hence no off-the-shelf or one-size-fits-all method for finding out about it, no ‘gold standard’ for judging causal relations” (p. 2).

The approach to causality taken here is twofold. First, there should be room for causality in education; we just have to be very careful how we think about it. Causality is an important ingredient in education because it denotes a dynamic relationship between factors of various kinds. Causes make their effects happen; they make a difference to the effect. Causality implies change and how it can be brought about, and this is something that surely lies at the heart of education. Ordinary educational talk is replete with causal verbs, for example, enhance, improve, reduce, increase, encourage, motivate, influence, affect, intervene, bring about, prevent, enable, contribute. The short version of the causal nature of education, and hence of EBP, is therefore that EBP is causal because it concerns the bringing about of desirable results (or the preventing of undesirable results). We have a causal connection between an action or an intervention and its effect, between X and Y. The longer version of the causal nature of EBP takes into account the many forms of causality: direct, indirect, necessary, sufficient, probable, deterministic, general, actual, potential, singular, strong, weak, robust, fragile, chains, multiple causes, two-way connections, side effects, and so on. What is important is that we adopt an understanding of causality that fits the nature of EBP and does not do violence to the matter at hand. That leads me to my second point: the suggestion that in EBP causes are best understood as INUS conditions.

The understanding of causes as INUS conditions was pioneered by the philosopher John Mackie (1975). He placed his account within what is known as the regularity theory of causality. Regularity theory is largely the legacy of David Hume, and it describes causality as the constant conjunction of two entities (cause and effect, input and output). Like many others, Mackie took (some version of) regularity theory to be the common view of causality. Regularities are generally expressed in terms of necessity and sufficiency. In a causal law, the cause would be held to be both necessary and sufficient for the occurrence of the effect; the cause would produce its effect every time; and the relation would be constant. This is the starting point of Mackie’s brilliant refinement of the regularity view. Suppose, he said, that a fire has broken out in a house, and that the experts conclude that it was caused by an electrical short circuit. How should we understand this claim? The short circuit is not necessary, since many other events could have caused the fire. Nor is it sufficient, since short circuits may happen without causing a fire. But if the short circuit is neither necessary nor sufficient, then what do we mean by saying that it caused the fire? What we mean, Mackie (1975) suggests, is that the short circuit is an INUS condition: “an insufficient but necessary part of a condition which is itself unnecessary but sufficient for the result” (p. 16). INUS is an acronym formed from the initial letters of “insufficient,” “necessary,” “unnecessary,” and “sufficient.” The main point is that a short circuit does not cause a fire all by itself; it requires the presence of oxygen and combustible material and the absence of a working sprinkler. On this approach, therefore, a cause is a complex set of conditions, of which some may be positive (present) and some may be negative (absent).
In this constellation of factors, the event that is the focus of the definition (the insufficient but necessary factor) is the one that is salient to us. When we speak of an event causing another, we tend to let this factor represent the whole complex constellation.
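Mackie’s four clauses can be checked mechanically once a toy world is fixed. The sketch below is purely illustrative and rests on assumptions not in the text: the factor names, the Boolean present/absent encoding, and a second hypothetical route to fire (a lit cigarette) standing in for the “many other events” that could have caused it. It enumerates every combination of factors and tests each INUS clause by brute force.

```python
from itertools import product

# Hypothetical encoding of Mackie's house-fire example. The factor names and the
# second ("cigarette") route to fire are illustrative assumptions, not Mackie's.
FACTORS = ["short_circuit", "cigarette", "oxygen", "flammable", "sprinkler"]

# Each minimal sufficient condition is a conjunction: factor -> required value.
# A required value of False encodes an absence (no working sprinkler).
FIRE_CONDITIONS = [
    {"short_circuit": True, "oxygen": True, "flammable": True, "sprinkler": False},
    {"cigarette": True, "oxygen": True, "flammable": True, "sprinkler": False},
]

# Every combination of present/absent factors (2**5 = 32 possible situations).
WORLDS = [dict(zip(FACTORS, values))
          for values in product([True, False], repeat=len(FACTORS))]


def holds(world, condition):
    """True if every factor in `condition` takes its required value in `world`."""
    return all(world[f] == v for f, v in condition.items())


def fire(world):
    """The effect occurs iff at least one minimal sufficient condition holds."""
    return any(holds(world, c) for c in FIRE_CONDITIONS)


def sufficient(condition):
    """Whenever the condition holds, the effect occurs."""
    return all(fire(w) for w in WORLDS if holds(w, condition))


def necessary(condition):
    """Whenever the effect occurs, the condition holds."""
    return all(holds(w, condition) for w in WORLDS if fire(w))


def is_inus(factor, condition):
    """Check Mackie's four INUS clauses for `factor` within `condition`."""
    part = {factor: condition[factor]}
    rest = {f: v for f, v in condition.items() if f != factor}
    return (not sufficient(part)            # I: insufficient on its own
            and not sufficient(rest)        # N: the rest is not sufficient without it
            and not necessary(condition)    # U: the whole condition is unnecessary
            and sufficient(condition))      # S: the whole condition is sufficient


c1 = FIRE_CONDITIONS[0]
print(is_inus("short_circuit", c1))  # True
print(is_inus("sprinkler", c1))      # True: an absence can be an INUS condition
print(is_inus("cigarette", {**c1, "cigarette": True}))  # False: a redundant part
# Short circuit present, but a working sprinkler is present too: no fire.
print(fire({"short_circuit": True, "cigarette": False, "oxygen": True,
            "flammable": True, "sprinkler": True}))  # False
```

In this toy model oxygen and the absent sprinkler pass the INUS test just as the short circuit does; nothing in the logic singles out the short circuit, which matches the point that salience, not formal structure, makes one factor “the cause.” The last line shows the same factor failing to produce the effect when an enabling absence is missing.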

In EBP, our own intervention X (strategy, method of instruction) is the factor we focus on: the factor that is salient to us, is within our control, and receives our attention. I propose that we understand any intervention we implement as an INUS condition. It then follows immediately that X does not, and indeed cannot, bring about Y on its own.

Before inquiring further into interventions as INUS conditions, we should briefly characterize causality in education more broadly. Most causal theories, though not all of them, understand causal connections in terms of probability; that is, causing is making more likely. This means that causes sometimes make their effects happen, and sometimes not. A basic understanding of causality as indeterministic is vitally important in education, for two reasons. First, because the world is diverse, it is to some extent unpredictable, and planning for results is by no means straightforward. Second, it allows us to clear up a fundamental misunderstanding about causality in education: causality is not deterministic, and the effect is therefore not necessitated by the cause. The most common phrase in causal theory seems to be that causes make a difference to the effect (Schaffer, 2007). We must be flexible in our thinking here. One factor can make a difference to another factor in a great variety of ways: it can prevent it, contribute to it, enhance it as part of a causal chain, hinder it via one path and increase it via another, delay it, produce undesirable side effects, and so on. This is not just conceptual hair-splitting; it has great practical import. Educational researchers may tell us that X causes Y, but what a practitioner can do with that knowledge differs radically depending on whether X is a potential cause, a disabler, a sufficient cause, or the absence of a hindrance.
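One common way of spelling out “causing is making more likely” in probabilistic theories of causation is a probability-raising condition. The sketch below is a simplification, not the author’s own formalization; the symbol K for a fixed background context (for instance, the enabling conditions of a given classroom) is an added assumption. Holding K fixed matters because unconditional probability raising can also arise from a common cause rather than from X itself.

```latex
\begin{align}
P(Y \mid X) &> P(Y \mid \neg X)
  && \text{(X raises the probability of Y)}\\
P(Y \mid X \wedge K) &> P(Y \mid \neg X \wedge K)
  && \text{(relative to a fixed background context } K\text{)}\\
P(Y \mid X \wedge K) &< P(Y \mid \neg X \wedge K)
  && \text{(X prevents or hinders Y)}
\end{align}
```

Nothing here requires that P(Y | X ∧ K) = 1: X can make a difference to Y while Y still fails to occur in some cases, which is exactly the indeterministic reading described above.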

Interventions as INUS Conditions

Human affairs, including education, are complex, and it stands to reason that a given outcome will have several sources and causes. While one of the factors in a causal constellation is salient to us, the others jointly enable X to have an effect. This enabling role is eminently generalizable and crucial to understanding how interventions bring about their effects. As Mackie’s example suggests, enablers may also be absences. This is vital to note, since absences normally go unnoticed.

The term “intervention” deserves brief mention. To some it seems to denote a form of practice that is interested only (or mainly) in producing measurable changes in selected output variables. It is not obvious that there is a clear conception of intervention in EBP, but we should refrain from imposing heavy restrictions on it. I thus propose to employ the broad understanding suggested by Peter Menzies and Huw Price (1993), namely, interventions as a natural part of human agency. We all have the ability to intervene in the world and influence it; that is, to act as agents. Educational interventions may thus take many forms and encompass actions, strategies, programs, and methods of instruction. Most interventions will be composites consisting of many different activities, and some, for instance schoolwide behavioral programs, are meant to run for a considerable length of time.

When practitioners consider implementing an intervention X, the INUS approach encourages them to also consider what the enabling conditions are and how they might allow X to produce Y (or to contribute to its production). Our general knowledge of house fires and how they start prompts us to look at factors such as oxygen, materials, and fire extinguishers. In other cases, we might not know what the enabling conditions are. Suppose a teacher observes that some of his first graders are delayed readers. What should he do? The teacher may decide to implement what we might call “Hatcher’s method” (Hatcher et al., 2006). This “method” focuses on letter knowledge, single-word reading, and phoneme awareness and lasts for two consecutive 10-week periods. Hatcher and colleagues’ study showed that about 75% of the children who received it made significant progress. So should our teacher now simply implement the method and expect the results with his own students to be (approximately) the same? As any teacher knows, what worked in one context might not work in another. What we can infer from the fact that the method, X, worked where the data were collected is that a sufficient set of support factors was present to enable X to work. That is, Hatcher’s method serves as an INUS condition in a larger constellation of factors that together are sufficient for a positive result for a good many of the individuals in the study population. Do we know what the enabling factors are, that is, the factors that correspond to the presence of oxygen and inflammable material and the absence of a sprinkler in Mackie’s example? Not necessarily. General educational knowledge may tell us something, but enablers are also contextual. Examples of possible enablers include student motivation, parental support (important if the method requires homework), adequate materials, a separate room, and sufficient time. Maybe the program requires a teacher’s assistant?
The enablers are factors that X requires to bring about or improve Y; if they are missing, X might not be able to do its work.

Understanding X as an INUS condition adds quite a lot of complexity to the simple X–Y picture and may thus alleviate at least some of the critics’ fear that EBP is inherently reductionist and oversimplified. EBP is at heart causal, but that does not entail a deterministic, simplistic, or physical understanding of causality. Rather, I have argued, to do justice to EBP in education we must understand its causal nature as both complex and sophisticated. We should also note that X can enter into different constellations. The enablers in one context need not be the same as the enablers in another. In fact, we should expect them to differ, simply because contexts differ.

Evidence and Its Uses

Evidence is an epistemological concept. In its immediate vicinity we find such concepts as justification, support, hypotheses, reasons, grounds, truth, confirmation, disconfirmation, and falsification, among others. It is often unclear what people take evidence and its function to be. In epistemology, evidence is that which serves to confirm or disconfirm a hypothesis (claim, belief, theory; Achinstein, 2001; Kelly, 2008). The basic function of evidence is thus summed up in the word “support”: evidence is something that stands in a relation of support (confirmation, disconfirmation) to a claim or hypothesis and provides us with good reason to believe that the claim is true (or false). The question of what can count as evidence is the question of what kind of stuff can enter into such evidential relations with a claim. In EBP this question is controversial, and the debate usually takes the form of criticism of evidence hierarchies. The standard criticisms are that such hierarchies unduly privilege certain forms of knowledge and research design (Oancea & Pring, 2008), undervalue the contribution of other research perspectives (Pawson, 2012), and undervalue professional experience and judgment (Hammersley, 1997, 2004). It is, however, not of much use to discuss evidence in and of itself; we must look at what we want evidence for. Evidence is that which can perform a support function, and it includes all sorts of data, facts, personal experiences, and even physical traces and objects. In murder mysteries, bloody footprints, knives, and witness observations count as evidence for or against the hypothesis that the butler did it. In everyday life, a face covered in ice cream is evidence of who ate the dessert before dinner.