
hypothesis-generating method

Quick reference.

A data-structuring technique, such as a classification and ordination method which, by grouping and ranking data, suggests possible relationships with other factors (i.e. generates an hypothesis). Appropriate data may then be collected to test the hypothesis statistically.

From: hypothesis-generating method in A Dictionary of Zoology

Subjects: Science and technology — Life Sciences


- Perspective

- Published: 10 January 2012

Machine learning and data mining: strategies for hypothesis generation

- M A Oquendo 1 ,

- E Baca-Garcia 1 , 2 ,

- A Artés-Rodríguez 3 ,

- F Perez-Cruz 3 , 4 ,

- H C Galfalvy 1 ,

- H Blasco-Fontecilla 2 ,

- D Madigan 5 &

- N Duan 1 , 6

Molecular Psychiatry volume 17, pages 956–959 (2012)

5413 Accesses

60 Citations

6 Altmetric

Metrics details

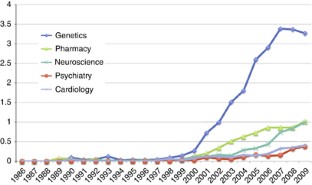

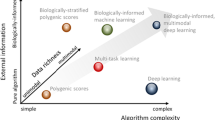

- Data mining

- Machine learning

- Neurological models

Strategies for generating knowledge in medicine have included observation of associations in clinical or research settings and, more recently, development of pathophysiological models based on molecular biology. Although critically important, they limit hypothesis generation to an incremental pace. Machine learning and data mining are alternative approaches to identifying new vistas to pursue, as is already evident in the literature. In concert with these analytic strategies, novel approaches to data collection can enhance the hypothesis pipeline as well. In data farming, data are obtained in an ‘organic’ way, in the sense that they are entered by patients themselves and are available for harvesting. In contrast, in evidence farming (EF), it is the provider who enters medical data about individual patients. EF differs from regular electronic medical record systems because frontline providers can use it to learn from their own past experience. In addition to the possibility of generating large databases with farming approaches, it is likely that we can further harness the power of large data sets collected using either farming or more standard techniques through implementation of data-mining and machine-learning strategies. Exploiting large databases to develop new hypotheses regarding neurobiological and genetic underpinnings of psychiatric illness is useful in itself, but also affords the opportunity to identify novel mechanisms to be targeted in drug discovery and development.



Acknowledgements

Dr Blasco-Fontecilla acknowledges the Spanish Ministry of Health (Rio Hortega CM08/00170), Alicia Koplowitz Foundation, and Conchita Rabago Foundation for funding his post-doctoral rotation at CHRU, Montpellier, France. SAF2010-21849.

Author information

Authors and affiliations.

Department of Psychiatry, New York State Psychiatric Institute and Columbia University, New York, NY, USA

M A Oquendo, E Baca-Garcia, H C Galfalvy & N Duan

Fundacion Jimenez Diaz and Universidad Autonoma, CIBERSAM, Madrid, Spain

E Baca-Garcia & H Blasco-Fontecilla

Department of Signal Theory and Communications, Universidad Carlos III de Madrid, Madrid, Spain

A Artés-Rodríguez & F Perez-Cruz

Princeton University, Princeton, NJ, USA

F Perez-Cruz

Department of Statistics, Columbia University, New York, NY, USA

Department of Biostatistics, Columbia University, New York, NY, USA


Corresponding author

Correspondence to M A Oquendo.

Ethics declarations

Competing interests.

Dr Oquendo has received unrestricted educational grants and/or lecture fees from Astra-Zeneca, Bristol Myers Squibb, Eli Lilly, Janssen, Otsuka, Pfizer, Sanofi-Aventis and Shire. Her family owns stock in Bristol Myers Squibb. The remaining authors declare no conflict of interest.


About this article

Cite this article.

Oquendo, M., Baca-Garcia, E., Artés-Rodríguez, A. et al. Machine learning and data mining: strategies for hypothesis generation. Mol Psychiatry 17, 956–959 (2012). https://doi.org/10.1038/mp.2011.173

Received: 15 July 2011

Revised: 20 October 2011

Accepted: 21 November 2011

Published: 10 January 2012

Issue Date: October 2012

DOI: https://doi.org/10.1038/mp.2011.173


- data farming

- inductive reasoning


Clinical Decision-Making Strategies

, MD, PhD, Cleveland Clinic Lerner College of Medicine at Case Western Reserve University

Hypothesis Generation


One of the most commonly used strategies for medical decision making mirrors the scientific method of hypothesis generation followed by hypothesis testing. Diagnostic hypotheses are accepted or rejected based on testing.

Clinicians often use vague terms such as “highly likely,” “improbable,” and “cannot rule out” to describe the likelihood of disease. Both clinicians and patients may misinterpret such semiquantitative terms; explicit statistical terminology should be used instead, if and when available. Mathematical computations assist clinical decision making and, even when exact numbers are unavailable, can better define clinical probabilities and narrow the list of hypothetical diseases further.

Probability and odds

The probability of a disease (or event) occurring in a patient whose clinical information is unknown is the frequency with which that disease or event occurs in a population. Probabilities range from 0.0 (impossible) to 1.0 (certain) and are often expressed as percentages (from 0 to 100). A disease that occurs in 2 of 10 patients has a probability of 2/10 (0.2 or 20%). Rounding very small probabilities to 0, thus excluding all possibility of disease (sometimes done in implicit clinical reasoning), can lead to erroneous conclusions when quantitative methods are used.

Odds represent the ratio of affected to unaffected patients (ie, the ratio of disease to no disease). Thus, a disease that occurs in 2 of 10 patients (probability of 2/10) has odds of 2/8 (0.25, often expressed as 1 to 4). Odds ( Ω ) and probabilities (p) can be converted one to the other, as in Ω = p/(1 − p) or p = Ω /(1 + Ω ).
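The two conversion formulas above can be sketched in a few lines of Python. This is only an illustration: the function names `probability_to_odds` and `odds_to_probability` are hypothetical, and `Fraction` is used so that the 2-in-10 example stays exact.

```python
from fractions import Fraction


def probability_to_odds(p):
    """Convert a probability p (0 <= p < 1) to odds: p / (1 - p)."""
    if not 0 <= p < 1:
        raise ValueError("probability must satisfy 0 <= p < 1")
    return p / (1 - p)


def odds_to_probability(odds):
    """Convert odds (>= 0) back to a probability: odds / (1 + odds)."""
    if odds < 0:
        raise ValueError("odds must be non-negative")
    return odds / (1 + odds)


# The example from the text: a disease that occurs in 2 of 10 patients.
p = Fraction(2, 10)
odds = probability_to_odds(p)       # 2 affected to 8 unaffected, i.e. 1 to 4
back = odds_to_probability(odds)    # round trip returns the original probability

print(odds, back)
```

A probability of exactly 1 is rejected because the corresponding odds are undefined (division by zero).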

The initial differential diagnosis based on chief complaint and demographics is often large, so the clinician first generates and filters the hypothetical possibilities by obtaining the detailed history and doing a directed physical examination to support or refute suspected diagnoses. For instance, in a patient with chest pain, a history of leg pain and a swollen, tender leg detected during examination increases the probability of pulmonary embolism.

It may seem intuitive that the sum of probabilities of all diagnostic possibilities should equal nearly 100% and that a single diagnosis can be derived from a complex array of symptoms and signs. However, applying the principle that the best explanation for a complex situation involves a single cause (often referred to as Occam's razor) can lead clinicians astray. Rigid application of this principle discounts the possibility that a patient may have more than one active disease. For example, a dyspneic patient with known chronic obstructive pulmonary disease (COPD) may be presumed to be having an exacerbation of COPD but may also be suffering from a pulmonary embolism or heart failure.

Even when diagnosis is uncertain, testing is not always useful. A test should be done only if its results will affect management. When disease pre-test probability is above a certain threshold, treatment is warranted (the treatment threshold) and testing may not be indicated.

Below the treatment threshold, testing is indicated when a positive test result would raise the post-test probability above the treatment threshold. The lowest pre-test probability at which this can occur depends on test characteristics and is termed the testing threshold. The testing threshold is discussed in greater detail elsewhere.

The disease probability at and above which treatment is given and no further testing is warranted is termed the treatment threshold (TT).

The above hypothetical example of a patient with chest pain converged on a near-certain diagnosis (98% probability). When diagnosis of a disease is certain, the decision to treat is a straightforward determination of whether there is a benefit of treatment (compared with no treatment, and taking into account the potential adverse effects of treatment). When the diagnosis has some degree of uncertainty, as is almost always the case, the decision to treat also must balance the benefit of treating a sick person against the risk of erroneously treating a well person or a person with a different disorder; benefit and risk encompass financial, social, and medical consequences. This balance must take into account both the likelihood of disease and the magnitude of the benefit and risk. This balance determines where the clinician sets the treatment threshold.

[Figure: Variation of treatment threshold (TT) with risk of treatment.]

Quantitatively, the treatment threshold can be described as the point at which probability of disease (p) times benefit of treating a person with disease (B) equals probability of no disease (1 − p) times risk of treating a person without disease (R). Thus, at the treatment threshold

p × B = (1 − p) × R

Solving for p, this equation reduces to

p = R/(B + R)

From this equation, it is apparent that if B (benefit) and R (risk) are the same, the treatment threshold becomes 1/(1 + 1) = 0.5, which means that when the probability of disease is > 50%, clinicians would treat, and when probability is < 50%, clinicians would not treat.
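For readers who want the intermediate algebra, the step from the balance equation to the threshold formula can be written out as follows, using the same symbols p, B, and R as above:

```latex
\begin{aligned}
p \times B &= (1 - p) \times R \\
pB &= R - pR \\
pB + pR &= R \\
p\,(B + R) &= R \\
p &= \frac{R}{B + R}
\end{aligned}
```

Setting B = R in the last line gives p = R/(2R) = 0.5, matching the equal-benefit-and-risk case described above.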

For a clinical example, a patient with chest pain can be considered. How high should the clinical likelihood of acute myocardial infarction (MI) be before thrombolytic therapy should be given, assuming the only risk considered is short-term mortality? If it is postulated (for illustration) that mortality due to intracranial hemorrhage with thrombolytic therapy is 1%, then 1% is R, the fatality rate of mistakenly treating a patient who does not have an MI. If net mortality in patients with MI is decreased by 3% with thrombolytic therapy, then 3% is B. Then, treatment threshold is 1/(3 + 1), or 25%; thus, treatment should be given if the probability of acute MI is > 25%.

Alternatively, the treatment threshold equation can be rearranged to show that the treatment threshold is the point at which the odds of disease p/(1 − p) equal the risk:benefit ratio (R/B). The same numerical result is obtained as in the previously described example, with the treatment threshold occurring at the odds of the risk:benefit ratio (1/3); 1/3 odds corresponds to the previously obtained probability of 25% (see probability and odds).
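The thrombolytic example can be checked numerically with a short sketch. The function names `treatment_threshold` and `threshold_odds` are hypothetical; the inputs are the illustrative 3% benefit and 1% risk postulated in the text, held as exact fractions.

```python
from fractions import Fraction


def treatment_threshold(benefit, risk):
    """Probability of disease at which p * B equals (1 - p) * R, i.e. R / (B + R)."""
    if benefit < 0 or risk < 0 or benefit + risk == 0:
        raise ValueError("benefit and risk must be non-negative and not both zero")
    return risk / (benefit + risk)


def threshold_odds(benefit, risk):
    """Odds of disease at the treatment threshold: the risk:benefit ratio R / B."""
    if benefit <= 0:
        raise ValueError("benefit must be positive")
    return risk / benefit


# Values postulated in the text for illustration: B = 3%, R = 1%.
B = Fraction(3, 100)
R = Fraction(1, 100)

tt = treatment_threshold(B, R)   # 1/4
odds = threshold_odds(B, R)      # 1/3

# The probability and odds formulations agree: odds / (1 + odds) equals the threshold.
assert odds / (1 + odds) == tt
print(float(tt))  # 0.25
```

Only the ratio of R to B matters, so expressing both as percentages (1 and 3) or as proportions (0.01 and 0.03) gives the same threshold.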

Limitations of quantitative decision methods

Quantitative clinical decision making seems precise, but because many elements in the calculations (eg, pre-test probability) are often imprecisely known (if they are known at all), this methodology is difficult to use in all but the most well-defined and studied clinical situations. In addition, the patient's philosophy regarding medical care (ie, tolerance of risk and uncertainty) also needs to be taken into account in a shared decision-making process. For instance, although clinical guidelines do not recommend starting a lifelong course of urate-lowering drugs after a first attack of gout, some patients prefer to begin such treatment immediately because they strongly want to avoid a second attack.


- Browse content in J - Labor and Demographic Economics

- Browse content in J0 - General

- J00 - General

- J01 - Labor Economics: General

- J08 - Labor Economics Policies

- Browse content in J1 - Demographic Economics

- J10 - General

- J12 - Marriage; Marital Dissolution; Family Structure; Domestic Abuse

- J13 - Fertility; Family Planning; Child Care; Children; Youth

- J14 - Economics of the Elderly; Economics of the Handicapped; Non-Labor Market Discrimination

- J15 - Economics of Minorities, Races, Indigenous Peoples, and Immigrants; Non-labor Discrimination

- J16 - Economics of Gender; Non-labor Discrimination

- J18 - Public Policy

- Browse content in J2 - Demand and Supply of Labor

- J20 - General

- J21 - Labor Force and Employment, Size, and Structure

- J22 - Time Allocation and Labor Supply

- J23 - Labor Demand

- J24 - Human Capital; Skills; Occupational Choice; Labor Productivity

- Browse content in J3 - Wages, Compensation, and Labor Costs

- J30 - General

- J31 - Wage Level and Structure; Wage Differentials

- J33 - Compensation Packages; Payment Methods

- J38 - Public Policy

- Browse content in J4 - Particular Labor Markets

- J40 - General

- J42 - Monopsony; Segmented Labor Markets

- J44 - Professional Labor Markets; Occupational Licensing

- J45 - Public Sector Labor Markets

- J48 - Public Policy

- J49 - Other

- Browse content in J5 - Labor-Management Relations, Trade Unions, and Collective Bargaining

- J50 - General

- J51 - Trade Unions: Objectives, Structure, and Effects

- J53 - Labor-Management Relations; Industrial Jurisprudence

- Browse content in J6 - Mobility, Unemployment, Vacancies, and Immigrant Workers

- J60 - General

- J61 - Geographic Labor Mobility; Immigrant Workers

- J62 - Job, Occupational, and Intergenerational Mobility

- J63 - Turnover; Vacancies; Layoffs

- J64 - Unemployment: Models, Duration, Incidence, and Job Search

- J65 - Unemployment Insurance; Severance Pay; Plant Closings

- J68 - Public Policy

- Browse content in J7 - Labor Discrimination

- J71 - Discrimination

- J78 - Public Policy

- Browse content in J8 - Labor Standards: National and International

- J81 - Working Conditions

- J88 - Public Policy

- Browse content in K - Law and Economics

- Browse content in K0 - General

- K00 - General

- Browse content in K1 - Basic Areas of Law

- K14 - Criminal Law

- K2 - Regulation and Business Law

- Browse content in K3 - Other Substantive Areas of Law

- K31 - Labor Law

- Browse content in K4 - Legal Procedure, the Legal System, and Illegal Behavior

- K40 - General

- K41 - Litigation Process

- K42 - Illegal Behavior and the Enforcement of Law

- Browse content in L - Industrial Organization

- Browse content in L0 - General

- L00 - General

- Browse content in L1 - Market Structure, Firm Strategy, and Market Performance

- L10 - General

- L11 - Production, Pricing, and Market Structure; Size Distribution of Firms

- L13 - Oligopoly and Other Imperfect Markets

- L14 - Transactional Relationships; Contracts and Reputation; Networks

- L15 - Information and Product Quality; Standardization and Compatibility

- L16 - Industrial Organization and Macroeconomics: Industrial Structure and Structural Change; Industrial Price Indices

- L19 - Other

- Browse content in L2 - Firm Objectives, Organization, and Behavior

- L21 - Business Objectives of the Firm

- L22 - Firm Organization and Market Structure

- L23 - Organization of Production

- L24 - Contracting Out; Joint Ventures; Technology Licensing

- L25 - Firm Performance: Size, Diversification, and Scope

- L26 - Entrepreneurship

- Browse content in L3 - Nonprofit Organizations and Public Enterprise

- L33 - Comparison of Public and Private Enterprises and Nonprofit Institutions; Privatization; Contracting Out

- Browse content in L4 - Antitrust Issues and Policies

- L40 - General

- L41 - Monopolization; Horizontal Anticompetitive Practices

- L42 - Vertical Restraints; Resale Price Maintenance; Quantity Discounts

- Browse content in L5 - Regulation and Industrial Policy

- L50 - General

- L51 - Economics of Regulation

- Browse content in L6 - Industry Studies: Manufacturing

- L60 - General

- L62 - Automobiles; Other Transportation Equipment; Related Parts and Equipment

- L63 - Microelectronics; Computers; Communications Equipment

- L66 - Food; Beverages; Cosmetics; Tobacco; Wine and Spirits

- Browse content in L7 - Industry Studies: Primary Products and Construction

- L71 - Mining, Extraction, and Refining: Hydrocarbon Fuels

- L73 - Forest Products

- Browse content in L8 - Industry Studies: Services

- L81 - Retail and Wholesale Trade; e-Commerce

- L83 - Sports; Gambling; Recreation; Tourism

- L84 - Personal, Professional, and Business Services

- L86 - Information and Internet Services; Computer Software

- Browse content in L9 - Industry Studies: Transportation and Utilities

- L91 - Transportation: General

- L93 - Air Transportation

- L94 - Electric Utilities

- Browse content in M - Business Administration and Business Economics; Marketing; Accounting; Personnel Economics

- Browse content in M1 - Business Administration

- M11 - Production Management

- M12 - Personnel Management; Executives; Executive Compensation

- M14 - Corporate Culture; Social Responsibility

- Browse content in M2 - Business Economics

- M21 - Business Economics

- Browse content in M3 - Marketing and Advertising

- M31 - Marketing

- M37 - Advertising

- Browse content in M4 - Accounting and Auditing

- M42 - Auditing

- M48 - Government Policy and Regulation

- Browse content in M5 - Personnel Economics

- M50 - General

- M51 - Firm Employment Decisions; Promotions

- M52 - Compensation and Compensation Methods and Their Effects

- M53 - Training

- M54 - Labor Management

- Browse content in N - Economic History

- Browse content in N0 - General

- N00 - General

- N01 - Development of the Discipline: Historiographical; Sources and Methods

- Browse content in N1 - Macroeconomics and Monetary Economics; Industrial Structure; Growth; Fluctuations

- N10 - General, International, or Comparative

- N11 - U.S.; Canada: Pre-1913

- N12 - U.S.; Canada: 1913-

- N13 - Europe: Pre-1913

- N17 - Africa; Oceania

- Browse content in N2 - Financial Markets and Institutions

- N20 - General, International, or Comparative

- N22 - U.S.; Canada: 1913-

- N23 - Europe: Pre-1913

- Browse content in N3 - Labor and Consumers, Demography, Education, Health, Welfare, Income, Wealth, Religion, and Philanthropy

- N30 - General, International, or Comparative

- N31 - U.S.; Canada: Pre-1913

- N32 - U.S.; Canada: 1913-

- N33 - Europe: Pre-1913

- N34 - Europe: 1913-

- N36 - Latin America; Caribbean

- N37 - Africa; Oceania

- Browse content in N4 - Government, War, Law, International Relations, and Regulation

- N40 - General, International, or Comparative

- N41 - U.S.; Canada: Pre-1913

- N42 - U.S.; Canada: 1913-

- N43 - Europe: Pre-1913

- N44 - Europe: 1913-

- N45 - Asia including Middle East

- N47 - Africa; Oceania

- Browse content in N5 - Agriculture, Natural Resources, Environment, and Extractive Industries

- N50 - General, International, or Comparative

- N51 - U.S.; Canada: Pre-1913

- Browse content in N6 - Manufacturing and Construction

- N63 - Europe: Pre-1913

- Browse content in N7 - Transport, Trade, Energy, Technology, and Other Services

- N71 - U.S.; Canada: Pre-1913

- Browse content in N8 - Micro-Business History

- N82 - U.S.; Canada: 1913-

- Browse content in N9 - Regional and Urban History

- N91 - U.S.; Canada: Pre-1913

- N92 - U.S.; Canada: 1913-

- N93 - Europe: Pre-1913

- N94 - Europe: 1913-

- Browse content in O - Economic Development, Innovation, Technological Change, and Growth

- Browse content in O1 - Economic Development

- O10 - General

- O11 - Macroeconomic Analyses of Economic Development

- O12 - Microeconomic Analyses of Economic Development

- O13 - Agriculture; Natural Resources; Energy; Environment; Other Primary Products

- O14 - Industrialization; Manufacturing and Service Industries; Choice of Technology

- O15 - Human Resources; Human Development; Income Distribution; Migration

- O16 - Financial Markets; Saving and Capital Investment; Corporate Finance and Governance

- O17 - Formal and Informal Sectors; Shadow Economy; Institutional Arrangements

- O18 - Urban, Rural, Regional, and Transportation Analysis; Housing; Infrastructure

- O19 - International Linkages to Development; Role of International Organizations

- Browse content in O2 - Development Planning and Policy

- O23 - Fiscal and Monetary Policy in Development

- O25 - Industrial Policy

- Browse content in O3 - Innovation; Research and Development; Technological Change; Intellectual Property Rights

- O30 - General

- O31 - Innovation and Invention: Processes and Incentives

- O32 - Management of Technological Innovation and R&D

- O33 - Technological Change: Choices and Consequences; Diffusion Processes

- O34 - Intellectual Property and Intellectual Capital

- O38 - Government Policy

- Browse content in O4 - Economic Growth and Aggregate Productivity

- O40 - General

- O41 - One, Two, and Multisector Growth Models

- O43 - Institutions and Growth

- O44 - Environment and Growth

- O47 - Empirical Studies of Economic Growth; Aggregate Productivity; Cross-Country Output Convergence

- Browse content in O5 - Economywide Country Studies

- O52 - Europe

- O53 - Asia including Middle East

- O55 - Africa

- Browse content in P - Economic Systems

- Browse content in P0 - General

- P00 - General

- Browse content in P1 - Capitalist Systems

- P10 - General

- P16 - Political Economy

- P17 - Performance and Prospects

- P18 - Energy: Environment

- Browse content in P2 - Socialist Systems and Transitional Economies

- P26 - Political Economy; Property Rights

- Browse content in P3 - Socialist Institutions and Their Transitions

- P37 - Legal Institutions; Illegal Behavior

- Browse content in P4 - Other Economic Systems

- P48 - Political Economy; Legal Institutions; Property Rights; Natural Resources; Energy; Environment; Regional Studies

- Browse content in P5 - Comparative Economic Systems

- P51 - Comparative Analysis of Economic Systems

- Browse content in Q - Agricultural and Natural Resource Economics; Environmental and Ecological Economics

- Browse content in Q1 - Agriculture

- Q10 - General

- Q12 - Micro Analysis of Farm Firms, Farm Households, and Farm Input Markets

- Q13 - Agricultural Markets and Marketing; Cooperatives; Agribusiness

- Q14 - Agricultural Finance

- Q15 - Land Ownership and Tenure; Land Reform; Land Use; Irrigation; Agriculture and Environment

- Q16 - R&D; Agricultural Technology; Biofuels; Agricultural Extension Services

- Browse content in Q2 - Renewable Resources and Conservation

- Q25 - Water

- Browse content in Q3 - Nonrenewable Resources and Conservation

- Q32 - Exhaustible Resources and Economic Development

- Q34 - Natural Resources and Domestic and International Conflicts

- Browse content in Q4 - Energy

- Q41 - Demand and Supply; Prices

- Q48 - Government Policy

- Browse content in Q5 - Environmental Economics

- Q50 - General

- Q51 - Valuation of Environmental Effects

- Q53 - Air Pollution; Water Pollution; Noise; Hazardous Waste; Solid Waste; Recycling

- Q54 - Climate; Natural Disasters; Global Warming

- Q56 - Environment and Development; Environment and Trade; Sustainability; Environmental Accounts and Accounting; Environmental Equity; Population Growth

- Q58 - Government Policy

- Browse content in R - Urban, Rural, Regional, Real Estate, and Transportation Economics

- Browse content in R0 - General

- R00 - General

- Browse content in R1 - General Regional Economics

- R11 - Regional Economic Activity: Growth, Development, Environmental Issues, and Changes

- R12 - Size and Spatial Distributions of Regional Economic Activity

- R13 - General Equilibrium and Welfare Economic Analysis of Regional Economies

- Browse content in R2 - Household Analysis

- R20 - General

- R23 - Regional Migration; Regional Labor Markets; Population; Neighborhood Characteristics

- R28 - Government Policy

- Browse content in R3 - Real Estate Markets, Spatial Production Analysis, and Firm Location

- R30 - General

- R31 - Housing Supply and Markets

- R38 - Government Policy

- Browse content in R4 - Transportation Economics

- R40 - General

- R41 - Transportation: Demand, Supply, and Congestion; Travel Time; Safety and Accidents; Transportation Noise

- R48 - Government Pricing and Policy

- Browse content in Z - Other Special Topics

- Browse content in Z1 - Cultural Economics; Economic Sociology; Economic Anthropology

- Z10 - General

- Z12 - Religion

- Z13 - Economic Sociology; Economic Anthropology; Social and Economic Stratification

- Advance Articles

- Editor's Choice

- Author Guidelines

- Submission Site

- Open Access Options

- Self-Archiving Policy

- Why Submit?

- About The Quarterly Journal of Economics

- Editorial Board

- Advertising and Corporate Services

- Journals Career Network

- Dispatch Dates

- Journals on Oxford Academic

- Books on Oxford Academic

Article Contents

- I. Introduction
- II. A Simple Framework for Discovery
- III. Application and Data
- IV. The Surprising Importance of the Face
- V. Algorithm-Human Communication
- VI. Evaluating These New Hypotheses
- VII. Conclusion
- Data Availability

Machine Learning as a Tool for Hypothesis Generation *

Jens Ludwig, Sendhil Mullainathan, Machine Learning as a Tool for Hypothesis Generation, The Quarterly Journal of Economics, Volume 139, Issue 2, May 2024, Pages 751–827, https://doi.org/10.1093/qje/qjad055

While hypothesis testing is a highly formalized activity, hypothesis generation remains largely informal. We propose a systematic procedure to generate novel hypotheses about human behavior, which uses the capacity of machine learning algorithms to notice patterns people might not. We illustrate the procedure with a concrete application: judge decisions about whom to jail. We begin with a striking fact: the defendant’s face alone matters greatly for the judge’s jailing decision. In fact, an algorithm given only the pixels in the defendant’s mug shot accounts for up to half of the predictable variation. We develop a procedure that allows human subjects to interact with this black-box algorithm to produce hypotheses about what in the face influences judge decisions. The procedure generates hypotheses that are both interpretable and novel: they are not explained by demographics (e.g., race) or existing psychology research, nor are they already known (even if tacitly) to people or experts. Though these results are specific, our procedure is general. It provides a way to produce novel, interpretable hypotheses from any high-dimensional data set (e.g., cell phones, satellites, online behavior, news headlines, corporate filings, and high-frequency time series). A central tenet of our article is that hypothesis generation is a valuable activity, and we hope this encourages future work in this largely “prescientific” stage of science.

Science is curiously asymmetric. New ideas are meticulously tested using data, statistics, and formal models. Yet those ideas originate in a notably less meticulous process involving intuition, inspiration, and creativity. The asymmetry between how ideas are generated versus tested is noteworthy because idea generation is also, at its core, an empirical activity. Creativity begins with “data” (albeit data stored in the mind), which are then “analyzed” (through a purely psychological process of pattern recognition). What feels like inspiration is actually the output of a data analysis run by the human brain. Despite this, idea generation largely happens off stage, something that typically happens before “actual science” begins. 1 Things are likely this way because there is no obvious alternative. The creative process is so human and idiosyncratic that it would seem to resist formalism.

That may be about to change because of two developments. First, human cognition is no longer the only way to notice patterns in the world. Machine learning algorithms can also find patterns, including patterns people might not notice themselves. These algorithms can work not just with structured, tabular data but also with the kinds of inputs that traditionally could only be processed by the mind, like images or text. Second, data on human behavior is exploding: second-by-second price and volume data in asset markets, high-frequency cellphone data on location and usage, CCTV camera and police bodycam footage, news stories, children’s books, the entire text of corporate filings, and so on. The kind of information researchers once relied on for inspiration is now machine readable: what was once solely mental data is increasingly becoming actual data. 2

We suggest that these changes can be leveraged to expand how hypotheses are generated. Currently, researchers do of course look at data to generate hypotheses, as in exploratory data analysis, but this depends on the idiosyncratic creativity of investigators who must decide what statistics to calculate. In contrast, we suggest capitalizing on the capacity of machine learning algorithms to automatically detect patterns, especially ones people might never have considered. A key challenge is that we require hypotheses that are interpretable to people. One important goal of science is to generalize knowledge to new contexts. Predictive patterns in a single data set alone are rarely useful; they become insightful when they can be generalized. Currently, that generalization is done by people, and people can only generalize things they understand. The predictors produced by machine learning algorithms are, however, notoriously opaque—hard-to-decipher “black boxes.” We propose a procedure that integrates these algorithms into a pipeline that results in human-interpretable hypotheses that are both novel and testable.

While our procedure is broadly applicable, we illustrate it in a concrete application: judicial decision making. Specifically we study pretrial decisions about which defendants are jailed versus set free awaiting trial, a decision that by law is supposed to hinge on a prediction of the defendant’s risk (Dobbie and Yang 2021). 3 This is also a substantively interesting application in its own right because of the high stakes involved and mounting evidence that judges make these decisions less than perfectly (Kleinberg et al. 2018; Rambachan et al. 2021; Angelova, Dobbie, and Yang 2023).

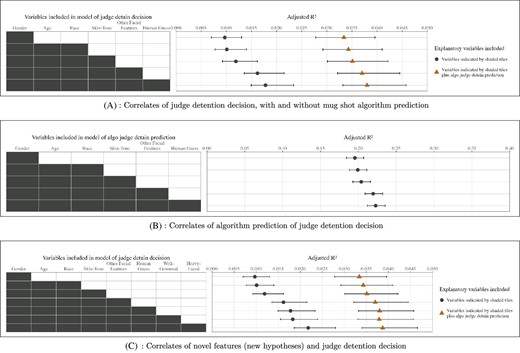

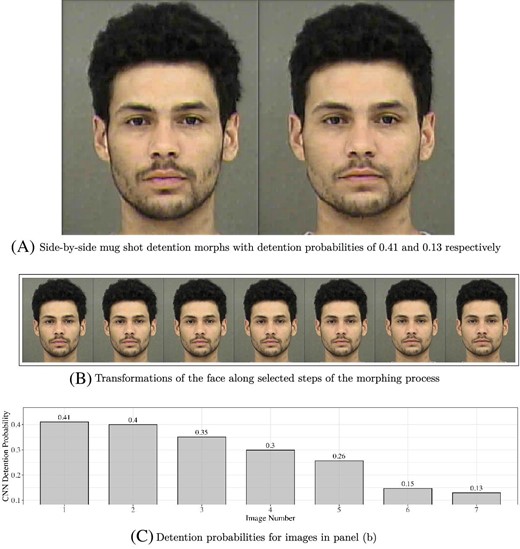

We begin with a striking fact. When we build a deep learning model of the judge (one that predicts whether the judge will detain a given defendant), a single factor emerges as having large explanatory power: the defendant’s face. A predictor that uses only the pixels in the defendant’s mug shot explains from one-quarter to nearly one-half of the predictable variation in detention. 4 Defendants whose mug shots fall in the top quartile of predicted detention are 20.4 percentage points more likely to be jailed than those in the bottom quartile. By comparison, the difference in detention rates between those arrested for violent versus nonviolent crimes is 4.8 percentage points. Notice what this finding is and is not. We are not claiming the mug shot predicts defendant behavior; that would be the long-discredited field of phrenology (Schlag 1997). We instead claim the mug shot predicts judge behavior: how the defendant looks correlates strongly with whether the judge chooses to jail them. 5
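The quartile comparison described above can be illustrated with a short sketch. This is not the authors' code: the function name `quartile_gap` and the toy inputs are assumptions made only for illustration. The idea is to rank cases by the model's predicted detention probability and compare observed detention rates in the top and bottom quarters of that ranking.

```python
from typing import Sequence


def quartile_gap(predicted: Sequence[float], detained: Sequence[int]) -> float:
    """Top-quartile minus bottom-quartile detention rate, in percentage points.

    `predicted` holds a model's predicted detention probabilities and
    `detained` the observed 0/1 judge decisions (both hypothetical inputs).
    """
    if len(predicted) != len(detained):
        raise ValueError("inputs must have the same length")
    if len(predicted) < 4:
        raise ValueError("need at least four cases to form quartiles")

    # Order cases from lowest to highest predicted detention probability.
    order = sorted(range(len(predicted)), key=lambda i: predicted[i])
    k = len(order) // 4  # number of cases in each extreme quartile

    bottom = [detained[i] for i in order[:k]]
    top = [detained[i] for i in order[-k:]]

    def rate(xs):
        return sum(xs) / len(xs)

    return 100.0 * (rate(top) - rate(bottom))


# Toy data, invented purely for illustration (not from the article).
toy_predicted = [0.05, 0.10, 0.15, 0.20, 0.40, 0.45, 0.70, 0.80]
toy_detained = [0, 0, 0, 1, 0, 1, 1, 1]
toy_gap = quartile_gap(toy_predicted, toy_detained)
```

A positive gap means that cases the model ranks as more likely to be detained are in fact detained more often, which is the direction of the 20.4 percentage point difference reported in the text.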

Has the algorithm found something new in the pixels of the mug shot or simply rediscovered something long known or intuitively understood? After all, psychologists have been studying people’s reactions to faces for at least 100 years (Todorov et al. 2015; Todorov and Oh 2021), while economists have shown that judges are influenced by factors (like race) that can be seen from someone’s face (Arnold, Dobbie, and Yang 2018; Arnold, Dobbie, and Hull 2020). When we control for age, gender, race, skin color, and even the facial features suggested by previous psychology research (dominance, trustworthiness, attractiveness, and competence), none of these factors (individually or jointly) meaningfully diminishes the algorithm’s predictive power (see Figure I, Panel A). It is perhaps worth noting that the algorithm on its own does rediscover some of the signal from these features: in fact, collectively these known features explain 22.3% of the variation in predicted detention (see Figure I, Panel B). The key point is that the algorithm has discovered a great deal more as well.

Figure I. Correlates of Judge Detention Decision and Algorithmic Prediction of Judge Decision

Panel A summarizes the explanatory power of a regression model in explaining judge detention decisions, controlling for the different explanatory variables indicated at left (shaded tiles), either on their own (dark circles) or together with the algorithmic prediction of the judge decisions (triangles). Each row represents a different regression specification. By “other facial features,” we mean variables that previous psychology research suggests matter for how faces influence people’s reactions to others (dominance, trustworthiness, competence, and attractiveness). Ninety-five percent confidence intervals around our R² estimates come from drawing 10,000 bootstrap samples from the validation data set. Panel B shows the relationship between the different explanatory variables as indicated at left by the shaded tiles with the algorithmic prediction itself as the outcome variable in the regressions. Panel C examines the correlation with judge decisions of the two novel hypotheses generated by our procedure about what facial features affect judge detention decisions: well-groomed and heavy-faced.
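The bootstrap confidence intervals mentioned in the figure note can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it uses a one-regressor R² (the squared correlation), a percentile interval, synthetic data, and far fewer draws than the 10,000 reported. The names `r_squared` and `bootstrap_r2_ci` are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def r_squared(x: Sequence[float], y: Sequence[float]) -> float:
    """R^2 from a one-variable least-squares fit of y on x (squared correlation)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    if sxx == 0 or syy == 0:
        return 0.0  # degenerate resample: no variation to explain
    return (sxy * sxy) / (sxx * syy)


def bootstrap_r2_ci(
    x: Sequence[float],
    y: Sequence[float],
    n_boot: int = 2000,
    alpha: float = 0.05,
    seed: int = 0,
) -> Tuple[float, float]:
    """Percentile bootstrap interval for R^2, resampling cases with replacement."""
    rng = random.Random(seed)
    n = len(x)
    stats: List[float] = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        stats.append(r_squared([x[i] for i in idx], [y[i] for i in idx]))
    stats.sort()
    lo = stats[int((alpha / 2) * n_boot)]
    hi = stats[min(n_boot - 1, int((1 - alpha / 2) * n_boot))]
    return lo, hi


# Synthetic validation set, generated only for illustration.
_rng = random.Random(1)
toy_x = [_rng.random() for _ in range(200)]             # stand-in predictions
toy_y = [1 if _rng.random() < p else 0 for p in toy_x]  # stand-in 0/1 decisions
point_r2 = r_squared(toy_x, toy_y)
ci_low, ci_high = bootstrap_r2_ci(toy_x, toy_y, n_boot=500)
```

Resampling whole cases keeps each prediction paired with its outcome, so the interval reflects sampling variability in the validation data rather than in model training.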

Perhaps we should control for something else? Figuring out that “something else” is itself a form of hypothesis generation. To avoid a possibly endless—and misleading—process of generating other controls, we take a different approach. We show mug shots to subjects and ask them to guess whom the judge will detain and incentivize them for accuracy. These guesses summarize the facial features people readily (if implicitly) believe influence jailing. Although subjects are modestly good at this task, the algorithm is much better. It remains highly predictive even after controlling for these guesses. The algorithm seems to have found something novel beyond what scientists have previously hypothesized and beyond whatever patterns people can even recognize in data (whether or not they can articulate them).

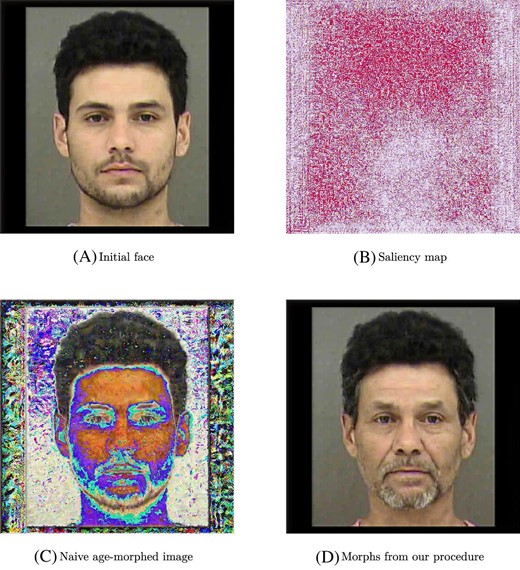

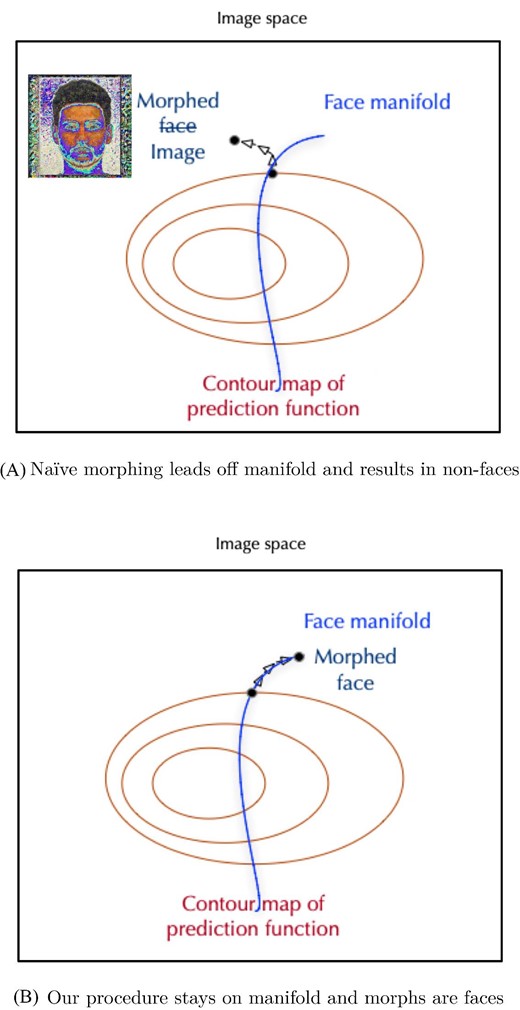

What, then, are the novel facial features the algorithm has discovered? If we are unable to answer that question, we will have simply replaced one black box (the judge’s mind) with another (an algorithmic model of the judge’s mind). We propose a solution whereby the algorithm can communicate what it “sees.” Specifically, our procedure begins with a mug shot and “morphs” it to create a mug shot that maximally increases (or decreases) the algorithm’s predicted detention probability. The result is pairs of synthetic mug shots that can be examined to understand and articulate what differs within the pairs. The algorithm discovers, and people name that discovery. In principle we could have just shown subjects actual mug shots with higher versus lower predicted detention odds. But faces are so rich that between any pair of actual mug shots, many things will happen to be different and most will be unrelated to detention (akin to the curse of dimensionality). Simply looking at pairs of actual faces can, as a result, lead to many spurious observations. Morphing creates counterfactual synthetic images that are as similar as possible except with respect to detention odds, to minimize extraneous differences and help focus on what truly matters for judge detention decisions.
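The morphing idea can be sketched in a toy setting. This section does not specify the mechanics, so everything below is an assumption made for illustration: a linear "generator" that maps a latent code to pixels, a logistic predictor on those pixels, and gradient steps in the latent code that raise or lower the predicted probability. The names `generate`, `predict`, and `morph` are hypothetical, and a real application would use a learned image generator and a deep network instead.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def generate(G: Matrix, z: Vector) -> Vector:
    """Toy linear 'generator': maps a latent code z to pixel values."""
    return [sum(g * zj for g, zj in zip(row, z)) for row in G]


def predict(w: Vector, b: float, pixels: Vector) -> float:
    """Toy logistic predictor of detention probability from pixels."""
    score = sum(wi * xi for wi, xi in zip(w, pixels)) + b
    return 1.0 / (1.0 + math.exp(-score))


def morph(G: Matrix, w: Vector, b: float, z: Vector,
          direction: int = 1, step: float = 0.5, n_steps: int = 10) -> Vector:
    """Take gradient steps in z that raise (+1) or lower (-1) the prediction."""
    # In this toy model d(score)/dz = G^T w, which does not depend on z.
    grad_score = [sum(G[i][j] * w[i] for i in range(len(w)))
                  for j in range(len(z))]
    z_new = list(z)
    for _ in range(n_steps):
        p = predict(w, b, generate(G, z_new))
        # Chain rule for the logistic link: dp/dz = p * (1 - p) * d(score)/dz.
        z_new = [zj + direction * step * p * (1.0 - p) * gj
                 for zj, gj in zip(z_new, grad_score)]
    return z_new


# Toy parameters, invented for illustration only.
G = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 2 latent dimensions -> 3 "pixels"
w = [0.5, -0.2, 0.3]
b = -0.1
z0 = [0.2, -0.4]

p_start = predict(w, b, generate(G, z0))
p_up = predict(w, b, generate(G, morph(G, w, b, z0, direction=+1)))
p_down = predict(w, b, generate(G, morph(G, w, b, z0, direction=-1)))
```

Starting both morphs from the same latent code is what keeps the resulting pair similar except along the direction the predictor cares about, which is the property the paragraph above emphasizes.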

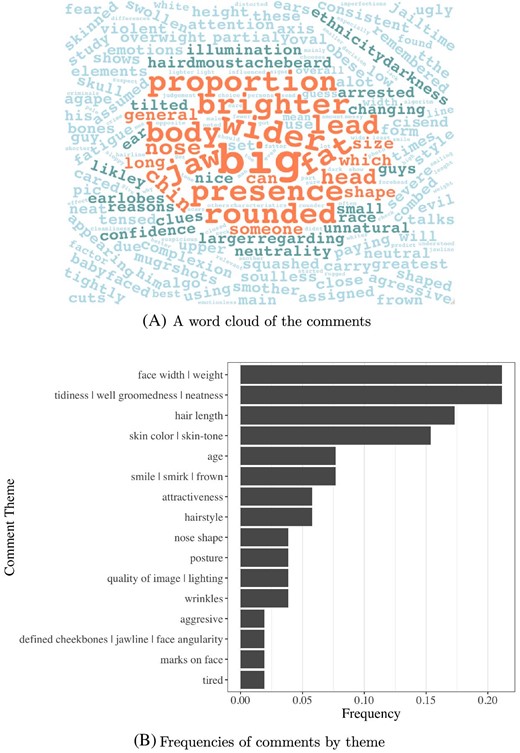

Importantly, we do not generate hypotheses by looking at the morphs ourselves; instead, they are shown to independent study subjects (MTurk or Prolific workers) in an experimental design. Specifically, we showed pairs of morphed images and asked participants to guess which image the algorithm predicts to have higher detention risk. Subjects were given both incentives and feedback, so they had motivation and opportunity to learn the underlying patterns. While subjects initially guess the judge’s decision correctly from these morphed mug shots at about the same rate as they do when looking at “raw data,” that is, actual mug shots (modestly above the 50% random guessing mark), they quickly learn from these morphed images what the algorithm is seeing and reach an accuracy of nearly 70%. At the end, participants are asked to put words to the differences they see across images in each pair, that is, to name what they think are the key facial features the algorithm is relying on to predict judge decisions. Comfortingly, there is substantial agreement on what subjects see: a sizable share of subjects all name the same feature. To verify whether the feature they identify is used by the algorithm, a separate sample of subjects independently coded mug shots for this new feature. We show that the new feature is indeed correlated with the algorithm’s predictions. What subjects think they’re seeing is indeed what the algorithm is also “seeing.”

Having discovered a single feature, we can iterate the procedure—the first feature explains only a fraction of what the algorithm has captured, suggesting there are many other factors to be discovered. We again produce morphs, but this time hold the first feature constant: that is, we orthogonalize so that the pairs of morphs do not differ on the first feature. When these new morphs are shown to subjects, they consistently name a second feature, which again correlates with the algorithm’s prediction. Both features are quite important. They explain a far larger share of what the algorithm sees than all the other variables (including race and skin color) besides gender. These results establish our main goals: show that the procedure produces meaningful communication, and that it can be iterated.
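One simple way to picture holding the first feature constant is to project it out of the direction along which images are morphed. The sketch below is an assumption for illustration, not the article's procedure: it treats both the morph direction and the first feature as vectors in a shared latent space and removes the component of one along the other. The names `dot` and `orthogonalize` and the toy vectors are hypothetical.

```python
from typing import List, Sequence


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def orthogonalize(direction: Sequence[float],
                  feature_direction: Sequence[float]) -> List[float]:
    """Remove from `direction` its component along `feature_direction`."""
    denom = dot(feature_direction, feature_direction)
    if denom == 0:
        return list(direction)  # nothing to project out
    coef = dot(direction, feature_direction) / denom
    return [d - coef * f for d, f in zip(direction, feature_direction)]


# Toy vectors, invented for illustration only.
morph_direction = [0.8, 0.1, 0.3]  # direction that raises the prediction
first_feature = [1.0, 0.0, 1.0]    # direction along which feature 1 varies
held_fixed = orthogonalize(morph_direction, first_feature)
```

Moving along `held_fixed` changes the prediction only through variation unrelated to the first feature (to first order in this linear picture), so any difference subjects notice in the new pairs must be something else.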

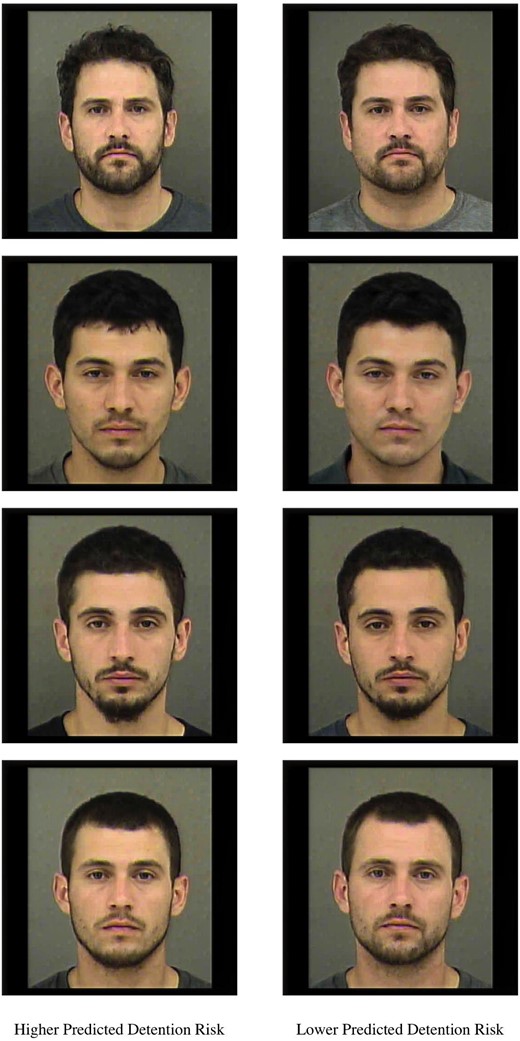

What are the two discovered features? The first can be called “well-groomed” (e.g., tidy, clean, groomed, versus unkept, disheveled, sloppy look), and the second can be called “heavy-faced” (e.g., wide facial shape, puffier face, wider face, rounder face, heavier). These features are not just predictive of what the algorithm sees, but also of what judges actually do (Figure I, Panel C). We find that both well-groomed and heavy-faced defendants are more likely to be released, even controlling for demographic features and known facial features from psychology. Detention rates of defendants in the top and bottom quartile of well-groomedness differ by 5.5 percentage points (24% of the base rate), while the top versus bottom quartile difference in heavy-facedness is 7 percentage points (about 30% of the base rate). Both differences are larger than the 4.8 percentage points detention rate difference between those arrested for violent versus nonviolent crimes. Not only are these magnitudes substantial, these hypotheses are novel even to practitioners who work in the criminal justice system (in a public defender’s office and a legal aid society).

Establishing whether these hypotheses are truly causally related to judge decisions is obviously beyond the scope of the present article. But we nonetheless present a few additional findings that are at least suggestive. These novel features do not appear to be simply proxies for factors like substance abuse, mental health, or socioeconomic status. Moreover, we carried out a lab experiment in which subjects are asked to make hypothetical pretrial release decisions as if they were a judge. They are shown information about criminal records (current charge, prior arrests) along with mug shots that are randomly morphed in the direction of higher or lower values of well-groomed (or heavy-faced). Subjects tend to detain those with higher-risk structured variables (criminal records), all else equal, suggesting they are taking the task seriously. These same subjects, though, are also more likely to detain defendants who are less heavy-faced or well-groomed, even though these were randomly assigned.

Ultimately, though, this is not a study about well-groomed or heavy-faced defendants, nor are its implications limited to faces or judges. It develops a general procedure that can be applied wherever behavior can be predicted using rich (especially high-dimensional) data. Development of such a procedure has required overcoming two key challenges.

First, to generate interpretable hypotheses, we must overcome the notorious black box nature of most machine learning algorithms. Unlike with a regression, one cannot simply inspect the coefficients. A modern deep-learning algorithm, for example, can have tens of millions of parameters. Noninspectability is especially problematic when the data are rich and high dimensional since the parameters are associated with primitives such as pixels. This problem of interpretation is fundamental and remains an active area of research. 6 Part of our procedure here draws on the recent literature in computer science that uses generative models to create counterfactual explanations. Most of those methods are designed for AI applications that seek to automate tasks humans do nearly perfectly, like image classification, where predictability of the outcome (is this image of a dog or a cat?) is typically quite high. 7 Interpretability techniques are used to ensure the algorithm is not picking up on spurious signal. 8 We developed our method, which has similar conceptual underpinnings to this existing literature, for social science applications where the outcome (human behavior) is typically more challenging to predict. 9 To what degree existing methods (as they currently stand or with some modification) could perform as well or better in social science applications like ours is a question we leave to future work.

Second, we must overcome what we might call the Rorschach test problem. Suppose we, the authors, were to look at these morphs and generate a hypothesis. We would not know if the procedure played any meaningful role. Perhaps the morphs, like ink blots, are merely canvases onto which we project our creativity. 10 Put differently, a single research team’s idiosyncratic judgments lack the kind of replicability we desire of a scientific procedure. To overcome this problem, it is key that we use independent (nonresearcher) subjects to inspect the morphs. The fact that a sizable share of subjects all name the same discovery suggests that human-algorithm communication has occurred and the procedure is replicable, rather than reflecting some unique spark of creativity.

At the same time, the fact that our procedure is not fully automatic implies that it will be shaped and constrained by people. Human participants are needed to name the discoveries. So whole new concepts that humans do not yet understand cannot be produced. Such breakthroughs clearly happen (e.g., gravity or probability) but are beyond the scope of procedures like ours. People also play a crucial role in curating the data the algorithm sees. Here, for example, we chose to include mug shots. The creative acquisition of rich data is an important human input into this hypothesis generation procedure. 11

Our procedure can be applied to a broad range of settings and will be particularly useful for data that are not already intrinsically interpretable. Many data sets contain a few variables that already have clear, fixed meanings and are unlikely to lead to novel discoveries. In contrast, images, text, and time series are rich high-dimensional data with many possible interpretations. Just as there is an ocean of plausible facial features, these sorts of data contain a large set of potential hypotheses that an algorithm can search through. Such data are increasingly available and used by economists, including news headlines, legislative deliberations, annual corporate reports, Federal Open Market Committee statements, Google searches, student essays, résumés, court transcripts, doctors’ notes, satellite images, housing photos, and medical images. Our procedure could, for example, raise hypotheses about what kinds of news lead to over- or underreaction of stock prices, which features of a job interview increase racial disparities, or what features of an X-ray drive misdiagnosis.

Central to this work is the belief that hypothesis generation is a valuable activity in and of itself. Beyond whatever the value might be of our specific procedure and empirical application, we hope these results also inspire greater attention to this traditionally “prescientific” stage of science.

We develop a simple framework to clarify the goals of hypothesis generation and how it differs from testing, how algorithms might help, and how our specific approach to algorithmic hypothesis generation differs from existing methods. 12

II.A. The Goals of Hypothesis Generation

What criteria should we use for assessing hypothesis generation procedures? Two common goals for hypothesis generation are ones that we ensure ex post. First is novelty. In our application, we aim to orthogonalize against known factors, recognizing that it may be hard to orthogonalize against all known hypotheses. Second, we require that hypotheses be testable (Popper 2002). But what can be tested is hard to define ex ante, in part because it depends on the specific hypothesis and the potential experimental setups. Creative empiricists over time often find ways to test hypotheses that previously seemed untestable. 13 To these, we add two more: interpretability and empirical plausibility.

What do we mean by empirically plausible? Let y be some outcome of interest, which for simplicity we assume is binary, and let h(x) be some hypothesis that maps the features of each instance, x, to [0,1]. By empirical plausibility we mean some correlation between y and h(x). Our ultimate aim is to uncover causal relationships. But causality can only be known after causal testing. That raises the question of how to come up with ideas worth causally testing, and how we would recognize them when we see them. Many true hypotheses need not be visible in raw correlations. Those can only be identified with background knowledge (e.g., theory). Other procedures would be required to surface those. Our focus here is on searching for true hypotheses that are visible in raw correlations. Of course not every correlation will turn out to be a true hypothesis, but even in those cases, generating such hypotheses and then invalidating them can be a valuable activity. Debunking spurious correlations has long been one of the most useful roles of empirical work. Understanding what confounders produce those correlations can also be useful.
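As a minimal sketch of this definition, the snippet below simulates a binary outcome y and two candidate hypotheses, then measures the correlation of each with y. Everything in it is an illustrative assumption rather than part of our empirical application: the two-feature instances, the data-generating process, and the names `h_first` and `h_second` are hypothetical.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


rng = random.Random(0)
n = 5000

# Each instance x has two features in [0, 1] (a hypothetical setup).
xs = [(rng.random(), rng.random()) for _ in range(n)]

# Assumed data-generating process: P(y = 1) rises with the first feature only.
ys = [1 if rng.random() < 0.2 + 0.6 * x[0] else 0 for x in xs]


def h_first(x):
    """Candidate hypothesis that maps x to [0, 1] using the first feature."""
    return x[0]


def h_second(x):
    """Candidate hypothesis that maps x to [0, 1] using the second feature."""
    return x[1]


corr_first = pearson(ys, [h_first(x) for x in xs])
corr_second = pearson(ys, [h_second(x) for x in xs])
print(f"corr(y, h_first(x))  = {corr_first:.3f}")
print(f"corr(y, h_second(x)) = {corr_second:.3f}")
```

Under these assumptions only `h_first` should show a sizable correlation with y, so only it would count as empirically plausible in the sense above. The correlation says nothing about causality, which would still require a separate test.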

We care about our final goal for hypothesis generation, interpretability, because science is largely about helping people make forecasts into new contexts, and people can only do that with hypotheses they meaningfully understand. Consider an uninterpretable hypothesis like “this set of defendants is more likely to be jailed than that set,” but we cannot articulate a reason why. From that hypothesis, nothing could be said about a new set of courtroom defendants. In contrast an interpretable hypothesis like “skin color affects detention” has implications for other samples of defendants and for entirely different settings. We could ask whether skin color also affects, say, police enforcement choices or whether these effects differ by time of day. By virtue of being interpretable, these hypotheses let us use a wider set of knowledge (police may share racial biases; skin color is not as easily detected at night). 14 Interpretable descriptions let us generalize to novel situations, in addition to being easier to communicate to key stakeholders and lending themselves to interpretable solutions.

II.B. Human versus Algorithmic Hypothesis Generation

Human hypothesis generation has the advantage of generating hypotheses that are interpretable. By construction, the ideas that humans come up with are understandable by humans. But as a procedure for generating new ideas, human creativity has the drawback of often being idiosyncratic and not necessarily replicable. A novel hypothesis is novel exactly because one person noticed it when many others did not. A large body of evidence shows that human judgments have a great deal of “noise.” It is not just that different people draw different conclusions from the same observations, but the same person may notice different things at different times (Kahneman, Sibony, and Sunstein 2022). A large body of psychology research shows that people typically are not able to introspect and understand why they notice specific things at the times they do notice them. 15

There is also no guarantee that human-generated hypotheses need be empirically plausible. The intuition is related to “overfitting.” Suppose that people look at a subset of all data and look for something that differentiates positive (y = 1) from negative (y = 0) cases. Even with no noise in y, there is randomness in which observations are in the data. That can lead to idiosyncratic differences between y = 0 and y = 1 cases. As the number of comprehensible hypotheses gets large, there is a “curse of dimensionality”: many plausible hypotheses for these idiosyncratic differences. That is, many different hypotheses can look good in sample but need not work out of sample. 16
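The overfitting intuition can be illustrated with a small simulation. The sketch below considers an extreme, purely hypothetical case in which none of the candidate hypotheses is true: the outcome and all candidate features are independent coin flips. The sample sizes and the number of candidates are arbitrary assumptions.

```python
import random

rng = random.Random(0)

N_HYPOTHESES = 500   # number of candidate binary features (assumed)
N_SAMPLE = 50        # small sample that the hypothesis generator inspects
N_FRESH = 2000       # fresh data used to check the chosen hypothesis


def coin():
    """Return 0 or 1 with equal probability."""
    return 1 if rng.random() < 0.5 else 0


def draw(n):
    """Draw n instances whose features and outcome are all independent."""
    xs = [[coin() for _ in range(N_HYPOTHESES)] for _ in range(n)]
    ys = [coin() for _ in range(n)]
    return xs, ys


def accuracy(j, xs, ys):
    """Share of cases in which hypothesis 'feature j equals y' is right."""
    return sum(1 for x, y in zip(xs, ys) if x[j] == y) / len(ys)


sample_x, sample_y = draw(N_SAMPLE)
fresh_x, fresh_y = draw(N_FRESH)

# Pick the hypothesis that best separates y = 1 from y = 0 in the sample.
best = max(range(N_HYPOTHESES), key=lambda j: accuracy(j, sample_x, sample_y))

in_sample = accuracy(best, sample_x, sample_y)
out_of_sample = accuracy(best, fresh_x, fresh_y)
print(f"best hypothesis in sample: {in_sample:.2f}")
print(f"same hypothesis on fresh data: {out_of_sample:.2f}")
```

With enough candidates, some hypothesis will look good in the small sample by chance alone, while its performance on fresh data should stay close to one half.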

In contrast, supervised learning tools in machine learning are designed to generate predictions in new (out-of-sample) data. 17 That is, algorithms generate hypotheses that are empirically plausible by construction. 18 Moreover, machine learning can detect patterns in data that humans cannot. Algorithms can notice, for example, that livestock all tend to be oriented north (Begall et al. 2008), whether someone is about to have a heart attack based on subtle indications in an electrocardiogram (Mullainathan and Obermeyer 2022), or that a piece of machinery is about to break (Mobley 2002). We call these machine learning prediction functions m(x), which for a binary outcome y map to [0, 1].

The challenge is that most m(x) are not interpretable. For this type of statistical model to yield an interpretable hypothesis, its parameters must be interpretable. That can happen in some simple cases. For example, if we had a data set where each dimension of x was interpretable (such as individual structured variables in a tabular data set) and we used a predictor such as OLS (or LASSO), we could just read the hypotheses from the nonzero coefficients: which variables are significant? Even in that case, interpretation is challenging because machine learning tools, built to generate accurate predictions rather than apportion explanatory power across explanatory variables, yield coefficients that can be unstable across realizations of the data (Mullainathan and Spiess 2017). 19 Often interpretation is much less straightforward than that. If x is an image, text, or time series, the estimated models (such as convolutional neural networks) can have literally millions of parameters. The models are defined on granular inputs with no particular meaning: if we knew m(x) weighted a particular pixel, what have we learned? In these cases, the estimated model m(x) is not interpretable. Our focus is on these contexts where algorithms, as black-box models, are not readily interpreted.
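One way to see why coefficients can be unstable even when predictions are not is a toy simulation with two nearly collinear regressors. The sketch below uses plain OLS without an intercept, not LASSO, and the data-generating process (two regressors with true coefficients of one each, unit-variance noise) is entirely an illustrative assumption.

```python
import random
import statistics


def fit_two_regressor_ols(x1, x2, y):
    """Solve the 2x2 normal equations for y = b1*x1 + b2*x2 (no intercept)."""
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * c for a, c in zip(x1, y))
    s2y = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return b1, b2


def simulate(rng, n=200):
    """One realization of the data: x2 is x1 plus a little noise (assumed)."""
    x1 = [rng.gauss(0, 1) for _ in range(n)]
    x2 = [a + rng.gauss(0, 0.05) for a in x1]
    y = [a + b + rng.gauss(0, 1) for a, b in zip(x1, x2)]
    return x1, x2, y


rng = random.Random(0)
fits = [fit_two_regressor_ols(*simulate(rng)) for _ in range(200)]

# Spread of one coefficient versus spread of the combined effect b1 + b2.
sd_b1 = statistics.stdev([b1 for b1, _ in fits])
sd_sum = statistics.stdev([b1 + b2 for b1, b2 in fits])
print(f"sd of b1 across realizations:      {sd_b1:.2f}")
print(f"sd of b1 + b2 across realizations: {sd_sum:.2f}")
```

In this setup the combined effect, which is what matters for prediction, should be estimated far more tightly than either coefficient alone, so reading a hypothesis off a single coefficient would give different answers in different samples.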

Ideally one might marry people’s unique knowledge of what is comprehensible with an algorithm’s superior capacity to find meaningful correlations in data: to have the algorithm discover new signal and then have humans name that discovery. How to do so is not straightforward. We might imagine formalizing the set of interpretable prediction functions and then focusing on creating machine learning techniques that search over functions in that set. But mathematically characterizing those functions is typically not possible. Or we might consider seeking insight from a low-dimensional representation of face space, or “eigenfaces,” which are a common teaching tool for principal components analysis (Sirovich and Kirby 1987). But those turn out not to provide much useful insight for our purposes. 20 In some sense it is obvious why: the subset of actual faces is unlikely to be a linear subspace of the space of pixels. If we took two faces and linearly interpolated them, the resulting image would not look like a face. Some other approach is needed. We build on methods in computer science that use generative models to generate counterfactual explanations.
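The point about linear interpolation can be made concrete with a deliberately stylized example. In the sketch below, a "valid image" is assumed to be a one-dimensional strip with exactly one bright pixel; this stand-in for faces, and the helper names, are hypothetical.

```python
def bump_image(position, width=11):
    """A toy 'valid image': a strip with a single bright pixel."""
    return [1.0 if i == position else 0.0 for i in range(width)]


def interpolate(a, b, t):
    """Pixel-by-pixel linear interpolation between images a and b."""
    return [(1 - t) * u + t * v for u, v in zip(a, b)]


left = bump_image(2)
right = bump_image(8)

# Halfway point in pixel space.
midpoint = interpolate(left, right, 0.5)

# What a "morph" between the two would intuitively look like.
intuitive_morph = bump_image(5)

print(midpoint)
print(intuitive_morph)
```

The pixel-space midpoint contains two half-bright pixels at the original positions rather than one bright pixel in between, so it falls outside the set of valid images. A generative model, by contrast, is meant to move along the set of realistic images rather than along straight lines in pixel space.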

II.C. Related Methods

Our hypothesis generation procedure is part of a growing literature that aims to integrate machine learning into the way science is conducted. A common use (outside of economics) is in what could be called “closed world problems”: situations where the fundamental laws are known, but drawing out predictions is computationally hard. For example, the biochemical rules of how proteins fold are known, but it is hard to predict the final shape of a protein. Machine learning has provided fundamental breakthroughs, in effect by making very hard-to-compute outcomes computable in a feasible timeframe. 21