Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Descriptive Statistics


This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

This handout explains how to write with statistics including quick tips, writing descriptive statistics, writing inferential statistics, and using visuals with statistics.

The mean, the mode, the median, the range, and the standard deviation are all examples of descriptive statistics. Descriptive statistics are used because in most cases, it isn't possible to present all of your data in any form that your reader will be able to quickly interpret.

Generally, when writing descriptive statistics, you want to present at least one form of central tendency (or average), that is, either the mean, median, or mode. In addition, you should present one form of variability, usually the standard deviation.

Measures of Central Tendency and Other Commonly Used Descriptive Statistics

The mean, median, and the mode are all measures of central tendency. They attempt to describe what the typical data point might look like. In essence, they are all different forms of 'the average.' When writing statistics, you never want to say 'average' because it is difficult, if not impossible, for your reader to understand if you are referring to the mean, the median, or the mode.

The mean is the most common form of central tendency, and is what most people are usually referring to when they say average. It is simply the total sum of all the numbers in a data set, divided by the total number of data points. For example, the following data set has a mean of 4: {-1, 0, 1, 16}. That is, the values sum to 16, and 16 divided by 4 is 4. If there isn't a good reason to use one of the other forms of central tendency, then you should use the mean to describe the central tendency.
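As a quick check of the example above, here is a minimal Python sketch using the same four values. It computes the mean by hand and compares it with `statistics.mean` from Python's standard library.

```python
import statistics

data = [-1, 0, 1, 16]  # the example data set from the text

# Mean = sum of all values divided by the number of values (16 / 4)
manual_mean = sum(data) / len(data)
library_mean = statistics.mean(data)

print(manual_mean, library_mean == manual_mean)  # 4.0 True
```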

The median is simply the middle value of a data set. In order to calculate the median, all values in the data set need to be ordered, either from lowest to highest or vice versa. If there is an odd number of values in a data set, then the median is easy to calculate: it is the single value in the middle. If there is an even number of values in a data set, there is no single middle value, so the calculation becomes more difficult. Statisticians still debate how to properly calculate a median when there is an even number of values, but for most purposes, it is appropriate to simply take the mean of the two middle values. The median is useful when describing data sets that are skewed or have extreme values. Incomes of baseball players, for example, are commonly reported using a median because a small minority of baseball players make a lot of money, while most players make more modest amounts. The median is less influenced by extreme scores than the mean.
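A minimal Python sketch of the median rules described above, using small hypothetical lists (not data from this handout): the single middle value for an odd count, the mean of the two middle values for an even count, and the median's resistance to one extreme value.

```python
import statistics

odd = [3, 1, 7]             # hypothetical: odd number of values
even = [3, 1, 7, 5]         # hypothetical: even number of values
skewed = [1, 2, 3, 4, 100]  # hypothetical: one extreme value

# Odd count: sorted [1, 3, 7], so the middle value is 3
print(statistics.median(odd))    # 3
# Even count: sorted [1, 3, 5, 7], so the mean of 3 and 5 is 4.0
print(statistics.median(even))   # 4.0
# One extreme value: the median stays at 3 while the mean jumps to 22.0
print(statistics.median(skewed), sum(skewed) / len(skewed))  # 3 22.0
```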

The mode is the most commonly occurring number in the data set. The mode is best used when you want to indicate the most common response or item in a data set. For example, if you wanted to predict the score of the next football game, you may want to know what the most common score is for the visiting team, but having an average score of 15.3 won't help you if it is impossible to score 15.3 points. Likewise, a median score may not be very informative either, if you are interested in what score is most likely.
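The sketch below uses hypothetical scores, chosen only for illustration, to show how the mode can differ from a mean that is not an achievable score. `statistics.mode` and `statistics.multimode` are standard-library functions; `multimode` requires Python 3.8 or later.

```python
import statistics

# Hypothetical visiting-team scores from past games (not from the source)
scores = [14, 21, 17, 14, 10, 14, 21, 3]

print(statistics.mode(scores))   # 14, which occurs three times
print(statistics.mean(scores))   # 14.25, not a score a team can actually get
# A data set can have more than one mode
print(statistics.multimode([1, 1, 2, 2, 3]))  # [1, 2]
```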

Standard Deviation

The standard deviation is a measure of variability (it is not a measure of central tendency). Conceptually, it is best viewed as the 'average distance that individual data points are from the mean' (strictly, it is the square root of the average squared distance from the mean). Data sets that are highly clustered around the mean have lower standard deviations than data sets that are spread out.

For example, the first data set would have a higher standard deviation than the second data set:

Notice that both groups have the same mean (5) and median (also 5), but the two groups contain different numbers and are organized very differently. This organization of a data set is often referred to as a distribution. Because the two data sets above have the same mean and median but different standard deviations, we know that they also have different distributions. Understanding the distribution of a data set helps us understand how the data behave.
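The two data sets referred to above are not reproduced here, so this sketch uses two hypothetical data sets constructed to have the same mean and median (both 5) but very different spreads. It reports the sample standard deviation via `statistics.stdev`.

```python
import statistics

# Two hypothetical data sets (not the handout's original data sets)
spread_out = [0, 2, 5, 8, 10]
clustered = [4, 5, 5, 5, 6]

for name, data in [("spread_out", spread_out), ("clustered", clustered)]:
    print(name,
          statistics.mean(data),
          statistics.median(data),
          round(statistics.stdev(data), 2))  # sample standard deviation
```

Both lists have a mean and median of 5, but the sample standard deviation is about 4.12 for `spread_out` and about 0.71 for `clustered`.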

Quant Analysis 101: Descriptive Statistics

Everything You Need To Get Started (With Examples)

By: Derek Jansen (MBA) | Reviewers: Kerryn Warren (PhD) | October 2023

If you’re new to quantitative data analysis, one of the first terms you’re likely to hear being thrown around is descriptive statistics. In this post, we’ll unpack the basics of descriptive statistics, using straightforward language and loads of examples. So grab a cup of coffee and let’s crunch some numbers!

Overview: Descriptive Statistics

- What are descriptive statistics?

- Descriptive vs inferential statistics

- Why the descriptives matter

- The “Big 7” descriptive statistics

- Key takeaways

At the simplest level, descriptive statistics summarise and describe relatively basic but essential features of a quantitative dataset – for example, a set of survey responses. They provide a snapshot of the characteristics of your dataset and allow you to better understand, roughly, how the data are “shaped” (more on this later). For example, a descriptive statistic could include the proportion of males and females within a sample or the percentages of different age groups within a population.

Another common descriptive statistic is the humble average (which in statistics-talk is called the mean). For example, if you undertook a survey and asked people to rate their satisfaction with a particular product on a scale of 1 to 10, you could then calculate the average rating. This is a very basic statistic, but as you can see, it gives you some idea of how the data are shaped.

What about inferential statistics?

Now, you may have also heard the term inferential statistics being thrown around, and you’re probably wondering how that’s different from descriptive statistics. Simply put, descriptive statistics describe and summarise the sample itself, while inferential statistics use the data from a sample to make inferences or predictions about a population.

Put another way, descriptive statistics help you understand your dataset, while inferential statistics help you make broader statements about the population, based on what you observe within the sample. If you’re keen to learn more, we cover inferential stats in another post.

Why do descriptive statistics matter?

While descriptive statistics are relatively simple from a mathematical perspective, they play a very important role in any research project. All too often, students skim over the descriptives and run ahead to the seemingly more exciting inferential statistics, but this can be a costly mistake.

The reason for this is that descriptive statistics help you, as the researcher, comprehend the key characteristics of your sample without getting lost in vast amounts of raw data. In doing so, they provide a foundation for your quantitative analysis. Additionally, they enable you to quickly identify potential issues within your dataset – for example, suspicious outliers, missing responses and so on. Just as importantly, descriptive statistics inform the decision-making process when it comes to choosing which inferential statistics you’ll run, as each inferential test has specific requirements regarding the shape of the data.

Long story short, it’s essential that you take the time to dig into your descriptive statistics before looking at more “advanced” inferentials. It’s also worth noting that, depending on your research aims and questions, descriptive stats may be all that you need in any case. So, don’t discount the descriptives!

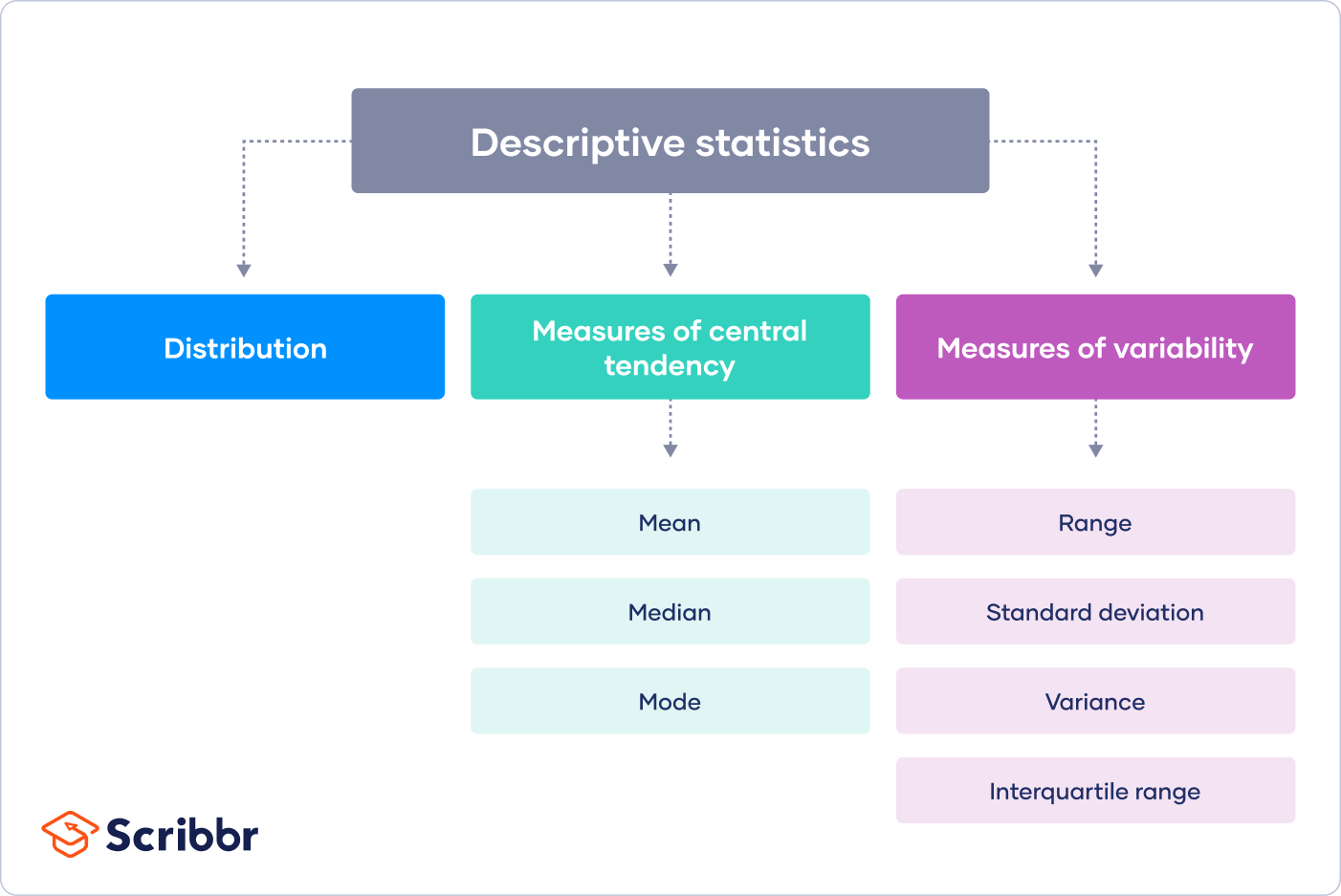

The “Big 7” descriptive statistics

With the what and why out of the way, let’s take a look at the most common descriptive statistics. Beyond the counts, proportions and percentages we mentioned earlier, we have what we call the “Big 7” descriptives. These can be divided into two categories – measures of central tendency and measures of dispersion.

Measures of central tendency

True to the name, measures of central tendency describe the centre or “middle section” of a dataset. In other words, they provide some indication of what a “typical” data point looks like within a given dataset. The three most common measures are:

- The mean, which is the mathematical average of a set of numbers – in other words, the sum of all numbers divided by the count of all numbers.

- The median, which is the middlemost number in a set of numbers, when those numbers are ordered from lowest to highest.

- The mode, which is the most frequently occurring number in a set of numbers (in any order). Naturally, a dataset can have one mode, no mode (no number occurs more than once) or multiple modes.

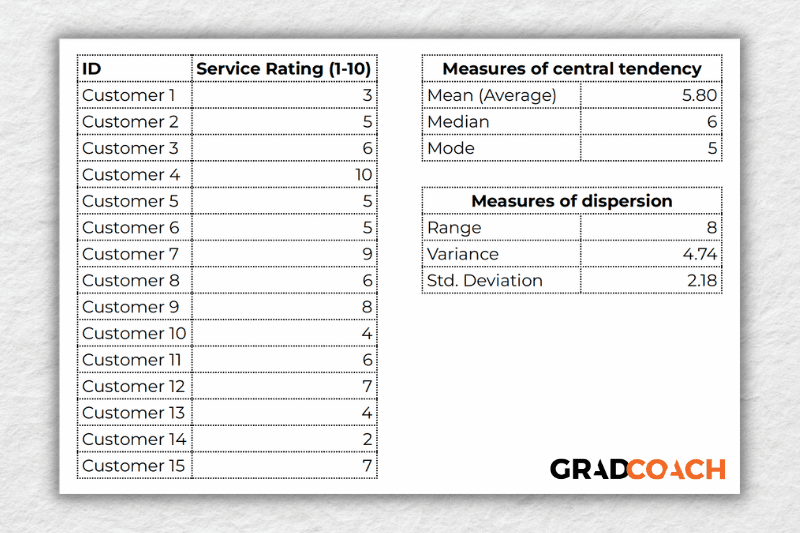

To make this a little more tangible, let’s look at a sample dataset, along with the corresponding mean, median and mode. This dataset reflects the service ratings (on a scale of 1 – 10) from 15 customers.

As you can see, the mean of 5.8 is the average rating across all 15 customers. Meanwhile, 6 is the median. In other words, if you were to list all the responses in order from low to high, Customer 8 would be in the middle (with their service rating being 6). Lastly, the number 5 is the most frequent rating (appearing 3 times), making it the mode.

Together, these three descriptive statistics give us a quick overview of how these customers feel about the service levels at this business. In other words, most customers feel rather lukewarm and there’s certainly room for improvement. From a more statistical perspective, the mean and the median are fairly close to each other, which suggests that the data are centred around the 5-6 mark.

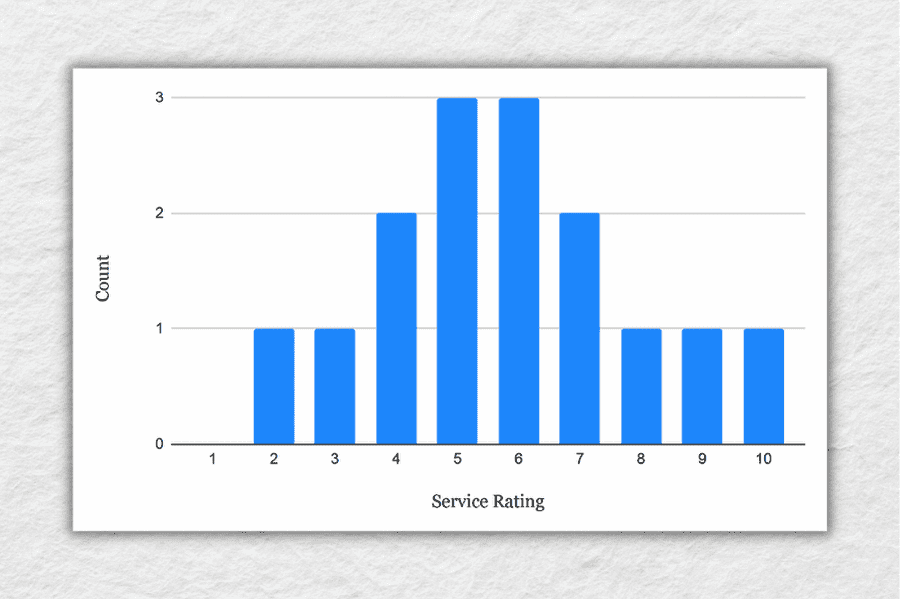

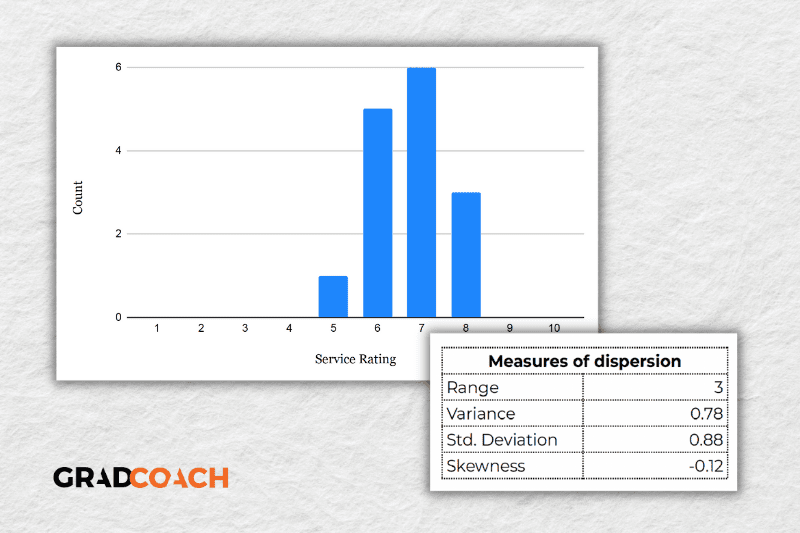

To take this a step further, let’s look at the frequency distribution of the responses. In other words, let’s count how many times each rating was received, and then plot these counts onto a bar chart.

As you can see, the responses tend to cluster toward the centre of the chart, creating something of a bell-shaped curve. In statistical terms, this shape resembles a normal distribution.

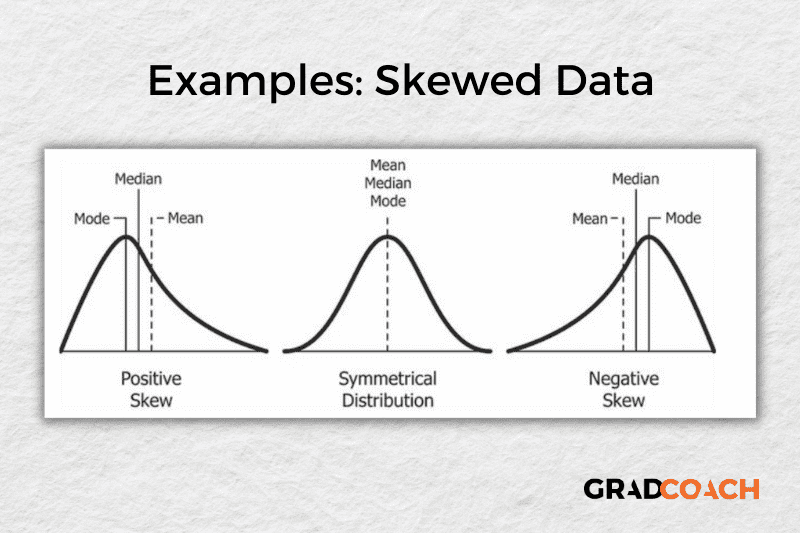

As you delve into quantitative data analysis, you’ll find that normal distributions are very common, but they’re certainly not the only type of distribution. In some cases, the data can lean toward the left or the right of the chart (i.e., toward the low end or high end). This lean is reflected by a measure called skewness, and it’s important to pay attention to this when you’re analysing your data, as this will have an impact on what types of inferential statistics you can use on your dataset.
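Skewness can be computed in several ways. Below is a minimal sketch of one common version, the moment coefficient \(g_1 = m_3 / m_2^{3/2}\) (the third central moment divided by the second central moment raised to the power 1.5), applied to hypothetical data. Statistics packages such as SPSS and Excel often report a small-sample adjusted version, so their values can differ slightly. A positive value indicates a longer tail toward the high end, and a negative value a longer tail toward the low end.

```python
def skewness(data):
    """Moment-based skewness m3 / m2**1.5; assumes the values are not all identical."""
    n = len(data)
    mean = sum(data) / n
    m2 = sum((x - mean) ** 2 for x in data) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in data) / n  # third central moment
    return m3 / m2 ** 1.5

symmetric = [2, 3, 4, 5, 6]       # hypothetical: balanced around the mean
right_tail = [1, 1, 2, 2, 3, 9]   # hypothetical: long tail toward the high end
left_tail = [1, 7, 8, 8, 9, 9]    # hypothetical: long tail toward the low end

print(skewness(symmetric))        # 0.0
print(skewness(right_tail) > 0)   # True (positive skew)
print(skewness(left_tail) < 0)    # True (negative skew)
```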

Measures of dispersion

While the measures of central tendency provide insight into how “centred” the dataset is, it’s also important to understand how dispersed that dataset is. In other words, to what extent the data cluster toward the centre – specifically, the mean. In some cases, the majority of the data points will sit very close to the centre, while in other cases, they’ll be scattered all over the place. Enter the measures of dispersion, of which there are three:

- Range, which measures the difference between the largest and smallest number in the dataset. In other words, it indicates how far apart the most extreme values are.

- Variance, which measures how much the numbers in a dataset vary from the mean (average). More technically, it is the average of the squared differences between each number and the mean (for a sample, the sum of squared differences is usually divided by n − 1 rather than n). A higher variance indicates that the data points are more spread out, while a lower variance suggests that the data points are closer to the mean.

- Standard deviation, which is the square root of the variance. It serves the same purpose as the variance, but is easier to interpret because it is expressed in the same units as the original data. You’ll typically present this statistic alongside the means when describing the data in your research.
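Here is a minimal Python sketch of the three measures of dispersion, using hypothetical ratings. `statistics.variance` and `statistics.stdev` treat the data as a sample (dividing by n − 1); `statistics.pvariance` and `statistics.pstdev` are the population versions (dividing by n).

```python
import statistics

ratings = [3, 5, 5, 6, 7, 8, 9]  # hypothetical ratings on a 1-10 scale

data_range = max(ratings) - min(ratings)   # largest value minus smallest value
sample_var = statistics.variance(ratings)  # sample variance (divides by n - 1)
sample_sd = statistics.stdev(ratings)      # square root of the sample variance

print("range:", data_range)                # range: 6
print("variance:", round(sample_var, 2))
print("standard deviation:", round(sample_sd, 2))
```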

Again, let’s look at our sample dataset to make this all a little more tangible.

As you can see, the range of 8 reflects the difference between the highest rating (10) and the lowest rating (2). The standard deviation of 2.18 tells us that, roughly speaking, individual ratings sit about 2.18 points away from the mean (of 5.8), reflecting a relatively dispersed set of data.

For the sake of comparison, let’s look at another much more tightly grouped (less dispersed) dataset.

As you can see, all the ratings lie between 5 and 8 in this dataset, resulting in a much smaller range, variance and standard deviation. You might also notice that the data are clustered toward the right side of the graph – in other words, the data are skewed. If we calculate the skewness for this dataset, we get a result of -0.12. The negative sign indicates a slightly longer tail toward the low end (negative skew), which is consistent with most ratings sitting toward the right.

In summary, range, variance and standard deviation all provide an indication of how dispersed the data are. These measures are important because they help you interpret the measures of central tendency in context. In other words, if your measures of dispersion are fairly high relative to the scale of the data, you need to interpret your measures of central tendency with some caution, as the results are not particularly centred. Conversely, if the data are all tightly grouped around the mean (i.e., low dispersion), the mean becomes a much more “meaningful” statistic.

Key Takeaways

We’ve covered quite a bit of ground in this post. Here are the key takeaways:

- Descriptive statistics, although relatively simple, are a critically important part of any quantitative data analysis.

- Measures of central tendency include the mean (average), median and mode.

- Skewness indicates whether a dataset leans to one side or another.

- Measures of dispersion include the range, variance and standard deviation.


This post is an extract from our Udemy course, Methodology Bootcamp.


Descriptive Statistics | Definitions, Types, Examples

Published on 4 November 2022 by Pritha Bhandari. Revised on 9 January 2023.

Descriptive statistics summarise and organise characteristics of a data set. A data set is a collection of responses or observations from a sample or entire population.

In quantitative research, after collecting data, the first step of statistical analysis is to describe characteristics of the responses, such as the average of one variable (e.g., age), or the relation between two variables (e.g., age and creativity).

The next step is inferential statistics, which help you decide whether your data support or contradict your hypothesis and whether the results are generalisable to a larger population.

Table of contents

- Types of descriptive statistics

- Frequency distribution

- Measures of central tendency

- Measures of variability

- Univariate descriptive statistics

- Bivariate descriptive statistics

- Frequently asked questions

There are 3 main types of descriptive statistics:

- The distribution concerns the frequency of each value.

- The central tendency concerns the averages of the values.

- The variability or dispersion concerns how spread out the values are.

You can apply these to assess only one variable at a time, in univariate analysis, or to compare two or more, in bivariate and multivariate analysis.

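Suppose, for example, that a survey asks participants how many times in the past year they did each of the following activities:

- Go to a library

- Watch a movie at a theater

- Visit a national park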

A data set is made up of a distribution of values, or scores. In tables or graphs, you can summarise the frequency of every possible value of a variable in numbers or percentages.

- Simple frequency distribution table

- Grouped frequency distribution table

From this table, you can see that more women than men or people with another gender identity took part in the study. In a grouped frequency distribution, you can group numerical response values and add up the number of responses for each group. You can also convert each of these numbers to percentages.
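The source's table is not reproduced here. As an illustration, this Python sketch builds a simple frequency distribution (counts and percentages) from a hypothetical list of responses using `collections.Counter`.

```python
from collections import Counter

# Hypothetical gender responses (illustrative only, not the source's survey data)
responses = ["woman", "man", "woman", "another identity", "woman", "man"]

counts = Counter(responses)  # frequency of each value
n = len(responses)           # total number of responses, N

# Print each value with its count and percentage, most frequent first
for value, count in counts.most_common():
    print(f"{value:<18}{count:>3}{count / n:>8.1%}")
```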

Measures of central tendency estimate the center, or average, of a data set. The mean, median and mode are 3 ways of finding the average.

Here we will demonstrate how to calculate the mean, median, and mode using the first 6 responses of our survey.

The mean, or M, is the most commonly used method for finding the average.

To find the mean, simply add up all response values and divide the sum by the total number of responses. The total number of responses or observations is called N.

The median is the value that’s exactly in the middle of a data set.

To find the median, order each response value from the smallest to the biggest. Then, the median is the number in the middle. If there are two numbers in the middle, find their mean.

The mode is simply the most popular or most frequent response value. A data set can have no mode, one mode, or more than one mode.

To find the mode, order your data set from lowest to highest and find the response that occurs most frequently.

Measures of variability give you a sense of how spread out the response values are. The range, standard deviation and variance each reflect different aspects of spread.

The range gives you an idea of how far apart the most extreme response scores are. To find the range, simply subtract the lowest value from the highest value.

Standard deviation

The standard deviation (s) is the average amount of variability in your dataset. It tells you, on average, how far each score lies from the mean. The larger the standard deviation, the more variable the data set is.

There are six steps for finding the standard deviation:

- List each score and find their mean.

- Subtract the mean from each score to get the deviation from the mean.

- Square each of these deviations.

- Add up all of the squared deviations.

- Divide the sum of the squared deviations by N – 1.

- Find the square root of the number you found.

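For the first 6 responses of the survey (N = 6, so N – 1 = 5), steps 1 to 4 give a sum of squared deviations of 421.5, and the last two steps are:

Step 5: 421.5/5 = 84.3

Step 6: √84.3 = 9.18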
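The six steps can be followed one at a time in code. This is a minimal Python sketch using hypothetical scores rather than the survey responses; the final line checks the hand-computed result against the standard library's `statistics.stdev`, which also divides by N – 1.

```python
import math
import statistics

scores = [2, 4, 4, 4, 5, 5, 7, 9]  # hypothetical scores

# Step 1: find the mean
mean = sum(scores) / len(scores)
# Step 2: subtract the mean from each score
deviations = [x - mean for x in scores]
# Step 3: square each deviation
squared = [d ** 2 for d in deviations]
# Step 4: add up the squared deviations
ss = sum(squared)
# Step 5: divide by N - 1 (this is the sample variance, s squared)
variance = ss / (len(scores) - 1)
# Step 6: take the square root to get the standard deviation s
sd = math.sqrt(variance)

print(ss, round(variance, 3), round(sd, 3))        # 32.0 4.571 2.138
print(math.isclose(sd, statistics.stdev(scores)))  # True
```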

The variance is the average of squared deviations from the mean (for a sample, the sum of squared deviations is divided by N – 1, as in step 5 above). Variance reflects the degree of spread in the data set: the more spread out the data, the larger the variance.

To find the variance, simply square the standard deviation. The symbol for variance is s². In the example above, s² = 84.3.

Univariate descriptive statistics focus on only one variable at a time. It’s important to examine data from each variable separately using multiple measures of distribution, central tendency and spread. Programs like SPSS and Excel can be used to easily calculate these.

If you consider only the mean as a measure of central tendency, outliers can skew your impression of the ‘middle’ of the data set; the median and mode are much less affected by them.

Likewise, while the range is sensitive to extreme values, you should also consider the standard deviation and variance to get easily comparable measures of spread.

If you’ve collected data on more than one variable, you can use bivariate or multivariate descriptive statistics to explore whether there are relationships between them.

In bivariate analysis, you simultaneously study the frequency and variability of two variables to see if they vary together. You can also compare the central tendency of the two variables before performing further statistical tests.

Multivariate analysis is the same as bivariate analysis but with more than two variables.

Contingency table

In a contingency table, each cell represents the intersection of two variables. Usually, an independent variable (e.g., gender) appears along the vertical axis and a dependent one appears along the horizontal axis (e.g., activities). You read ‘across’ the table to see how the independent and dependent variables relate to each other.

Interpreting a contingency table is easier when the raw data are converted to percentages. Percentages make each row comparable to the others by making it seem as if each group had only 100 observations or participants. When creating a percentage-based contingency table, you add the N for each group of the independent variable at the end of its row.

From this table, it is clearer that similar proportions of children and adults go to the library over 17 times a year. Additionally, children most commonly went to the library between 5 and 8 times, while for adults, this number was between 13 and 16.
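The source's contingency tables are not reproduced here. The sketch below uses hypothetical counts to show the conversion described above: each cell is divided by its row total (the row's N), so rows of different sizes become comparable.

```python
# Hypothetical counts of yearly library visits by age group (not the source's data)
table = {
    "Children": {"0-4": 10, "5-8": 25, "9-12": 15, "13-16": 8, "17+": 12},
    "Adults": {"0-4": 20, "5-8": 18, "9-12": 22, "13-16": 30, "17+": 15},
}

# Convert each row to proportions of that row's total (its N)
row_pct = {
    group: {cat: count / sum(counts.values()) for cat, count in counts.items()}
    for group, counts in table.items()
}

for group, counts in table.items():
    n = sum(counts.values())
    cells = "  ".join(f"{cat}: {row_pct[group][cat]:.0%}" for cat in counts)
    print(f"{group:<9}{cells}  (N = {n})")
```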

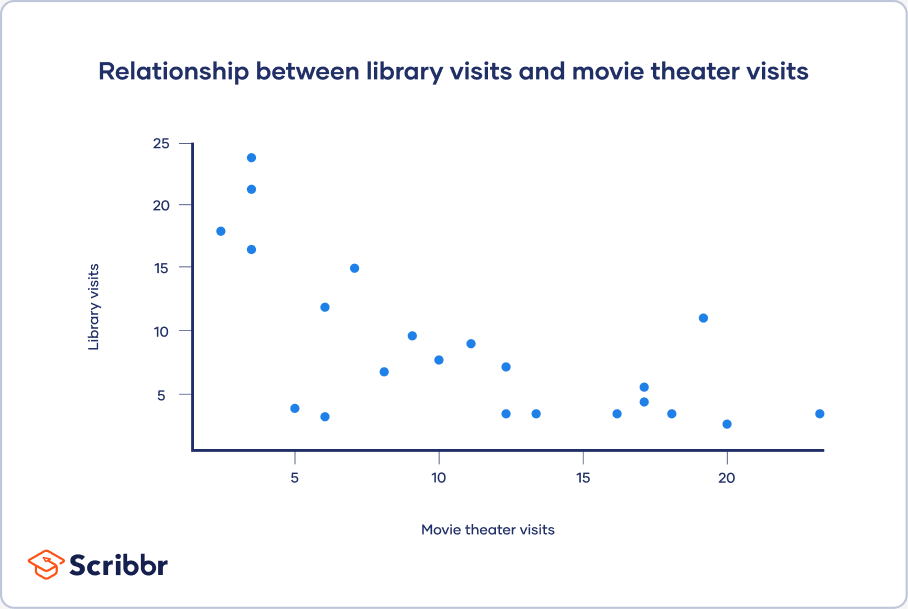

Scatter plots

A scatter plot is a chart that shows you the relationship between two or three variables. It’s a visual representation of the strength of a relationship.

In a scatter plot, you plot one variable along the x-axis and another one along the y-axis. Each data point is represented by a point in the chart.

From your scatter plot, you see that as the number of movies seen at movie theaters increases, the number of visits to the library decreases. Based on your visual assessment of a possible linear relationship, you perform further tests of correlation and regression.

Descriptive statistics summarise the characteristics of a data set. Inferential statistics allow you to test a hypothesis or assess whether your data is generalisable to the broader population.

The 3 main types of descriptive statistics concern the frequency distribution, central tendency, and variability of a dataset.

- Distribution refers to the frequencies of different responses.

- Measures of central tendency give you the average for each variable.

- Measures of variability show you the spread or dispersion of your dataset.

- Univariate statistics summarise only one variable at a time.

- Bivariate statistics compare two variables.

- Multivariate statistics compare more than two variables.

Cite this Scribbr article


Bhandari, P. (2023, January 09). Descriptive Statistics | Definitions, Types, Examples. Scribbr. Retrieved 20 March 2024, from https://www.scribbr.co.uk/stats/descriptive-statistics-explained/


1.4 - Example: Descriptive Statistics

Example 1-5: Women's Health Survey (Descriptive Statistics)

Let us take a look at an example. In 1985, the USDA commissioned a study of women’s nutrition. Nutrient intake was measured for a random sample of 737 women aged 25-50 years. The following variables were measured:

- Calcium (mg)

- Iron (mg)

- Protein (g)

- Vitamin A (μg)

- Vitamin C (mg)

Using Technology


We will use the SAS program to carry out the calculations that we would like to see.

Download the data file: nutrient.csv

The lines of this program are saved in a simple text file with a .sas file extension. If you have SAS installed on the machine on which you have downloaded this file, it should launch SAS and open the program within the SAS application. Marking up a printout of the SAS program is also a good strategy for learning how this program is put together.


The first part of this SAS output (download below) contains the results of the MEANS procedure (proc means). Because SAS output is usually a relatively long document, printing these pages and marking them up with notes is highly recommended.

Example: Nutrient Intake Data - Descriptive Statistics

The MEANS Procedure


Download the SAS Output file: nutrient2.lst

The first column of the Means Procedure table above gives the variable name. The second column reports the sample size. This is then followed by the sample means (third column) and the sample standard deviations (fourth column) for each variable. I have copied these values into the table below. I have also rounded these numbers a bit to make them easier to use for this example.

Here are the steps to find the descriptive statistics for the Women's Nutrition dataset in Minitab:

Descriptive Statistics in Minitab

- Go to File > Open > Worksheet [open nutrient_tf.csv]

- Highlight and select C2 through C6 and choose ‘Select’ to move the variables into the window on the right.

- Select ‘Statistics...’, and check the boxes for the statistics of interest.

Descriptive Statistics

A summary of the descriptive statistics is given here for ease of reference.

Notice that the standard deviations are large relative to their respective means, especially for Vitamin A and Vitamin C. This indicates high variability in nutrient intake among women. However, whether the standard deviations are relatively large or not will depend on the context of the application. Skill in interpreting the statistical analysis depends very much on the researcher's subject matter knowledge.

The variance-covariance matrix is also copied into the matrix below.

\[S = \left(\begin{array}{rrrrr}157829.4 & 940.1 & 6075.8 & 102411.1 & 6701.6 \\ 940.1 & 35.8 & 114.1 & 2383.2 & 137.7 \\ 6075.8 & 114.1 & 934.9 & 7330.1 & 477.2 \\ 102411.1 & 2383.2 & 7330.1 & 2668452.4 & 22063.3 \\ 6701.6 & 137.7 & 477.2 & 22063.3 & 5416.3 \end{array}\right)\]

Interpretation

Because the covariance between calcium and iron intake, \(s_{12} = 940.1\), is positive, we see that calcium intake tends to increase with increasing iron intake. The strength of this positive association can only be judged by comparing \(s_{12}\) to the product of the sample standard deviations for calcium and iron. This comparison is most readily accomplished by looking at the sample correlation between the two variables.

- The sample variances are given by the diagonal elements of S. For example, the variance of iron intake is \(s_{2}^{2} = 35.8\) mg².

- The covariances are given by the off-diagonal elements of S. For example, the covariance between calcium and iron intake is \(s_{12} = 940.1\).

- Note that the covariances are all positive, indicating that the daily intake of each nutrient tends to increase with increased intake of the remaining nutrients.

Sample Correlations

The sample correlations are included in the table below.

Here we can see that the correlation of each variable with itself equals one, and the off-diagonal elements give the correlation between each pair of variables.

Generally, we look for the strongest correlations first. The results above suggest that protein, iron, and calcium are all positively associated. Each of these three nutrients tends to increase with increasing values of the remaining two.

The coefficient of determination is another measure of association and is simply equal to the square of the correlation. For example, in this case, the coefficient of determination between protein and iron is \((0.623)^2\) or about 0.388.

\[r^2_{23} = 0.62337^2 = 0.38859\]

This says that about 39% of the variation in iron intake is explained by protein intake. Or, conversely, about 39% of the variation in protein intake is explained by iron intake. Both interpretations are equivalent.
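The correlations and the coefficient of determination can be recovered from the variance-covariance matrix S, since \(r_{ij} = s_{ij} / (s_i s_j)\), where \(s_i\) and \(s_j\) are the square roots of the corresponding diagonal elements. The Python sketch below assumes the variables in S are ordered calcium, iron, protein, vitamin A, vitamin C (consistent with \(s_{12}\) being calcium and iron and \(r_{23}\) being protein and iron). Because S is printed in rounded form, the results may differ from the reported correlations in the third decimal place.

```python
import math

# Assumed variable order (see lead-in)
names = ["Calcium", "Iron", "Protein", "Vitamin A", "Vitamin C"]

# Sample variance-covariance matrix S as printed above (rounded values)
S = [
    [157829.4, 940.1, 6075.8, 102411.1, 6701.6],
    [940.1, 35.8, 114.1, 2383.2, 137.7],
    [6075.8, 114.1, 934.9, 7330.1, 477.2],
    [102411.1, 2383.2, 7330.1, 2668452.4, 22063.3],
    [6701.6, 137.7, 477.2, 22063.3, 5416.3],
]
k = len(S)

# Standard deviations: square roots of the diagonal (the variances)
sd = [math.sqrt(S[i][i]) for i in range(k)]

# Correlation matrix: r_ij = s_ij / (s_i * s_j)
R = [[S[i][j] / (sd[i] * sd[j]) for j in range(k)] for i in range(k)]

for name, row in zip(names, R):
    print(f"{name:<10}" + "".join(f"{r:8.3f}" for r in row))

# Iron (index 1) and protein (index 2): correlation and coefficient of determination
r23 = R[1][2]
print(round(r23, 3), round(r23 ** 2, 3))
```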


Research Methods Knowledge Base


Descriptive Statistics

Descriptive statistics are used to describe the basic features of the data in a study. They provide simple summaries about the sample and the measures. Together with simple graphics analysis, they form the basis of virtually every quantitative analysis of data.

Descriptive statistics are typically distinguished from inferential statistics . With descriptive statistics you are simply describing what is or what the data shows. With inferential statistics, you are trying to reach conclusions that extend beyond the immediate data alone. For instance, we use inferential statistics to try to infer from the sample data what the population might think. Or, we use inferential statistics to make judgments of the probability that an observed difference between groups is a dependable one or one that might have happened by chance in this study. Thus, we use inferential statistics to make inferences from our data to more general conditions; we use descriptive statistics simply to describe what’s going on in our data.

Descriptive statistics are used to present quantitative descriptions in a manageable form. In a research study we may have lots of measures. Or we may measure a large number of people on any measure. Descriptive statistics help us to simplify large amounts of data in a sensible way. Each descriptive statistic reduces lots of data into a simpler summary. For instance, consider a simple number used to summarize how well a batter is performing in baseball, the batting average. This single number is simply the number of hits divided by the number of times at bat (reported to three decimal places). A batter who is hitting .333 is getting a hit one time in every three at bats. One batting .250 is hitting one time in four. The single number describes a large number of discrete events. Or, consider the scourge of many students, the Grade Point Average (GPA). This single number describes the general performance of a student across a potentially wide range of course experiences.
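The batting-average arithmetic fits in a few lines. Here is a minimal Python sketch; the function name `batting_average` is hypothetical, and dropping the leading zero (".333" rather than "0.333") is the usual display convention and is assumed here.

```python
def batting_average(hits: int, at_bats: int) -> str:
    """Hits divided by at bats, shown to three decimal places without the leading zero."""
    return f"{hits / at_bats:.3f}".lstrip("0")

print(batting_average(1, 3))     # .333 (one hit in every three at bats)
print(batting_average(25, 100))  # .250 (one hit in every four at bats)
```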

Every time you try to describe a large set of observations with a single indicator you run the risk of distorting the original data or losing important detail. The batting average doesn’t tell you whether the batter is hitting home runs or singles. It doesn’t tell whether she’s been in a slump or on a streak. The GPA doesn’t tell you whether the student was in difficult courses or easy ones, or whether they were courses in their major field or in other disciplines. Even given these limitations, descriptive statistics provide a powerful summary that may enable comparisons across people or other units.

Univariate Analysis

Univariate analysis involves the examination across cases of one variable at a time. There are three major characteristics of a single variable that we tend to look at:

- the distribution

- the central tendency

- the dispersion

In most situations, we would describe all three of these characteristics for each of the variables in our study.

The Distribution

The distribution is a summary of the frequency of individual values or ranges of values for a variable. The simplest distribution would list every value of a variable and the number of persons who had each value. For instance, a typical way to describe the distribution of college students is by year in college, listing the number or percent of students at each of the four years. Or, we describe gender by listing the number or percent of males and females. In these cases, the variable has few enough values that we can list each one and summarize how many sample cases had the value. But what do we do for a variable like income or GPA? With these variables there can be a large number of possible values, with relatively few people having each one. In this case, we group the raw scores into categories according to ranges of values. For instance, we might look at GPA according to the letter grade ranges. Or, we might group income into four or five ranges of income values.

One of the most common ways to describe a single variable is with a frequency distribution. Depending on the particular variable, all of the data values may be represented, or you may group the values into categories first. With age, price, or temperature variables, for example, it would usually not be sensible to determine the frequency of each individual value; rather, the values are grouped into ranges and the frequencies of those ranges are determined. Frequency distributions can be depicted in two ways: as a table or as a graph. The table above shows an age frequency distribution with five categories of age ranges defined. The same frequency distribution can be depicted in a graph as shown in Figure 1. This type of graph is often referred to as a histogram or bar chart.

Distributions may also be displayed using percentages. For example, you could use percentages to describe the:

- percentage of people in different income levels

- percentage of people in different age ranges

- percentage of people in different ranges of standardized test scores
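As a rough illustration of grouping values into ranges and reporting both counts and percentages, the Python sketch below builds a grouped frequency distribution. The ages and the five age bands are hypothetical values invented for this example. They are not the data behind the table or Figure 1 referred to above.

```python
from collections import Counter

# Hypothetical ages, invented for illustration (not the data behind Figure 1).
ages = [19, 23, 25, 31, 34, 38, 42, 45, 47, 52, 56, 61, 64, 68, 71]

# Hypothetical age bands: (lower bound inclusive, upper bound exclusive, label).
BANDS = [
    (0, 30, "under 30"),
    (30, 40, "30-39"),
    (40, 50, "40-49"),
    (50, 60, "50-59"),
    (60, 200, "60 and over"),
]


def band_of(age):
    """Return the label of the band that contains this age."""
    for low, high, label in BANDS:
        if low <= age < high:
            return label
    raise ValueError(f"age {age} does not fall in any band")


def frequency_table(values):
    """Return (label, count, percent) rows in band order."""
    counts = Counter(band_of(v) for v in values)
    n = len(values)
    return [(label, counts[label], 100 * counts[label] / n) for _, _, label in BANDS]


for label, count, percent in frequency_table(ages):
    print(f"{label:<12}{count:>4}{percent:>8.1f}%")
```

Choosing the band boundaries is a judgment call. Different cut points can make the same data look quite different.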

Central Tendency

The central tendency of a distribution is an estimate of the “center” of a distribution of values. There are three major types of estimates of central tendency:

The Mean or average is probably the most commonly used method of describing central tendency. To compute the mean, add up all the values and divide by the number of values. For example, the mean quiz score is determined by summing all the scores and dividing by the number of students taking the exam. Consider the test score values:

15, 20, 21, 20, 36, 15, 25, 15

The sum of these 8 values is 167, so the mean is 167/8 = 20.875.

The Median is the score found at the exact middle of the set of values. One way to compute the median is to list all scores in numerical order and then locate the score in the center of the sample. For example, if there are 500 scores in the list, the median falls halfway between score #250 and score #251. If we order the 8 scores shown above, we get:

15, 15, 15, 20, 20, 21, 25, 36

There are 8 scores, and scores #4 and #5 represent the halfway point. Since both of these scores are 20, the median is 20. If the two middle scores had different values, you would interpolate between them (that is, take their mean) to determine the median.

The Mode is the most frequently occurring value in the set of scores. To determine the mode, you might again order the scores as shown above and then count each one. The most frequently occurring value is the mode. In our example, the value 15 occurs three times and is the mode. In some distributions there is more than one modal value. For instance, in a bimodal distribution there are two values that occur most frequently.

Notice that for the same set of 8 scores we got three different values (20.875, 20, and 15) for the mean, median, and mode, respectively. If the distribution is truly normal (i.e., bell-shaped), the mean, median, and mode are all equal to each other.
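The three measures can be checked with a short Python sketch. It assumes the eight scores are 15, 20, 21, 20, 36, 15, 25, 15. This is the only set of values consistent with every figure the text reports (sum 167, median 20, mode 15, range 21, sum of squares 350.875), although the order shown is an assumption and does not affect any of the statistics. The median helper is written out by hand to show the even-count rule. `statistics.median` from the standard library does the same job.

```python
from statistics import mode

# The eight test scores from the example (order assumed; the statistics do not depend on it).
scores = [15, 20, 21, 20, 36, 15, 25, 15]


def mean(values):
    """Add up all the values and divide by the number of values."""
    return sum(values) / len(values)


def median(values):
    """Middle value of the sorted data; the mean of the two middle values when n is even."""
    ordered = sorted(values)
    n = len(ordered)
    mid = n // 2
    if n % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


print(mean(scores))    # 20.875
print(median(scores))  # 20.0 (scores #4 and #5 are both 20)
print(mode(scores))    # 15 (occurs three times)
```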

Dispersion refers to the spread of the values around the central tendency. There are two common measures of dispersion: the range and the standard deviation. The range is simply the highest value minus the lowest value. In our example distribution, the high value is 36 and the low is 15, so the range is 36 - 15 = 21.

The Standard Deviation is a more accurate and detailed estimate of dispersion because an outlier can greatly exaggerate the range (as was true in this example, where the single outlier value of 36 stands apart from the rest of the values). The Standard Deviation shows the relation that the set of scores has to the mean of the sample. Again, let's take the set of scores:

15, 20, 21, 20, 36, 15, 25, 15

To compute the standard deviation, we first find the distance between each value and the mean. We know from above that the mean is 20.875. So, the differences from the mean are:

-5.875, -0.875, 0.125, -0.875, 15.125, -5.875, 4.125, -5.875

Notice that values that are below the mean have negative discrepancies and values above it have positive ones. Next, we square each discrepancy:

34.515625, 0.765625, 0.015625, 0.765625, 228.765625, 34.515625, 17.015625, 34.515625

Now, we take these “squares” and sum them to get the Sum of Squares (SS) value. Here, the sum is 350.875. Next, we divide this sum by the number of scores minus 1. Here, the result is 350.875 / 7 = 50.125. This value is known as the variance. To get the standard deviation, we take the square root of the variance (remember that we squared the deviations earlier). This would be SQRT(50.125) = 7.079901129253.
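The hand calculation above can be retraced step by step in Python. The sketch assumes the same eight scores (15, 20, 21, 20, 36, 15, 25, 15) and cross-checks the result against `statistics.stdev`, which also divides by n - 1. For comparison it also computes the range from the earlier paragraph.

```python
import math
import statistics

scores = [15, 20, 21, 20, 36, 15, 25, 15]

n = len(scores)
mean = sum(scores) / n                        # 20.875
deviations = [x - mean for x in scores]       # negative below the mean, positive above it
squares = [d * d for d in deviations]         # squaring removes the signs
ss = sum(squares)                             # Sum of Squares (SS)
variance = ss / (n - 1)                       # divide by the number of scores minus 1
sd = math.sqrt(variance)                      # undo the squaring to return to score units
value_range = max(scores) - min(scores)       # highest value minus lowest value

print(f"SS = {ss}, variance = {variance}, SD = {sd:.4f}, range = {value_range}")
# SS = 350.875, variance = 50.125, SD = 7.0799, range = 21

# The standard library's sample standard deviation should agree.
assert math.isclose(sd, statistics.stdev(scores))
```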

Although this computation may seem convoluted, it’s actually quite simple. To see this, consider the formula for the standard deviation:

$$ SD = \sqrt{\frac{\sum (X - \bar{X})^2}{n - 1}} $$

where:

- X is each score,

- X̄ is the mean (or average),

- n is the number of values,

- Σ means we sum across the values.

In the top part of the ratio, the numerator, we see that each score has the mean subtracted from it, the difference is squared, and the squares are summed. In the bottom part, the denominator, we take the number of scores minus 1. The ratio is the variance, and its square root is the standard deviation. In English, we can describe the standard deviation as:

the square root of the sum of the squared deviations from the mean divided by the number of scores minus one.

Although we can calculate these univariate statistics by hand, it gets quite tedious when you have more than a few values and variables. Every statistics program is capable of calculating them easily for you. For instance, I put the eight scores into SPSS and got the following table as a result:

which confirms the calculations I did by hand above.

The standard deviation allows us to reach some conclusions about specific scores in our distribution. Assuming that the distribution of scores is normal or bell-shaped (or close to it!), the following conclusions can be reached:

- approximately 68% of the scores in the sample fall within one standard deviation of the mean

- approximately 95% of the scores in the sample fall within two standard deviations of the mean

- approximately 99.7% of the scores in the sample fall within three standard deviations of the mean

For instance, since the mean in our example is 20.875 and the standard deviation is 7.0799, we can use the statements above to estimate that approximately 95% of the scores will fall in the range of 20.875 - (2*7.0799) to 20.875 + (2*7.0799), or between 6.7152 and 35.0348. This kind of information is a critical stepping stone to enabling us to compare the performance of an individual on one variable with their performance on another, even when the variables are measured on entirely different scales.
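The interval arithmetic above can be sketched as follows, again assuming the eight example scores. The helper names `sd_interval` and `count_within` are hypothetical and are introduced only for this illustration. With just eight scores, the 68/95/99.7 percentages should not be expected to hold exactly. Here 7 of the 8 scores (87.5%) fall within two standard deviations, because the outlier 36 lies just above the upper limit.

```python
import statistics

scores = [15, 20, 21, 20, 36, 15, 25, 15]


def sd_interval(values, k):
    """Return (mean - k*SD, mean + k*SD) using the sample standard deviation."""
    m = statistics.mean(values)
    s = statistics.stdev(values)
    return m - k * s, m + k * s


def count_within(values, k):
    """Count how many values fall inside the mean +/- k standard deviations."""
    low, high = sd_interval(values, k)
    return sum(low <= x <= high for x in values)


for k in (1, 2, 3):
    low, high = sd_interval(scores, k)
    inside = count_within(scores, k)
    print(f"+/-{k} SD: {low:.4f} to {high:.4f}; {inside} of {len(scores)} scores inside")
```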


Indian Dermatol Online J, v.10(1), Jan-Feb 2019

Types of Variables, Descriptive Statistics, and Sample Size

Feroze Kaliyadan

Department of Dermatology, King Faisal University, Al Hofuf, Saudi Arabia

Vinay Kulkarni

Department of Dermatology, Prayas Amrita Clinic, Pune, Maharashtra, India

This short “snippet” covers three important aspects related to statistics: the concept of variables, the importance and practical aspects of descriptive statistics, and issues related to sampling (types of sampling and sample size estimation).

What is a variable?[1, 2] To put it in very simple terms, a variable is an entity whose value varies. A variable is an essential component of any statistical data. It is a feature of each member of a given sample or population, and its value can differ in quality or quantity from one member of the same sample or population to another. Variables either are the primary quantities of interest or act as practical substitutes for them. The importance of variables is that they help in the operationalization of concepts for data collection. For example, if you want to do an experiment based on the severity of urticaria, one option would be to measure the severity using a scale that grades the severity of itching. This becomes an operational variable. For a variable to be “good,” it needs to have properties such as good reliability and validity, low bias, feasibility/practicality, low cost, objectivity, clarity, and acceptance. Variables can be classified in various ways, as discussed below.

Quantitative vs qualitative

A variable can collect either qualitative or quantitative data. A variable differing in quantity is called a quantitative variable (e.g., weight of a group of patients), whereas a variable differing in quality is called a qualitative variable (e.g., the Fitzpatrick skin type).

A simple test that can be used to differentiate between qualitative and quantitative variables is the subtraction test. If you can subtract one value of the variable from another and get a meaningful result, then you are dealing with a quantitative variable (this of course will not apply to rating scales/ranks).

Quantitative variables can be either discrete or continuous.

Discrete variables are variables that can take only separate values, with no possible values between two adjacent ones (e.g., number of lesions in each patient in a sample of patients with urticaria).

Continuous variables, on the other hand, can take any value in between the two given values (e.g., duration for which the weals last in the same sample of patients with urticaria). One way of differentiating between continuous and discrete variables is to use the “mid-way” test. If, for every pair of values of a variable, a value exactly mid-way between them is meaningful, the variable is continuous. For example, two values for the time taken for a weal to subside can be 10 and 13 min. The mid-way value would be 11.5 min which makes sense. However, for a number of weals, suppose you have a pair of values – 5 and 8 – the midway value would be 6.5 weals, which does not make sense.

Under the umbrella of qualitative variables, you can have nominal/categorical variables and ordinal variables.

Nominal/categorical variables are, as the name suggests, variables which can be slotted into different categories (e.g., gender or type of psoriasis).

Ordinal variables or ranked variables are similar to categorical, but can be put into an order (e.g., a scale for severity of itching).

Dependent and independent variables

In the context of an experimental study, the dependent variable (also called outcome variable) is directly linked to the primary outcome of the study. For example, in a clinical trial on psoriasis, the PASI (psoriasis area severity index) would possibly be one dependent variable. The independent variable (sometimes also called explanatory variable) is something that is not affected by the experiment itself but that can be manipulated to affect the dependent variable. Other terms sometimes used synonymously include blocking variable, covariate, or predictor variable. Confounding variables are extra variables that can have an effect on the experiment. They are linked with both the dependent and independent variables and can cause spurious associations. For example, in a clinical trial for a topical treatment in psoriasis, the concomitant use of moisturizers might be a confounding variable. A control variable is a variable that must be kept constant during the course of an experiment.

Descriptive Statistics

Statistics can be broadly divided into descriptive statistics and inferential statistics.[3, 4] Descriptive statistics give a summary of the sample being studied without drawing any inferences based on probability theory. Even if the primary aim of a study involves inferential statistics, descriptive statistics are still used to give a general summary. When we describe the sample using tools such as frequency distribution tables, percentages, and measures of central tendency like the mean, we are talking about descriptive statistics. When we use a specific statistical test (e.g., the Mann–Whitney U-test) to compare the scores of two groups and express the result in terms of statistical significance, we are talking about inferential statistics. Descriptive statistics can help in summarizing data in the form of simple quantitative measures such as percentages or means, or in the form of visual summaries such as histograms and box plots.

Descriptive statistics can be used to describe a single variable (univariate analysis) or more than one variable (bivariate/multivariate analysis). In the case of more than one variable, descriptive statistics can help summarize relationships between variables using tools such as scatter plots.

Descriptive statistics can be broadly put under two categories:

- Sorting/grouping and illustration/visual displays

- Summary statistics.

Sorting and grouping

Sorting and grouping is most commonly done using frequency distribution tables. For continuous variables, it is generally better to use groups in the frequency table. Ideally, group sizes should be equal (except in extreme ends where open groups are used; e.g., age “greater than” or “less than”).

Another form of presenting frequency distributions is the “stem and leaf” diagram, which is considered to be a more accurate form of description because it preserves each individual data value.

Suppose the weight in kilograms of a group of 10 patients is as follows:

56, 34, 48, 43, 87, 78, 54, 62, 61, 59

The “stem” records the value of the “ten's” place (or higher) and the “leaf” records the value in the “one's” place [ Table 1 ].

Stem and leaf plot
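For readers who want to build such a plot themselves, here is a minimal Python sketch applied to the ten weights listed above. It assumes non-negative whole-number data, uses the tens digit(s) as the stem and the ones digit as the leaf, and prints the resulting plot. The function name `stem_and_leaf` is hypothetical.

```python
from collections import defaultdict

weights = [56, 34, 48, 43, 87, 78, 54, 62, 61, 59]  # kg, from the example above


def stem_and_leaf(values):
    """Return plot lines: stem = tens digit(s), leaves = sorted ones digits."""
    leaves = defaultdict(list)
    for v in sorted(values):
        stem, leaf = divmod(v, 10)
        leaves[stem].append(leaf)
    lines = []
    # Walk every stem from smallest to largest so that any empty stems still show as gaps.
    for stem in range(min(leaves), max(leaves) + 1):
        lines.append(f"{stem} | " + " ".join(str(leaf) for leaf in leaves[stem]))
    return lines


print("\n".join(stem_and_leaf(weights)))
# 3 | 4
# 4 | 3 8
# 5 | 4 6 9
# 6 | 1 2
# 7 | 8
# 8 | 7
```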

Illustration/visual display of data

The most common tools used for visual display include frequency diagrams, bar charts (for noncontinuous variables) and histograms (for continuous variables). Composite bar charts can be used to compare variables. For example, the frequency distribution in a sample population of males and females can be illustrated as given in Figure 1 .

Composite bar chart

A pie chart helps show how a total quantity is divided among its constituent categories. Scatter diagrams can be used to illustrate the relationship between two variables; for example, they can plot the global scores for improvement in a condition like acne given by the patient against those given by the doctor [Figure 2].

Scatter diagram

Summary statistics

The main tools used for summary statistics are broadly grouped into measures of central tendency (such as mean, median, and mode) and measures of dispersion or variation (such as range, standard deviation, and variance).

Imagine that the data below represent the weights of a sample of 15 pediatric patients arranged in ascending order:

30, 35, 37, 38, 38, 38, 42, 42, 44, 46, 47, 48, 51, 53, 86

Just having the raw data does not mean much to us, so we try to express it in terms of some values, which give a summary of the data.

The mean is basically the sum of all the values divided by the total number. In this case, we get a value of 45.

The problem is that extreme values (outliers), like “86” in this case, can skew the value of the mean. In such cases, we consider other values like the median, which is the point that divides the distribution into two equal halves. It is also referred to as the 50th percentile (50% of the values are above it and 50% are below it). In our example, since we have already arranged the values in ascending order, we find that the point which divides it into two equal halves is the 8th value: 42. When the total number of values is even, we take the two middle values and average them to obtain the median.

The mode is the most common data point. In our example, this would be 38. As in our case, the mode is not necessarily at the center of the distribution.
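The three summary values for the 15 pediatric weights, and the pull of the outlier on the mean, can be checked with Python's standard `statistics` module. The value 86 is removed here only to illustrate its influence. This is not a recommendation to discard outliers.

```python
import statistics

weights = [30, 35, 37, 38, 38, 38, 42, 42, 44, 46, 47, 48, 51, 53, 86]

print(statistics.fmean(weights))   # 45.0
print(statistics.median(weights))  # 42 (the 8th of the 15 ordered values)
print(statistics.mode(weights))    # 38

# Drop the outlier to see how strongly it pulls the mean upward.
without_outlier = [w for w in weights if w != 86]
print(round(statistics.fmean(without_outlier), 2))  # 42.07
print(statistics.median(without_outlier))           # 42.0
```

The mean falls by almost 3 kg when the single outlier is removed, while the median does not move. This is why the median is the more robust summary for skewed data.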

For skewed data, the median is often the most appropriate of the three measures of central tendency. In a “symmetric” unimodal distribution, all three are the same, whereas in skewed data the mean and median differ and are pulled toward the tail, with the mean lying further toward the tail than the median. For example, in Figure 3, a right-skewed distribution is seen (the direction of skew is named after the tail); the distribution of data values extends further on the right-hand (positive) side than on the left-hand side. The mean is typically greater than the median in such cases.

Location of mode, median, and mean

Measures of dispersion

The range gives the spread between the lowest and highest values. In our previous example, this will be 86-30 = 56.

A more valuable measure is the interquartile range. Quartiles are the three values that break the ordered distribution into four equal parts. The 25th percentile (first quartile) is the data point that separates the first one-fourth of the data from the remaining three-fourths. The 75th percentile (third quartile) is the data point that separates the first three-fourths of the distribution from the last one-fourth. The range between the 25th percentile and the 75th percentile is called the interquartile range.
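The range and interquartile range of the same weights can be computed with `statistics.quantiles`. There are several conventions for locating quartiles in a small sample. The sketch shows the standard library's default ("exclusive") method alongside its "inclusive" method, and other software may report slightly different values again.

```python
import statistics

weights = [30, 35, 37, 38, 38, 38, 42, 42, 44, 46, 47, 48, 51, 53, 86]

value_range = max(weights) - min(weights)  # 86 - 30 = 56
print(f"range = {value_range}")

for method in ("exclusive", "inclusive"):
    q1, q2, q3 = statistics.quantiles(weights, n=4, method=method)
    print(f"{method}: Q1 = {q1}, median = {q2}, Q3 = {q3}, IQR = {q3 - q1}")
# exclusive: Q1 = 38.0, median = 42.0, Q3 = 48.0, IQR = 10.0
# inclusive: Q1 = 38.0, median = 42.0, Q3 = 47.5, IQR = 9.5
```

Unlike the range, the interquartile range is unaffected by the single extreme value of 86.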

Variance is also a measure of dispersion. The larger the variance, the further the individual units are from the mean. Let us consider the same example we used for calculating the mean. The mean was 45.

For the first value (30), the deviation from the mean will be -15 (30 - 45); for the last value (86), the deviation will be 41. Similarly, we can calculate the deviations for all values in a sample. Adding these deviations and averaging them might seem to give a clue to the total dispersion, but because the deviations are a mix of negative and positive values, their total is always zero. To calculate the variance, this problem is overcome by adding the squares of the deviations. So the variance is the sum of the squared deviations divided by the total number in the population (for a sample, we divide by “n − 1”). To express the average dispersion in the original units of measurement, we take the square root of the variance, which is called the “standard deviation.”
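The variance calculation described above can be sketched for the 15 weights as follows. It shows that the raw deviations sum to zero. It also contrasts dividing by N (treating the data as a whole population) with dividing by n - 1 (treating them as a sample). The standard library's `pvariance` and `variance` functions implement these two versions.

```python
import math
import statistics

weights = [30, 35, 37, 38, 38, 38, 42, 42, 44, 46, 47, 48, 51, 53, 86]

n = len(weights)
mean = sum(weights) / n                         # 45.0
deviations = [w - mean for w in weights]        # 30 -> -15.0, ..., 86 -> 41.0
print(sum(deviations))                          # 0.0: positive and negative deviations cancel

ss = sum(d * d for d in deviations)             # sum of squared deviations: 2350.0
population_variance = ss / n                    # divide by N for a whole population
sample_variance = ss / (n - 1)                  # divide by n - 1 for a sample
sd = math.sqrt(sample_variance)                 # back in kilograms

print(round(population_variance, 2), round(sample_variance, 2), round(sd, 2))
# 156.67 167.86 12.96

assert math.isclose(population_variance, statistics.pvariance(weights))
assert math.isclose(sample_variance, statistics.variance(weights))
```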

The box plot

The box plot is a composite representation that portrays the median, the interquartile range, the range, and the outliers [Figure 4].

The concept of skewness and kurtosis

Skewness is a measure of the symmetry of a distribution. If the distribution curve is symmetric, it looks the same on either side of the central point; when this is not the case, it is said to be skewed. Kurtosis reflects the heaviness of the tails and hence the propensity for outliers. Distributions with high kurtosis tend to have “heavy tails,” indicating a larger number of outliers, whereas distributions with low kurtosis have light tails, indicating fewer outliers. There are formulas to calculate both skewness and kurtosis [Figures 5–8].

Positive skew

High kurtosis (positive kurtosis – also called leptokurtic)

Negative skew

Low kurtosis (negative kurtosis – also called “Platykurtic”)
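As one example of the skewness and kurtosis formulas mentioned above, the sketch below computes the moment-based skewness (g1) and excess kurtosis (g2) of the 15 pediatric weights. These are one common textbook definition. Statistical packages often apply small-sample adjustments, so their reported values may differ somewhat. Excess kurtosis subtracts 3, so a normal distribution scores about 0.

```python
weights = [30, 35, 37, 38, 38, 38, 42, 42, 44, 46, 47, 48, 51, 53, 86]


def central_moment(values, k):
    """k-th central moment: the mean of (x - mean)**k."""
    m = sum(values) / len(values)
    return sum((x - m) ** k for x in values) / len(values)


def skewness(values):
    """Moment coefficient of skewness, g1 = m3 / m2**1.5 (no small-sample correction)."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """g2 = m4 / m2**2 - 3, so a normal distribution gives roughly 0."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2 - 3


print(round(skewness(weights), 2))         # positive: the long tail is on the right (86)
print(round(excess_kurtosis(weights), 2))  # positive: heavier tails than a normal curve
```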

Sample Size

In an ideal study, we should be able to include all units of a particular population under study, something that is referred to as a census.[5, 6] This would remove the chance of sampling error (the difference between the outcome characteristics in a random sample and the true population values, something that is virtually unavoidable when you take a random sample). However, this would obviously not be feasible in most situations. Hence, we have to study a subset of the population to reach our conclusions. This representative subset is a sample, and we need to have sufficient numbers in this sample to draw meaningful and accurate conclusions and reduce the effect of sampling error.

We also need to know that sampling can be broadly divided into two types: probability sampling and nonprobability sampling. Examples of probability sampling include:

- simple random sampling (each member in a population has an equal chance of being selected)

- stratified random sampling (in nonhomogeneous populations, the population is divided into subgroups, followed by random sampling in each subgroup)

- systematic sampling (sampling is based on a systematic technique, e.g., every third person is selected for a survey)

- cluster sampling (similar to stratified sampling except that the clusters here are preexisting clusters, unlike stratified sampling where the researcher decides on the stratification criteria)

In nonprobability sampling, units in the population do not all have an equal chance of inclusion in the sample. It includes methods such as convenience sampling (e.g., a sample selected based on ease of access) and purposive sampling (where only people who meet specific criteria are included in the sample).
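The four probability sampling methods can be sketched in Python with the standard `random` module. Everything here is hypothetical: the population of 100 numbered units, the strata, the clusters, and the sample sizes are invented for illustration. For simplicity, equal numbers are drawn per stratum (proportional allocation is also common), and one-stage cluster sampling is assumed, in which every unit in a chosen cluster is included. A fixed seed is used only so that the run is reproducible.

```python
import random

rng = random.Random(42)              # fixed seed so the example is reproducible
population = list(range(1, 101))     # hypothetical units numbered 1..100


def simple_random_sample(units, n):
    """Every unit has the same chance of selection."""
    return rng.sample(units, n)


def systematic_sample(units, step):
    """Pick a random start, then take every `step`-th unit (e.g. every third person)."""
    start = rng.randrange(step)
    return units[start::step]


def stratified_sample(strata, n_per_stratum):
    """Draw a simple random sample separately within each subgroup."""
    return {name: rng.sample(units, n_per_stratum) for name, units in strata.items()}


def cluster_sample(clusters, n_clusters):
    """Randomly choose whole preexisting clusters and include all of their units."""
    chosen = rng.sample(sorted(clusters), n_clusters)
    return [unit for name in chosen for unit in clusters[name]]


# Hypothetical strata and clusters built from the same population.
strata = {"urban": population[:60], "rural": population[60:]}
clusters = {f"clinic {i}": population[i * 10:(i + 1) * 10] for i in range(10)}

print(simple_random_sample(population, 5))
print(systematic_sample(population, 3))
print(stratified_sample(strata, 3))
print(cluster_sample(clusters, 2))
```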

An accurate calculation of sample size is an essential aspect of good study design. It is important to calculate the sample size in advance, rather than having to rely on post hoc analysis. A sample size that is too small may make the study underpowered, whereas a sample size larger than necessary wastes resources.

We will first go through the sample size calculation for a hypothesis-based design (like a randomized controlled trial).

The important factors to consider for sample size calculation include the study design, type of statistical test, level of significance, power and effect size, variance (standard deviation for quantitative data), and expected proportions in the case of qualitative data. These values are based on previous data, either from previous studies or from the clinicians' experience. If the study is being conducted for the first time, a pilot study might be conducted to generate these data for further studies with a larger sample size. It is also important to know whether the data follow a normal distribution or not.

Two essential concepts we must understand are Type I and Type II errors. In a study that compares two groups, the null hypothesis assumes that there is no difference between the two groups and that any observed difference is due to sampling or experimental error. When we reject a null hypothesis that is actually true, we label it a Type I error; its probability is denoted “alpha” and corresponds to the significance level. In a Type II error, we fail to reject a null hypothesis when the alternative hypothesis is actually true; its probability is denoted “beta,” and “1 − β” is the power of the test. While there are no absolute rules, the conventionally accepted limits are 0.05 for α (corresponding to a significance level of 5%) and 0.20 for β (corresponding to a minimum recommended power of “1 − 0.20,” or 80%).

Effect size and minimal clinically relevant difference

For a clinical trial, the investigator will have to decide in advance what clinically detectable change is significant (for numerical data, this could be the anticipated outcome means in the two groups, whereas for categorical data, it could correspond to the proportions of successful outcomes in the two groups). While we will not go into the details of the formula for sample size calculation, some important points are as follows:

Where an effect size is involved, the required sample size is inversely proportional to the square of the effect size. In practice, this means that reducing the effect size to be detected increases the required sample size; for example, halving the effect size roughly quadruples it.

Reducing the level of significance (alpha) or increasing power (1-β) will lead to an increase in the calculated sample size.

An increase in variance of the outcome leads to an increase in the calculated sample size.
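To make these three points concrete, the Python sketch below uses one widely taught normal-approximation formula for the sample size per group when comparing two means with a two-sided test (given in the docstring). In that formula, delta is the minimal clinically relevant difference and sigma is the standard deviation of the outcome. The formula, the function name `n_per_group`, and the example numbers are assumptions for illustration. The authors do not say which formula they have in mind, and real studies should use the one that matches their design and test.

```python
import math
from statistics import NormalDist


def n_per_group(delta, sigma, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided comparison of two means.

    n = 2 * (z_{1-alpha/2} + z_{power})**2 * sigma**2 / delta**2, rounded up,
    where delta is the difference to detect and sigma the outcome's SD.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    return math.ceil(2 * (z_alpha + z_power) ** 2 * sigma ** 2 / delta ** 2)


print(n_per_group(delta=0.5, sigma=1))              # 63 (baseline)
print(n_per_group(delta=0.25, sigma=1))             # 252: halving the effect roughly quadruples n
print(n_per_group(delta=0.5, sigma=1, alpha=0.01))  # 94: a stricter alpha needs more patients
print(n_per_group(delta=0.5, sigma=1, power=0.90))  # 85: more power needs more patients
print(n_per_group(delta=0.5, sigma=2))              # 252: doubling the SD quadruples the variance
```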

Note that for estimation studies and surveys, the sample size calculation needs to consider some other factors too. These include an idea of the total population size (this generally does not make a major difference when the population size is above 20,000, so in situations where the population size is not known we can assume a population of 20,000 or more). The other factor is the “margin of error”: the amount of deviation, in percentage terms, that the investigators find acceptable. Regarding confidence levels, a 95% confidence level is ideally the minimum recommended for surveys too. Finally, we need an idea of the expected/crude prevalence, either from previous studies or from estimates.

Sample size calculation also needs to add corrections for patient drop-outs/lost-to-follow-up patients and missing records. An important point is that in some studies dealing with rare diseases, it may be difficult to achieve desired sample size. In these cases, the investigators might have to rework outcomes or maybe pool data from multiple centers. Although post hoc power can be analyzed, a better approach suggested is to calculate 95% confidence intervals for the outcome and interpret the study results based on this.
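For estimation studies and surveys, a commonly used approach (assumed here, since the authors do not give a formula) is n0 = z^2 × p × (1 − p) / e^2 for an expected prevalence p and margin of error e. This can optionally be adjusted with a finite population correction and then inflated for expected drop-outs. The function names and example numbers below are hypothetical. The sketch also illustrates the point about population size: for a population of 20,000, the corrected size is only slightly below the uncorrected one.

```python
import math
from statistics import NormalDist


def survey_sample_size(prevalence, margin, confidence=0.95, population=None):
    """Sample size to estimate a proportion within +/- margin at the given confidence."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)    # about 1.96 for 95%
    n0 = z ** 2 * prevalence * (1 - prevalence) / margin ** 2
    if population is not None:
        n0 = n0 / (1 + (n0 - 1) / population)             # finite population correction
    return math.ceil(n0)


def inflate_for_dropout(n, dropout_rate):
    """Enrol extra participants so that about n remain after the expected losses."""
    return math.ceil(n / (1 - dropout_rate))


print(survey_sample_size(0.5, 0.05))                      # 385 (p = 0.5 is the most conservative guess)
print(survey_sample_size(0.5, 0.05, population=20_000))   # 377
print(inflate_for_dropout(385, 0.10))                     # 428 if 10% are expected to drop out
```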

Financial support and sponsorship

Conflicts of interest.

There are no conflicts of interest.


68 Descriptive Statistics

Quantitative data are analyzed in two main ways: (1) Descriptive statistics, which describe the data (the characteristics of the sample); and (2) Inferential statistics. More formally, descriptive analysis “refers to statistically describing, aggregating, and presenting the constructs of interest or associations between these constructs” (Bhattacherjee, 2012, p. 119). All quantitative data analysis must provide some descriptive statistics. Inferential analysis, on the other hand, allows you to draw inferences from the data, i.e., make predictions or deductions about the population from which the sample is drawn.

Developing Descriptive Statistics

As mentioned above, descriptive statistics are used to summarize data (mean, mode, median, variance, percentages, ratios, standard deviation, range, skewness, and kurtosis). When one is describing or summarizing the distribution of a single variable, he/she/they are doing univariate descriptive statistics (e.g., mean age). However, if you are interested in describing the relationship between two variables, this is called bivariate descriptive statistics (e.g., mean age by gender), and if you are interested in more than two variables, you are presenting multivariate descriptive statistics (e.g., mean age by gender and rural/urban residence). You should always present descriptive statistics in your quantitative papers because they provide your readers with baseline information about variables in a dataset, which can indicate potential relationships between variables. In other words, they provide information on what kind of bivariate, multivariate, and inferential analyses might be possible. Box 10.4.1.1 provides some resources for generating and interpreting descriptive statistics. Next, we will discuss how to present and describe descriptive statistics in your papers.

Box 10.2 – Resources for Generating and Interpreting Descriptive Statistics

See UBC Research Commons for tutorials on how to generate and interpret descriptive statistics in SPSS: https://researchcommons.library.ubc.ca/introduction-to-spss-for-statistical-analysis/

See also this video for a STATA tutorial on how to generate descriptive statistics: Descriptive statistics in Stata® – YouTube

Presenting descriptive statistics

There are several ways of presenting descriptive statistics in your paper. These include graphs, tables, and measures of central tendency, dispersion, and association.

- Graphs: Quantitative data can be graphically represented in histograms, pie charts, scatter plots, line graphs, sociograms, and geographic information systems. You are likely familiar with the first four from your social statistics course, so let us discuss the latter two. Sociograms are tools for “charting the relationships within a group. It’s a visual representation of the social links and preferences that each person has” (Six Seconds, 2020). They are a quick way for researchers to represent and understand networks of relationships among people. Geographic information systems (GIS) help researchers to develop maps that represent the data according to location. GIS can be used when spatial data are part of your dataset and might be useful in research concerning environmental degradation, social demography, and migration patterns (see Higgins, 2017 for more details about GIS in social research).

There are specific ways of presenting graphs in your paper depending on the referencing style used. Since many social science disciplines use APA, in this chapter we demonstrate the presentation of data according to the APA referencing style. Box 10.4.2.3 below outlines some guidance for presenting graphs and other figures in your paper according to the APA format, while Box 10.4.2.4 provides tips for presenting descriptives for continuous variables.

Box 10.3 – Graphs and Figures in APA

Graphs and figures presented in APA must follow the guidelines linked below.

Source: APA. (2022). Figure Setup. American Psychological Association. https://apastyle.apa.org/style-grammar-guidelines/tables-figures/figures

Box 10.4 – Tips for Presenting Descriptives for Continuous Variables

- Remember, we do not calculate the means for Nominal and Ordinal Variables. We only describe the percentages for each attribute.

- For continuous variables (Ratio/Interval), we do not describe the percentages; we describe the means, range (min, max), standard errors, and standard deviations.

- Present all the continuous variables in one table

- Variables (not attributes) go in the rows

- Use separate columns for the descriptives (Mean, S.E., Std. Deviation, Min, Max, N).

To provide a practical illustration of the tips presented in Box 10.4.2.4, we provide some hypothetical data of what a descriptive table might look like in your paper (following APA guidance) in the following box.

Frequency distributions are tables that summarize the distribution of variables by reporting the number of cases contained in each category of the variable. Frequency distributions are best used to represent nominal and ordinal variables, but typically not continuous (interval and ratio) variables, because of the potentially large number of categories. APA has specific guidelines for presenting tables (including frequency tables, correlation tables, factor analysis tables, analysis of variance tables, and regression tables); see the following box.

Box 10.5 – Presenting Tables in APA

Tables presented in APA style are required to follow the APA guidelines outlined in the following link.

Source: APA. (2021). Table Setup. American Psychological Association. https://apastyle.apa.org/style-grammar-guidelines/tables-figures/tables

Measures of Central Tendency and Dispersion

Measures of central tendency are values that describe a set of data by identifying the central position within it. The main measures of central tendency are the mean, median, and mode. A sample mean is also a point estimate of the population mean, and a confidence interval expresses how precise that estimate is. Measures of dispersion tell how spread out a variable’s values are. Key measures of dispersion include the range, variance, and standard deviation; skewness, often reported alongside them, describes the asymmetry of a distribution rather than its spread. In your paper, you will typically report N (number of cases), M (mean), and SD (standard deviation).
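The statistics named above can be computed with Python’s standard `statistics` module. This is a hypothetical sketch with invented grades. It uses the sample (n - 1) variance and standard deviation and an adjusted sample skewness formula (the one used by Excel’s SKEW function); other software may report a slightly different skewness value.

```python
import statistics

def summarize(values):
    """Central tendency, dispersion, and shape for one continuous variable."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)          # sample SD (n - 1 denominator)
    z = [(x - mean) / sd for x in values]  # standardized scores
    # Adjusted Fisher-Pearson sample skewness (same formula as Excel's SKEW)
    skewness = n / ((n - 1) * (n - 2)) * sum(v ** 3 for v in z)
    return {
        "N": n,
        "mean": mean,
        "median": statistics.median(values),
        "mode": statistics.multimode(values),     # a list: a variable can have several modes
        "range": max(values) - min(values),
        "variance": statistics.variance(values),  # sample variance
        "SD": sd,
        "skewness": skewness,
    }

# Hypothetical course grades
grades = [55, 62, 68, 70, 70, 74, 78, 81, 85, 97]
for name, value in summarize(grades).items():
    print(f"{name:>8}: {value}")
```

For these grades, the mean (74) is above the median (72.0) and the skewness is positive, reflecting the single high score of 97.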

Consider the SPSS output presented in Box 10.4.3.1. Even though the SPSS output includes all the statistics you need for central tendency, you will need to convert the table so it fits APA standards (see Box 10.4.2.5 and Box 10.4.2.6). We encourage you to practice by converting Box 10.4.3.1 to the APA standard for presenting descriptive statistics.

See the UBC Research Commons for tutorials on how to generate and interpret measures of central tendency and dispersion in SPSS: https://researchcommons.library.ubc.ca/introduction-to-spss-for-statistical-analysis/

In your paper, you are most likely going to report N, M, and SD (see Box 10.3.3.2). You would simply report the findings as follows:

“The computed measures of central tendency and dispersion were as follows: N = 1,525, M = 72.56, SD = 6.52.”
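You can also generate the reporting line programmatically, which avoids transcription errors when copying values from SPSS output. This minimal sketch assumes APA conventions of spaces around the equals sign, two decimal places for M and SD, and a comma in numbers of 1,000 or more. The function name `apa_descriptives` is hypothetical, and plain text cannot show the italics APA requires for N, M, and SD.

```python
def apa_descriptives(n, mean, sd):
    """Format N, M, and SD as an APA-style plain-text string (italics not shown)."""
    return f"N = {n:,}, M = {mean:.2f}, SD = {sd:.2f}"

print(apa_descriptives(1525, 72.56, 6.52))  # N = 1,525, M = 72.56, SD = 6.52
```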

You should never leave your results without interpretation. Hence, you might add a sentence such as:

“The mean grade in this course is typical for the university, but the large standard deviation indicates that there was considerable variation around the mean.”

Remember that the mean (M) might not be the best measure of central tendency to report. The variable’s level of measurement dictates the most appropriate measure. For nominal variables, it is best to report the mode; for ordinal variables, the median; and for interval/ratio variables (as in our example above), the mean. However, if an interval/ratio variable is skewed, it is best to report the median.
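This rule of thumb can be expressed as a small function. It is a hypothetical sketch. `central_tendency` and its `level` and `skewed` parameters are invented for illustration, ordinal data are assumed to be coded as ordered integers (for example, 1 = strongly disagree to 5 = strongly agree), and deciding whether a variable is skewed is left to the analyst. For ordinal data it uses `statistics.median_low`, which always returns an observed category rather than a value halfway between two categories.

```python
import statistics

def central_tendency(values, level, skewed=False):
    """Return (measure name, value) following the level-of-measurement rule of thumb."""
    if level == "nominal":
        return "mode", statistics.mode(values)
    if level == "ordinal":
        # median_low picks an observed code instead of averaging two middle codes
        return "median", statistics.median_low(values)
    if level in ("interval", "ratio"):
        if skewed:
            return "median", statistics.median(values)
        return "mean", statistics.mean(values)
    raise ValueError(f"unknown level of measurement: {level!r}")

# Hypothetical examples
commute = ["bus", "car", "car", "bike", "car"]  # nominal
agreement = [2, 4, 4, 5, 3, 4]                  # ordinal codes 1-5
income_k = [28, 30, 32, 35, 38, 40, 250]        # ratio, right-skewed by one outlier

print(central_tendency(commute, "nominal"))              # ('mode', 'car')
print(central_tendency(agreement, "ordinal"))            # ('median', 4)
print(central_tendency(income_k, "ratio"))               # mean of about 64.7, pulled up by 250
print(central_tendency(income_k, "ratio", skewed=True))  # ('median', 35)
```

The income example shows why skew matters. A single high value drags the mean far above most observations, while the median stays at a typical value.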

APA. (2022). Figure Setup. American Psychological Association. https://apastyle.apa.org/style-grammar-guidelines/tables-figures/figures

APA. (2021). Table Setup. American Psychological Association. https://apastyle.apa.org/style-grammar-guidelines/tables-figures/tables

Bhattacherjee, A. (2012). Social Science Research: Principles, Methods, and Practices. Textbooks Collection. https://scholarcommons.usf.edu/cgi/viewcontent.cgi?referer=&httpsredir=1&article=1002&context=oa_textbooks

Higgins, A. (2017). Using GIS in social science research. Susplace: Sustainable Place Shaping. https://www.sustainableplaceshaping.net/using-gis-in-social-scientific-research/


Practicing and Presenting Social Research Copyright © 2022 by Oral Robinson and Alexander Wilson is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, except where otherwise noted.


18 Descriptive Research Examples

Descriptive research involves gathering data to provide a detailed account or depiction of a phenomenon without manipulating variables or conducting experiments.

A scholarly definition is:

“Descriptive research is defined as a research approach that describes the characteristics of the population, sample or phenomenon studied. This method focuses more on the ‘what’ rather than the ‘why’ of the research subject.” (Matanda, 2022, p. 63)

The key feature of descriptive research is that it merely describes phenomena; it neither manipulates variables nor attempts to determine cause and effect.

To determine cause and effect, a researcher would need to use an alternative methodology, such as an experimental research design.

Common approaches to descriptive research include:

- Cross-sectional research: A cross-sectional study gathers data on a population at a specific point in time. The resulting descriptive data could include categories (e.g., age or income brackets) that give a better understanding of the makeup of the population.

- Longitudinal research: Longitudinal studies return to a population to collect data at several different points in time, allowing researchers to describe changes in categories over time. However, because this design is descriptive, it cannot establish cause and effect (Erickson, 2017).
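The difference between the two approaches can be shown with a toy example. In this hypothetical Python sketch (the helper names and the income-bracket data are invented), a cross-sectional summary reports each bracket’s share at a single point in time. A longitudinal summary compares those shares across two waves of data collection. The change it reports is purely descriptive: it says what shifted, not why.

```python
from collections import Counter

def bracket_shares(responses):
    """Cross-sectional summary: percentage of respondents in each bracket at one time point."""
    counts = Counter(responses)
    total = len(responses)
    return {bracket: 100 * n / total for bracket, n in sorted(counts.items())}

def change_between_waves(wave_a, wave_b):
    """Longitudinal summary: change in each bracket's share between two waves, in percentage points."""
    share_a, share_b = bracket_shares(wave_a), bracket_shares(wave_b)
    brackets = sorted(set(share_a) | set(share_b))
    return {b: share_b.get(b, 0.0) - share_a.get(b, 0.0) for b in brackets}

# Hypothetical income brackets reported by the same five respondents in two waves
wave_1 = ["low", "low", "middle", "middle", "high"]
wave_2 = ["low", "middle", "middle", "middle", "high"]

print(bracket_shares(wave_1))                # {'high': 20.0, 'low': 40.0, 'middle': 40.0}
print(change_between_waves(wave_1, wave_2))  # {'high': 0.0, 'low': -20.0, 'middle': 20.0}
```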

Methods that could be used include:

- Surveys: For example, sending out a census survey to be completed at the exact same date and time by everyone in a population.

- Case study: For example, an in-depth description of a specific person or group of people that yields rich qualitative information. It can describe a phenomenon but cannot be generalized to other cases.

- Observational method: For example, a researcher taking field notes in an ethnographic study (Siedlecki, 2020).

Descriptive Research Examples

1. Understanding Autism Spectrum Disorder (Psychology): Researchers analyze various behavior patterns, cognitive skills, and social interaction abilities specific to children with Autism Spectrum Disorder to comprehensively describe the disorder’s symptom spectrum. This detailed description classifies it as descriptive research, rather than analytical or experimental, as it merely records what is observed without altering any variables or trying to establish causality.

2. Consumer Purchase Decision Process in E-commerce Marketplaces (Marketing): Researchers document and describe the factors that influence consumer decisions on online marketplaces. Because they do not attempt to predict future behavior or establish causes, and only describe observed behavior, this is descriptive research.

3. Impacts of Climate Change on Agricultural Practices (Environmental Studies): Scientists outline how climate change influences various agricultural practices by observing and meticulously categorizing its impacts on crop variability, farming seasons, and pest infestations. Because they do not manipulate any variables, this is descriptive research.

4. Work Environment and Employee Performance (Human Resources Management): A study of this nature, describing the correlation between various workplace elements and employee performance, falls under descriptive research as it merely narrates the observed patterns without altering any conditions or testing hypotheses.

5. Factors Influencing Student Performance (Education): Researchers describe various factors affecting students’ academic performance, such as studying techniques, parental involvement, and peer influence. The study is categorized as descriptive research because its principal aim is to depict facts as they stand without trying to infer causal relationships.

6. Technological Advances in Healthcare (Healthcare): This research describes and categorizes different technological advances (such as telemedicine, AI-enabled tools, digital collaboration) in healthcare without testing or modifying any parameters, making it an example of descriptive research.

7. Urbanization and Biodiversity Loss (Ecology): A study describing the impact of rapid urban expansion on biodiversity loss is an example of descriptive research. It observes the ongoing situation without manipulating it, offering a comprehensive depiction of the existing scenario rather than investigating cause-effect relationships.

8. Architectural Styles across Centuries (Art History): A study documenting and describing various architectural styles throughout centuries essentially represents descriptive research. It aims to narrate and categorize facts without exploring the underlying reasons or predicting future trends.

9. Media Usage Patterns among Teenagers (Sociology): When researchers document and describe the media consumption habits among teenagers, they are performing a descriptive research study. Their main intention is to observe and report the prevailing trends rather than establish causes or predict future behaviors.

10. Dietary Habits and Lifestyle Diseases (Nutrition Science): By describing the dietary patterns of different population groups and correlating them with the prevalence of lifestyle diseases, researchers perform descriptive research. They merely describe observed connections without altering any diet plans or lifestyles.

11. Shifts in Global Energy Consumption (Environmental Economics): When researchers describe the global patterns of energy consumption and how they’ve shifted over the years, they conduct descriptive research. The focus is on recording and portraying the current state without attempting to infer causes or predict the future.

12. Literacy and Employment Rates in Rural Areas (Sociology): A study that describes literacy rates in rural areas and correlates them with employment levels falls under descriptive research because it maps the situation without manipulating parameters or testing a hypothesis.

13. Women’s Representation in the Tech Industry (Gender Studies): A detailed description of the presence and roles of women across various sectors of the tech industry is a typical case of descriptive research. It merely observes and records the status quo without establishing causality or making predictions.

14. Impact of Urban Green Spaces on Mental Health (Environmental Psychology): When researchers document and describe the influence of green urban spaces on residents’ mental health, they are undertaking descriptive research. They seek purely to understand the current state rather than exploring cause-effect relationships.

15. Trends in Smartphone Usage among the Elderly (Gerontology): Research describing how elderly people use smartphones, including popular features and challenges encountered, is descriptive research. The researchers’ aim is merely to capture what is happening without manipulating variables or making predictions.

16. Shifts in Voter Preferences (Political Science): A study describing the shift in voter preferences during a particular electoral cycle is descriptive research. It simply records the preferences revealed without drawing causal inferences or suggesting future voting patterns.

17. Understanding Trust in Autonomous Vehicles (Transportation Psychology): This research describes public attitudes toward, and levels of trust in, autonomous vehicles. Because it merely depicts observed sentiments, without engineering any situations or offering predictions, it is considered descriptive research.

18. The Impact of Social Media on Body Image (Psychology): This research outlines individuals’ experiences and perceptions of body image in the era of social media. Because it observes these elements without altering any variables, it qualifies as descriptive research.

Descriptive vs Experimental Research

Descriptive research merely observes, records, and presents the actual state of affairs without manipulating any variables, while experimental research involves deliberately changing one or more variables to determine their effect on a particular outcome.

De Vaus (2001) succinctly explains that descriptive studies find out what is going on, whereas experimental research finds out why it is going on.

Simple definitions are below:

- Descriptive research is primarily about describing the characteristics or behaviors in a population, often through surveys or observational methods. It provides rich detail about a specific phenomenon but does not allow for conclusive causal statements; however, it can offer essential leads or ideas for further experimental research (Ivey, 2016).

- Experimental research, often conducted in controlled environments, aims to establish causal relationships by manipulating one or more independent variables and observing the effects on dependent variables (Devi, 2017; Mukherjee, 2019).

Experimental designs often involve a control group and random assignment. While they can provide compelling evidence for cause and effect, their artificial settings might not perfectly mirror real-world conditions, potentially limiting the generalizability of their findings.
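Random assignment, which descriptive research does not use, can be sketched with Python’s standard `random` module. This minimal illustration assumes two groups of (nearly) equal size and hypothetical participant IDs. A fixed `seed` makes the assignment reproducible for record-keeping. Real studies may use stratified or blocked randomization instead.

```python
import random

def randomly_assign(participants, seed=None):
    """Simple random assignment of participants to two groups of (nearly) equal size."""
    rng = random.Random(seed)      # a seeded generator makes the assignment reproducible
    shuffled = list(participants)  # copy so the caller's list is not reordered
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "treatment": shuffled[half:]}

# Hypothetical participant IDs P01 to P10
participants = [f"P{i:02d}" for i in range(1, 11)]
groups = randomly_assign(participants, seed=2024)
print(groups["control"])
print(groups["treatment"])
```

Every participant has the same chance of landing in either group. This is what lets an experiment attribute differences in outcomes to the manipulated variable rather than to pre-existing differences between groups.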

These two types of research are complementary, with descriptive studies often leading to hypotheses that are then tested experimentally (Devi, 2017; Zhao et al., 2021).

Benefits and Limitations of Descriptive Research

Descriptive research offers several benefits: it allows researchers to gather a vast amount of data and present a complete picture of the situation or phenomenon under study, even within large groups or over long time periods.

It’s also flexible in terms of the variety of methods used, such as surveys, observations, and case studies, and it can be instrumental in identifying patterns or trends and generating hypotheses (Erickson, 2017).

However, it also has its limitations.

The primary drawback is that it can’t establish cause-effect relationships, as no variables are manipulated. This lack of control over variables also opens up possibilities for bias, as researchers might inadvertently influence responses during data collection (De Vaus, 2001).