- Open access

- Published: 15 December 2015

Qualitative and mixed methods in systematic reviews

- David Gough 1

Systematic Reviews volume 4, Article number: 181 (2015)


Expanding the range of methods of systematic review

The logic of systematic reviews is very simple. We use transparent rigorous approaches to undertake primary research, and so we should do the same in bringing together studies to describe what has been studied (a research map) or to integrate the findings of the different studies to answer a research question (a research synthesis). We should not really need to use the term ‘systematic’ as it should be assumed that researchers are using and reporting systematic methods in all of their research, whether primary or secondary. Despite the universality of this logic, systematic reviews (maps and syntheses) are much better known in health research and for answering questions of the effectiveness of interventions (what works). Systematic reviews addressing other sorts of questions have been around for many years, as in, for example, meta ethnography [ 1 ] and other forms of conceptual synthesis [ 2 ], but only recently has there been a major increase in the use of systematic review approaches to answer other sorts of research questions.

There are probably several reasons for this broadening of approach. One may be that the increased awareness of systematic reviews has made people consider the possibilities for all areas of research. A second related factor may be that more training and funding resources have become available and increased the capacity to undertake such varied review work.

A third reason could be that some of the initial anxieties about systematic reviews have subsided. Initially, there were concerns that their use was being promoted by a new managerialism where reviews, particularly effectiveness reviews, were being used to promote particular ideological and theoretical assumptions and to indirectly control research agendas. However, others like me believe that explicit methods should be used to enable transparency of perspectives driving research and to open up access to and participation in research agendas and priority setting [ 3 ] as illustrated, for example, by the James Lind Alliance (see http://www.jla.nihr.ac.uk/ ).

A fourth possible reason for the development of new approaches is that effectiveness reviews have themselves broadened. Some ‘what works’ reviews can be open to criticism for only testing a ‘black box’ hypothesis of what works with little theorizing or any logic model about why any such hypothesis should be true and the mechanisms involved in such processes. There is now more concern to develop theory and to test how variables combine and interact. In primary research, qualitative strategies are advised prior to undertaking experimental trials [ 4 , 5 ] and similar approaches are being advocated to address complexity in reviews [ 6 ], in order to ask questions and use methods that address theories and processes that enable an understanding of both impact and context.

This Special Issue of Systematic Reviews Journal is providing a focus for these new methods of review whether these use qualitative review methods on their own or mixed together with more quantitative approaches. We are linking together with the sister journal Trials for this Special Issue as there is a similar interest in what qualitative approaches can and should contribute to primary research using experimentally controlled trials (see Trials Special Issue editorial by Claire Snowdon).

Dimensions of difference in reviews

Developing the range of methods to address different questions for review creates a challenge in describing and understanding such methods. There are many names and brands for the new methods which may or may not withstand the changes of historical time, but another way to comprehend the changes and new developments is to consider the dimensions on which the approaches to review differ [ 7 , 8 ].

One important distinction is the research question being asked and the associated paradigm underlying the method used to address this question. Research assumes a particular theoretical position and then gathers data within this conceptual lens. In some cases, this is a very specific hypothesis that is then tested empirically, and sometimes, the research is more exploratory and iterative with concepts being emergent and constructed during the research process. This distinction is often labelled as quantitative or positivist versus qualitative or constructionist. However, this can be confusing as much research taking a ‘quantitative’ perspective does not have the necessary numeric data to analyse. Even if it does have such data, this might be explored for emergent properties. Similarly, research taking a ‘qualitative’ perspective may include implicit quantitative themes in terms of the extent of different qualitative findings reported by a study.

Sandelowski and colleagues’ solution is to consider the analytic activity and whether this aggregates (adds up) or configures (arranges) the data [ 9 ]. In a randomized controlled trial and an effectiveness review of such studies, the main analysis is the aggregation of data using a priori non-emergent strategies with little iteration. However, there may also be post hoc analysis that is more exploratory in arranging (configuring) data to identify patterns as in, for example, meta regression or qualitative comparative analysis aiming to identify the active ingredients of effective interventions [ 10 ]. Similarly, qualitative primary research or reviews of such research are predominantly exploring emergent patterns and developing concepts iteratively, yet there may be some aggregation of data to make statements of generalizations of extent.

Even where the analysis is predominantly configuration, there can be a wide variation in the dimensions of difference of iteration of theories and concepts. In thematic synthesis [ 11 ], there may be few presumptions about the concepts that will be configured. In meta ethnography which can be richer in theory, there may be theoretical assumptions underlying the review question framing the analysis. In framework synthesis, there is an explicit conceptual framework that is iteratively developed and changed through the review process [ 12 , 13 ].

In addition to the variation in question, degree of configuration, complexity of theory, and iteration, there are many other dimensions of difference between reviews. Some of these differences follow on from the research questions being asked and the research paradigm being used, such as the approach to searching (exhaustive or based on exploration or saturation) and the appraisal of the quality and relevance of included studies (based more on risk of bias or more on meaning). Others include the breadth of the review question, the depth of analysis, and the extent of resultant ‘work done’ in terms of progressing a field of inquiry [ 7 , 8 ].

Mixed methods reviews

As one reason for the growth in qualitative synthesis is what it can add to quantitative reviews, it is not surprising that there is also growing interest in mixed methods reviews. This reflects similar developments in primary research in mixing methods to examine the relationship between theory and empirical data, which is of course the cornerstone of much research. But both primary and secondary mixed methods research face similar challenges in examining complex questions at different levels of analysis and in combining research findings that were investigated in different ways and may be based on very different epistemological assumptions [ 14 , 15 ].

Some mixed methods approaches are convergent in that they integrate different data and methods of analysis together at the same time [ 16 , 17 ]. Convergent systematic reviews could be described as having broad inclusion criteria (or two or more different sets of criteria) for methods of primary studies and have special methods for the synthesis of the resultant variation in data. Other reviews (and also primary mixed methods studies) are sequences of sub-reviews in that one sub-study using one research paradigm is followed by another sub-study with a different research paradigm. In other words, a qualitative synthesis might be used to explore the findings of a prior quantitative synthesis or vice versa [ 16 , 17 ].

An example of a predominantly aggregative sub-review followed by a configuring sub-review is the EPPI-Centre’s mixed methods review of barriers to healthy eating [ 18 ]. A sub-review on the effectiveness of public health interventions showed a modest effect size. A configuring review of studies of children and young people’s understanding and views about eating provided evidence that the public health interventions did not take good account of such user views research, and that the interventions most closely aligned to the user views were the most effective. The already mentioned qualitative comparative analysis to identify the active ingredients within interventions leading to impact could also be considered a qualitative configuring investigation of an existing quantitative aggregative review [ 10 ].

An example of a predominantly configurative review followed by an aggregative review is realist synthesis. Realist reviews examine the evidence in support of mid-range theories [ 19 ] with a first stage of a configuring review of what is proposed by the theory or proposal (what would need to be in place and what causal pathways would have to be effective for the outcomes proposed by the theory to be supported?) and a second stage searching for empirical evidence to test for those necessary conditions and effectiveness of the pathways. The empirical testing does not, however, use a standard ‘what works’ a priori methods approach but rather a more iterative seeking out of evidence that confirms or undermines the theory being evaluated [ 20 ].

Although sequential mixed methods approaches are considered to be sub-parts of one larger study, they could be separate studies as part of a long-term strategic approach to studying an issue. We tend to see both primary studies and reviews as one-off events, yet reviews are a way of examining what we know and what more we want to know as a strategic approach to studying an issue over time. If we are in favour of mixing paradigms of research to enable multiple levels and perspectives and mixing of theory development and empirical evaluation, then we are really seeking mixed methods research strategies rather than simply mixed methods studies and reviews.

Noblit G, Hare RD. Meta-ethnography: synthesizing qualitative studies. Newbury Park NY: Sage Publications; 1988.


Barnett-Page E, Thomas J. Methods for the synthesis of qualitative research: a critical review. BMC Med Res Methodol. 2009;9:59.


Gough D, Elbourne D. Systematic research synthesis to inform policy, practice and democratic debate. Soc Pol Soc. 2002;2002:1.

Moore GF, Audrey S, Barker M, Bond L, Bonell C, Hardeman W, et al. Process evaluation of complex interventions: Medical Research Council guidance 2015. BMJ. 2015;350:h1258

Candy B, Jone L, King M, Oliver S. Using qualitative evidence to help understand complex palliative care interventions: a novel evidence synthesis approach. BMJ Support Palliat Care. 2014;4:Supp A41–A42.


Noyes J, Gough D, Lewin S, Mayhew A, Michie S, Pantoja T, et al. A research and development agenda for systematic reviews that ask complex questions about complex interventions. J Clin Epidemiol. 2013;66:11.

Gough D, Oliver S, Thomas J. Introduction to systematic reviews. London: Sage; 2012.

Gough D, Thomas J, Oliver S. Clarifying differences between review designs and methods. Syst Rev. 2012;1:28.

Sandelowski M, Voils CJ, Leeman J, Crandlee JL. Mapping the mixed methods-mixed research synthesis terrain. J Mix Methods Res. 2012;6:4.

Thomas J, O’Mara-Eves A, Brunton G. Using qualitative comparative analysis (QCA) in systematic reviews of complex interventions: a worked example. Syst Rev. 2014;3:67.

Thomas J, Harden A. Methods for the thematic synthesis of qualitative research in systematic reviews. BMC Med Res Methodol. 2008;8:45.

Oliver S, Rees R, Clarke-Jones L, Milne R, Oakley AR, Gabbay J, et al. A multidimensional conceptual framework for analysing public involvement in health services research. Health Exp. 2008;11:72–84.

Booth A, Carroll C. How to build up the actionable knowledge base: the role of ‘best fit’ framework synthesis for studies of improvement in healthcare. BMJ Qual Saf. 2015. 2014-003642.

Brannen J. Mixed methods research: a discussion paper. NCRM Methods Review Papers, 2006. NCRM/005.

Creswell J. Mapping the developing landscape of mixed methods research. In: Teddlie C, Tashakkori A, editors. SAGE handbook of mixed methods in social & behavioral research. New York: Sage; 2011.

Morse JM. Principles of mixed method and multi-method research design. In: Teddlie C, Tashakkori A, editors. Handbook of mixed methods in social and behavioural research. London: Sage; 2003.

Pluye P, Hong QN. Combining the power of stories and the power of numbers: mixed methods research and mixed studies reviews. Annu Rev Public Health. 2014;35:29–45.

Harden A, Thomas J. Mixed methods and systematic reviews: examples and emerging issues. In: Tashakkori A, Teddlie C, editors. Handbook of mixed methods in the social and behavioral sciences. 2nd ed. London: Sage; 2010. p. 749–74.


Pawson R. Evidenced-based policy: a realist perspective. London: Sage; 2006.


Gough D. Meta-narrative and realist reviews: guidance, rules, publication standards and quality appraisal. BMC Med. 2013;11:22.


Author information

Authors and affiliations.

EPPI-Centre, Social Science Research Unit, University College London, London, WC1H 0NR, UK

David Gough


Corresponding author

Correspondence to David Gough.

Additional information

Competing interests.

The author is a writer and researcher in this area. The author declares that he has no other competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.


About this article

Cite this article.

Gough, D. Qualitative and mixed methods in systematic reviews. Syst Rev 4, 181 (2015). https://doi.org/10.1186/s13643-015-0151-y


Received: 13 October 2015

Accepted: 29 October 2015

Published: 15 December 2015

DOI: https://doi.org/10.1186/s13643-015-0151-y


Systematic Reviews

ISSN: 2046-4053


- Volume 15, Issue 4

- What is a qualitative synthesis?


- Correspondence to: Kate Seers, RCN Research Institute, School of Health & Social Studies, University of Warwick, CV4 7AL Warwick, UK; kate.seers{at}warwick.ac.uk

https://doi.org/10.1136/ebnurs-2012-100977


If you want to inform your practice with answers to questions such as ‘What is it like for people to suffer chronic pain?’ or ‘What are patients’ understanding and experience of hypertension and taking medication’ 1 or ‘What are older people's views about falling and taking part in a falls programme’ 2 then what might you do? You could read individual research papers, but often there are many papers and they can be difficult to track down and access. A qualitative synthesis (sometimes called a qualitative systematic review) systematically searches for research on a topic, and draws the findings from individual studies together. Although systematic reviews of quantitative data are well established, for qualitative research, this is a fairly new field, and methods are still developing.

One type of interpretive qualitative synthesis is meta-ethnography. This was described by Noblit and Hare 7 and has recently been identified as the most widely cited method used in qualitative synthesis 6 . Meta-ethnography can be used with different qualitative methodologies, not just ethnography. The actual themes or concepts or metaphors as described by the authors of the original research become the data for the meta-ethnography. Studies are compared by looking at these concepts and how they relate to each other. You ask questions such as ‘How does this concept in this study relate to concepts in another study’. The next step is ‘translation’. This has been described as ‘reinterpretation and transformation of the analytical and theoretical concepts provided by individual studies into one another’ 3 p. 79. A line of argument is built up; having compared studies, what can now be said—are there new concepts or a new understanding leading to a new theory, only apparent when you have looked at key concepts from the studies and how they might be connected. For example, a qualitative synthesis on medicine taking identified ‘resistance’ as the concept that best helped understand lay responses to prescribed medicines. 9

Another type of interpretative qualitative systematic review is Critical Interpretative Synthesis. 8 , 10 Key concepts come from both qualitative and quantitative studies; the method ‘explicitly allows the integration of qualitative and quantitative evidence through an interpretative process’. 8 p. 39.

So a qualitative synthesis is a developing area. It can be useful in drawing together qualitative research, and often enables new understandings of the data to emerge. This ensures findings from primary research can contribute, through a qualitative synthesis, to a greater understanding of an area, which may be relevant to your practice.

- Marshall IJ ,

- Wolfe CDA ,

- McInnes E ,

- Sandelowski M ,

- Barnett-Page E ,

- Campbell R ,

- Dixon-Woods M ,

- Britten N ,

- Agarwal S ,

Competing interests None.


Systematic reviews & evidence synthesis methods.


What are Evidence Syntheses?

According to the Royal Society, 'evidence synthesis' refers to the process of bringing together information from a range of sources and disciplines to inform debates and decisions on specific issues. Evidence syntheses generally include a methodical and comprehensive literature synthesis focused on a well-formulated research question. Their aim is to identify and synthesize all of the scholarly research on a particular topic, including both published and unpublished studies. Evidence syntheses are conducted in an unbiased, reproducible way to provide evidence for practice and policy-making, as well as to identify gaps in the research. Evidence syntheses may also include a meta-analysis, a more quantitative process of synthesizing and visualizing data retrieved from various studies.

Evidence syntheses are much more time-intensive than traditional literature reviews and require a multi-person research team. See this PredicTER tool to get a sense of a systematic review timeline (one type of evidence synthesis). Before embarking on an evidence synthesis, it's important to clearly identify your reasons for conducting one. For a list of types of evidence synthesis projects, see the Types of Evidence Synthesis tab.

How Does a Traditional Literature Review Differ From an Evidence Synthesis?

One commonly used form of evidence synthesis is a systematic review. This table compares a traditional literature review with a systematic review.


Reporting Standards

There are some reporting standards for evidence syntheses. These can serve as guidelines for protocol and manuscript preparation and journals may require that these standards are followed for the review type that is being employed (e.g. systematic review, scoping review, etc).

- PRISMA checklist: Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) is an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses.

- PRISMA-P Standards: An updated version of the original PRISMA standards for protocol development.

- PRISMA-ScR: Reporting guidelines for scoping reviews and evidence maps.

- PRISMA-IPD Standards: Extension of the original PRISMA standards for systematic reviews and meta-analyses of individual participant data.

- EQUATOR Network: The EQUATOR (Enhancing the QUAlity and Transparency Of health Research) Network is an international initiative that seeks to improve the reliability and value of published health research literature by promoting transparent and accurate reporting and wider use of robust reporting guidelines. They provide a list of various standards for reporting in systematic reviews.


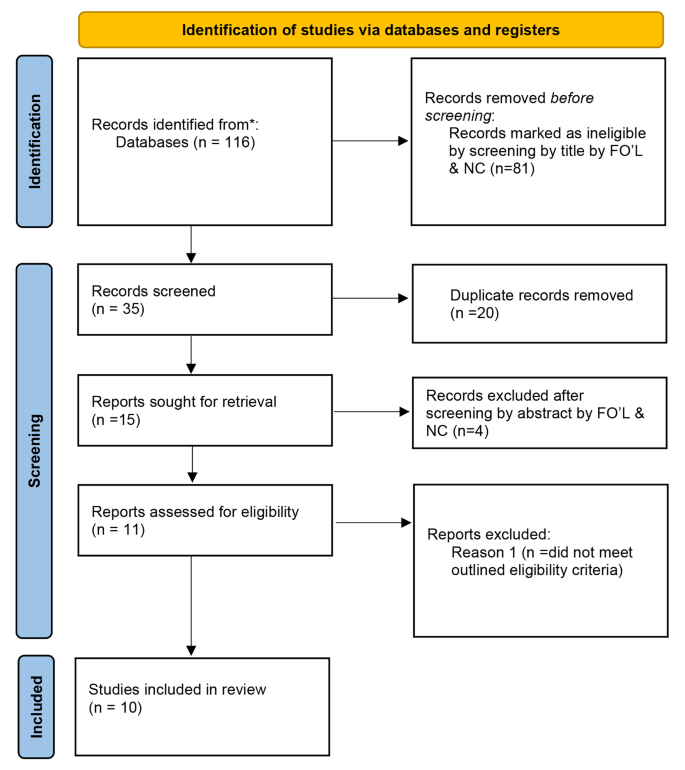

PRISMA Flow Diagram

The PRISMA flow diagram depicts the flow of information through the different phases of an evidence synthesis. It maps the search (number of records identified), screening (number of records included and excluded), and selection (reasons for exclusion). Many evidence syntheses include a PRISMA flow diagram in the published manuscript.
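The counts reported in a flow diagram follow from simple subtraction at each phase. As a minimal sketch (the class name `PrismaFlow`, its field names, the exclusion reasons, and the numbers are all hypothetical, chosen for illustration rather than taken from the PRISMA statement or any real review), the tally might be kept like this:

```python
from dataclasses import dataclass, field


@dataclass
class PrismaFlow:
    """Running tally of records through the phases of a review (illustrative only)."""

    identified: int             # records returned by all searches combined
    duplicates_removed: int     # records dropped during deduplication
    excluded_at_screening: int  # records excluded on title/abstract
    # Full-text exclusions, keyed by the reason that would be reported.
    excluded_full_text: dict[str, int] = field(default_factory=dict)

    @property
    def screened(self) -> int:
        """Records left to screen once duplicates are removed."""
        return self.identified - self.duplicates_removed

    @property
    def full_text_assessed(self) -> int:
        """Records that pass title/abstract screening."""
        return self.screened - self.excluded_at_screening

    @property
    def included(self) -> int:
        """Studies remaining after all full-text exclusions."""
        return self.full_text_assessed - sum(self.excluded_full_text.values())


# Invented numbers, purely to show the arithmetic.
flow = PrismaFlow(
    identified=1200,
    duplicates_removed=300,
    excluded_at_screening=800,
    excluded_full_text={"wrong population": 40, "wrong study design": 33},
)
print(flow.screened, flow.full_text_assessed, flow.included)  # 900 100 27
```

Keeping the full-text exclusions keyed by reason mirrors the diagram's requirement to report why studies were excluded, not just how many.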

See below for resources to help you generate your own PRISMA flow diagram.

- PRISMA Flow Diagram Tool

- PRISMA Flow Diagram Word Template


- Last Updated: Apr 22, 2024 7:04 PM

- URL: https://guides.lib.uci.edu/evidence-synthesis


- University of Texas Libraries


Systematic Reviews & Evidence Synthesis Methods


Once you have completed your analysis, you will want to both summarize and synthesize those results. You may have a qualitative synthesis, a quantitative synthesis, or both.

Qualitative Synthesis

In a qualitative synthesis, you describe for readers how the pieces of your work fit together. You will summarize, compare, and contrast the characteristics and findings, exploring the relationships between them. Further, you will discuss the relevance and applicability of the evidence to your research question. You will also analyze the strengths and weaknesses of the body of evidence. Focus on where the gaps are in the evidence and provide recommendations for further research.

Quantitative Synthesis

Whether or not your Systematic Review includes a full meta-analysis, there is typically some element of data analysis. The quantitative synthesis combines and analyzes the evidence using statistical techniques. This includes comparing methodological similarities and differences and potentially the quality of the studies conducted.
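One widely used statistical technique is inverse-variance (fixed-effect) pooling, in which each study's effect estimate is weighted by the inverse of its variance. The sketch below is illustrative only: this guide does not prescribe the method, the function names are hypothetical, and the input numbers are invented. A real meta-analysis would also need to assess heterogeneity and may call for a random-effects model instead.

```python
import math


def pool_fixed_effect(effects, std_errors):
    """Return the inverse-variance pooled estimate and its standard error."""
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need one standard error per effect estimate")
    # Each study is weighted by 1 / variance, so precise studies count more.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


def confidence_interval(estimate, std_error, z=1.96):
    """Approximate 95% confidence interval under a normal assumption."""
    return estimate - z * std_error, estimate + z * std_error


# Invented effect sizes and standard errors for three hypothetical studies.
effects = [0.10, 0.30, 0.25]
std_errors = [0.20, 0.10, 0.15]

pooled, pooled_se = pool_fixed_effect(effects, std_errors)
low, high = confidence_interval(pooled, pooled_se)
print(f"pooled effect = {pooled:.3f}, 95% CI = ({low:.3f}, {high:.3f})")
```

The pooled standard error is smaller than that of any single study, which is the statistical sense in which combining studies adds precision.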

Summarizing vs. Synthesizing

In a systematic review, researchers do more than summarize findings from identified articles. You will synthesize the information you want to include.

While a summary is a way of concisely relating important themes and elements from a larger work or works in a condensed form, a synthesis takes the information from a variety of works and combines them together to create something new.

Synthesis:

"The goal of a systematic synthesis of qualitative research is to integrate or compare the results across studies in order to increase understanding of a particular phenomenon, not to add studies together. Typically the aim is to identify broader themes or new theories – qualitative syntheses usually result in a narrative summary of cross-cutting or emerging themes or constructs, and/or conceptual models."

Denner, J., Marsh, E. & Campe, S. (2017). Approaches to reviewing research in education. In D. Wyse, N. Selwyn, & E. Smith (Eds.), The BERA/SAGE Handbook of educational research (Vol. 2, pp. 143-164). doi: 10.4135/9781473983953.n7

- Approaches to Reviewing Research in Education from Sage Knowledge

Data synthesis (Collaboration for Environmental Evidence Guidebook)

Interpreting findings and reporting conduct (Collaboration for Environmental Evidence Guidebook)

Interpreting results and drawing conclusions (Cochrane Handbook, Chapter 15)

Guidance on the conduct of narrative synthesis in systematic reviews (ESRC Methods Programme)

- Last Updated: Apr 9, 2024 8:57 PM

- URL: https://guides.lib.utexas.edu/systematicreviews

A systematic review and synthesis of the quantitative and qualitative evidence behind patient-centred goal setting in stroke rehabilitation

Affiliation.

- 1 School of Health and Population Sciences-Nursing and Physiotherapy, University of Birmingham, UK.

- PMID: 21441308

- DOI: 10.1177/0269215510394467

Objective: To map out from the literature the nature, extent and effects of application of patient-centred goal setting in stroke rehabilitation practice.

Design: Systematic review.

Data sources: A search was conducted in the Cochrane (Wiley), AMED, Medline (EBSCO), Embase, SPORTDiscus, Medline (Ovid) and CINAHL databases. A secondary search based on references from the preliminary search was undertaken.

Review methods: Quantitative and qualitative studies that included aspects of patient-centredness and goal setting in stroke patients from 1980 to June 2010 were collected. Studies were scrutinized for relevance and quality based on published methodology. The findings were synthesized by aggregating the themes from the qualitative studies and relating them to relevant findings from the quantitative studies.

Results: Eighteen qualitative, eight quantitative and one mixed method study conducted in stroke rehabilitation services ranging from acute to community rehabilitation were included. Themes that emerged were related to perceptions of patients and professionals regarding patient-centredness, nominal adoption of this concept, consequences of discrepancies in the perceptions and practice, related ethical conflicts, challenges to application and strategies to improve its application. The effects of following patient-centred goal-setting practice have been studied mostly with weak methodologies and studies show some benefit with psychological outcomes.

Conclusion: Patient-centred goal setting is minimally adopted in goal-setting practice due to various barriers. Since the effects of incorporating this concept have not been evaluated rigorously it is suggested that further research is essential to investigate its effect on patient outcomes.

Publication types

- Systematic Review

MeSH terms

- Attitude of Health Personnel*

- Databases, Bibliographic

- Evaluation Studies as Topic

- Patient Participation / psychology*

- Patient Preference*

- Patient-Centered Care

- Professional-Patient Relations*

- Qualitative Research

- Stroke Rehabilitation*

- Open access

- Published: 19 April 2024

Person-centered care assessment tool with a focus on quality healthcare: a systematic review of psychometric properties

- Lluna Maria Bru-Luna 1 ,

- Manuel Martí-Vilar 2 ,

- César Merino-Soto 3 ,

- José Livia-Segovia 4 ,

- Juan Garduño-Espinosa 5 &

- Filiberto Toledano-Toledano 5 , 6 , 7

BMC Psychology volume 12, Article number: 217 (2024)


The person-centered care (PCC) approach plays a fundamental role in ensuring quality healthcare. The Person-Centered Care Assessment Tool (P-CAT) is one of the shortest and simplest tools currently available for measuring PCC. The objective of this study was to conduct a systematic review of the evidence in validation studies of the P-CAT, taking the “Standards” as a frame of reference.

First, a systematic literature review was conducted following the PRISMA method. Second, a systematic descriptive literature review of validity tests was conducted following the “Standards” framework. The search strategy and information sources were obtained from the Cochrane, Web of Science (WoS), Scopus and PubMed databases. With regard to the eligibility criteria and selection process, a protocol was registered in PROSPERO (CRD42022335866), and articles had to meet criteria for inclusion in the systematic review.

A total of seven articles were included. Empirical evidence indicates that these validations offer a high number of sources related to test content, internal structure for dimensionality and internal consistency. A moderate number of sources pertain to internal structure in terms of test-retest reliability and the relationship with other variables. There is little evidence of response processes, internal structure in measurement invariance terms, and test consequences.

The various validations of the P-CAT are not framed in a structured, valid, theory-based procedural framework like the “Standards” are. This can affect clinical practice because people’s health may depend on it. The findings of this study show that validation studies continue to focus on the types of validity traditionally studied and overlook interpretation of the scores in terms of their intended use.


Person-centered care (PCC)

Quality care for people with chronic diseases, functional limitations, or both has become one of the main objectives of medical and care services. The person-centered care (PCC) approach is an essential element not only in achieving this goal but also in providing high-quality health maintenance and medical care [ 1 , 2 , 3 ]. In addition to guaranteeing human rights, PCC provides numerous benefits to both the recipient and the provider [ 4 , 5 ]. Additionally, PCC includes a set of necessary competencies for healthcare professionals to address ongoing challenges in this area [ 6 ]. PCC includes the following elements [ 7 ]: an individualized, goal-oriented care plan based on individuals’ preferences; an ongoing review of the plan and the individual’s goals; support from an interprofessional team; active coordination among all medical and care providers and support services; ongoing information exchange, education and training for providers; and quality improvement through feedback from the individual and caregivers.

There is currently a growing body of literature on the application of PCC. A good example of this is McCormack’s widely known mid-range theory [ 8 ], an internationally recognized theoretical framework for PCC and how it is operationalized in practice. This framework forms a guide for care practitioners and researchers in hospital settings. This framework is elaborated in PCC and conceived of as “an approach to practice that is established through the formation and fostering of therapeutic relationships between all care providers, service users, and others significant to them, underpinned by values of respect for persons, [the] individual right to self-determination, mutual respect, and understanding” [ 9 ].

Thus, as established by PCC, it is important to emphasize that reference to the person who is the focus of care refers not only to the recipient but also to everyone involved in a care interaction [ 10 , 11 ]. PCC ensures that professionals are trained in relevant skills and methodology since, as discussed above, carers are among the agents who have the greatest impact on the quality of life of the person in need of care [ 12 , 13 , 14 ]. Furthermore, due to the high burden of caregiving, it is essential to account for caregivers’ well-being. In this regard, studies on professional caregivers are beginning to suggest that the provision of PCC can produce multiple benefits for both the care recipient and the caregiver [ 15 ].

Despite a considerable body of literature and the frequent inclusion of the term in health policy and research [ 16 ], PCC involves several complications. There is no standard consensus on the definition of this concept [ 17 ], which includes problematic areas such as efficacy assessment [ 18 , 19 ]. In addition, the difficulty of measuring the subjectivity involved in identifying the dimensions of PCC and the infrequent use of standardized measures are acute issues [ 20 ]. These limitations motivated the creation of the Person-Centered Care Assessment Tool (P-CAT; [ 21 ]), which emerged from the need for a brief, economical, easily applied, versatile and comprehensive assessment instrument to provide valid and reliable measures of PCC for research purposes [ 21 ].

Person-centered care assessment tool (P-CAT)

There are several instruments that can measure PCC from different perspectives (i.e., the caregiver or the care recipient) and in different contexts (e.g., hospitals and nursing homes). However, from a practical point of view, the P-CAT is one of the shortest and simplest tools and contains all the essential elements of PCC described in the literature. It was developed in Australia to measure the approach of long-term residential settings to older people with dementia, although it is increasingly used in other healthcare settings, such as oncology units [ 22 ] and psychiatric hospitals [ 23 ].

Due to the brevity and simplicity of its application, the versatility of its use in different medical and care contexts, and its potential emic characteristics (i.e., constructs that can be cross-culturally applicable with reasonable and similar structure and interpretation; [ 24 ]), the P-CAT is one of the most widely used tests by professionals to measure PCC [ 25 , 26 ]. Since its creation, it has been adapted in countries separated by wide cultural and linguistic differences, such as Norway [ 27 ], Sweden [ 28 ], China [ 29 ], South Korea [ 30 ], Spain [ 25 ], and Italy [ 31 ].

The P-CAT comprises 13 items rated on a 5-point ordinal scale (from “strongly disagree” to “strongly agree”), with high scores indicating a high degree of person-centeredness. The scale consists of three dimensions: person-centered care (7 items), organizational support (4 items) and environmental accessibility (2 items). In the original study ( n = 220; [ 21 ]), the instrument showed satisfactory internal consistency for the total scale ( α = 0.84) and good test-retest reliability ( r = 0.66) over a one-week interval. A reliability generalization study conducted in 2021 [ 32 ], which estimated the internal consistency of the P-CAT and analyzed possible factors that could affect it, revealed that the mean α value for the 25 meta-analysis samples (some of which were part of the validations included in this study) was 0.81, and the only variable that had a statistically significant relationship with the reliability coefficient was the mean age of the sample. With respect to internal structure validity, three factors (56% of the total variance) were obtained, and content validity was assessed by experts, literature reviews and stakeholders [ 33 ].

Although not explicitly stated, the apparent commonality between validation studies of different versions of the P-CAT may reflect an influential decades-old validity framework that differentiates three categories: content validity, construct validity, and criterion validity [ 34 , 35 ]. However, a reformulation of the validity of the P-CAT within a modern framework, which would provide a different definition of validity, has not been performed.

Scale validity

Traditionally, validation is a process focused on the psychometric properties of a measurement instrument [ 36 ]. In the early 20th century, with the frequent use of standardized measurement tests in education and psychology, two definitions emerged: the first defined validity as the degree to which a test measures what it intends to measure, while the second described the validity of an instrument in terms of the correlation it presents with a variable [ 35 ].

However, in the past century, validity theory has evolved, leading to the understanding that validity should be based on specific interpretations for an intended purpose. It should not be limited to empirically obtained psychometric properties but should also be supported by the theory underlying the construct measured. Thus, to speak of classical or modern validity theory is to acknowledge an evolution in the understanding of the concept of validity. Therefore, a classical approach (called classical test theory, CTT) is specifically differentiated from a modern approach. In general, recent concepts associated with a modern view of validity are based on (a) a unitary conception of validity and (b) validity judgments based on inferences and interpretations of the scores of a measure [ 37 , 38 ]. This conceptual advance led to the creation of a guiding framework for obtaining evidence to support the use and interpretation of the scores obtained by a measure [ 39 ].

This purpose is addressed by the Standards for Educational and Psychological Testing (“Standards”), a guide created by the American Educational Research Association (AERA), the American Psychological Association (APA) and the National Council on Measurement in Education (NCME) in 2014 with the aim of providing guidelines to assess the validity of the interpretations of scores of an instrument based on their intended use. Two conceptual aspects stand out in this modern view of validity: first, validity is a unitary concept centered on the construct; second, validity is defined as “the degree to which evidence and theory support the interpretations of test scores for proposed uses of tests” [ 37 ]. Thus, the “Standards” propose several sources that serve as a reference for assessing different aspects of validity. The five sources of validity evidence are as follows [ 37 ]: test content, response processes, internal structure, relations to other variables and consequences of testing. According to AERA et al. [ 37 ], test content validity refers to the relationship of the administration process, subject matter, wording and format of test items to the construct they are intended to measure. It is measured predominantly with qualitative methods but without excluding quantitative approaches. The validity of the responses is based on analysis of the cognitive processes and interpretation of the items by respondents and is measured with qualitative methods. Internal structure validity is based on the interrelationship between the items and the construct and is measured by quantitative methods. Validity in terms of the relationship with other variables is based on comparison between the variable that the instrument intends to measure and other theoretically relevant external variables and is measured by quantitative methods. Finally, validity based on the consequences of testing analyzes the consequences, both intended and unintended, that may be due to a source of invalidity. It is measured mainly by qualitative methods.

Thus, although validity plays a fundamental role in providing a strong scientific basis for interpretations of test scores, validation studies in the health field have traditionally focused on content validity, criterion validity and construct validity and have overlooked the interpretation and use of scores [ 34 ].

The “Standards” are considered a suitable validity theory-based procedural framework for reviewing the validity of questionnaires due to their ability to analyze sources of validity from both qualitative and quantitative approaches and their evidence-based method [ 35 ]. Nevertheless, due to a lack of knowledge or the lack of a systematic description protocol, very few instruments to date have been reviewed within the framework of the “Standards” [ 39 ].

Current study

Although the P-CAT is one of the most widely used instruments by professionals and has seven validations [ 25 , 27 , 28 , 29 , 30 , 31 , 40 ], no analysis has been conducted of its validity within the framework of the “Standards”. That is, empirical evidence of the validity of the P-CAT has not been obtained in a way that helps to develop a judgment based on a synthesis of the available information.

A review of this type is critical given that some methodological issues seem to have not been resolved in the P-CAT. For example, although the multidimensionality of the P-CAT was identified in the study that introduced it, Bru-Luna et al. [ 32 ] recently stated that in adaptations of the P-CAT [ 25 , 27 , 28 , 29 , 30 , 40 ], the total score is used for interpretation and multidimensionality is disregarded. Thus, the multidimensionality of the original study was apparently not replicated. Bru-Luna et al. [ 32 ] also indicated that the internal structure validity of the P-CAT is usually underreported due to a lack of sufficiently rigorous approaches to establish with certainty how its scores are calculated.

The validity of the P-CAT, specifically its internal structure, appears to be unresolved. Nevertheless, substantive research and professional practice point to this measure as relevant to assessing PCC. This perception is contestable and judgment-based and may not be sufficient to assess the validity of the P-CAT from a cumulative and synthetic angle based on preceding validation studies. An adequate assessment of validity requires a model to conceptualize validity followed by a review of previous studies of the validity of the P-CAT using this model.

Therefore, the main purpose of this study was to conduct a systematic review of the evidence provided by P-CAT validation studies while taking the “Standards” as a framework.

The present study comprises two distinct but interconnected procedures. First, a systematic literature review was conducted following the PRISMA method ( [ 41 ]; Additional file 1; Additional file 2) with the aim of collecting all validations of the P-CAT that have been developed. Second, a systematic description of the validity evidence for each of the P-CAT validations found in the systematic review was developed following the “Standards” framework [ 37 ]. The work of Hawkins et al. [ 39 ], the first study to review validity sources according to the guidelines proposed by the “Standards”, was also used as a reference. Both provided conceptual and pragmatic guidance for organizing and classifying validity evidence for the P-CAT.

The procedure conducted in the systematic review is described below, followed by the procedure for examining the validity studies.

Systematic review

Search strategy and information sources

Initially, the Cochrane database was searched with the aim of identifying systematic reviews of the P-CAT. When no such reviews were found, subsequent preliminary searches were performed in the Web of Science (WoS), Scopus and PubMed databases. These databases play a fundamental role in recent scientific literature since they are the main sources of published articles that undergo high-quality content and editorial review processes [ 42 ]. The search formula was as follows. The original P-CAT article [ 21 ] was located, after which all articles that cited it through 2021 were identified and analyzed. This approach ensured the inclusion of all validations. No articles were excluded on the basis of language to avoid language bias [ 43 ]. Moreover, to reduce the effects of publication bias, a complementary search in Google Scholar was also performed to allow the inclusion of “gray” literature [ 44 ]. Finally, a manual search was performed through a review of the references of the included articles to identify other articles that met the search criteria but were not present in any of the aforementioned databases.

This process was conducted by one of the authors and corroborated by another using the Covidence tool [ 45 ]. A third author was consulted in case of doubt.

Eligibility criteria and selection process

The protocol was registered in PROSPERO, and the search was conducted according to these criteria. The identification code is CRD42022335866.

The articles had to meet the following criteria for inclusion in the systematic review: (a) a methodological approach to P-CAT validation, (b) experimental or quasi-experimental studies, (c) studies with any type of sample, and (d) studies in any language. We discarded studies that met at least one of the following exclusion criteria: (a) systematic reviews, bibliometric reviews or meta-analyses of the instrument or (b) studies published after 2021.

Data collection process

After the articles were selected, the most relevant information was extracted from each article. Fundamental data were recorded in an Excel spreadsheet for each of the sections: introduction, methodology, results and discussion. Information was also recorded about the limitations mentioned in each article as well as the practical implications and suggestions for future research.

Given the aim of the study, information was collected about the sources of validity of each study, including test content (judges’ evaluation, literature review and translation), response processes, internal structure (factor analysis, design, estimator, factor extraction method, factors and items, interfactor R, internal replication, effect of the method, and factor loadings), and relationships with other variables (convergent, divergent, concurrent and predictive validity) and consequences of measurement.

Description of the validity study

To assess the validity of the studies, an Excel table was used. Information was recorded for the seven articles included in the systematic review. The data were extracted directly from the texts of the articles and included information about the authors, the year of publication, the country where each P-CAT validation was produced and each of the five standards proposed in the “Standards” [ 37 ].

The validity source related to internal structure was divided into three sections to record information about dimensionality (e.g., factor analysis, design, estimator, factor extraction method, factors and items, interfactor R, internal replication, effect of the method, and factor loadings), reliability expression (i.e., internal consistency and test-retest) and the study of factorial invariance according to the groups into which it was divided (e.g., sex, age, profession) and the level of study (i.e., metric, intercepts). This approach allowed much more information to be obtained than relying solely on source validity based on internal structure. This division was performed by the same researcher who performed the previous processes.

Study selection and study characteristics

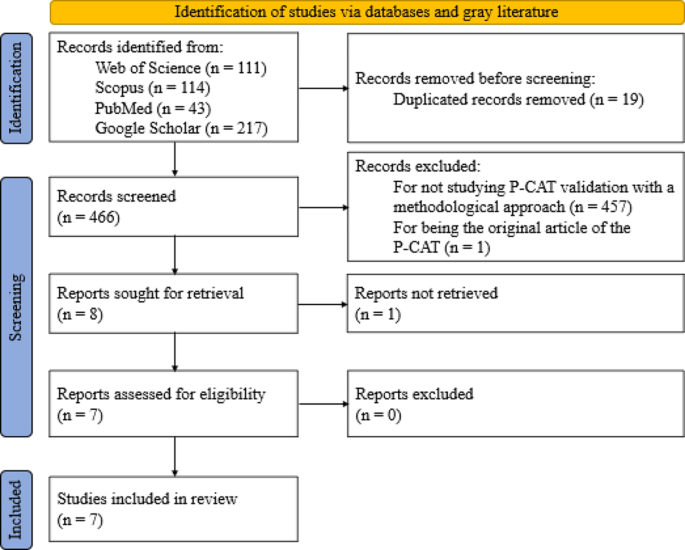

The systematic review process was developed according to the PRISMA methodology [ 41 ].

The WoS, Scopus, PubMed and Google Scholar databases were searched on February 12, 2022 and yielded a total of 485 articles. Of these, 111 were found in WoS, 114 in Scopus, 43 in PubMed and 217 in Google Scholar. In the first phase, the title and abstracts of all the articles were read. In this first screening, 457 articles were eliminated because they did not include studies with a methodological approach to P-CAT validation and one article was excluded because it was the original P-CAT article. This resulted in a total of 27 articles, 19 of which were duplicated in different databases and, in the case of Google Scholar, within the same database. This process yielded a total of eight articles that were evaluated for eligibility by a complete reading of the text. In this step, one of the articles was excluded due to a lack of access to the full text of the study [ 31 ] (although the original manuscript was found, it was impossible to access the complete content; in addition, the authors of the manuscript were contacted, but no reply was received). Finally, a manual search was performed by reviewing the references of the seven studies, but none were considered suitable for inclusion. Thus, the review was conducted with a total of seven articles.
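The screening arithmetic in this paragraph can be re-checked with a short sketch. All counts are taken from the text above; the variable names are illustrative only and are not part of the review protocol.

```python
# Counts reported in the text for the search of February 12, 2022.
records_by_source = {"WoS": 111, "Scopus": 114, "PubMed": 43, "Google Scholar": 217}

identified = sum(records_by_source.values())

# First screening (titles and abstracts).
excluded_not_validation = 457    # no methodological approach to P-CAT validation
excluded_original_article = 1    # the original P-CAT article itself
after_screening = identified - excluded_not_validation - excluded_original_article

# Duplicates across (and, for Google Scholar, within) databases.
duplicates = 19
assessed_full_text = after_screening - duplicates

# Full-text assessment.
no_full_text_access = 1          # the Italian version [31]
included = assessed_full_text - no_full_text_access

print(identified, after_screening, assessed_full_text, included)
# prints: 485 27 8 7
```

Each intermediate total matches the figure stated in the paragraph, so the reported flow is internally consistent.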

Of the seven studies, six were original validations in other languages. These included Norwegian [ 27 ], Swedish [ 28 ], Chinese (which has two validations [ 29 , 40 ]), Spanish [ 25 ], and Korean [ 30 ]. The study by Selan et al. [ 46 ] included a modification of the Swedish version of the P-CAT and explored the psychometric properties of both versions (i.e., the original Swedish version and the modified version).

The item selection and screening process are illustrated in detail in Fig. 1 .

Fig. 1 PRISMA 2020 flow diagram for new systematic reviews including database searches

Validity analysis

To provide a clear overview of the validity analyses, Table 1 descriptively shows the percentages of articles that provide information about the five standards proposed by the “Standards” guide [ 37 ].

The table shows a high number of validity sources related to test content and internal structure in relation to dimensionality and internal consistency, followed by a moderate number of sources for test-retest and relationship with other variables. A rate of 0% is observed for validity sources related to response processes, invariance and test consequences. Below, different sections related to each of the standards are shown, and the information is presented in more detail.

Evidence based on test content

The first standard, which focused on test content, was met in all articles (100%). Translation, which refers to the equivalence of content between the original language and the target language, was met in the six articles that conducted validation in another language and/or culture. These studies reported that the validations were translated by bilingual experts and/or experts in the area of care. In addition, three studies [ 25 , 29 , 40 ] reported that the translation process followed International Test Commission guidelines, such as those of Beaton et al. [ 47 ], Guillemin [ 48 ], Hambleton et al. [ 49 ], and Muñiz et al. [ 50 ]. Evaluation by judges, which referred to the relevance, clarity and importance of the content, was divided into two categories: expert evaluation (a panel of expert judges for each of the areas to consider in the evaluation instrument) and experiential evaluation (potential participants piloting the test). The first type of evaluation occurred in three of the articles [ 28 , 29 , 46 ], while the other occurred in two [ 25 , 40 ]. Only one of the articles [ 29 ] reported that the scale contained items that reflected the dimension described in the literature. The validity evidence related to the test content presented in each article can be found in Table 2 .

Evidence based on response processes

The second standard, related to the validity of the response process, was obtained according to the “Standards” from the analysis of individual responses: “questioning test takers about their performance strategies or response to particular items (…), maintaining records that monitor the development of a response to a writing task (…), documentation of other aspects of performance, like eye movement or response times…” [ 37 ] (p. 15). According to the analysis of the validity of the response processes, none of the articles complied with this evidence.

Evidence based on internal structure

The third standard, validity related to internal structure, was divided into three sections. First, the dimensionality of each study was examined in terms of factor analysis, design, estimator, factor extraction method, factors and items, interfactor R, internal replication, effect of the method, and factor loadings. Le et al. [ 40 ] used an exploratory-confirmatory design, while Sjögren et al. [ 28 ] used a confirmatory-exploratory design, assessing construct validity using confirmatory factor analysis (CFA) and investigating it further using exploratory factor analysis (EFA). The remaining articles employed only a single form of factor analysis: three employed EFA, and two employed CFA. Regarding the next point, only three of the articles reported the factor extraction method used, including Kaiser’s eigenvalue criterion, the scree plot test, parallel analysis and Velicer’s MAP test. Instrument validations yielded a total of two factors in five of the seven articles, while one yielded a single dimension [ 25 ] and the other yielded three dimensions [ 29 ], as in the original instrument. The interfactor R was reported only in the study by Zhong and Lou [ 29 ], whereas in the study by Martínez et al. [ 25 ], it could be easily obtained since it consisted of only one dimension. Internal replication was also calculated in the Spanish validation by randomly splitting the sample into two to test the correlations between factors. The effect of the method was not reported in any of the articles. This information is presented in Table 3 in addition to a summary of the factor loadings.

The second section examined reliability. All the studies reported internal consistency, in every case using Cronbach’s α coefficient for both the total scale and the subscales. McDonald’s ω coefficient was not used in any case. Four of the seven articles assessed test-retest reliability. Martínez et al. [ 25 ] conducted the retest after a period of seven days, while Le et al. [ 40 ] and Rokstad et al. [ 27 ] performed it between one and two weeks later and Sjögren et al. [ 28 ] allowed approximately two weeks to pass after the initial test.

The third section analyzed the calculation of invariance, which was not reported in any of the studies.

Evidence based on relationships with other variables

In the fourth standard, based on validity according to the relationship with other variables, the articles that reported it used only convergent validity (i.e., it was hypothesized that the variables related to the construct measured by the test—in this case, person-centeredness—were positively or negatively related to another construct). Discriminant validity hypothesizes that the variables related to the PCC construct are not correlated in any way with any other variable studied. No article (0%) measured discriminant evidence, while four (57%) measured convergent evidence [ 25 , 29 , 30 , 46 ]. Convergent validity was obtained through comparisons with instruments such as the Person-Centered Climate Questionnaire–Staff Version (PCQ-S), the Staff-Based Measures of Individualized Care for Institutionalized Persons with Dementia (IC), the Caregiver Psychological Elder Abuse Behavior Scale (CPEAB), the Organizational Climate (CLIOR) and the Maslach Burnout Inventory (MBI). In the case of Selan et al. [ 46 ], convergent validity was assessed on two items considered by the authors as “crude measures of person-centered care (i.e., external constructs) giving an indication of the instruments’ ability to measure PCC” (p. 4). Concurrent validity, which measures the degree to which the results of one test are or are not similar to those of another test conducted at more or less the same time with the same participants, and predictive validity, which allows predictions to be established regarding behavior based on comparison between the values of the instrument and the criterion, were not reported in any of the studies.

Evidence based on the consequences of testing

The fifth and final standard was related to the consequences of the test. It analyzed the consequences, both intended and unintended, of applying the test to a given sample. None of the articles presented explicit or implicit evidence of this.

The last two sources of validity can be seen in Table 4 .

Table 5 shows the results of the set of validity tests for each study according to the described standards.
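As a compact restatement of the results above, the following sketch tallies how many of the seven articles reported each source of validity evidence and converts the counts to percentages. The counts are those stated in the text; the dictionary layout and labels are an illustrative assumption, not the authors' extraction sheet.

```python
N_ARTICLES = 7

# Number of articles reporting each source of evidence, as stated in the text.
articles_reporting = {
    "test content": 7,
    "response processes": 0,
    "internal structure: dimensionality": 7,
    "internal structure: internal consistency": 7,
    "internal structure: test-retest": 4,
    "internal structure: invariance": 0,
    "relations with other variables": 4,
    "consequences of testing": 0,
}

# Percentage of articles per source, rounded to the nearest whole number.
coverage = {
    source: round(100 * count / N_ARTICLES)
    for source, count in articles_reporting.items()
}

for source, pct in coverage.items():
    print(f"{source}: {pct}%")
```

With these counts, test-retest reliability and relations with other variables each come out at 57%, which agrees with the percentage reported for convergent evidence.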

The main purpose of this article is to analyze the evidence of validity in different validation studies of the P-CAT. To gather all existing validations, a systematic review of all literature citing this instrument was conducted.

The publication of validation studies of the P-CAT has been constant over the years. Since the publication of the original instrument in 2010, seven validations have been published in other languages (taking into account the Italian version by Brugnolli et al. [ 31 ], which could not be included in this study) as well as a modification of one of these versions. The very unequal distribution of validations between languages and countries is striking. A recent systematic review [ 51 ] revealed that in Europe, the countries where the PCC approach is most widely used are the United Kingdom, Sweden, the Netherlands, Northern Ireland, and Norway. It has also been shown that the neighboring countries seem to exert an influence on each other due to proximity [ 52 ] such that they tend to organize healthcare in a similar way, as is the case for Scandinavian countries. This favors the expansion of PCC and explains the numerous validations we found in this geographical area.

Although this approach is conceived as an essential element of healthcare for most governments [ 53 ], PCC varies according to the different definitions and interpretations attributed to it, which can cause confusion in its application (e.g., between Norway and the United Kingdom [ 54 ]). Moreover, facilitators of or barriers to implementation depend on the context and level of development of each country, and financial support remains one of the main factors in this regard [ 53 ]. This fact explains why PCC is not globally widespread among all territories. In countries where access to healthcare for all remains out of reach for economic reasons, the application of this approach takes a back seat, as does the validation of its assessment tools. In contrast, in a large part of Europe or in countries such as China or South Korea that have experienced decades of rapid economic development, patients are willing to be involved in their medical treatment and enjoy more satisfying and efficient medical experiences and environments [ 55 ], which facilitates the expansion of validations of instruments such as the P-CAT.

Regarding validity testing, the guidelines proposed by the “Standards” [ 37 ] were followed. According to the analysis of the different validations of the P-CAT instrument, none of the studies used a structured validity theory-based procedural framework for conducting validation. The most frequently reported validity tests were on the content of the test and two of the sections into which the internal structure was divided (i.e., dimensionality and internal consistency).

In the present article, the most cited source of validity in the studies was the content of the test because most of the articles were validations of the P-CAT in other languages, and the authors reported that the translation procedure was conducted by experts in all cases. In addition, several of the studies employed International Test Commission guidelines, such as those by Beaton et al. [ 47 ], Guillemin [ 48 ], Hambleton et al. [ 49 ], and Muñiz et al. [ 50 ]. Several studies also assessed the relevance, clarity and importance of the content.

The third source of validity, internal structure, was the next most often reported, although it appeared unevenly among the three sections into which this evidence was divided. Dimensionality and internal consistency were reported in all studies, followed by test-retest consistency. In relation to the first section, factor analysis, a total of five EFAs and four CFAs were presented in the validations. Traditionally, EFA has been used in research to assess dimensionality and identify key psychological constructs, although this approach involves a number of inconveniences, such as difficulty testing measurement invariance and incorporating latent factors into subsequent analyses [ 56 ] or the major problem of factor loading matrix rotation [ 57 ]. Studies eventually began to employ CFA, a technique that overcame some of these obstacles [ 56 ] but had other drawbacks; for example, the strict requirement of zero cross-loadings often does not fit the data well, and misspecification of zero loadings tends to produce distorted factors [ 57 ]. Recently, exploratory structural equation modeling (ESEM) has been proposed. This technique is widely recommended both conceptually and empirically to assess the internal structure of psychological tools [ 58 ] since it overcomes the limitations of EFA and CFA in estimating their parameters [ 56 , 57 ].

In the next section, reliability, all of the articles reported Cronbach’s α reliability coefficient. Reliability is defined as a combination of systematic and random influences that determine the observed scores on a psychological test. Reporting the reliability measure ensures that item-based scores are consistent, that the tool’s responses are replicable and that they are not modified solely by random noise [ 59 , 60 ]. Currently, the most commonly employed reliability coefficient in studies with a multi-item measurement scale (MIMS) is Cronbach’s α [ 60 , 61 ].

Cronbach’s α [ 62 ] is based on numerous strict assumptions (e.g., the test must be unidimensional, factor loadings must be equal for all items and item errors should not covary) to estimate internal consistency. These assumptions are difficult to meet, and their violation may lead α to underestimate reliability [ 60 ]. One of the alternative measures to α that is increasingly recommended by the scientific literature is McDonald’s ω [ 63 ], a composite reliability measure. This coefficient is recommended for congeneric scales in which tau equivalence is not assumed. It has several advantages. For example, estimates of ω are usually robust when the estimated model contains more factors than the true model, even with small samples, and skewness in univariate item distributions produces smaller biases in ω than in α [ 59 ].
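To make the contrast between the two coefficients concrete, the following minimal sketch computes Cronbach’s α from raw item scores and McDonald’s ω from the standardized loadings of a one-factor model. The function names and the example values are hypothetical, and the ω function assumes uncorrelated errors and standardized loadings (so each residual variance is 1 − λ²); it is an illustration, not the procedure used in any of the reviewed validations.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha; `scores` is a list of respondents, each a list of k item scores."""
    k = len(scores[0])
    item_variances = [variance([row[i] for row in scores]) for i in range(k)]
    total_variance = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def omega_from_loadings(loadings):
    """McDonald's omega for a one-factor model with standardized loadings
    and uncorrelated errors (residual variance of item i = 1 - loading_i**2)."""
    common = sum(loadings) ** 2
    residual = sum(1 - l * l for l in loadings)
    return common / (common + residual)


# Hypothetical responses of five people to four 5-point items.
responses = [
    [4, 5, 4, 3],
    [2, 3, 2, 2],
    [5, 5, 4, 4],
    [3, 3, 3, 2],
    [1, 2, 2, 1],
]
alpha = cronbach_alpha(responses)

# Hypothetical unequal loadings, i.e., a congeneric (not tau-equivalent) scale.
omega = omega_from_loadings([0.8, 0.7, 0.6, 0.4])
```

When all loadings are equal, the two coefficients coincide at the population level; they diverge as loadings become unequal, which is the situation in which ω is recommended.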

The test-retest method was the next most commonly reported internal structure section in these studies. This type of reliability considers the consistency of the scores of a test between two measurements separated by a period [ 64 ]. It is striking that test-retest consistency does not have a prevalence similar to that of internal consistency since, unlike internal consistency, test-retest consistency can be assessed for practically all types of patient-reported outcomes. Some measurement experts even consider it a more relevant indicator of reliability than internal consistency since it plays a fundamental role in the calculation of parameters for health measures [ 64 ]. However, the literature provides little guidance regarding the assessment of this type of reliability.
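One simple way to express test-retest consistency is the Pearson correlation between total scores at the two administrations, the statistic reported as r in the original P-CAT study. The sketch below is a minimal illustration with hypothetical scores; it does not reproduce the analysis of any reviewed validation, and the function name is an assumption.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between paired scores from two administrations."""
    n = len(first)
    mean_1 = sum(first) / n
    mean_2 = sum(second) / n
    covariation = sum((a - mean_1) * (b - mean_2) for a, b in zip(first, second))
    spread_1 = sum((a - mean_1) ** 2 for a in first)
    spread_2 = sum((b - mean_2) ** 2 for b in second)
    return covariation / sqrt(spread_1 * spread_2)


# Hypothetical P-CAT total scores (possible range 13-65) for six staff members,
# measured at baseline and again after the retest interval.
baseline = [48, 52, 39, 60, 45, 55]
retest = [50, 49, 41, 58, 47, 53]

r = pearson_r(baseline, retest)
```

Values of r closer to 1 indicate that respondents keep a similar rank order across the two administrations.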

The internal structure section that was least frequently reported in the studies in this review was invariance. A lack of invariance refers to a difference between scores on a test that is not explained by group differences in the structure it is intended to measure [ 65 ]. The invariance of the measure should be emphasized as a prerequisite in comparisons between groups since “if scale invariance is not examined, item bias may not be fully recognized and this may lead to a distorted interpretation of the bias in a particular psychological measure” [ 65 ].

Evidence related to other variables was the next most reported source of validity in the studies included in this review. Specifically, the four studies that reported this evidence did so according to convergent validity and cited several instruments. None of the studies included evidence of discriminant validity, although this may be because there are currently several obstacles related to the measurement of this type of validity [ 66 ]. On the one hand, different definitions are used in the applied literature, which makes its evaluation difficult; on the other hand, the literature on discriminant validity focuses on techniques that require the use of multiple measurement methods, which often seem to have been introduced without sufficient evidence or are applied randomly.

Validity related to response processes was not reported by any of the studies. There are several methods to analyze this validity. These methods can be divided into two groups: “those that directly access the psychological processes or cognitive operations (think aloud, focus group, and interviews), compared to those which provide indirect indicators which in turn require additional inference (eye tracking and response times)” [ 38 ]. However, this validity evidence has traditionally been reported less frequently than others in most studies, perhaps because there are fewer clear and accepted practices on how to design or report these studies [ 67 ].

Finally, the consequences of testing were not reported in any of the studies. There is debate regarding this source of validity, with two main opposing streams of thought. On the one hand, some authors [ 68 , 69 ] suggest that consequences that appear after the application of a test should not derive from any source of test invalidity and that “adverse consequences only undermine the validity of an assessment if they can be attributed to a problem of fit between the test and the construct” (p. 6). In contrast, Cronbach [ 69 , 70 ] notes that adverse social consequences that may result from the application of a test may call into question the validity of the test. However, the potential risks that may arise from the application of a test should be minimized in any case, especially in regard to health assessments. To this end, it is essential that this aspect be assessed by instrument developers and that the experiences of respondents be protected through the development of comprehensive and informed practices [ 39 ].

This work is not without limitations. First, not all published validation studies of the P-CAT, such as the Italian version by Brugnolli et al. [ 31 ], were available. These studies could have provided relevant information. Second, many sources of validity could not be analyzed because the studies provided scant or no data, such as response processes [ 25 , 27 , 28 , 29 , 30 , 40 , 46 ], relationships with other variables [ 27 , 28 , 40 ], consequences of testing [ 25 , 27 , 28 , 29 , 30 , 40 , 46 ], or invariance [ 25 , 27 , 28 , 29 , 30 , 40 , 46 ] in the case of internal structure and interfactor R [ 27 , 28 , 30 , 40 , 46 ], internal replication [ 27 , 28 , 29 , 30 , 40 , 46 ] or the effect of the method [ 25 , 27 , 28 , 29 , 30 , 40 , 46 ] in the case of dimensionality. In the future, it is hoped that authors will become aware of the importance of validity, as shown in this article and many others, and provide data on unreported sources so that comprehensive validity studies can be performed.

The present work also has several strengths. The search was extensive, and many studies were obtained using three different databases, including WoS, one of the most widely used and authoritative databases in the world. This database includes a large number and variety of articles and, because it is curated by a human team, is not fully automated [ 71 , 72 , 73 ]. In addition, to prevent publication bias, gray literature was searched using engines such as Google Scholar so that unpublished research was not excluded [ 44 ]. Finally, linguistic bias was prevented by not limiting the search to articles published in only one or two languages, thus avoiding the overrepresentation of studies in one language and underrepresentation in others [ 43 ].

Conclusions

Validity is understood as the degree to which evidence and theory support the interpretations of instrument scores for their intended use [ 37 ]. From this perspective, the various validations of the P-CAT are not presented within a structured, valid, theory-based procedural framework such as that provided by the “Standards”. After integration and analysis of the results, it was observed that these validation reports offer a high number of sources of validity related to test content and to internal structure in terms of dimensionality and internal consistency, a moderate number of sources for internal structure in terms of test-retest reliability and for the relationship with other variables, and a very low number of sources for response processes, internal structure in terms of invariance, and test consequences.

Validity plays a fundamental role in ensuring a sound scientific basis for test interpretations because it provides evidence of the extent to which the data provided by the test are valid for the intended purpose. This can affect clinical practice as people’s health may depend on it. In this sense, the “Standards” are considered a suitable and valid theory-based procedural framework for studying this modern conception of questionnaire validity, which should be taken into account in future research in this area.

Although the P-CAT is one of the most widely used instruments for assessing PCC, as shown in this study, PCC has rarely been studied. The developers of measurement tests applied to the health care setting, on which the health and quality of life of many people may depend, should use this validity framework to reflect the clear purpose of the measurement. This approach is important because the equity of decision making by healthcare professionals in daily clinical practice may depend on the source of validity. Through a more extensive study of validity that includes the interpretation of scores in terms of their intended use, the applicability of the P-CAT, an instrument that was initially developed for long-term care homes for elderly people, could be expanded to other care settings. However, the findings of this study show that validation studies continue to focus on traditionally studied types of validity and overlook the interpretation of scores in terms of their intended use.

Data availability

All data relevant to the study were included in the article or uploaded as additional files. Additional template data extraction forms are available from the corresponding author upon reasonable request.

Abbreviations

American Educational Research Association

American Psychological Association

Confirmatory factor analysis

Organizational Climate

Caregiver Psychological Elder Abuse Behavior Scale

Exploratory factor analysis

Exploratory structural equation modeling

Staff-based Measures of Individualized Care for Institutionalized Persons with Dementia

Maslach Burnout Inventory

Multi-item measurement scale

Maximum likelihood

National Council on Measurement in Education

Person-Centered Care Assessment Tool

Person-centered care

Person-Centered Climate Questionnaire–Staff Version

Preferred Reporting Items for Systematic Reviews and Meta-Analyses

International Register of Systematic Review Protocols

Standards for Educational and Psychological Testing

Weighted least squares mean and variance adjusted

Web of Science

References

Institute of Medicine. Crossing the quality chasm: a new health system for the 21st century. Washington, DC: National Academy; 2001.

International Alliance of Patients’ Organizations. What is patient-centred healthcare? A review of definitions and principles. 2nd ed. London, UK: International Alliance of Patients’ Organizations; 2007.

World Health Organization. WHO global strategy on people-centred and integrated health services: interim report. Geneva, Switzerland: World Health Organization; 2015.

Britten N, Ekman I, Naldemirci Ö, Javinger M, Hedman H, Wolf A. Learning from Gothenburg model of person centred healthcare. BMJ. 2020;370:m2738.

Van Diepen C, Fors A, Ekman I, Hensing G. Association between person-centred care and healthcare providers’ job satisfaction and work-related health: a scoping review. BMJ Open. 2020;10:e042658.

Ekman N, Taft C, Moons P, Mäkitalo Å, Boström E, Fors A. A state-of-the-art review of direct observation tools for assessing competency in person-centred care. Int J Nurs Stud. 2020;109:103634.

American Geriatrics Society Expert Panel on Person-Centered Care. Person-centered care: a definition and essential elements. J Am Geriatr Soc. 2016;64:15–8.

McCormack B, McCance TV. Development of a framework for person-centred nursing. J Adv Nurs. 2006;56:472–9.

McCormack B, McCance T. Person-centred practice in nursing and health care: theory and practice. Chichester, England: Wiley; 2016.

Nolan MR, Davies S, Brown J, Keady J, Nolan J. Beyond person-centred care: a new vision for gerontological nursing. J Clin Nurs. 2004;13:45–53.

McCormack B, McCance T. Person-centred nursing: theory, models and methods. Oxford, UK: Wiley-Blackwell; 2010.

Abraha I, Rimland JM, Trotta FM, Dell’Aquila G, Cruz-Jentoft A, Petrovic M, et al. Systematic review of systematic reviews of non-pharmacological interventions to treat behavioural disturbances in older patients with dementia. The SENATOR-OnTop series. BMJ Open. 2017;7:e012759.

Anderson K, Blair A. Why we need to care about the care: a longitudinal study linking the quality of residential dementia care to residents’ quality of life. Arch Gerontol Geriatr. 2020;91:104226.

Bauer M, Fetherstonhaugh D, Haesler E, Beattie E, Hill KD, Poulos CJ. The impact of nurse and care staff education on the functional ability and quality of life of people living with dementia in aged care: a systematic review. Nurse Educ Today. 2018;67:27–45.

Smythe A, Jenkins C, Galant-Miecznikowska M, Dyer J, Downs M, Bentham P, et al. A qualitative study exploring nursing home nurses’ experiences of training in person centred dementia care on burnout. Nurse Educ Pract. 2020;44:102745.

McCormack B, Borg M, Cardiff S, Dewing J, Jacobs G, Janes N, et al. Person-centredness – the ‘state’ of the art. Int Pract Dev J. 2015;5:1–15.

Wilberforce M, Challis D, Davies L, Kelly MP, Roberts C, Loynes N. Person-centredness in the care of older adults: a systematic review of questionnaire-based scales and their measurement properties. BMC Geriatr. 2016;16:63.

Rathert C, Wyrwich MD, Boren SA. Patient-centered care and outcomes: a systematic review of the literature. Med Care Res Rev. 2013;70:351–79.

Sharma T, Bamford M, Dodman D. Person-centred care: an overview of reviews. Contemp Nurse. 2016;51:107–20.

Ahmed S, Djurkovic A, Manalili K, Sahota B, Santana MJ. A qualitative study on measuring patient-centered care: perspectives from clinician-scientists and quality improvement experts. Health Sci Rep. 2019;2:e140.

Edvardsson D, Fetherstonhaugh D, Nay R, Gibson S. Development and initial testing of the Person-centered Care Assessment Tool (P-CAT). Int Psychogeriatr. 2010;22:101–8.

Tamagawa R, Groff S, Anderson J, Champ S, Deiure A, Looyis J, et al. Effects of a provincial-wide implementation of screening for distress on healthcare professionals’ confidence and understanding of person-centered care in oncology. J Natl Compr Canc Netw. 2016;14:1259–66.

Degl’ Innocenti A, Wijk H, Kullgren A, Alexiou E. The influence of evidence-based design on staff perceptions of a supportive environment for person-centered care in forensic psychiatry. J Forensic Nurs. 2020;16:E23–30.

Hulin CL. A psychometric theory of evaluations of item and scale translations: fidelity across languages. J Cross Cult Psychol. 1987;18:115–42.

Martínez T, Suárez-Álvarez J, Yanguas J, Muñiz J. Spanish validation of the Person-centered Care Assessment Tool (P-CAT). Aging Ment Health. 2016;20:550–8.