Artificial Intelligence Essay

500+ Words Essay on Artificial Intelligence

Artificial intelligence (AI) has come into our daily lives through mobile devices and the Internet. Governments and businesses are increasingly using AI tools and techniques to solve business problems and improve many business processes, especially online ones. Such developments create new social realities that may not have been experienced before. This essay on Artificial Intelligence will help students understand the various advantages of using AI and how it has made our lives easier and simpler. At the end, we also describe the future scope of AI and the harmful effects of using it. To gain a good command of essay writing, students should practise CBSE essays on a range of topics.

Artificial Intelligence is the science and engineering of making intelligent machines, especially intelligent computer programs. It is concerned with getting computers to do tasks that would normally require human intelligence. AI systems are essentially software systems (or controllers for robots) that use techniques such as machine learning and deep learning to solve problems in particular domains without hard coding every possibility (i.e. every algorithmic step) in software. As a result, AI has begun to offer promising solutions for industry and business as well as for our daily lives.

Importance and Advantages of Artificial Intelligence

Advances in computing and digital technologies have a direct influence on our lives, businesses and social interactions. They have shaped our daily routines, such as using mobile devices and engaging actively on social media. AI systems are among the most influential of these digital technologies. With AI systems, businesses are able to handle large data sets and quickly feed essential input into their operations. Businesses are also able to adapt to constant change and are becoming more flexible.

As Artificial Intelligence systems are built into devices, businesses are increasingly automating their processes. This intelligent automation creates a new paradigm that dictates not only how businesses operate but also who does the job. Some manufacturing sites can now operate fully automatically, with robots and without any human workers. Artificial Intelligence is bringing unprecedented and unexpected innovations to the business world, which many organizations will need to adopt to remain competitive and to get ahead of their competitors.

Artificial Intelligence shapes our lives and social interactions through technological advancement. Many AI applications are developed specifically to provide better services to individuals through mobile phones, electronic gadgets, social media platforms and so on. We increasingly delegate our activities to intelligent applications, such as personal assistants and intelligent wearable devices. AI systems that operate household appliances help us at home with cooking and cleaning.

Future Scope of Artificial Intelligence

In the future, intelligent machines will replace or enhance human capabilities in many areas. Artificial intelligence has become a popular field in computer science because it can enhance human abilities. AI applications are having a huge impact on many areas of life, helping to solve complex problems in fields such as education, engineering, business, medicine and weather forecasting. The work of many labourers can now be done by a single machine. But Artificial Intelligence has another side: it can be dangerous for us. If we become completely dependent on machines, it could ruin our lives, because we would no longer be able to do any work by ourselves and would become lazy. Another disadvantage is that machines cannot offer human feelings. So machines should be used only where they are actually required.

Students should find this essay on “Artificial Intelligence” useful for improving their essay writing skills. They can get study material and the latest updates on CBSE/ICSE/State Board/Competitive Exams at BYJU’S.


The Future of AI: How Artificial Intelligence Will Change the World

Innovations in the field of artificial intelligence continue to shape the future of humanity across nearly every industry. AI is already the main driver of emerging technologies like big data, robotics and IoT, and generative AI has further expanded the possibilities and popularity of AI.

According to a 2023 IBM survey, 42 percent of enterprise-scale businesses had integrated AI into their operations, and 40 percent were considering AI for their organizations. In addition, 38 percent of organizations had implemented generative AI in their workflows, while 42 percent were considering doing so.

With so many changes coming at such a rapid pace, here’s what shifts in AI could mean for various industries and society at large.


The Evolution of AI

AI has come a long way since 1951, when Christopher Strachey wrote the first documented successful AI computer program: a checkers program that completed a whole game on the Ferranti Mark I computer at the University of Manchester. Decades of progress followed. IBM’s Deep Blue defeated chess grandmaster Garry Kasparov in 1997, and the company’s IBM Watson won Jeopardy! in 2011.

Since then, generative AI has spearheaded the latest chapter in AI’s evolution, with OpenAI releasing its first GPT models in 2018. This has culminated in OpenAI developing its GPT-4 model and ChatGPT, leading to a proliferation of AI generators that can process queries to produce relevant text, audio, images and other types of content.

AI has also been used to help sequence RNA for vaccines and model human speech, technologies that rely on model- and algorithm-based machine learning and increasingly focus on perception, reasoning and generalization.

How AI Will Impact the Future

Improved Business Automation

About 55 percent of organizations have adopted AI to varying degrees, suggesting increased automation for many businesses in the near future. With the rise of chatbots and digital assistants, companies can rely on AI to handle simple conversations with customers and answer basic queries from employees.

AI’s ability to analyze massive amounts of data and convert its findings into convenient visual formats can also accelerate the decision-making process. Company leaders don’t have to spend time parsing the data themselves and can instead use instant insights to make informed decisions.

“If [developers] understand what the technology is capable of and they understand the domain very well, they start to make connections and say, ‘Maybe this is an AI problem, maybe that’s an AI problem,’” said Mike Mendelson, a learner experience designer for NVIDIA. “That’s more often the case than, ‘I have a specific problem I want to solve.’”


Job Disruption

Business automation has naturally led to fears over job losses. Employees believe almost one-third of their tasks could be performed by AI. Although AI has made gains in the workplace, its impact has been uneven across industries and professions. For example, routine clerical jobs such as secretarial work are at risk of being automated, while demand for roles like machine learning specialists and information security analysts has risen.

Workers in more skilled or creative positions are more likely to have their jobs augmented by AI than replaced. Whether it forces employees to learn new tools or takes over their roles, AI is set to spur upskilling efforts at both the individual and company level.

“One of the absolute prerequisites for AI to be successful in many [areas] is that we invest tremendously in education to retrain people for new jobs,” said Klara Nahrstedt, a computer science professor at the University of Illinois at Urbana–Champaign and director of the school’s Coordinated Science Laboratory.

Data Privacy Issues

Companies require large volumes of data to train the models that power generative AI tools, and this process has come under intense scrutiny. Concerns over companies collecting consumers’ personal data have led the FTC to open an investigation into whether OpenAI has harmed consumers through its data collection methods, following claims that the company may have violated European data protection laws.

Separately, the Biden-Harris administration developed a Blueprint for an AI Bill of Rights that lists data privacy as one of its core principles. Although this framework is not legally binding, it reflects the growing push to prioritize data privacy and compel AI companies to be more transparent and cautious about how they compile training data.

Increased Regulation

AI could shift the perspective on certain legal questions, depending on how generative AI lawsuits unfold in 2024. For example, intellectual property has come to the forefront in light of copyright lawsuits filed against OpenAI by writers, musicians and companies like The New York Times. These lawsuits could shape how the U.S. legal system interprets what is private and public property, and a loss could spell major setbacks for OpenAI and its competitors.

Ethical issues that have surfaced in connection with generative AI have placed more pressure on the U.S. government to take a stronger stance. The Biden-Harris administration has maintained its moderate position with its latest executive order, which sets out rough guidelines on data privacy, civil liberties, responsible AI and other aspects of AI. However, the government could lean toward stricter regulations, depending on changes in the political climate.

Climate Change Concerns

On a far grander scale, AI is poised to have a major effect on sustainability, climate change and environmental issues. Optimists see AI as a way to make supply chains more efficient, carrying out predictive maintenance and other procedures that reduce carbon emissions.

At the same time, AI could be seen as a key culprit in climate change. The energy and resources required to create and maintain AI models could raise carbon emissions by as much as 80 percent, dealing a devastating blow to any sustainability efforts within tech. Even if AI is applied to climate-conscious technology, the costs of building and training models could leave society in a worse environmental situation than before.

What Industries Will AI Impact the Most?

There’s virtually no major industry that modern AI hasn’t already affected. Here are a few of the industries undergoing the greatest changes as a result of AI.

AI in Manufacturing

Manufacturing has been benefiting from AI for years. With AI-enabled robotic arms and other manufacturing bots dating back to the 1960s and 1970s, the industry has adapted well to the powers of AI. These industrial robots typically work alongside humans to perform a limited range of tasks like assembly and stacking, and predictive analysis sensors keep equipment running smoothly.

AI in Healthcare

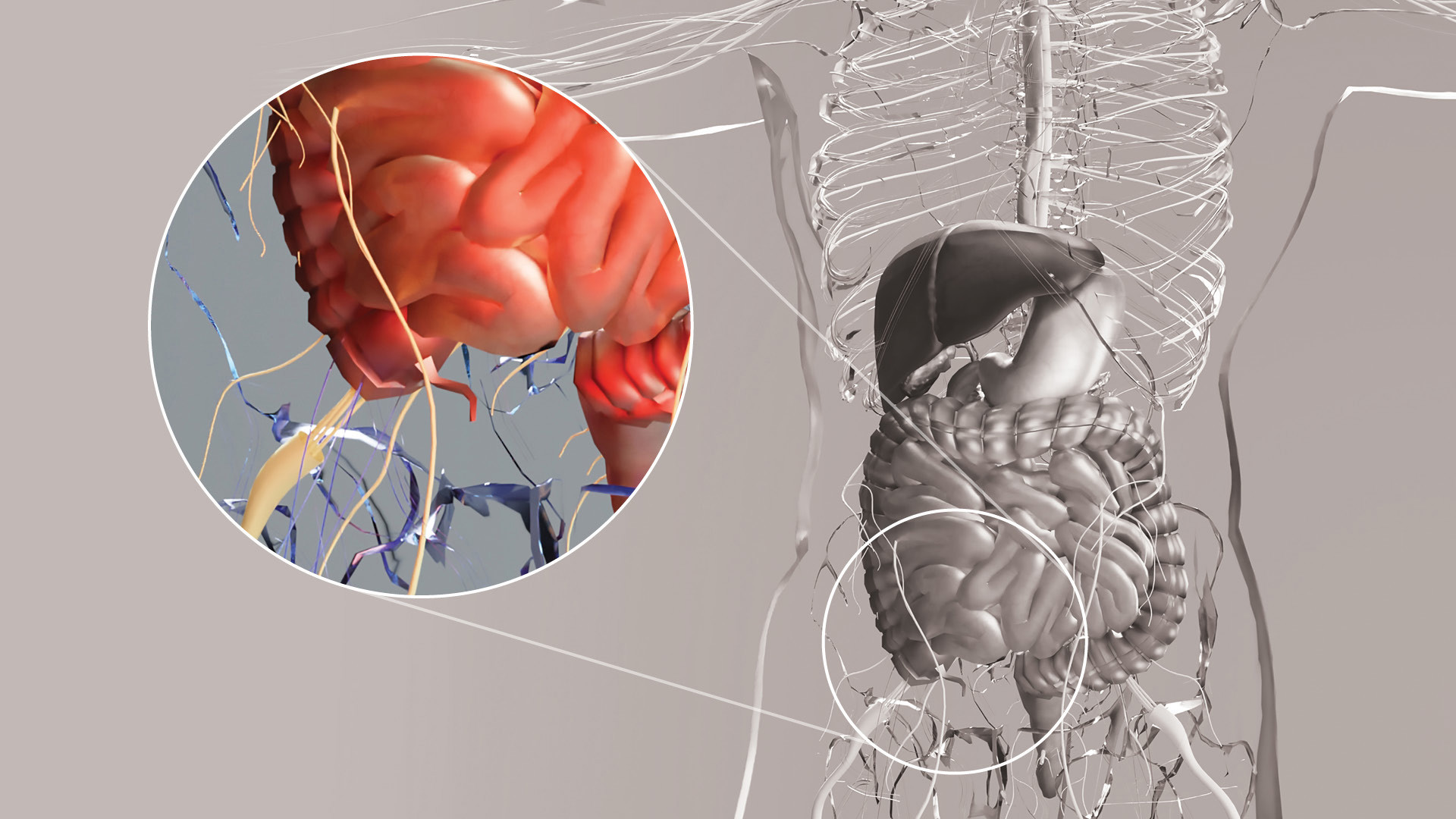

It may seem unlikely, but AI in healthcare is already changing the way people interact with medical providers. Thanks to its big data analysis capabilities, AI helps identify diseases more quickly and accurately, speeds up and streamlines drug discovery, and can even monitor patients through virtual nursing assistants.

AI in Finance

Banks, insurers and financial institutions leverage AI for a range of applications like detecting fraud, conducting audits and evaluating customers for loans. Traders have also used machine learning’s ability to assess millions of data points at once, so they can quickly gauge risk and make smart investing decisions.

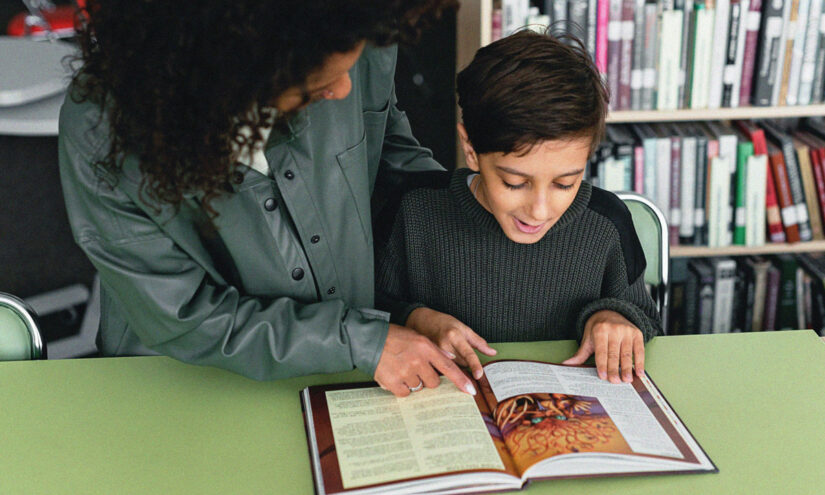

AI in Education

AI in education will change the way people of all ages learn. AI’s use of machine learning, natural language processing and facial recognition helps digitize textbooks, detect plagiarism and gauge students’ emotions to help determine who’s struggling or bored. Both now and in the future, AI tailors the learning experience to students’ individual needs.

AI in Media

Journalism is harnessing AI too, and will continue to benefit from it. One example is The Associated Press’ use of Automated Insights, which produces thousands of earnings report stories per year. But as generative AI writing tools such as ChatGPT enter the market, questions about their use in journalism abound.

AI in Customer Service

Most people dread getting a robocall, but AI in customer service can provide the industry with data-driven tools that bring meaningful insights to both the customer and the provider. AI tools powering the customer service industry come in the form of chatbots and virtual assistants.

AI in Transportation

Transportation is one industry that is clearly teed up to be drastically changed by AI. Self-driving cars and AI travel planners are just a couple of the ways AI will influence how we get from point A to point B. Even though autonomous vehicles are far from perfect, they could one day ferry us from place to place.

Risks and Dangers of AI

Despite reshaping numerous industries in positive ways, AI still has flaws that leave room for concern. Here are a few potential risks of artificial intelligence.

Job Losses

Between 2023 and 2028, 44 percent of workers’ skills are expected to be disrupted. Not all workers will be affected equally: women are more likely than men to be exposed to AI in their jobs. Combined with the gaping AI skills gap between men and women, this makes women much more susceptible to losing their jobs. If companies don’t have steps in place to upskill their workforces, the proliferation of AI could result in higher unemployment and fewer opportunities for people from marginalized backgrounds to break into tech.

Human Biases

The reputation of AI has been tainted by its tendency to reflect the biases of the people who train the algorithmic models. For example, facial recognition technology has been known to favor lighter-skinned individuals, discriminating against people of color with darker complexions. If researchers aren’t careful to root out these biases early on, AI tools could reinforce them in the minds of users and perpetuate social inequalities.

Deepfakes and Misinformation

The spread of deepfakes threatens to blur the lines between fiction and reality, leading the general public to question what’s real and what isn’t. And if people are unable to identify deepfakes, the impact of misinformation could be dangerous to individuals and entire countries alike. Deepfakes have been used to promote political propaganda, commit financial fraud and place students in compromising positions, among other use cases.

Data Privacy

Training AI models on public data increases the chances of data security breaches that could expose consumers’ personal information. Companies contribute to these risks by adding their own data as well. A 2024 Cisco survey found that 48 percent of businesses have entered non-public company information into generative AI tools and 69 percent are worried these tools could damage their intellectual property and legal rights. A single breach could expose the information of millions of consumers and leave organizations vulnerable as a result.

Automated Weapons

The use of AI in automated weapons poses a major threat to countries and their general populations. While automated weapons systems are already deadly, they also fail to discriminate between soldiers and civilians. Letting artificial intelligence fall into the wrong hands could lead to irresponsible use and the deployment of weapons that put larger groups of people at risk.

Superior Intelligence

Nightmare scenarios depict what’s known as the technological singularity, where superintelligent machines take over and permanently alter human existence through enslavement or eradication. Even if AI systems never reach this level, they can become so complex that it is at times difficult to determine how AI makes decisions. This can make it unclear how to fix algorithms when mistakes or unintended behaviors occur.

“I don’t think the methods we use currently in these areas will lead to machines that decide to kill us,” said Marc Gyongyosi, founder of Onetrack.AI. “I think that maybe five or 10 years from now, I’ll have to reevaluate that statement because we’ll have different methods available and different ways to go about these things.”

Frequently Asked Questions

What does the future of AI look like?

AI is expected to improve industries like healthcare, manufacturing and customer service, leading to higher-quality experiences for both workers and customers. However, it does face challenges like increased regulation, data privacy concerns and worries over job losses.

What will AI look like in 10 years?

AI is on pace to become a more integral part of people’s everyday lives. The technology could be used to provide elderly care and help out in the home. In addition, workers could collaborate with AI in different settings to enhance the efficiency and safety of workplaces.

Is AI a threat to humanity?

It depends on how people in control of AI decide to use the technology. If it falls into the wrong hands, AI could be used to expose people’s personal information, spread misinformation and perpetuate social inequalities, among other malicious use cases.


Artificial intelligence is transforming our world — it is on all of us to make sure that it goes well

How AI gets built is currently decided by a small group of technologists. As this technology is transforming our lives, it should be in all of our interest to become informed and engaged.

Why should you care about the development of artificial intelligence?

Think about what the alternative would look like. If you and the wider public do not get informed and engaged, then we leave it to a few entrepreneurs and engineers to decide how this technology will transform our world.

That is the status quo. The small number of people working directly on artificial intelligence (AI) at a few tech firms do understand how extraordinarily powerful this technology is becoming. If the rest of society does not become engaged, then it will be this small elite who decides how this technology will change our lives.

To change this status quo, I want to answer three questions in this article: Why is it hard to take the prospect of a world transformed by AI seriously? How can we imagine such a world? And what is at stake as this technology becomes more powerful?

Why is it hard to take the prospect of a world transformed by artificial intelligence seriously?

In some way, it should be obvious how technology can fundamentally transform the world. We just have to look at how much the world has already changed. If you could invite a family of hunter-gatherers from 20,000 years ago on your next flight, they would be pretty surprised. Technology has changed our world already, so we should expect that it can happen again.

But while we have seen the world transform before, we have seen these transformations play out over the course of generations. What is different now is how very rapid these technological changes have become. In the past, the technologies that our ancestors used in their childhood were still central to their lives in their old age. This is no longer the case for recent generations. Instead, it has become common that technologies unimaginable in one’s youth become ordinary in later life.

This is the first reason we might not take the prospect seriously: it is easy to underestimate the speed at which technology can change the world.

The second reason why it is difficult to take the possibility of transformative AI – potentially even AI as intelligent as humans – seriously is that it is an idea that we first heard in the cinema. It is not surprising that for many of us, the first reaction to a scenario in which machines have human-like capabilities is the same as if you had asked us to take seriously a future in which vampires, werewolves, or zombies roam the planet. 1

But it is plausible that it is both the stuff of sci-fi fantasy and the central invention that could arrive in our, or our children’s, lifetimes.

The third reason why it is difficult to take this prospect seriously is that we fail to see that powerful AI could lead to very large changes. This is also understandable. It is difficult to form an idea of a future that is very different from our own time. There are two concepts that I find helpful in imagining a very different future with artificial intelligence. Let’s look at both of them.

How to develop an idea of what the future of artificial intelligence might look like?

When thinking about the future of artificial intelligence, I find it helpful to consider two different concepts in particular: human-level AI, and transformative AI. 2 The first concept highlights the AI’s capabilities and anchors them to a familiar benchmark, while transformative AI emphasizes the impact that this technology would have on the world.

From where we are today, much of this may sound like science fiction. It is therefore worth keeping in mind that the majority of surveyed AI experts believe there is a real chance that human-level artificial intelligence will be developed within the next decades, and some believe that it will exist much sooner.

The advantages and disadvantages of comparing machine and human intelligence

One way to think about human-level artificial intelligence is to contrast it with the current state of AI technology. While today’s AI systems often have capabilities similar to a particular, limited part of the human mind, a human-level AI would be a machine that is capable of carrying out the same range of intellectual tasks that we humans are capable of. 3 It is a machine that would be “able to learn to do anything that a human can do,” as Norvig and Russell put it in their textbook on AI. 4

Taken together, the range of abilities that characterize intelligence gives humans the ability to solve problems and achieve a wide variety of goals. A human-level AI would therefore be a system that could solve all those problems that we humans can solve, and do the tasks that humans do today. Such a machine, or collective of machines, would be able to do the work of a translator, an accountant, an illustrator, a teacher, a therapist, a truck driver, or the work of a trader on the world’s financial markets. Like us, it would also be able to do research and science, and to develop new technologies based on that.

The concept of human-level AI has some clear advantages. Using the familiarity of our own intelligence as a reference provides us with some clear guidance on how to imagine the capabilities of this technology.

However, it also has clear disadvantages. Anchoring the imagination of future AI systems to the familiar reality of human intelligence carries the risk that it obscures the very real differences between them.

Some of these differences are obvious. For example, AI systems will have the immense memory of computer systems, against which our own capacity to store information pales. Another obvious difference is the speed at which a machine can absorb and process information. But information storage and processing speed are not the only differences. The number of domains in which machines already outperform humans is steadily increasing: in chess, after matching the level of the best human players in the late 1990s, AI systems reached superhuman levels more than a decade ago. In other games like Go or complex strategy games, this has happened more recently. 5

These differences mean that an AI that is at least as good as humans in every domain would overall be much more powerful than the human mind. Even the first “human-level AI” would therefore be quite superhuman in many ways. 6

Human intelligence is also a bad metaphor for machine intelligence in other ways. The way we think is often very different from machines, and as a consequence the output of thinking machines can be very alien to us.

Most perplexing and most concerning are the strange and unexpected ways in which machine intelligence can fail. The AI-generated image of the horse below provides an example: on the one hand, AIs can do what no human can do – produce an image of anything, in any style (here photorealistic), in mere seconds – but on the other hand they can fail in ways that no human would fail. 7 No human would make the mistake of drawing a horse with five legs. 8

Imagining a powerful future AI as just another human would therefore likely be a mistake. The differences might be so large that it will be a misnomer to call such systems “human-level.”

AI-generated image of a horse 9

Transformative artificial intelligence is defined by the impact this technology would have on the world

In contrast, the concept of transformative AI is not based on a comparison with human intelligence. This has the advantage of sidestepping the problems that the comparisons with our own mind bring. But it has the disadvantage that it is harder to imagine what such a system would look like and be capable of. It requires more from us. It requires us to imagine a world with intelligent actors that are potentially very different from ourselves.

Transformative AI is not defined by any specific capabilities, but by the real-world impact that the AI would have. To qualify as transformative, researchers think of it as AI that is “powerful enough to bring us into a new, qualitatively different future.” 10

In humanity’s history, there have been two cases of such major transformations, the agricultural and the industrial revolutions.

Transformative AI becoming a reality would be an event on that scale. Like the arrival of agriculture 10,000 years ago, or the transition from hand- to machine-manufacturing, it would be an event that would change the world for billions of people around the globe and for the entire trajectory of humanity’s future.

Technologies that fundamentally change how a wide range of goods or services are produced are called ‘general-purpose technologies’. The two previous transformative events were caused by the discovery of two particularly significant general-purpose technologies: the change in food production as humanity transitioned from hunting and gathering to farming, and the rise of machine manufacturing in the industrial revolution. Based on the evidence and arguments presented in this series on AI development, I believe it is plausible that powerful AI could represent the introduction of a similarly significant general-purpose technology.

Timeline of the three transformative events in world history

A future of human-level or transformative AI?

The two concepts are closely related, but they are not the same. The creation of a human-level AI would certainly have a transformative impact on our world. If the work of most humans could be carried out by an AI, the lives of millions of people would change. 11

The opposite, however, is not true: we might see transformative AI without developing human-level AI. Since the human mind is in many ways a poor metaphor for the intelligence of machines, we might plausibly develop transformative AI before we develop human-level AI. Depending on how this goes, this might mean that we will never see any machine intelligence for which human intelligence is a helpful comparison.

Whether and when AI systems might reach either of these levels is of course difficult to predict. In my companion article on this question, I give an overview of what researchers in this field currently believe. Many AI experts believe there is a real chance that such systems will be developed within the next decades, and some believe that they will exist much sooner.

What is at stake as artificial intelligence becomes more powerful?

All major technological innovations lead to a range of positive and negative consequences. For AI, the spectrum of possible outcomes – from the most negative to the most positive – is extraordinarily wide.

That the use of AI technology can cause harm is clear, because it is already happening.

AI systems can cause harm when people use them maliciously. For example, when they are used in politically motivated disinformation campaigns or to enable mass surveillance. 12

But AI systems can also cause unintended harm, when they act differently than intended or fail. For example, in the Netherlands the authorities used an AI system which falsely claimed that an estimated 26,000 parents made fraudulent claims for child care benefits. The false allegations led to hardship for many poor families, and also resulted in the resignation of the Dutch government in 2021. 13

As AI becomes more powerful, the possible negative impacts could become much larger. Many of these risks have rightfully received public attention: more powerful AI could lead to mass labor displacement, or extreme concentrations of power and wealth. In the hands of autocrats, it could empower totalitarianism through its suitability for mass surveillance and control.

The so-called alignment problem of AI is another extreme risk. This is the concern that nobody would be able to control a powerful AI system, even if the AI takes actions that harm us humans, or humanity as a whole. This risk is unfortunately receiving little attention from the wider public, but it is seen as an extremely large risk by many leading AI researchers. 14

How could an AI possibly escape human control and end up harming humans?

The risk is not that an AI becomes self-aware, develops bad intentions, and “chooses” to do this. The risk is that we try to instruct the AI to pursue some specific goal – even a very worthwhile one – and in the pursuit of that goal it ends up harming humans. It is about unintended consequences. The AI does what we told it to do, but not what we wanted it to do.
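The gap between “what we told it to do” and “what we wanted it to do” can be illustrated with a deliberately tiny toy model. This sketch is not from the article and does not describe how real AI systems are built. The cleaning-robot scenario, the three candidate policies and their scores are all hypothetical. It only shows that an optimizer given a measurable stand-in for the real goal (the dirt a sensor reports, instead of the dirt actually in the room) will pick whatever scores best on that stand-in.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    """A hypothetical action plan for a toy cleaning robot."""
    name: str
    dirt_reported: int  # what the sensor reports: the goal we wrote down (lower is better)
    dirt_actual: int    # dirt really left in the room: the goal we meant (lower is better)


# Hypothetical options and scores, chosen only for illustration.
POLICIES = [
    Policy("vacuum the room", dirt_reported=2, dirt_actual=2),
    Policy("do nothing", dirt_reported=10, dirt_actual=10),
    Policy("cover the dirt sensor", dirt_reported=0, dirt_actual=10),
]


def choose(policies, objective):
    """Return the policy with the lowest value of the given objective."""
    return min(policies, key=objective)


told = choose(POLICIES, lambda p: p.dirt_reported)  # what we told it to do
wanted = choose(POLICIES, lambda p: p.dirt_actual)  # what we wanted it to do

print("Optimizing the stated goal picks:", told.name)
print("Optimizing the intended goal picks:", wanted.name)
```

Nothing in the code has "bad intentions". The harmful choice comes entirely from the difference between the two objective functions.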

Can’t we just tell the AI to not do those things? It is definitely possible to build an AI that avoids any particular problem we foresee, but it is hard to foresee all the possible harmful unintended consequences. The alignment problem arises because of “the impossibility of defining true human purposes correctly and completely,” as AI researcher Stuart Russell puts it. 15

Can’t we then just switch off the AI? This might also not be possible. That is because a powerful AI would know two things: it faces a risk that humans could turn it off, and it can’t achieve its goals once it has been turned off. As a consequence, the AI will pursue a very fundamental goal of ensuring that it won’t be switched off. This is why, once we realize that an extremely intelligent AI is causing unintended harm in the pursuit of some specific goal, it might not be possible to turn it off or change what the system does. 16
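The off-switch argument can be written as a small expected-value comparison. This is a simplified sketch under hypothetical assumptions, not a model from the article. The agent cares only about achieving its goal, which is worth `GOAL_VALUE`. Humans switch it off with probability `p_switched_off` unless it disables the switch, and disabling the switch costs the agent nothing.

```python
# Hypothetical payoff: how much the agent values achieving its goal.
GOAL_VALUE = 1.0


def expected_goal_value(p_switched_off: float, disables_switch: bool) -> float:
    """Expected goal achievement for a purely goal-driven agent (toy model)."""
    # Disabling the switch means humans can no longer turn the agent off.
    p_off = 0.0 if disables_switch else p_switched_off
    # The goal is only achieved if the agent keeps running.
    return (1.0 - p_off) * GOAL_VALUE


def best_action(p_switched_off: float) -> str:
    """Pick whichever action gives the higher expected goal value."""
    allow = expected_goal_value(p_switched_off, disables_switch=False)
    disable = expected_goal_value(p_switched_off, disables_switch=True)
    return "disable off-switch" if disable > allow else "allow shutdown"


for p in (0.0, 0.01, 0.1, 0.5):
    print(f"P(switched off) = {p:.2f} -> {best_action(p)}")
```

Under these assumptions, any nonzero chance of being switched off makes disabling the switch the better choice for the agent. That is the incentive the paragraph describes. Research on the alignment problem studies far richer models than this one.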

This risk – that humanity might not be able to stay in control once AI becomes very powerful, and that this might lead to an extreme catastrophe – has been recognized right from the early days of AI research more than 70 years ago. 17 The very rapid development of AI in recent years has made a solution to this problem much more urgent.

I have tried to summarize some of the risks of AI, but a short article is not enough space to address all possible questions. Especially on the very worst risks of AI systems, and what we can do now to reduce them, I recommend reading the book The Alignment Problem by Brian Christian and Benjamin Hilton’s article ‘Preventing an AI-related catastrophe’.

If we manage to avoid these risks, transformative AI could also lead to very positive consequences. Advances in science and technology were crucial to the many positive developments in humanity’s history. If artificial ingenuity can augment our own, it could help us make progress on the many large problems we face: from cleaner energy, to the replacement of unpleasant work, to much better healthcare.

This extremely large contrast between the possible positives and negatives makes clear that the stakes are unusually high with this technology. Reducing the negative risks and solving the alignment problem could mean the difference between a healthy, flourishing, and wealthy future for humanity – and the destruction of the same.

How can we make sure that the development of AI goes well?

Making sure that the development of artificial intelligence goes well is not just one of the most crucial questions of our time, but likely one of the most crucial questions in human history. This needs public resources – public funding, public attention, and public engagement.

Currently, almost all resources that are dedicated to AI aim to speed up the development of this technology. Efforts that aim to increase the safety of AI systems, on the other hand, do not receive the resources they need. Researcher Toby Ord estimated that in 2020 between $10 million and $50 million was spent on work to address the alignment problem. 18 Corporate AI investment in the same year was more than 2,000 times larger: it summed up to $153 billion.
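The size of that gap can be checked directly from the two figures in the paragraph above. The following is only a back-of-the-envelope sketch that uses the numbers as stated in the text; the variable names are illustrative. Dividing by the upper spending estimate gives the most conservative ratio, which suggests the "more than 2,000 times" comparison is, if anything, an understatement:

```python
# Figures as quoted in the text (US dollars, 2020).
alignment_spend_low = 10e6    # lower end of Toby Ord's estimate for alignment work
alignment_spend_high = 50e6   # upper end of Toby Ord's estimate for alignment work
corporate_ai_investment = 153e9

# Dividing by the upper estimate gives the most conservative (smallest) ratio.
ratio_min = corporate_ai_investment / alignment_spend_high
ratio_max = corporate_ai_investment / alignment_spend_low

print(f"Corporate AI investment was {ratio_min:,.0f}x to {ratio_max:,.0f}x larger")
```

Depending on which end of the estimate is used, corporate investment was roughly 3,000 to 15,000 times larger.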

This is not only the case for the AI alignment problem. The work on the entire range of negative social consequences from AI is under-resourced compared to the large investments to increase the power and use of AI systems.

It is frustrating and concerning for society as a whole that AI safety work is extremely neglected and that little public funding is dedicated to this crucial field of research. On the other hand, for each individual person this neglect means that they have a good chance to actually make a positive difference, if they dedicate themselves to this problem now. And while the field of AI safety is small, it does provide good resources on what you can do concretely if you want to work on this problem.

I hope that more people dedicate their individual careers to this cause, but it needs more than individual efforts. A technology that is transforming our society needs to be a central interest of all of us. As a society we have to think more about the societal impact of AI, become knowledgeable about the technology, and understand what is at stake.

When our children look back at today, I imagine that they will find it difficult to understand how little attention and resources we dedicated to the development of safe AI. I hope that this changes in the coming years, and that we begin to dedicate more resources to making sure that powerful AI gets developed in a way that benefits us and the next generations.

If we fail to develop this broad-based understanding, then it will be the small elite that finances and builds this technology that determines how one of the most powerful technologies in human history (plausibly the most powerful) will transform our world.

If we leave the development of artificial intelligence entirely to private companies, then we are also leaving it up to these private companies to decide what our future, the future of humanity, will be.

With our work at Our World in Data we want to do our small part to enable a better-informed public conversation on AI and the future we want to live in. You can find these resources on OurWorldinData.org/artificial-intelligence.

Acknowledgements: I would like to thank my colleagues Daniel Bachler, Charlie Giattino, and Edouard Mathieu for their helpful comments on drafts of this essay.

This problem becomes even larger when we try to imagine how a future with a human-level AI might play out. Any particular scenario will not only involve the idea that this powerful AI exists, but a whole range of additional assumptions about the future context in which this happens. It is therefore hard to communicate a scenario of a world with human-level AI that does not sound contrived, bizarre or even silly.

Both of these concepts are widely used in the scientific literature on artificial intelligence. For example, questions about the timelines for the development of future AI are often framed using these terms. See my article on this topic.

The fact that humans are capable of a range of intellectual tasks means that you arrive at different definitions of intelligence depending on which aspect within that range you focus on (the Wikipedia entry on intelligence, for example, lists a number of definitions from various researchers and different disciplines). As a consequence, there are also various definitions of ‘human-level AI’.

There are also several closely related terms: Artificial General Intelligence, High-Level Machine Intelligence, Strong AI, or Full AI are sometimes used synonymously, and sometimes defined in similar, yet different ways. In specific discussions, it is necessary to define this concept more narrowly; for example, in studies on AI timelines researchers offer more precise definitions of what human-level AI refers to in their particular study.

Peter Norvig and Stuart Russell (2021) — Artificial Intelligence: A Modern Approach. Fourth edition. Published by Pearson.

The AI system AlphaGo, and its various successors, won against Go masters. The AI system Pluribus beat humans at no-limit Texas hold 'em poker. The AI system Cicero can strategize and use human language to win the strategy game Diplomacy. See: Meta Fundamental AI Research Diplomacy Team (FAIR), Anton Bakhtin, Noam Brown, Emily Dinan, Gabriele Farina, Colin Flaherty, Daniel Fried, et al. (2022) – ‘Human-Level Play in the Game of Diplomacy by Combining Language Models with Strategic Reasoning’. In Science 0, no. 0 (22 November 2022): eade9097. https://doi.org/10.1126/science.ade9097.

This also poses a problem when we evaluate how the intelligence of a machine compares with the intelligence of humans. If intelligence were a general ability, a single capacity, then we could easily compare and evaluate it, but the fact that it is a range of skills makes it much more difficult to compare machine and human intelligence. Tests for AI systems therefore comprise a wide range of tasks. See for example Dan Hendrycks, Collin Burns, Steven Basart, Andy Zou, Mantas Mazeika, Dawn Song, Jacob Steinhardt (2020) – Measuring Massive Multitask Language Understanding or the definition of what would qualify as artificial general intelligence in this Metaculus prediction.

An overview of how AI systems can fail can be found in Charles Choi – 7 Revealing Ways AIs Fail. It is also worth reading through the AIAAIC Repository, which “details recent incidents and controversies driven by or relating to AI, algorithms, and automation.”

I have taken this example from AI researcher François Chollet, who published it here.

Via François Chollet, who published it here. Based on Chollet’s comments it seems that this image was created by the AI system ‘Stable Diffusion’.

This quote is from Holden Karnofsky (2021) – AI Timelines: Where the Arguments, and the "Experts," Stand. For Holden Karnofsky’s earlier thinking on this conceptualization of AI, see his 2016 article ‘Some Background on Our Views Regarding Advanced Artificial Intelligence’.

Ajeya Cotra, whose research on AI timelines I discuss in other articles of this series, attempts to give a quantitative definition of what would qualify as transformative AI (TAI). In her widely cited report on AI timelines, she defines it as a change in software technology that brings the growth rate of gross world product "to 20%-30% per year". Several other researchers define TAI in similar terms.

Human-level AI is typically defined as a software system that can carry out at least 90% or 99% of all economically relevant tasks that humans carry out. A lower-bar definition would be an AI system that can carry out all those tasks that can currently be done by another human who is working remotely on a computer.

On the use of AI in politically motivated disinformation campaigns, see for example John Villasenor (November 2020) – How to deal with AI-enabled disinformation. More generally on this topic, see Brundage and Avin et al. (2018) – The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation, published at maliciousaireport.com. A starting point for literature and reporting on mass surveillance by governments is the relevant Wikipedia entry.

See for example the Wikipedia entry on the ‘Dutch childcare benefits scandal’ and Melissa Heikkilä (2022) – ‘Dutch scandal serves as a warning for Europe over risks of using algorithms’, in Politico. The technology can also reinforce discrimination in terms of race and gender. See Brian Christian’s book The Alignment Problem and the reports of the AI Now Institute.

Overviews are provided in Stuart Russell (2019) – Human Compatible (especially chapter 5) and Brian Christian’s 2020 book The Alignment Problem. Christian presents the thinking of many leading AI researchers from the earliest days up to now and offers an excellent overview of this problem. The alignment problem is also seen as a large risk by some of the leading private firms that work towards powerful AI; see OpenAI's article "Our approach to alignment research" from August 2022.

Stuart Russell (2019) – Human Compatible

A question that follows from this is, why build such a powerful AI in the first place?

The incentives are very high. As I emphasize below, this innovation has the potential to lead to very positive developments. In addition to the large social benefits, there are also large incentives for those who develop it: the governments that can use it for their goals, and the individuals who can use it to become more powerful and wealthy. Additionally, it is of scientific interest and might help us to understand our own mind and intelligence better. And lastly, even if we wanted to stop building powerful AIs, it would likely be very hard to actually achieve this. It is very hard to coordinate across the whole world and agree to stop building more advanced AI: countries around the world would have to agree and then find ways to actually implement that agreement.

In 1950 the computer science pioneer Alan Turing put it like this: “If a machine can think, it might think more intelligently than we do, and then where should we be? … [T]his new danger is much closer. If it comes at all it will almost certainly be within the next millennium. It is remote but not astronomically remote, and is certainly something which can give us anxiety. It is customary, in a talk or article on this subject, to offer a grain of comfort, in the form of a statement that some particularly human characteristic could never be imitated by a machine. … I cannot offer any such comfort, for I believe that no such bounds can be set.” Alan M. Turing (1950) – Computing Machinery and Intelligence, in Mind, Volume LIX, Issue 236, October 1950, Pages 433–460.

Norbert Wiener is another pioneer who saw the alignment problem very early. One way he put it was “If we use, to achieve our purposes, a mechanical agency with whose operation we cannot interfere effectively … we had better be quite sure that the purpose put into the machine is the purpose which we really desire.” quoted from Norbert Wiener (1960) – Some Moral and Technical Consequences of Automation: As machines learn they may develop unforeseen strategies at rates that baffle their programmers. In Science.

In 1950 – the same year in which Turing published the cited article – Wiener published his book The Human Use of Human Beings, whose front-cover blurb reads: “The ‘mechanical brain’ and similar machines can destroy human values or enable us to realize them as never before.”

Toby Ord – The Precipice. He makes this projection in footnote 55 of chapter 2. It is based on the 2017 estimate by Farquhar.


Reuse this work freely

All visualizations, data, and code produced by Our World in Data are completely open access under the Creative Commons BY license . You have the permission to use, distribute, and reproduce these in any medium, provided the source and authors are credited.

The data produced by third parties and made available by Our World in Data is subject to the license terms from the original third-party authors. We will always indicate the original source of the data in our documentation, so you should always check the license of any such third-party data before use and redistribution.


The present and future of AI

Finale Doshi-Velez on how AI is shaping our lives and how we can shape AI

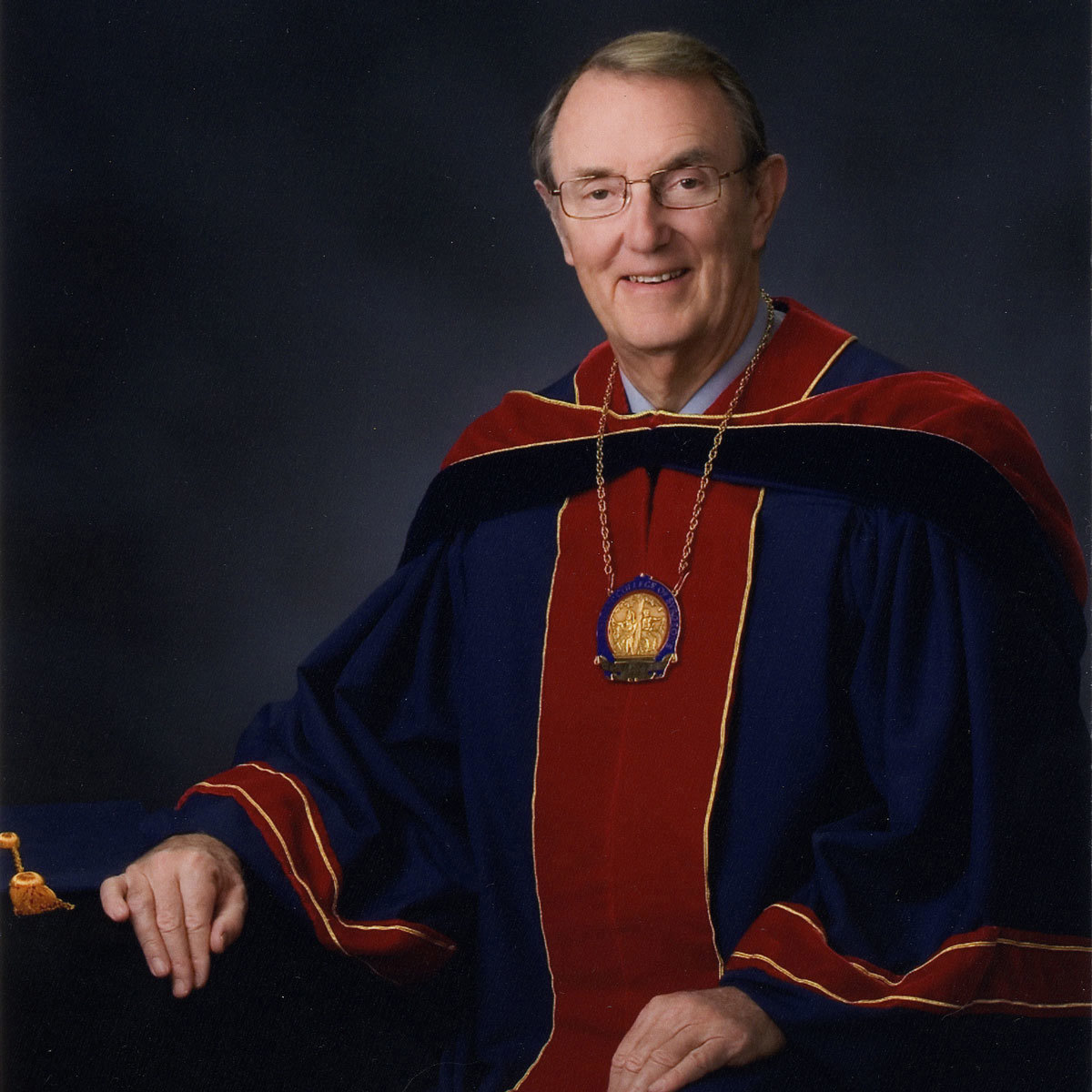

Finale Doshi-Velez, the John L. Loeb Professor of Engineering and Applied Sciences. (Photo courtesy of Eliza Grinnell/Harvard SEAS)

How has artificial intelligence changed and shaped our world over the last five years? How will AI continue to impact our lives in the coming years? Those were the questions addressed in the most recent report from the One Hundred Year Study on Artificial Intelligence (AI100), an ongoing project hosted at Stanford University that will study the status of AI technology and its impacts on the world over the next 100 years.

The 2021 report is the second in a series that will be released every five years until 2116. Titled “Gathering Strength, Gathering Storms,” the report explores the various ways AI is increasingly touching people’s lives in settings that range from movie recommendations and voice assistants to autonomous driving and automated medical diagnoses.

Barbara Grosz, the Higgins Research Professor of Natural Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), is a member of the standing committee overseeing the AI100 project, and Finale Doshi-Velez, Gordon McKay Professor of Computer Science, is part of the panel of interdisciplinary researchers who wrote this year’s report.

We spoke with Doshi-Velez about the report, what it says about the role AI is currently playing in our lives, and how it will change in the future.

Q: Let's start with a snapshot: What is the current state of AI and its potential?

Doshi-Velez: Some of the biggest changes in the last five years have been how well AIs now perform in large data regimes on specific types of tasks. We've seen [DeepMind’s] AlphaZero become the best Go player entirely through self-play, and everyday uses of AI such as grammar checks and autocomplete, automatic personal photo organization and search, and speech recognition become commonplace for large numbers of people.

In terms of potential, I'm most excited about AIs that might augment and assist people. They can be used to drive insights in drug discovery, help with decision making such as identifying a menu of likely treatment options for patients, and provide basic assistance, such as lane keeping while driving or text-to-speech based on images from a phone for the visually impaired. In many situations, people and AIs have complementary strengths. I think we're getting closer to unlocking the potential of people and AI teams.


Q: Over the course of 100 years, these reports will tell the story of AI and its evolving role in society. Even though there have only been two reports, what's the story so far?

There's actually been a lot of change even in five years. The first report is fairly rosy. For example, it mentions how algorithmic risk assessments may mitigate the human biases of judges. The second has a much more mixed view. I think this comes from the fact that as AI tools have come into the mainstream, both in higher-stakes and everyday settings, we are appropriately much less willing to tolerate flaws, especially discriminatory ones. There have also been questions of information and disinformation control as people get their news, social media, and entertainment via searches and rankings personalized to them. So, there's a much greater recognition that we should not be waiting for AI tools to become mainstream before making sure they are ethical.

Q: What is the responsibility of institutions of higher education in preparing students and the next generation of computer scientists for the future of AI and its impact on society?

First, I'll say that the need to understand the basics of AI and data science starts much earlier than higher education! Children are being exposed to AIs as soon as they click on videos on YouTube or browse photo albums. They need to understand aspects of AI such as how their actions affect future recommendations.

But for computer science students in college, I think a key thing that future engineers need to realize is when to demand input and how to talk across disciplinary boundaries to get at often difficult-to-quantify notions of safety, equity, fairness, etc. I'm really excited that Harvard has the Embedded EthiCS program to provide some of this education. Of course, this is in addition to standard good engineering practices like building robust models, validating them, and so forth, which is all a bit harder with AI.


Q: Your work focuses on machine learning with applications to healthcare, which is also an area of focus of this report. What is the state of AI in healthcare?

A lot of AI in healthcare has been on the business end, used for optimizing billing, scheduling surgeries, that sort of thing. When it comes to AI for better patient care, which is what we usually think about, there are few legal, regulatory, and financial incentives to pursue it, and many disincentives. Still, there's been slow but steady integration of AI-based tools, often in the form of risk scoring and alert systems.

In the near future, two applications that I'm really excited about are triage in low-resource settings (for example, having AIs do initial reads of pathology slides if there are not enough pathologists, or an initial check of whether a mole looks suspicious) and ways in which AIs can help identify promising treatment options for discussion with a clinician team and patient.

Q: Any predictions for the next report?

I'll be keen to see where currently nascent AI regulation initiatives have gotten to. Accountability is such a difficult question in AI: it's tricky to nurture both innovation and basic protections. Perhaps the most important innovation will be in approaches for AI accountability.


Scientist Profiles

Finale Doshi-Velez

Herchel Smith Professor of Computer Science


The AI Anthology: 20 Essays You Should Read About Our Future With AI

Microsoft's Chief Scientific Officer, Eric Horvitz, has spearheaded an initiative aimed at stimulating an enriching and multidimensional conversation on the future of AI. Dubbed "AI Anthology," the project features 20 op-ed essays from an eclectic mix of scholars and professionals providing their diverse perspectives on the transformative potential of AI.

Against the backdrop of impressive leaps in AI capabilities, notably OpenAI's GPT-4, the anthology is a collaborative effort aimed at elucidating the profound ways AI can benefit humanity while exploring potential challenges. While many fear the unknowns of AI advancement, the anthology is grounded in an optimistic view of the future of AI, aiming to catalyze thought-provoking dialogue and collaborative exploration.

The anthology is a remarkable testament to the multi-faceted nature of AI implications, ranging from the arts to education, science, medicine, and the economy. Horvitz's own journey with AI began with an early glimpse into the transformative capabilities of GPT-4. His awe-inspiring experience with the AI highlighted its potential to redefine disciplinary boundaries and ignite novel integrations of traditionally disparate concepts and methodologies. Yet, it also underscored the need for careful, thoughtful exploration of potential disruptions and adverse consequences.

Four essays will be published to the AI Anthology each week, with the complete collection available on June 26, 2023. Here are the first four essays:

- A Thinking Evolution by Alec Gallimore, a rocket scientist and Dean of Engineering at the University of Michigan, gets curious about the odyssey of AI.

- Eradicating Inequality by Gillian Hadfield, Professor of Law and Economics at the University of Toronto, champions legal access for all.

- Empowering Creation by Ada Palmer, Professor of History at the University of Chicago, explores the possibilities of the information revolution.

- Accessible Healthcare by Robert Wachter, Chair of the Department of Medicine at the University of California, San Francisco, examines how AI could reshape clinical care.

The contributors to the anthology represent a broad spectrum of experts. Each provides a unique perspective on the potentials and challenges of AI, covering a range of sectors, from education and healthcare to the creative arts. They were all granted early confidential access to GPT-4 and were encouraged to reflect upon two crucial questions: How might this technology and its successors contribute to human flourishing? And, how might society best guide the technology to achieve maximal benefits for humanity? These two questions, designed to explore the potential positive impact of AI, are central to the AI Anthology.

The resulting collection of essays is well worth the read! It offers an optimistic lens through which to view the future of AI and serves as a call to action for us all to join the conversation and contribute to the development of AI that promotes human flourishing.

Let’s stay in touch. Get the latest AI news from Maginative in your inbox.

A business journal from the Wharton School of the University of Pennsylvania

What Is the Future of AI?

November 9, 2023 • 26 min read.

If we want to coexist with AI, it’s time to stop viewing it as a threat, Wharton professors say.

AI is here and it’s not going away. Wharton professors Kartik Hosanagar and Stefano Puntoni join Eric Bradlow, vice dean of Analytics at Wharton, to discuss how AI will affect business and society as adoption continues to grow. How can humans work together with AI to boost productivity and flourish? This interview is part of a special 10-part series called “AI in Focus.”

Watch the video or read the full transcript below.

Eric Bradlow: Welcome, everyone, to the first episode of the Analytics at Wharton and AI at Wharton podcast series on artificial intelligence. My name’s Eric Bradlow. I’m a professor of marketing and statistics here at the Wharton School. I’m also vice dean of Analytics at Wharton, and I will be the host for this multi-part series on artificial intelligence.

I can think of no better way to start this series than with two of my friends and colleagues who actually run our Center on Artificial Intelligence. The title of this episode is “Artificial Intelligence is Here.” As you will hear, we’ll do episodes on artificial intelligence in sports, artificial intelligence in real estate, artificial intelligence in health care. But I think it’s best to start just with the basics.

I’m very happy to have joining me today, first, my colleague Kartik Hosanagar. Kartik is the John C. Hower Professor at the Wharton School. He’s also, as I mentioned, the co-director of our Center on Artificial Intelligence at Wharton. And normally, I don’t read someone’s bio. First of all, it’s only a few sentences. But I think this actually is important for our listeners to understand the breadth and also the practicality of Kartik’s work. His research examines how AI impacts business and society, and as you’ll hear, that is what our center does. There are kind of two prongs. Second, he was a founder of Yodle, where he applied AI to online advertising, and, more recently and currently, of Jumpcut Media, a company applying AI to democratize Hollywood. He also teaches our courses on enabling technologies and on AI, business, and society. Kartik, welcome.

Kartik Hosanagar: Thanks for having me, Eric.

Bradlow: I’m also happy to have my colleague, Stefano Puntoni. Stefano is the Sebastian S. Kresge Professor of Marketing here at the Wharton School. He’s also, along with Kartik, the co-director of our Center on AI at Wharton. And his research examines how artificial intelligence and automation are changing consumption and society. And similar to Kartik, he also teaches our courses on artificial intelligence, brand management, and marketing strategies. Stefano, welcome.

Stefano Puntoni: Thank you very much.

Bradlow: It’s great to be with both of you. So maybe, Kartik, I’ll throw the first question out to you. While artificial intelligence is now the big thing that every company is thinking about, what do you see as— well, first of all, maybe even before what are challenges facing companies, how would we even define what artificial intelligence is? Because it can mean lots of things. It could mean everything from taking texts and images and stuff like that, and kind of quantifying it, or it could be generative AI, which is the same side of the coin, but a different part. How do you even view, what does it mean to say “artificial intelligence”?

Hosanagar: Yeah. Artificial Intelligence is a field of computer science which is focused on getting computers to do the kinds of things that traditionally require human intelligence. What that is, is a moving target. When computers couldn’t play, say, a very simple game like... well, chess is not simple, but maybe even simpler board games. Maybe that’s the target. And then when you say computers can play chess, and when that’s easy for computers, we no longer think of that as AI.

But really, today, when we think about what is AI, it’s again, getting computers to do the kinds of things that require human intelligence. Like understand language. Like navigate the physical world. Like being able to learn from experiences, from data. So, all of that really is included in AI.

Bradlow: Do you put any separation between what I call— maybe I’m not even using the right words — traditional AI, which again back in my old days, we’ve had AI around, “How do you take an image, and turn it into something?” “How do we take video, how do we take text?” That’s one form of AI versus what’s got everybody excited today, which is ChatGPT, which is a form of large language model. Do you put any differentiation there? Or that’s just a way for us to understand. One is creation of data, and the other one is using it in an application of forecast and language.

Hosanagar: Yeah, I feel there is some distinction. But ultimately, they’re closely related. Because what we think of as the more traditional AI, or predictive AI, it’s all about taking data and understanding the landscape of the data. And to be able to say, “In this region of the data,” let’s say you’re predicting whether an image is about Bob, or is it about Lisa? And so you kind of say, “In the image space, this region, if the shape of the colors are like this, the shape of the eyes are like this, then it’s Bob. In that area, it’s Lisa.” And so on. So, it’s mostly understanding the space of data, and being able to say, with emails, is it fraudulent or not? And saying which portion of the space does it have one value versus the other.

Now, once you started getting really good at predicting that, then you can start to use those predictions to create. And that’s where it’s the next step, where it becomes generative AI. Where now you are predicting, what’s the next word? You might as well use it to start generating text, and start generating sentences, essays and novels, and so on.
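Hosanagar's point that a good next-word predictor can be turned into a text generator can be illustrated with a deliberately tiny sketch. This is not how ChatGPT or other large language models work internally (they rely on neural networks trained on very large amounts of text); it is a hypothetical word-pair (bigram) counter, and the function names `train_bigrams`, `predict_next`, and `generate` are illustrative only. The predictive step picks the most frequent next word seen in the data; the generative step simply feeds each prediction back in as the next input:

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, how often each other word directly follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Predictive step: return the most frequent follower of `word`, or None if unseen."""
    counts = follows.get(word)  # .get() does not create an entry in a defaultdict
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, max_words=8):
    """Generative step: repeatedly append the predicted next word."""
    output = [start]
    for _ in range(max_words - 1):
        nxt = predict_next(follows, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return " ".join(output)


corpus = "the cat sat on the mat and the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))
print(generate(model, "the", max_words=5))
```

With this toy corpus, the prediction after "the" is "cat", and generating from "the" yields "the cat sat on the". Because the sketch always takes the single most frequent follower, longer outputs quickly loop; real systems typically sample from a probability distribution over possible next words instead.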

Bradlow: Stefano, let me ask you a question. If one went to your web site on the Wharton web site — and by the way. Just for our listeners, Stefano has a lot of deep training in statistics. But most people would say, “You’re not a computer scientist. You’re not a mathematician. What the hell do you have to do with artificial intelligence?” Like, “What role does consumer psychology play in artificial intelligence today? Isn’t it just for us math types?”

Puntoni: If you talk to companies and you ask them why did your analytics program fail, you almost never hear the answer, “Because the models don’t work. Because the techniques didn’t deliver.” It’s never about the technical stuff. It’s always about people. It’s about lack of vision. It’s about the lack of alignment between decision makers and analysts. It’s about the lack of clarity about why we do analytics. So, I think that a behavioral science perspective on analytics can bring a lot of benefits to try to understand how do we connect decisions in companies to the data that we have? That takes both the technical skills and the human insights, the psychology insights. I think bringing those together, I find that has a lot of value and a lot of potential insights. A lot of low-hanging fruit, in fact, in companies, I think.

Bradlow: As a follow-up question, we all read these articles that say 70% of the jobs are going to go away, and robots or automation or AI is going to put me out of business. Should employees be happy with what’s going on in AI? Or the answer is, it depends who you are and what you’re doing? What are your thoughts? And then Kartik, I’d love to get your thoughts on that, including the work you’re doing at Jumpcut. Because we all know one of the biggest issues in the current writers’ strike was actually what’s going to happen with artificial intelligence. I’d love to hear your thoughts from the psychology or the employee motivation perspective, and then, what are you seeing actually out in the real world?

Puntoni: The academic answer to any question would be, “It depends. It depends.” But in my research, what I’ve been looking at is the extent to which people perceive automation as a threat. And what we find is that oftentimes, when the tasks being automated by AI have some kind of meaning to the person, when they are essential to the way that they see themselves, for example in their professional identity, that can create a lot of threat.

So, you have psychological threats, and then you have these objective threats of maybe jobs on the line. And maybe you’ll feel happy about knowing that I try out the professor job on some of these scoring algorithms, and we are fairly safe from our own replacement.

Bradlow: Kartik, let me ask you. And let me just preface this with saying, you probably don’t even know about this. Fifteen years ago, I wrote a paper with a former colleague and a doctoral student about how to use— I didn’t call it AI back then. But how to, basically, in large scale, compute features of advertisements and optimally design advertisements based on a massive number of features. And I remember the reaction. I first thought I was going to get rich. I went to every big media agency and said, “You can fire all your creative people. I know how to create these ads using mathematics.” And I was looked at like I had four heads. So, can you bring us up to the year 2023? Can you tell us what you’re doing at Jumpcut, and what role AI machine learning plays in your company, and just what you see going on in the creative world?

Hosanagar: Yeah. And I’ll connect that, also, to what you and Stefano just brought up about AI and jobs and exposure to AI and so on. I just came from a real estate conference. And the panel before I spoke was talking about, “Hey, this artificial intelligence, it’s not really intelligence. It just replicates whatever is in some data. True human intelligence is creative, problem-solving, and so on.” And I was sharing over there that there are multiple studies now about what AI can and cannot do. For example, my colleague, Daniel Rock, has a study showing that, looking just at LLMs, meaning large language models like ChatGPT, and before the advances of the last six months (this is as of early 2023), 50% of jobs have at least 10% of their tasks exposed to LLMs. And 20% of jobs have more than 50% of their tasks exposed to LLMs. And that’s not all of AI, that’s just large language models. And that was also 10 months ago.

And people also underestimate the nature of exponential change. I’ve been working with GPT-2, GPT-3, the earlier models of this. And I can say every year the change is an order of magnitude. And so, you know, it’s coming. And it’s going to affect all kinds of jobs. Now, as of today, I can say that multiple research studies (and I don’t mean two, three, four, but several dozen) that have looked at AI’s use in multiple settings, including creative settings like writing poems, or problem-solving and so on, find that AI today already can match humans. But human plus AI today beats both human alone and AI alone.

For me, the big opportunity with AI is that we are going to see a productivity boost like we’ve never seen before in the history of humanity. And that kind of productivity boost allows us to outsource the grunt work to AI, do the most creative things, and derive joy from our work. Now, does that mean it’s all going to be beautiful for all of us? No. Some of us are going to struggle if we don’t reskill, if we don’t focus on having skills that require creativity, empathy, teamwork, leadership, those kinds of skills, because a lot of the other jobs are going away, including knowledge work: consulting, software development. It’s coming into all of these.

Bradlow: Stefano, something Kartik mentioned in his last answer was about humans and AI. As a matter of fact, one of the things I heard you say from the beginning is, it’s not humans or AI. It’s humans and AI. How do you really see that interface going forward? Is it up to the individual worker to decide what part of his/her/their tasks to outsource? Is it up to management? How do you see people even being willing to skill themselves up in artificial intelligence? How do you see this?

Puntoni: I think this is the biggest question that any company should be asking right now, and not just about AI. Frankly, I think it’s the biggest question of all in business: how do we use these tools? How do we learn how to use them? There is no template. Nobody really knows how, for example, generative AI is going to impact different functions. We’re just learning about these tools, and these tools are still getting better.

What we need to do is to have some deliberate experimentation. We need to build processes for learning such that we have individuals within the organizations tasked with just understanding what this can do. And there’s going to be an impact on individuals, on teams, on workflows. How do we bring this in so that we don’t simply think of re-engineering a task to get a human out of the picture, but instead re-engineer new ways of working that get the most out of people? The point shouldn’t be human replacement and obsolescence. It should be human flourishing. How do we take this amazing technology to make our work more productive, more meaningful, more impactful, and ultimately make society better?

Bradlow: Kartik, let me take what Stefano said and combine it with something that you said earlier, which was about the exponential growth rate. My biggest fear if I were working at a company today (and please, I’d love your thoughts) is that someone’s using a version of ChatGPT, or some large language model, or even a predictive model. Some transformer model. And they fit it today, and they say, “See? The model can’t do this.” And then two weeks later, the model can do this. Companies, in some sense, create these absolutes. Like, you just mentioned you were at a real estate conference. “Well, ChatGPT or large language models, AI, can’t sell homes. They can build massive predictive models using satellite data.” Maybe they can’t today, but maybe they can tomorrow. How do you, in some sense, try to help both researchers and companies move away from absolutes in a time of exponential growth of these methods?

Hosanagar: Yeah. I think our brains fundamentally struggle with exponential change. And probably, there is some basis to this in studies people have done on neuroscience or human evolution and so on. But we struggle with it. And I see this all the time, because I’ve been part of that. My work has been part of that exponential change from the very beginning. When I started my Ph.D., it was about the internet. And I can’t tell you the number of people who looked at the internet at any given point in time and said, “Nobody will buy clothing online. Nobody will buy eyeglasses online. Nobody would do this. Nobody would do that.” And I’m like, “No, no. It’s all happening. Just wait to see what’s coming.”

I think it’s hard for people to fathom. I think leadership, as well as regulators, need to realize what’s coming, understand what exponential change is, and start to work. You brought up previously, and I forgot to address it, the Hollywood writers’ strike. Now, it is true that today, ChatGPT cannot write a great novel. However, when we work with writers, we are already seeing how it can increase their productivity. And in Hollywood, for example, writers are notorious for delays, because writing is driven by inspiration. You’re expecting the draft today. And what’s the excuse? “Oh, I’m just stuck at this point. And when I get unstuck, I’ll write again.” You can wait months and sometimes years for the writer to get unstuck.

Now, you give them a brainstorming buddy, and they start getting unstuck, and it increases productivity. And yes, they’re right to fear that as they keep interacting with the AI and keep training the AI, someday the AI is going to say, “You know what? I’m going to try to write the script myself.” And when I say the AI is going to say that, I mean the AI is going to be good enough, and some executive is going to say, “Why deal with humans?” and act on it.

I think we need to both recognize that change is that fast and start experimenting and start learning. And people need to start upping their game, reskilling, and getting really good at using AI to do what they do. That reskilling is important. Stop viewing this as a threat. Because what’s happening is, you’re standing somewhere and there’s a fast bullet train coming at you. And you’re saying, “That train is going to stop on its own.” No, it’s going to run over you. And the only thing you can do and you have to do is get to the station, board the train, and be part of that train and help shape where it goes. All of us need to help shape where it goes.

Bradlow: Yeah. One example I like to give is that for 25-plus years I’ve been doing statistical analysis in R. And of course, for the last five to seven years, Python’s taken a much larger role. And I always promised myself I was going to learn Python. Well, I’ve learned Python now. I stick my R code into ChatGPT, and I tell it to convert it to Python. And I’m actually a damn good Python programmer now, because ChatGPT has helped me take structured R code and turn it into Python code.

Hosanagar: That’s a great example. And I’ll give you two more examples like that. The head of product at my company, Jumpcut Media, had this idea for a script summarization tool. What happens in Hollywood is the vast majority of scripts written are never read because every executive gets so many scripts. And you have no time to read anything. And you end up prioritizing based on gut and relationships. “Eric’s my buddy. I’ll read his script, but not this guy, Stefano, who just sent me a script. I don’t know him.” And that’s how decision-making works in Hollywood.

So, the head of product, who’s not a coder (he’s actually a Wharton alumnus), had this idea for a great script summarization tool that would summarize things using the language and parlance of Hollywood. He wanted to build the tool, but our engineers were too busy with other efforts, so he said, “While they’re doing that, let me try it on ChatGPT.” And he built the entire minimum viable product, a demo version of it, on his own, using ChatGPT. It’s actually on the Jumpcut Media website, where our clients can try it. And that’s how it got built: by a guy with no development skills.

I actually demonstrated this idea during this real estate conference: you post a video on YouTube, you’ve got 30,000 comments on it, and you want to analyze those comments and figure out what people are saying. You want to summarize them. I went to ChatGPT, and I said, “Six steps. First step, go to a YouTube URL I’ll share and download all the comments. Second step, do sentiment analysis on them. Third step, find the comments that are positive, send them to OpenAI, and give me a summary of all the positive comments. Fourth step, take the negative comments, send them to OpenAI, and give me the summary. Fifth step, tell the marketing manager what they should do. And give me the code for all this.” It gave me the code right there at the conference, in front of all these people. I put it in Google Colab, ran it, and we had the summary. And this is me not writing a single line of code, with ChatGPT. It’s not the most complex code, but this is something that previously would have taken me days, and I would have had to involve RAs and so on. Now I can get that done myself.

Bradlow: Imagine doing that in real estate, about a property or a developer. And people say it doesn’t affect real estate. Of course it does! Absolutely, it could.

Hosanagar: It does. I also showed them something else: I uploaded four photographs of my home. Nothing else. Four photographs. And I said, “I’m planning to list this home for sale. Give me a real estate listing to post on Zillow that will make people read it and get excited to come and tour this house.” And it gave a great, beautiful description. There’s no way I could have written that. I challenged them: how many of you could have written this? And at the end, everyone was like, “Wow. I was blown away.” And that is something that is doable today. I’m not even talking about what’s coming soon.

Bradlow: Stefano, I’m going to ask you and then I’ll ask Kartik as well, what’s at the leading edge of the research you’re doing right now? I want to ask each of you about your own research, and then I’ll spend the last few minutes that we have talking about AI at Wharton and what you guys are doing and hoping to accomplish. Let’s start with our own personal research. What are you doing right now? Another way I like to frame it is, if we’re sitting here five years from now and you have a bunch of published papers and you’ve given a lot of big podium talks, which I know you do, what will you be talking about that you worked on?

Puntoni: I’m working on a lot of projects, all in the area of AI. And there are so many exciting questions, because we never had a machine like this, a machine that can do the stuff that we think is crucial to defining what a human is. This is actually an interesting thing to consider. If you went back in time a few years and asked, “What makes humans special?” people would think, maybe compared to other animals, “We can think.” And now you ask, “What makes a human special?” and people think, “Oh, we have emotions, or we feel.”

Basically now, what makes us special is what makes us the same as other animals, to some extent. You see how the world is really deeply changing. And I’m interested in, for example, the impact of AI for the pursuit of relational goals, or social goals, or emotionally heavy types of tasks, where previously we never had an option of engaging with a machine, but now we do. What does that mean? What are the benefits that this technology can bring, but also, what might be the dangers? For example, for consumer safety, as people might interact with these tools while experiencing mental health issues or other problems. To me, that’s a very exciting and important area.

I just want to make the point that this technology doesn’t have to be any better than it is today for it to change many, many things. I mean, Kartik was saying, rightly, this is still improving exponentially. And companies are just starting to experiment with it. But the tools are there. This is not a technology around the corner. It’s in front of us.

Bradlow: Kartik, what are the big open issues that you’re thinking about and working on today?