Indirect Speech Definition and Examples


- Ph.D., Rhetoric and English, University of Georgia

- M.A., Modern English and American Literature, University of Leicester

- B.A., English, State University of New York

Indirect speech is a report on what someone else said or wrote without using that person's exact words (using the exact words is called direct speech). It's also called indirect discourse or reported speech.

Direct vs. Indirect Speech

In direct speech, a person's exact words are placed in quotation marks and set off with a comma and a reporting clause or signal phrase, such as "said" or "asked." In fiction writing, direct speech can convey the emotion of an important scene in vivid detail, both through the words themselves and through the description of how they were said. In nonfiction writing or journalism, direct speech can emphasize a particular point by using a source's exact words.

Indirect speech paraphrases what someone said or wrote. In writing, it helps move a piece along by condensing the points an interview source made. Unlike direct speech, indirect speech is not usually placed inside quotation marks. Both, however, are attributed to the speaker, because they come directly from a source.

How to Convert

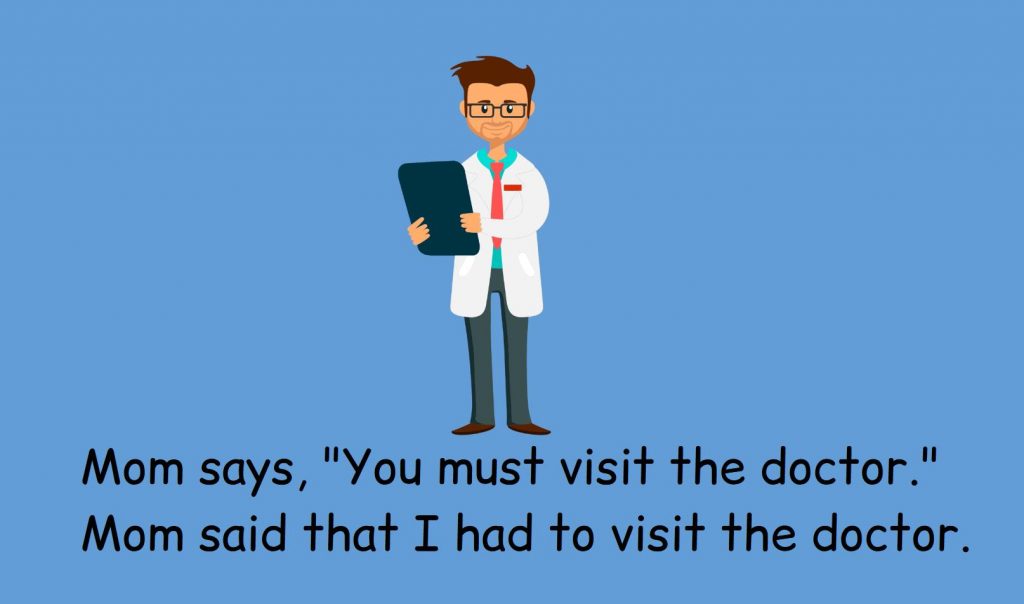

In the first example below, the present-tense verb in the direct speech (is) may change to the past tense (was) in indirect speech, though it doesn't have to. If it makes sense in context to keep the present tense, that's fine.

- Direct speech: "Where is your textbook?" the teacher asked me.

- Indirect speech: The teacher asked me where my textbook was.

- Indirect speech: The teacher asked me where my textbook is.

Keeping the present tense in reported speech can give the impression of immediacy, that it's being reported soon after the direct quote, as in this example:

- Direct speech: Bill said, "I can't come in today, because I'm sick."

- Indirect speech: Bill said (that) he can't come in today because he's sick.

Future Tense

A future action (expressed with the present continuous or with will) doesn't have to change verb tense either, as these examples demonstrate.

- Direct speech: Jerry said, "I'm going to buy a new car."

- Indirect speech: Jerry said (that) he's going to buy a new car.

- Direct speech: Jerry said, "I will buy a new car."

- Indirect speech: Jerry said (that) he will buy a new car.

Indirectly reporting a future action can change verb tenses when needed. In the next example, changing am going to was going implies that she has already left for the mall. Keeping the present continuous (is going), however, implies that the action is still in progress: she's still at the mall and not back yet.

- Direct speech: She said, "I'm going to the mall."

- Indirect speech: She said (that) she was going to the mall.

- Indirect speech: She said (that) she is going to the mall.

Other Changes

With a past-tense verb in the direct quote, the verb usually changes to the past perfect.

- Direct speech: She said, "I went to the mall."

- Indirect speech: She said (that) she had gone to the mall.

Note the change in first person (I) and second person (your) pronouns and word order in the indirect versions. The person has to change because the one reporting the action is not the one actually doing it. Third person (he or she) in direct speech remains in the third person.

Free Indirect Speech

In free indirect speech, which is commonly used in fiction, the reporting clause (or signal phrase) is omitted. Using the technique is a way to follow a character's point of view—in third-person limited omniscient—and show her thoughts intermingled with narration.

Typically in fiction italics show a character's exact thoughts, and quote marks show dialogue. Free indirect speech makes do without the italics and simply combines the internal thoughts of the character with the narration of the story. Writers who have used this technique include James Joyce, Jane Austen, Virginia Woolf, Henry James, Zora Neale Hurston, and D.H. Lawrence.


Direct and Indirect Speech: Useful Rules and Examples

Are you having trouble understanding the difference between direct and indirect speech? Direct speech is when you quote someone’s exact words, while indirect speech is when you report what someone said without using their exact words. This can be a tricky concept to grasp, but with a little practice, you’ll be able to use both forms of speech with ease.

Direct and Indirect Speech

When someone speaks, we can report what they said in two ways: direct speech and indirect speech. Direct speech is when we quote the exact words that were spoken, while indirect speech is when we report what was said without using the speaker’s exact words. Here’s an example:

- Direct speech: “I love pizza,” said John.

- Indirect speech: John said that he loved pizza.

Using direct speech can make your writing more engaging and can help to convey the speaker’s tone and emotion. However, indirect speech can be useful when you want to summarize what someone said or when you don’t have the exact words that were spoken.

To change direct speech to indirect speech, you need to follow some rules. First, if the reporting verb is in the past tense (e.g. “said”), you usually shift the verb in the reported speech one tense back, for example from present to past or from “will” to “would.” Second, you need to change the pronouns and adverbs in the reported speech to fit the reporter’s point of view. Here’s an example:

- Direct speech: “I will go to the park,” said Sarah.

- Indirect speech: Sarah said that she would go to the park.

It’s important to note that when you use indirect speech, you need to use reporting verbs such as “said,” “told,” or “asked” to indicate who is speaking. Here’s an example:

- Direct speech: “What time is it?” asked Tom.

- Indirect speech: Tom asked what time it was.

In summary, understanding direct and indirect speech is crucial for effective communication and writing. Direct speech can be used to convey the speaker’s tone and emotion, while indirect speech can be useful when summarizing what someone said. By following the rules for changing direct speech to indirect speech, you can accurately report what was said while maintaining clarity and readability in your writing.

Differences between Direct and Indirect Speech

When it comes to reporting speech, there are two ways to go about it: direct and indirect speech. Direct speech is when you report someone’s exact words, while indirect speech is when you report what someone said without using their exact words. Here are some of the key differences between direct and indirect speech:

Change of Pronouns

In direct speech, the pronouns used are those of the original speaker. However, in indirect speech, the pronouns have to be changed to reflect the perspective of the reporter. For example:

- Direct speech: “I am going to the store,” said John.

- Indirect speech: John said he was going to the store.

In the above example, the pronoun “I” changes to “he” in indirect speech.

Change of Tenses

Another major difference between direct and indirect speech is the change of tenses. In direct speech, the verb tense used is the same as that used by the original speaker. However, in indirect speech, the verb tense may change depending on the context. For example:

- Direct speech: “I am studying for my exams,” said Sarah.

- Indirect speech: Sarah said she was studying for her exams.

In the above example, the present continuous tense “am studying” changes to the past continuous tense “was studying” in indirect speech.

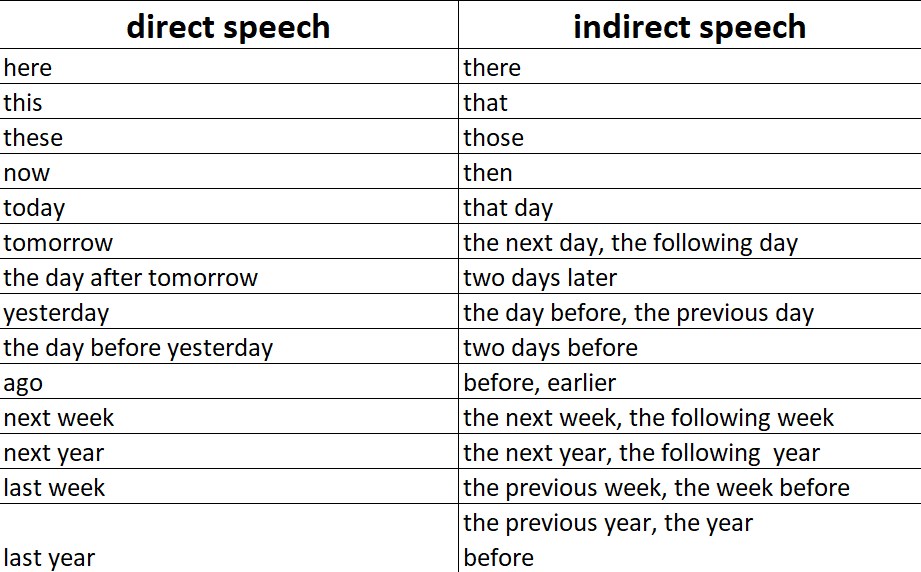

Change of Time and Place References

When reporting indirect speech, the time and place references may also change. For example:

- Direct speech: “I will meet you at the park tomorrow,” said Tom.

- Indirect speech: Tom said he would meet you at the park the next day.

In the above example, “tomorrow” changes to “the next day” in indirect speech.

Overall, it is important to understand the differences between direct and indirect speech to report speech accurately and effectively. By following the rules of direct and indirect speech, you can convey the intended message of the original speaker.

Converting Direct Speech Into Indirect Speech

When you need to report what someone said in your own words, you can use indirect speech. To convert direct speech into indirect speech, you need to follow a few rules.

Step 1: Remove the Quotation Marks

The first step is to remove the quotation marks that enclose the relayed text. This is because indirect speech does not use the exact words of the speaker.

Step 2: Use a Reporting Verb and a Linker

To indicate that you are reporting what someone said, you need to use a reporting verb such as “said,” “asked,” “told,” or “exclaimed.” You also need a linker such as “that,” “if,” or “whether” to connect the reporting verb to the reported speech.

For example:

- Direct speech: “I love ice cream,” said Mary.

- Indirect speech: Mary said that she loved ice cream.

Step 3: Change the Tense of the Verb

If the reporting verb is in the past tense, you usually shift the verb in the reported speech one tense back (this is called backshift).

- Direct speech: “I am going to the store,” said John.

- Indirect speech: John said that he was going to the store.

Step 4: Change the Pronouns

You also need to change the pronouns in the reported speech to fit the point of view of the person reporting it.

- Direct speech: “Are you busy now?” Tina asked me.

- Indirect speech: Tina asked whether I was busy then.

By following these rules, you can convert direct speech into indirect speech and report what someone said in your own words.

Converting Indirect Speech Into Direct Speech

Converting indirect speech into direct speech involves changing the reported speech to its original form as spoken by the speaker. Here are the steps to follow when converting indirect speech into direct speech:

- Identify the reporting verb: The first step is to identify the reporting verb used in the indirect speech. This will help you determine the tense of the direct speech.

- Change the pronouns: Next, change the pronouns in the indirect speech back to the ones the original speaker used. For example, if the indirect speech is “She said that she was going to the store,” the direct speech would be “I am going to the store,” she said.

- Change the tense: Change the verbs back to the tense the speaker originally used. For example, if the indirect speech is “He said that he would visit tomorrow,” the direct speech would be He said, “I will visit tomorrow.”

- Add quotation marks: Remove the conjunction (such as “that” or “whether”) and put the speaker’s words in quotation marks. The reporting verb stays, but it now introduces or follows the quotation, as in “I am tired,” John said.

Here is an example to illustrate the process:

Indirect Speech: John said that he was tired and wanted to go home.

Direct Speech: “I am tired and want to go home,” John said.

By following these steps, you can easily convert indirect speech into direct speech.

Examples of Direct and Indirect Speech

Direct and indirect speech are two ways to report what someone has said. Direct speech reports the exact words spoken by a person, while indirect speech reports the meaning of what was said. Here are some examples of both types of speech:

Direct Speech Examples

Direct speech is used when you want to report the exact words spoken by someone. It is usually enclosed in quotation marks and is often used in dialogue.

- “I am going to the store,” said Sarah.

- “It’s a beautiful day,” exclaimed John.

- “Please turn off the lights,” Mom told me.

- “I will meet you at the library,” said Tom.

- “We are going to the beach tomorrow,” announced Mary.

Indirect Speech Examples

Indirect speech, also known as reported speech, is used to report what someone said without using their exact words. It is often used in news reports, academic writing, and in situations where you want to paraphrase what someone said.

Here are some examples of indirect speech:

- Sarah said that she was going to the store.

- John exclaimed that it was a beautiful day.

- Mom told me to turn off the lights.

- Tom said that he would meet me at the library.

- Mary announced that they were going to the beach tomorrow.

In indirect speech, the verb tense may change to reflect the time of the reported speech. For example, “I am going to the store” becomes “Sarah said that she was going to the store.” Additionally, the pronouns and possessive adjectives may also change to reflect the speaker and the person being spoken about.

Overall, both direct and indirect speech are important tools for reporting what someone has said. By using these techniques, you can accurately convey the meaning of what was said while also adding your own interpretation and analysis.

Frequently Asked Questions

What is direct and indirect speech?

Direct and indirect speech refer to the ways in which we communicate what someone has said. Direct speech involves repeating the exact words spoken, using quotation marks to indicate that you are quoting someone. Indirect speech, on the other hand, involves reporting what someone has said without using their exact words.

How do you convert direct speech to indirect speech?

To convert direct speech to indirect speech, you need to change the tense of the verbs, pronouns, and time expressions. You also need to introduce a reporting verb, such as “said,” “told,” or “asked.” For example, “I love ice cream,” said Mary (direct speech) can be converted to “Mary said that she loved ice cream” (indirect speech).

What is the difference between direct speech and indirect speech?

The main difference between direct speech and indirect speech is that direct speech uses the exact words spoken, while indirect speech reports what someone has said without using their exact words. Direct speech is usually enclosed in quotation marks, while indirect speech is not.

What are some examples of direct and indirect speech?

Some examples of direct speech include “I am going to the store,” said John, and “I love pizza,” exclaimed Sarah. Some examples of indirect speech include John said that he was going to the store and Sarah exclaimed that she loved pizza.

What are the rules for converting direct speech to indirect speech?

The rules for converting direct speech to indirect speech include changing the tense of the verbs, the pronouns, and the time expressions. You also need to introduce an appropriate reporting verb, such as “said,” “told,” or “asked.”

What is a summary of direct and indirect speech?

Direct and indirect speech are two ways of reporting what someone has said. Direct speech involves repeating the exact words spoken, while indirect speech reports what someone has said without using their exact words. To convert direct speech to indirect speech, you need to change the tense of the verbs, pronouns, and time expressions and introduce a reporting verb.


Reported Speech in English Grammar

Direct speech, changing the tense (backshift), no change of tenses, question sentences, demands/requests, expressions with who/what/how + infinitive, typical changes of time and place.


Introduction

In English grammar, we use reported speech to say what another person has said. We can use their exact words with quotation marks (this is known as direct speech), or we can use indirect speech. In indirect speech, we change the tense and pronouns to show that some time has passed. Indirect speech is often introduced by a reporting verb or phrase such as he said or she told me.


When turning direct speech into indirect speech, we need to pay attention to the following points:

- changing the pronouns. Example: He said, “I saw a famous TV presenter.” → He said (that) he had seen a famous TV presenter.

- changing the information about time and place (see the table at the end of this page). Example: He said, “I saw a famous TV presenter here yesterday.” → He said (that) he had seen a famous TV presenter there the day before.

- changing the tense (backshift). Example: He said, “She was eating an ice-cream at the table where you are sitting.” → He said (that) she had been eating an ice-cream at the table where I was sitting.

If the introductory clause is in the simple past (e.g. He said), the tense has to be set back by one degree (see the table). The term for this in English is backshift.

The verbs could, should, would, might, must, needn’t, ought to, used to normally do not change.

If the introductory clause is in the simple present (e.g. He says), however, the tense remains unchanged, because the introductory clause already indicates that the statement is being repeated immediately (and not at a later point in time).

In some cases, however, we have to change the verb form.

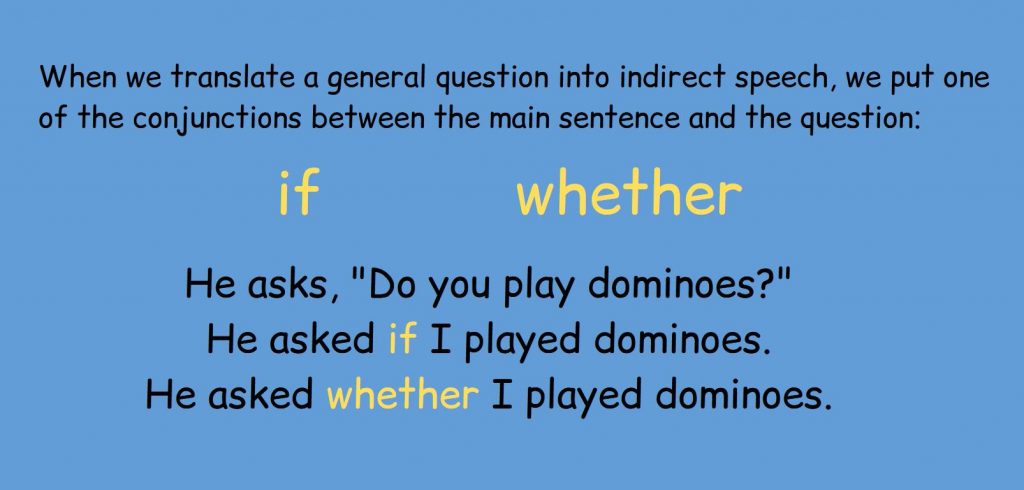

When turning questions into indirect speech, we have to pay attention to the following points:

- As in a declarative sentence, we have to change the pronouns and the time and place information, and set the tense back (backshift).

- Instead of that, we use a question word. If there is no question word, we use whether/if instead. Example: She asked him, “How often do you work?” → She asked him how often he worked. He asked me, “Do you know any famous people?” → He asked me if/whether I knew any famous people.

- In indirect questions, we put the subject before the verb. (In normal questions, the subject goes after the auxiliary verb.) Example: I asked him, “Have you met any famous people before?” → I asked him if/whether he had met any famous people before.

- We don’t use the auxiliary verb do in indirect questions. Therefore, we sometimes have to conjugate the main verb (for the third person singular or in the simple past). Example: I asked him, “What do you want to tell me?” → I asked him what he wanted to tell me.

- In subject questions, we put the verb directly after who or what. Example: I asked him, “Who is sitting here?” → I asked him who was sitting there.

We don’t just use indirect questions to report what another person has asked. We also use them to ask questions in a very polite manner.

When turning demands and requests into indirect speech, we only need to change the pronouns and the time and place information. We don’t have to pay attention to the tenses; we simply use an infinitive.

If it is a negative demand, then in indirect speech we use not + infinitive.

To express what someone should or can do in reported speech, we leave out the subject and the modal verb and instead we use the construction who/what/where/how + infinitive.

Say or Tell?

The words say and tell are not interchangeable:

- say = say something

- tell = say something to someone


Reported speech: statements

Do you know how to report what somebody else said?

Look at these examples to see how we can tell someone what another person said.

- direct speech: 'I love the Toy Story films,' she said.

- indirect speech: She said she loved the Toy Story films.

- direct speech: 'I worked as a waiter before becoming a chef,' he said.

- indirect speech: He said he'd worked as a waiter before becoming a chef.

- direct speech: 'I'll phone you tomorrow,' he said.

- indirect speech: He said he'd phone me the next day.


Grammar explanation

Reported speech is when we tell someone what another person said. To do this, we can use direct speech or indirect speech.

- direct speech: 'I work in a bank,' said Daniel.

- indirect speech: Daniel said that he worked in a bank.

In indirect speech, we often use a tense which is 'further back' in the past (e.g. worked ) than the tense originally used (e.g. work ). This is called 'backshift'. We also may need to change other words that were used, for example pronouns.

Present simple, present continuous and present perfect

When we backshift, present simple changes to past simple, present continuous changes to past continuous and present perfect changes to past perfect.

- 'I travel a lot in my job.' → Jamila said that she travelled a lot in her job.

- 'The baby's sleeping!' → He told me the baby was sleeping.

- 'I've hurt my leg.' → She said she'd hurt her leg.

Past simple and past continuous

When we backshift, past simple usually changes to past perfect simple, and past continuous usually changes to past perfect continuous.

- 'We lived in China for five years.' → She told me they'd lived in China for five years.

- 'It was raining all day.' → He told me it had been raining all day.

Past perfect

The past perfect doesn't change.

- 'I'd tried everything without success, but this new medicine is great.' → He said he'd tried everything without success, but the new medicine was great.

No backshift

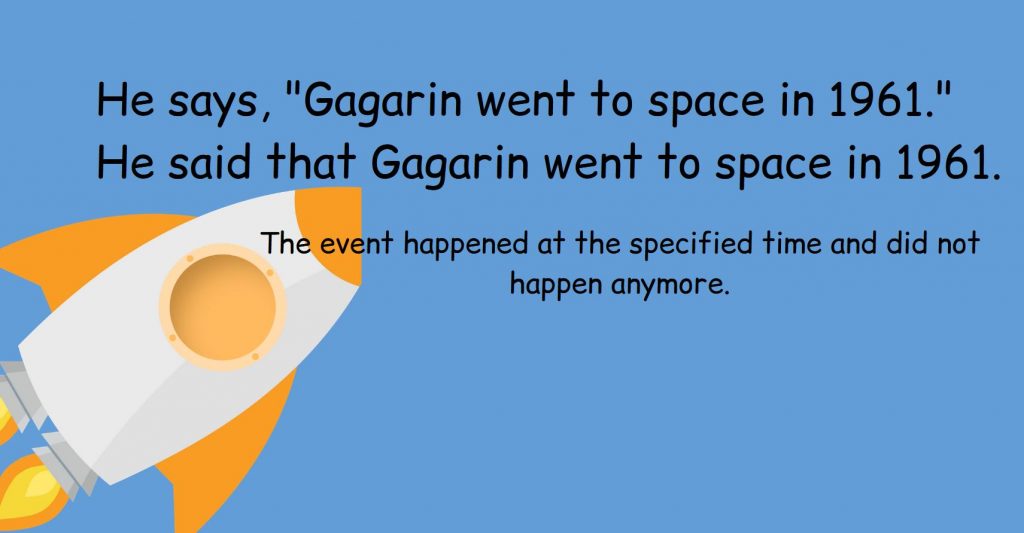

If what the speaker has said is still true or relevant, it's not always necessary to change the tense. This might happen when the speaker has used a present tense.

- 'I go to the gym next to your house.' → Jenny told me that she goes to the gym next to my house. I'm thinking about going with her.

- 'I'm working in Italy for the next six months.' → He told me he's working in Italy for the next six months. Maybe I should visit him!

- 'I've broken my arm!' → She said she's broken her arm, so she won't be at work this week.

Pronouns, demonstratives and adverbs of time and place

Pronouns also usually change in indirect speech.

- 'I enjoy working in my garden,' said Bob. → Bob said that he enjoyed working in his garden.

- 'We played tennis for our school,' said Alina. → Alina told me they'd played tennis for their school.

However, if you are the person or one of the people who spoke, then the pronouns don't change.

- 'I'm working on my thesis,' I said. → I told her that I was working on my thesis.

- 'We want our jobs back!' we said. → We said that we wanted our jobs back.

We also change demonstratives and adverbs of time and place if they are no longer accurate.

'This is my house.'

- He said this was his house. [You are currently in front of the house.]

- He said that was his house. [You are not currently in front of the house.]

'We like it here.'

- She told me they like it here. [You are currently in the place they like.]

- She told me they like it there. [You are not in the place they like.]

'I'm planning to do it today.'

- She told me she's planning to do it today. [It is currently still the same day.]

- She told me she was planning to do it that day. [It is not the same day any more.]

In the same way, 'these' changes to 'those', 'now' changes to 'then', 'yesterday' changes to 'the day before', 'tomorrow' changes to 'the next/following day' and 'ago' changes to 'before'.


Hello Team. If the reporting verb is in the present perfect, do we have to backshift the tenses of the direct speech or not? For example: He has said, "I bought a car yesterday."

1- He has said that he bought a car yesterday.

2- He has said that he had bought a car the previous day.


Hello Ahmed Imam,

It's not necessary to backshift the verb form if the situation being reported is still true. For example:

"I'm a doctor"

She told me she is a doctor. [she was a doctor when she said it and she is still a doctor now]

She told me she was a doctor. [she was a doctor when she said it and may or may not still be a doctor now]

The reporting verb in your example would be 'said' rather than 'has said' as we are talking about a particular moment in the past. For the other verb both 'bought' and 'had bought' are possible without any change in meaning. In fact, when the verb is past in the original sentence we usually do not shift the verb form back.

The LearnEnglish Team

Hello again. Which one is correct? Why?

- He has said that he (will - would) travel to Cairo with his father.

The present perfect is a present form, so generally 'will' is the correct form.

In this case, assuming that the man said 'I will travel to Cairo', then 'will' is the correct form. But if the man said 'I would travel to Cairo if I had time to do it', then 'would' would be the correct form since it is part of a conditional statement.

I think you were asking about the first situation (the general one), though. Does that make sense?

Best wishes, Kirk LearnEnglish team

Thank you for the information. It states that if what the speaker has said is still true or relevant, it's not always necessary to change the tense. I wonder if it is still correct to change the tense in this example: 'London is in the UK,' he said. → He said London was in the UK. Or does it have to be the present tense?

Hello Wen1996,

Yes, your version of the sentence is also correct. In this case, the past tense refers to the time the speaker made this statement. But this doesn't mean the statement isn't also true now.

Good evening from Turkey.

Is the following example correct: Question: When did she watch the movie?

She asked me when she had watched the movie. Or is it "when had she watched the movie"?

Does the subject come before the verb? Thank you.

Hello muratt,

This is a reported question, not an actual question, as you can see from the fact that it has no question mark at the end. Therefore no inversion is needed and the normal subject-verb word order is maintained: ...she had watched... is correct.

You can read more about this here:

https://learnenglish.britishcouncil.org/grammar/b1-b2-grammar/reported-speech-questions

Thank you for your response.

Hello Sir, kindly help with the following sentence:

She said, "When I was a child I wasn't afraid of ghosts."

Please tell me how to write this sentence in reported/ indirect speech.


Reported Speech

Perfect English Grammar

Reported Statements

Here's how it works:

We use a 'reporting verb' like 'say' or 'tell'. If this verb is in the present tense, it's easy. We just put 'she says' and then the sentence:

- Direct speech: I like ice cream.

- Reported speech: She says (that) she likes ice cream.

We don't need to change the tense, though probably we do need to change the 'person' from 'I' to 'she', for example. We also may need to change words like 'my' and 'your'. (As I'm sure you know, often, we can choose if we want to use 'that' or not in English. I've put it in brackets () to show that it's optional. It's exactly the same if you use 'that' or if you don't use 'that'.)

But if the reporting verb is in the past tense, then we usually change the tenses in the reported speech:

- Reported speech: She said (that) she liked ice cream.

If what was said is still true, the tense doesn't always have to change:

- Direct speech: The sky is blue.

- Reported speech: She said (that) the sky is/was blue.


Reported Questions

So now you have no problem with making reported speech from positive and negative sentences. But how about questions?

- Direct speech: Where do you live?

- Reported speech: She asked me where I lived.

- Direct speech: Where is Julie?

- Reported speech: She asked me where Julie was.

- Direct speech: Do you like chocolate?

- Reported speech: She asked me if I liked chocolate.

Reported Requests

There's more! What if someone asks you to do something (in a polite way)? For example:

- Direct speech: Close the window, please.

- Or: Could you close the window please?

- Or: Would you mind closing the window please?

- Reported speech: She asked me to close the window.

- Direct speech: Please don't be late.

- Reported speech: She asked us not to be late.

Reported Orders

- Direct speech: Sit down!

- Reported speech: She told me to sit down.


Hello! I'm Seonaid! I'm here to help you understand grammar and speak correct, fluent English.


My English Grammar

Ultimate English Grammar, Vocabulary, and Names Database

Changes in Indirect Speech

Welcome to a comprehensive tutorial on the proper use, types, and rules of indirect speech in English grammar. Indirect speech, also called reported speech, allows us to report what another person said without quoting their exact words. It is particularly useful in written language. This tutorial explains the changes that occur when switching from direct speech to indirect speech and the rules to follow during this transition.


Understanding Direct and Indirect Speech

Direct speech refers to the exact words someone uses when speaking. Indirect speech, by contrast, conveys the content of the person’s original words without quoting them.

Direct Speech: He said, “I am hungry.” Indirect Speech: He said that he was hungry.

A common feature of indirect speech is the change in verb tense. In the direct speech example, the speaker uses the present tense “am.” In the indirect version, the tense changes to the past “was,” even though the speaker may still be hungry.

Changes in Verb Tenses

When the reporting verb is in the past tense, the verb tense in indirect speech usually moves one step back in time from the tense in the direct speech. Here are the common changes:

- Present Simple becomes Past Simple.

- Present Continuous becomes Past Continuous.

- Present Perfect becomes Past Perfect.

- Present Perfect Continuous becomes Past Perfect Continuous.

- Past Simple becomes Past Perfect.

Direct: He said, “I need help.” Indirect: He said he needed help.

Direct: She said, “I am reading a book.” Indirect: She said that she was reading a book.
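The tense changes listed above work like a lookup table. The minimal Python sketch below writes them out as one; the names `BACKSHIFT` and `backshift` are hypothetical, chosen only for illustration. It maps tense names after a past-tense reporting verb and does not analyze real sentences. Tenses missing from the table, such as the past perfect, are returned unchanged because they cannot move further back.

```python
# Hypothetical lookup table: tense in the direct quote -> tense usually
# used in indirect speech after a past-tense reporting verb ("said").
BACKSHIFT = {
    "present simple": "past simple",
    "present continuous": "past continuous",
    "present perfect": "past perfect",
    "present perfect continuous": "past perfect continuous",
    "past simple": "past perfect",
}


def backshift(tense: str) -> str:
    """Return the backshifted tense name.

    Lookup is case-insensitive. Tenses not in the table (for example,
    the past perfect) are returned exactly as given.
    """
    return BACKSHIFT.get(tense.lower(), tense)
```

For example, `backshift("present simple")` gives "past simple", while `backshift("past perfect")` stays "past perfect".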

Changes in Time and Place References

Besides the tense, word usage for place and time often changes when converting from direct to indirect speech.

- ‘Now’ changes to ‘then’.

- ‘Today’ changes to ‘that day’.

- ‘Yesterday’ turns into ‘the day before’ or ‘the previous day’.

- ‘Tomorrow’ changes to ‘the next day’ or ‘the following day’.

- ‘Last week/month/year’ switches to ‘the previous week/month/year’.

- ‘Next week/month/year’ changes to ‘the following week/month/year’.

- ‘Here’ turns into ‘there’.

Direct: He said, “I will do it tomorrow.” Indirect: He said that he would do it the next day.

Direct: She said, “I was here.” Indirect: She said that she had been there.
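The time and place substitutions listed above can likewise be sketched as a word-for-word replacement. The Python example below is a hypothetical illustration, and `REFERENCES` and `shift_references` are invented names. It only swaps these expressions. It does not change tense or pronouns, and it does not preserve the capitalization of a replaced word.

```python
import re

# Hypothetical substitution table built from the list above.
REFERENCES = {
    "now": "then",
    "today": "that day",
    "yesterday": "the day before",
    "tomorrow": "the next day",
    "here": "there",
}
for unit in ("week", "month", "year"):
    REFERENCES[f"last {unit}"] = f"the previous {unit}"
    REFERENCES[f"next {unit}"] = f"the following {unit}"

# Longer phrases are tried first so multi-word expressions match as a whole;
# word boundaries (\b) stop "here" from matching inside "there" or "where".
_KEYS = sorted(REFERENCES, key=len, reverse=True)
_PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, _KEYS)) + r")\b", re.IGNORECASE)


def shift_references(clause: str) -> str:
    """Replace time and place words with their reported-speech forms."""
    return _PATTERN.sub(lambda m: REFERENCES[m.group(0).lower()], clause)


print(shift_references("I will do it tomorrow"))    # I will do it the next day
print(shift_references("I bought this last week"))  # I bought this the previous week
```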

Changes in Modals

Modals also change when transforming direct speech into indirect speech. Here are some common changes:

- ‘Can’ changes to ‘could’.

- ‘May’ changes to ‘might’.

- ‘Will’ changes to ‘would’.

- ‘Shall’ changes to ‘should’.

Direct: She said, “I can play the piano.” Indirect: She said that she could play the piano.

Direct: He said, “I will go shopping.” Indirect: He said that he would go shopping.
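Because the modal changes are one-to-one, they can be sketched the same way. In this hypothetical Python example, `MODALS` and `backshift_modals` are invented names. The table adds “must” → “had to,” a common reported-speech pattern, and contractions such as “can’t” are deliberately left alone. The code has no sense of context, so it would also change “will” used as a noun.

```python
import re

# Hypothetical modal backshift table: the four changes listed above,
# plus "must" -> "had to", a common reported-speech pattern.
MODALS = {
    "can": "could",
    "may": "might",
    "will": "would",
    "shall": "should",
    "must": "had to",
}

# The negative lookahead skips contractions like "can't", which would
# otherwise be mangled; capitalization of a replaced modal is not kept.
_MODAL_PATTERN = re.compile(r"\b(" + "|".join(MODALS) + r")\b(?!['’])", re.IGNORECASE)


def backshift_modals(clause: str) -> str:
    """Swap each modal for its backshifted form; other words are untouched."""
    return _MODAL_PATTERN.sub(lambda m: MODALS[m.group(0).lower()], clause)


print(backshift_modals("I can play the piano"))  # I could play the piano
print(backshift_modals("I will go shopping"))    # I would go shopping
```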

Reporting Orders, Requests, and Questions

When reporting orders, requests, and questions, the sentence structure changes as follows:

- ‘Tell’ + object + ‘to’ + infinitive for orders.

- Question word (or ‘if’/‘whether’ for yes/no questions) + subject + verb for questions, with no question mark.

- ‘Ask’ + object + ‘to’ + infinitive, or ‘ask if’ + subject + ‘could/would’ + verb, for polite requests.

Direct: He said to her, “Close the door.” Indirect: He told her to close the door.

Direct: She asked, “Where is the station?” Indirect: She asked where the station was.

In conclusion, reported speech becomes easier to understand and use effectively with practice. Understanding the transition from direct to indirect speech is vital to expressing yourself accurately and professionally, especially in written English. This guide provides the foundational information for mastering the changes in indirect speech. Practice these rules to become more fluent and confident in your English communication skills.


Direct and Indirect Speech: The Ultimate Guide

Direct and Indirect Speech are the two ways of reporting what someone said. The use of both direct and indirect speech is crucial in effective communication and writing. Understanding the basics of direct and indirect speech is important, but mastering the advanced techniques of these two forms of speech can take your writing to the next level. In this article, we will explore direct and indirect speech in detail and provide you with a comprehensive guide that covers everything you need to know.


What is Direct Speech?

Direct speech is a way of reporting what someone said using their exact words. Direct speech is typically enclosed in quotation marks to distinguish it from the writer’s own words. Here are some examples of direct speech:

- “I am going to the store,” said John.

- “I love ice cream,” exclaimed Mary.

- “The weather is beautiful today,” said Sarah.

In direct speech, the exact words spoken by the speaker are used, and the tense and pronouns used in the quote are maintained. Punctuation is also important in direct speech. Commas are used to separate the quote from the reporting verb, and full stops, question marks, or exclamation marks are used at the end of the quote, depending on the tone of the statement.

What is Indirect Speech?

Indirect speech is a way of reporting what someone said using a paraphrased version of their words. In indirect speech, the writer rephrases the speaker’s words and incorporates them into the sentence. Here are some examples of indirect speech:

- John said that he was going to the store.

- Mary exclaimed that she loved ice cream.

- Sarah said that the weather was beautiful that day.

In indirect speech, the tense and pronouns may change, depending on the context of the sentence. Indirect speech is not enclosed in quotation marks, and it is introduced by a reporting verb such as “said” or “told.”

Differences Between Direct and Indirect Speech

The structure of direct and indirect speech is different. Direct speech is presented in quotation marks, whereas indirect speech is incorporated into the sentence without quotation marks. The tenses and pronouns also differ: in direct speech, the tense and pronouns used in the quote are maintained, whereas in indirect speech they may change depending on the context of the sentence. Reporting verbs are also placed differently. In direct speech, the reporting verb can come before or after the quote, while in indirect speech it usually comes first and is often followed by “that,” “if,” or “whether.”

How to Convert Direct Speech to Indirect Speech

Converting direct speech to indirect speech involves changing the tense, pronouns, and reporting verb. Here are the steps involved in converting direct speech to indirect speech:

- Remove the quotation marks.

- Use a reporting verb to introduce the indirect speech.

- Change the tense of the verb in the quote if necessary.

- Change the pronouns if necessary.

- Add a conjunction such as “that,” “if,” or “whether” where needed.

Here is an example of converting direct speech to indirect speech:

Direct speech: “I am going to the store,” said John. Indirect speech: John said that he was going to the store.
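The conversion steps can be illustrated with a deliberately narrow Python sketch. The function `to_indirect` and its word tables are hypothetical. The sketch assumes a first-person statement from a male speaker, reported with “said.” It only backshifts the few auxiliaries listed in `VERBS`, so a sentence like “I need help” would not be converted correctly.

```python
# Hypothetical toy converter for one narrow case: a first-person statement
# reported after "said". It removes the quotation marks, adds "said that",
# swaps first-person pronouns (assuming a male speaker) and backshifts a
# few common auxiliaries. Other verbs, questions and time words are not handled.
PRONOUNS = {"i": "he", "me": "him", "my": "his", "we": "they", "us": "them", "our": "their"}
VERBS = {"am": "was", "is": "was", "are": "were", "have": "had", "has": "had",
         "will": "would", "can": "could"}


def to_indirect(speaker: str, quote: str) -> str:
    # Step 1: drop quotation marks and the closing punctuation of the quote.
    words = quote.strip().strip('“”"').rstrip(".!,").split()
    converted = []
    for word in words:
        key = word.lower()
        # Steps 3-4: change pronouns and tense where the tables say so.
        converted.append(PRONOUNS.get(key) or VERBS.get(key) or word)
    # Steps 2 and 5: add the reporting verb and the conjunction "that".
    return f"{speaker} said that {' '.join(converted)}."


print(to_indirect("John", "“I am going to the store,”"))
# John said that he was going to the store.
```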

How to Convert Indirect Speech to Direct Speech

Converting indirect speech to direct speech reverses these changes: you restore the speaker’s original tense and pronouns and place their words in quotation marks. Here are the steps involved in converting indirect speech to direct speech:

- Remove the conjunction (such as “that”) after the reporting verb.

- Use quotation marks to enclose the direct speech, and set it off from the reporting verb with a comma.

- Restore the tense the speaker originally used.

- Restore the speaker’s original pronouns.

Here is an example of converting indirect speech to direct speech:

Indirect speech: John said that he was going to the store. Direct speech: “I am going to the store,” said John.

Advanced Techniques for Using Direct and Indirect Speech

Using direct and indirect speech effectively can add depth and complexity to your writing. Here are some advanced techniques for using direct and indirect speech:

Blending Direct and Indirect Speech

Blending direct and indirect speech involves using both forms of speech in a single sentence or paragraph. This technique can create a more engaging and realistic narrative. Here is an example:

“Sarah said, ‘I can’t believe it’s already winter.’ Her friend replied that she loved the cold weather and was excited about the snowboarding season.”

In this example, direct speech is used to convey Sarah’s words, and indirect speech is used to convey her friend’s response.

Using Reported Questions

Reported questions are a form of indirect speech that convey a question someone asked without using quotation marks. Reported questions often use reporting verbs like “asked” or “wondered.” Here is an example:

“John asked if I had seen the movie the night before.”

In this example, the question “Did you see the movie last night?” is reported indirectly without using quotation marks.

Using Direct Speech to Convey Emotion

Direct speech can be used to convey emotion more effectively than indirect speech. When using direct speech to convey emotion, it’s important to choose the right tone and emphasis. Here is an example:

“She screamed, ‘I hate you!’ as she slammed the door.”

In this example, the use of direct speech and the exclamation mark convey the intense emotion of the moment.

- When should I use direct speech?

- Direct speech should be used when you want to report what someone said using their exact words. Direct speech is appropriate when you want to convey the speaker’s tone, emphasis, and emotion.

- When should I use indirect speech?

- Indirect speech should be used when you want to report what someone said using a paraphrased version of their words. Indirect speech is appropriate when you want to provide information without conveying the speaker’s tone, emphasis, or emotion.

- What are some common reporting verbs?

- Some common reporting verbs include “said,” “asked,” “exclaimed,” “whispered,” “wondered,” and “suggested.”

Direct and indirect speech are important tools for effective communication and writing. Understanding the differences between these two forms of speech and knowing how to use them effectively can take your writing to the next level. By using advanced techniques like blending direct and indirect speech and using direct speech to convey emotion, you can create engaging and realistic narratives that resonate with your readers.


100 Reported Speech Examples: How To Change Direct Speech Into Indirect Speech

Reported speech, also known as indirect speech, is a way of communicating what someone else has said without quoting their exact words. For example, if your friend said, “I am going to the store,” in reported speech you might convey this as “My friend said he was going to the store.” Reported speech is common in both spoken and written language, especially in storytelling, news reporting, and everyday conversations.

Reported speech can be quite challenging for English language learners because in order to change direct speech into reported speech, one must change the perspective and tense of what was said by the original speaker or writer. In this guide, we will explain in detail how to change direct speech into indirect speech and provide lots of examples of reported speech to help you understand. Here are the key aspects of converting direct speech into reported speech.

Reported Speech: Changing Pronouns

Pronouns are usually changed to match the perspective of the person reporting the speech. For example, “I” in direct speech may become “he” or “she” in reported speech, depending on the context. Here are some example sentences:

- Direct: “I am going to the park.” Reported: He said he was going to the park.

- Direct: “You should try the new restaurant.” Reported: She said that I should try the new restaurant.

- Direct: “We will win the game.” Reported: They said that they would win the game.

- Direct: “She loves her new job.” Reported: He said that she loves her new job.

- Direct: “He can’t come to the party.” Reported: She said that he couldn’t come to the party.

- Direct: “It belongs to me.” Reported: He said that it belonged to him.

- Direct: “They are moving to a new city.” Reported: She said that they were moving to a new city.

- Direct: “You are doing a great job.” Reported: He told me that I was doing a great job.

- Direct: “I don’t like this movie.” Reported: She said that she didn’t like that movie.

- Direct: “We have finished our work.” Reported: They said that they had finished their work.

- Direct: “You will need to sign here.” Reported: He said that I would need to sign there.

- Direct: “She can solve the problem.” Reported: He said that she could solve the problem.

- Direct: “He was not at home yesterday.” Reported: She said that he had not been at home the day before.

- Direct: “It is my responsibility.” Reported: He said that it was his responsibility.

- Direct: “We are planning a surprise.” Reported: They said that they were planning a surprise.

Reported Speech: Reporting Verbs

In reported speech, various reporting verbs are used depending on the nature of the statement or the intention behind the communication. These verbs are essential for conveying the original tone, intent, or action of the speaker. Here are some examples demonstrating the use of different reporting verbs in reported speech:

- Direct: “I will help you,” she promised. Reported: She promised that she would help me.

- Direct: “You should study harder,” he advised. Reported: He advised that I should study harder.

- Direct: “I didn’t take your book,” he denied. Reported: He denied taking my book.

- Direct: “Let’s go to the cinema,” she suggested. Reported: She suggested going to the cinema.

- Direct: “I love this song,” he confessed. Reported: He confessed that he loved that song.

- Direct: “I haven’t seen her today,” she claimed. Reported: She claimed that she hadn’t seen her that day.

- Direct: “I will finish the project,” he assured. Reported: He assured me that he would finish the project.

- Direct: “I’m not feeling well,” she complained. Reported: She complained of not feeling well.

- Direct: “This is how you do it,” he explained. Reported: He explained how to do it.

- Direct: “I saw him yesterday,” she stated. Reported: She stated that she had seen him the day before.

- Direct: “Please open the window,” he requested. Reported: He requested that I open the window.

- Direct: “I can win this race,” he boasted. Reported: He boasted that he could win the race.

- Direct: “I’m moving to London,” she announced. Reported: She announced that she was moving to London.

- Direct: “I didn’t understand the instructions,” he admitted. Reported: He admitted that he didn’t understand the instructions.

- Direct: “I’ll call you tonight,” she promised. Reported: She promised to call me that night.

Reported Speech: Tense Shifts

When converting direct speech into reported speech, the verb tense is often shifted back one step in time. This is known as the “backshift” of tenses, and it reflects the time that has passed between the original speech and the reporting. Here are some examples to illustrate how different tenses in direct speech are transformed in reported speech:

- Direct: “I am eating.” Reported: He said he was eating.

- Direct: “They will go to the park.” Reported: She mentioned they would go to the park.

- Direct: “We have finished our homework.” Reported: They told me they had finished their homework.

- Direct: “I do my exercises every morning.” Reported: He explained that he did his exercises every morning.

- Direct: “She is going to start a new job.” Reported: He heard she was going to start a new job.

- Direct: “I can solve this problem.” Reported: She said she could solve that problem.

- Direct: “We are visiting Paris next week.” Reported: They said they were visiting Paris the following week.

- Direct: “I will be waiting outside.” Reported: He stated he would be waiting outside.

- Direct: “They have been studying for hours.” Reported: She mentioned they had been studying for hours.

- Direct: “I can’t understand this chapter.” Reported: He complained that he couldn’t understand that chapter.

- Direct: “We were planning a surprise.” Reported: They told me they had been planning a surprise.

- Direct: “She has to complete her assignment.” Reported: He said she had to complete her assignment.

- Direct: “I will have finished the project by Monday.” Reported: She stated she would have finished the project by Monday.

- Direct: “They are going to hold a meeting.” Reported: She heard they were going to hold a meeting.

- Direct: “I must leave.” Reported: He said he had to leave.

Reported Speech: Changing Time and Place References

When converting direct speech into reported speech, references to time and place often need to be adjusted to fit the context of the reported speech. This is because the time and place relative to the speaker may have changed from the original statement to the time of reporting. Here are some examples to illustrate how time and place references change:

- Direct: “I will see you tomorrow.” Reported: He said he would see me the next day.

- Direct: “We went to the park yesterday.” Reported: They said they went to the park the day before.

- Direct: “I have been working here since Monday.” Reported: She mentioned she had been working there since Monday.

- Direct: “Let’s meet here at noon.” Reported: He suggested meeting there at noon.

- Direct: “I bought this last week.” Reported: She said she had bought it the previous week.

- Direct: “I will finish this by tomorrow.” Reported: He stated he would finish it by the next day.

- Direct: “She will move to New York next month.” Reported: He heard she would move to New York the following month.

- Direct: “They were at the festival this morning.” Reported: She said they were at the festival that morning.

- Direct: “I saw him here yesterday.” Reported: She mentioned she saw him there the day before.

- Direct: “We will return in a week.” Reported: They said they would return in a week.

- Direct: “I have an appointment today.” Reported: He said he had an appointment that day.

- Direct: “The event starts next Friday.” Reported: She mentioned the event starts the following Friday.

- Direct: “I lived in Berlin two years ago.” Reported: He stated he had lived in Berlin two years before.

- Direct: “I will call you tonight.” Reported: She said she would call me that night.

- Direct: “I was at the office yesterday.” Reported: He mentioned he was at the office the day before.

Reported Speech: Question Format

When converting questions from direct speech into reported speech, the format changes significantly. Unlike statements, questions require rephrasing into a statement format and often involve the use of introductory verbs like ‘asked’ or ‘inquired’. Here are some examples to demonstrate how questions in direct speech are converted into statements in reported speech:

- Direct: “Are you coming to the party?” Reported: She asked if I was coming to the party.

- Direct: “What time is the meeting?” Reported: He inquired what time the meeting was.

- Direct: “Why did you leave early?” Reported: They wanted to know why I had left early.

- Direct: “Can you help me with this?” Reported: She asked if I could help her with that.

- Direct: “Where did you buy this?” Reported: He wondered where I had bought that.

- Direct: “Who is going to the concert?” Reported: They asked who was going to the concert.

- Direct: “How do you solve this problem?” Reported: She asked how to solve that problem.

- Direct: “Is this the right way to the station?” Reported: He inquired whether it was the right way to the station.

- Direct: “Do you know her name?” Reported: They asked if I knew her name.

- Direct: “Why are they moving out?” Reported: She wondered why they were moving out.

- Direct: “Have you seen my keys?” Reported: He asked if I had seen his keys.

- Direct: “What were they talking about?” Reported: She wanted to know what they had been talking about.

- Direct: “When will you return?” Reported: He asked when I would return.

- Direct: “Can she drive a manual car?” Reported: They inquired if she could drive a manual car.

- Direct: “How long have you been waiting?” Reported: She asked how long I had been waiting.

Reported Speech: Omitting Quotation Marks

In reported speech, quotation marks are not used, differentiating it from direct speech which requires them to enclose the spoken words. Reported speech summarizes or paraphrases what someone said without the need for exact wording. Here are examples showing how direct speech with quotation marks is transformed into reported speech without them:

- Direct: “I am feeling tired,” she said. Reported: She said she was feeling tired.

- Direct: “We will win the game,” he exclaimed. Reported: He exclaimed that they would win the game.

- Direct: “I don’t like apples,” the boy declared. Reported: The boy declared that he didn’t like apples.

- Direct: “You should visit Paris,” she suggested. Reported: She suggested that I should visit Paris.

- Direct: “I will be late,” he warned. Reported: He warned that he would be late.

- Direct: “I can’t believe you did that,” she said in surprise. Reported: She expressed her surprise that I had done that.

- Direct: “I need help with this task,” he admitted. Reported: He admitted that he needed help with the task.

- Direct: “I have never been to Italy,” she confessed. Reported: She confessed that she had never been to Italy.

- Direct: “We saw a movie last night,” they mentioned. Reported: They mentioned that they saw a movie the night before.

- Direct: “I am learning to play the piano,” he revealed. Reported: He revealed that he was learning to play the piano.

- Direct: “You must finish your homework,” she instructed. Reported: She instructed me to finish my homework.

- Direct: “I will call you tomorrow,” he promised. Reported: He promised that he would call me the next day.

- Direct: “I have finished my assignment,” she announced. Reported: She announced that she had finished her assignment.

- Direct: “I cannot attend the meeting,” he apologized. Reported: He apologized for not being able to attend the meeting.

- Direct: “I don’t remember where I put it,” she confessed. Reported: She confessed that she didn’t remember where she put it.


Reported speech

Reported speech is how we represent the speech of other people or what we ourselves say. There are two main types of reported speech: direct speech and indirect speech.

Direct speech repeats the exact words the person used, or how we remember their words:

Barbara said, “I didn’t realise it was midnight.”

In indirect speech, the original speaker’s words are changed.

Barbara said she hadn’t realised it was midnight.

In this example, I becomes she and the verb tense reflects the fact that time has passed since the words were spoken: didn’t realise becomes hadn’t realised.

Indirect speech focuses more on the content of what someone said rather than their exact words:

“I’m sorry,” said Mark. (direct)

Mark apologised. (indirect: report of a speech act)

In a similar way, we can report what people wrote or thought:

‘I will love you forever,’ he wrote, and then posted the note through Alice’s door. (direct report of what someone wrote)

He wrote that he would love her forever, and then posted the note through Alice’s door. (indirect report of what someone wrote)

I need a new direction in life, she thought. (direct report of someone’s thoughts)

She thought that she needed a new direction in life. (indirect report of someone’s thoughts)


Reported speech: reporting and reported clauses

Speech reports consist of two parts: the reporting clause and the reported clause. The reporting clause includes a verb such as say, tell, ask, reply or shout, usually in the past simple, and the reported clause includes what the original speaker said.

Reported speech: punctuation

Direct speech

In direct speech we usually put a comma between the reporting clause and the reported clause. The words of the original speaker are enclosed in inverted commas, either single (‘…’) or double (“…”). If the reported clause comes first, we put the comma inside the inverted commas:

“I couldn’t sleep last night,” he said.

Rita said, ‘I don’t need you any more.’

If the direct speech is a question or exclamation, we use a question mark or exclamation mark, not a comma:

‘Is there a reason for this?’ she asked.

“I hate you!” he shouted.

We sometimes use a colon (:) between the reporting clause and the reported clause when the reporting clause is first:

The officer replied: ‘It is not possible to see the General. He’s busy.’


Indirect speech

In indirect speech it is more common for the reporting clause to come first. When the reporting clause is first, we don’t put a comma between the reporting clause and the reported clause. When the reporting clause comes after the reported clause, we use a comma to separate the two parts:

She told me they had left her without any money.

Not: She told me, they had left her without any money.

Nobody had gone in or out during the previous hour, he informed us.

We don’t use question marks or exclamation marks in indirect reports of questions and exclamations:

He asked me why I was so upset.

Not: He asked me why I was so upset?

Reported speech: reporting verbs

Say and tell

We can use say and tell to report statements in direct speech, but say is more common. We don’t always mention the person being spoken to with say, but if we do mention them, we use a prepositional phrase with to (to me, to Lorna):

‘I’ll give you a ring tomorrow,’ she said.

‘Try to stay calm,’ she said to us in a low voice.

Not: ‘Try to stay calm,’ she said us in a low voice.

With tell, we always mention the person being spoken to; we use an indirect object:

‘Enjoy yourselves,’ he told them.

Not: ‘Enjoy yourselves,’ he told.

In indirect speech, say and tell are both common as reporting verbs. We don’t use an indirect object with say, but we always use an indirect object with tell:

He said he was moving to New Zealand.

Not: He said me he was moving to New Zealand.

He told me he was moving to New Zealand.

Not: He told he was moving to New Zealand.

We use say, but not tell, to report questions:

‘Are you going now?’ she said.

Not: ‘Are you going now?’ she told me.

We use say, not tell, to report greetings, congratulations and other wishes:

‘Happy birthday!’ she said.

Not: ‘Happy birthday!’ she told me.

Everyone said good luck to me as I went into the interview.

Not: Everyone told me good luck …


Other reporting verbs

Reporting verbs such as admit, maintain and point out are more common in indirect reports, in both speaking and writing:

Simon admitted that he had forgotten to email Andrea.

Louis always maintains that there is royal blood in his family.

The builder pointed out that the roof was in very poor condition.

Most of these verbs are used in direct speech reports in written texts such as novels and newspaper reports. In ordinary conversation, we don’t use them in direct speech. The reporting clause usually comes second, but can sometimes come first:

‘Who is that person?’ she asked.

‘It was my fault,’ he confessed.

‘There is no cause for alarm,’ the Minister insisted.


Reported Speech - Definition, Rules and Usage with Examples

Reported speech or indirect speech is the form of speech used to convey what someone said at some point in time. This article covers what reported speech means, how it is defined, and how and when to use it, along with examples. You can also try the practice questions to check how well you have understood the topic.

What is reported speech?

Reported speech is the form in which one can convey a message said by oneself or someone else, mostly in the past. It can also be said to be the third person view of what someone has said. In this form of speech, you need not use quotation marks as you are not quoting the exact words spoken by the speaker, but just conveying the message.

Now, take a look at the following dictionary definitions for a clearer idea of what it is.

Reported speech, according to the Oxford Learner’s Dictionary, is defined as “a report of what somebody has said that does not use their exact words.” The Collins Dictionary defines reported speech as “speech which tells you what someone said, but does not use the person’s actual words.” According to the Cambridge Dictionary, reported speech is defined as “the act of reporting something that was said, but not using exactly the same words.” The Macmillan Dictionary defines reported speech as “the words that you use to report what someone else has said.”

Reported speech is a little different from direct speech. As already discussed, reported speech is used to tell what someone said and does not use the exact words of the speaker. Take a look at the following rules so that you can use reported speech effectively.

- The first thing you have to keep in mind is that you need not use any quotation marks as you are not using the exact words of the speaker.

- You can use the following formula to construct a sentence in reported speech.

- You can use verbs like said, asked, requested, ordered, complained, exclaimed, screamed, told, etc. If you are reporting a declarative sentence, you can use verbs like told, said, etc. followed by ‘that’ and end the sentence with a full stop. When you are reporting interrogative sentences, you can use the verbs enquired, inquired, asked, etc. and remove the question mark. If you are reporting imperative sentences, you can use verbs like requested, commanded, pleaded, ordered, etc. If you are reporting exclamatory sentences, you can use the verb exclaimed and remove the exclamation mark. Remember that the structure of the sentences also changes accordingly.

- Furthermore, keep in mind that the sentence structure, tense, pronouns, modal verbs, and some specific adverbs of place and adverbs of time change when a sentence is transformed into indirect/reported speech.

Transforming Direct Speech into Reported Speech

As discussed earlier, when transforming a sentence from direct speech into reported speech, you will have to change the pronouns, tense, modal verbs, and adverbs of time and place used by the speaker.

Here are some tips you can follow to become a pro in using reported speech.

- Select a play, a drama or a short story with dialogues and try transforming the sentences in direct speech into reported speech.

- Write about an incident or speak about a day in your life using reported speech.

- Develop a story by following prompts or on your own using reported speech.

Given below are a few examples to show you how reported speech can be written. Check them out.

- Santana said that she would be auditioning for the lead role in Funny Girl.

- Blaine requested us to help him with the algebraic equations.

- Karishma asked me if I knew where her car keys were.

- The judges announced that the Warblers were the winners of the annual a cappella competition.

- Binsha assured us that she would reach Bangalore by 8 p.m.

- Kumar said that he had gone to the doctor the previous day.

- Lakshmi asked Teena if she would accompany her to the railway station.

- Jibin told me that he would help me out after lunch.

- The police ordered everyone to leave the bus stop immediately.

- Rahul said that he was drawing a caricature.

Transform the following sentences into reported speech by making the necessary changes.

1. Rachel said, “I have an interview tomorrow.”

2. Mahesh said, “What is he doing?”

3. Sherly said, “My daughter is playing the lead role in the skit.”

4. Dinesh said, “It is a wonderful movie!”

5. Suresh said, “My son is getting married next month.”

6. Preetha said, “Can you please help me with the invitations?”

7. Anna said, “I look forward to meeting you.”

8. The teacher said, “Make sure you complete the homework before tomorrow.”

9. Sylvester said, “I am not going to cry anymore.”

10. Jade said, “My sister is moving to Los Angeles.”

Now, find out if you have answered all of them correctly.

1. Rachel said that she had an interview the next day.

2. Mahesh asked what he was doing.

3. Sherly said that her daughter was playing the lead role in the skit.

4. Dinesh exclaimed that it was a wonderful movie.

5. Suresh said that his son was getting married the following month.

6. Preetha asked if I could help her with the invitations.

7. Anna said that she looked forward to meeting me.

8. The teacher told us to make sure we completed the homework before the next day.

9. Sylvester said that he was not going to cry anymore.

10. Jade said that his sister was moving to Los Angeles.

What is reported speech?

What is the definition of reported speech?

Reported speech, according to the Oxford Learner’s Dictionary, is defined as “a report of what somebody has said that does not use their exact words.” The Collins Dictionary defines reported speech as “speech which tells you what someone said, but does not use the person’s actual words.” According to the Cambridge Dictionary, reported speech is defined as “the act of reporting something that was said, but not using exactly the same words.” The Macmillan Dictionary defines reported speech as “the words that you use to report what someone else has said.”

What is the formula of reported speech?

You can use the following formula to construct a sentence in reported speech: Subject + said that + (report whatever the speaker said).
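The formula can be written as a tiny Python function. This is a sketch, not a converter: the hypothetical `report_statement` helper assumes you have already changed the pronouns, tense and adverbs yourself, and it only joins the parts together in the order the formula gives.

```python
def report_statement(subject: str, reported_clause: str, verb: str = "said") -> str:
    """Apply the formula: Subject + reporting verb + that + reported clause + full stop.

    The clause is assumed to be already converted (pronouns, tense and
    adverbs changed); this function only assembles the sentence.
    """
    clause = reported_clause.strip().rstrip(".!?")
    return f"{subject} {verb} that {clause}."


if __name__ == "__main__":
    # Answer 1 from the exercise above.
    print(report_statement("Rachel", "she had an interview the next day"))
    # "told" needs an object, so pass it together with the verb.
    print(report_statement("Jibin", "he would help me out after lunch", verb="told me"))
```

Passing the object along with the verb ("told me") is a simplification; it keeps the helper short while still covering verbs that need a listener.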

Give some examples of reported speech.

Given below are a few examples to show you how reported speech can be written.


What Is Reported Speech and How Do You Use It? (With Examples)

Reported speech and indirect speech are two terms for the same concept: expressing what someone else has said. Reported speech differs from direct speech because it does not use the speaker's exact words. Instead, a reporting verb introduces the reported speech, and the tense and pronouns change to reflect the shift in perspective. There are two main types of reported speech: statements and questions.

1. Reported statements: In reported statements, the reporting verb is usually "said." The tense in the reported speech shifts back (for example, from present simple to past simple), and any pronouns referring to the speaker or listener change to reflect the shift in perspective. For example, "I am going to the store" becomes "He said that he was going to the store."

2. Reported questions: In reported questions, the reporting verb is usually "asked." The tense shifts back in the same way, and the word order changes from that of a question to that of a statement. For example, "What time is it?" becomes "She asked what time it was."

It's important to note that the tense shift in reported speech depends on the context and on when the words are reported. Here are a few more examples:

- Direct speech: "I will call you later." Reported speech: He said that he would call me later.

- Direct speech: "Did you finish your homework?" Reported speech: She asked if I had finished my homework.

- Direct speech: "I love pizza." Reported speech: They said that they loved pizza.

When do we use reported speech?

Reported speech is used to report what someone else has said, thought, or written. It is often used when you want to relate what someone said without quoting them directly, and it appears in a variety of contexts, such as news reports, academic writing, and everyday conversation. Common situations include:

- News reports: Journalists often use reported speech to relay what someone said in an interview or press conference.

- Business and professional communication: In professional settings, reported speech can be used to summarize what was discussed in a meeting or to report feedback from a customer.

- Conversational English: In everyday conversation, reported speech is used to relate what someone else said. For example, "She told me that she was running late."

- Narration: In written narratives or storytelling, reported speech can convey what a character said or thought.

How do I form reported speech?

1. Change the pronouns and adverbs of time and place: In reported speech, you need to change the pronouns and the adverbs of time and place to reflect the new speaker's point of view. For example:

- Direct speech: "I'm going to the store now," she said. Reported speech: She said she was going to the store then.

In this example, the pronoun "I" changes to "she," and the adverb "now" changes to "then."

2. Change the tense: In reported speech, you usually need to shift the tense of the verb back to reflect the change from direct to indirect speech. For example:

- Direct speech: "I will meet you at the park tomorrow," he said. Reported speech: He said he would meet me at the park the next day.

In this example, "will" changes to its past form "would," and "tomorrow" changes to "the next day."

3. Choose the reporting verb: In reported speech, you can use different reporting verbs such as "say," "tell," "ask," or "inquire," depending on the context. For example:

- Direct speech: "Did you finish your homework?" she asked. Reported speech: She asked if I had finished my homework.

In this example, the question is introduced with "asked ... if," the past simple "did you finish" becomes the past perfect "had finished," and "you" and "your" become "I" and "my."

Overall, when forming reported speech, pay attention to the verb tense and to the changes in pronouns, adverbs, and reporting verbs so that you convey the original speaker's message accurately.

How do I change the pronouns and adverbs in reported speech?

1. Changing pronouns: In reported speech, the pronouns in the original statement must change to reflect the perspective of the person reporting. Generally, first-person pronouns (I, me, my, mine, we, us, our, ours) change to agree with the subject of the reporting verb, second-person pronouns (you, your, yours) change to agree with the object of the reporting verb, and third-person pronouns (he, him, his, she, her, hers, it, its, they, them, their, theirs) usually stay the same. For example:

- Direct speech: "I love chocolate." Reported speech: She said she loved chocolate.

- Direct speech: "You should study harder." Reported speech: He advised me to study harder.

- Direct speech: "She is reading a book." Reported speech: They noticed that she was reading a book.

2. Changing adverbs: In reported speech, adverbs and adverbial phrases of time or place may need to change to reflect the perspective of the person reporting. For example:

- Direct speech: "I'm going to the cinema tonight." Reported speech: She said she was going to the cinema that night.

- Direct speech: "He is here." Reported speech: She said he was there.

Note that the adverb "now" usually changes to "then" or is omitted altogether in reported speech, depending on the context. Keep in mind that the changes to pronouns and adverbs depend on the context and the perspective of the person reporting. With practice, you can become more comfortable making these changes.
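The time and place changes above are essentially a substitution table, so they can be sketched in Python. This is a minimal illustration under stated assumptions: the helper name `shift_adverbs` is hypothetical, the pairs come from the examples in this article plus the common pairs "yesterday → the previous day" and "today → that day," and real usage depends on context (if the words are reported on the same day, "tonight" may stay "tonight"). Pronouns are left out because their change depends on who is speaking to whom.

```python
import re

# Time and place shifts from the examples in this article, plus the common
# pairs "yesterday" and "today". Context can override any of them.
ADVERB_SHIFTS = {
    "last weekend": "the previous weekend",
    "next month": "the following month",
    "tomorrow": "the next day",
    "yesterday": "the previous day",
    "tonight": "that night",
    "today": "that day",
    "now": "then",
    "here": "there",
}

# One regex that tries longer phrases first and matches whole words only.
_PATTERN = re.compile(
    r"\b("
    + "|".join(re.escape(p) for p in sorted(ADVERB_SHIFTS, key=len, reverse=True))
    + r")\b"
)


def shift_adverbs(clause: str) -> str:
    """Replace each time/place adverb with its reported-speech equivalent.

    All replacements happen in a single pass, so a replacement such as
    "the next day" is never rewritten again. Matching is case-sensitive.
    """
    return _PATTERN.sub(lambda m: ADVERB_SHIFTS[m.group(1)], clause)


if __name__ == "__main__":
    print(shift_adverbs("she was going to the cinema tonight"))
    print(shift_adverbs("he was here"))
```

A single combined pattern is used instead of a loop of replacements so that one substitution can never feed into another.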

How do I change the tense in reported speech?

In reported speech, the tense of the reported verb usually changes to reflect the change from direct to indirect speech. Here are some guidelines on how to change the tense in reported speech:

- Present simple in direct speech changes to past simple in reported speech. Direct speech: "I like pizza." Reported speech: She said she liked pizza.

- Present continuous in direct speech changes to past continuous in reported speech. Direct speech: "I am studying for my exam." Reported speech: He said he was studying for his exam.

- Present perfect in direct speech changes to past perfect in reported speech. Direct speech: "I have finished my work." Reported speech: She said she had finished her work.

- Past simple in direct speech changes to past perfect in reported speech. Direct speech: "I visited my grandparents last weekend." Reported speech: She said she had visited her grandparents the previous weekend.

- Will in direct speech changes to would in reported speech. Direct speech: "I will help you with your project." Reported speech: He said he would help me with my project.

- Can in direct speech changes to could in reported speech. Direct speech: "I can speak French." Reported speech: She said she could speak French.

Remember that the tense changes in reported speech depend on the tense of the verb in the direct speech, and the tense you use in reported speech should match the time frame of the new speaker's perspective. With practice, you can become more comfortable with changing the tense in reported speech.
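The guidelines above can be summarized as two small lookup tables. The Python sketch below is illustrative only: `BACKSHIFT`, `MODAL_SHIFT`, `backshift` and `shift_modals` are hypothetical names. Tenses the article does not list are returned unchanged, which is correct for the past perfect (it has no further past form) but is only a placeholder for other tenses. `shift_modals` matches lowercase whole words, so a capitalized "Can" at the start of a question is not handled.

```python
import re

# Tense backshift rules from the list above (direct speech -> reported speech).
BACKSHIFT = {
    "present simple": "past simple",
    "present continuous": "past continuous",
    "present perfect": "past perfect",
    "past simple": "past perfect",
}

# Modal verbs from the list above.
MODAL_SHIFT = {"will": "would", "can": "could"}


def backshift(tense: str) -> str:
    """Return the reported-speech tense; tenses not in the table are kept as-is."""
    return BACKSHIFT.get(tense, tense)


def shift_modals(clause: str) -> str:
    """Replace the lowercase whole words "will" and "can" with "would" and "could"."""
    return re.sub(r"\b(will|can)\b", lambda m: MODAL_SHIFT[m.group(1)], clause)


if __name__ == "__main__":
    for tense in BACKSHIFT:
        print(f"{tense} -> {backshift(tense)}")
    print(shift_modals("he will help me with my project"))
```

The word-level modal swap only works on a clause whose pronouns have already been changed; it does not know that "you" should become "me."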

Do I always need to use a reporting verb in reported speech?

No, you do not always need to use a reporting verb in reported speech. However, using a reporting verb can help to clarify who is speaking and add more context to the reported speech. In some cases, reported speech can be introduced by phrases such as "I heard that" or "It seems that" instead of a reporting verb. For example:

- Direct speech: "I'm going to the cinema tonight." Reported speech with a reporting verb: She said she was going to the cinema tonight. Reported speech without a reporting verb: It seems that she's going to the cinema tonight.

That said, a reporting verb makes reported speech more formal and accurate. When using reported speech in academic writing or journalism, it's generally recommended to use a reporting verb to make the report clearer and more credible. Some common reporting verbs include say, tell, explain, ask, suggest, and advise. For example:

- Direct speech: "I think we should invest in renewable energy." Reported speech with a reporting verb: She suggested that they invest in renewable energy.

Overall, while a reporting verb is not always required, it can make reported speech clearer and more accurate.

How do I use reported speech to report questions and commands?

1. Reporting questions: When reporting a question, use a reporting verb such as "asked" or "wondered," followed by the question word (or "if"/"whether" for a yes/no question), the subject, and the verb. You also need to change the word order from that of a question to that of a statement. For example:

- Direct speech: "What time is the meeting?" Reported speech: She asked what time the meeting was.

Note that the question mark is not used in reported speech.

2. Reporting commands: When reporting a command, use a reporting verb such as "ordered" or "told," followed by the person, to + infinitive, and any additional information. For example:

- Direct speech: "Clean your room!" Reported speech: She ordered me to clean my room.

Note that the exclamation mark is not used in reported speech.

When you report a question, the tense usually shifts back as well: for example, present simple changes to past simple, and will changes to would. A command reported with to + infinitive needs no tense change. Here are some more examples:

- Direct speech: "Will you go to the party with me?" Reported speech: She asked if I would go to the party with her.

- Direct speech: "Please bring me a glass of water." Reported speech: She requested that I bring her a glass of water.

Remember that when you report questions and commands, the reporting verbs and verb tenses are important for conveying the intended meaning accurately.
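The two patterns above (reporting verb + question word or "if" + subject + verb, and reporting verb + person + to + infinitive) can be sketched as string templates in Python. This is a simplified illustration, not a parser: the hypothetical helpers `report_question` and `report_command` expect the sentence already split into parts, with the verb already backshifted and the pronouns already changed, because reordering a question automatically would require real grammatical analysis.

```python
from typing import Optional


def report_question(speaker: str, question_word: Optional[str], subject: str,
                    verb: str, rest: str = "", reporting_verb: str = "asked") -> str:
    """Build a reported question in statement word order.

    question_word is e.g. "what time"; pass None for a yes/no question,
    which is introduced with "if". The verb should already be backshifted.
    """
    intro = question_word if question_word else "if"
    parts = [speaker, reporting_verb, intro, subject, verb, rest]
    # Join the non-empty parts and end with a full stop, not a question mark.
    return " ".join(p for p in parts if p) + "."


def report_command(speaker: str, reporting_verb: str, listener: str, action: str) -> str:
    """Build a reported command: speaker + verb + person + to + infinitive."""
    return f"{speaker} {reporting_verb} {listener} to {action}."


if __name__ == "__main__":
    print(report_question("She", "what time", "the meeting", "was"))
    print(report_question("She", None, "I", "would go", "to the party with her"))
    print(report_command("She", "ordered", "me", "clean my room"))
```

Note how the subject comes before the verb in both question examples ("the meeting was," "I would go"); that is the statement word order the guidelines describe.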

How do I form questions in reported speech?