The Future of AI Research: 20 Thesis Ideas for Undergraduate Students in Machine Learning and Deep Learning for 2023!

A comprehensive guide for crafting an original and innovative thesis in the field of AI.

By Aarafat Islam on 2023-01-11

“The beauty of machine learning is that it can be applied to any problem you want to solve, as long as you can provide the computer with enough examples.” — Andrew Ng

This article lists 20 potential thesis ideas for an undergraduate program in machine learning and deep learning in 2023. Each idea comes with an introduction that gives a brief overview of the topic and states the research objectives. The ideas span different areas of machine learning and deep learning, such as computer vision, natural language processing, robotics, finance, and drug discovery. The article also emphasizes the importance of originality and of proper citation in order to avoid plagiarism.

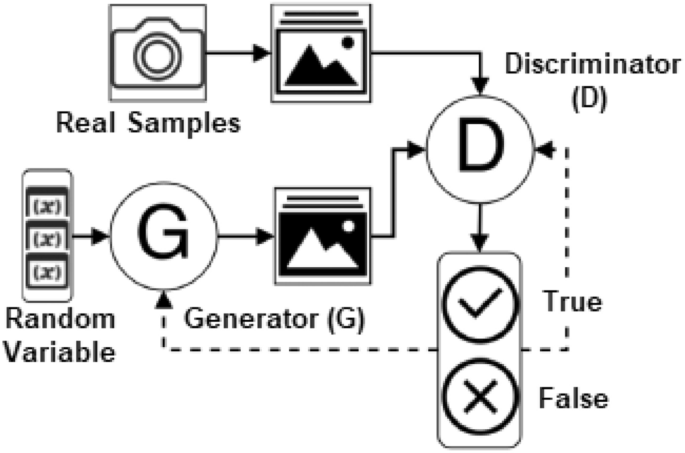

1. Investigating the use of Generative Adversarial Networks (GANs) in medical imaging: A deep learning approach to improve the accuracy of medical diagnoses.

Introduction: Medical imaging is an important tool in the diagnosis and treatment of various medical conditions. However, accurately interpreting medical images can be challenging, especially for less experienced doctors. This thesis aims to explore the use of GANs in medical imaging, in order to improve the accuracy of medical diagnoses.

2. Exploring the use of deep learning in natural language generation (NLG): An analysis of the current state-of-the-art and future potential.

Introduction: Natural language generation is an important field in natural language processing (NLP) that deals with creating human-like text automatically. Deep learning has shown promising results in NLP tasks such as machine translation, sentiment analysis, and question-answering. This thesis aims to explore the use of deep learning in NLG and analyze the current state-of-the-art models, as well as potential future developments.

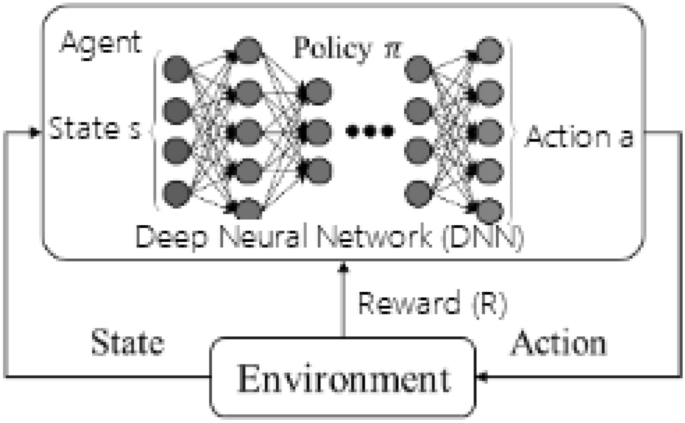

3. Development and evaluation of deep reinforcement learning (RL) for robotic navigation and control.

Introduction: Robotic navigation and control are challenging tasks, which require a high degree of intelligence and adaptability. Deep RL has shown promising results in various robotics tasks, such as robotic arm control, autonomous navigation, and manipulation. This thesis aims to develop and evaluate a deep RL-based approach for robotic navigation and control and evaluate its performance in various environments and tasks.

4. Investigating the use of deep learning for drug discovery and development.

Introduction: Drug discovery and development is a time-consuming and expensive process, which often involves high failure rates. Deep learning has been used to improve various tasks in bioinformatics and biotechnology, such as protein structure prediction and gene expression analysis. This thesis aims to investigate the use of deep learning for drug discovery and development and examine its potential to improve the efficiency and accuracy of the drug development process.

5. Comparison of deep learning and traditional machine learning methods for anomaly detection in time series data.

Introduction: Anomaly detection in time series data is a challenging task, which is important in various fields such as finance, healthcare, and manufacturing. Deep learning methods have been used to improve anomaly detection in time series data, while traditional machine learning methods have been widely used as well. This thesis aims to compare deep learning and traditional machine learning methods for anomaly detection in time series data and examine their respective strengths and weaknesses.
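As a point of reference for such a comparison, a very simple classical baseline can be sketched in a few lines. The example below is only an illustration under stated assumptions: the function name `rolling_zscore_anomalies`, the window size, the threshold, and the toy `signal` are all hypothetical choices, not part of the thesis idea itself. It flags a point when it deviates strongly from the mean of the preceding window.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(series, window=5, threshold=3.0):
    """Return indices whose value lies more than `threshold` standard
    deviations away from the mean of the preceding `window` values."""
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            # Perfectly flat history: flag any change at all.
            if series[i] != mu:
                anomalies.append(i)
            continue
        if abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Toy signal (hypothetical data) with one obvious spike at index 6.
signal = [10.0, 10.2, 9.9, 10.1, 10.0, 10.1, 25.0, 10.0, 9.8, 10.2]
print(rolling_zscore_anomalies(signal))
```

A deep learning method in the thesis would be expected to beat a baseline like this on harder cases, for example anomalies that are only visible in the shape of a longer subsequence rather than in a single value.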


6. Use of deep transfer learning in speech recognition and synthesis.

Introduction: Speech recognition and synthesis are areas of natural language processing that focus on converting spoken language to text and vice versa. Transfer learning has been widely used in deep learning-based speech recognition and synthesis systems to improve their performance by reusing the features learned from other tasks. This thesis aims to investigate the use of transfer learning in speech recognition and synthesis and how it improves the performance of the system in comparison to traditional methods.

7. The use of deep learning for financial prediction.

Introduction: Financial prediction is a challenging task that requires a high degree of intelligence and adaptability, especially in the field of stock market prediction. Deep learning has shown promising results in various financial prediction tasks, such as stock price prediction and credit risk analysis. This thesis aims to investigate the use of deep learning for financial prediction and examine its potential to improve the accuracy of financial forecasting.

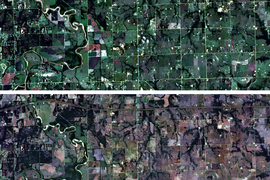

8. Investigating the use of deep learning for computer vision in agriculture.

Introduction: Computer vision has the potential to revolutionize the field of agriculture by improving crop monitoring, precision farming, and yield prediction. Deep learning has been used to improve various computer vision tasks, such as object detection, semantic segmentation, and image classification. This thesis aims to investigate the use of deep learning for computer vision in agriculture and examine its potential to improve the efficiency and accuracy of crop monitoring and precision farming.

9. Development and evaluation of deep learning models for generative design in engineering and architecture.

Introduction: Generative design is a powerful tool in engineering and architecture that can help optimize designs and reduce human error. Deep learning has been used to improve various generative design tasks, such as design optimization and form generation. This thesis aims to develop and evaluate deep learning models for generative design in engineering and architecture and examine their potential to improve the efficiency and accuracy of the design process.

10. Investigating the use of deep learning for natural language understanding.

Introduction: Natural language understanding is a complex task of natural language processing that involves extracting meaning from text. Deep learning has been used to improve various NLP tasks, such as machine translation, sentiment analysis, and question-answering. This thesis aims to investigate the use of deep learning for natural language understanding and examine its potential to improve the efficiency and accuracy of natural language understanding systems.


11. Comparing deep learning and traditional machine learning methods for image compression.

Introduction: Image compression is an important task in image processing and computer vision. It enables faster data transmission and storage of image files. Deep learning methods have been used to improve image compression, while traditional machine learning methods have been widely used as well. This thesis aims to compare deep learning and traditional machine learning methods for image compression and examine their respective strengths and weaknesses.

12. Using deep learning for sentiment analysis in social media.

Introduction: Sentiment analysis in social media is an important task that can help businesses and organizations understand their customers’ opinions and feedback. Deep learning has been used to improve sentiment analysis in social media, by training models on large datasets of social media text. This thesis aims to use deep learning for sentiment analysis in social media, and evaluate its performance against traditional machine learning methods.

13. Investigating the use of deep learning for image generation.

Introduction: Image generation is a task in computer vision that involves creating new images from scratch or modifying existing images. Deep learning has been used to improve various image generation tasks, such as super-resolution, style transfer, and face generation. This thesis aims to investigate the use of deep learning for image generation and examine its potential to improve the quality and diversity of generated images.

14. Development and evaluation of deep learning models for anomaly detection in cybersecurity.

Introduction: Anomaly detection in cybersecurity is an important task that can help detect and prevent cyber-attacks. Deep learning has been used to improve various anomaly detection tasks, such as intrusion detection and malware detection. This thesis aims to develop and evaluate deep learning models for anomaly detection in cybersecurity and examine their potential to improve the efficiency and accuracy of cybersecurity systems.

15. Investigating the use of deep learning for natural language summarization.

Introduction: Natural language summarization is an important task in natural language processing that involves creating a condensed version of a text that preserves its main meaning. Deep learning has been used to improve various natural language summarization tasks, such as document summarization and headline generation. This thesis aims to investigate the use of deep learning for natural language summarization and examine its potential to improve the efficiency and accuracy of natural language summarization systems.


16. Development and evaluation of deep learning models for facial expression recognition.

Introduction: Facial expression recognition is an important task in computer vision and has many practical applications, such as human-computer interaction, emotion recognition, and psychological studies. Deep learning has been used to improve facial expression recognition, by training models on large datasets of images. This thesis aims to develop and evaluate deep learning models for facial expression recognition and examine their performance against traditional machine learning methods.

17. Investigating the use of deep learning for generative models in music and audio.

Introduction: Music and audio synthesis is an important task in audio processing, which has many practical applications, such as music generation and speech synthesis. Deep learning has been used to improve generative models for music and audio, by training models on large datasets of audio data. This thesis aims to investigate the use of deep learning for generative models in music and audio and examine its potential to improve the quality and diversity of generated audio.

18. Comparing deep learning models with traditional algorithms for anomaly detection in network traffic.

Introduction: Anomaly detection in network traffic is an important task that can help detect and prevent cyber-attacks. Deep learning models have been used for this task, and traditional methods such as clustering and rule-based systems are widely used as well. This thesis aims to compare deep learning models with traditional algorithms for anomaly detection in network traffic and analyze the trade-offs between the models in terms of accuracy and scalability.

19. Investigating the use of deep learning for improving recommender systems.

Introduction: Recommender systems are widely used in many applications such as online shopping, music streaming, and movie streaming. Deep learning has been used to improve the performance of recommender systems, by training models on large datasets of user-item interactions. This thesis aims to investigate the use of deep learning for improving recommender systems and compare its performance with traditional content-based and collaborative filtering approaches.

20. Development and evaluation of deep learning models for multi-modal data analysis.

Introduction: Multi-modal data analysis is the task of analyzing and understanding data from multiple sources such as text, images, and audio. Deep learning has been used to improve multi-modal data analysis, by training models on large datasets of multi-modal data. This thesis aims to develop and evaluate deep learning models for multi-modal data analysis and analyze their potential to improve performance in comparison to single-modal models.

I hope that this article has provided you with a useful guide for your thesis research in machine learning and deep learning. Remember to conduct a thorough literature review and to include proper citations in your work, as well as to be original in your research to avoid plagiarism. I wish you all the best of luck with your thesis and your research endeavors!


Analytics Insight

Top 10 Research and Thesis Topics for ML Projects in 2022

This article features the top 10 research and thesis topics for ML projects for students to try in 2022

- Text mining and text classification

- Image-based applications

- Machine vision

- Optimization

- Voice classification

- Sentiment analysis

- Recommendation framework project

- Mall customers’ project

- Object detection with deep learning



50 Deep Learning Research Ideas

Deep learning Research and Project Ideas

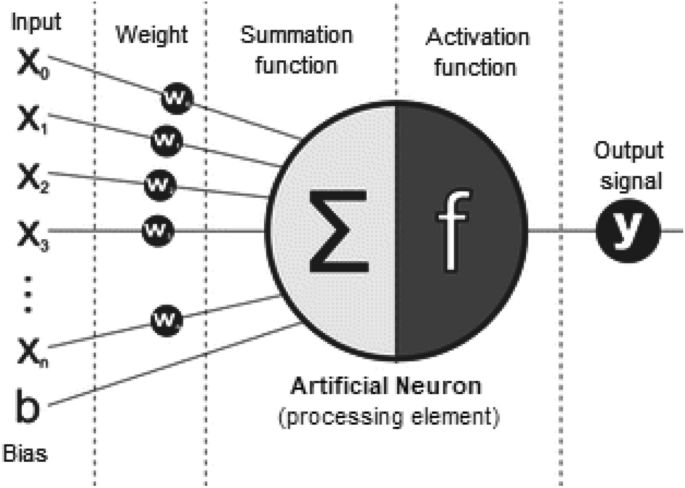

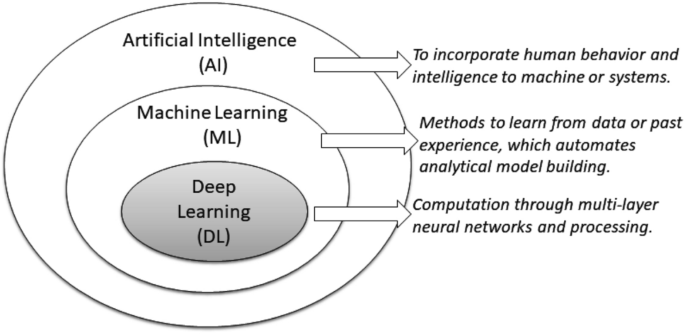

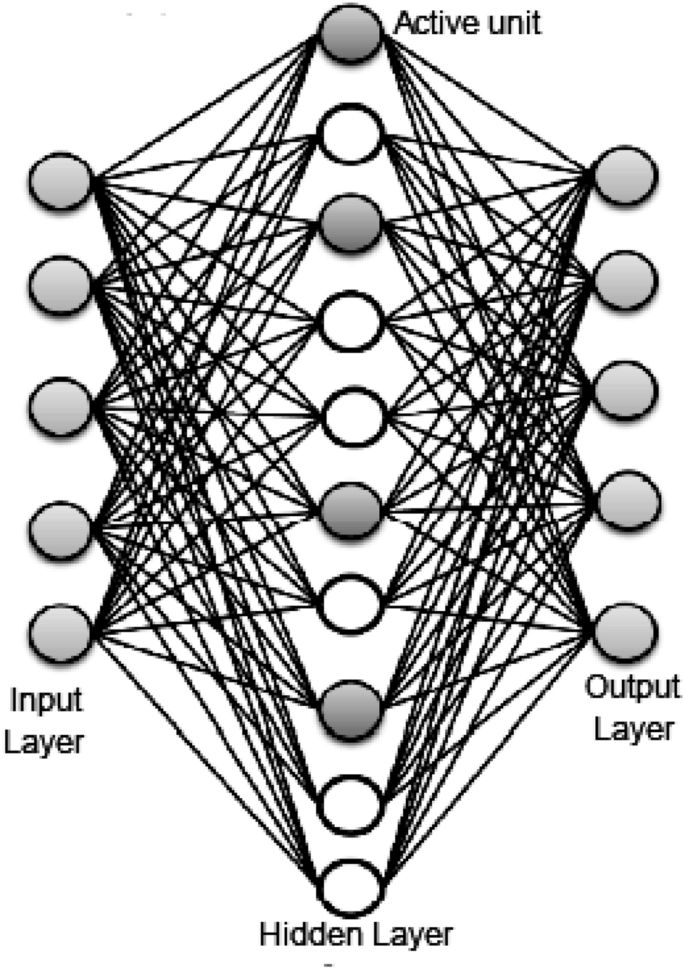

Deep learning is a branch of artificial intelligence that uses algorithms to model high-level abstractions in data by using multiple layers of processing. It is a subset of machine learning, which is a broader field of artificial intelligence that uses algorithms to learn from data.

Deep learning algorithms are used to recognize patterns in large datasets and make predictions based on those patterns. Deep learning research focuses on developing algorithms that can learn from data in an unsupervised manner, allowing them to learn complex representations of data without relying on explicit instructions from humans.

Deep learning research also focuses on developing methods for improving the accuracy and speed of deep learning algorithms. Additionally, deep learning research explores ways to make deep learning algorithms more efficient and effective in a variety of applications.

In this article, iLovePhD lists 50 interesting research and project ideas in deep learning.

Deep Learning Research Ideas

1. Developing a deep learning model to detect and classify objects in images.

2. Developing a deep learning model to detect and classify objects in videos.

3. Developing a deep learning model to detect and classify objects in 3D scenes.

4. Developing a deep learning model to detect and classify objects in audio.

5. Developing a deep learning model to detect and classify objects in text.

6. Developing a deep learning model to generate new images from a given set of images.

7. Developing a deep learning model to generate new videos from a given set of videos.

8. Developing a deep learning model to generate new 3D scenes from a given set of 3D scenes.

9. Developing a deep learning model to generate new audio from a given set of audio.

10. Developing a deep learning model to generate new text from a given set of text.

11. Developing a deep learning model to detect and classify emotions in images.

12. Developing a deep learning model to detect and classify emotions in videos.

13. Developing a deep learning model to detect and classify emotions in audio.

14. Developing a deep learning model to detect and classify emotions in text.

15. Developing a deep learning model to detect and classify objects in medical images.

16. Developing a deep learning model to detect and classify objects in medical videos.

17. Developing a deep learning model to detect and classify objects in medical audio.

18. Developing a deep learning model to detect and classify objects in medical text.

19. Developing a deep learning model to detect and classify objects in satellite images.

20. Developing a deep learning model to detect and classify objects in aerial videos.

21. Developing a deep learning model to detect and classify objects in aerial audio.

22. Developing a deep learning model to detect and classify objects in aerial text.

23. Developing a deep learning model to detect and classify objects in street view images.

24. Developing a deep learning model to detect and classify objects in street view videos.

25. Developing a deep learning model to detect and classify objects in street view audio.

26. Developing a deep learning model to detect and classify objects in street view text.

27. Developing a deep learning model to detect and classify objects in industrial images.

28. Developing a deep learning model to detect and classify objects in industrial videos.

29. Developing a deep learning model to detect and classify objects in industrial audio.

30. Developing a deep learning model to detect and classify objects in industrial text.

31. Developing a deep learning model to detect and classify objects in autonomous vehicle images.

32. Developing a deep learning model to detect and classify objects in autonomous vehicle videos.

33. Developing a deep learning model to detect and classify objects in autonomous vehicle audio.

34. Developing a deep learning model to detect and classify objects in autonomous vehicle text.

35. Developing a deep learning model to detect and classify objects in robotics images.

36. Developing a deep learning model to detect and classify objects in robotics videos.

37. Developing a deep learning model to detect and classify objects in robotics audio.

38. Developing a deep learning model to detect and classify objects in robotics text.

39. Developing a deep learning model to detect and classify objects in natural language processing.

40. Developing a deep learning model to detect and classify objects in computer vision.

41. Developing a deep learning model to detect and classify objects in speech recognition.

42. Developing a deep learning model to detect and classify objects in natural language understanding.

43. Developing a deep learning model to detect and classify objects in facial recognition.

44. Developing a deep learning model to detect and classify objects in gesture recognition.

45. Developing a deep learning model to detect and classify objects in sentiment analysis.

46. Developing a deep learning model to detect and classify objects in time series analysis.

47. Developing a deep learning model to detect and classify objects in anomaly detection.

48. Developing a deep learning model to detect and classify objects in recommender systems.

49. Developing a deep learning model to detect and classify objects in medical diagnosis.

50. Developing a deep learning model to detect and classify objects in fraud detection.

I hope this article helps you discover a range of deep learning research and project ideas.


Available Master's thesis topics in machine learning


Here we list topics that are available. You may also be interested in our list of completed Master's theses.

Learning and inference with large Bayesian networks

Most learning and inference tasks with Bayesian networks are NP-hard. Therefore, one often resorts to using different heuristics that do not give any quality guarantees.

Task: Evaluate quality of large-scale learning or inference algorithms empirically.

Advisor: Pekka Parviainen

Sum-product networks

Traditionally, probabilistic graphical models use a graph structure to represent dependencies and independencies between random variables. Sum-product networks are a relatively new type of a graphical model where the graphical structure models computations and not the relationships between variables. The benefit of this representation is that inference (computing conditional probabilities) can be done in linear time with respect to the size of the network.

Potential thesis topics in this area: a) Compare inference speed with sum-product networks and Bayesian networks. Characterize situations when one model is better than the other. b) Learning the sum-product networks is done using heuristic algorithms. What is the effect of approximation in practice?
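To make the linear-time claim concrete, here is a minimal sketch of a tree-shaped sum-product network over two binary variables. Everything in it is an illustrative assumption: the helper names (`leaf`, `product`, `weighted_sum`, `conditional`), the variable names `A` and `B`, and the weights are hypothetical and not taken from any particular library. Each query is one bottom-up pass in which every node is evaluated once, and an unobserved variable is marginalised out by letting its leaves return 1.

```python
def leaf(var, p_true):
    """Bernoulli leaf over one binary variable.

    Returns 1.0 when the variable is absent from the evidence,
    which marginalises it out.
    """
    def evaluate(evidence):
        if var not in evidence:
            return 1.0
        return p_true if evidence[var] else 1.0 - p_true
    return evaluate


def product(*children):
    """Product node: multiplies children defined over disjoint variables."""
    def evaluate(evidence):
        result = 1.0
        for child in children:
            result *= child(evidence)
        return result
    return evaluate


def weighted_sum(weighted_children):
    """Sum node: a mixture of children; the weights should sum to 1."""
    def evaluate(evidence):
        return sum(w * child(evidence) for w, child in weighted_children)
    return evaluate


# A tiny SPN: a mixture of two fully factorised distributions over A and B.
spn = weighted_sum([
    (0.6, product(leaf("A", 0.9), leaf("B", 0.2))),
    (0.4, product(leaf("A", 0.1), leaf("B", 0.7))),
])


def conditional(network, query, evidence):
    """P(query | evidence), computed with two bottom-up passes."""
    return network({**evidence, **query}) / network(evidence)


print(conditional(spn, {"A": True}, {"B": True}))
```

Because this toy network is a tree, the nested function calls visit each node exactly once per pass. A network that shares sub-networks between parents would need to cache node values to keep that guarantee.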

Bayesian Bayesian networks

The naming of Bayesian networks is somewhat misleading because there is nothing Bayesian in them per se; a Bayesian network is just a representation of a joint probability distribution. One can, of course, use a Bayesian network while doing Bayesian inference. One can also learn Bayesian networks in a Bayesian way. That is, instead of finding an optimal network, one computes the posterior distribution over networks.

Task: Develop algorithms for Bayesian learning of Bayesian networks (e.g., MCMC, variational inference, EM)

Large-scale (probabilistic) matrix factorization

The idea behind matrix factorization is to represent a large data matrix as a product of two or more smaller matrices. Such factorizations are often used in, for example, dimensionality reduction and recommendation systems. Probabilistic matrix factorization methods can be used to quantify uncertainty in recommendations. However, large-scale (probabilistic) matrix factorization is computationally challenging.
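The basic (non-probabilistic) idea can be sketched as follows, assuming a small ratings matrix with missing entries and plain stochastic gradient descent with L2 regularisation. The function names `factorize` and `rmse`, the toy `ratings` data, and all hyperparameter values are hypothetical choices made for illustration. A thesis in this area would be concerned with methods that scale far beyond what this sketch can handle.

```python
import random


def factorize(ratings, rank=2, epochs=2000, lr=0.01, reg=0.02, seed=0):
    """Approximate `ratings` (rows x cols, None = missing) as U @ V^T."""
    rng = random.Random(seed)
    n_rows, n_cols = len(ratings), len(ratings[0])
    U = [[rng.uniform(0.1, 0.5) for _ in range(rank)] for _ in range(n_rows)]
    V = [[rng.uniform(0.1, 0.5) for _ in range(rank)] for _ in range(n_cols)]
    observed = [(i, j, ratings[i][j])
                for i in range(n_rows) for j in range(n_cols)
                if ratings[i][j] is not None]
    for _ in range(epochs):
        for i, j, r in observed:
            err = r - sum(U[i][k] * V[j][k] for k in range(rank))
            for k in range(rank):
                u, v = U[i][k], V[j][k]
                # Gradient step on the squared error plus an L2 penalty.
                U[i][k] += lr * (err * v - reg * u)
                V[j][k] += lr * (err * u - reg * v)
    return U, V


def rmse(ratings, U, V):
    """Root-mean-square error over the observed entries only."""
    errors = [(r - sum(a * b for a, b in zip(U[i], V[j]))) ** 2
              for i, row in enumerate(ratings)
              for j, r in enumerate(row) if r is not None]
    return (sum(errors) / len(errors)) ** 0.5


# Toy 4 x 3 ratings matrix (hypothetical data); None marks unknown ratings.
ratings = [
    [5, 3, None],
    [4, None, 1],
    [1, 1, 5],
    [None, 1, 4],
]
U, V = factorize(ratings)
print(round(rmse(ratings, U, V), 3))
```

The product of row `i` of `U` and row `j` of `V` gives a prediction for any entry, including the missing ones, which is what a recommender would use.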

Potential thesis topics in this area: a) Develop scalable methods for large-scale matrix factorization (non-probabilistic or probabilistic). b) Develop probabilistic methods for implicit feedback (e.g., a recommendation engine when there are no rankings but only knowledge of whether a customer has bought an item).

Bayesian deep learning

Standard deep neural networks do not quantify uncertainty in predictions. On the other hand, Bayesian methods provide a principled way to handle uncertainty. Combining these approaches leads to Bayesian neural networks. The challenge is that Bayesian neural networks can be cumbersome to use and difficult to learn.

The task is to analyze Bayesian neural networks and different inference algorithms in some simple setting.

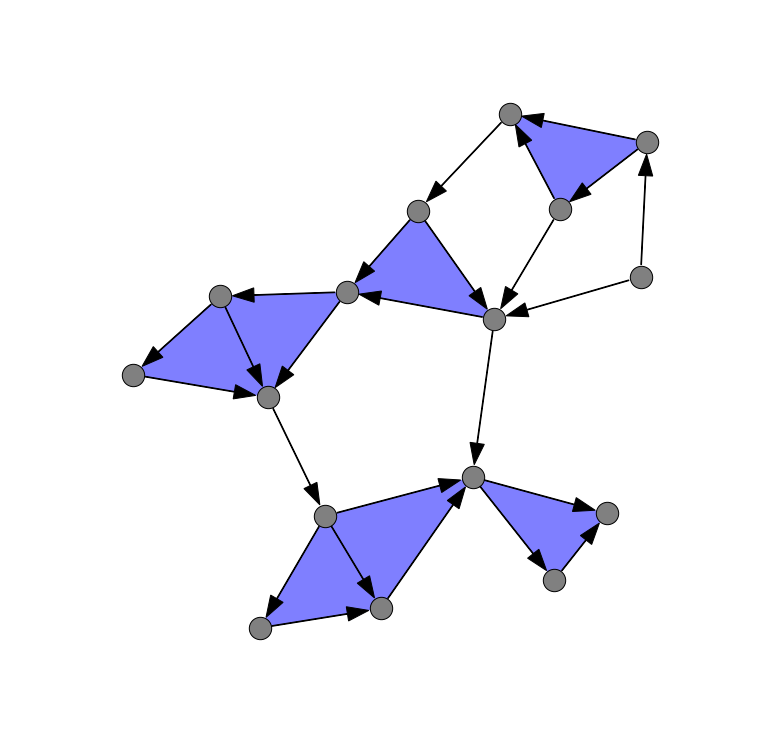

Deep learning for combinatorial problems

Deep learning is usually applied in regression or classification problems. However, there has been some recent work on using deep learning to develop heuristics for combinatorial optimization problems; see, e.g., [1] and [2].

Task: Choose a combinatorial problem (or several related problems) and develop deep learning methods to solve them.

References: [1] Vinyals, Fortunato and Jaitly: Pointer networks. NIPS 2015. [2] Dai, Khalil, Zhang, Dilkina and Song: Learning Combinatorial Optimization Algorithms over Graphs. NIPS 2017.

Advisors: Pekka Parviainen, Ahmad Hemmati

Estimating the number of modes of an unknown function

Mode seeking considers estimating the number of local maxima of a function f. Sometimes one can find modes by, e.g., looking for points where the derivative of the function is zero. However, often the function is unknown and we have only access to some (possibly noisy) values of the function.

In topological data analysis, we can analyze topological structures using persistent homologies. For 1-dimensional signals, this can translate into looking at the birth/death persistence diagram, i.e. the birth and death of connected topological components as we expand the space around each point where we have observed our function. These observations turn out to be closely related to the modes (local maxima) of the function. A recent paper [1] proposed an efficient method for mode seeking.
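For a sampled 1-dimensional signal, the birth/death idea can be sketched with a union-find pass over the samples in decreasing order of value. This is a generic illustration of 0-dimensional persistence, not the method proposed in [1]; the function name `count_modes`, the threshold parameter `min_persistence`, and the toy `signal` are hypothetical. A component is born at a local maximum and dies when it merges with a component that has a higher peak; its persistence is the difference between its peak and the value at which it merges.

```python
def count_modes(values, min_persistence):
    """Count local maxima whose persistence exceeds `min_persistence`."""
    if not values:
        return 0
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    parent = {}   # union-find forest over the samples processed so far
    peak = {}     # component root -> height of the component's highest sample
    persistences = []

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in order:
        parent[i] = i
        peak[i] = values[i]
        for j in (i - 1, i + 1):
            if j not in parent:
                continue
            ri, rj = find(i), find(j)
            if ri == rj:
                continue
            # Elder rule: the component with the lower peak dies here.
            young, old = (ri, rj) if peak[ri] < peak[rj] else (rj, ri)
            persistences.append(peak[young] - values[i])
            parent[young] = old

    # The component of the global maximum never dies, hence the +1.
    return 1 + sum(1 for p in persistences if p > min_persistence)


# Toy signal (hypothetical data): four local maxima, one of them a shallow bump.
signal = [0, 3, 1, 4, 3.8, 4.1, 0, 2, 0]
print(count_modes(signal, min_persistence=0.5))
```

Raising `min_persistence` discards shallow bumps that are likely to be noise, which is the deterministic counterpart of the probabilistic estimate the project asks for.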

In this project, the task is to extend the ideas from [1] to get a probabilistic estimate on the number of modes. To this end, one has to use probabilistic methods such as Gaussian processes.

[1] U. Bauer, A. Munk, H. Sieling, and M. Wardetzky. Persistence barcodes versus Kolmogorov signatures: Detecting modes of one-dimensional signals. Foundations of Computational Mathematics 17:1-33, 2017.

Advisors: Pekka Parviainen, Nello Blaser

Causal Abstraction Learning

We naturally make sense of the world around us by working out causal relationships between objects and by representing in our minds these objects with different degrees of approximation and detail. Both processes are essential to our understanding of reality, and likely to be fundamental for developing artificial intelligence. The first process may be expressed using the formalism of structural causal models, while the second can be grounded in the theory of causal abstraction.

This project will consider the problem of learning an abstraction between two given structural causal models. The primary goal will be the development of efficient algorithms able to learn a meaningful abstraction between the given causal models.

Advisor: Fabio Massimo Zennaro

Causal Bandits

"Multi-armed bandit" is an informal name for slot machines, and the formal name of a large class of problems where an agent has to choose an action among a range of possibilities without knowing the ensuing rewards. Multi-armed bandit problems are one of the most essential reinforcement learning problems where an agent is directly faced with an exploitation-exploration trade-off.
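The exploitation-exploration trade-off can be illustrated with an epsilon-greedy agent on Bernoulli arms. This is a minimal sketch of the standard (non-causal) setting, assuming hypothetical reward probabilities; the function name `epsilon_greedy` and all parameter values are illustrative. With probability `epsilon` the agent explores a random arm, and otherwise it exploits the arm with the highest estimated reward so far.

```python
import random


def epsilon_greedy(arm_probs, steps=5000, epsilon=0.1, seed=0):
    """Run an epsilon-greedy agent on Bernoulli arms.

    Returns the estimated mean reward per arm, the pull counts,
    and the total reward collected.
    """
    rng = random.Random(seed)
    n_arms = len(arm_probs)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    total_reward = 0
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                           # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])  # exploit
        reward = 1 if rng.random() < arm_probs[arm] else 0
        counts[arm] += 1
        # Incremental update of the running mean for the pulled arm.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
        total_reward += reward
    return estimates, counts, total_reward


# Hypothetical arms; the agent does not know these probabilities.
estimates, counts, total_reward = epsilon_greedy([0.2, 0.5, 0.8])
print(counts)
```

The agent only observes rewards and has no model of how actions produce them, so it treats every arm as independent.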

This project will consider a class of multi-armed bandits where an agent, upon taking an action, interacts with a causal system. The primary goal will be the development of learning strategies that take advantage of the underlying causal system in order to learn optimal policies in the shortest amount of time.

Causal Modelling for Battery Manufacturing

Lithium-ion batteries are poised to be one of the most important sources of energy in the near future. Yet, the process of manufacturing these batteries is very hard to model and control. Optimizing the different phases of production to maximize the lifetime of the batteries is a non-trivial challenge since physical models are limited in scope and collecting experimental data is extremely expensive and time-consuming.

This project will consider the problem of aggregating and analyzing data regarding a few stages in the process of battery manufacturing. The primary goal will be the development of algorithms for transporting and integrating data collected in different contexts, as well as the use of explainable algorithms to interpret them.

Reinforcement Learning for Computer Security

The field of computer security presents a wide variety of challenging problems for artificial intelligence and autonomous agents. Guaranteeing the security of a system against attacks and penetrations by malicious hackers has always been a central concern of this field, and machine learning could now offer a substantial contribution. Security capture-the-flag simulations are particularly well-suited as a testbed for the application and development of reinforcement learning algorithms.

This project will consider the use of reinforcement learning for the preventive purpose of testing systems and discovering vulnerabilities before they can be exploited. The primary goal will be the modelling of capture-the-flag challenges of interest and the development of reinforcement learning algorithms that can solve them.

Approaches to AI Safety

The world and the Internet are more and more populated by artificial autonomous agents carrying out tasks on our behalf. Many of these agents are provided with an objective and they learn their behaviour trying to achieve their objective as best as they can. However, this approach cannot guarantee that an agent, while learning its behaviour, will not undertake actions that may have unforeseen and undesirable effects. Research in AI safety tries to design autonomous agents that will behave in a predictable and safe way.

This project will consider specific problems and novel solutions in the domain of AI safety and reinforcement learning. The primary goal will be the development of innovative algorithms and their implementation within established frameworks.

Reinforcement Learning for Super-modelling

Super-modelling [1] is a technique for combining complex dynamical models: pre-trained models are aggregated, with messages and information exchanged in order to synchronize the behavior of the different models and produce more accurate and reliable predictions. Super-models are used, for instance, in weather or climate science, where pre-existing models are ensembled together and their states dynamically aggregated to generate more realistic simulations.

This project will consider how reinforcement learning algorithms may be used to solve the coordination problem among the individual models forming a super-model. The primary goal will be the formulation of the super-modelling problem within the reinforcement learning framework and the study of custom RL algorithms to improve the overall performance of super-models.

[1] Schevenhoven, Francine, et al. "Supermodeling: improving predictions with an ensemble of interacting models." Bulletin of the American Meteorological Society 104.9 (2023): E1670-E1686.

Advisor: Fabio Massimo Zennaro, Francine Janneke Schevenhoven

The Topology of Flight Paths

Air traffic data tells us the position, direction, and speed of an aircraft at a given time. In other words, if we restrict our focus to a single aircraft, we are looking at a multivariate time series. Geometrically, we can visualize the flight path as a curve above the Earth's surface. Topological data analysis (TDA) provides different methods for analysing the shape of data. Consequently, TDA may help us to extract meaningful features from the air traffic data. Although the typical flight path shapes may not be particularly intriguing, we can attempt to identify more intriguing patterns or “abnormal” manoeuvres, such as aborted landings, go-arounds, or diversions.

Advisor: Odin Hoff Gardå, Nello Blaser

Automatic hyperparameter selection for isomap

Isomap is a non-linear dimensionality reduction method with two free hyperparameters (number of nearest neighbors and neighborhood radius). Different hyperparameters result in dramatically different embeddings. Previous methods for selecting hyperparameters focused on choosing one optimal hyperparameter. In this project, you will explore the use of persistent homology to find parameter ranges that result in stable embeddings. The project has theoretic and computational aspects.

Advisor: Nello Blaser

Validate persistent homology

Persistent homology is a generalization of hierarchical clustering to find more structure than just the clusters. Traditionally, hierarchical clustering has been evaluated using resampling methods and assessing stability properties. In this project you will generalize these resampling methods to develop novel stability properties that can be used to assess persistent homology. This project has theoretic and computational aspects.
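The link to hierarchical clustering is easiest to see in dimension zero, where the death times of connected components are the merge heights of single-linkage clustering. The following is a minimal sketch, assuming Euclidean points and the convention that a component dies at the length of the edge that merges it. The function name `h0_persistence` is hypothetical.

```python
import math
from itertools import combinations


def h0_persistence(points):
    """Return the sorted death times of 0-dimensional classes (all born at 0).

    These equal the merge heights of single-linkage clustering.
    The one component that never dies is not reported.
    """
    n = len(points)
    parent = list(range(n))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    # All pairwise edges, sorted by length (the order of the filtration).
    edges = sorted(
        (math.dist(points[i], points[j]), i, j)
        for i, j in combinations(range(n), 2)
    )
    deaths = []
    for length, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj  # two components merge, so one class dies here
            deaths.append(length)
    return deaths


# Two tight pairs far apart: one long-lived class stands out.
deaths = h0_persistence([(0.0, 0.0), (0.0, 1.0), (10.0, 0.0), (10.0, 1.0)])
```

A resampling study could, for example, recompute these death times on bootstrap samples and compare them, although which stability notion is appropriate is exactly what the project is meant to work out.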

Topological Anscombe's quartet

This topic is based on the classical Anscombe's quartet and families of point sets with identical 1D persistence ( https://arxiv.org/abs/2202.00577 ). The goal is to generate more interesting datasets using the simulated annealing methods presented in ( http://library.usc.edu.ph/ACM/CHI%202017/1proc/p1290.pdf ). This project is mostly computational.

Persistent homology vectorization with cycle location

There are many methods of vectorizing persistence diagrams, such as persistence landscapes, persistence images, PersLay and statistical summaries. Recently we have designed algorithms that, in some cases, efficiently detect the location of persistence cycles. In this project, you will vectorize not just the persistence diagram, but additional information such as the location of these cycles. This project is mostly computational with some theoretic aspects.

Divisive covers

Divisive covers are a divisive technique for generating filtered simplicial complexes. They originally used a naive way of dividing data into a cover. In this project, you will explore different methods of dividing space, based on principal component analysis, support vector machines and k-means clustering. In addition, you will explore methods of using divisive covers for classification. This project will be mostly computational.

Learning Acquisition Functions for Cost-aware Bayesian Optimization

This is a follow-up project of an earlier Master thesis that developed a novel method for learning Acquisition Functions in Bayesian Optimization through the use of Reinforcement Learning. The goal of this project is to further generalize this method (more general input, learned cost-functions) and apply it to hyperparameter optimization for neural networks.

Advisors: Nello Blaser, Audun Ljone Henriksen

Stable updates

This is a follow-up project of an earlier Master thesis that introduced and studied empirical stability in the context of tree-based models. The goal of this project is to develop stable update methods for deep learning models. You will design several stable methods and empirically compare them (in terms of loss and stability) with a baseline and with one another.

Advisors: Morten Blørstad, Nello Blaser

Multimodality in Bayesian neural network ensembles

One method to assess uncertainty in neural network predictions is to use dropout or noise generators at prediction time and run every prediction many times. This leads to a distribution of predictions. Informatively summarizing such probability distributions is a non-trivial task and the commonly used means and standard deviations result in the loss of crucial information, especially in the case of multimodal distributions with distinct likely outcomes. In this project, you will analyze such multimodal distributions with mixture models and develop ways to exploit such multimodality to improve training. This project can have theoretical, computational and applied aspects.
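The loss of information from a mean and standard deviation can be illustrated with a small sketch. It assumes the repeated stochastic predictions for one input are already collected as a list of numbers (simulated here with a seeded generator) and fits a 1D Gaussian mixture with a plain EM loop. The function name and the choice of two components are illustrative assumptions.

```python
import math
import random
import statistics


def fit_gmm_1d(xs, k=2, iters=50):
    """Fit a 1D Gaussian mixture with EM; returns (weights, means, stds)."""
    xs = sorted(xs)
    n = len(xs)
    # Initialise the means at evenly spaced quantiles of the data.
    means = [xs[int((j + 0.5) * n / k)] for j in range(k)]
    stds = [statistics.pstdev(xs) or 1.0] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: responsibility of each component for each sample.
        resp = []
        for x in xs:
            dens = [
                w * math.exp(-0.5 * ((x - m) / s) ** 2) / (s * math.sqrt(2 * math.pi))
                for w, m, s in zip(weights, means, stds)
            ]
            total = sum(dens) or 1e-300
            resp.append([d / total for d in dens])
        # M-step: re-estimate weights, means and standard deviations.
        for j in range(k):
            nj = sum(r[j] for r in resp) or 1e-12
            weights[j] = nj / n
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            stds[j] = max(math.sqrt(var), 1e-3)
    return weights, means, stds


# Simulated predictions with two distinct likely outcomes (an assumption).
rng = random.Random(0)
preds = [rng.gauss(-2.0, 0.3) for _ in range(200)] + [rng.gauss(3.0, 0.3) for _ in range(300)]

overall_mean = statistics.fmean(preds)  # lies between the modes, where few predictions fall
weights, means, stds = fit_gmm_1d(preds)
```

Here the overall mean sits near 1, a value almost no individual prediction takes, while the fitted component means recover the two modes.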

Learning a hierarchical metric

Often, labels have defined relationships to each other, for instance in a hierarchical taxonomy. E.g. ImageNet labels are derived from the WordNet graph, and biological species are taxonomically related, and can have similarities depending on life stage, sex, or other properties.

ArcFace is an alternative loss function that aims for an embedding that is more generally useful than softmax. It is commonly used in metric learning/few shot learning cases.

Here, we will develop a metric learning method that learns from data with hierarchical labels. Using multiple ArcFace heads, we will simultaneously learn to place representations to optimize the leaf label as well as intermediate labels on the path from leaf to root of the label tree. Using taxonomically classified plankton image data, we will measure performance as a function of ArcFace parameters (sharpness/temperature and margins -- class-wise or level-wise), and compare the results to existing methods.
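As a rough illustration of the idea, the sketch below computes ArcFace logits (scale times the cosine of the angle, with an additive angular margin on the target class) and sums the resulting cross-entropy losses over one head per taxonomy level. Combining the levels by a weighted sum is an assumption made here for illustration, not the project's stated method. The helper names, the clamping of the angle to pi and the toy vectors are likewise hypothetical.

```python
import math


def _unit(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def arcface_logits(embedding, class_weights, target, scale=30.0, margin=0.5):
    """scale * cos(theta + margin) for the target class, scale * cos(theta) otherwise."""
    x = _unit(embedding)
    logits = []
    for j, w in enumerate(class_weights):
        cos = sum(a * b for a, b in zip(x, _unit(w)))
        theta = math.acos(max(-1.0, min(1.0, cos)))  # guard acos against rounding
        if j == target:
            theta = min(theta + margin, math.pi)  # simplification: clamp the angle at pi
        logits.append(scale * math.cos(theta))
    return logits


def cross_entropy(logits, target):
    """Numerically stable softmax cross-entropy."""
    top = max(logits)
    return top + math.log(sum(math.exp(z - top) for z in logits)) - logits[target]


def hierarchical_loss(embedding, heads, path, level_weights=None, **arcface_kwargs):
    """Weighted sum of ArcFace losses, one head per level of the label tree.

    heads[l] holds the class weight vectors at level l, path[l] the label at level l.
    """
    level_weights = level_weights or [1.0] * len(heads)
    total = 0.0
    for head, label, lw in zip(heads, path, level_weights):
        logits = arcface_logits(embedding, head, label, **arcface_kwargs)
        total += lw * cross_entropy(logits, label)
    return total


# Toy two-level taxonomy: 2 coarse classes, 3 leaf classes.
coarse_head = [[1.0, 0.0], [0.0, 1.0]]
leaf_head = [[1.0, 0.1], [1.0, -0.1], [0.0, 1.0]]
loss = hierarchical_loss([0.9, 0.2], [coarse_head, leaf_head], path=[0, 0])
```

The `scale` and `margin` arguments correspond to the sharpness and margin parameters mentioned above. Level-wise margins could be passed by calling `arcface_logits` per head with different values.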

Advisor: Ketil Malde

Self-supervised object detection in video

One challenge with learning object detection is that in many scenes that stretch off into the distance, annotating small, far-off, or blurred objects is difficult. It is therefore desirable to learn from incompletely annotated scenes, and one-shot object detectors may suffer from incompletely annotated training data.

To address this, we will use a region-proposal algorithm (e.g. SelectiveSearch) to extract potential crops from each frame. Classification will be based on two approaches: a) training based on annotated fish vs random similarly-sized crops without annotations, and b) using a self-supervised method to build a representation for crops, and building a classifier for the extracted regions. The method will be evaluated against one-shot detectors and other training regimes.

If successful, the method will be applied to fish detection and tracking in videos from baited and unbaited underwater traps, and used to estimate abundance of various fish species.

See also: Benettino (2016): https://link.springer.com/chapter/10.1007/978-3-319-48881-3_56

Representation learning for object detection

While traditional classifiers work well with data that is labeled with disjoint classes and reasonably balanced class abundances, reality is often less clean. An alternative is to learn a vector space embedding that reflects semantic relationships between objects, and to derive classes from this representation. This is especially useful for few-shot classification (i.e. very few examples in the training data).

The task here is to extend a modern object detector (e.g. Yolo v8) to output an embedding of the identified object. Instead of using a softmax classifier, we can learn the embedding in a supervised manner (using annotations on frames) by attaching an ArcFace or other supervised metric learning head. Alternatively, the representation can be learned from tracked detections over time using e.g. a contrastive loss function to keep the representation for an object (approximately) constant over time. The performance of the resulting object detector will be measured on underwater videos, targeting species detection and/or individual recognition (re-ID).

Time-domain object detection

Object detectors for video are normally trained on still frames, but it is evident (from human experience) that using time domain information is more effective. I.e., it can be hard to identify far-off or occluded objects in still images, but movement in time often reveals them.

Here we will extend a state-of-the-art object detector (e.g. Yolo v8) with time domain data. Instead of using a single frame as input, the model will be modified to take a set of frames surrounding the annotated frame as input. Performance will be compared to using single-frame detection.

Large-scale visualization of acoustic data

The Institute of Marine Research has decades of acoustic data collected in various surveys. These data are in the process of being converted to data formats that can be processed and analyzed more easily using packages like Xarray and Dask.

The objective is to make these data more accessible to regular users by providing a visual front end. The user should be able to quickly zoom in and out, perform selection, export subsets, apply various filters and classifiers, and overlay annotations and other relevant auxiliary data.

Learning acoustic target classification from simulation

Broadband echosounders emit a complex signal that spans a large frequency band. Different targets will reflect, absorb, and generate resonance at different amplitudes and frequencies, and it is therefore possible to classify targets at much higher resolution and accuracy than before. Due to the complexity of the received signals, deriving effective profiles that can be used to identify targets is difficult.

Here we will use simulated frequency spectra from geometric objects with various shapes, orientations, and other properties. We will train ML models to estimate (recover) the geometric and material properties of objects based on these spectra. The resulting model will be applied to real broadband data, and compared to traditional classification methods.

Online learning in real-time systems

Build a model for the drilling process by using the Virtual simulator OpenLab ( https://openlab.app/ ) for real-time data generation and online learning techniques. The student will also do a short survey of existing online learning techniques and learn how to cope with errors and delays in the data.

Advisor: Rodica Mihai

Building a finite state automaton for the drilling process by using queries and counterexamples

Datasets will be generated by using the Virtual simulator OpenLab ( https://openlab.app/ ). The student will study the datasets and decide upon a good setting to extract a finite state automaton for the drilling process. The student will also do a short survey of existing techniques for extracting finite state automata from process data.

Related work (abstract, from arxiv.org): "We present a novel algorithm that uses exact learning and abstraction to extract a deterministic finite automaton describing the state dynamics of a given trained RNN. We do this using Angluin's L* algorithm as a learner and the trained RNN as an oracle. Our technique efficiently extracts accurate automata from trained RNNs, even when the state vectors are large and require fine differentiation."

Scaling Laws for Language Models in Generative AI

Large Language Models (LLMs) power today's most prominent language technologies in Generative AI like ChatGPT, which, in turn, are changing the way that people access information and solve tasks of many kinds.

Recent work on scaling laws for LLMs has revealed trends in how well they perform as a function of factors such as how much training data is used, how powerful the models are, or how much computational cost is allocated. (See, for example, Kaplan et al., "Scaling Laws for Neural Language Models", 2020.)

In this project, the task is to study scaling laws for different language models with respect to one or multiple modeling factors.
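A common starting point for such a study is to fit a power law to (factor, loss) pairs by linear regression in log-log space. The sketch below assumes the simple form loss = a * size^(-b) with no irreducible-loss term, and the data points are synthetic, generated only to show the mechanics.

```python
import math


def fit_power_law(sizes, losses):
    """Least-squares fit of loss = a * size**(-b) in log-log space; returns (a, b)."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(v) for v in losses]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum(
        (x - mx) ** 2 for x in xs
    )
    intercept = my - slope * mx
    return math.exp(intercept), -slope


# Synthetic measurements (not real results): loss = 5 * N^(-0.08).
sizes = [1e6, 1e7, 1e8, 1e9]
losses = [5.0 * n ** -0.08 for n in sizes]
a, b = fit_power_law(sizes, losses)

# Extrapolate the fitted law to a larger model size.
predicted = a * 1e10 ** -b
```

With real measurements the points will not lie exactly on a line, and a constant offset for irreducible loss may be needed, which calls for a nonlinear fit instead.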

Advisor: Dario Garigliotti

Applications of causal inference methods to omics data

Many hard problems in machine learning are directly linked to causality [1]. The graphical causal inference framework developed by Judea Pearl can be traced back to pioneering work by Sewall Wright on path analysis in genetics and has inspired research in artificial intelligence (AI) [1].

The Michoel group has developed the open-source tool Findr [2] which provides efficient implementations of mediation and instrumental variable methods for applications to large sets of omics data (genomics, transcriptomics, etc.). Findr works well on a recent data set for yeast [3].

We encourage students to explore promising connections between the fields of causal inference and machine learning. Feel free to contact us to discuss projects related to causal inference. Possible topics include: a) improving methods based on structural causal models, b) evaluating causal inference methods on data for model organisms, c) comparing methods based on causal models and neural network approaches.

References:

1. Schölkopf B, Causality for Machine Learning, arXiv (2019): https://arxiv.org/abs/1911.10500

2. Wang L and Michoel T. Efficient and accurate causal inference with hidden confounders from genome-transcriptome variation data. PLoS Computational Biology 13:e1005703 (2017). https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1005703

3. Ludl A and Michoel T. Comparison between instrumental variable and mediation-based methods for reconstructing causal gene networks in yeast. arXiv:2010.07417 https://arxiv.org/abs/2010.07417

Advisors: Adriaan Ludl, Tom Michoel

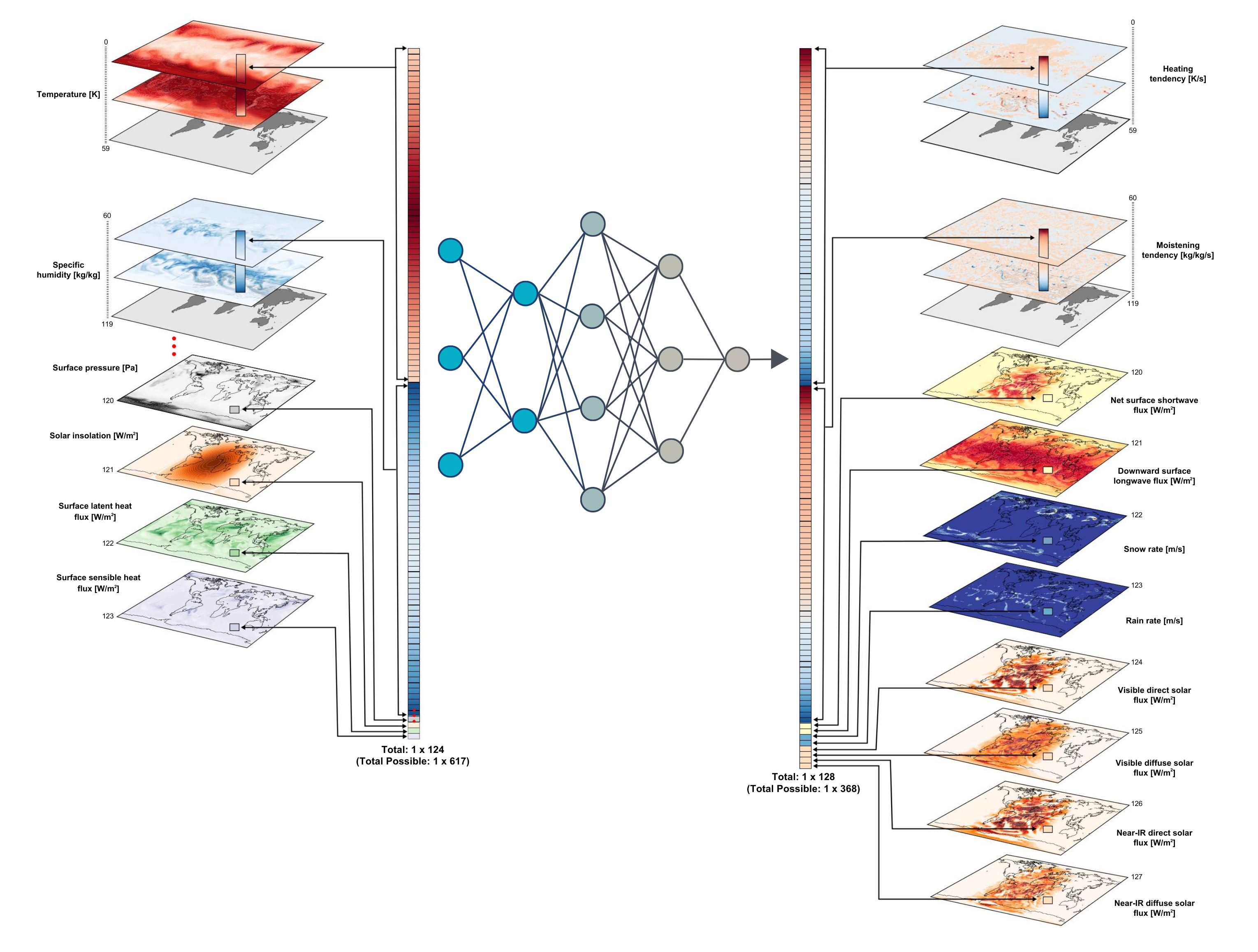

Space-Time Linkage of Fish Distribution to Environmental Conditions

Conditions in the marine environment, such as temperature and currents, influence the spatial distribution and migration patterns of marine species. Hence, understanding the link between environmental factors and fish behavior is crucial in predicting, e.g., how fish populations may respond to climate change. Deriving this link is challenging because it requires analysis of two types of datasets: (i) large environmental (currents, temperature) datasets that vary in space and time, and (ii) sparse and sporadic spatial observations of fish populations.

Project goal

The primary goal of the project is to develop a methodology that helps predict how the spatial distribution of two fish stocks (capelin and mackerel) changes in response to variability in the physical marine environment (ocean currents and temperature). The information can also be used to optimize data collection by minimizing time spent in spatial sampling of the populations.

The project will focus on the use of machine learning and/or causal inference algorithms. As a first step, we use synthetic (fish and environmental) data from analytic models that couple the two data sources. Because the ‘truth’ is known, we can judge the efficiency and error margins of the methodologies. We then apply the methodologies to real world (empirical) observations.

Advisors: Tom Michoel, Sam Subbey.

Towards precision medicine for cancer patient stratification

On average, a drug or a treatment is effective in only about half of patients who take it. This means patients need to try several until they find one that is effective, at the cost of the side effects associated with every treatment. The ultimate goal of precision medicine is to provide a treatment best suited for every individual. Sequencing technologies have now made genomics data available in abundance to be used towards this goal.

In this project we will specifically focus on cancer. Most cancer patients get a particular treatment based on the cancer type and the stage, though different individuals will react differently to a treatment. It is now well established that genetic mutations cause cancer growth and spreading and, importantly, these mutations are different in individual patients. The aim of this project is to use genomic data to allow better stratification of cancer patients, in order to predict the treatment most likely to work. Specifically, the project will use a machine learning approach to integrate genomic data and build a classifier for stratification of cancer patients.

Advisor: Anagha Joshi

Unraveling gene regulation from single cell data

Multi-cellularity is achieved by precise control of gene expression during development and differentiation, and aberrations of this process lead to disease. A key regulatory process in gene regulation is at the transcriptional level, where epigenetic and transcriptional regulators control the spatial and temporal expression of the target genes in response to environmental, developmental, and physiological cues obtained from a signalling cascade. The rapid advances in sequencing technology have now made it feasible to study this process by understanding the genome-wide patterns of diverse epigenetic and transcription factors, also at a single cell level.

Single cell RNA sequencing is highly important, particularly in cancer, as it allows exploration of heterogeneous tumor samples; this heterogeneity obstructs therapeutic targeting, which leads to poor survival. Despite huge clinical relevance and potential, analysis of single cell RNA-seq data is challenging. In this project, we will develop strategies to infer gene regulatory networks using network inference approaches (both supervised and unsupervised). It will be primarily tested on the single cell datasets in the context of cancer.

Developing a Stress Granule Classifier

To carry out the multitude of functions 'expected' from a human cell, the cell employs a strategy of division of labour, whereby sub-cellular organelles carry out distinct functions. Thus we traditionally understand organelles as distinct units defined both functionally and physically with a distinct shape and size range. More recently a new class of organelles have been discovered that are assembled and dissolved on demand and are composed of liquid droplets or 'granules'. Granules show many properties characteristic of liquids, such as flow and wetting, but they can also assume many shapes and indeed also fluctuate in shape. One such liquid organelle is a stress granule (SG).

Stress granules are pro-survival organelles that assemble in response to cellular stress and are important in cancer and neurodegenerative diseases like Alzheimer's. They are liquid or gel-like and can assume varying sizes and shapes depending on their cellular composition.

In a given experiment we are able to image the entire cell over a time series of 1000 frames; from which we extract a rough estimation of the size and shape of each granule. Our current method is susceptible to noise and a granule may be falsely rejected if the boundary is drawn poorly in a small majority of frames. Ideally, we would also like to identify potentially interesting features, such as voids, in the accepted granules.

We are interested in applying a machine learning approach to develop a descriptor for a 'classic' granule and furthermore classify them into different functional groups based on disease status of the cell. This method would be applied across thousands of granules imaged from control and disease cells. We are a multi-disciplinary group consisting of biologists, computational scientists and physicists.

Advisors: Sushma Grellscheid, Carl Jones

Machine Learning based Hyperheuristic algorithm

Develop a Machine Learning based Hyper-heuristic algorithm to solve a pickup and delivery problem. A hyper-heuristic is a heuristic that chooses heuristics automatically. A hyper-heuristic seeks to automate the process of selecting, combining, generating or adapting several simpler heuristics to efficiently solve computational search problems [Handbook of Metaheuristics]. There might be multiple heuristics for solving a problem, and each heuristic has its own strengths and weaknesses. In this project, we want to use machine-learning techniques to learn the strengths and weaknesses of each heuristic while we are using them in an iterative search for finding high quality solutions, and then use them intelligently for the rest of the search. Once new information is gathered during the search, the hyper-heuristic algorithm automatically adjusts the heuristics.
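One simple way to learn which heuristic works well during the search is to treat heuristic selection as a bandit problem. The sketch below uses epsilon-greedy selection with a running average of the improvement each heuristic delivers. The toy cost (number of inversions in a permutation) is a stand-in for a pickup and delivery objective, and the three move operators are illustrative assumptions.

```python
import random


def inversions(perm):
    """Toy cost: number of out-of-order pairs (0 for a sorted list)."""
    n = len(perm)
    return sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])


def swap_adjacent(perm, rng):
    i = rng.randrange(len(perm) - 1)
    out = perm[:]
    out[i], out[i + 1] = out[i + 1], out[i]
    return out


def swap_random(perm, rng):
    i, j = rng.sample(range(len(perm)), 2)
    out = perm[:]
    out[i], out[j] = out[j], out[i]
    return out


def reverse_segment(perm, rng):
    i, j = sorted(rng.sample(range(len(perm)), 2))
    return perm[:i] + perm[i:j + 1][::-1] + perm[j + 1:]


def hyper_heuristic(start, heuristics, iters=2000, epsilon=0.2, seed=0):
    """Epsilon-greedy selection among low-level heuristics.

    Returns (best solution found, its cost, learned value per heuristic).
    """
    rng = random.Random(seed)
    current, cost = start[:], inversions(start)
    value = [0.0] * len(heuristics)  # running average reward per heuristic
    uses = [0] * len(heuristics)
    for _ in range(iters):
        if rng.random() < epsilon:
            k = rng.randrange(len(heuristics))  # explore
        else:
            k = max(range(len(heuristics)), key=value.__getitem__)  # exploit
        candidate = heuristics[k](current, rng)
        new_cost = inversions(candidate)
        reward = max(0, cost - new_cost)  # improvement achieved by this move
        uses[k] += 1
        value[k] += (reward - value[k]) / uses[k]
        if new_cost <= cost:  # accept only non-worsening moves
            current, cost = candidate, new_cost
    return current, cost, value


start = list(range(12))[::-1]
best, best_cost, learned = hyper_heuristic(
    start, [swap_adjacent, swap_random, reverse_segment]
)
```

A thesis would likely replace the running average with a richer learned model, for instance one conditioned on the state of the search, and allow worsening moves to escape local optima.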

Advisor: Ahmad Hemmati

Machine learning for solving satisfiability problems and applications in cryptanalysis

Advisor: Igor Semaev

Hybrid modeling approaches for well drilling with Sintef

Several topics are available.

"Flow models" are first-principles models simulating the flow, temperature and pressure in a well being drilled. Our project is exploring "hybrid approaches" where these models are combined with machine learning models that either learn from time series data from flow model runs or from real-world measurements during drilling. The goal is to better detect drilling problems such as hole cleaning, make more accurate predictions and correctly learn from and interpret real-world data.

The "surrogate model" refers to a ML model which learns to mimic the flow model by learning from the model inputs and outputs. Use cases for surrogate models include model predictions where speed is favoured over accuracy and exploration of parameter space.

Surrogate models with active learning

While it is possible to produce a nearly unlimited amount of training data by running the flow model, the surrogate model may still perform poorly if it lacks training data in the part of the parameter space it operates in or if it "forgets" areas of the parameter space by being fed too much data from a narrow range of parameters.

The goal of this thesis is to build a surrogate model (with any architecture) for some restricted parameter range and implement an active learning approach where the ML model requests more model runs from the flow model in the parts of the parameter space where they are needed the most. The end result should be a surrogate model that is quick and performs acceptably well over the whole defined parameter range.

Surrogate models trained via adversarial learning

How best to train surrogate models from runs of the flow model is an open question. This master thesis would use the adversarial learning approach to build a surrogate model which to its "adversary" becomes indistinguishable from the output of an actual flow model run.

GPU-based Surrogate models for parameter search

While CPU speed largely stalled 20 years ago in terms of working frequency on single cores, multi-core CPUs and especially GPUs took off and delivered increases in computational power by parallelizing computations.

Modern machine learning such as deep learning takes advantage of this boom in computing power by running on GPUs.

The SINTEF flow models, in contrast, are software programs that run on a CPU and do not happen to utilize multi-core CPU functionality. The model runs advance time-step by time-step and each time step relies on the results from the previous time step. The flow models are therefore fundamentally sequential and not well suited to massive parallelization.

It is however of interest to run different model runs in parallel, to explore parameter spaces. The use cases for this include model calibration, problem detection and hypothesis generation and testing.

The task of this thesis is to implement an ML-based surrogate model in such a way that many surrogate model outputs can be produced at the same time using a single GPU. This will likely entail some trade off with model size and maybe some coding tricks.

Uncertainty estimates of hybrid predictions (Lots of room for creativity, might need to steer it more, needs good background literature)

When using predictions from an ML model trained on time series data, it is useful to know whether they are accurate and can be trusted. The student is challenged to develop hybrid approaches that incorporate estimates of uncertainty. Components could include reporting variance from ML ensembles trained on a diversity of time series data, implementation of conformal predictions, analysis of training data parameter ranges vs current input, etc. The output should be a "traffic light signal" roughly indicating the accuracy of the predictions.
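Two of the components named above, ensemble spread and conformal prediction, can be combined in a few lines. The sketch below is a normalised split-conformal procedure: residuals on a held-out calibration set are divided by the ensemble spread, and the resulting quantile scales the spread at prediction time. The thresholds for the traffic light and all function names are hypothetical.

```python
import math


def conformal_quantile(scores, alpha=0.1):
    """Finite-sample corrected (1 - alpha) quantile used in split conformal prediction."""
    n = len(scores)
    rank = math.ceil((n + 1) * (1 - alpha))
    if rank > n:
        return math.inf  # too few calibration points for this alpha
    return sorted(scores)[rank - 1]


def calibrate(y_true, y_pred, spread, alpha=0.1):
    """Quantile of normalised scores |y - y_hat| / spread on a calibration set."""
    scores = [abs(y - p) / s for y, p, s in zip(y_true, y_pred, spread)]
    return conformal_quantile(scores, alpha)


def traffic_light(pred, spread, q, green=1.0, yellow=3.0):
    """Prediction interval and a coarse colour based on its half-width.

    `green` and `yellow` are tolerances in the units of the predicted quantity.
    """
    half_width = q * spread
    if half_width <= green:
        colour = "green"
    elif half_width <= yellow:
        colour = "yellow"
    else:
        colour = "red"
    return (pred - half_width, pred + half_width), colour


# Toy calibration set: four measurements, ensemble mean and ensemble spread.
q = calibrate([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0], alpha=0.5)
interval, colour = traffic_light(pred=5.0, spread=0.4, q=2.0)
```

The coverage guarantee of this construction relies on calibration and test data being exchangeable, which is a strong assumption for drilling time series, so checking it would be part of the work.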

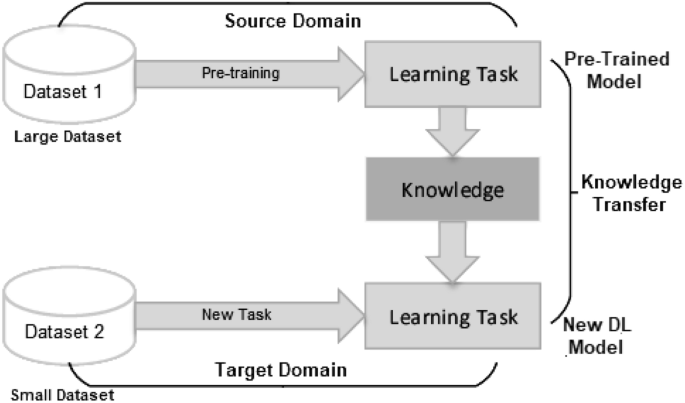

Transfer learning approaches

We're assuming an ML model is to be used for time series prediction.

It is possible to train an ML model on a wide range of scenarios in the flow models, but we expect that to perform well, the model also needs to see model runs representative of the type of well and drilling operation it will be used in. In this thesis the student implements a transfer learning approach, where the model is trained on general model runs and fine-tuned on the most representative data set.

(Bonus1: implementing one-shot learning, Bonus2: Using real-world data in the fine-tuning stage)

ML capable of reframing situations

When a human oversees an operation like well drilling, she has a mental model of the situation and new data such as pressure readings from the well is interpreted in light of this model. This is referred to as "framing" and is the normal mode of work. However, when a problem occurs, it becomes harder to reconcile the data with the mental model. The human then goes into "reframing", building a new mental model that includes the ongoing problem. This can be seen as a process of hypothesis generation and testing.

A computer model however, lacks re-framing. A flow model will keep making predictions under the assumption of no problems and a separate alarm system will use the deviation between the model predictions and reality to raise an alarm. This is in a sense how all alarm systems work, but it means that the human must discard the computer model as a tool at the same time as she's handling a crisis.

The student is given access to a flow model and a surrogate model which can learn from model runs both with and without hole cleaning and is challenged to develop a hybrid approach where the ML+flow model continuously performs hypothesis generation and testing and is able to "switch" into predictions of a hole cleaning problem and different remediations of this.

Advisor: Philippe Nivlet at Sintef together with advisor from UiB

Explainable AI at Equinor

A master thesis in the project Machine Teaching for XAI (see https://xai.w.uib.no ), in collaboration between UiB and Equinor.

Advisor: One of Pekka Parviainen/Jan Arne Telle/Emmanuel Arrighi + Bjarte Johansen from Equinor.

Explainable AI at Eviny

A master thesis in the project Machine Teaching for XAI (see https://xai.w.uib.no ), in collaboration between UiB and Eviny.

Advisor: One of Pekka Parviainen/Jan Arne Telle/Emmanuel Arrighi + Kristian Flikka from Eviny.

If you want to suggest your own topic, please contact Pekka Parviainen, Fabio Massimo Zennaro or Nello Blaser.

Machine Learning - CMU

PhD Dissertations

[all are .pdf files].

Learning Models that Match Jacob Tyo, 2024

Improving Human Integration across the Machine Learning Pipeline Charvi Rastogi, 2024

Reliable and Practical Machine Learning for Dynamic Healthcare Settings Helen Zhou, 2023

Automatic customization of large-scale spiking network models to neuronal population activity (unavailable) Shenghao Wu, 2023

Estimation of BVk functions from scattered data (unavailable) Addison J. Hu, 2023

Rethinking object categorization in computer vision (unavailable) Jayanth Koushik, 2023

Advances in Statistical Gene Networks Jinjin Tian, 2023

Post-hoc calibration without distributional assumptions Chirag Gupta, 2023

The Role of Noise, Proxies, and Dynamics in Algorithmic Fairness Nil-Jana Akpinar, 2023

Collaborative learning by leveraging siloed data Sebastian Caldas, 2023

Modeling Epidemiological Time Series Aaron Rumack, 2023

Human-Centered Machine Learning: A Statistical and Algorithmic Perspective Leqi Liu, 2023

Uncertainty Quantification under Distribution Shifts Aleksandr Podkopaev, 2023

Probabilistic Reinforcement Learning: Using Data to Define Desired Outcomes, and Inferring How to Get There Benjamin Eysenbach, 2023

Comparing Forecasters and Abstaining Classifiers Yo Joong Choe, 2023

Using Task Driven Methods to Uncover Representations of Human Vision and Semantics Aria Yuan Wang, 2023

Data-driven Decisions - An Anomaly Detection Perspective Shubhranshu Shekhar, 2023

Applied Mathematics of the Future Kin G. Olivares, 2023

METHODS AND APPLICATIONS OF EXPLAINABLE MACHINE LEARNING Joon Sik Kim, 2023

NEURAL REASONING FOR QUESTION ANSWERING Haitian Sun, 2023

Principled Machine Learning for Societally Consequential Decision Making Amanda Coston, 2023

Long term brain dynamics extend cognitive neuroscience to timescales relevant for health and physiology Maxwell B. Wang, 2023

Long term brain dynamics extend cognitive neuroscience to timescales relevant for health and physiology Darby M. Losey, 2023

Calibrated Conditional Density Models and Predictive Inference via Local Diagnostics David Zhao, 2023

Towards an Application-based Pipeline for Explainability Gregory Plumb, 2022

Objective Criteria for Explainable Machine Learning Chih-Kuan Yeh, 2022

Making Scientific Peer Review Scientific Ivan Stelmakh, 2022

Facets of regularization in high-dimensional learning: Cross-validation, risk monotonization, and model complexity Pratik Patil, 2022

Active Robot Perception using Programmable Light Curtains Siddharth Ancha, 2022

Strategies for Black-Box and Multi-Objective Optimization Biswajit Paria, 2022

Unifying State and Policy-Level Explanations for Reinforcement Learning Nicholay Topin, 2022

Sensor Fusion Frameworks for Nowcasting Maria Jahja, 2022

Equilibrium Approaches to Modern Deep Learning Shaojie Bai, 2022

Towards General Natural Language Understanding with Probabilistic Worldbuilding Abulhair Saparov, 2022

Applications of Point Process Modeling to Spiking Neurons (Unavailable) Yu Chen, 2021

Neural variability: structure, sources, control, and data augmentation Akash Umakantha, 2021

Structure and time course of neural population activity during learning Jay Hennig, 2021

Cross-view Learning with Limited Supervision Yao-Hung Hubert Tsai, 2021

Meta Reinforcement Learning through Memory Emilio Parisotto, 2021

Learning Embodied Agents with Scalably-Supervised Reinforcement Learning Lisa Lee, 2021

Learning to Predict and Make Decisions under Distribution Shift Yifan Wu, 2021

Statistical Game Theory Arun Sai Suggala, 2021

Towards Knowledge-capable AI: Agents that See, Speak, Act and Know Kenneth Marino, 2021

Learning and Reasoning with Fast Semidefinite Programming and Mixing Methods Po-Wei Wang, 2021

Bridging Language in Machines with Language in the Brain Mariya Toneva, 2021

Curriculum Learning Otilia Stretcu, 2021

Principles of Learning in Multitask Settings: A Probabilistic Perspective Maruan Al-Shedivat, 2021

Towards Robust and Resilient Machine Learning Adarsh Prasad, 2021

Towards Training AI Agents with All Types of Experiences: A Unified ML Formalism Zhiting Hu, 2021

Building Intelligent Autonomous Navigation Agents Devendra Chaplot, 2021

Learning to See by Moving: Self-supervising 3D Scene Representations for Perception, Control, and Visual Reasoning Hsiao-Yu Fish Tung, 2021

Statistical Astrophysics: From Extrasolar Planets to the Large-scale Structure of the Universe Collin Politsch, 2020

Causal Inference with Complex Data Structures and Non-Standard Effects Kwhangho Kim, 2020

Networks, Point Processes, and Networks of Point Processes Neil Spencer, 2020

Dissecting neural variability using population recordings, network models, and neurofeedback (Unavailable) Ryan Williamson, 2020

Predicting Health and Safety: Essays in Machine Learning for Decision Support in the Public Sector Dylan Fitzpatrick, 2020

Towards a Unified Framework for Learning and Reasoning Han Zhao, 2020

Learning DAGs with Continuous Optimization Xun Zheng, 2020

Machine Learning and Multiagent Preferences Ritesh Noothigattu, 2020

Learning and Decision Making from Diverse Forms of Information Yichong Xu, 2020

Towards Data-Efficient Machine Learning Qizhe Xie, 2020

Change modeling for understanding our world and the counterfactual one(s) William Herlands, 2020

Machine Learning in High-Stakes Settings: Risks and Opportunities Maria De-Arteaga, 2020

Data Decomposition for Constrained Visual Learning Calvin Murdock, 2020

Structured Sparse Regression Methods for Learning from High-Dimensional Genomic Data Micol Marchetti-Bowick, 2020

Towards Efficient Automated Machine Learning Liam Li, 2020

Learning Collections of Functions Emmanouil Antonios Platanios, 2020

Provable, structured, and efficient methods for robustness of deep networks to adversarial examples Eric Wong, 2020

Reconstructing and Mining Signals: Algorithms and Applications Hyun Ah Song, 2020

Probabilistic Single Cell Lineage Tracing Chieh Lin, 2020

Graphical network modeling of phase coupling in brain activity (Unavailable) Josue Orellana, 2019

Strategic Exploration in Reinforcement Learning - New Algorithms and Learning Guarantees Christoph Dann, 2019

Learning Generative Models using Transformations Chun-Liang Li, 2019

Estimating Probability Distributions and their Properties Shashank Singh, 2019

Post-Inference Methods for Scalable Probabilistic Modeling and Sequential Decision Making Willie Neiswanger, 2019

Accelerating Text-as-Data Research in Computational Social Science Dallas Card, 2019

Multi-view Relationships for Analytics and Inference Eric Lei, 2019

Information flow in networks based on nonstationary multivariate neural recordings Natalie Klein, 2019

Competitive Analysis for Machine Learning & Data Science Michael Spece, 2019

The When, Where and Why of Human Memory Retrieval Qiong Zhang, 2019

Towards Effective and Efficient Learning at Scale Adams Wei Yu, 2019

Towards Literate Artificial Intelligence Mrinmaya Sachan, 2019

Learning Gene Networks Underlying Clinical Phenotypes Under SNP Perturbations From Genome-Wide Data Calvin McCarter, 2019

Unified Models for Dynamical Systems Carlton Downey, 2019

Anytime Prediction and Learning for the Balance between Computation and Accuracy Hanzhang Hu, 2019

Statistical and Computational Properties of Some "User-Friendly" Methods for High-Dimensional Estimation Alnur Ali, 2019

Nonparametric Methods with Total Variation Type Regularization Veeranjaneyulu Sadhanala, 2019

New Advances in Sparse Learning, Deep Networks, and Adversarial Learning: Theory and Applications Hongyang Zhang, 2019

Gradient Descent for Non-convex Problems in Modern Machine Learning Simon Shaolei Du, 2019

Selective Data Acquisition in Learning and Decision Making Problems Yining Wang, 2019

Anomaly Detection in Graphs and Time Series: Algorithms and Applications Bryan Hooi, 2019

Neural dynamics and interactions in the human ventral visual pathway Yuanning Li, 2018

Tuning Hyperparameters without Grad Students: Scaling up Bandit Optimisation Kirthevasan Kandasamy, 2018

Teaching Machines to Classify from Natural Language Interactions Shashank Srivastava, 2018

Statistical Inference for Geometric Data Jisu Kim, 2018

Representation Learning @ Scale Manzil Zaheer, 2018

Diversity-promoting and Large-scale Machine Learning for Healthcare Pengtao Xie, 2018

Distribution and Histogram (DIsH) Learning Junier Oliva, 2018

Stress Detection for Keystroke Dynamics Shing-Hon Lau, 2018

Sublinear-Time Learning and Inference for High-Dimensional Models Enxu Yan, 2018

Neural population activity in the visual cortex: Statistical methods and application Benjamin Cowley, 2018

Efficient Methods for Prediction and Control in Partially Observable Environments Ahmed Hefny, 2018

Learning with Staleness Wei Dai, 2018

Statistical Approach for Functionally Validating Transcription Factor Bindings Using Population SNP and Gene Expression Data Jing Xiang, 2017

New Paradigms and Optimality Guarantees in Statistical Learning and Estimation Yu-Xiang Wang, 2017

Dynamic Question Ordering: Obtaining Useful Information While Reducing User Burden Kirstin Early, 2017

New Optimization Methods for Modern Machine Learning Sashank J. Reddi, 2017

Active Search with Complex Actions and Rewards Yifei Ma, 2017

Why Machine Learning Works George D. Montañez, 2017

Source-Space Analyses in MEG/EEG and Applications to Explore Spatio-temporal Neural Dynamics in Human Vision Ying Yang, 2017

Computational Tools for Identification and Analysis of Neuronal Population Activity Pengcheng Zhou, 2016

Expressive Collaborative Music Performance via Machine Learning Gus (Guangyu) Xia, 2016

Supervision Beyond Manual Annotations for Learning Visual Representations Carl Doersch, 2016

Exploring Weakly Labeled Data Across the Noise-Bias Spectrum Robert W. H. Fisher, 2016

Optimizing Optimization: Scalable Convex Programming with Proximal Operators Matt Wytock, 2016

Combining Neural Population Recordings: Theory and Application William Bishop, 2015

Discovering Compact and Informative Structures through Data Partitioning Madalina Fiterau-Brostean, 2015

Machine Learning in Space and Time Seth R. Flaxman, 2015

The Time and Location of Natural Reading Processes in the Brain Leila Wehbe, 2015

Shape-Constrained Estimation in High Dimensions Min Xu, 2015

Spectral Probabilistic Modeling and Applications to Natural Language Processing Ankur Parikh, 2015

Computational and Statistical Advances in Testing and Learning Aaditya Kumar Ramdas, 2015

Corpora and Cognition: The Semantic Composition of Adjectives and Nouns in the Human Brain Alona Fyshe, 2015

Learning Statistical Features of Scene Images Wooyoung Lee, 2014

Towards Scalable Analysis of Images and Videos Bin Zhao, 2014

Statistical Text Analysis for Social Science Brendan T. O'Connor, 2014

Modeling Large Social Networks in Context Qirong Ho, 2014

Semi-Cooperative Learning in Smart Grid Agents Prashant P. Reddy, 2013

On Learning from Collective Data Liang Xiong, 2013

Exploiting Non-sequence Data in Dynamic Model Learning Tzu-Kuo Huang, 2013

Mathematical Theories of Interaction with Oracles Liu Yang, 2013

Short-Sighted Probabilistic Planning Felipe W. Trevizan, 2013

Statistical Models and Algorithms for Studying Hand and Finger Kinematics and their Neural Mechanisms Lucia Castellanos, 2013

Approximation Algorithms and New Models for Clustering and Learning Pranjal Awasthi, 2013

Uncovering Structure in High-Dimensions: Networks and Multi-task Learning Problems Mladen Kolar, 2013

Learning with Sparsity: Structures, Optimization and Applications Xi Chen, 2013

GraphLab: A Distributed Abstraction for Large Scale Machine Learning Yucheng Low, 2013

Graph Structured Normal Means Inference James Sharpnack, 2013 (Joint Statistics & ML PhD)

Probabilistic Models for Collecting, Analyzing, and Modeling Expression Data Hai-Son Phuoc Le, 2013

Learning Large-Scale Conditional Random Fields Joseph K. Bradley, 2013

New Statistical Applications for Differential Privacy Rob Hall, 2013 (Joint Statistics & ML PhD)

Parallel and Distributed Systems for Probabilistic Reasoning Joseph Gonzalez, 2012

Spectral Approaches to Learning Predictive Representations Byron Boots, 2012

Attribute Learning using Joint Human and Machine Computation Edith L. M. Law, 2012

Statistical Methods for Studying Genetic Variation in Populations Suyash Shringarpure, 2012

Data Mining Meets HCI: Making Sense of Large Graphs Duen Horng (Polo) Chau, 2012

Learning with Limited Supervision by Input and Output Coding Yi Zhang, 2012

Target Sequence Clustering Benjamin Shih, 2011

Nonparametric Learning in High Dimensions Han Liu, 2010 (Joint Statistics & ML PhD)

Structural Analysis of Large Networks: Observations and Applications Mary McGlohon, 2010

Modeling Purposeful Adaptive Behavior with the Principle of Maximum Causal Entropy Brian D. Ziebart, 2010

Tractable Algorithms for Proximity Search on Large Graphs Purnamrita Sarkar, 2010

Rare Category Analysis Jingrui He, 2010

Coupled Semi-Supervised Learning Andrew Carlson, 2010

Fast Algorithms for Querying and Mining Large Graphs Hanghang Tong, 2009

Efficient Matrix Models for Relational Learning Ajit Paul Singh, 2009

Exploiting Domain and Task Regularities for Robust Named Entity Recognition Andrew O. Arnold, 2009

Theoretical Foundations of Active Learning Steve Hanneke, 2009

Generalized Learning Factors Analysis: Improving Cognitive Models with Machine Learning Hao Cen, 2009

Detecting Patterns of Anomalies Kaustav Das, 2009

Dynamics of Large Networks Jurij Leskovec, 2008

Computational Methods for Analyzing and Modeling Gene Regulation Dynamics Jason Ernst, 2008

Stacked Graphical Learning Zhenzhen Kou, 2007

Actively Learning Specific Function Properties with Applications to Statistical Inference Brent Bryan, 2007

Approximate Inference, Structure Learning and Feature Estimation in Markov Random Fields Pradeep Ravikumar, 2007

Scalable Graphical Models for Social Networks Anna Goldenberg, 2007

Measure Concentration of Strongly Mixing Processes with Applications Leonid Kontorovich, 2007

Tools for Graph Mining Deepayan Chakrabarti, 2005

Automatic Discovery of Latent Variable Models Ricardo Silva, 2005

Building the Theoretical Foundations of Deep Learning: An Empirical Approach

Deep Learning: A Comprehensive Overview on Techniques, Taxonomy, Applications and Research Directions

- Review Article

- Published: 18 August 2021

- Volume 2, article number 420 (2021)

- Iqbal H. Sarker (ORCID: orcid.org/0000-0003-1740-5517)

192k Accesses

662 Citations

24 Altmetric

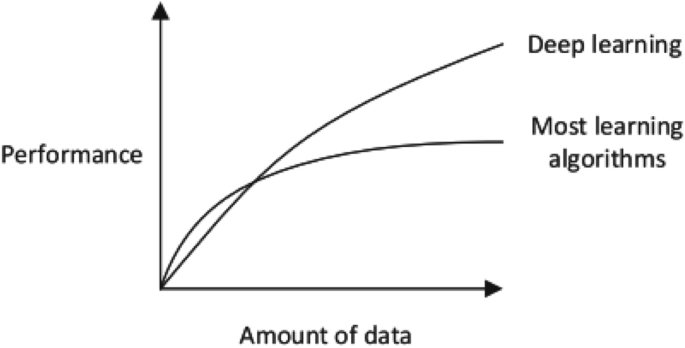

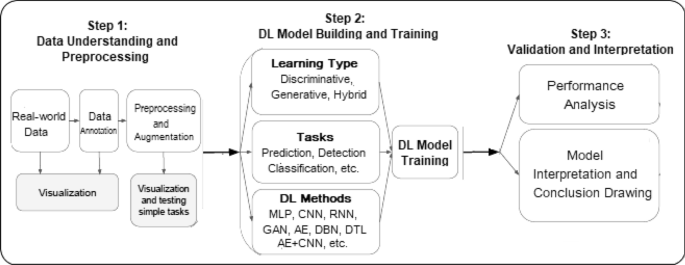

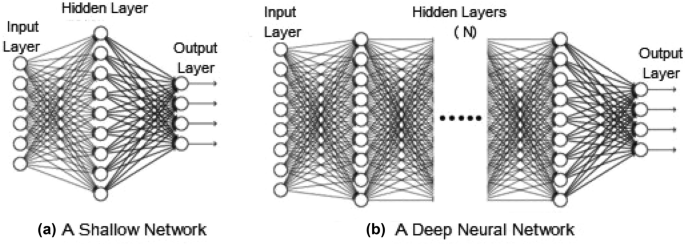

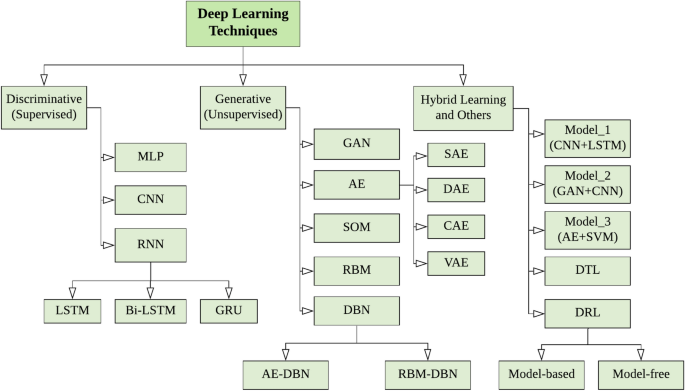

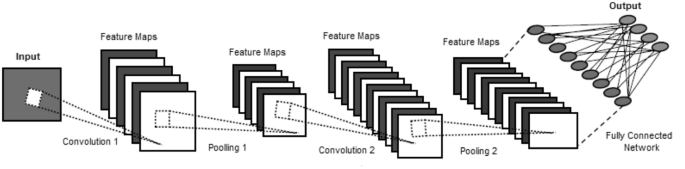

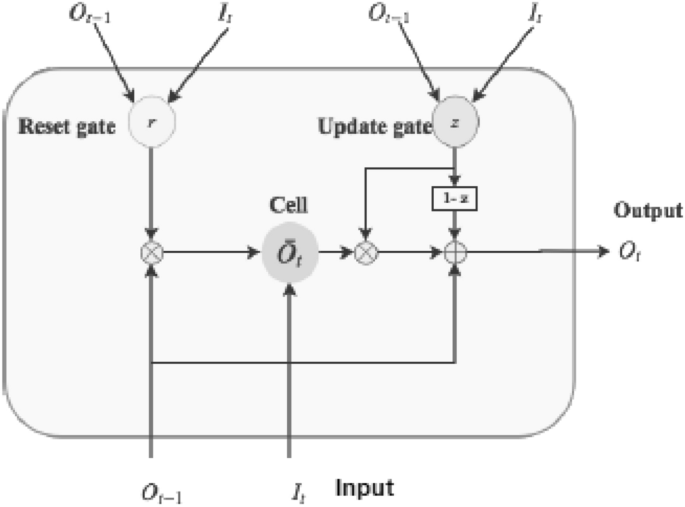

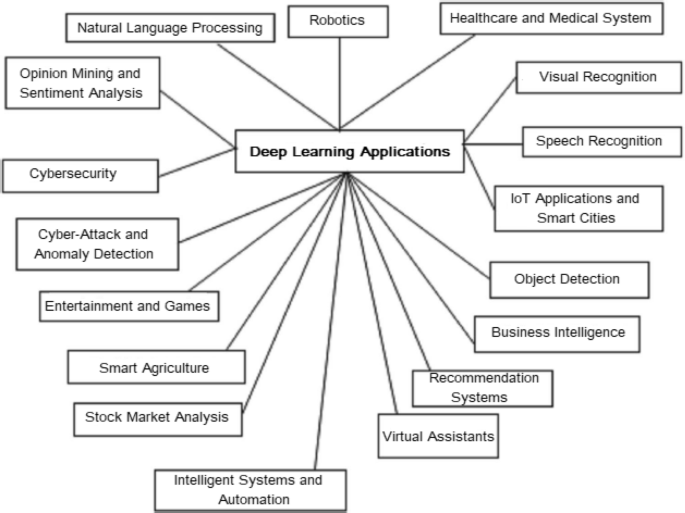

Explore all metrics

Deep learning (DL), a branch of machine learning (ML) and artificial intelligence (AI), is now considered a core technology of the Fourth Industrial Revolution (4IR or Industry 4.0). Because of its ability to learn from data, DL, which originated from the artificial neural network (ANN), has become a hot topic in computing and is widely applied in areas such as healthcare, visual recognition, text analytics, cybersecurity, and many more. However, building an appropriate DL model is challenging because of the dynamic nature and variations of real-world problems and data. Moreover, a lack of core understanding turns DL methods into black-box machines, which hampers development at the standard level. This article presents a structured and comprehensive view of DL techniques, including a taxonomy that considers various types of real-world tasks, such as supervised or unsupervised. In our taxonomy, we take into account deep networks for supervised or discriminative learning, unsupervised or generative learning, as well as hybrid learning and relevant others. We also summarize real-world application areas where deep learning techniques can be used. Finally, we point out ten potential aspects for future-generation DL modeling, with research directions. Overall, this article aims to draw a big picture of DL modeling that can serve as a reference guide for both academia and industry professionals.

Introduction