Reflections on the digital age: 7 improvements that brought about a decade of positive change

The new digital age enabled billions of people to collaborate and mobilize to fight climate change. Image: Photo by kazuend on Unsplash

Don Tapscott C.M.


September 2030. The early 2020s were full of dramatic turning points in global history.

Powerful new technologies like artificial intelligence, blockchain, the internet of things and the metaverse upended traditional systems, institutions and ways of life. Meanwhile, the COVID-19 pandemic of 2020-22 accelerated these trends as people everywhere moved much of their lives online. The pandemic also exposed deep problems in our governments and systems for everything from supply chains to public health data.

Moreover, the early 2020s were jolted by political upheaval. Notably, in January 2021, the American election was challenged, exacerbating deep fissures in the United States and emboldening populists and extremists around the world. The Russian invasion of Ukraine, global sanctions and significant disruptions to food supplies further convulsed the global economy and exacerbated tensions. These challenges, among others, created a perfect storm and resulted in extraordinary social anxiety and unrest.

Fortunately, a miracle of sorts occurred. Driven by a deep hope for a brighter future, people everywhere began to reimagine the relationship between government and civil society, ushering in a new societal framework for the digital age. This was not some kind of academic process but rather the result of mass mobilizations around broad change.

Reflecting on the digital age

Today, looking back a decade, let’s examine seven key improvements that stemmed from this period of positive change:

1. New models of prosperity and work

Given the bifurcation of wealth and structural unemployment in many economies engendered by the new digital age, expectations of employment shifted, with people understanding that the private sector cannot provide jobs and a prosperous life for all. New rules and regulations were instituted that created a strong social safety net for workers. These reforms helped mitigate the gross inequality that plagued the early years of the 21st century. New technologies also brought more underserved people into the global economy and readied workers for lifelong learning.

2. New models of digital identity

New regulations allowed individuals to own and benefit from the digital data they create. This ended the era of “digital feudalism,” which was characterised by a centralized group of “digital landlords” who collected, aggregated and profited from the data that collectively constituted our digital identities. Furthermore, Web3 gave people the ability to harvest their data trail and use it to plan their lives, enhancing their prosperity and protecting their privacy.

3. More informed digital age society

Through public and private partnerships, media systems were rebuilt in ways that safeguarded independence and free speech. New tools were implemented that enabled citizens to track the veracity and provenance of information. This helped reduce the ability of bad-faith actors to spread false information about everything from climate change to public health. Clear rules were also set that ensured large media companies were prohibited from supporting hate on their platforms in the digital age. These reforms helped us rebuild public education systems to ensure that every young person can function fully, not just as a worker or entrepreneur, but as a citizen. Media literacy programs were also introduced into schools to help young people develop their capabilities to handle the onslaught of information and discern the truth.

4. Renewed trust in government and democracy

Innovative technologies and other modern reforms enabled us to create a new era of democracy based on public deliberation, transparency, active citizenship and accountability. Technology also helped to embed electoral promises into smart contracts that allowed citizens to track and engage in their democracies through the mobile platforms they use every day. These reforms helped boost trust in politicians and the legitimacy of our governments as leaders are now more beholden to the people and not the powerful interests that funded their campaigns in the years prior. Moreover, these improvements helped stifle radical populists and extreme politicians on both the right and left.

5. A new commitment to justice

It was clear that new technologies exacerbated racial divides, so governments and organisations throughout civil society committed to ending racial inequities. In the United States, action was taken to end the era of mass incarceration and the financial hamstringing of minority groups. The criminal subjection of indigenous peoples, as evidenced by Canada’s “Residential School System,” was also redressed. These steps helped move racism, class oppression and subjugation of all peoples into the dustbin of history, along with those who perpetrate these vile relics of the past. The reforms also went past the tropes about bad apples and forgiveness. They recognized that racism and oppression are systemic and must be addressed society-wide.

6. A deep commitment to sustainability

Through major reforms, the world is now on track to reduce carbon emissions by 90% by the year 2050. The new digital age enabled billions of people to collaborate and mobilize to fight climate change. This included not just governments but businesses large and small, commuters, vacationers, employees, students, consumers – everyone – from every walk of life. Public pressure and new regulations have also forced business executives to participate responsibly in the reindustrialization of our planet and embrace carbon pricing.

7. Global interdependence

The crises of the past decade—the COVID-19 pandemic, the political legitimacy crisis, the war in Ukraine and the climate catastrophes—demonstrated that no country could succeed fully in a world that is in trouble. And while significant national differences remain, countries have embraced common interests and an understanding of a common fate. The new way of thinking also allowed governments, companies and NGOs to better organise around solving major problems like public health, education, social justice, environmental stability and peace.

These positive changes did not bring about a utopia. But they were improvements—and ones that were achieved through bottom-up struggle.

Victor Hugo said there is nothing so powerful as an idea whose time has come. In our case, there was nothing so powerful as ideas that had become necessities.

The World Economic Forum’s Platform for Shaping the Future of Digital Economy and New Value Creation helps companies and governments leverage technology to develop digitally-driven business models that ensure growth and equity for an inclusive and sustainable economy.

- The Digital Transformation for Long-Term Growth programme is bringing together industry leaders, innovators, experts and policymakers to accelerate new digital business models that create the sustainable and resilient industries of tomorrow.

- The Forum’s EDISON Alliance is mobilizing leaders from across sectors to accelerate digital inclusion. Its 1 Billion Lives Challenge harnesses cross-sector commitments and action to improve people’s lives through affordable access to digital solutions in education, healthcare, and financial services by 2025.


This article is abridged from a major essay written by Don Tapscott called “A Declaration of Interdependence: Towards a New Social Contract for the Digital Age” and a recent short essay entitled “Why We Built a Social Contract for the New Digital Age.”

Don Tapscott is the author of 16 widely read books about technology in business and society, including the best-seller Blockchain Revolution, which he co-authored with his son Alex. His most recent book is Platform Revolution: Blockchain Technology as the Operating System of the Digital Age. He is Co-Founder of the Blockchain Research Institute, an Adjunct Professor at INSEAD, and Chancellor Emeritus of Trent University in Canada. He is a Member of the Order of Canada and drafted a framework for “A New Social Contract for the Digital Economy.”


License and Republishing

World Economic Forum articles may be republished in accordance with the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License, and in accordance with our Terms of Use.

The views expressed in this article are those of the author alone and not the World Economic Forum.


Visual Life


June 9, 2023 / 1 comment / Reading Time: ~ 10 minutes

Connecting in the Digital Age: Navigating Technology and Social Media

Shawndeep Virk

In the contemporary era, technology and social media have revolutionized how we connect with others, significantly impacting various aspects of our lives. This article explores the pervasive influence of technology and social media on individuals and society, shedding light on the benefits and drawbacks of this digital transformation. As technology advances, social media platforms have become integral to our daily routines, shaping our interactions, communication patterns, and self-perceptions. The convenience of instant messaging and virtual communities has facilitated global connectivity, transcending geographical barriers and fostering relationships. However, the constant exposure to virtual environments has also led to many challenges. One significant concern is the potential erosion of face-to-face interactions, as the allure of digital communication often replaces genuine human connection. In addition, the addictive nature of social media can lead to diminished social skills and a sense of loneliness, exacerbating mental health issues. Moreover, the proliferation of online misinformation and the echo chamber effect has introduced new challenges to critical thinking and public discourse. This article delves into the impact of technology on various domains, including education, relationships, and self-identity. The prevalence of online learning platforms has transformed the traditional classroom, offering new opportunities while raising concerns about unequal access and diminished interpersonal engagement. Relationships have been reshaped as virtual connections become more prevalent, impacting intimacy, trust, and the overall quality of social interactions. Furthermore, social media platforms have fueled the rise of personal branding and the cultivation of idealized digital personas, contributing to the “digital self” and its effects on self-esteem and mental well-being. 
Technology and social media have undeniably become ingrained in our lives, revolutionizing how we connect and interact with others. Yet, while they offer unprecedented connectivity and vast opportunities, we must also navigate their potential pitfalls. Recognizing the importance of balancing digital and real-world experiences can help us harness the benefits of technology while preserving the essential elements of human connection and well-being in the digital age.

————

Introduction:

In today’s fast-paced society, the pervasive influence of technology and social media on our lives cannot be denied. These platforms have transformed the way we communicate, work, and interact with others. From the convenience of instant messaging to the accessibility of online communities, technology has undoubtedly made our lives more convenient and connected. However, amidst the undeniable benefits, it is essential to acknowledge the challenges that arise from our increasing reliance on these digital platforms.

One significant concern that has emerged in recent years is the impact of excessive screen time on mental health. Twenge and Campbell’s (2019) longitudinal study shed light on the potential adverse effects of constant exposure to social media. They found a strong correlation between heavy social media use and increased feelings of anxiety, depression, and loneliness. In addition, the endless stream of carefully curated posts, the constant comparison to others’ highlight reels, and the fear of missing out (FOMO) contribute to a sense of inadequacy and disconnection from real-life experiences. Hence, individuals must be mindful of their digital consumption and take steps to strike a healthy balance.

Sherry Turkle (2011), a renowned expert in the field of social psychology, highlights another aspect of technology’s impact on interpersonal relationships. While social media allows us to stay connected with a vast network of friends and acquaintances, it often fails to fulfill our innate need for genuine connections. Turkle argues that we have come to expect more from technology and less from each other. Virtual interactions lack the depth and authenticity of face-to-face communication, leaving us craving deeper emotional connections. In an era where emojis and likes have become substitutes for heartfelt conversations, it is essential to recognize the limitations of digital interactions and actively nurture meaningful relationships in real life.

Research conducted by Hampton et al. (2014) further underscores the importance of face-to-face communication in establishing genuine connections. Their studies reveal that virtual interactions, devoid of nonverbal cues like body language and facial expressions, hinder our ability to truly understand and empathize with others. These nonverbal cues provide crucial context and emotional depth that are often lost in digital conversations. Meeting in person lets us fully engage with others, pick up on subtle cues, and forge stronger bonds. While technology enables us to bridge geographical gaps and connect with individuals across the globe, it is vital to recognize the value of physical presence and direct human interaction.

Achieving a healthy balance between technology and real-life connections may seem daunting in a world increasingly reliant on digital platforms. However, recognizing the potential consequences of excessive technology use is the first step toward cultivating healthier relationships. McEwan and Zanolla (2020) assert that the impact of smartphone use on human interaction should be considered. As individuals, we must proactively set boundaries, manage our screen time, and consciously engage in face-to-face interactions. This may involve establishing designated “tech-free” zones or allocating specific periods for uninterrupted personal interactions.

Moreover, fostering a culture that values genuine connections and offline experiences is crucial. Educational institutions, workplaces, and communities can be pivotal in promoting face-to-face interactions and organizing activities that encourage meaningful human connections. By creating spaces where individuals can engage in open dialogue, practice active listening, and collaborate on shared goals, we can build stronger communities and nurture relationships that transcend the digital realm.

In conclusion, while technology and social media have undeniably revolutionized how we connect and communicate, we must approach them with caution and mindfulness. Excessive screen time and overreliance on digital platforms can harm our mental health and interpersonal relationships. Striking a balance between technology and real-life connections is paramount to fostering meaningful relationships, empathy, and emotional well-being. By recognizing the limitations of virtual interactions and actively engaging in face-to-face.

Dangers Of Excessive Social Media Use:

In today’s digital age, social media has become an indispensable part of our lives. While it may seem harmless to stay connected with friends and family, excessive use of social media can harm our physical and mental health. According to Twenge and Campbell (2019), spending too much time scrolling through social media feeds can lead to feelings of anxiety, depression, and loneliness. In addition, they found that individuals who reported higher levels of screen time experienced lower levels of mental well-being over time.

However, the dangers of excessive social media use go beyond mental health. One of the concerning aspects highlighted by Turkle (2011) is the decrease in face-to-face interactions and interpersonal connections. She argues that people often expect more from technology and less from each other, leading to a sense of detachment and a decline in genuine human interaction. In addition, the constant scrolling and engagement with virtual connections can detract from meaningful real-life experiences, leaving individuals feeling isolated and disconnected.

Furthermore, excessive social media use can lead to the “spiral of silence” phenomenon described by Hampton, Rainie, Lu, Shin, and Purcell (2014). They explain that social media platforms can create an environment where individuals feel pressured to conform to popular opinions and are less likely to express dissenting views. This can limit the diversity of perspectives and hinder open dialogue and meaningful discussions. As a result, social media can inadvertently contribute to echo chambers, where people are exposed to only one side of an issue, reinforcing their existing beliefs without being challenged.

In addition to its impact on mental health and social dynamics, excessive social media use can adversely affect our physical well-being. McEwan and Zanolla (2020) emphasize that prolonged sitting while using electronic devices has been linked to various health problems, such as obesity, back pain, and eye strain. They further state that the blue light emitted from electronic devices disrupts sleep patterns and can lead to insomnia. The passive nature of social media consumption, combined with its addictive qualities, can contribute to a more sedentary lifestyle, posing risks to our overall physical health and well-being.

Moreover, the spread of misinformation on social media is a growing concern. Hall, Baym, and Miltner (2019) emphasize that false information about politics, health issues, or current events spreads quickly across these platforms due to their viral nature. They note that people often share information without verifying its accuracy or source, leading to the propagation of false information. This can be particularly dangerous when it comes to making important decisions related to public health or political issues. Incorrect information can misguide individuals, shape public opinion, and have real-world consequences.

While social media has revolutionized how we connect with people worldwide and stay informed about current events, it is crucial to be mindful of our social media use and set healthy boundaries to prevent its detrimental effects. By drawing on these studies, we can better understand the potential risks associated with excessive social media use and make informed decisions about our online behavior. Furthermore, awareness of the dangers can empower individuals to strike a balance between the benefits and drawbacks of social media, fostering healthier habits and more meaningful connections in both the digital and real world.

Importance Of Face-to-face Communication:

In the digital age, technology and social media have become the primary means of communication for many individuals. However, it is crucial to recognize that face-to-face communication remains an essential aspect of interpersonal interactions. While digital communication can be convenient and efficient, it lacks the depth and nuance that comes with in-person conversations. In a world where we are bombarded with endless notifications and distractions, taking the time to engage in face-to-face conversations can help us connect on a deeper level.

According to Twenge and Campbell (2019), “screen time has been associated with various negative mental health outcomes, including increased levels of anxiety and depression.” This highlights the potential detrimental effects of excessive reliance on digital communication, emphasizing the need for balanced interaction that includes face-to-face communication.

One of the most significant benefits of face-to-face communication is its ability to convey nonverbal cues effectively. Turkle (2011) asserts that “up to 90% of communication is nonverbal,” indicating the importance of facial expressions, body language, and tone of voice in conveying meaning accurately. These nonverbal cues can often be lost or misinterpreted through digital communication channels such as email or instant messaging. In-person conversations allow us to read these cues accurately, providing valuable context that helps us understand each other better.

Additionally, Hampton et al. (2014) discuss the “spiral of silence” phenomenon, where people are more likely to express their opinions in face-to-face conversations compared to online platforms. This suggests that face-to-face communication encourages a more open and honest exchange of ideas, fostering empathy and building trust between individuals.

Face-to-face communication promotes a sense of connection often lacking in digital interactions. Being physically present with someone shows a level of commitment and engagement that cannot be replicated online. It allows for genuine human connection and more profound, more meaningful relationships. In an age of loneliness and isolation, face-to-face communication can help alleviate these issues by fostering a sense of belonging and community. Face-to-face conversations offer an opportunity for spontaneity and improvisation. In digital communication, messages can be carefully.

Furthermore, face-to-face communication allows for more meaningful collaboration and problem-solving opportunities than digital channels do. McEwan and Zanolla (2020) highlight that “physical presence facilitates spontaneous exchanges and enhances creativity.” When working on complex projects or brainstorming ideas, being physically present with colleagues allows for immediate feedback and interactive discussions that lead to creative breakthroughs. Additionally, when conflicts arise within teams or organizations, having difficult conversations in person helps ensure all parties are heard and understood.

While technology has revolutionized how we communicate with one another in many positive ways, it should not replace face-to-face communication altogether. In-person conversations allow us to convey nonverbal cues, foster empathy and trust, and facilitate more meaningful collaboration opportunities. As we navigate the digital age, it is essential not to lose sight of the value that face-to-face communication brings to our personal and professional relationships (Hall, Baym, & Miltner, 2019).

Balancing Technology And Real-life Connections:

In today’s digital age, it is easy to get lost in the world of technology and social media. It seems like everyone is constantly glued to their screens, whether it be their phone, computer, or tablet. According to Twenge and Campbell (2019), extensive screen time has been associated with negative effects on mental health. They found that excessive use of digital devices, especially social media, was linked to higher levels of depression and loneliness. Therefore, it is essential that we learn how to balance technology with real-life connections.

One way to achieve this balance is by setting boundaries for ourselves when it comes to technology use. Turkle (2011) highlights the importance of consciously limiting the amount of time we spend on our devices each day and making an effort to engage in face-to-face interactions with people around us. She emphasizes the need to have meals together with family or friends without any distractions from our phones or other gadgets.

Furthermore, using social media platforms wisely can contribute to the balance between technology and real-life connections. Hampton et al. (2014) discuss the concept of the “spiral of silence,” which suggests that people may be hesitant to express their opinions online due to the fear of social isolation. However, when used effectively, social media can serve as a tool for connecting with others in a meaningful way. Hall, Baym, and Miltner (2019) suggest joining groups or communities that share our interests or values, participating in online discussions, and even arranging meetups with people we have met through these platforms.

It’s also important to recognize the value of unplugging from technology altogether from time to time. McEwan and Zanolla (2020) argue that excessive smartphone use can have a detrimental impact on human interaction. Taking breaks from our screens can help us feel more present and connected in the moment, fostering deeper relationships with those around us.

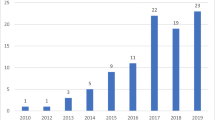

Ultimately, finding balance between technology and real-life connections requires intentionality and discipline. We must be willing to prioritize human interaction over virtual communication at times, even if it means stepping outside of our comfort zones. As Turkle (2011) reminds us, “We expect more from technology and less from each other.” Therefore, let us strive to navigate the digital age with mindfulness and intentionality so that we can cultivate meaningful relationships both online and offline. This graph shows why teens in America think social media has positive effects on people and the number 1 reason being that they are able to connect with friends and family.

Conclusion:

In conclusion, as we reflect on the impact of technology and social media on our lives, it becomes evident that while they have undoubtedly revolutionized the way we connect with others, they also carry potential dangers when used excessively. The prevalence of social media addiction has raised concerns about its detrimental effects on mental health, relationships, and productivity. It is therefore crucial for individuals to recognize the importance of striking a balance between online and offline interactions.

While social media platforms offer a myriad of benefits, such as connecting people across distances and providing access to information, it is essential to approach their use mindfully and with intentionality. All too often, individuals find themselves consumed by the virtual world, neglecting the tangible relationships and experiences that await in the offline realm. Face-to-face communication remains a cornerstone of human connection, offering depth, empathy, and emotional resonance that cannot be replicated through a screen.

By prioritizing real-life connections and setting healthy boundaries on our social media usage, we can cultivate a healthier and more fulfilling existence. Engaging in meaningful conversations, actively listening to others, and nurturing personal relationships allow us to experience genuine human connections that contribute to our overall well-being. It is in these face-to-face interactions that we can truly understand the nuances of non-verbal communication, interpret emotions, and forge deeper bonds.

In navigating the digital age, it is crucial to strike a delicate balance. Rather than completely rejecting technology or mindlessly indulging in its vast offerings, we should strive for a harmonious coexistence. This entails embracing the positive aspects of technology while being aware of its limitations and potential pitfalls. By intentionally carving out time for offline activities, such as hobbies, physical exercise, and spending quality time with loved ones, we can create a more well-rounded and fulfilling lifestyle.

Additionally, developing a healthy relationship with technology involves being mindful of the impact it has on our mental health. It is essential to recognize when social media usage becomes excessive or starts to negatively affect our well-being. Setting boundaries, such as designating specific times for technology use or limiting the number of social media platforms we engage with, can help prevent addiction and promote a healthier lifestyle.

In conclusion, while technology and social media have undoubtedly transformed the way we connect with others, it is vital to approach their use with caution and mindfulness. By striking a balance between online and offline interactions, prioritizing face-to-face communication, and setting healthy boundaries, we can harness the benefits of technology while maintaining genuine human connections. By doing so, we can cultivate a more fulfilling and balanced life, fostering our mental well-being, nurturing relationships, and maximizing our potential in both the virtual and physical realms.

References:

Twenge, J. M., & Campbell, W. K. (2019). The association between screen time and mental health: A longitudinal study. Psychological Science.

Turkle, S. (2011). Alone together: Why we expect more from technology and less from each other. New York: Basic Books.

Hampton, K. N., Rainie, L., Lu, W., Shin, I., & Purcell, K. (2014). Social media and the “spiral of silence.” Pew Research Center.

McEwan, B., & Zanolla, E. (2020). The impact of smartphone use on human interaction. Philosophical Transactions B.

Hall, J. A., Baym, N. K., & Miltner, K. M. (2019). Momentary pleasures and lingering costs of using social media in daily life. Journal of Social Psychology.

And So It Was Written

Author: Shawndeep Virk

Published: June 9, 2023

Word Count: 2922

Reading time: ~ 10 minutes

Student Writing in the Digital Age

Essays filled with “LOL” and emojis? College student writing today actually is longer and contains no more errors than it did in 1917.

“Kids these days” laments are nothing new, but the substance of the lament changes. Lately, it has become fashionable to worry that “kids these days” will be unable to write complex, lengthy essays. After all, the logic goes, social media and text messaging reward short, abbreviated expression. Student writing will be similarly staccato, rushed, or even—horror of horrors—filled with LOL abbreviations and emojis.

In fact, the opposite seems to be the case. Students in first-year composition classes are, on average, writing longer essays (from an average of 162 words in 1917, to 422 words in 1986, to 1,038 words in 2006), using more complex rhetorical techniques, and making no more errors than those committed by freshmen in 1917. That’s according to a longitudinal study of student writing by Andrea A. Lunsford and Karen J. Lunsford, “Mistakes Are a Fact of Life: A National Comparative Study.”

In 2006, two rhetoric and composition professors, Lunsford and Lunsford, decided, in reaction to government studies worrying that students’ literacy levels were declining, to crunch the numbers and determine if students were making more errors in the digital age.

They began by replicating previous studies of American college student errors. There were four similar studies over the past century. In 1917, a professor analyzed the errors in 198 college student papers; in 1930, researchers completed similar studies of 170 and 20,000 papers, respectively. In 1986, Robert Connors and Andrea Lunsford (of the 2006 study) decided to see if contemporary students were making more or fewer errors than those earlier studies showed, and analyzed 3,000 student papers from 1984. The 2006 study (published in 2008) follows the process of these earlier studies and was based on 877 papers. (One of the most interesting sections of “Mistakes Are a Fact of Life” discusses how new IRB regulations forced researchers to work with far fewer papers than they had before.)

Remarkably, the number of errors students made in their papers stayed consistent over the past 100 years. Students in 2006 committed roughly the same number of errors as students did in 1917. The average has stayed at about 2 errors per 100 words.

What has changed are the kinds of errors students make. The four 20th-century studies show that, when it came to making mistakes, spelling tripped up students the most. Spelling was by far the most common error in 1986 and 1917, “the most frequent student mistake by some 300 percent.” Going down the list of “top 10 errors,” the patterns shifted: Capitalization was the second most frequent error in 1917; in 1986, that spot went to “no comma after introductory element.”

In 2006, spelling lost its prominence, dropping down the list of errors to number five. Spell-check and similar word-processing tools are the undeniable cause. But spell-check creates new errors, too: The new number-one error in student writing is now “wrong word.” Spell-check, as most of us know, sometimes corrects spelling to a different word than intended; if the writing is not later proofread, this computer-created error goes unnoticed. The second most common error in 2006 was “incomplete or missing documentation,” a result, the authors theorize, of a shift in college assignments toward research papers and away from personal essays.

Additionally, capitalization errors have increased, perhaps, as Lunsford and Lunsford note, because of neologisms like eBay and iPod. But students have also become much better at punctuation and apostrophes, which were the third and fifth most common errors in 1917. These had dropped off the top 10 list by 2006.

The study found no evidence for claims that kids are increasingly using “text speak” or emojis in their papers. Lunsford and Lunsford did not find a single such instance of this digital-era error. Ironically, they did find such text speak and emoticons in teachers’ comments to students. (Teachers these days?)

The most startling discovery Lunsford and Lunsford made had nothing to do with errors or emojis. They found that college students are writing much more and submitting much longer papers than ever. The average college essay in 2006 was more than double the length of the average 1986 paper, which was itself much longer than the average length of papers written earlier in the century. In 1917, student papers averaged 162 words; in 1930, the average was 231 words. By 1986, the average grew to 422 words. And just 20 years later, in 2006, it jumped to 1,038 words.
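As a rough check on these figures, the short Python sketch below recomputes the growth in average essay length from the word counts quoted above. It also applies the study's approximate rate of 2 errors per 100 words to each average length; treating that rate as exactly constant across years is an assumption made here for illustration, and the function and variable names are hypothetical, not taken from the study.

```python
# Average first-year essay length in words, as quoted in the article.
AVG_WORDS = {1917: 162, 1930: 231, 1986: 422, 2006: 1038}

# Assumption: the "about 2 errors per 100 words" rate is applied uniformly
# to every year; the study reports it only as an approximate average.
ERRORS_PER_100_WORDS = 2.0


def growth_ratio(earlier: int, later: int) -> float:
    """Return how many times longer the average essay was in `later` than in `earlier`."""
    return AVG_WORDS[later] / AVG_WORDS[earlier]


def implied_errors_per_essay(year: int) -> float:
    """Estimate errors in an average-length essay under the constant-rate assumption."""
    return AVG_WORDS[year] * ERRORS_PER_100_WORDS / 100


if __name__ == "__main__":
    years = sorted(AVG_WORDS)
    # Compare each study year with the one before it.
    for earlier, later in zip(years, years[1:]):
        print(f"{earlier} -> {later}: {growth_ratio(earlier, later):.2f}x longer")
    # Show what a steady error rate implies for a paper of average length.
    for year in years:
        print(f"{year}: ~{implied_errors_per_essay(year):.1f} errors per average essay")
```

Under these assumptions, the average 2006 essay comes out at roughly 2.5 times the 1986 length, which matches the “more than double” claim. A steady error rate also implies more errors per paper simply because there are more words (about 21 in an average 2006 essay against about 3 in 1917), even though the rate itself has not changed.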

Why are 21st-century college students writing so much more? Computers allow students to write faster. (Other advances in writing technology may explain the upticks between 1917, 1930, and 1986. Ballpoint pens and manual and electric typewriters allowed students to write faster than inkwells or fountain pens.) The internet helps, too: Research shows that computers connected to the internet lead K-12 students to “conduct more background research for their writing; they write, revise, and publish more; they get more feedback on their writing; they write in a wider variety of genres and formats; and they produce higher quality writing.”

The digital revolution has been largely text-based. Over the course of an average day, Americans in 2006 wrote more than they did in 1986 (and in 2015 they wrote more than in 2006). New forms of written communication—texting, social media, and email—are often used instead of spoken ones—phone calls, meetings, and face-to-face discussions. With each text and Facebook update, students become more familiar with and adept at written expression. Today’s students have more experience with writing, and they practice it more than any group of college students in history.

In shifting from texting to writing their English papers, college students must become adept at code-switching, using one form of writing for certain purposes (gossiping with friends) and another for others (summarizing plots). As Kristen Hawley Turner writes in “Flipping the Switch: Code-Switching from Text Speak to Standard English,” students do know how to shift from informal to formal discourse, changing their writing as occasions demand. Just as we might speak differently to a supervisor than to a child, so too do students know that they should probably not use “conversely” in a text to a friend or “LOL” in their Shakespeare paper. “As digital natives who have had access to computer technology all of their lives, they often demonstrate in these arenas proficiencies that the adults in their lives lack,” Turner writes. Instructors should “teach them to negotiate the technology-driven discourse within the confines of school language.”

Responses to Lunsford and Lunsford’s study focused on what the results revealed about mistakes in writing: Error is often in the eye of the beholder. Teachers mark some errors and neglect to mention (or find) others. And, as a pioneering scholar of this field wrote in the 1970s, context is key when analyzing error: Students who make mistakes are not “indifferent…or incapable” but “beginners and must, like all beginners, learn by making mistakes.”

College students are making mistakes, of course, and they have much to learn about writing. But they are not making more mistakes than did their parents, grandparents, and great-grandparents. Since they now use writing to communicate with friends and family, they are more comfortable expressing themselves in words. Plus, most have access to technology that allows them to write faster than ever. If Lunsford and Lunsford’s findings about the average length of student papers hold true, today’s college students will graduate with more pages of completed prose to their name than any other generation.

If we want to worry about college student writing, then perhaps what we should attend to is not clipped, abbreviated writing, but overly verbose, rambling writing. It might be that editing skills (deciding what not to say, and what to delete) are what most ail the kids these days.

JSTOR is a digital library for scholars, researchers, and students. JSTOR Daily readers can access the original research behind our articles for free on JSTOR.

More Stories

- The Fashionable Tour : or, The First American Tourist Guidebook

- Celebrating Asian American and Pacific Islander Heritage Month

The Metaphysical Story of Chiropractic

A Bodhisattva for Japanese Women

Recent posts.

- The Development of Central American Film

- Remembering Maud Lewis

- Rice, Famine, and the Seven Wonders of the World

Support JSTOR Daily

Sign up for our weekly newsletter.

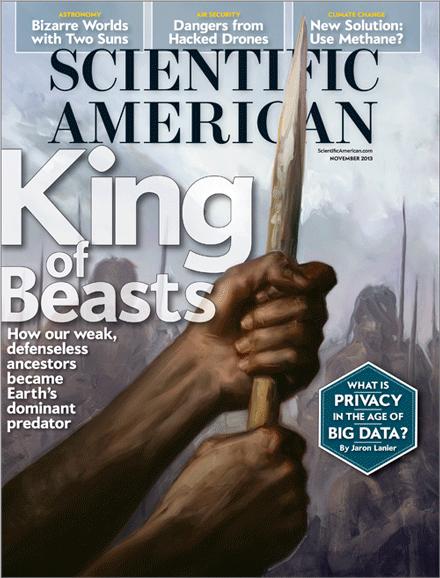

Privacy in the digital age: comparing and contrasting individual versus social approaches towards privacy

- Original Paper

- Open access

- Published: 17 July 2019

- Volume 21, pages 307–317 (2019)

- Marcel Becker (ORCID: orcid.org/0000-0003-2848-5305)

This paper takes as a starting point a recent development in privacy debates: the emphasis on social and institutional environments in the definition and the defence of privacy. Recognizing the merits of this approach, I supplement it in two respects. First, an analysis of the relation between privacy and autonomy teaches that in the digital age more than ever individual autonomy is threatened. The striking contrast between, on the one hand, offline vocabulary, where autonomy and individual decision making prevail, and, on the other, online practices is a challenge that cannot be met in a social approach. Secondly, I elucidate the background of the social approach. Its importance is not exclusively related to the digital age. In public life we regularly face privacy-moments, when in a small distinguished social domain a few people are jointly involved in common experiences. In the digital age the contextual integrity model of Helen Nissenbaum has become very influential. However, this model has some problems. Nissenbaum refers to a variety of sources and uses several terms to explain the normativity in her model. The notion ‘context’ is not specific and faces the reproach of conservatism. We elaborate on the most promising suggestion: the notion of ‘goods’ as it can be found in the works of Michael Walzer and Alasdair MacIntyre. Developing criteria for defining a normative framework requires making explicit the substantive goods that are at stake in a context, and taking them as the starting point for decisions about the flow of information. Doing so delivers stronger and more specific orientations that are indispensable in discussions about digital privacy.

Introduction

Rethinking the concept of privacy in the digital age inevitably entangles the descriptive and the normative dimensions of this concept. Theoretically these two dimensions of privacy can be distinguished. One dimension can describe the degree of privacy people enjoy, without taking a normative stance about the desirable degree of privacy. In normative discussions, the focus is on the reasons why privacy is important for leading a fulfilling life. This distinction should not distract us from the fact that privacy is not a completely neutral concept; instead, it has a positive connotation. For example, an invasion of privacy is a violation of or intrusion into something valuable that should be protected. Discussion of the concept, however, brings into question why privacy should be cherished and protected. In the digital age, the normative dimension is the object of intense discussion. Existing dangers to privacy (because of big data applications, cloud computing, and profiling) are widely recognized, but feelings of resignation and of ‘why should we bother?’ lie dormant. Defenders of privacy are regularly faced with scepticism, which is fueled by Schmidt’s ‘Innocent people have nothing to hide’ (Esguerra 2009) and Zuckerberg’s ‘Having two identities for yourself is a lack of integrity’ (Boyd 2014).

Traditionally in defences of privacy the focus has been on the individual (Rule 2015). Privacy was defined in terms of an individual’s space, which was seen as necessary for meeting the individual’s vital interests. In the last decade, however, we have seen a shift in the emphasis. A view of privacy as the norm that regulates and structures social life (the social dimension of privacy) has gained importance in both law and philosophical literature. For instance, the European Court of Human Rights previously stressed that data protection was an individual’s right not to be interfered with. However, more and more the Court is focusing on individuals’ privacy as protection of their relationships with other human beings (van der Sloot 2014). In philosophical literature on privacy, many scholars have explicitly distanced themselves from the individual approach and instead study the social dimensions of privacy (Roessler and Mokrosinska 2015). Helen Nissenbaum is by far the most important spokesperson for the social approach. She has introduced the notion of contextual integrity as an alternative to what she describes as too much focus on individuals’ rights-based notions of privacy (Nissenbaum 2009). Nissenbaum criticizes the so-called interest-based approach, which defines conflicts in terms of (violated) interests of the parties involved. For instance, ‘Uncontroversial acceptance of healthcare monitoring systems can be explained by pointing to the roughly even service to the interest of patients, hospitals, healthcare professionals and so on’. The problem with this approach, according to Nissenbaum, is that it sooner or later leads to ‘hard fought interest brawls’, which more often than not are settled to the advantage of the more powerful parties (Nissenbaum 2009, p. 8). It is necessary to create a justificatory platform to reason in moral terms. As a rights-based approach is not satisfactory, she proposes a normative approach that does more justice to the social dimension.

The distinction between a focus on the individual and privacy as social value is not only of academic importance. For policies on privacy, this makes quite a difference. On the one hand, the emphasis can be on an individual’s right to decide about personal interests and transparency for empowering the individual, as for instance the European Data Protection Supervisor asserts (EDPS Opinion 2015). On the other hand, the emphasis can also be on institutional arrangements that protect social relationships. The fact that good privacy policies require measures of both kinds should not be a reason to overlook their fundamental differences.

In this paper, we compare individual-based justifications of privacy with the social approach. We open with a discussion of the strengths of the individual-focused approach by relating privacy to a concept that has a strong normative sense and is most closely associated with individual-based privacy conceptions: autonomy. As we will see, a defence of privacy along these lines is both possible and necessary. In our discussion of the social approach, we focus on Helen Nissenbaum’s model. A critical discussion of the normative dimension will lead to suggestions for strengthening this model.

The individual approach

The importance of privacy: autonomy

The history of justifications of privacy starts with Warren and Brandeis’s (1890) legal definition of privacy as the right to be left alone. This classic definition is completely in line with the literal meaning of privacy. The word is a negativum (related to deprive) of public. The right to privacy is essentially the right of individuals to have their own domain, separated from the public (Solove 2015). The basic way to describe this right to be left alone is in terms of access to a person. In classic articles, Gavison and Reiman characterize privacy as the degree of access that others have to you through information, attendance, and proximity (Gavison 1984; Reiman 1984).

Discussion about the importance of privacy for the individual intensified in the second half of the twentieth century, as patterns of living in societies became more and more individualistic. Privacy became linked to the valued notion of autonomy and the underlying idea of individual freedom. In both literature on privacy and judicial statements, this connection between privacy and autonomy has been a topic of intense discussion. Sometimes the two concepts were even blended together, even though they should remain distinct. A sharp distinction between privacy and autonomy is necessary to get to grips with the normative dimension of privacy.

The concept autonomy is derived from the ancient Greek words autos (self) and nomos (law). Especially within the Kantian framework, the concept is explicated in terms of a rational individual who, reflecting independently, takes his own decisions. Being autonomous was thus understood mainly as having control over one’s own life. In many domains of professional ethics (healthcare, consumer protection, and scientific research), autonomy is a key concept in defining how human beings should be treated. The right of individuals to control their own life should always be respected. The patient, the consumer, and the research participant each must be able to make his or her own choices (Strandburg 2014). Physicians are supposed to fully inform patients; advertisers who are caught lying are censured; and informed consent is a standard requirement of research ethics. In each of these cases, persons should not be forced, tempted, or seduced into performing actions they do not want to do.

When privacy and autonomy are connected, privacy is described as a way of controlling one’s own personal environment. An invasion of privacy disturbs control over (or access to) one’s personal sphere. This notion of privacy is closely related to secrecy. A person who deliberately gains access to information that the other person wants to keep secret is violating the other person’s autonomy through information control. We see the emphasis on privacy as control over information in, for instance, Marmor’s description of privacy as ‘grounded in people’s interest in having a reasonable measure of control over the ways in which they can present themselves to others’ (Marmor 2015). Autonomy, however, does not entail an exhaustive description of privacy. It is possible that someone could have the ability to control, yet he or she lacks privacy. For instance, a woman who frequently absentmindedly forgets to close the curtains before she undresses enables her neighbour to watch her. If the neighbour does so, we can speak about a loss of the woman’s privacy. Nevertheless, the woman still has the ability to control. At any moment, she could choose to close the curtains. Thus, privacy requires more than just autonomy.

The distinction between privacy and autonomy becomes clearer in Judith Jarvis Thomson’s classic thought experiment (Taylor 2002). Imagine that my neighbour invented some elaborate X-ray device that enabled him to look through the walls. I would thereby lose control over who can look at me, but my privacy would not be violated until my neighbour actually started to look through the walls. It is the actual looking that violates privacy, not the acquisition of the power to look. If my neighbour starts observing through the walls but I’m not aware of it and believe that I am carrying out my duties in the privacy of my own home, my autonomy would not be directly undermined. Not only in thought experiments, but also in literature and everyday life, we witness the difference between autonomy and privacy. Taylor refers to Scrooge in Dickens’ A Christmas Carol, who is present as a ghost at family parties. His covert observation of the intimate Christmas dinner party implies a breach of privacy, although he does not influence the behaviour of the other people. In everyday life, we do not experience an inadvertent breach of privacy (for instance, a passer-by randomly picking up some information) as loss of autonomy.

These examples make it clear that there is a difference between autonomy, which is about control, and privacy, which is about knowledge and access to information. The most natural way to connect the two concepts is to consider privacy as a tool that fosters and encourages autonomy. Privacy thus understood contributes to demarcation of a personal sphere, which makes it easier for a person to make decisions independently of other people. But a loss of privacy does not automatically imply loss of autonomy. A violation of privacy will result in autonomy being undermined only when at least one additional condition is met: the observing (privacy-violating) person is in one way or another influencing the other person (Taylor 2002). Such a violation of privacy can take various forms. For instance, the person involved might feel pressure to alter her behaviour just because she knows she is being observed. Or a person who is not aware of being observed is being manipulated. This, in fact, occurs more than ever before in the digital age.

Loss of autonomy in the digital age

In the more than 100 years following Warren and Brandeis’ publication of their definition, privacy was mainly considered to be a spatial notion. For example, the right to be left alone was the right to have one’s own space in a territorial sense, e.g., at home behind closed curtains, where other people were not allowed. An important topic in discussions of privacy was the embarrassment experienced when someone else entered the private spatial domain. Consider, for example, public figures whose privacy is invaded by obtrusive photographers or people who feel invaded when someone unexpectedly enters their home (Roessler 2009; Gavison 1984).

The digital age is characterized by the omnipresence of hidden cameras and other surveillance devices. This kind of observation and the corresponding embarrassment that it can cause have changed our ideas about privacy. The main concern is not the intrusive eye of another person, but the constant observation, which can lead to the panopticon experience of the interiorized gaze of the other. It is self-evident that the additional conditions are now being met, viz., the person’s autonomy is threatened. In situations in which the observed person feels impeded from following his impulses (Van Otterloo 2014), the loss of privacy leads to diminished autonomy.

The loss of autonomy resulting from persistent surveillance becomes even more striking when we take into consideration the unprecedented collection and storage of non-visual information. Collecting data on individuals, such as through the activity of profiling, offers commercial parties and other institutions endless possibilities for approaching people in ways that meet the institution’s own interests. Driven by invisible algorithms, these institutions tempt, nudge, seduce, and convince individuals to participate for reasons that are advantageous to the institution. The widespread application of algorithms in decision-making processes intensifies the problem of loss of autonomy in at least two respects. First, when algorithms are used to track people’s behaviour, there is no ‘observer’ in the strict sense of the word; no human (or other ‘cognitive entity’) actually ever checks the individual’s search profile. Nevertheless, the invisibility of the watchful entity does not diminish the precision with which the behaviour is being tracked; in fact, it is quite the opposite. Second, in the digital age mere awareness of the possibility that surveillance techniques exist has an impact on human behaviour, independently of whether there is actually an observing entity. More than ever before, Foucault’s (1975) addition to Bentham’s panopticon model is relevant. The gaze of the other person is internalized.

This brings us to the conclusion that, despite the fact that a loss of privacy does not necessarily involve a loss of autonomy, in the digital age when privacy is under threat, the independence of individual decisions is typically also compromised.

These observations are striking when we consider that Western societies in particular focus on the individual person, whose autonomy is esteemed very highly. We can contrast the self-image and ego vocabulary that prevail in everyday life with online situations where an individual’s autonomy is lost. There are two examples of this from domains where autonomy has traditionally been considered to be very important and where it has come under threat.

Advertising

In consumer and advertising ethics, the consumer’s free choice is the moral cornerstone. In the online world, this ethical value is scarcely met. Digitalisation facilitates customised advertising, which originally was presented as a service for the individual. Tailored information was supposed to strengthen a person’s capacities to make choices to his own advantage. But now the procedure has degenerated; people are placed into a filter bubble based on algorithms and corporate policies that are unknown to the target persons. Individuals’ control and knowledge about the flow of information are lost. As we all are keenly aware, requiring people to agree with terms and conditions does nothing to solve the problem. In the first place, very few people even read them. This kind of autonomy is apparently too demanding for most people to exercise. Secondly, the terms and conditions do not themselves say anything about the algorithms. Today’s consumer finds himself in a grey area, where he struggles between exercising autonomy and being influenced by others.

Of course, it is an empirical question as to what degree the algorithms influence customers’ behaviour. The least we can say is that the wide application of algorithms suggests that they must have a substantial effect. Following the critical study of Sunstein (2009) in which he warns that the political landscape might become fragmented (‘cyberbalkanization’), much research has been undertaken on the influence of algorithms on political opinions. This has resulted in a nuanced view of the widespread existence of ‘confirmation bias’. For instance, it has been shown that the need for information that confirms one’s opinion differs from other kinds of information and that it is stronger in those people who have more extreme political opinions. Furthermore, there turns out to be a major difference between how often individuals actively search for opinions similar to their own (what people usually do) and how often they consciously avoid noticing opinions that differ from their own (which is far less frequent). People surfing the Internet often encounter news they were not consciously looking for, but which they nevertheless take seriously. This is called ‘inadvertent’ attention for news (Garret 2009; Tewksbury and Rittenberg 2009; Becker 2015, Chap. 4).

The question of how online networks influence exposure to perspectives that cut across ideological lines received a lot of attention after the Brexit referendum and Trump election. Using data from 10.1 million Facebook users, Bakshy et al. confirm that digital technologies have the potential to limit exposure to attitude-challenging information. The authors observed substantial polarization among hard content shared by users, with the most frequently shared links clearly aligned with largely liberal or conservative populations. But one-sided algorithms are not always of decisive importance. The flow of information on Facebook is structured by how individuals are connected in the network. How much cross-cutting content an individual encounters depends on who his friends are and what information those friends share. According to Bakshy et al., on average more than 20% of an individual’s Facebook friends who report an ideological affiliation are from the opposing party, leaving substantial room for exposure to opposing viewpoints (Bakshy et al. 2015). Dubois and Blank, using a nationally representative survey of adult internet users in the UK, found that individuals do tend to expose themselves to information and ideas they agree with. But they do not tend to avoid information and ideas that are conflicting. Particularly those who are interested in politics and those with diverse media diets tend to avoid echo chambers. Dubois and Blank observe that many studies are single-platform studies, whereas most individuals use a variety of media in their news and political information seeking practices. Measuring exposure to conflicting ideas on one platform does not account for the ways in which individuals collect information across the entire media environment. Even individuals who have a strong partisan affiliation report using general news sites, which are largely non-partisan and include a variety of issues (Dubois and Blank 2018, see also Alcott et al.). These findings are consistent with other studies that indicate that only a subset of Americans have heavily skewed media consumption patterns (Guess et al. 2016).

Research ethics

Corporations such as Google and Facebook, as well as data brokers, use people’s personal information in their research activities. One disturbing example is the research that Facebook conducted in 2014. The corporation experimented on hundreds of thousands of unwitting users, attempting to induce an emotional state in them by selectively showing either positive or negative stories in their news feeds (Kramer et al. 2014; Fiske and Hauser 2014). Acquiring information by manipulating people without their informed consent and without debriefing them is a gross violation of the ethical standards that established research institutions must follow.

Such violations of people’s autonomy indicate a striking contrast between the offline ideals of most users and their online practices. Whereas in the offline world we typically take autonomy as a moral cornerstone, on the Internet this ideal is not upheld. How to deal with this discrepancy in values upheld in the real world and on the Internet is one of the central challenges in discussions about privacy. When we do not strive for more clarity and transparency in the flow of information, we relinquish autonomy, a value that is deeply embedded in Western cultures.

The social approach

We might be tempted to associate the emergence of the social approach in discussions about privacy with the digital age, as if reflection on the social dimension of privacy were justified only in these times of rapid information flow. This, however, would be a false suggestion. During the twentieth century, an important undercurrent in discussions of privacy was an emphasis on the importance of privacy for social relationships. Privacy was seen as a component of a well-functioning society (Regan 2015), in that it plays an important role in what is described as a differentiated society. Privacy guarantees social boundaries that help to maintain the variety of social environments. Because privacy provides contexts for people to develop in different kinds of relationships, respect for privacy enriches social life. Privacy also facilitates interactions among people along generally agreed patterns (Schoemann 1984). As the poet Robert Frost remarked in Mending Wall (1914), ‘Good fences make good neighbors’.

This characteristic of privacy is important not only at an institutional level. In people’s private lives the creation and maintenance of different kinds of relationships is possible only when subtle differences in patterns of social behaviour and social expectations are recognized (Rachels 1984; Marmor 2015). Remarkably, this subtlety becomes clearest in examples of intrusions of privacy in unoccupied public places. Consider (a) someone who deliberately attempts to sit beside lovers who are sitting together on a park bench, or (b) intrusive bystanders at the scene of a car accident. In both cases, the intrusions of the privacy of the persons involved are very important. The most trivial words and gestures can reflect a deep dedication and intense relationship between two people. In one of the first descriptions of the core of privacy, the English jurist and philosopher Stephens depicted it as an observation which is sympathetic (Schoemann 1984). Sympathetic is derived from the Greek word sympathein, which means being involved with the same. Indeed, in private situations, different people experience the same things as important. A small, clearly distinguished domain is created, and the events should be shared only by those who directly participate in them. The persons involved are tied together by having undergone common experiences. They have an immediate relationship to what is at stake, and in this relationship they are deeply engrossed. An outside observer who has not participated in the common experience is viewed as invading their privacy. He cannot share the meaning of what is going on because he has not been directly involved.

When understood this way, the concept of privacy is helpful in explaining the difference between occasionally being noticed and being eavesdropped upon. In cases involving eavesdropping, someone participates in an indirect and corrupt way in what is going on. The participation is indirect because the person acquires knowledge without participating directly; the things that are at stake should not concern him. The participation is corrupt because the indirect participant is not genuinely interested in what is going on. He sees the others involved not primarily as people with their own sensibilities, goals, and aspirations, but as the objects of his own curiosity. When the other people become aware that they are being observed, they begin to see themselves through the eyes of the observing person, and they thereby lose spontaneity. Their direct involvement in the meaning of what is at stake is lost.

In cases like these, neither the content of the action nor the secrecy surrounding it qualifies the actions as belonging to the private sphere. The content might be very trivial, but it would be offensive to the lovers sitting on the park bench to suggest that what they are expressing to each other could be made public. The most commonplace of actions (for instance, walking with one’s children down the street) can be private. Note the indignation of people in the public eye about obtrusive photographers who take photographs of them while they are doing ordinary things, as we all do. The essence of secrecy is intentional concealment, but the private situations that we discuss here concern behaviour, inward emotions, and convictions that can be shown and experienced in various places that are accessible to everyone, as for instance in the case of the young couple we saw sitting in the park (Belsey 1992).

This characteristic of privacy in social relationships cannot be captured by the concept of autonomy in the sense of an individual independently and deliberately making his or her own choices. What is at stake in situations like these is not a lack of transparency. There is no question about the autonomy of an independent individual. The person would be deeply engrossed in precarious and delicate situations involving social relationships. An intrusion on this person’s privacy would mean that he feels inhibited in being immersed in the social interaction and in sharing the meaning at stake.

In order to do justice to this notion of privacy, other strategies for protecting privacy are required. It is not primarily an individual’s mastery that must be protected; rather, it is the possibility for the individual to be properly embedded in social relationships. To answer the question of how this concept of privacy manifests itself in the digital age, we turn to Helen Nissenbaum’s contextual integrity model, which is an elaboration of socially embedded privacy in the digital age.

Helen Nissenbaum’s contextual integrity model

After having conducted several preliminary studies, Helen Nissenbaum published Privacy in Context (2009), a book that became very influential in philosophical and political debates on privacy. It inspired the Obama administration in the United States to focus on the principle of respect for context as an important notion in a document on the privacy of consumer data (Nissenbaum 2015). The core idea of Nissenbaum’s model is presented in the opening pages of her book: ‘What people care most about is not simply restricting the flow of information but ensuring that it flows appropriately.’ In Nissenbaum’s view, the notion ‘appropriate’ can be understood to mean that normative standards are not determined by an abstract, theoretically developed default. The criteria for people’s actions and the expectations of the actions of other people are developed in the context of social structures that have evolved over time, and which are experienced in daily life. As examples of contexts, Nissenbaum mentions health care, education, religion, and family. The storage, monitoring, and tracking of data are allowed insofar as they serve the goals of the context. Privacy rules are characterized by an emphasis on data security and confidentiality, in order to ensure that the flow of information is limited only to the people directly involved. The key players in the context have the responsibility to prevent the data from falling into the wrong hands.

Nissenbaum’s model is well-suited for the information age. It describes privacy in terms of the flow of information, and the model is easy to apply to institutional gatekeepers who deal with data streams. At the same time, the contextual approach deviates from the classical view of autonomy. The personal control of information loses ground, and shared responsibility that is expressed through broader principles becomes more important. Nissenbaum considers it a serious disadvantage of the autonomy approach that it is usually associated with notions of privacy that are based on individuals’ rights. In the articulation of justificatory frameworks in policymaking and the legal arena, we often see major conflicts among parties who insist that their rights and interests should be protected. She also distances herself from the connection between privacy and secrecy (for a recent description of this connection, see Solove 2015). Privacy is not forfeited by the fact that someone knows something about another person. Within contexts, information about persons might flow relatively freely. In line with this, Nissenbaum puts into perspective the classic distinction between the private and the public realm. Contexts might transgress borders between the public and the private. For instance, professionals in social healthcare work with information that comes from intimate spheres. As professionals, they are, however, part of the public domain. It is their professional responsibility to deal properly with the flow of information within the realm of their own activities.

Normative weakness and the threat of conservatism

Nissenbaum’s rejection of autonomy as the basis for privacy raises questions about the normative strength of her model. Does she indeed deliver the justificatory platform or framework to reason in moral terms? She asserts that her model does do so when she claims that the context procures a clear orientation, which can guide policies on privacy. This claim suggests that it is completely clear what a context is, as is the way in which it delivers a normative framework. In this respect, Nissenbaum’s work has some flaws.

In her description of context as a structured social setting that guides behaviour, Nissenbaum refers to a wide array of scholars from social theory and philosophy. Nissenbaum (2009), for instance, reviews Bourdieu’s field theory, Schatzki’s notion of practice in which activities are structured teleologically, and Walzer’s Spheres of Justice. There are, however, major differences among these authors. Schatzki focuses on action theory and the way in which people develop meaningful activities; Walzer describes the plural distribution of social goods in different spheres of human activity; and Bourdieu focuses on power relationships. When searching for a normative framework, it matters which of these approaches is being taken as the starting point. The theories also differ in their emphasis on a descriptive (Bourdieu) versus a normative (Walzer) analysis.

This vagueness about the normative framework is a serious problem because protection of privacy in the digital age requires systemic criteria to measure new developments against established customs. Nissenbaum assumes at the start that online technologies change the way in which information flows, but they do not change the principles that guide the flow of information. The principles by which digital information flows must be derived from the institutions as they function in the offline world, i.e., the background social institutions (Nissenbaum 2009). Consider online banking as an example. In the digital age, contacts between customers and banks have completely changed. Impressive buildings in which people previously made financial transactions have been partly replaced by the digital flow of information. But the core principles regarding the actions of the actors (the so-called information and transmission principles) have not changed. This implies that people working within the context are familiar with the sensitive issues, and they have the final say. The only thing that must be done is to translate the principles to the new situation. If the novel practice results in a departure from entrenched norms, as Nissenbaum says, the novel practice is flagged as a breach, and we have prima facie evidence that contextual integrity has been violated (Nissenbaum 2009). Indeed, Nissenbaum admits that this starting point is inherently conservative, and she flags departures from entrenched practice as problematic (2009). She leaves open the possibility that completely new developments can lead to a revision of existing standards, and she gives ample guidelines about how to implement such a revision (Nissenbaum 2015).

Nissenbaum’s emphasis on existing practices must be understood in the context of a non-philosophical and non-sociological source, namely the notion of reasonable expectation, which plays an important role in United States jurisprudence on privacy. In the conclusion of her book, Nissenbaum (2009) describes privacy as ‘a right to live in a world in which our expectations about the flow of personal information are, for the most part, met’. Reasonable expectation was the core notion in the famous case of Katz versus United States, which laid the foundation for privacy discussions in the United States. Before Katz, it had already been recognized that within one’s own home, there was a justified expectation of privacy. Katz dealt with the kind of privacy situation in the public sphere that was described in the preceding paragraph. In this case, a phone call had been made from a public phone booth while enforcement agents used an external listening device to listen to the conversation. The Court considered this to be unjustified. The Fourth Amendment to the United States Constitution protects people, not places; therefore, the actions of the enforcement agents constituted an intrusion. Regardless of location, oral statements are protected if there is a reasonable expectation of privacy. This extension of privacy was a revolutionary development, and the notion of reasonable expectation turned out to work well, for instance in cases where the distinction between hard-to-obtain information and information that is in plain view plays an important role. In many cases, however, just because information is in plain view does not mean there is a reasonable expectation of privacy. Consider the situation where the police accidentally uncover illegal drugs concealed in an automobile. In cases like this, an appeal to privacy to protect criminals cannot be justified.